Industrial robot debugging method based on combination of natural language and computer vision

An industrial robot debugging method that combines natural language and computer vision, applied in fields such as neural learning methods, software testing/debugging, and semantic analysis. It addresses the problems that debugging results cannot be carried over to the on-site production environment and that task code has poor robustness, thereby improving development efficiency, cutting time-consuming debugging, and reducing deployment time.


Embodiment Construction

[0020] The present invention is further analyzed below in conjunction with specific embodiments.

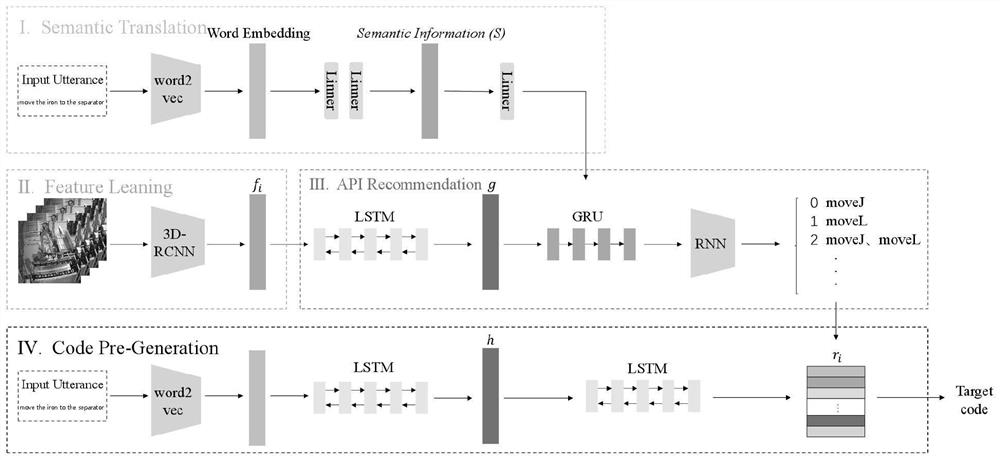

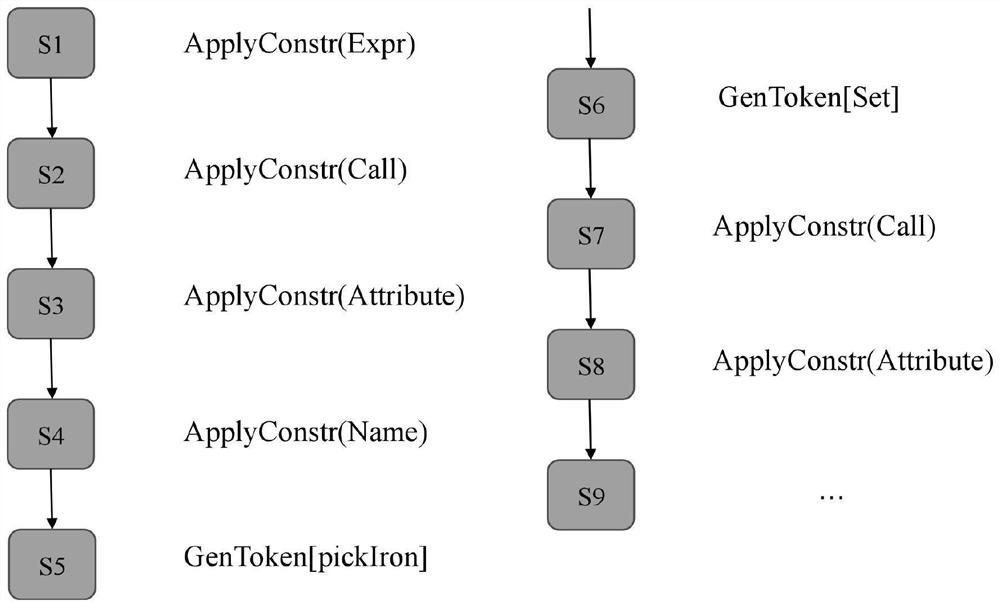

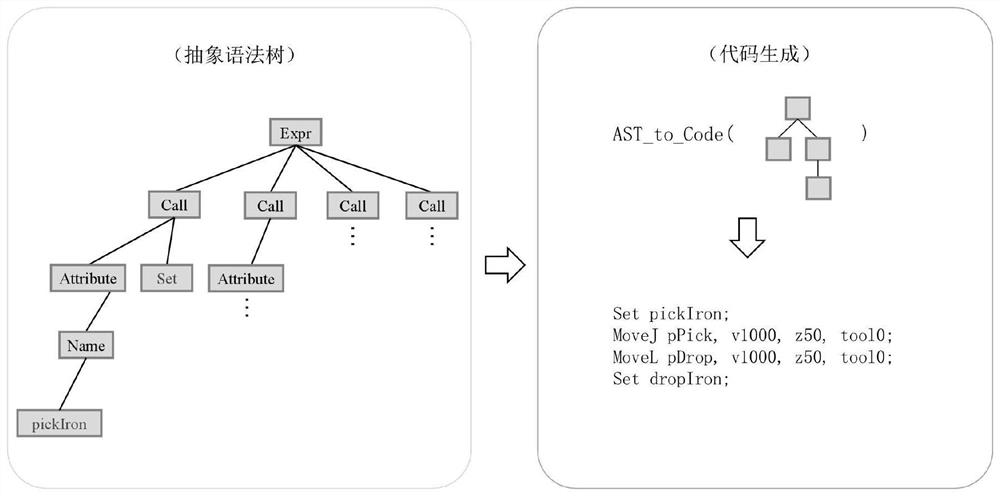

[0021] An industrial robot debugging method based on the combination of natural language and computer vision, as shown in Figure 1, includes the following steps:

[0022] Step (1): generate semantic information

[0023] 1-1 Input the natural language description of the robot action code into the word2vec network to generate text embeddings, as follows:

[0024] The natural language instruction $X=\{x_i \mid i=1,2,\ldots,n\}$, composed of $n$ natural language words, is passed through the word2vec network to generate the text embedding vector matrix $E=\{e_i \mid i=1,2,\ldots,n\}\in\mathbb{R}^{L\times C}$:

[0025] $$E=\mathrm{word2vec}(X) \tag{1}$$

[0026] where $x_i$ denotes the $i$-th natural language word, $e_i$ denotes the $i$-th text embedding vector, $\mathrm{word2vec}(\cdot)$ denotes the word2vec network function, $L$ is the number of text embeddings, and $C$ is the embedding dimension;
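The shape relationship in equation (1) can be illustrated with a minimal sketch. It is not the patent's implementation: a trained word2vec network is replaced here by a hypothetical lookup table of random vectors, and the names `build_toy_embedding_table`, `embed_instruction`, the sample instruction, and the choice C = 8 are all assumptions made for illustration. The sketch also assumes one embedding per word, so that L equals n.

```python
import random


def build_toy_embedding_table(vocab, dim, seed=0):
    """Hypothetical stand-in for a trained word2vec model.

    Assigns each vocabulary word a fixed random vector of length `dim`.
    A real word2vec network would learn these vectors from a corpus.
    """
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)]
            for word in sorted(vocab)}


def embed_instruction(words, table, dim):
    """Map X = {x_i} to E = {e_i}, one row per word.

    Words missing from the table get a zero vector (an assumption,
    not something the source specifies).
    """
    return [table.get(word, [0.0] * dim) for word in words]


C = 8  # embedding dimension (illustrative value)
instruction = "move the gripper above the red block".split()  # X
table = build_toy_embedding_table(set(instruction), C)
E = embed_instruction(instruction, table, C)  # L rows, C columns
L = len(E)

print(L, len(E[0]))
```

Each row of `E` corresponds to one word of the instruction, so repeated words (here, "the") map to identical rows, which is the behavior expected of a static word-level embedding.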

[0027] 1-2 Use the text embedding vector matrix generated in ...

