157 results for "Robot action" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

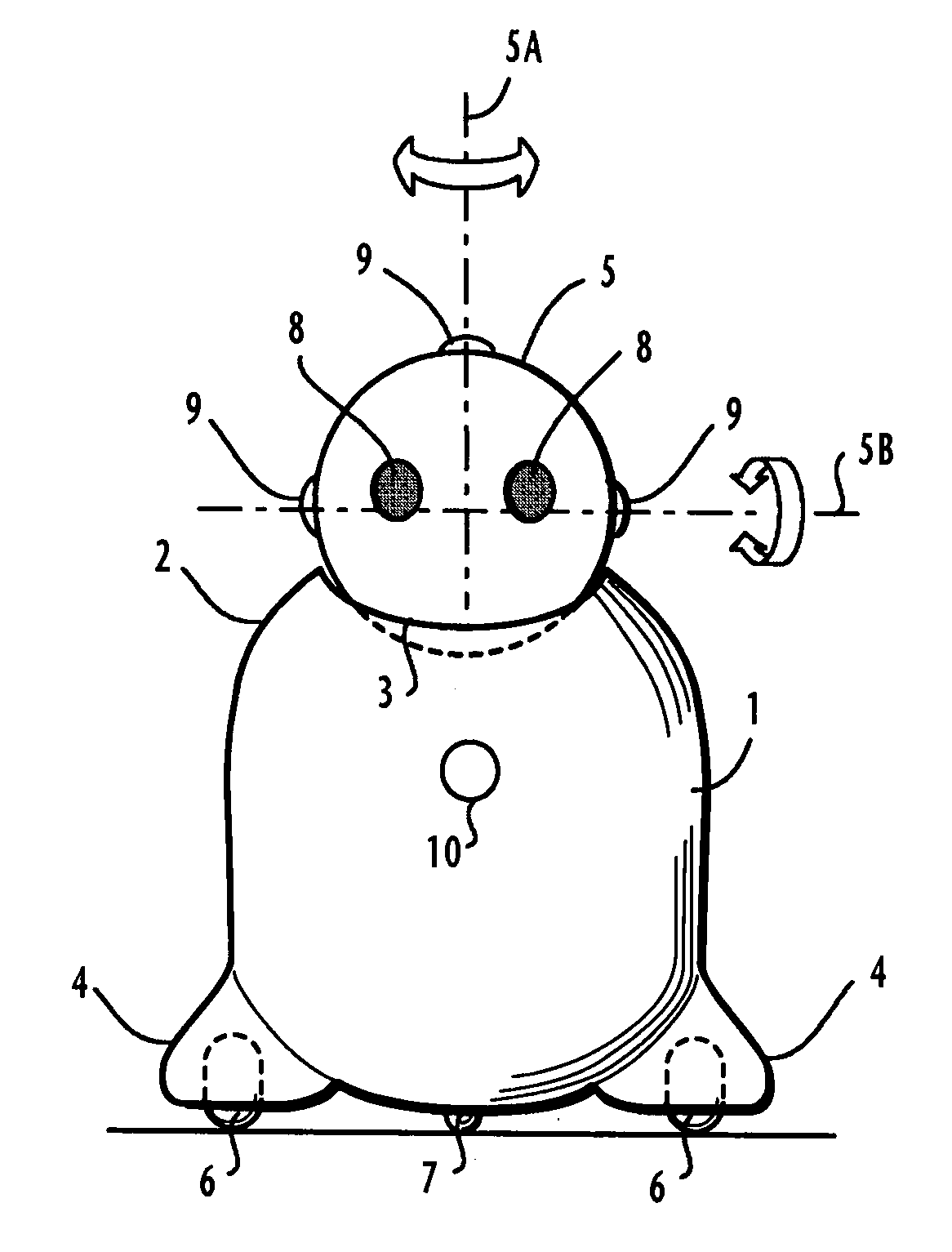

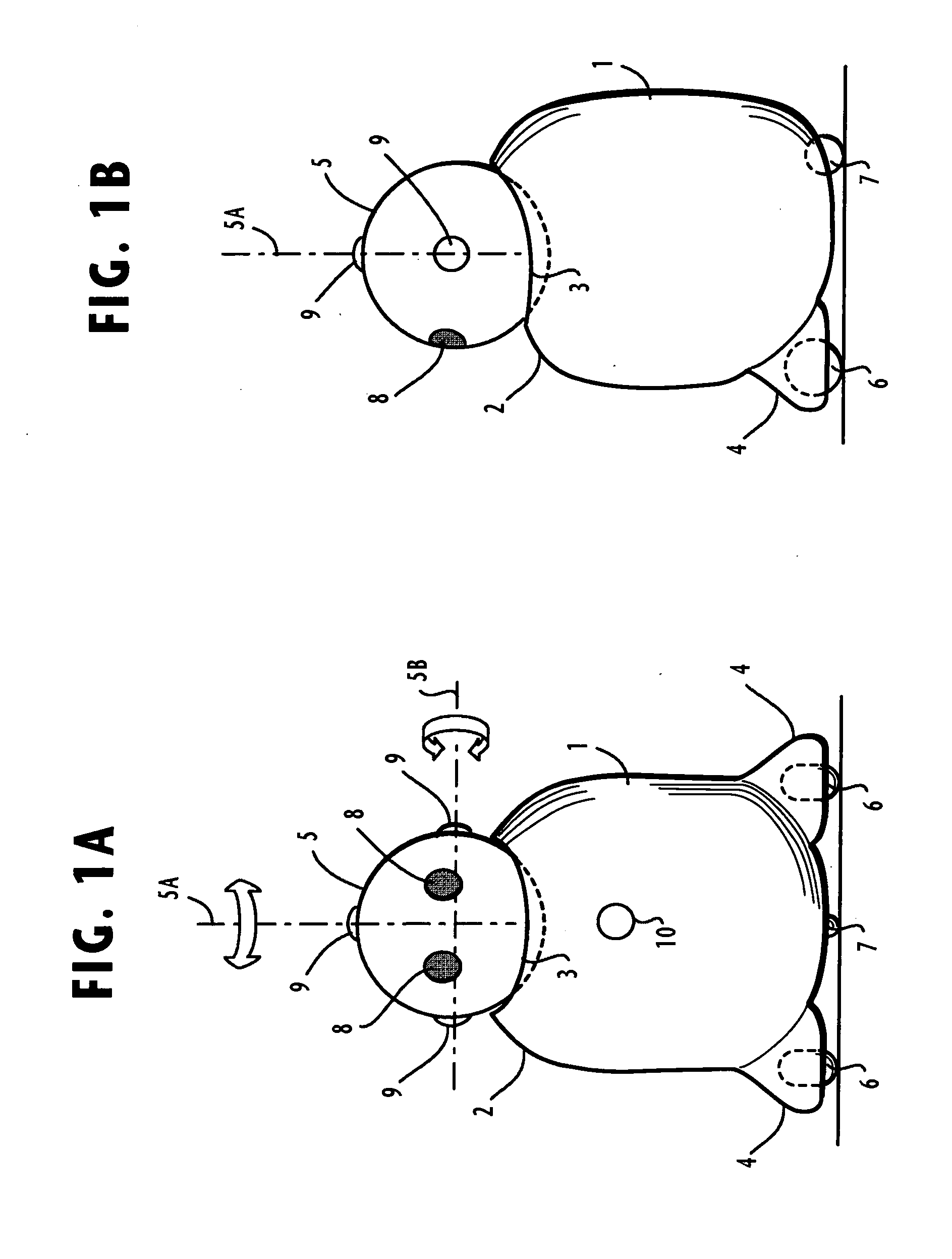

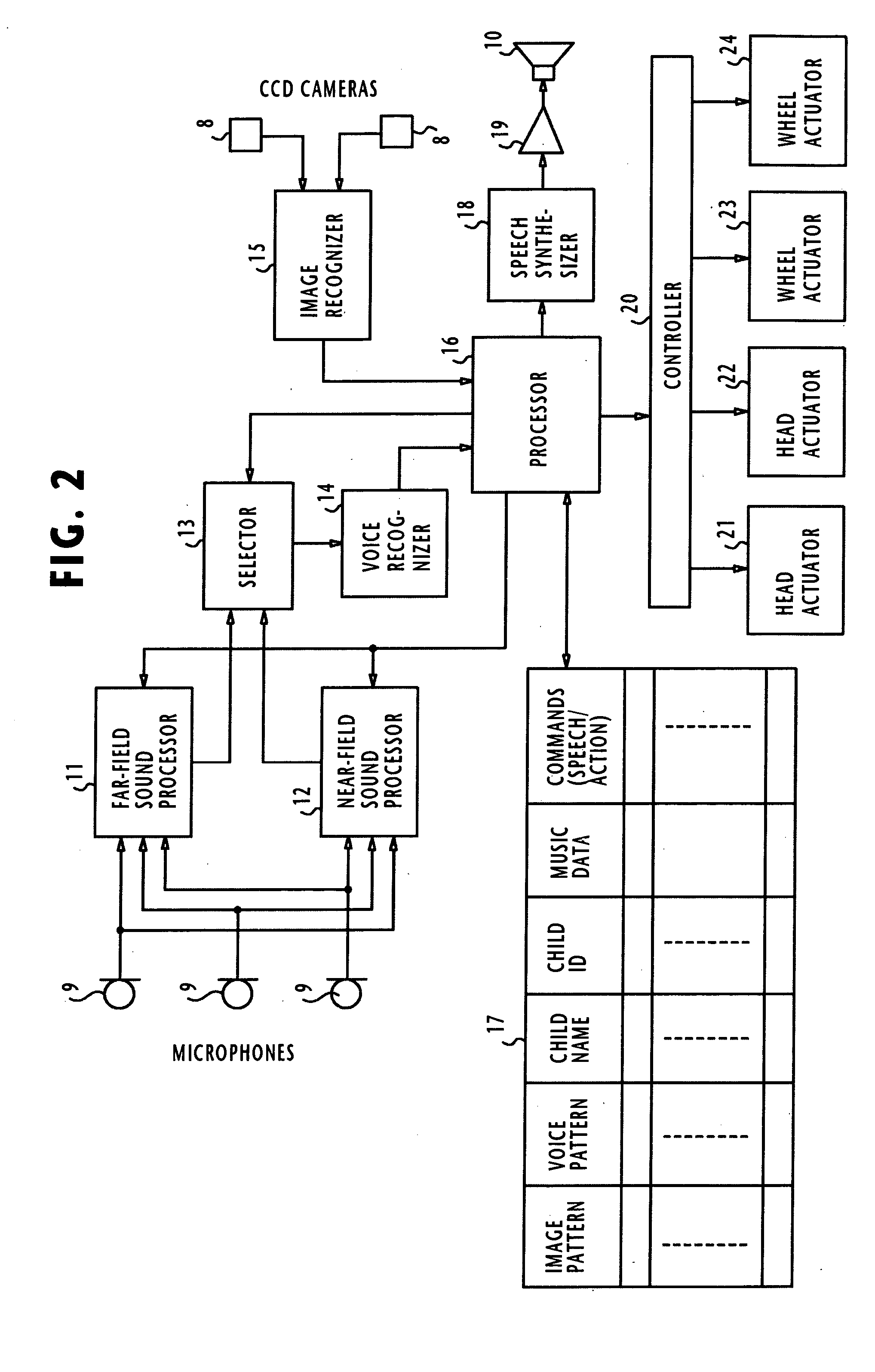

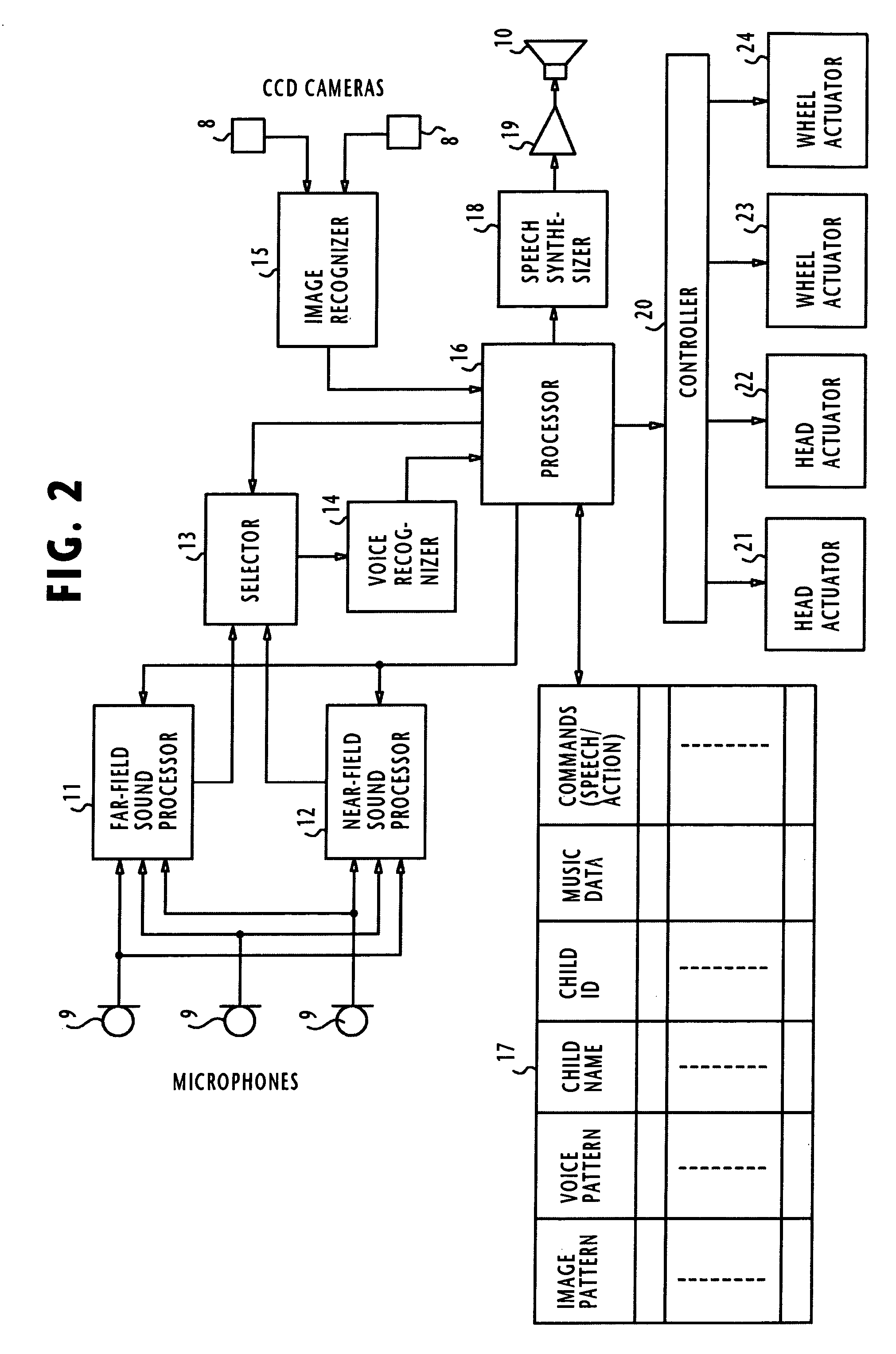

Child-care robot and a method of controlling the robot

Status: Active | Publication No.: US20050215171A1 | Tags: Reduce the burden on; Personnel expenditure; Computerized toys; Diagnostic recording/measuring; Engineering; Speech sound

A child-care robot for use in a nursery school associates child behavior patterns with corresponding robot action patterns, and acquires a child behavior pattern when a child behaves in a specified pattern. The robot selects one of the robot action patterns, which is associated with the acquired child behavior pattern, and performs the selected robot action pattern. Additionally, the robot associates child identifiers with parent identifiers, and receives an inquiry message from a remote terminal indicating a parent identifier. The robot detects one of the child identifiers, which is associated with the parent identifier of the inquiry message, acquires an image or a voice of a child identified by the detected child identifier, and transmits the acquired image or voice to the remote terminal. The robot further moves in search of a child, measures the temperature of the child, and associates the temperature with the time of day at which it was measured.

Owner:NEC CORP

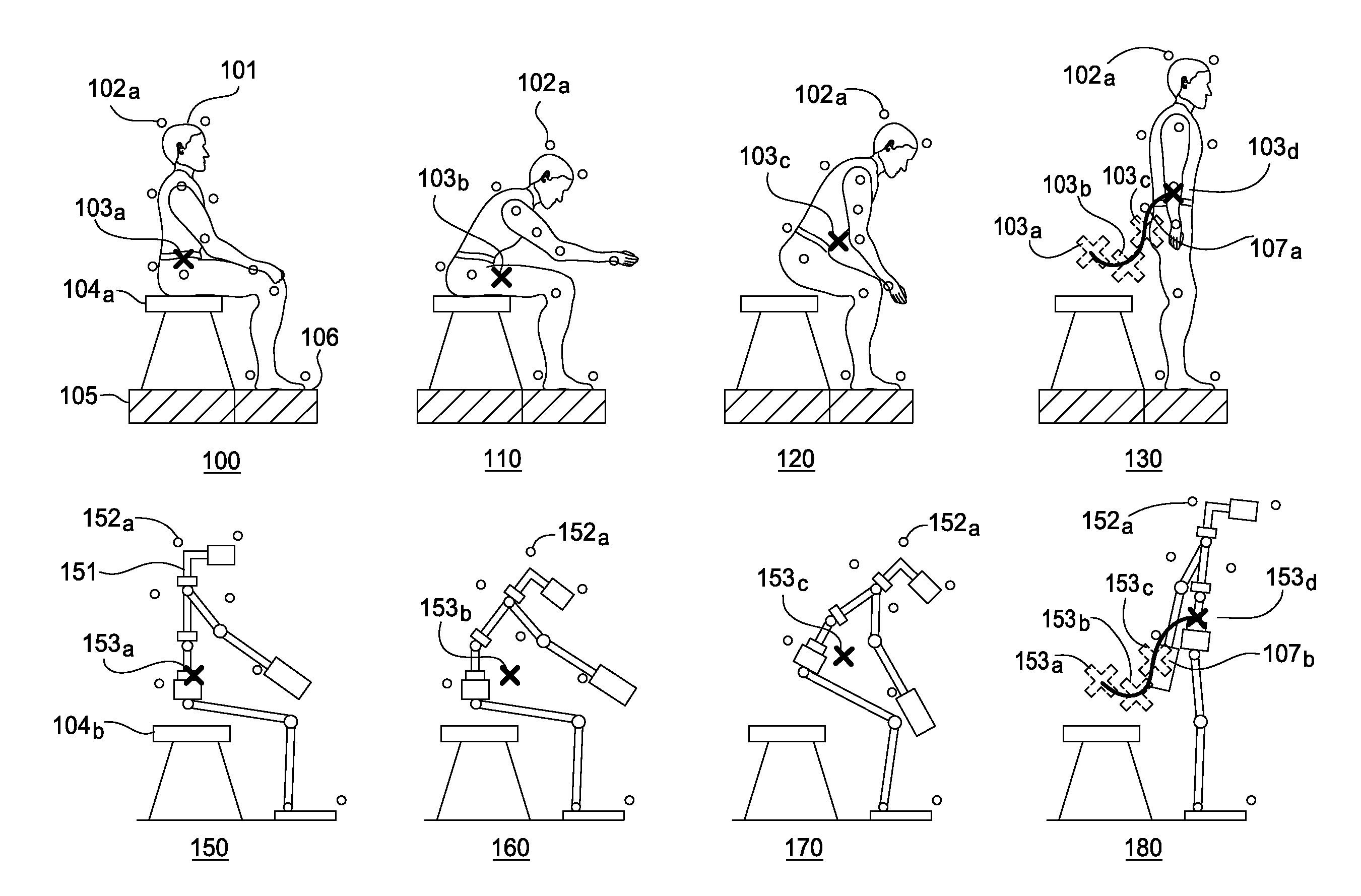

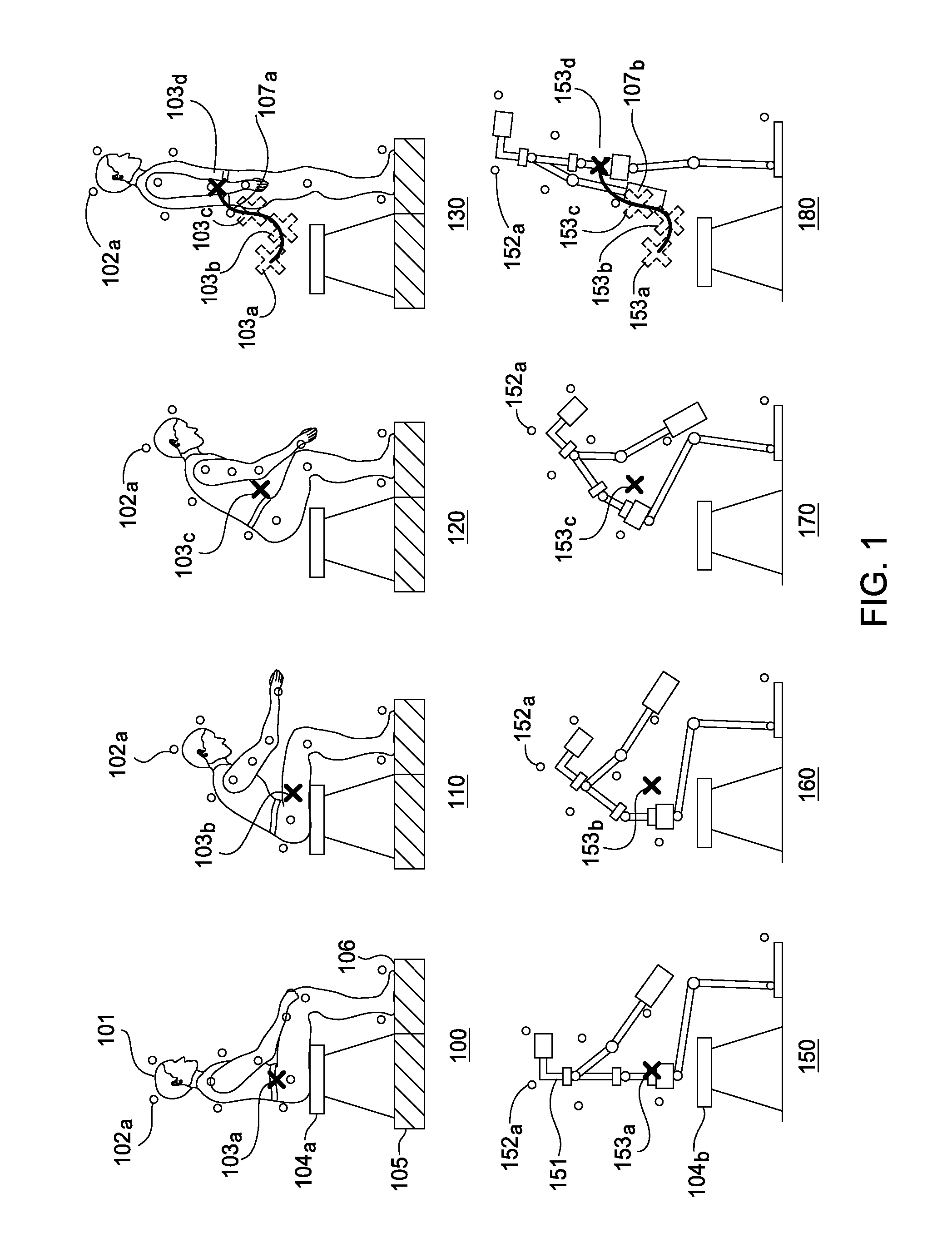

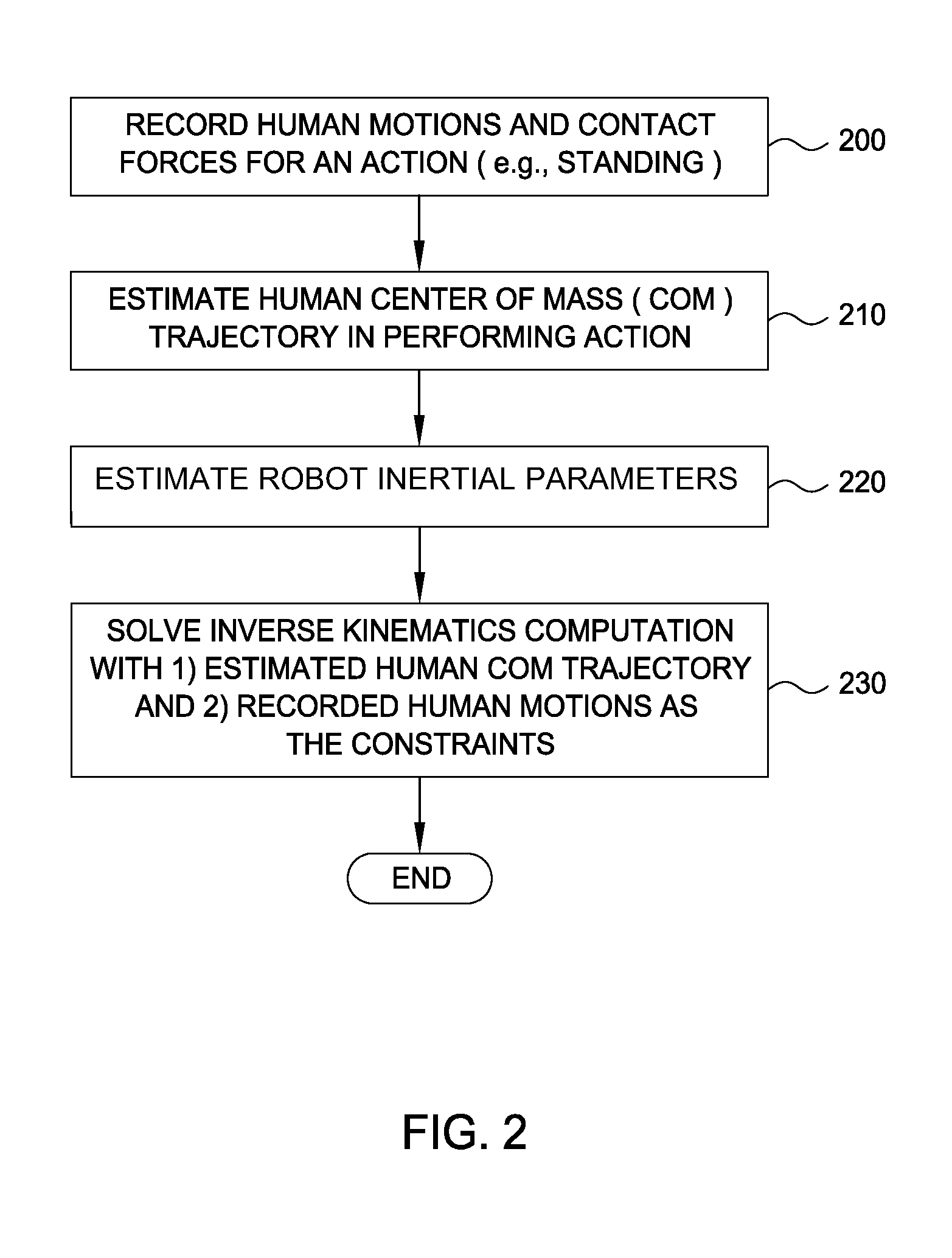

Robot action based on human demonstration

Embodiments of the invention provide an approach for reproducing a human action with a robot. The approach includes receiving data representing motions and contact forces of the human as the human performs the action. The approach further includes approximating, based on the motions and contact forces data, the center of mass (CoM) trajectory of the human in performing the action. Finally, the approach includes generating a planned robot action for emulating the designated action by solving an inverse kinematics problem having the approximated human CoM trajectory as a hard constraint and the motion capture data as a soft constraint.

Owner:DISNEY ENTERPRISES INC
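
The Disney abstract treats the approximated human CoM trajectory as a hard constraint and the motion-capture data as a soft constraint. One common way to express that split in a single differential inverse-kinematics step, assumed here rather than taken from the patent, is equality-constrained least squares solved through its KKT system. The function name, the linearized Jacobians `J_mocap` and `J_com`, and the small damping term are illustrative assumptions.

```python
import numpy as np


def constrained_ik_step(J_mocap, e_mocap, J_com, e_com, damping=1e-6):
    """One differential-IK step (illustrative sketch, not the patented solver).

    Minimizes ||J_mocap @ dq - e_mocap||^2 + damping * ||dq||^2  (soft: mocap)
    subject to J_com @ dq == e_com                              (hard: CoM).
    """
    n = J_mocap.shape[1]   # number of joints
    m = J_com.shape[0]     # number of hard-constraint rows (e.g. CoM x, y, z)
    # KKT system of the equality-constrained least-squares problem:
    # [ H      J_com^T ] [ dq  ]   [ g     ]
    # [ J_com  0       ] [ lam ] = [ e_com ]
    H = J_mocap.T @ J_mocap + damping * np.eye(n)
    g = J_mocap.T @ e_mocap
    kkt = np.block([[H, J_com.T], [J_com, np.zeros((m, m))]])
    rhs = np.concatenate([g, e_com])
    solution = np.linalg.solve(kkt, rhs)
    return solution[:n]    # joint-angle update dq; solution[n:] are multipliers
```

The CoM constraint is met exactly (up to numerical precision) while the mocap error is only minimized; the damping term keeps the KKT matrix invertible when `J_mocap` has fewer rows than there are joints, provided `J_com` has full row rank.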

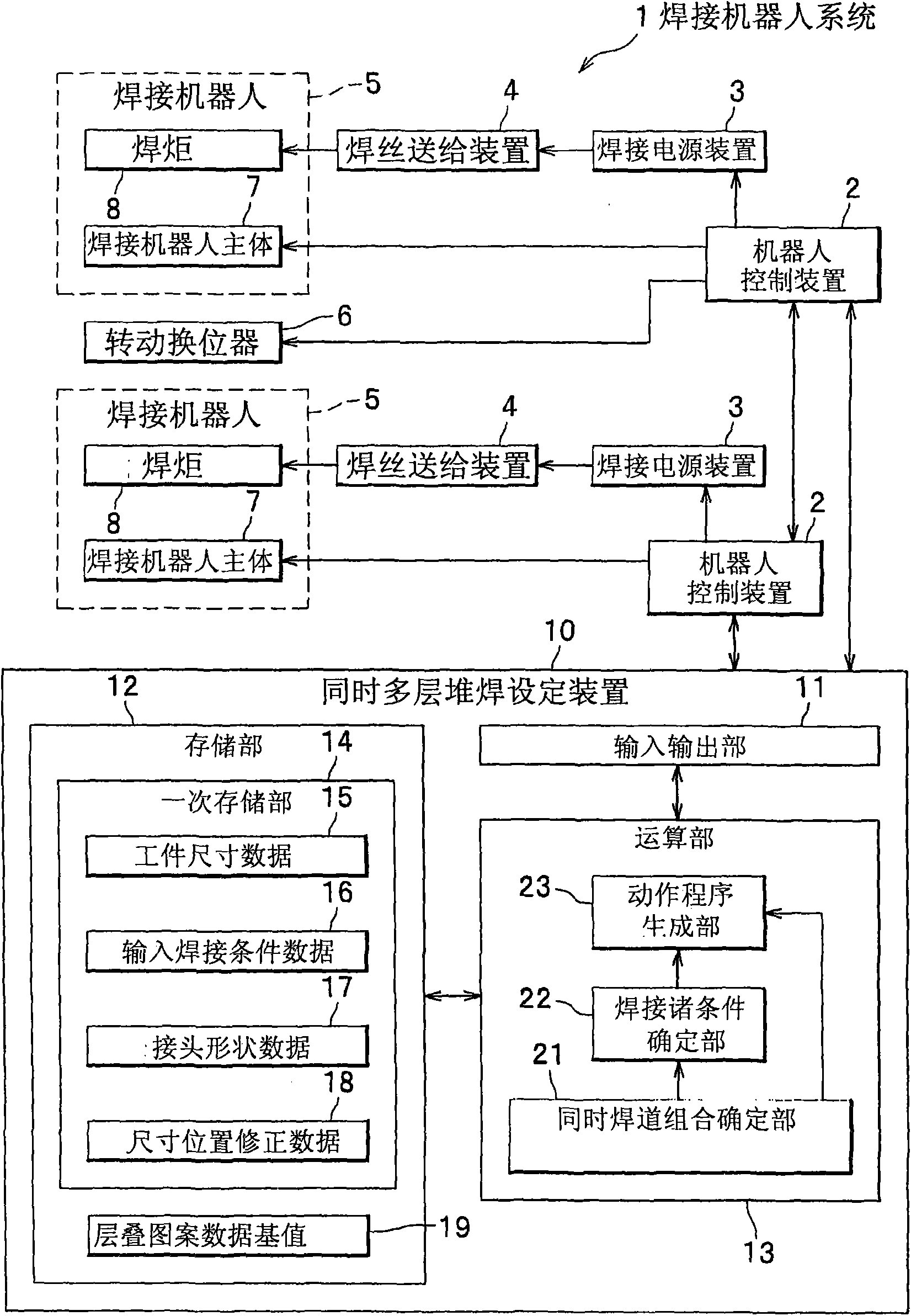

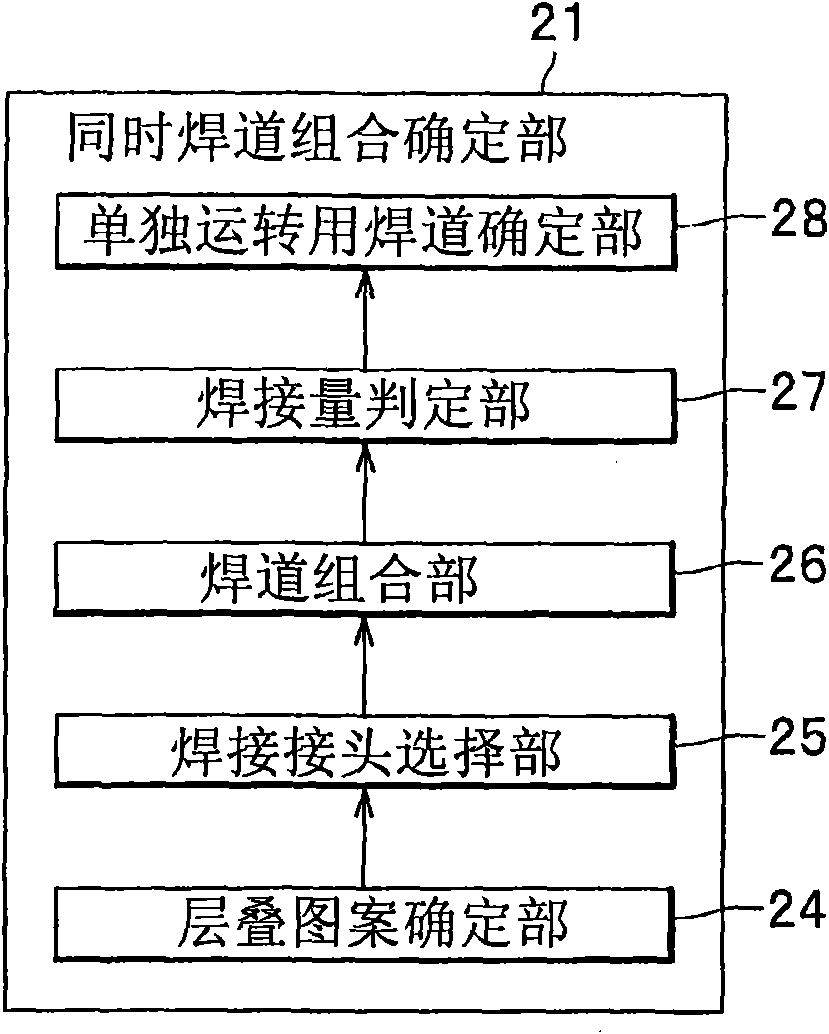

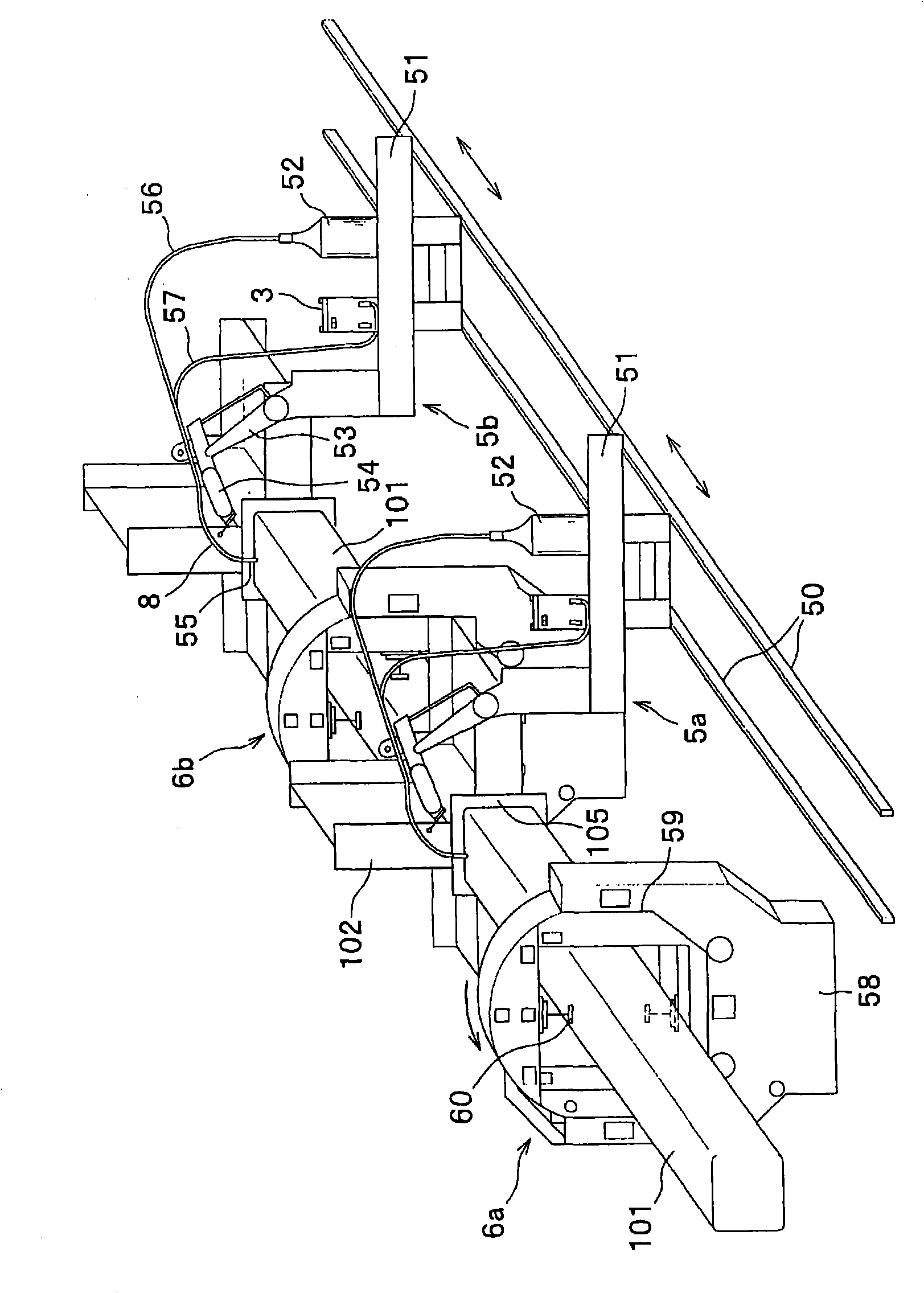

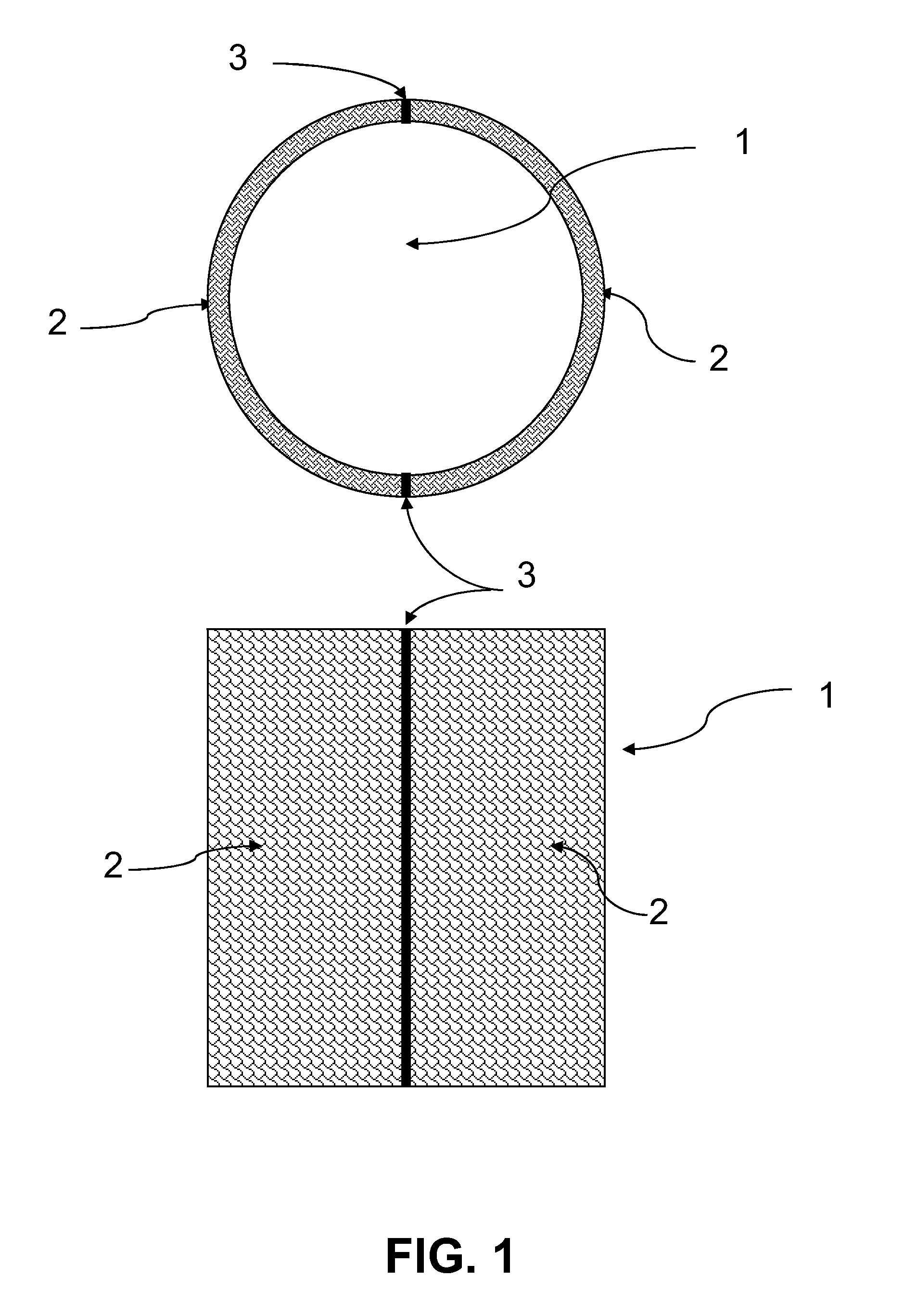

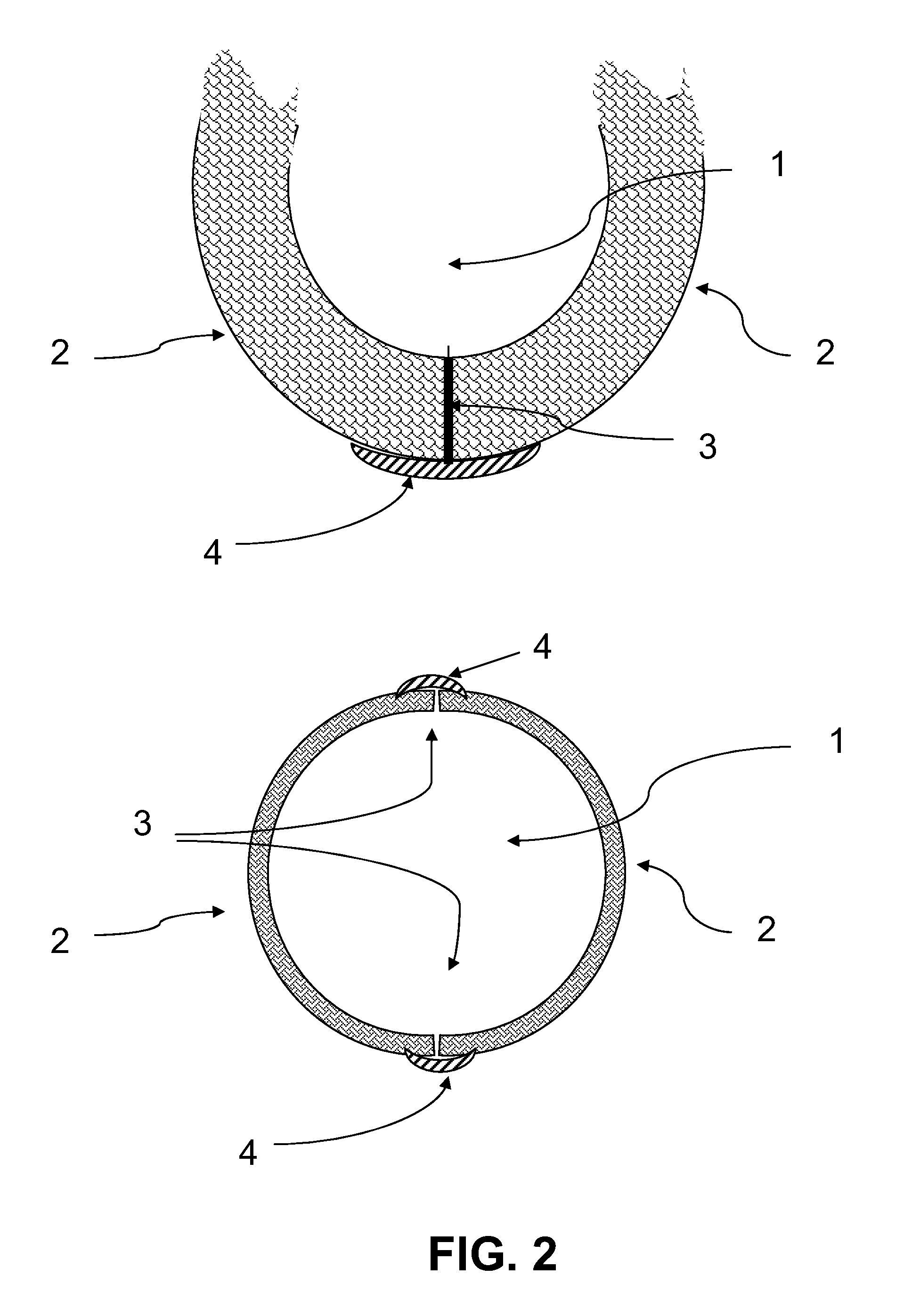

Welding setting device, welding robot system and welding setting method

The invention provides a setting device for simultaneous multi-layer welding. It comprises: a cascading pattern determining part that determines the cascading pattern of the welding passes for the joints of the workpieces, based on the basic values of each item of input data and on cascading pattern data; an independent-pass determining part that, when the passes in the cascading patterns of two joints are combined and the difference in the cross-sectional area of deposited metal exceeds a predetermined threshold, excludes the pass with the larger cross-sectional area so that it runs independently, and then determines the welding combination; a welding condition determining part that determines the welding conditions of each pass, including current values corresponding to the wire feed speed calculated from the input welding conditions and the seam-forming position; and an action procedure generating part that generates a robot action procedure from the determined welding conditions and sets it in the robot control device. The device shortens the time needed for two welding robots to simultaneously weld multiple layers of multiple welding joints in a steel structure.

Owner:KOBE STEEL LTD
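
The exclusion rule in the Kobe Steel abstract (a pass runs on its own when the deposited-metal cross-sections of the two passes to be combined differ by more than a threshold) can be sketched as a greedy pairing. The layer-by-layer comparison order, the handling of leftover passes and every name below are hypothetical; the abstract does not say how the remaining passes are re-paired.

```python
from typing import List


def combine_passes(area_a: List[float], area_b: List[float],
                   threshold: float) -> List[tuple]:
    """Greedily schedule the passes of two joints (hypothetical pairing rule).

    area_a / area_b hold the deposited-metal cross-sectional area of each pass
    of joint A / joint B, in welding order. Each schedule step is
    ("both", i, j) for passes welded simultaneously by the two robots, or
    ("A", i) / ("B", j) for a pass that runs independently.
    """
    schedule: List[tuple] = []
    i = j = 0
    while i < len(area_a) and j < len(area_b):
        if abs(area_a[i] - area_b[j]) > threshold:
            # Areas differ too much to combine: the larger pass runs on its own.
            if area_a[i] > area_b[j]:
                schedule.append(("A", i))
                i += 1
            else:
                schedule.append(("B", j))
                j += 1
        else:
            schedule.append(("both", i, j))
            i += 1
            j += 1
    # Passes left over on either joint are welded independently.
    schedule.extend(("A", k) for k in range(i, len(area_a)))
    schedule.extend(("B", k) for k in range(j, len(area_b)))
    return schedule
```

For example, with areas `[20.0, 40.0]` and `[22.0, 25.0]` and a threshold of `10.0`, the first passes are welded together, while the second pass of joint A (40 versus 25) is run on its own before the remaining pass of joint B.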

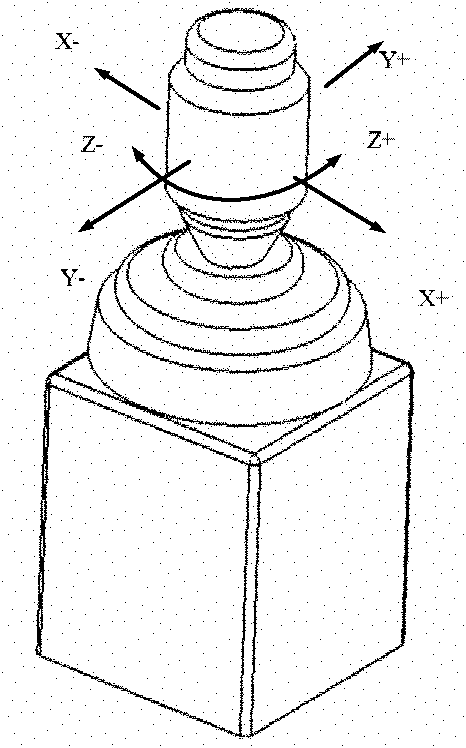

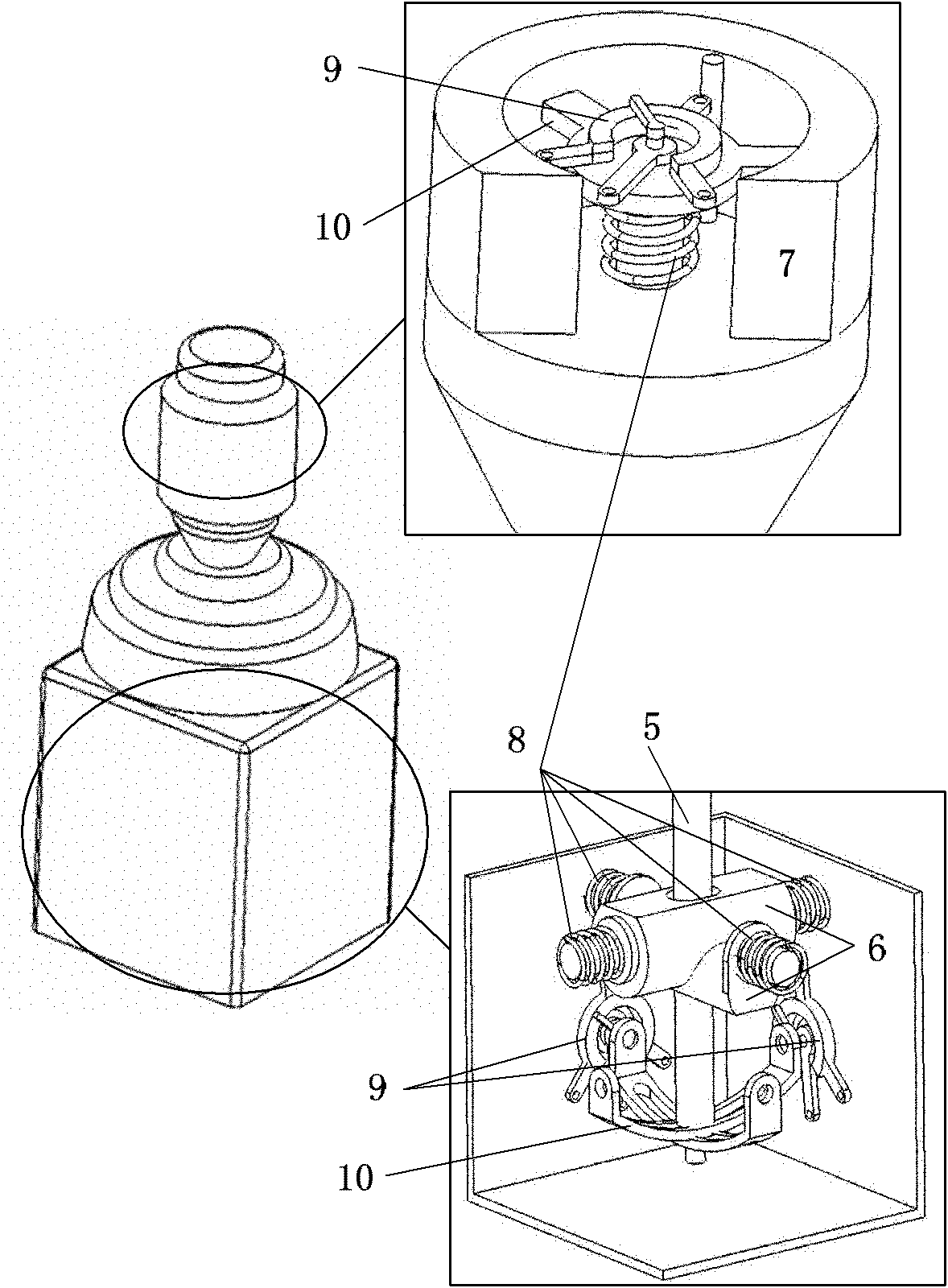

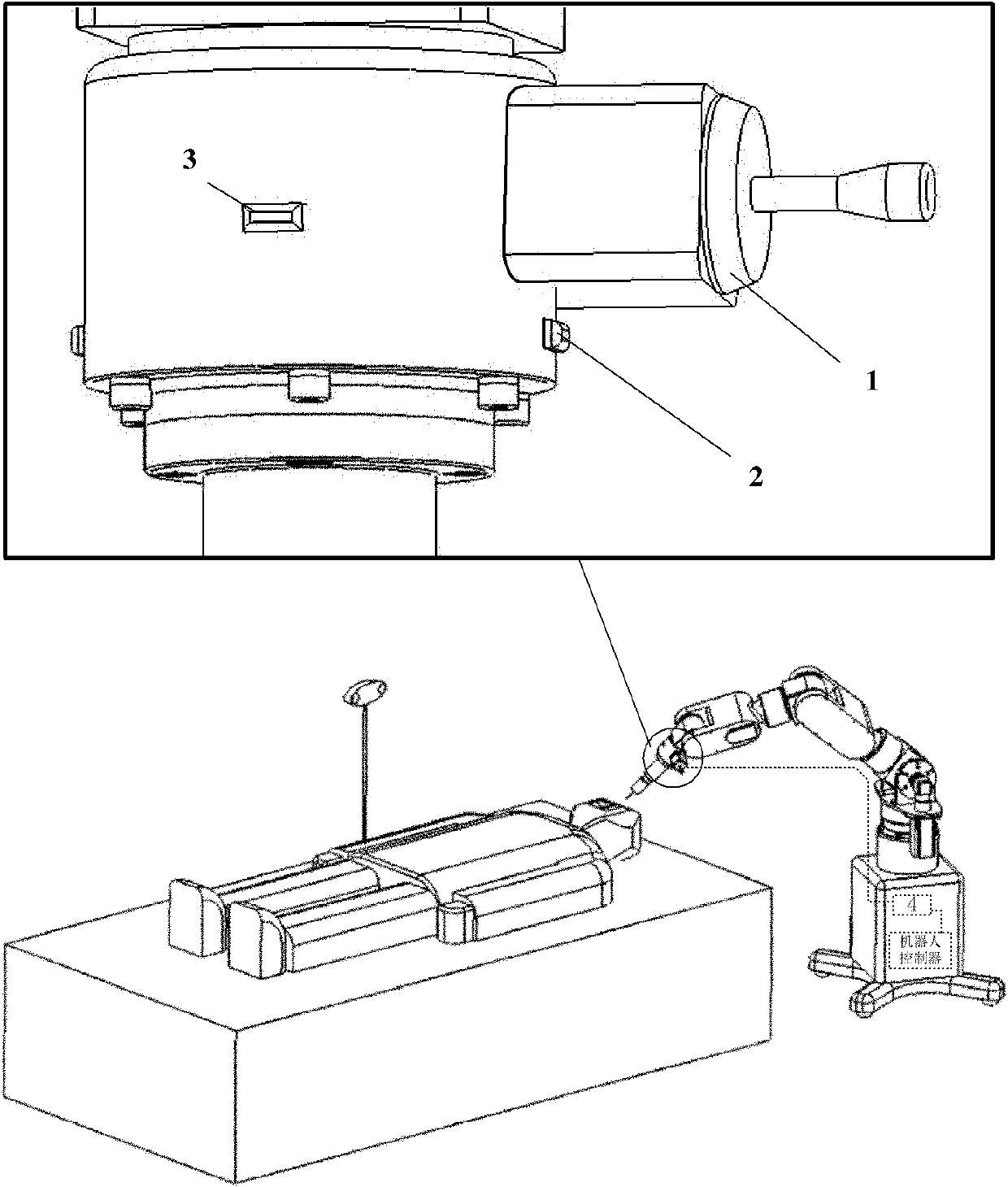

Manual operating device for bone surgery assisted robot

Status: Inactive | Publication No.: CN101999938A | Tags: Direct manipulation; Manipulation safety; Diagnostics; Surgical robots; Attitude control; Robotic arm

The invention provides a manual operating device for a bone-surgery-assisting robot, in the technical field of medical appliances. The device is mounted on the end of the robotic arm and comprises a three-degree-of-freedom joystick, a mode selection switch and a redundancy posture control switch, each connected to the robot controller by a signal cable. A signal processor comprises a digital I/O module, an A/D conversion module and a calculation module: the A/D conversion module converts the analog voltage from a potentiometer in the joystick into a digital signal and passes it to the calculation module; the digital I/O module passes the states of the mode selection switch and the redundancy posture control switch to the calculation module; and the calculation module sends control instructions to the robot controller over a serial bus, and the robot controller executes the robot action. The device enables operators to control the position and posture of the end effector of a seven-degree-of-freedom robot.

Owner:SHANGHAI JIAO TONG UNIV

Robot action generation method and device

Status: Inactive | Publication No.: CN106945036A | Tags: Simple design; Easy and fast to generate; Programme-controlled manipulator; Time information; Simulation

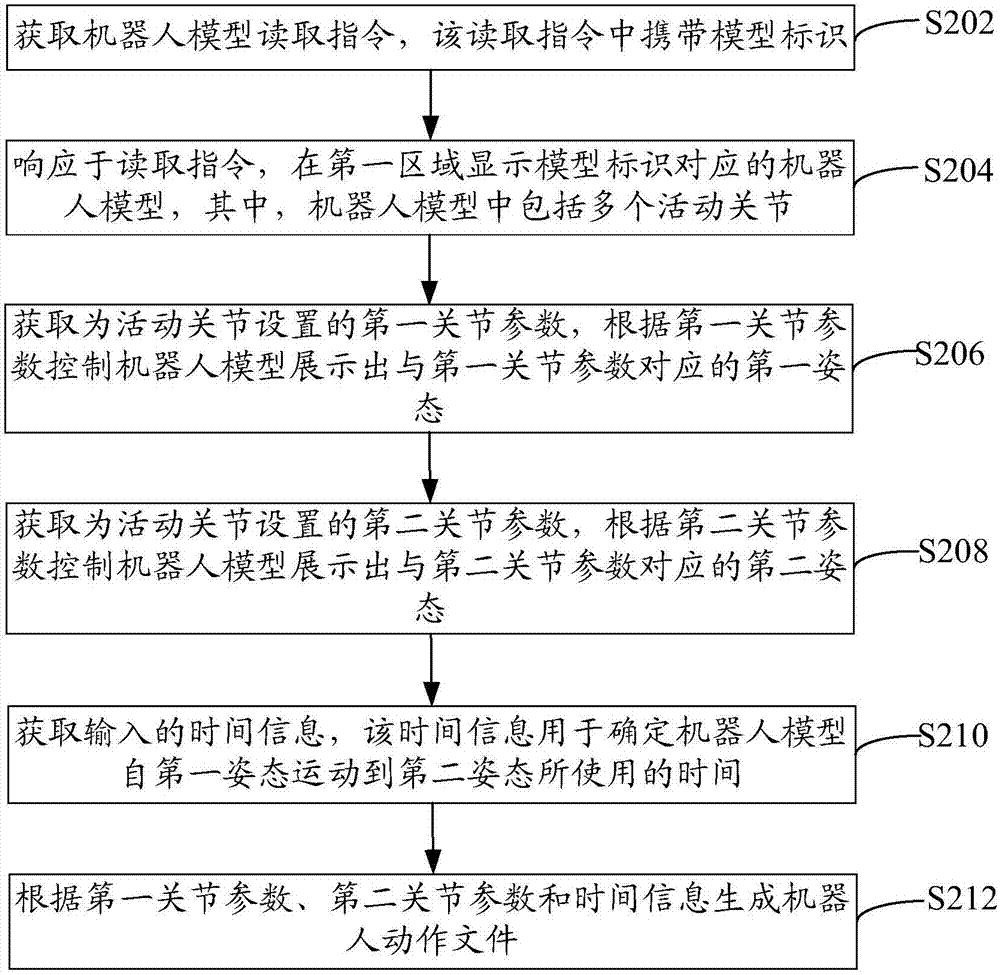

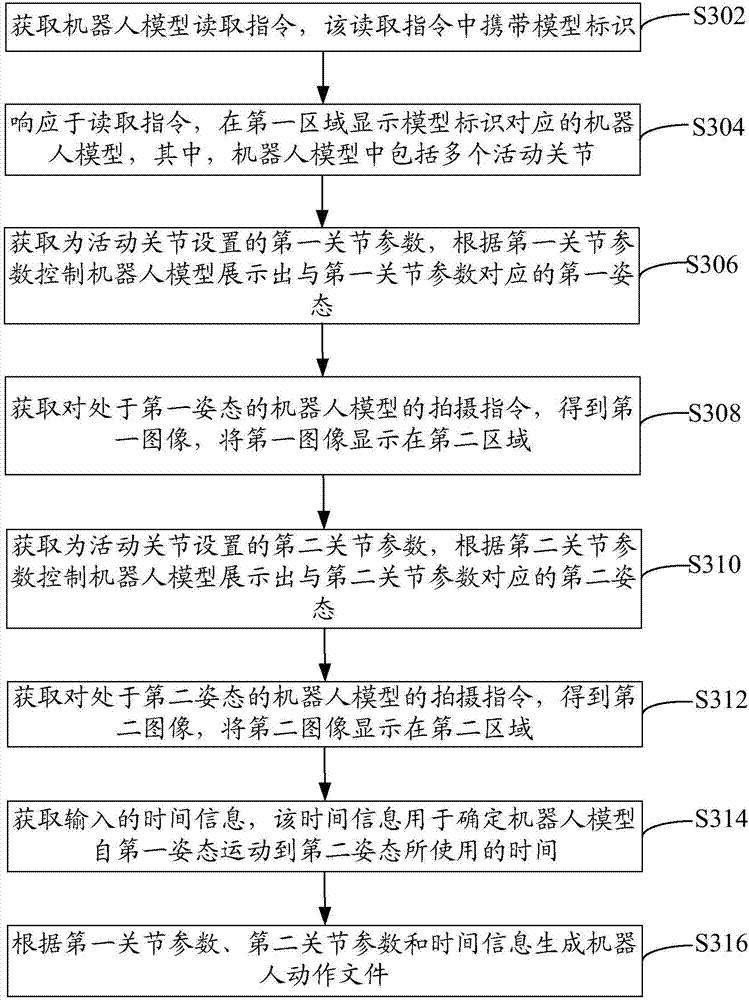

The invention relates to a robot action generation method comprising the following steps: a robot model read instruction is obtained, and the model identifier carried in it is read; in response to the read instruction, the robot model corresponding to the model identifier, which comprises a plurality of movable joints, is displayed in a first area; a first set of joint parameters for all the movable joints is obtained, and the displayed robot model takes the first posture corresponding to those parameters; a second set of joint parameters for all the movable joints is obtained, and the displayed robot model takes the corresponding second posture; input time information is obtained, namely the time the robot model takes to move from the first posture to the second posture; and a robot action file is generated from the first joint parameters, the second joint parameters and the time information. The method makes generating robot actions easier and more visual.

Owner:深圳泰坦创新科技有限公司
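
The last step of the robot action generation method above turns two joint-parameter sets and a transition time into an action file. The abstract does not say how the intermediate motion is represented; the sketch below assumes linear interpolation of every joint at a fixed sampling rate and a JSON keyframe layout, and `make_action_file`, `rate_hz` and the field names are hypothetical.

```python
import json
from typing import Dict


def make_action_file(first: Dict[str, float], second: Dict[str, float],
                     duration_s: float, rate_hz: float = 10.0) -> str:
    """Interpolate every movable joint from `first` to `second` (hypothetical format).

    first / second map joint names to angles in degrees and must list the same joints.
    Returns a JSON document with one frame per sampling tick, both postures included.
    """
    if first.keys() != second.keys():
        raise ValueError("both postures must define the same joints")
    steps = max(1, round(duration_s * rate_hz))
    frames = []
    for k in range(steps + 1):
        s = k / steps  # normalized time in [0, 1]
        frames.append({
            "t": round(k * duration_s / steps, 6),
            "joints": {name: first[name] + s * (second[name] - first[name])
                       for name in first},
        })
    return json.dumps({"duration_s": duration_s, "frames": frames})
```

Linear interpolation is the simplest choice; an implementation that wants smooth starts and stops could replace `s` with an easing curve without changing the file layout.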

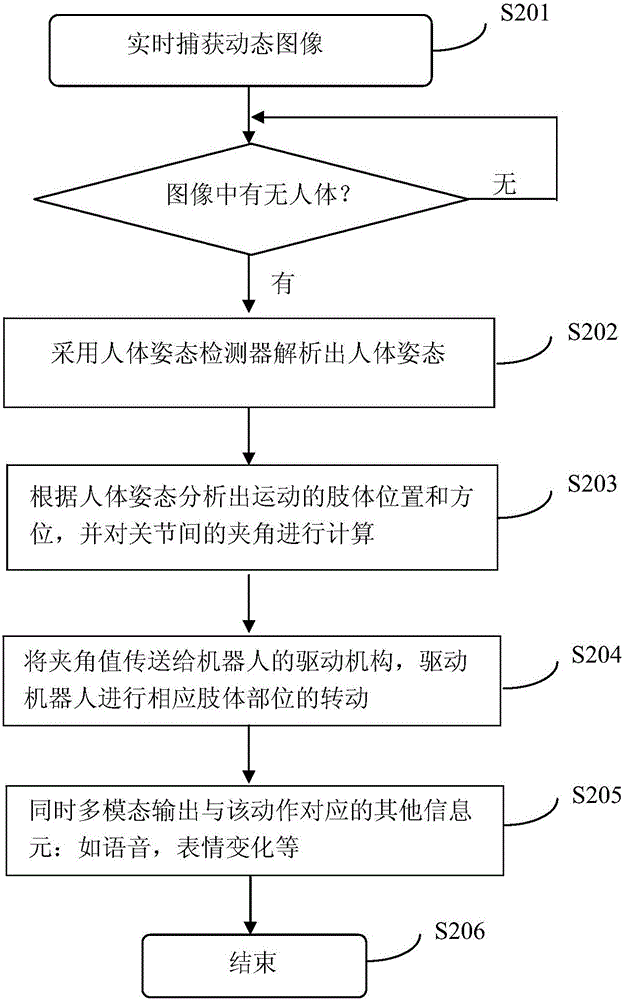

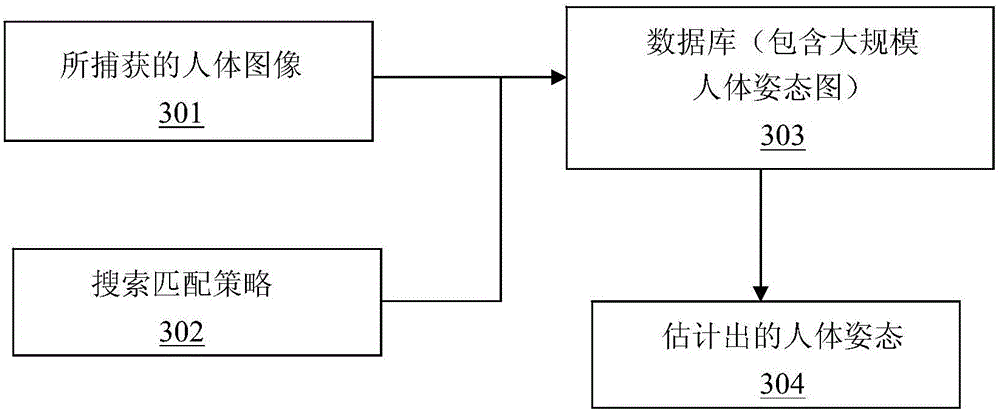

Method and system for data processing for robot action expression learning

Status: Active | Publication No.: CN105825268A | Tags: Interactive nature; Various forms of communication; Artificial life; Memory bank; Human–computer interaction

The invention provides a data processing method for robot action expression learning. The method comprises the following steps: a series of actions performed by a target over a period of time is captured and recorded; information sets associated with those actions, each composed of information elements, are recognized and recorded synchronously; the recorded actions and their associated information sets are sorted and stored in the robot's memory bank according to their correspondence; and when the robot receives an action output instruction, it retrieves the stored information set that matches the content to be expressed and performs the corresponding action, thereby imitating human action expression. Because action expressions are associated with other information related to language expression, the robot can, after simulation training, produce diverse outputs, communicate in richer and more human-like ways, and behave more intelligently.

Owner:BEIJING GUANGNIAN WUXIAN SCI & TECH

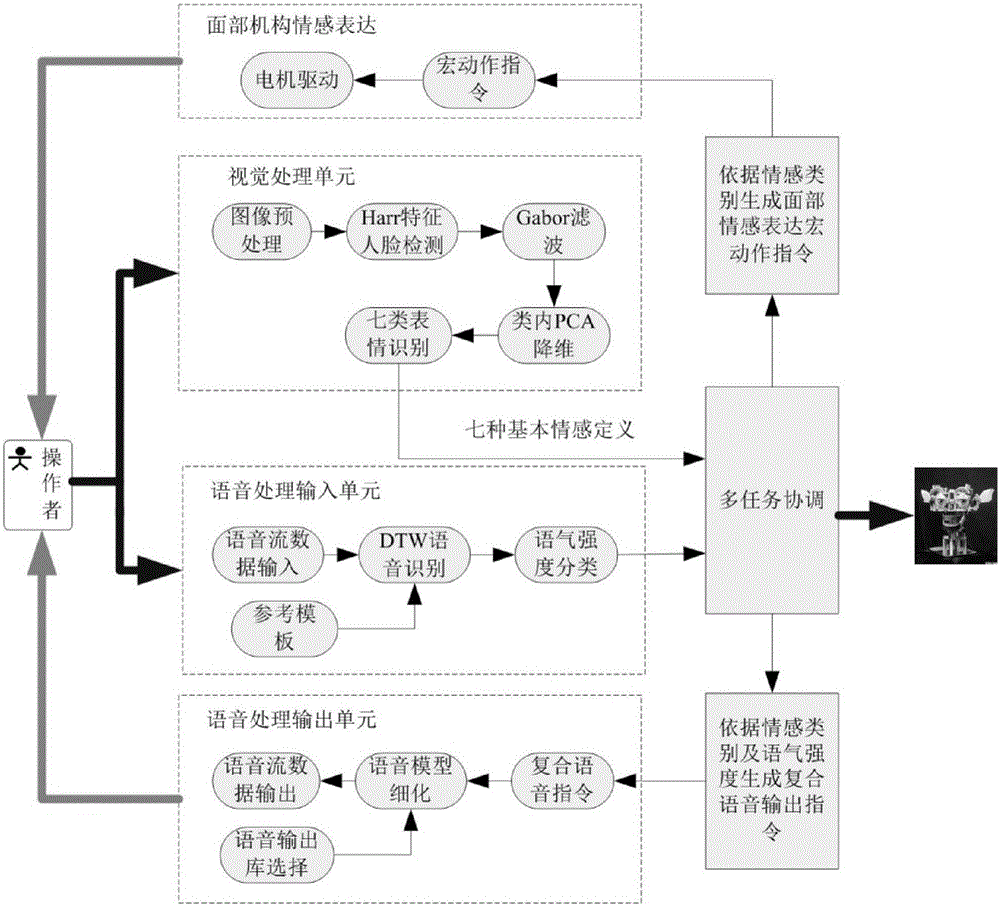

Facial expression robot multi-channel information emotion expression mapping method

Status: Inactive | Publication No.: CN105046238A | Tags: Improve intelligence; Simple method; Speech analysis; Character and pattern recognition; Expression library; Speech input

The present invention relates to a multi-channel information emotion expression mapping method for a facial expression robot, comprising the steps of: pre-building an expression library, an input speech reference model, an output speech reference model and a speech output library; acquiring a face image to be identified and recognizing its emotional expression by comparison with the expression library; acquiring a speech input and recognizing its voice expression by comparison with the input speech reference model; fusing the emotional expression and the voice expression into a composite expression instruction; selecting, according to the composite expression instruction and by comparison with the output speech reference model, the corresponding speech data for the robot to output; and assigning a macro action instruction to the composite expression instruction, according to which the robot performs the facial expression, so that the expression robot expresses emotion through multiple information channels. The method integrates visual expression analysis, speech signal processing and coordination of the expression robot's actions to reflect both visual expressions and speech emotions, giving the robot a relatively high level of intelligence.

Owner:HUAQIAO UNIVERSITY

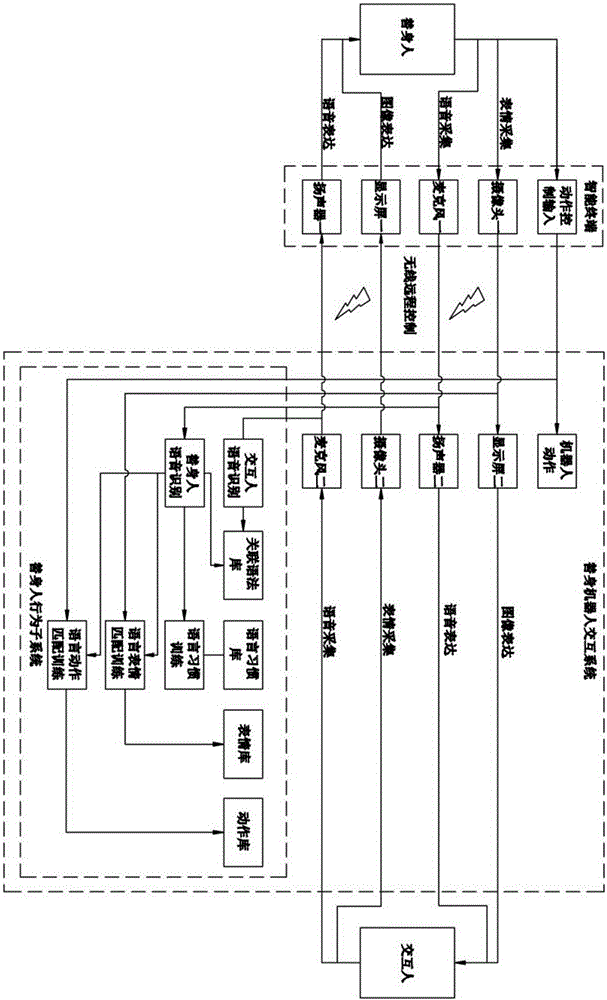

Behavior pattern learning system for augmentor

Status: Inactive | Publication No.: CN106547884A | Tags: High affinity; Programme-controlled manipulator; Speech recognition; Interaction systems; Control system

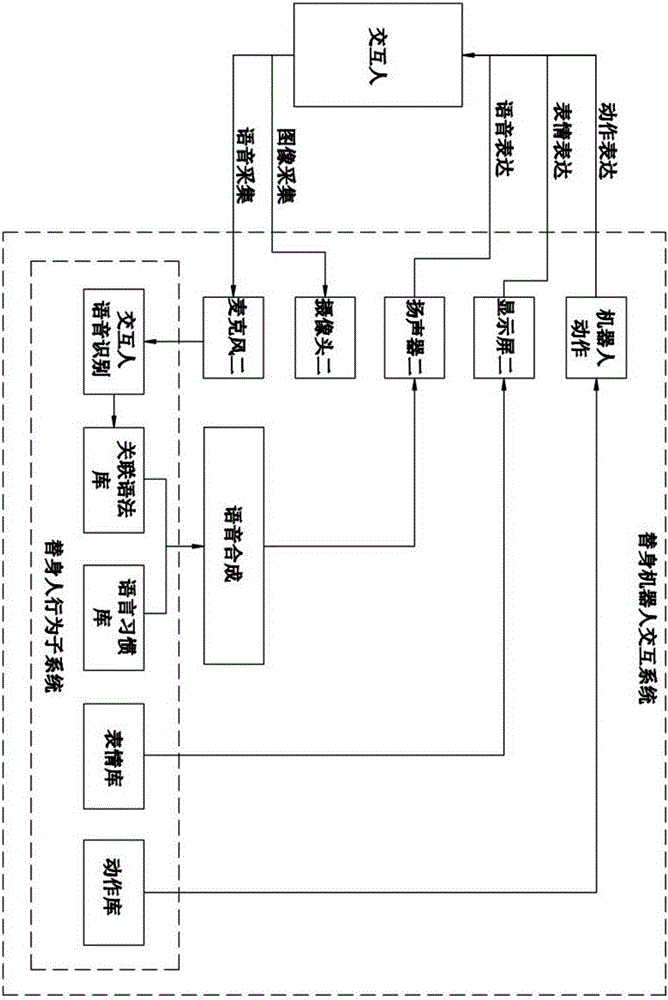

The invention discloses a behavior pattern learning system for an augmentor, in the technical field of robot control systems. An intelligent terminal comprises an action control input part, camera I, microphone I, display screen I and loudspeaker I; an augmentor interaction system comprises robot actions, display screen II, loudspeaker II, camera II and microphone II; and a substituted-person behavior subsystem comprises an interaction-person voice recognition part, an associated syntax database, a language habit library, an expression library, an action library, a substituted-person voice recognition part, a language habit training part, a language-expression matching training part and a language-action matching training part. While the substituted person uses the system, the robot performs long-term deep learning, through neural networks and similar learning algorithms, of the action habits, language habits, expression habits and other traits that characterize that person's behavior, so that when the substituted person is offline the robot can still express his or her behavioral characteristics, improving the affinity of the augmentor as a personified substitute.

Owner:深圳量旌科技有限公司

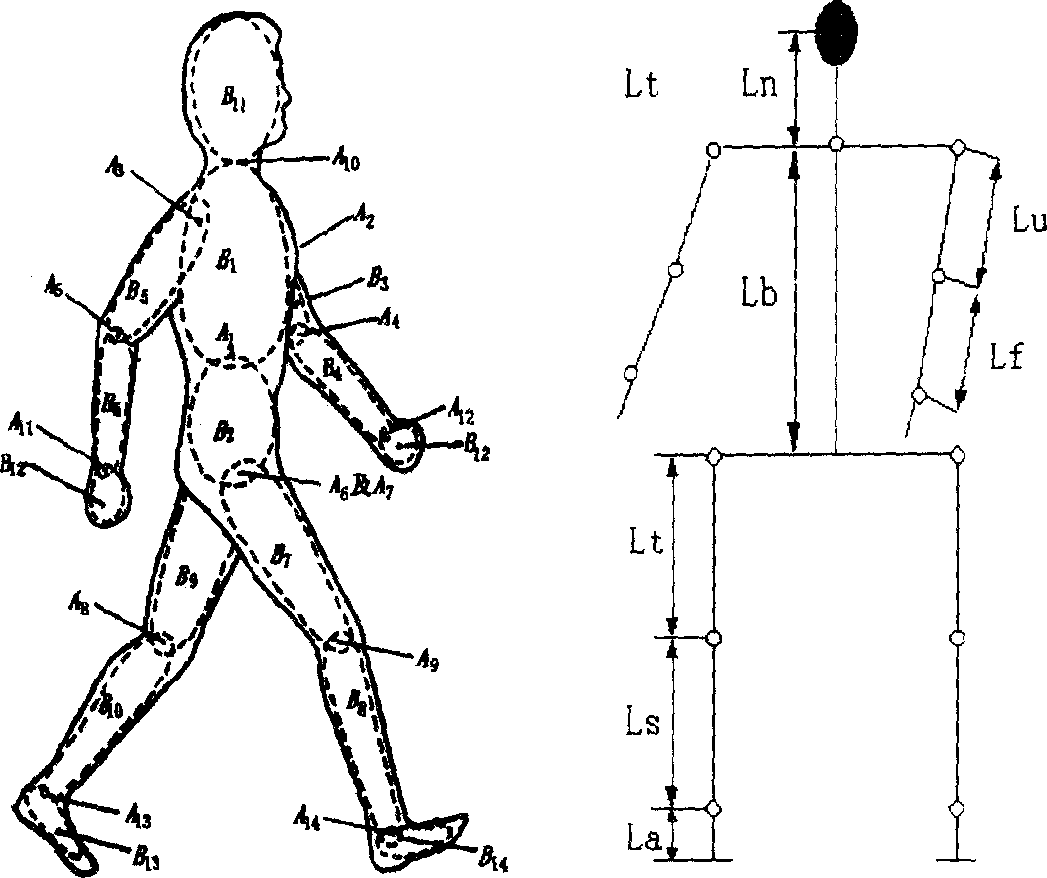

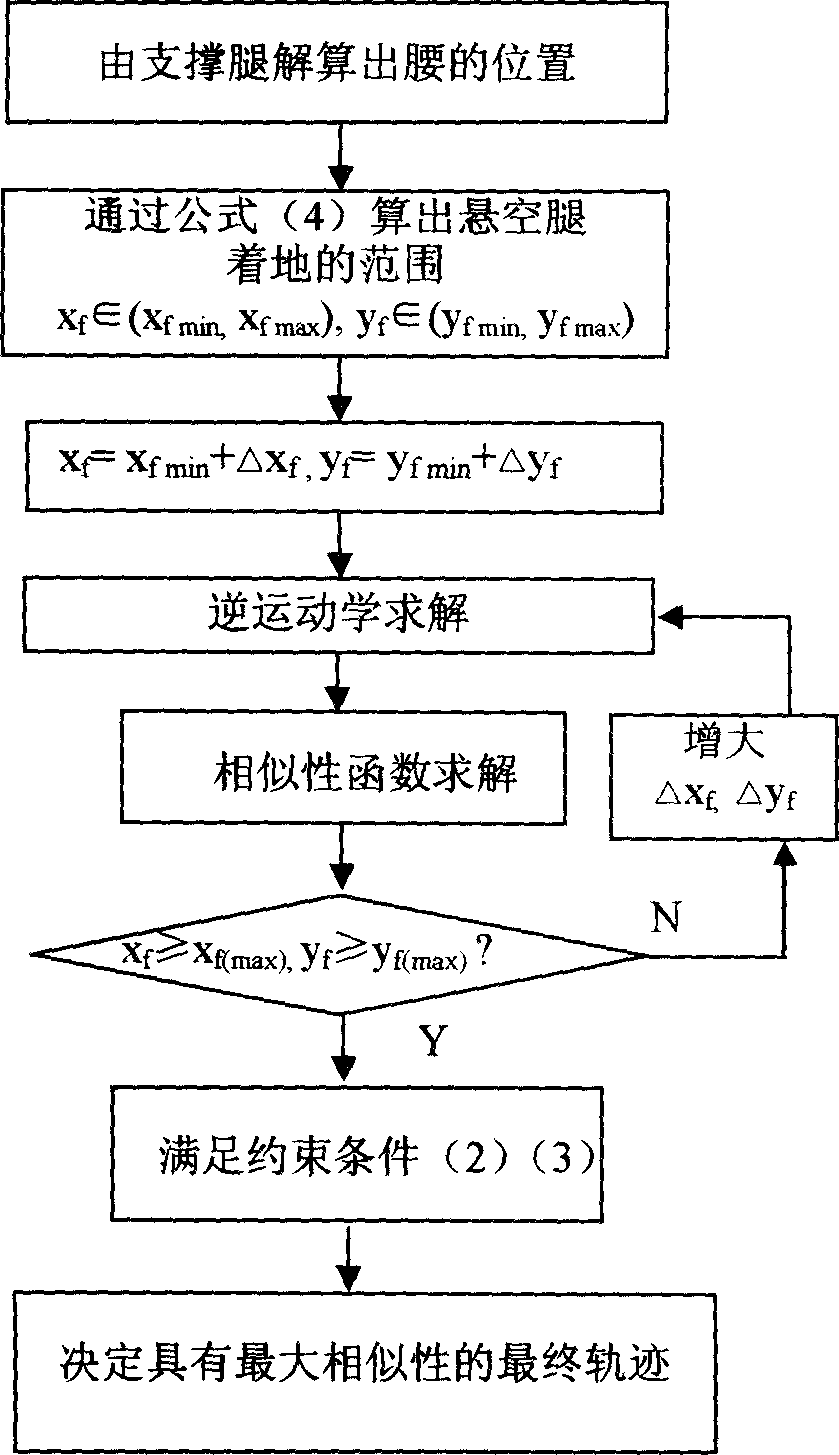

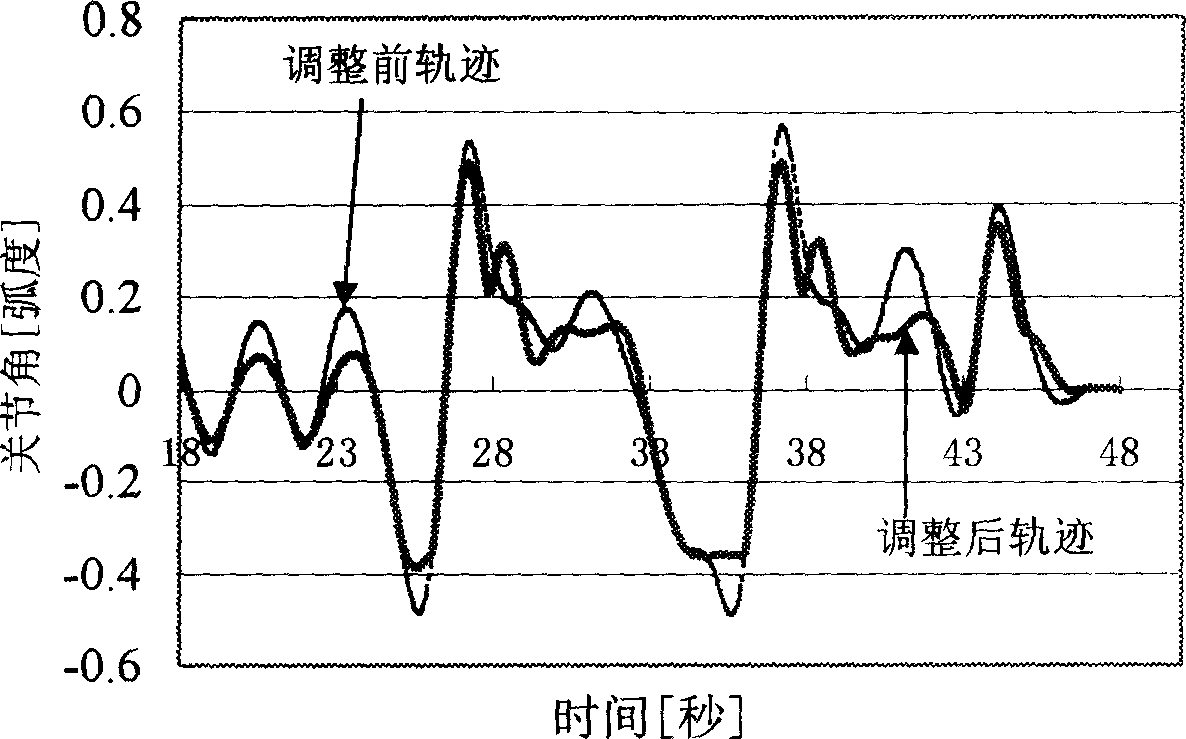

Human imitation robot action similarity evaluation based on human body motion track

Status: Inactive | Publication No.: CN1830635A | Tags: High similarity imitation; Easy to implement; Programme-controlled manipulator; Pattern recognition; Human body

A method for evaluating the action similarity of a humanoid robot based on human body motion trajectories comprises four parts: extraction of human motion data, kinematic matching, dynamic matching, and experiments. An action similarity evaluation function is disclosed for the calculations in kinematic matching and dynamic matching.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

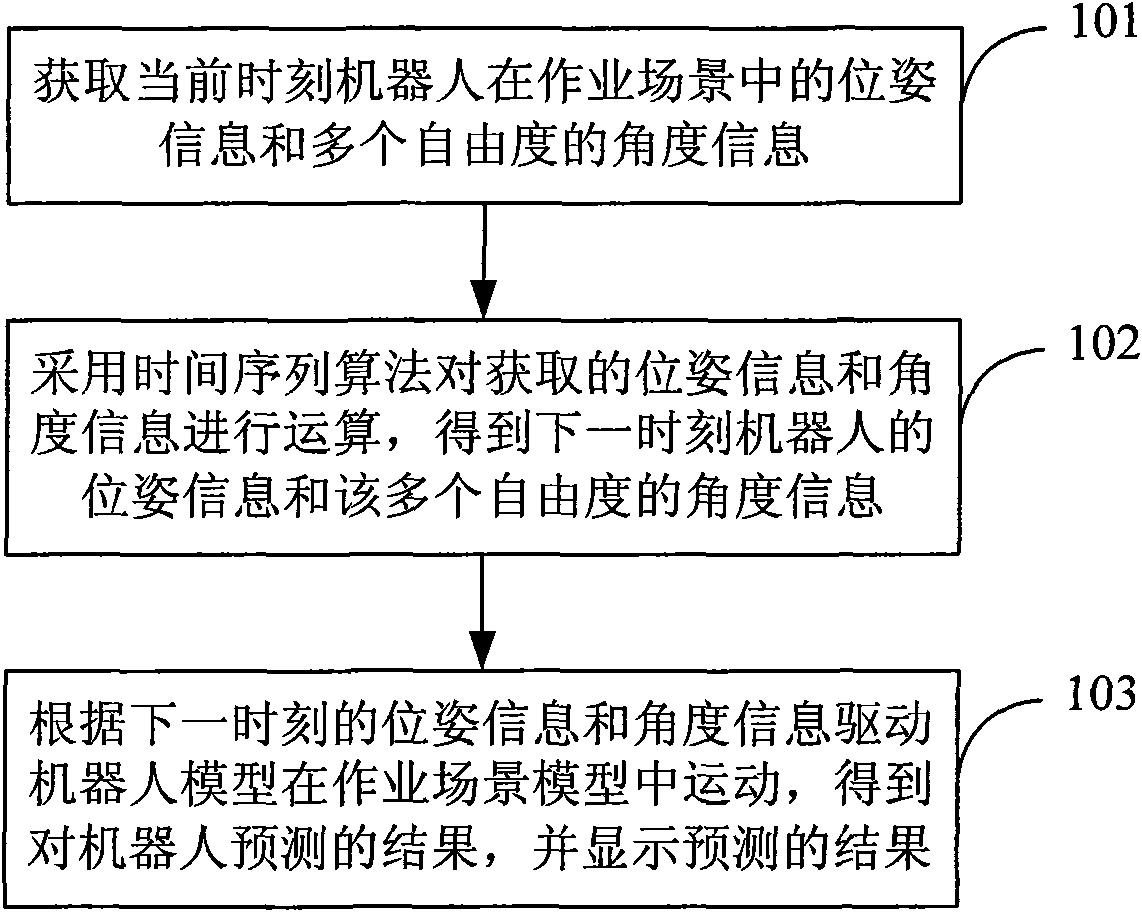

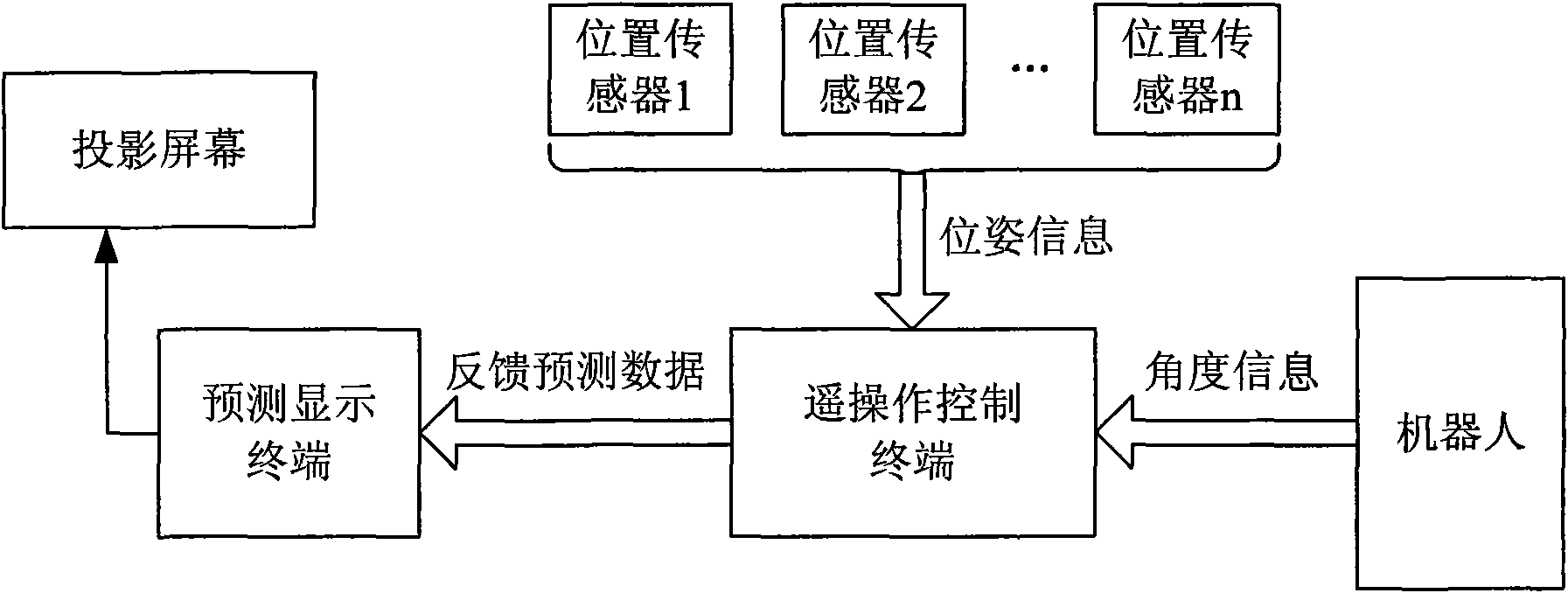

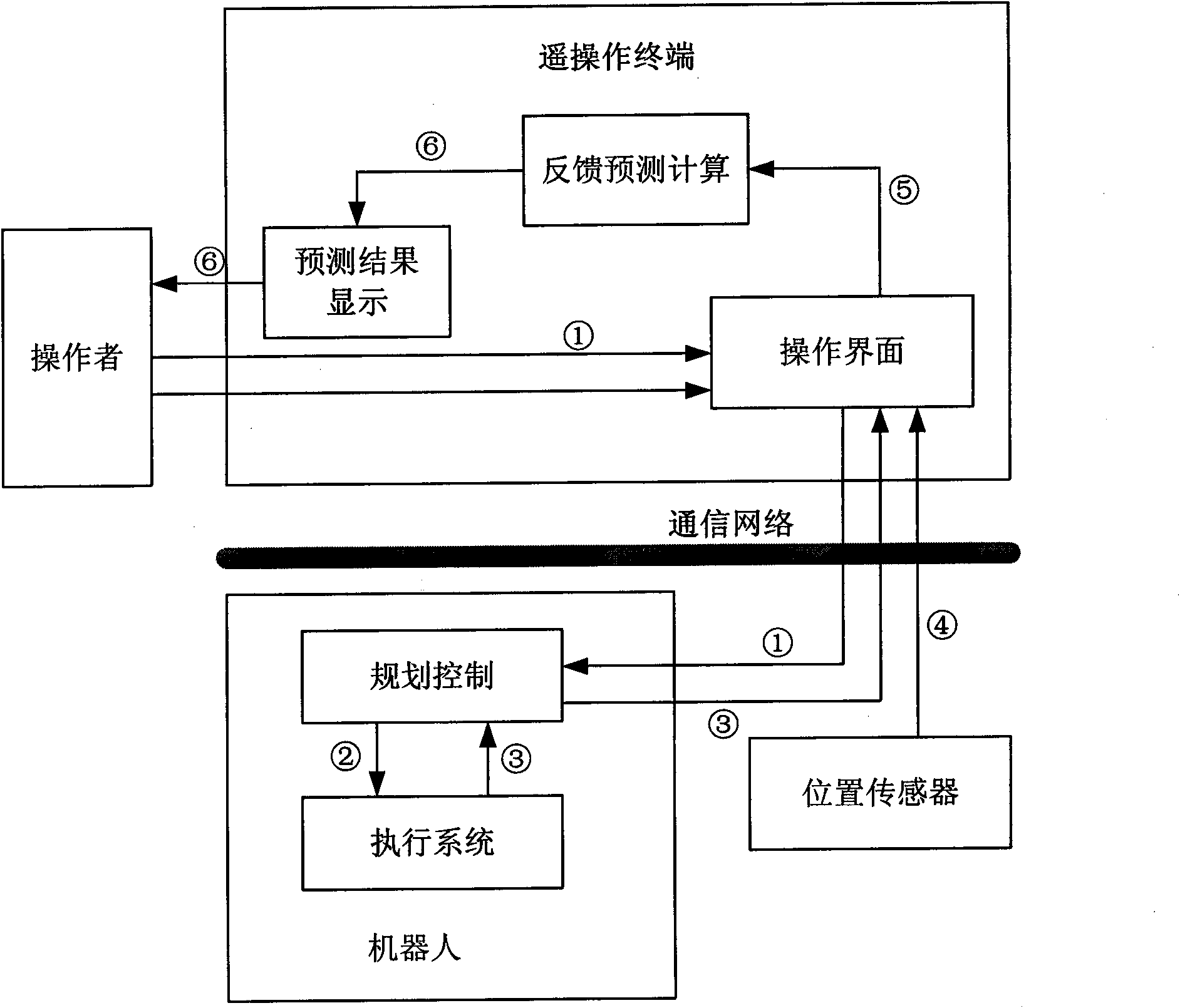

Robot predicting method and system

Status: Inactive | Publication No.: CN101587329A | Tags: Realize full viewing angle; Change the viewing angle at will; Simulator control; Simulation; Visual angle

The invention discloses a robot prediction method and system in the field of robotics. The method includes: obtaining the current pose information of the robot in the operation scene and the angle information of its multiple degrees of freedom; processing the obtained pose and angle information with a time queue algorithm to obtain the pose and multi-degree-of-freedom angle information of the robot at the next time step; and driving the robot model in the operation scene model according to the next-step pose and angle information to obtain and display the prediction result. The system comprises an obtaining module, a calculating module and a prediction display module. With the invention, operators can assist in operating the robot or correctly control its actions according to the predicted situation; the scene can be observed from any viewing angle, control is flexible, and the system is convenient to use.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
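
The robot prediction abstract above does not define its "time queue" algorithm. As a stand-in, the sketch below keeps a fixed-length queue of timestamped joint readings and extrapolates each degree of freedom to the next time step with a least-squares straight-line fit; the queue length, the linear model and the `PosePredictor` class are assumptions, not the patented algorithm.

```python
from collections import deque
from typing import Deque, List, Tuple


class PosePredictor:
    """Keeps the latest (time, joint_angles) samples and extrapolates them (assumed model)."""

    def __init__(self, maxlen: int = 5):
        # Oldest samples drop out automatically once the queue is full.
        self.history: Deque[Tuple[float, List[float]]] = deque(maxlen=maxlen)

    def add(self, t: float, angles: List[float]) -> None:
        self.history.append((t, list(angles)))

    def predict(self, t_next: float) -> List[float]:
        if len(self.history) < 2:
            raise ValueError("need at least two samples")
        times = [t for t, _ in self.history]
        t_mean = sum(times) / len(times)
        denom = sum((t - t_mean) ** 2 for t in times)
        if denom == 0:
            raise ValueError("samples need distinct timestamps")
        prediction = []
        for dof in range(len(self.history[0][1])):
            qs = [angles[dof] for _, angles in self.history]
            q_mean = sum(qs) / len(qs)
            # Least-squares slope of angle versus time for this degree of freedom.
            slope = sum((t - t_mean) * (q - q_mean) for t, q in zip(times, qs)) / denom
            prediction.append(q_mean + slope * (t_next - t_mean))
        return prediction
```

The predicted angles would then drive the robot model in the scene model, as in the abstract's display step; a longer queue smooths sensor noise at the cost of reacting more slowly to changes in speed.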

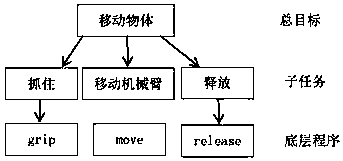

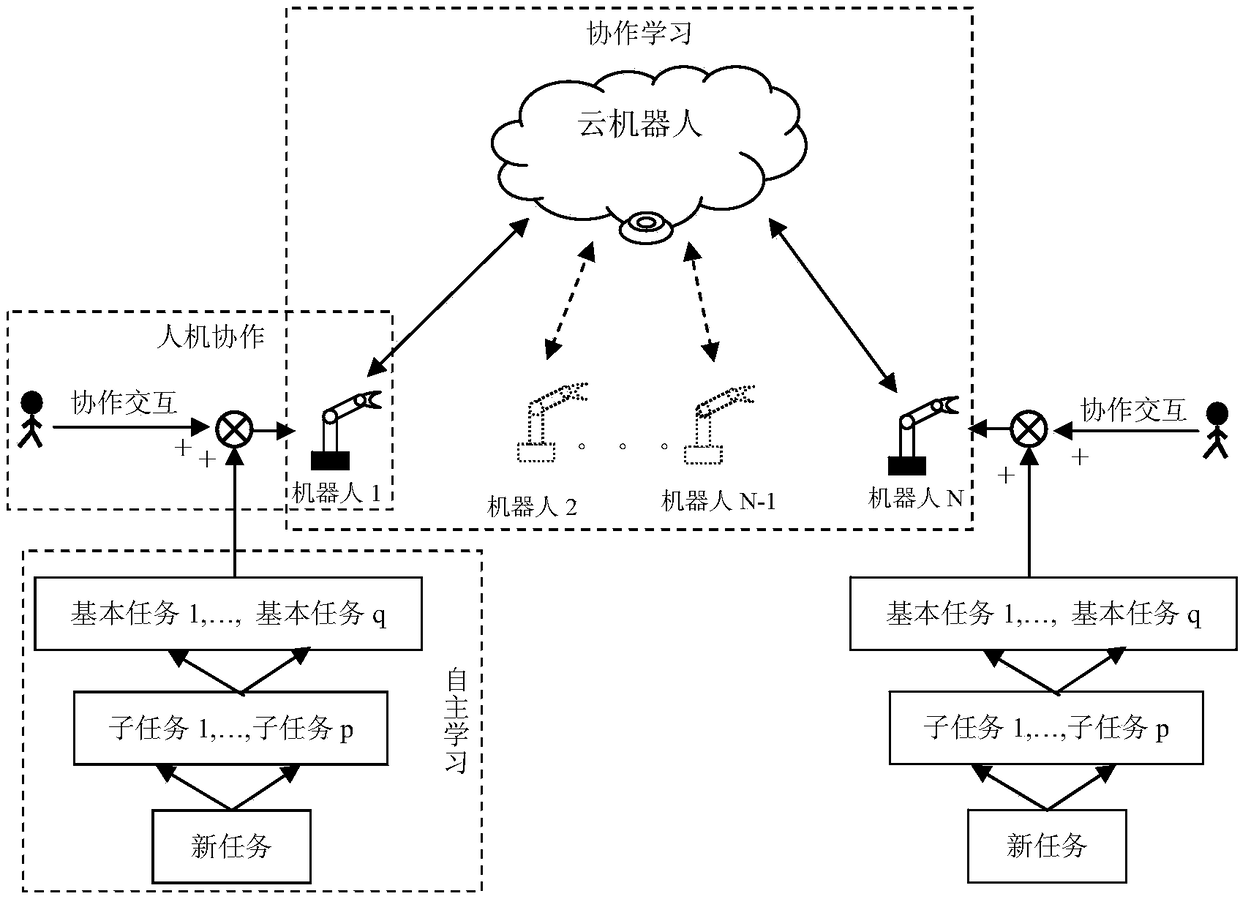

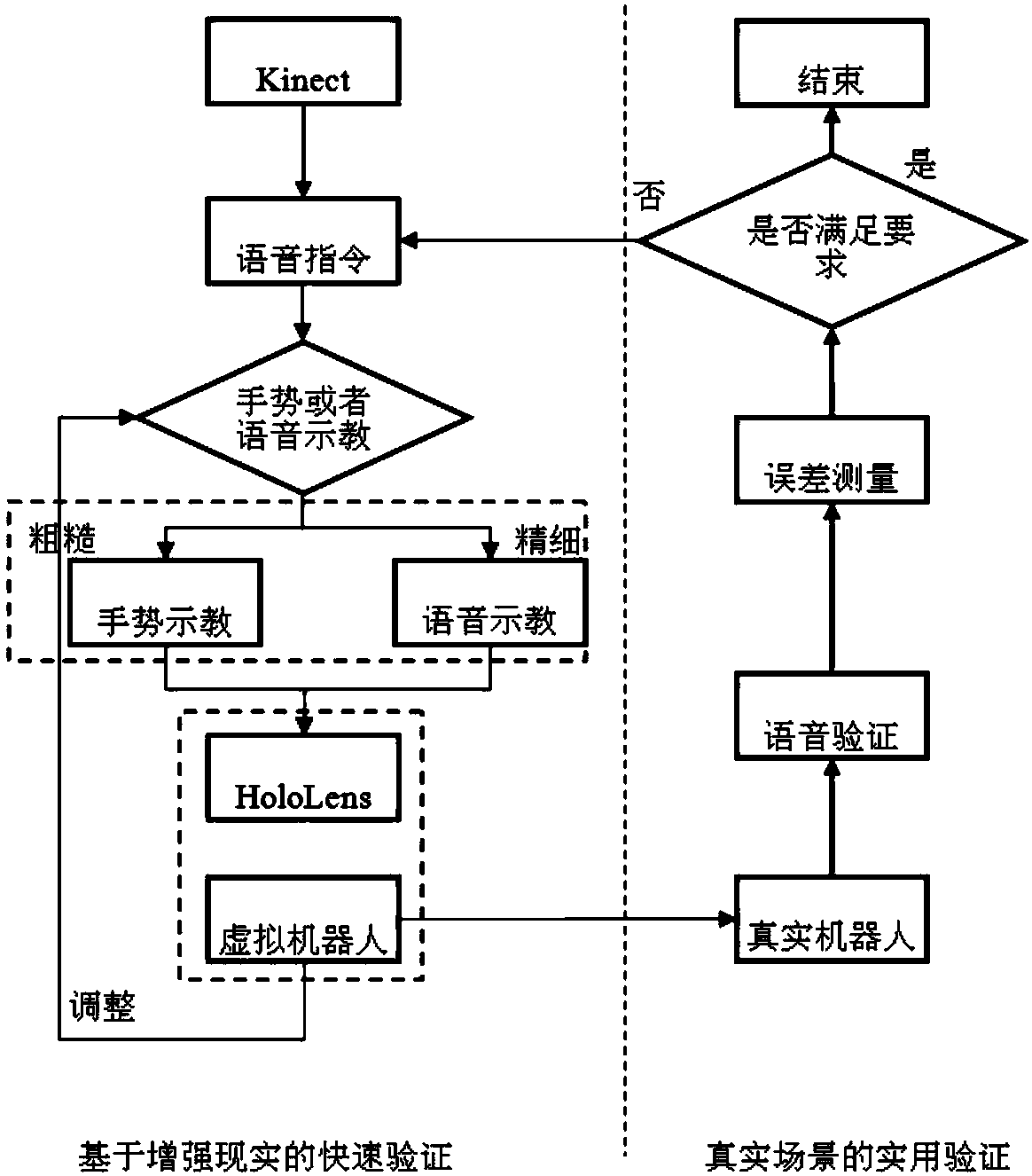

Cloud robot collaborative learning method based on hybrid enhanced intelligence

The invention provides a cloud robot collaborative learning method based on hybrid enhanced intelligence. The method comprises the following steps: S1, using the meta-learning method NTP (Neural Task Programming) to recursively decompose a large task into simple sub-tasks for teaching robots; S2, realizing imitation learning of robot actions based on human-computer interaction technology to teach the robots skills; S3, using a self-organizing incremental neural network (SOINN) to gather the scattered robot skills for shared use. In this method, multiple robots learn human skills separately, upload what they have learned to a server, and share it there for training and adjustment. This not only greatly shortens the learning time but also broadens the diversity of tasks. The robots learn collaboratively and pass experience to one another through a network (or cloud robot); that is, the robots can learn from one another.

Owner:SOUTH CHINA UNIV OF TECH

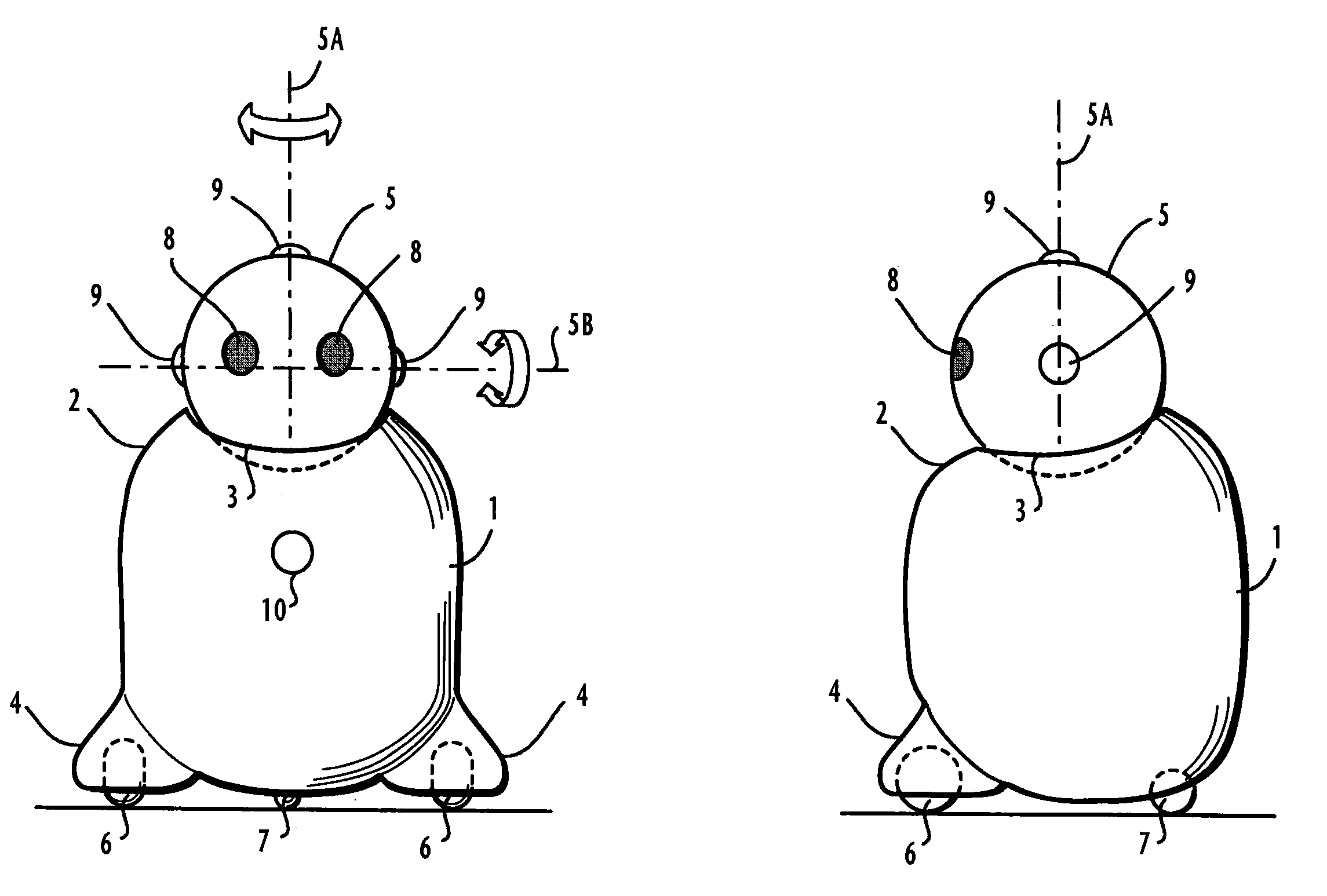

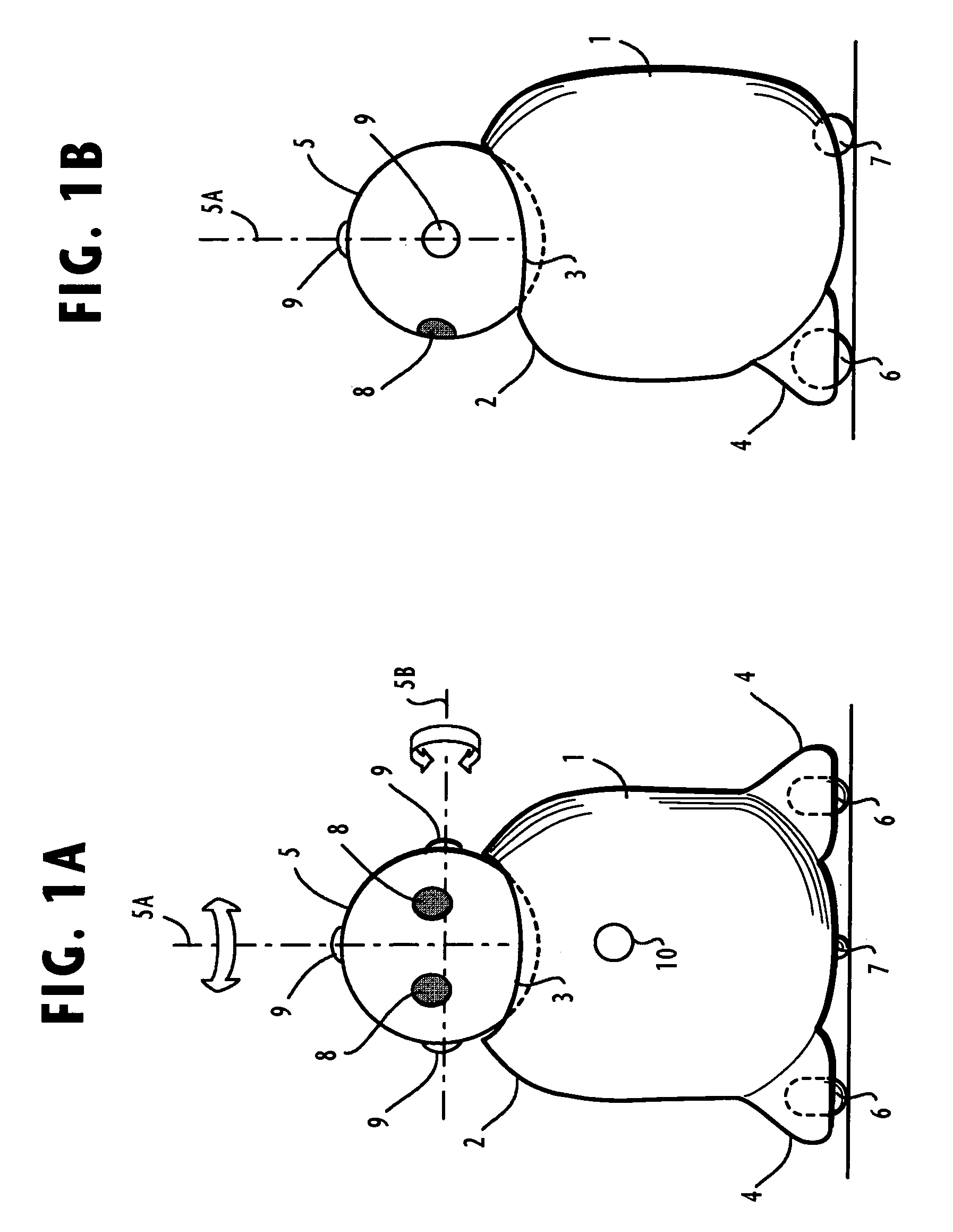

Child-care robot and a method of controlling the robot

Status: Active | Publication No.: US8376803B2 | Tags: Reduce the burden on; Reduce expenditure; Computerized toys; Diagnostic recording/measuring; Engineering; Speech sound

A child-care robot for use in a nursery school associates child behavior patterns with corresponding robot action patterns, and acquires a child behavior pattern when a child behaves in a specified pattern. The robot selects one of the robot action patterns, which is associated with the acquired child behavior pattern, and performs the selected robot action pattern. Additionally, the robot associates child identifiers with parent identifiers, and receives an inquiry message from a remote terminal indicating a parent identifier. The robot detects one of the child identifiers, which is associated with the parent identifier of the inquiry message, acquires an image or a voice of a child identified by the detected child identifier, and transmits the acquired image or voice to the remote terminal. The robot further moves in search of a child, measures the temperature of the child, and associates the temperature with the time of day at which it was measured.

Owner:NEC CORP

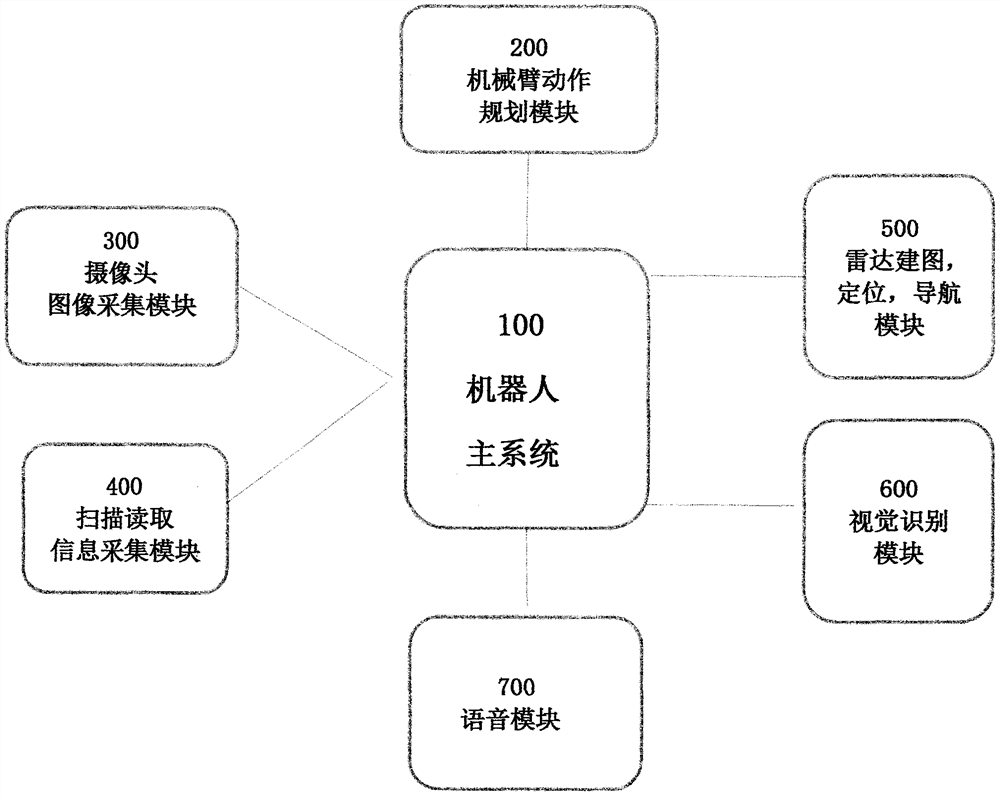

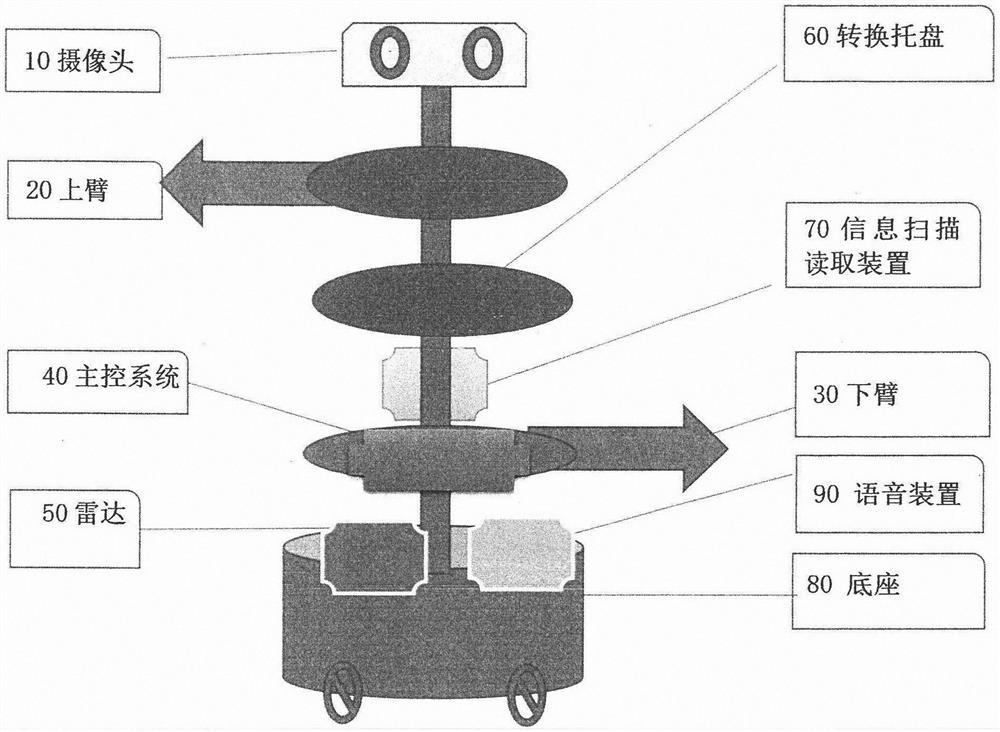

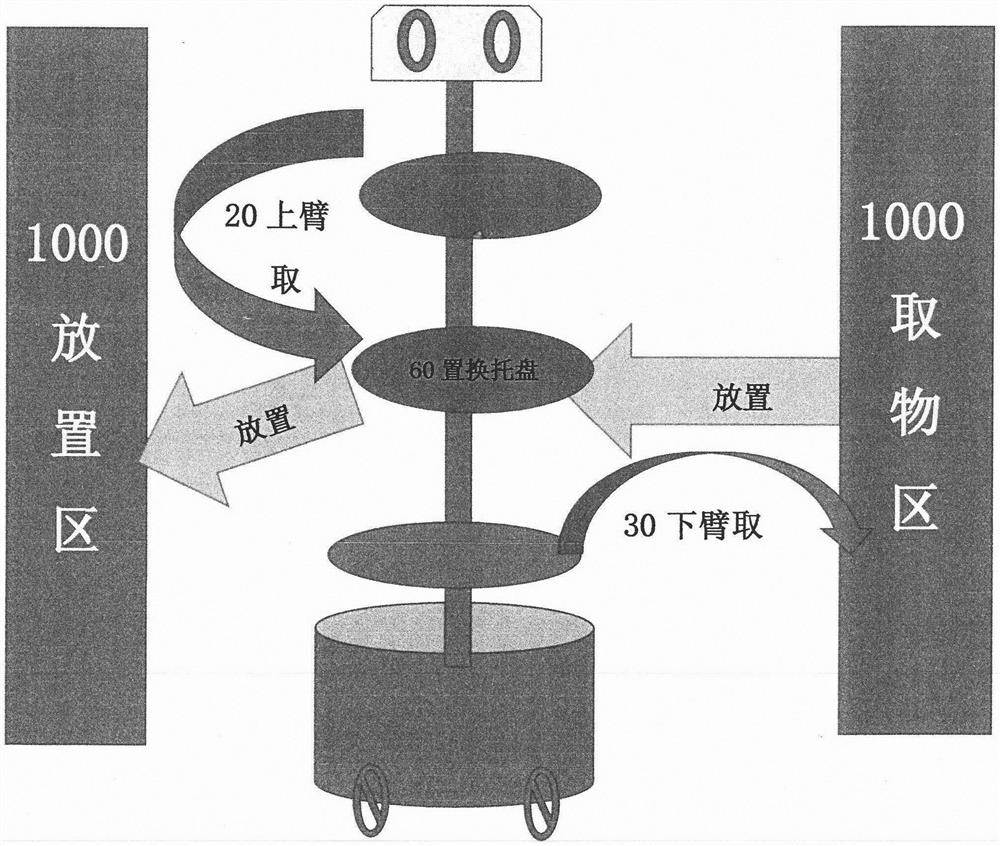

Multi-mode comprehensive information recognition mobile double-arm robot device, system and method

Status: Pending | Publication No.: CN111906785A | Tags: Fast training; Accurate identification; Programme-controlled manipulator; Robotic systems; Mobile navigation

The invention provides a multi-mode comprehensive information recognition mobile double-arm robot device, system and method. A robot action planning equipment platform is realized using artificial-intelligence multi-scene robot recognition, multi-mode recognition, voice recognition, and positioning and navigation technologies. By applying artificial intelligence and robot technology together with the robot node communication principle, the device, system and method realize voice acquisition, voice interaction, voice commands, voice queries, remote and autonomous control, autonomous placement, code-scanning queries, scanning and reading of biological information, multi-scene identification of articles and personnel, article and equipment management, radar-based dual-precision positioning, and autonomous mobile navigation. A double-arm robot device integrating the sorting, counting and placing of articles is provided, and the robot system is connected to a personnel management system and an article management system. The invention improves voice interaction, accurate positioning, autonomous positioning and navigation, and autonomous sorting, counting and placing of articles, and the method, system and device are widely applicable in schools, businesses, factories, warehouses and medical settings.

Owner:谈斯聪

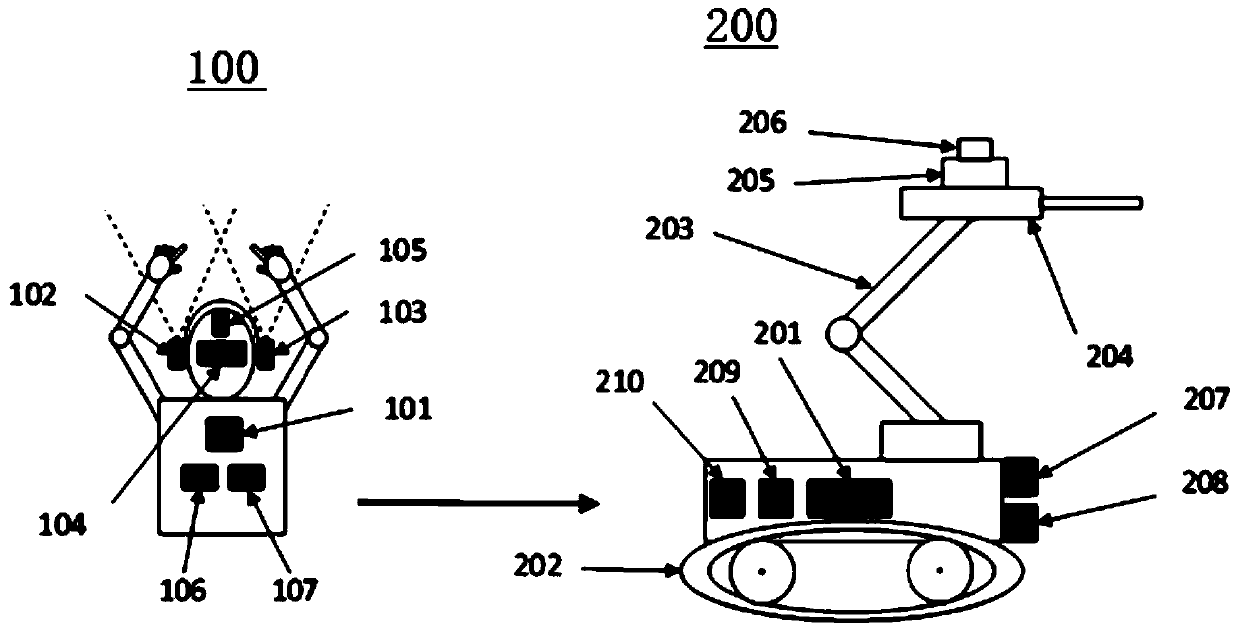

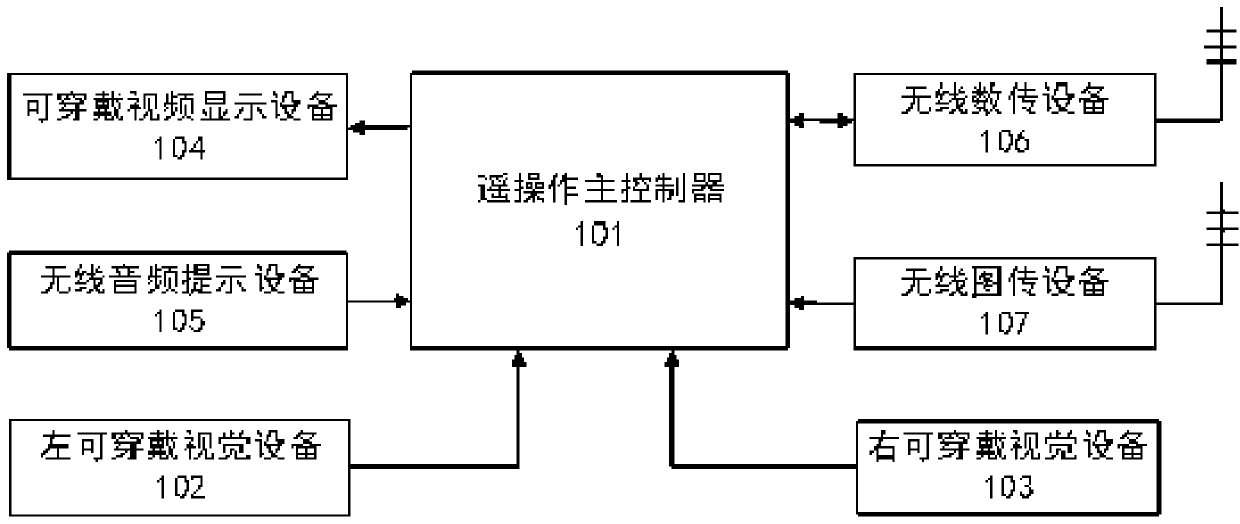

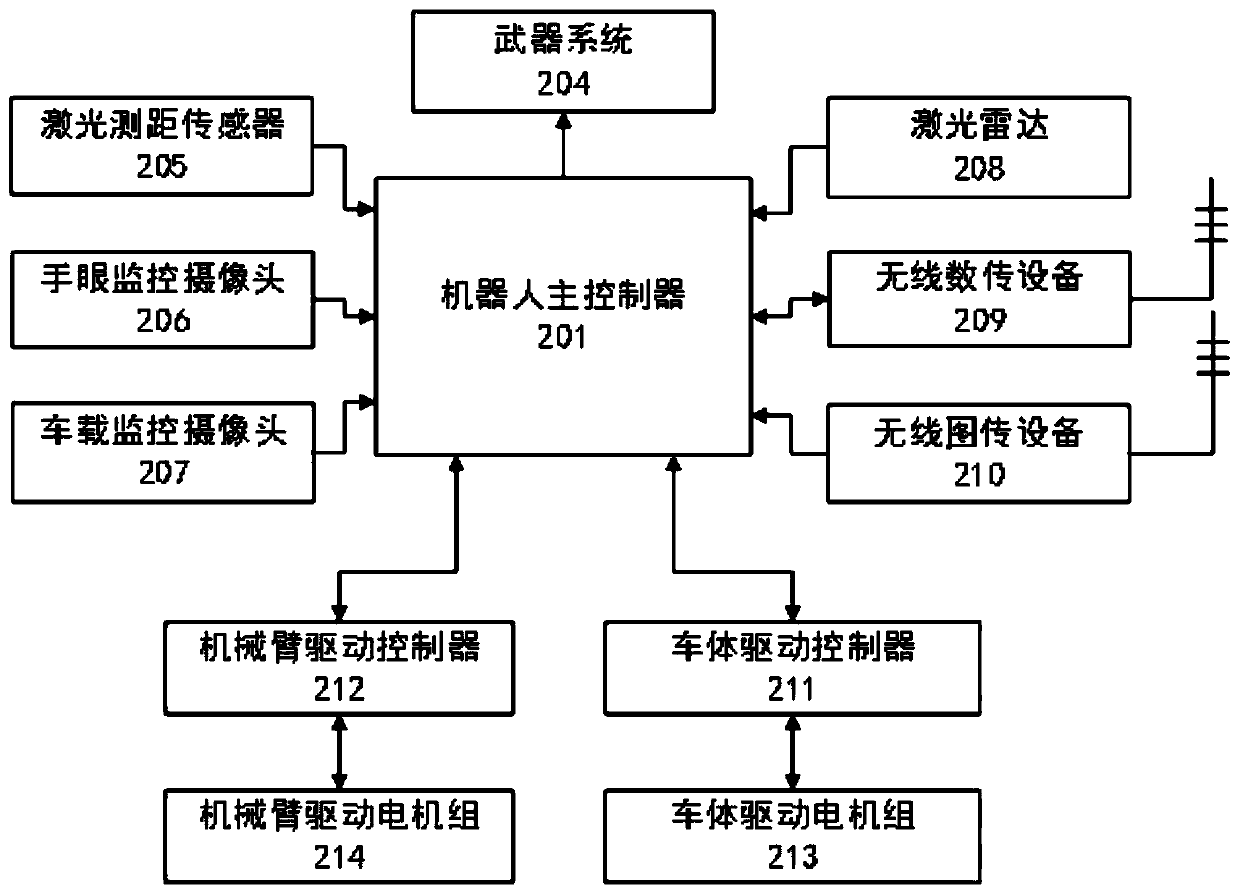

Robot remote control system based on wearable equipment and control methods

Status: Active | Publication No.: CN110039545A | Tags: Improve intuitiveness; Simple structure; Programme-controlled manipulator; Control system; Remote control

The invention provides a robot remote control system based on wearable equipment, together with control methods. The wearable equipment makes control more intuitive and frees both hands of the robot operator, and two control methods built on the system achieve remote control of a slave-end robot. In one method, the operator's left and right hands control different action parts of the slave-end robot, which increases the accuracy with which the slave-end robot executes commanded actions. In the other method, a connecting hand pose is defined so that the operator no longer depends on a physical controller. Compared with discrete control of robot actions through gesture types alone, this allows incremental, continuous and precise control of the end position of a reconnaissance system.

Owner:山科华智(山东)机器人智能科技有限责任公司
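
One plausible reading of the "connecting hand pose" in the wearable-control abstract above is a clutch: while the operator holds it, the hand's displacement is added incrementally to the end-effector target, and while it is released the hand can be repositioned without moving the robot. The sketch below implements that reading; the interpretation, the `scale` factor and the class name are assumptions.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class ClutchedIncrementalControl:
    """Maps wearable hand positions to end-effector targets (illustrative sketch)."""

    def __init__(self, start_target: Vec3, scale: float = 1.0):
        self.target = start_target   # current end-effector target in the robot frame
        self.scale = scale           # hand-to-robot motion scaling (assumed)
        self._last_hand: Optional[Vec3] = None

    def update(self, hand: Vec3, clutch_engaged: bool) -> Vec3:
        if clutch_engaged and self._last_hand is not None:
            # Add the hand's displacement since the previous engaged sample.
            self.target = tuple(
                p + self.scale * (h - h0)
                for p, h, h0 in zip(self.target, hand, self._last_hand))
        # Forget the reference while disengaged so re-engaging causes no jump.
        self._last_hand = hand if clutch_engaged else None
        return self.target
```

Because only displacements are used, the operator can release the pose, move the hand back to a comfortable position and continue, which is what makes continuous incremental control possible over a workspace larger than the arm's reach.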

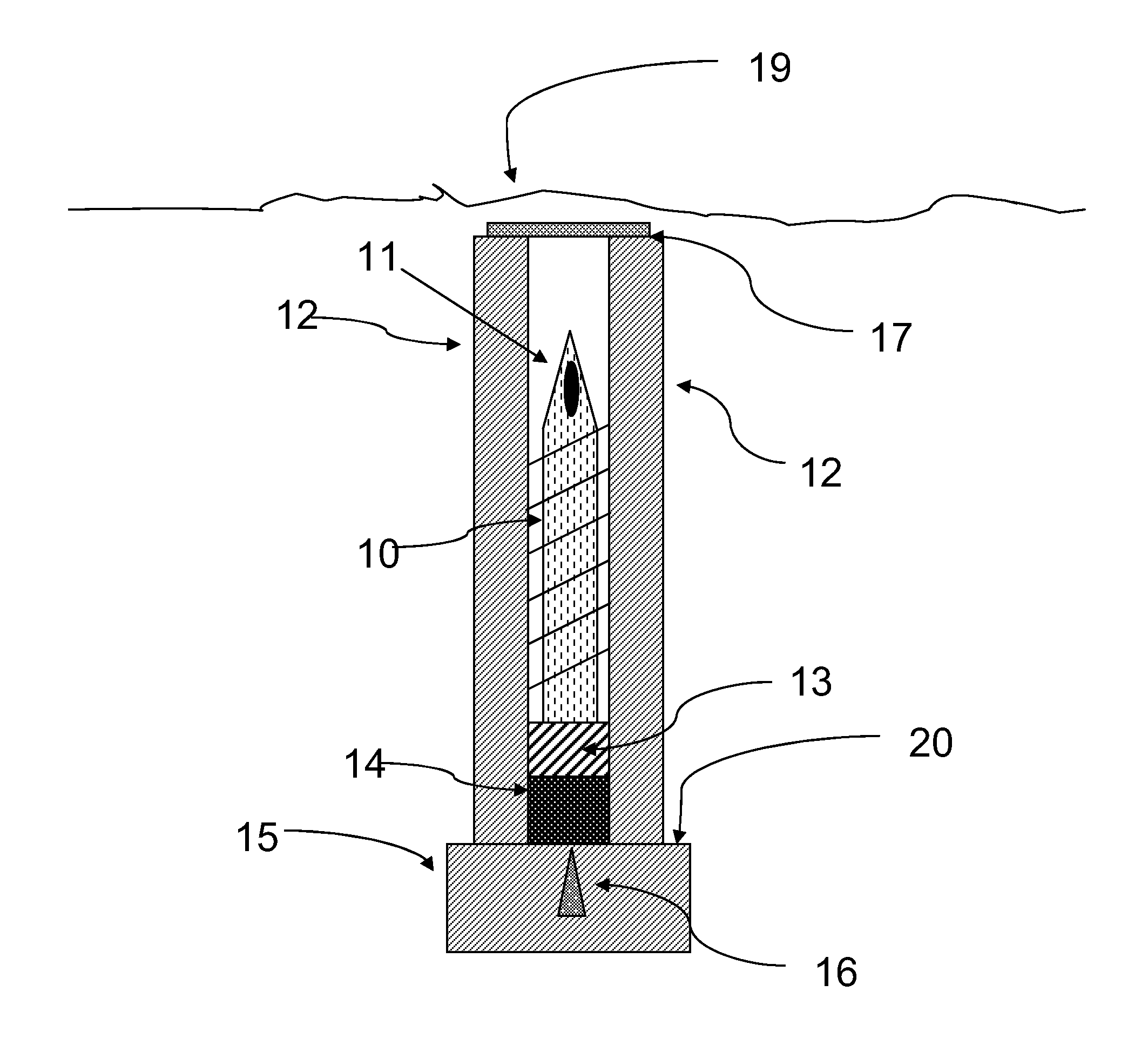

Area Denial Device

Status: Inactive | Publication No.: US20130206028A1 | Tags: Increase cost; Significant injury can be avoided; Stamps; Thrusting weapons; Engineering; Robot action

A propelled lance provides a wounding non-lethal anti-insurgent action when stepped on by piercing an enemy insurgent's foot with a lance penetrator. The lance penetrator lodges in the foot and further penetration is impeded by a stop plate. The lance penetrator may insert an RFID or other identifiable device or other payload into the insurgent. The lance penetrator provides anti-personnel, anti-vehicle and anti-robot action.

Owner:AREA DENIAL SYST

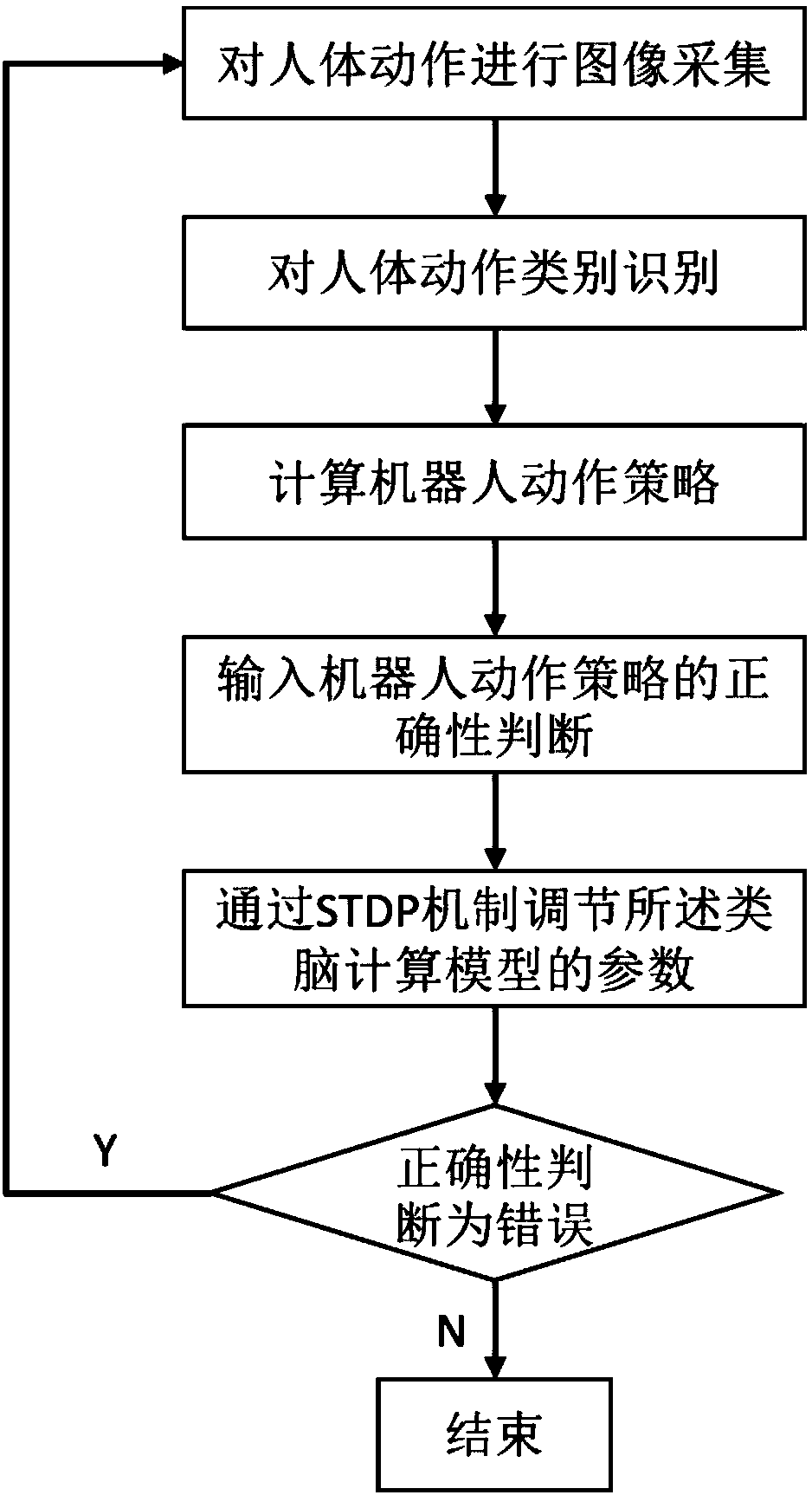

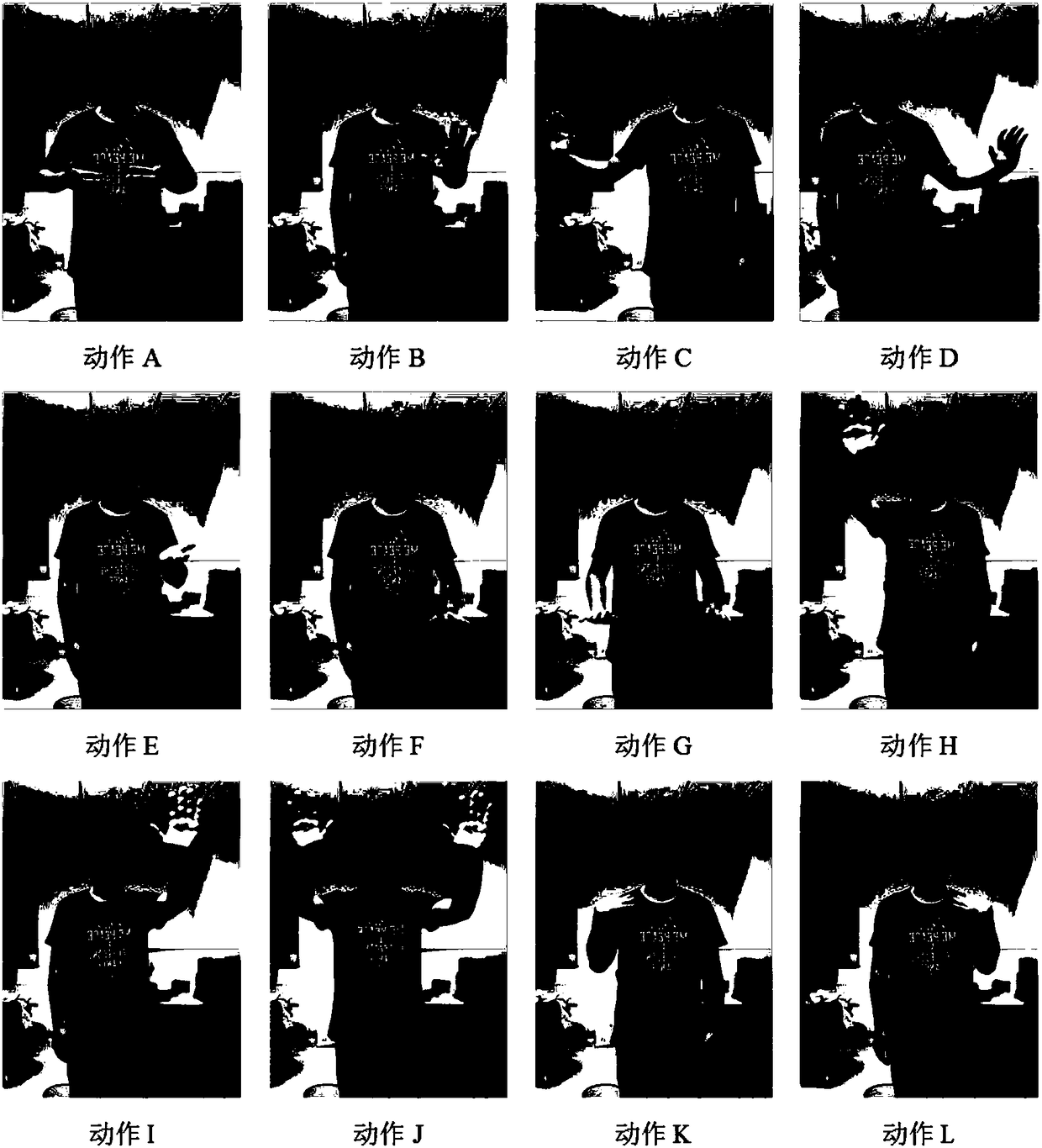

Human action intention recognition training method based on cooperative computation of multiple brain areas

Status: Active | Publication No.: CN108304767A | Tags: Enables cognitive function modeling; Perform cognitive tasks; Input/output for user-computer interaction; Physical realisation; Category recognition; Human body

The invention belongs to the field of cognitive neuroscience and specifically relates to a human action intention recognition training method based on cooperative computation of multiple brain areas. The method comprises the steps of: 1, collecting images of a human body action; 2, obtaining human body joint information and recognizing the category of the action; 3, calculating a robot action strategy from the category of the action performed by the human, using cooperative computation of multiple brain areas based on a brain-like computing model; 4, inputting a correctness judgment for the robot action strategy calculated in step 3; 5, adjusting the parameters of the brain-like computing model through an STDP (spike-timing-dependent plasticity) mechanism based on the correctness judgment input in step 4; and 6, if the correctness judgment input in step 4 shows that the robot action strategy is wrong, returning to step 1 for repeated training until the judgment shows that the strategy is correct. The method overcomes the inflexibility of traditional human-computer interaction technology, in which programming and similar work must be done in advance, and improves the user experience.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
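
Step 5 of the CAS method tunes the brain-like model through STDP. The textbook pair-based STDP rule strengthens a synapse when the presynaptic spike precedes the postsynaptic one and weakens it otherwise, with a magnitude that decays exponentially with the spike-time gap. The sketch below implements that standard rule and, as an assumption, flips its sign when the user judges the strategy wrong (a simple reward-modulated variant); the constants are illustrative and the patent's exact update is not given in the abstract.

```python
import math


def stdp_delta(t_pre: float, t_post: float, a_plus: float = 0.01,
               a_minus: float = 0.012, tau_plus: float = 20.0,
               tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fires before post: potentiation
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fires before pre: depression
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def update_weight(w: float, t_pre: float, t_post: float, correct: bool,
                  w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Apply STDP, reversed when the judged strategy was wrong (assumed modulation)."""
    reward = 1.0 if correct else -1.0
    return min(w_max, max(w_min, w + reward * stdp_delta(t_pre, t_post)))
```

Clipping to `[w_min, w_max]` keeps repeated training rounds from driving weights without bound.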

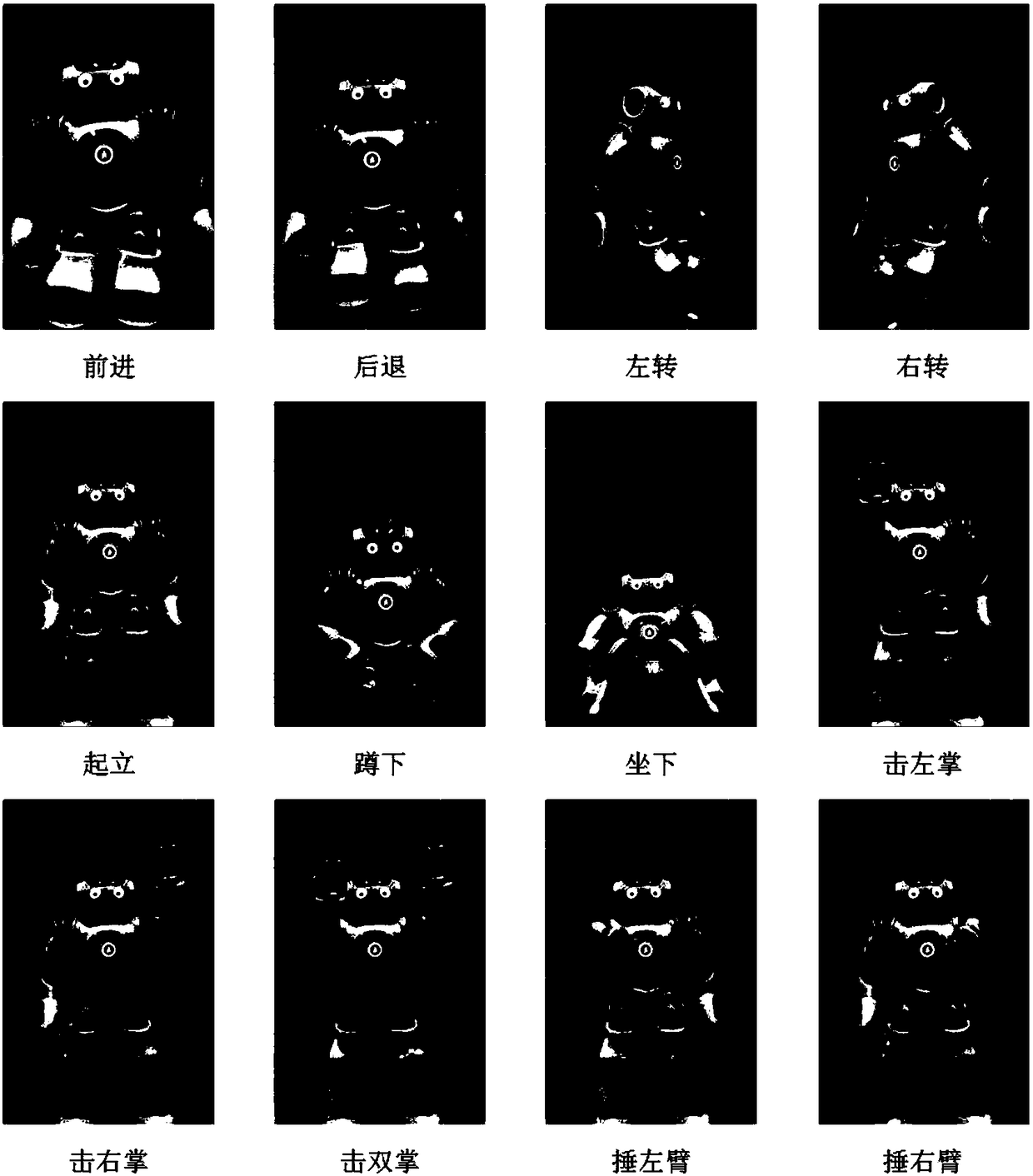

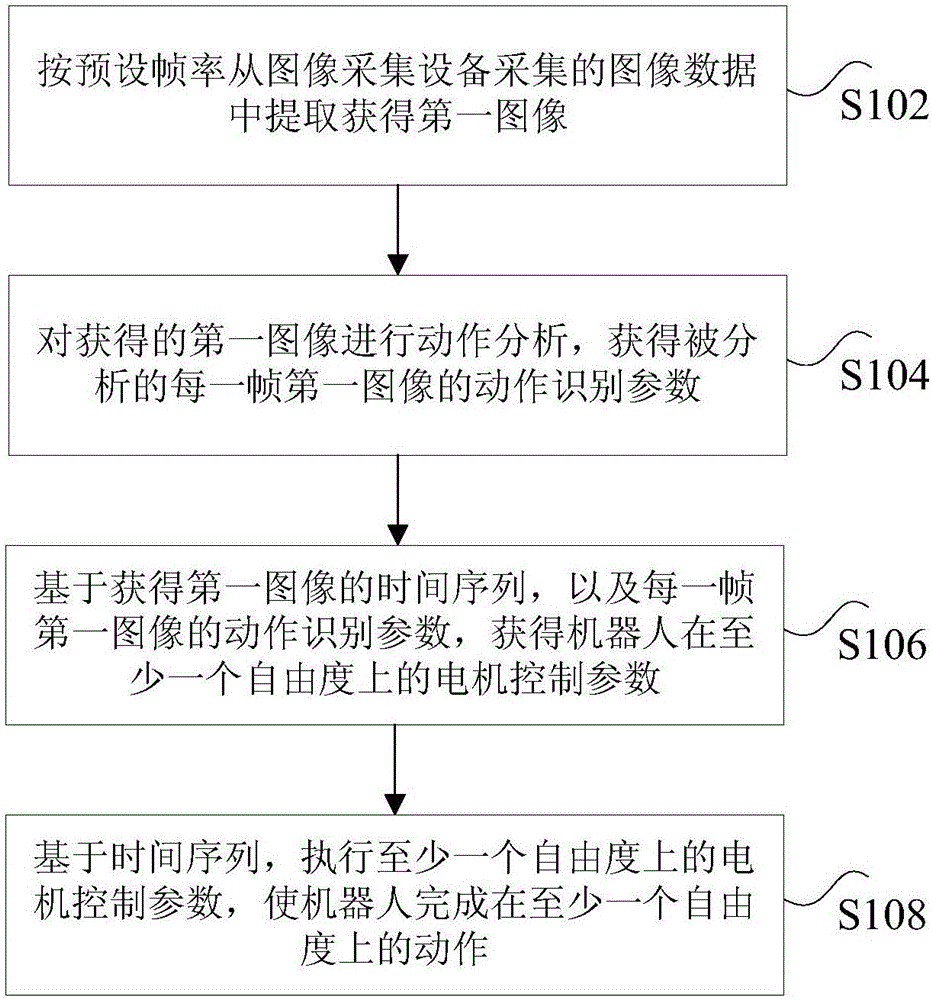

Robot action simulation method and device

The invention discloses a robot action simulation method and device, in which the robot is equipped with image acquisition equipment. The method comprises the following steps: first images are extracted at a preset frame rate from the image data acquired by the image acquisition equipment; action analysis is performed on the first images to obtain action recognition parameters for each analyzed frame; motor control parameters of the robot on at least one degree of freedom are obtained from the time sequence in which the first images were obtained and the action recognition parameters of each frame; and the robot completes the actions on at least one degree of freedom by executing those motor control parameters according to the time sequence. The method addresses the difficulty, in the prior art, of accurately setting the robot's action on each degree of freedom when relatively complex actions are designed for the robot.

Owner:NINEBOT (BEIJING) TECH CO LTD
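
The first and third steps of the Ninebot method above, sampling frames at a preset rate and turning per-frame recognition results into time-ordered motor targets for each degree of freedom, can be sketched as below. The action-analysis step itself is not shown: each sample is assumed to already be a timestamp plus a dictionary of joint angles, and all names are hypothetical.

```python
from typing import Dict, List, Tuple


def sample_frame_indices(video_fps: float, n_frames: int, preset_fps: float) -> List[int]:
    """Indices of the frames to analyse so that about `preset_fps` frames per second are kept."""
    if preset_fps <= 0 or preset_fps > video_fps:
        raise ValueError("preset rate must be in (0, video_fps]")
    step = video_fps / preset_fps
    indices, k = [], 0
    while int(k * step) < n_frames:
        indices.append(int(k * step))
        k += 1
    return indices


def to_motor_timeline(samples: List[Tuple[float, Dict[str, float]]]
                      ) -> Dict[str, List[Tuple[float, float]]]:
    """Regroup (timestamp, {dof: angle}) samples into one time-ordered track per degree of freedom."""
    tracks: Dict[str, List[Tuple[float, float]]] = {}
    for t, params in sorted(samples, key=lambda s: s[0]):
        for dof, angle in params.items():
            tracks.setdefault(dof, []).append((t, angle))
    return tracks
```

A frame at index `i` corresponds to timestamp `i / video_fps`, so the timeline preserves the time sequence the abstract relies on when the motor parameters are replayed.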

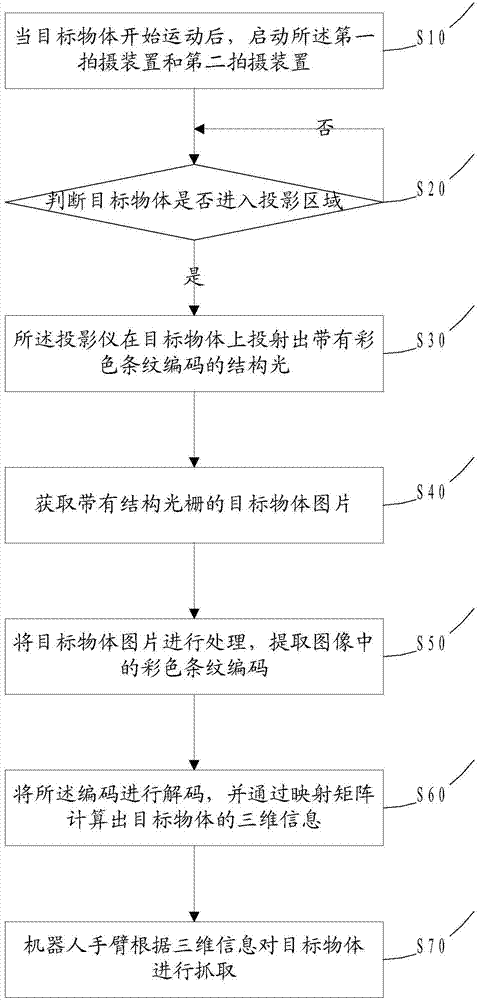

Robot visual servo control method based on structured light

The invention discloses a robot visual servo control method based on structured light, comprising the following steps: after a target object starts moving, a first photographing device and a second photographing device are started, and it is judged whether the target object has entered the projection area; if so, a projector is started and projects structured light with color stripe codes onto the target object; an image of the target object carrying the structured-light stripe pattern is obtained; the image is processed and the color stripe codes in it are extracted; the codes are decoded, and the three-dimensional information of the target object is calculated through a mapping matrix; and the robot arm grasps the target object according to the three-dimensional information. By guiding the robot arm with color-stripe structured-light vision, the method makes the arm's operating actions relatively accurate and improves both the speed and the accuracy of robot actions.

Owner:SHENYANG SIASUN ROBOT & AUTOMATION
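
In the Siasun method, once a stripe code is decoded, each camera pixel is matched to a projector column, and a 3D point can be recovered by triangulation. The abstract only says a "mapping matrix" is used; a common choice, assumed here, is linear (DLT) triangulation with calibrated 3x4 projection matrices for the camera and for the projector treated as an inverse camera. Because a vertical stripe code fixes only the projector column, just one equation comes from the projector. The function and argument names are hypothetical.

```python
import numpy as np


def triangulate_stripe(P_cam: np.ndarray, pixel: tuple, P_proj: np.ndarray,
                       proj_col: float) -> np.ndarray:
    """Linear triangulation from a camera pixel and a decoded projector column.

    P_cam, P_proj: 3x4 projection matrices from calibration (assumed known).
    pixel: (u, v) in camera image coordinates; proj_col: projector column from the code.
    """
    u, v = pixel
    A = np.stack([
        u * P_cam[2] - P_cam[0],
        v * P_cam[2] - P_cam[1],
        proj_col * P_proj[2] - P_proj[0],  # the stripe code constrains only the column
    ])
    # Homogeneous solution: right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Geometrically, the two camera rows define the viewing ray and the projector row defines the plane of light for that stripe; the point is their intersection, which is well defined as long as the camera and projector have a non-zero baseline.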

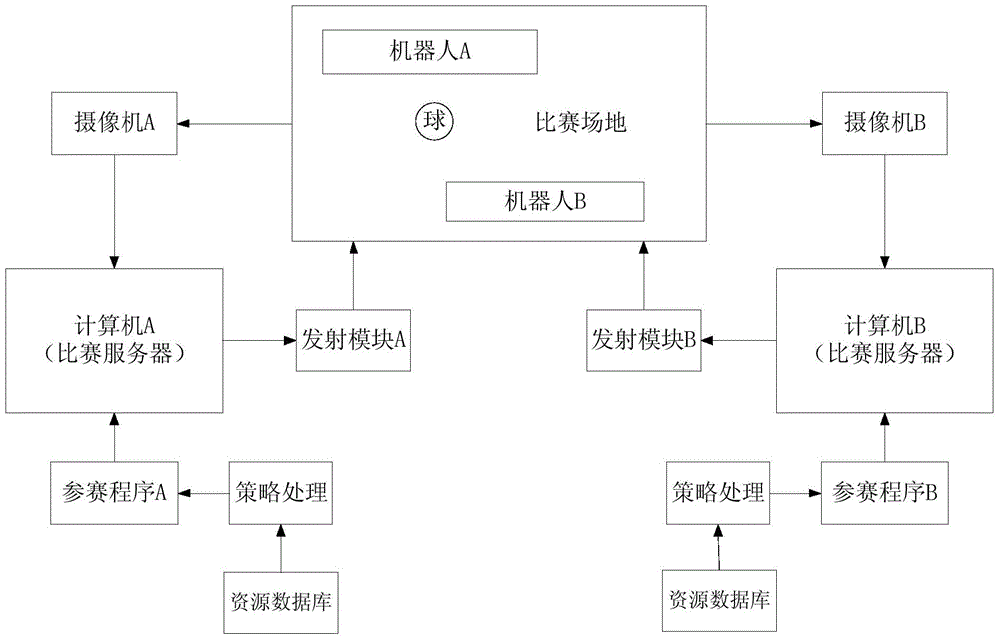

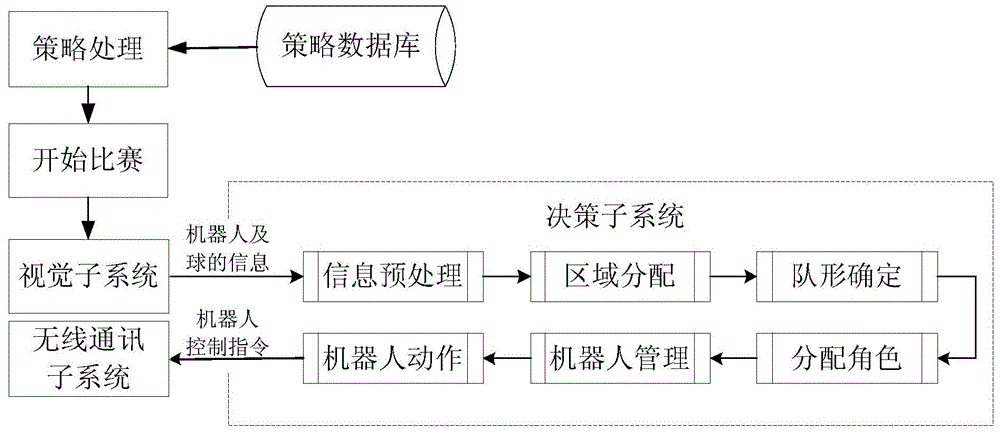

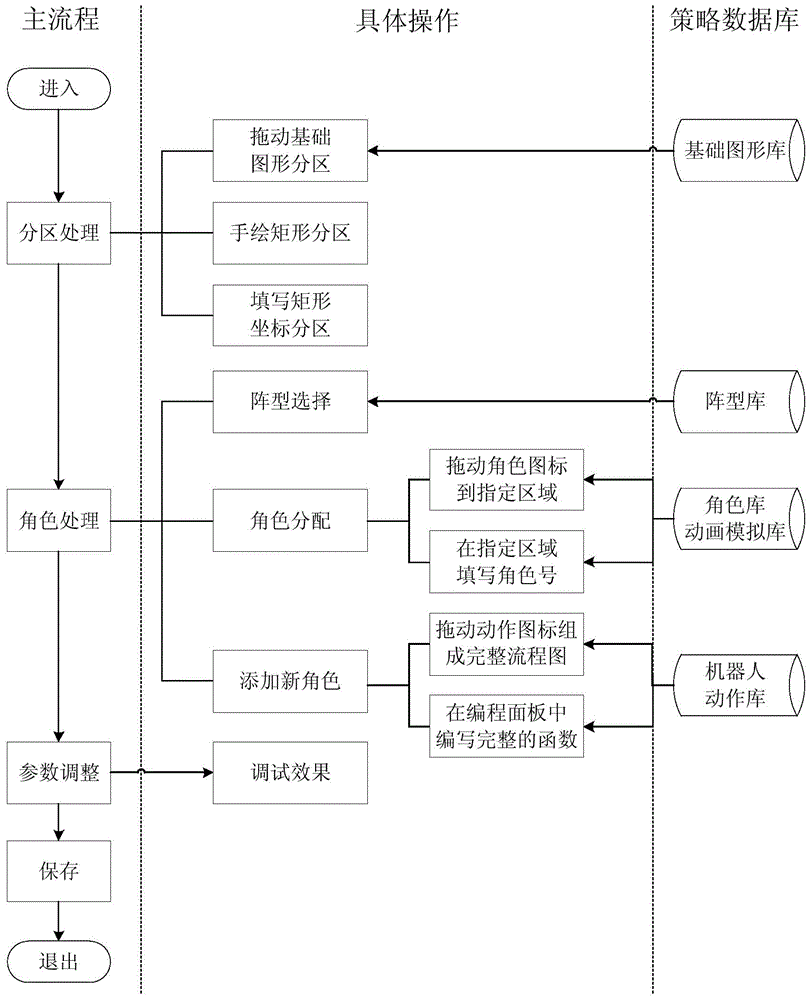

Strategy regulatory module of global vision soccer robot decision-making subsystem and method thereof

Status: Active | Publication No.: CN104834516A | Tags: Modify in time; Easy to operate; Specific program execution arrangements; Soccer robot; Operability

The invention relates to a strategy regulation module for the decision-making subsystem of a global-vision soccer robot, and a corresponding method. The module comprises several resource databases, such as a role library, a formation library, a basic graphics library and a robot action library. Through graphical or code programming, the module and method can redraw the competition area, assign roles, modify role parameters, add new role functions and so on; in particular, the decision-making subsystem can be adjusted entirely from a strategy processing panel without entering complex program code, which greatly improves the operability of the whole system. In addition, strategy parameters can be modified directly during a game and take effect immediately, making it convenient for the operator to adjust the team's tactics in time according to the opponent's strategy and the on-site situation.

Owner:周凡

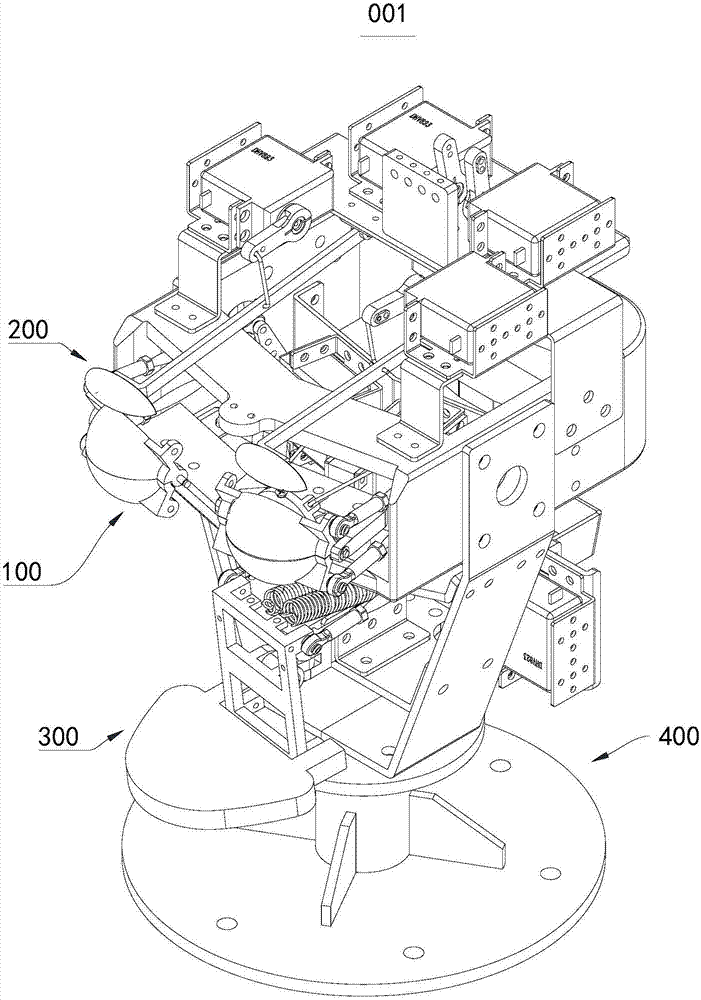

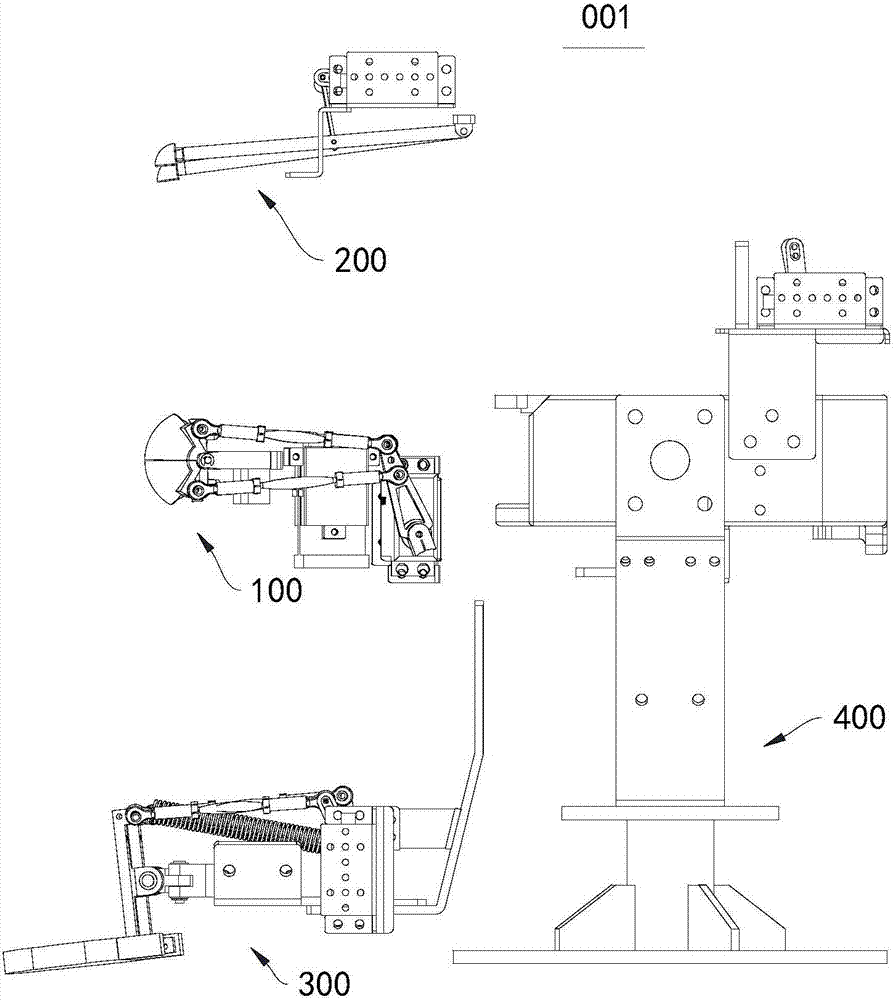

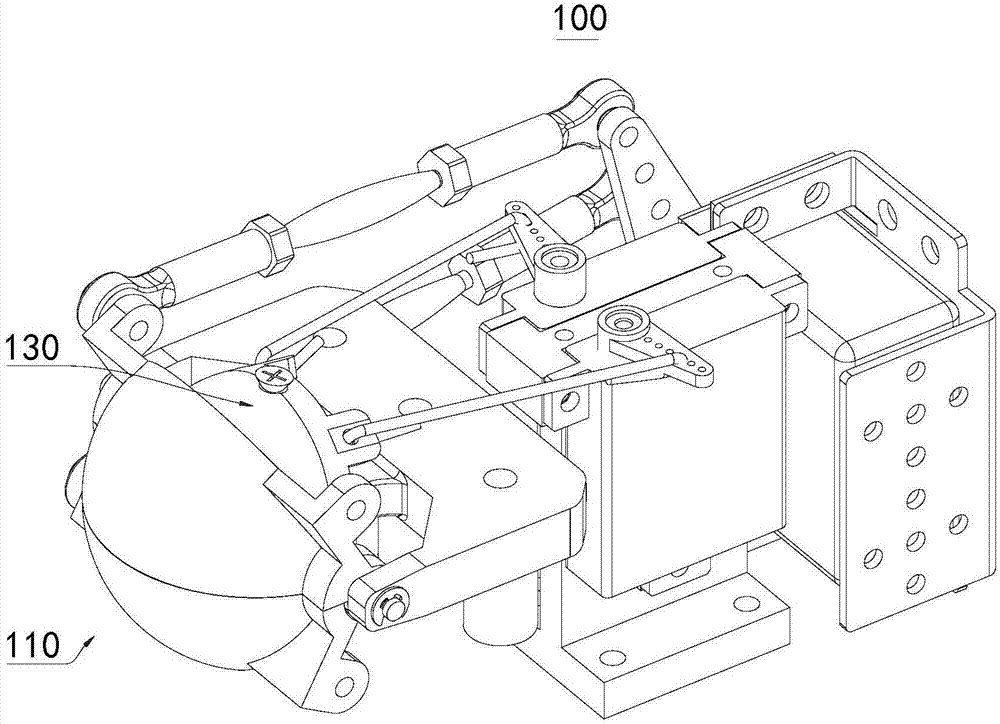

Robot head device and robot

The invention provides a robot head device and a robot, in the field of artificial intelligence. The robot head device comprises an eye assembly, an eyebrow assembly, a mouth assembly and a head supporting assembly, and the eye, eyebrow and mouth assemblies are connected to the head supporting assembly. The eye assembly comprises an eyeball assembly, which comprises a simulated eyeball; the simulated eyeball is rotatably connected to the head supporting assembly and is driven to rotate by two sets of four-bar linkages. The robot comprises a simulated body and the robot head device. The robot head device and the robot act stably and flexibly, respond rapidly, give a good simulation effect and have a wide range of applications.

Owner:CHANGZHOU JINGANG NETWORK TECH

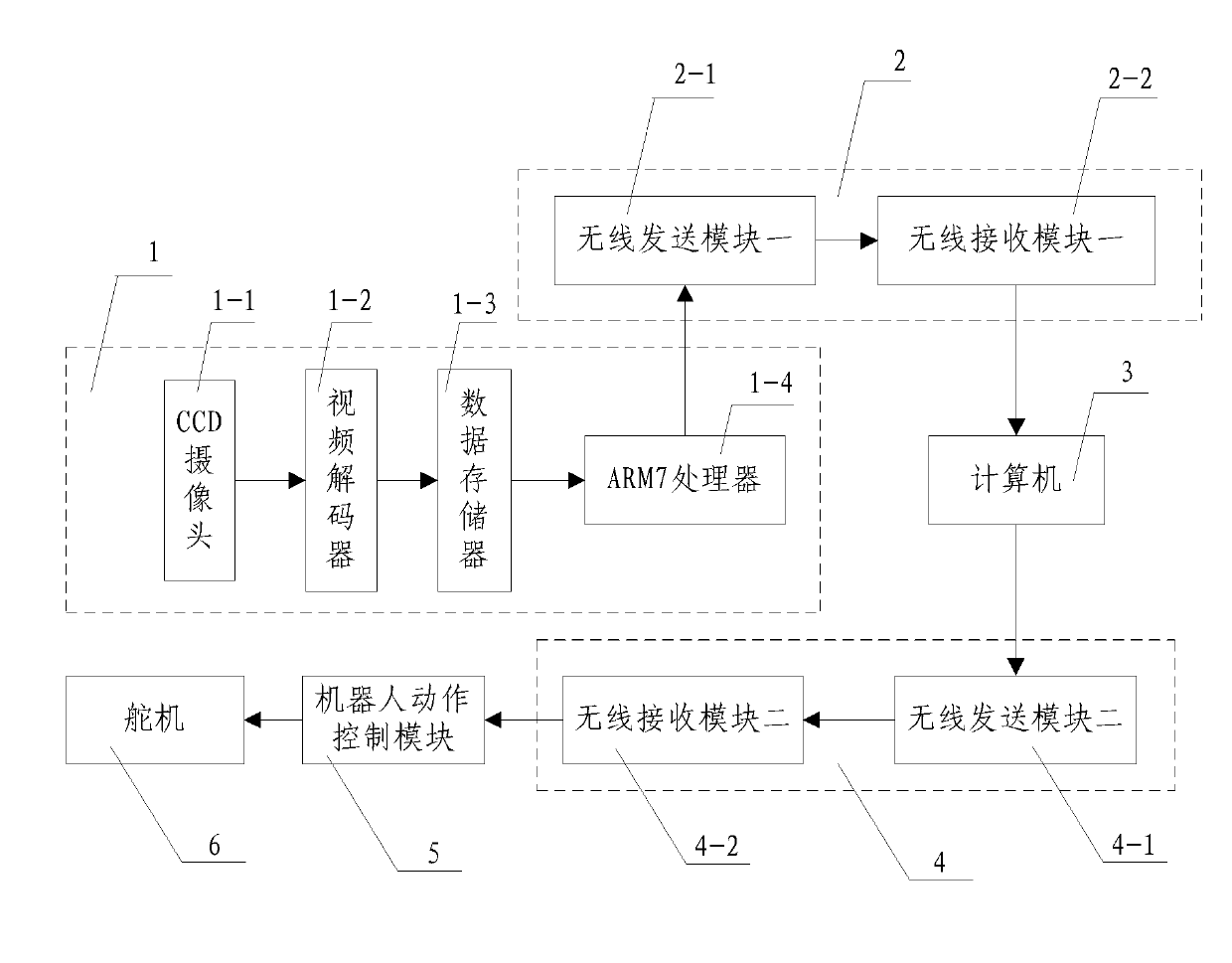

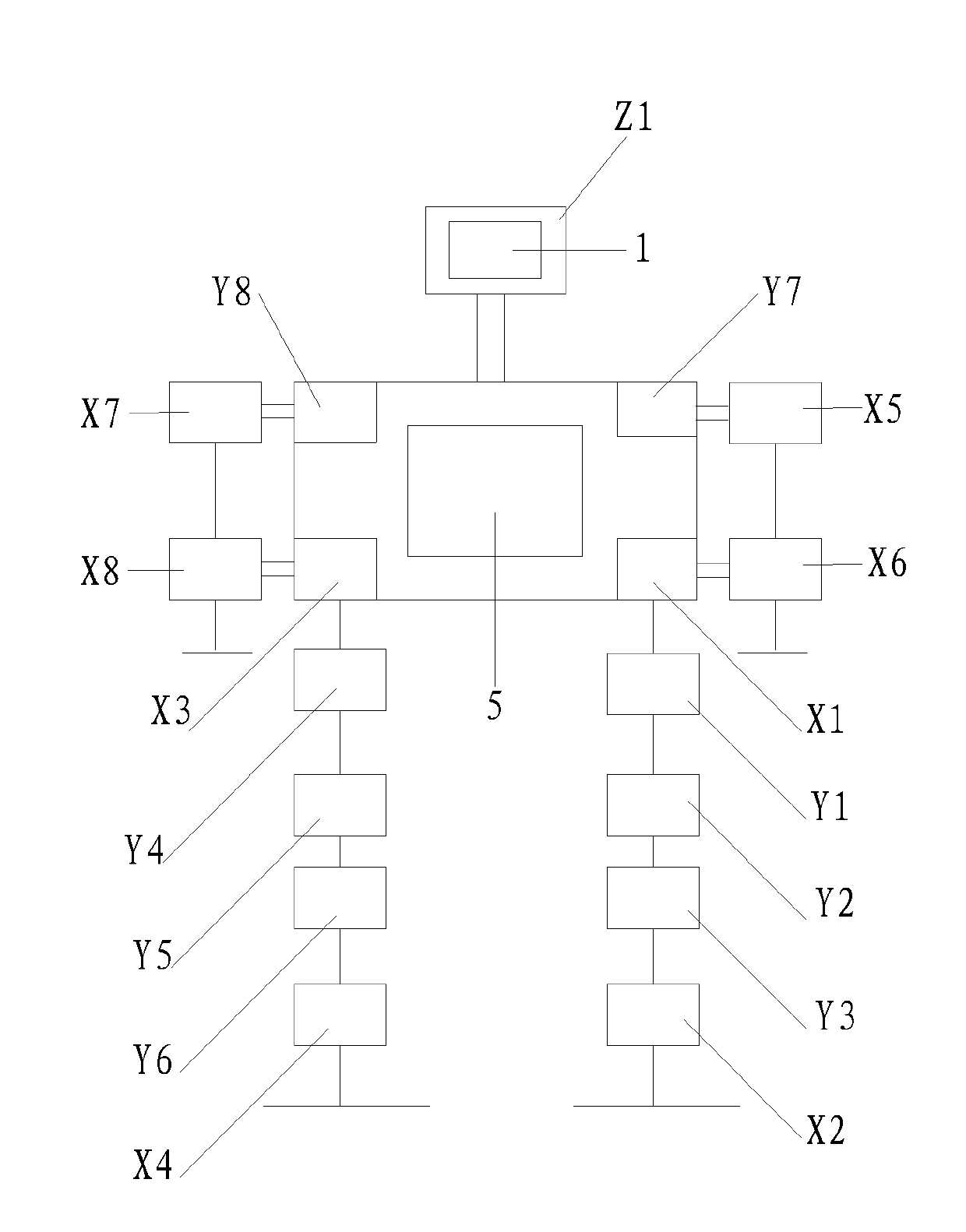

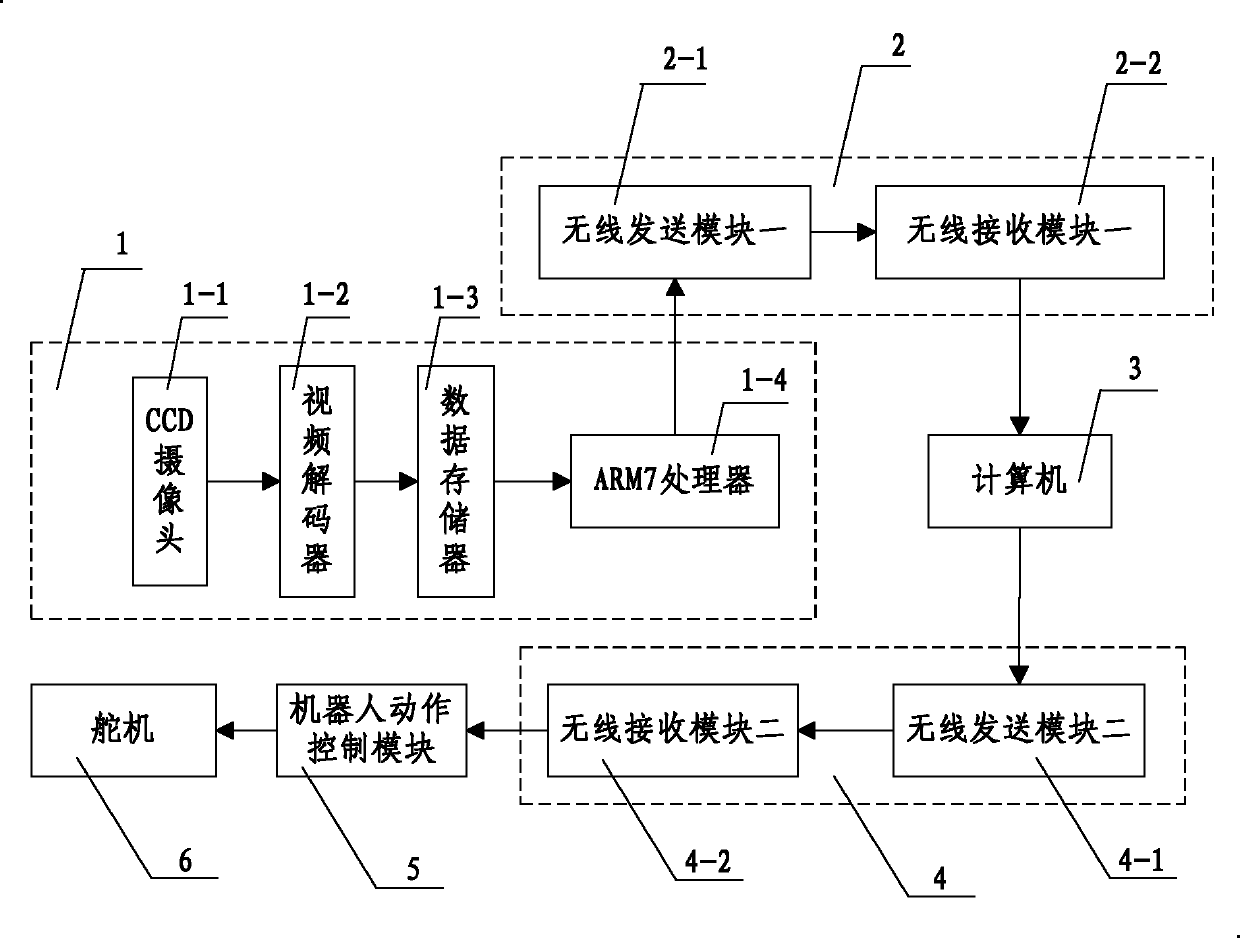

Intelligent toy robot with action imitation function

Status: Inactive | Publication No.: CN102553250A | Tags: Novel structure; Reasonable design; Self-moving toy figures; Wireless transmission; Process module

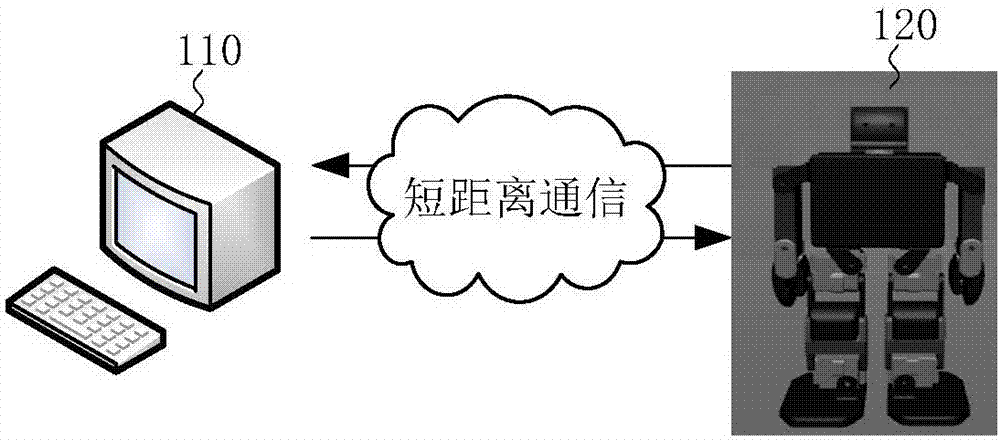

The invention discloses an intelligent toy robot with an action imitation function. The robot comprises a computer, a humanoid robot body, a video acquisition and processing module, a robot action control module, wireless communication module I and wireless communication module II. Wireless communication module I carries data wirelessly between the video acquisition and processing module and the computer, and wireless communication module II carries data wirelessly between the computer and the robot action control module. The humanoid robot body consists of 17 servos and the metal brackets connecting them; the video acquisition and processing module consists of a CCD (Charge-Coupled Device) camera, a video decoder and an ARM7 (Advanced RISC Machines) processor; wireless communication module I consists of wireless transmitting module I and wireless receiving module I, and wireless communication module II consists of wireless transmitting module II and wireless receiving module II. The toy robot has a novel structure, a reasonable design and a high degree of personification and intelligence; it can imitate a child's actions in order to interact with the child, and so subtly stimulates the child's desire for scientific exploration.

Owner:XIAN TEKTONG DIGITAL TECH

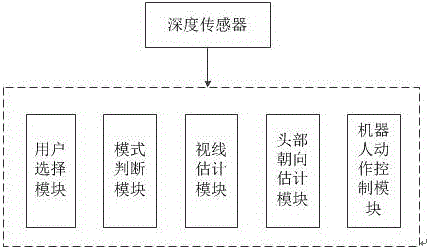

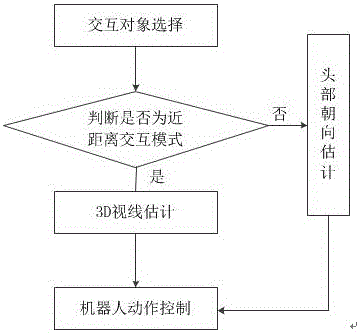

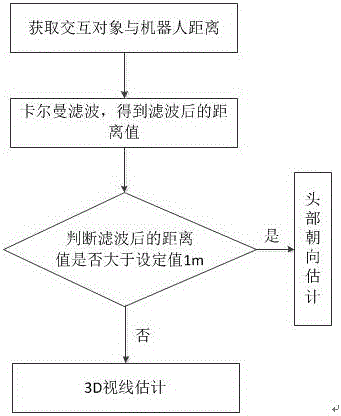

Far-near distance man-machine interactive system based on 3D sight estimation and far-near distance man-machine interactive method based on 3D sight estimation

Status: Inactive | Publication No.: CN105759973A | Tags: Improve effectiveness; Improve stability; Input/output for user-computer interaction; Acquiring/recognising eyes; Far distance; Man machine

The invention discloses a far-near distance man-machine interactive system based on 3D sight estimation and a far-near distance man-machine interactive method based on 3D sight estimation. The system comprises a depth sensor, a user selection module, a mode judgment module, a sight estimation module, a head orientation estimation module and a robot action control module. The method comprises the following steps: (S1) interaction object selection; (S2) interaction mode judgment; (S3) 3D sight estimation; (S4) head orientation estimation; and (S5) robot action control. According to the system and the method, the man-machine interaction is divided into a far distance mode and a near distance mode according to the actual distance between people and a robot, and the action of the robot is controlled by virtue of the two modes, so that the validity and stability of the man-machine interaction are improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

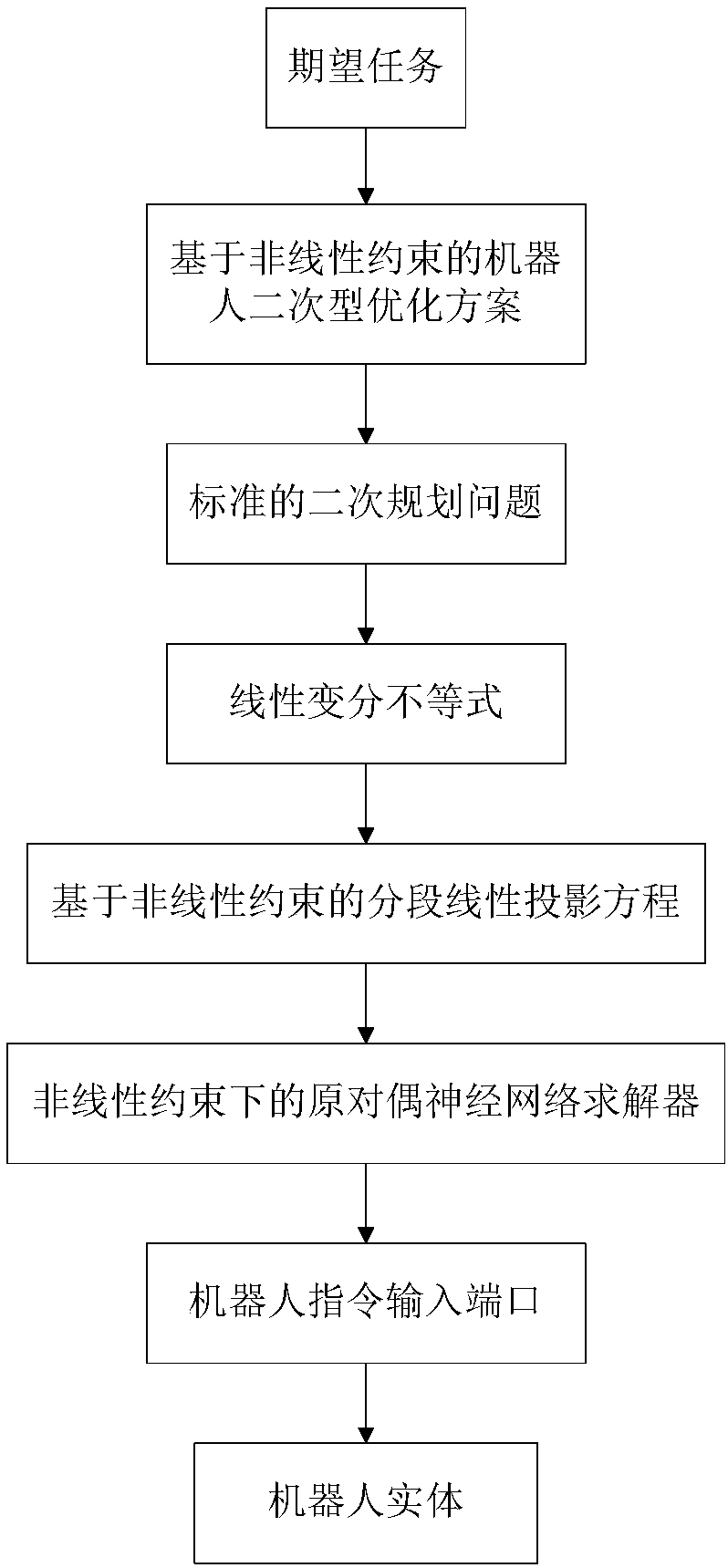

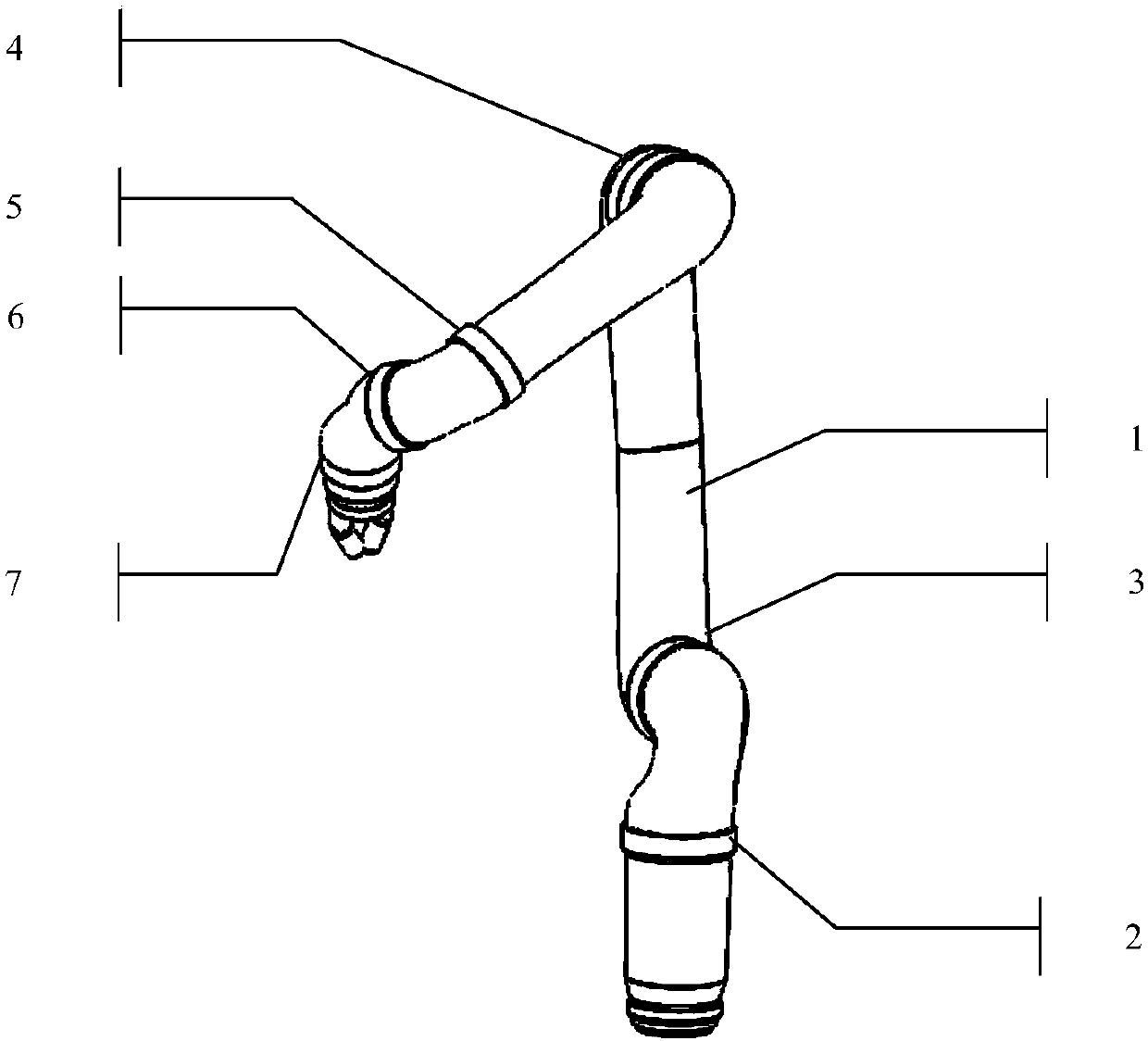

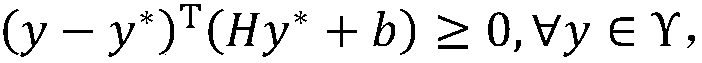

Nonlinear constrained primal-dual neural network robot action planning method

Status: Active | Publication No.: CN108015766A | Tags: Eliminate the initial error problem; Overcome the problem of error accumulation; Programme-controlled manipulator; Nerve network; Standard form

The invention discloses a nonlinear constrained primal-dual neural network robot action planning method, comprising the steps of: acquiring the current state of a robot and using a quadratic optimization scheme to perform inverse kinematics analysis of the robot trajectory at the velocity level; converting the quadratic optimization scheme into the standard form of a quadratic programming problem; transforming the quadratic programming problem into an equivalent linear variational inequality problem; converting the linear variational inequality problem into the solution of a piecewise-linear projection equation based on nonlinear equality constraints; solving the piecewise-linear projection equation with a nonlinear constrained primal-dual neural network solver; and transferring the resulting instruction to the robot instruction input port to drive the robot along the path. The method accommodates both convex-set and non-convex-set constraints, eliminates the initial-error problem in robot control, and solves the problem of error accumulation during the control process.

Owner:SOUTH CHINA UNIV OF TECH
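
For reference, the textbook linear-variational-inequality (LVI) primal-dual neural network that the SCUT method builds on solves a QP of the form min ½xᵀWx + cᵀx subject to Jx = d and lower ≤ x ≤ upper by integrating du/dt = γ(I + Mᵀ)(P_Ω(u − (Mu + q)) − u), with u = [x; λ], M = [[W, −Jᵀ], [J, 0]], q = [c; −d], and P_Ω the projection onto the bounds (λ unbounded). The sketch below simulates that standard network with forward Euler on a toy problem; it does not reproduce the patent's nonlinear-constrained extension, and the gain, step size and iteration count are illustrative choices.

```python
import numpy as np


def lvi_pdnn_solve(W, c, J, d, lower, upper, gamma=1.0, dt=0.01, steps=5000):
    """Forward-Euler simulation of the LVI-based primal-dual neural network (textbook form)."""
    n, m = W.shape[0], J.shape[0]
    M = np.block([[W, -J.T], [J, np.zeros((m, m))]])
    q = np.concatenate([c, -d])
    # Omega: box bounds on the primal x; dual variables lambda are unbounded.
    lo = np.concatenate([lower, np.full(m, -np.inf)])
    hi = np.concatenate([upper, np.full(m, np.inf)])
    u = np.zeros(n + m)
    gain = gamma * (np.eye(n + m) + M.T)
    for _ in range(steps):
        projected = np.clip(u - (M @ u + q), lo, hi)  # P_Omega(u - (Mu + q))
        u = u + dt * gain @ (projected - u)
    return u[:n]
```

In velocity-level inverse kinematics, x would be the joint velocity, J the manipulator Jacobian, d the desired end-effector velocity and the bounds the joint-velocity limits; the toy example here minimizes ½‖x‖² subject to x₁ + x₂ = 1 and |xᵢ| ≤ 1, whose solution is x = (0.5, 0.5).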

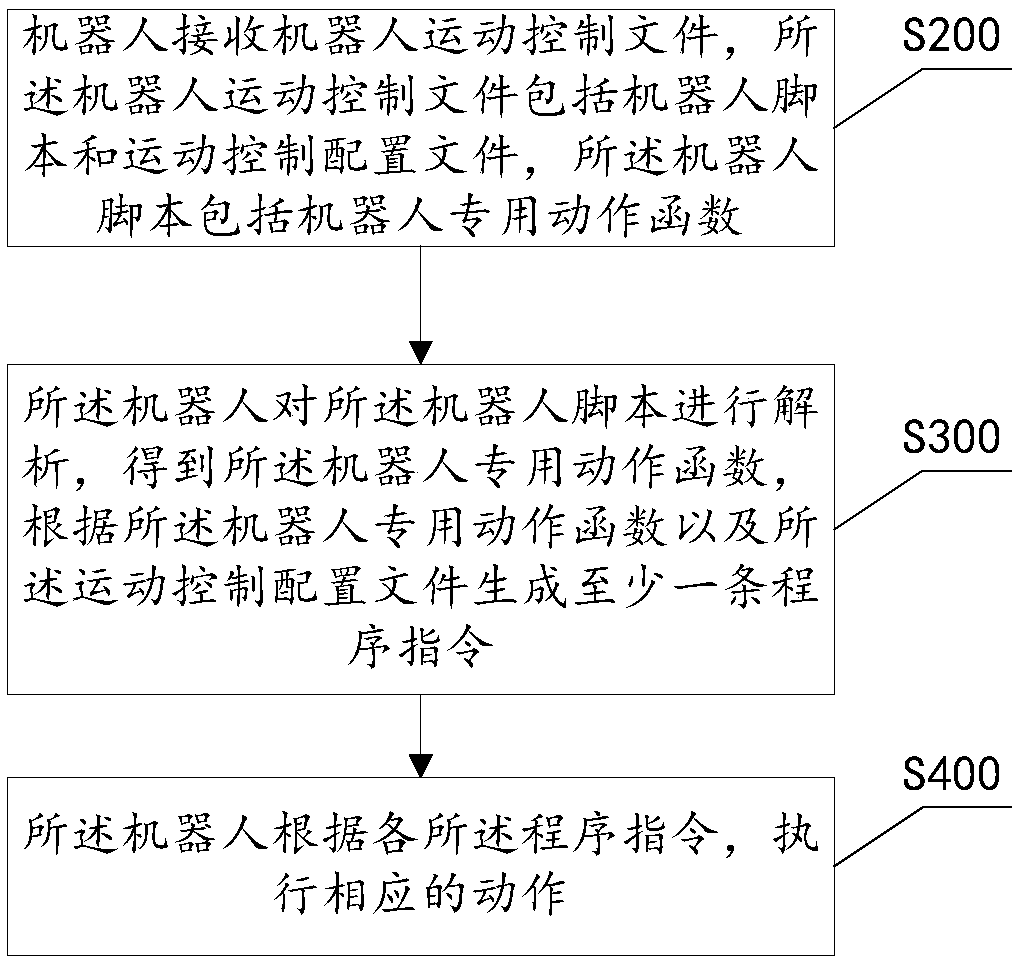

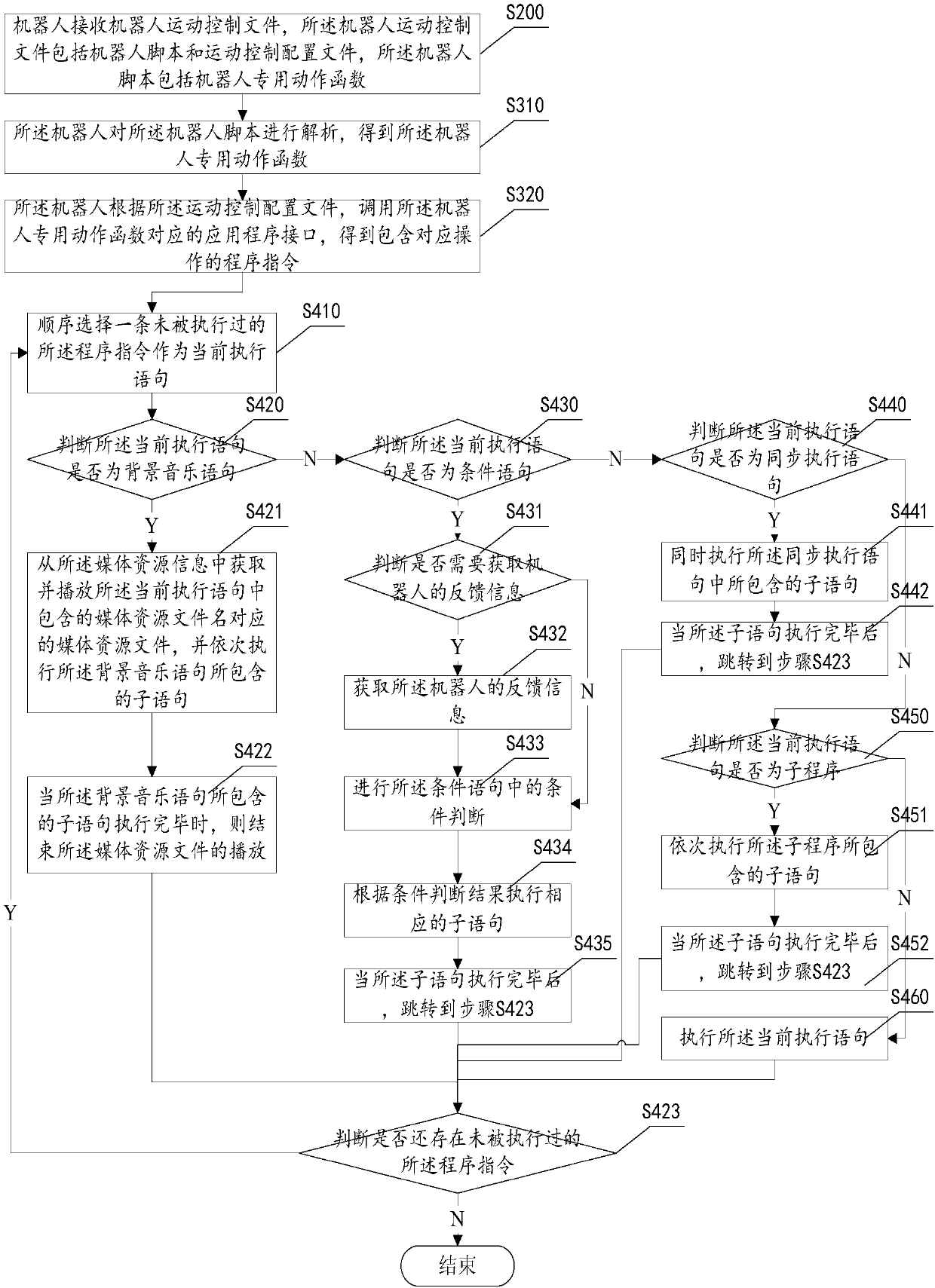

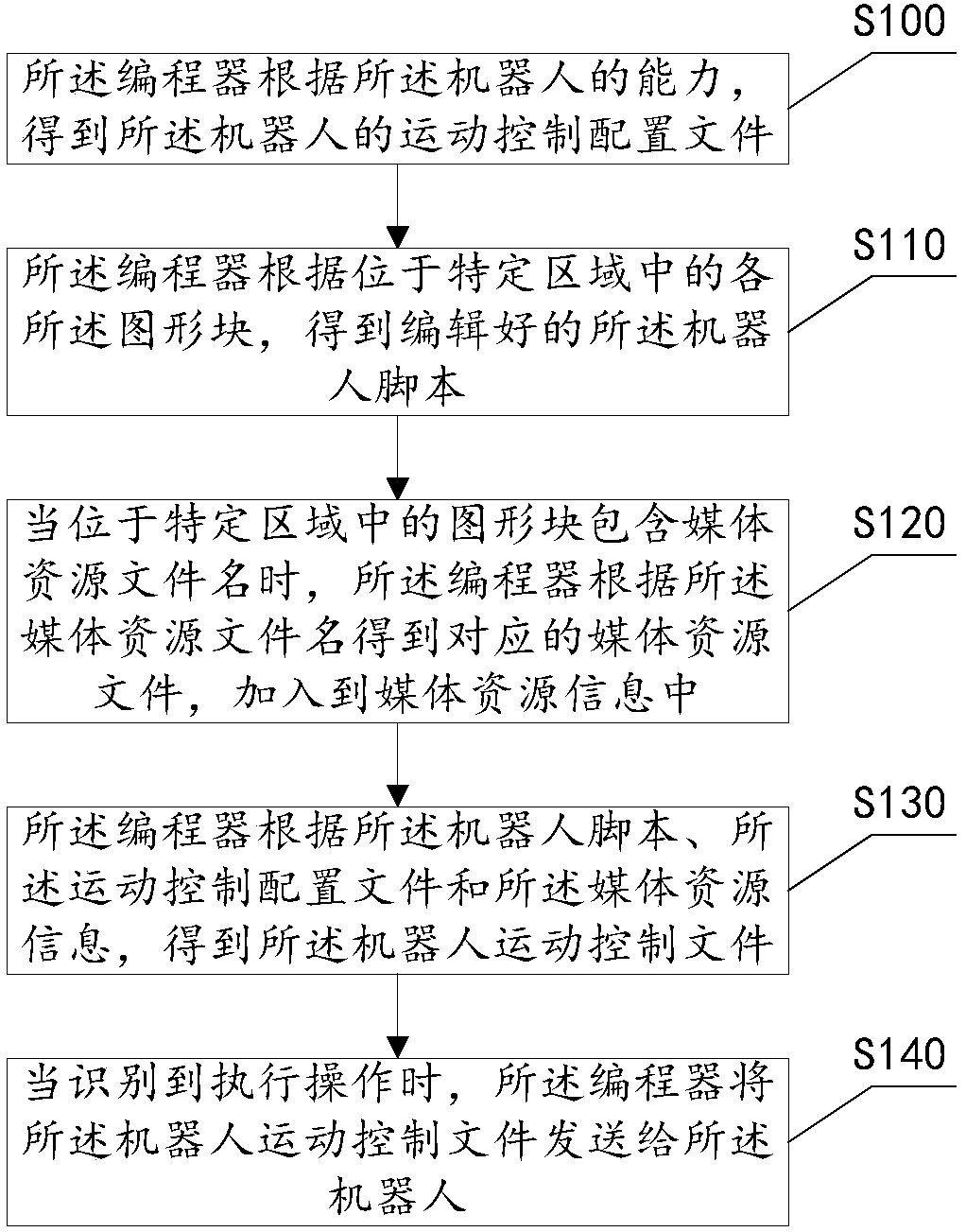

Robot, method for controlling movement thereof and system

Status: Active | Publication No.: CN107765612A | Tags: Easy programming; Versatility; Programme control; Computer control; Communication interface; Program instruction

The invention provides a robot, a method for controlling its movement, and a system. The method includes the steps of: S200, the robot receives robot movement control files; S300, the robot parses the robot scripts to obtain the special robot action functions and generates at least one program instruction from those functions and the movement control configuration files; S400, the robot executes the corresponding actions according to the program instructions. The robot movement control files comprise the robot scripts and the movement control configuration files, and the robot scripts contain the special robot action functions. The robot, method and system provide a consistent communication interface and a unified programming language for operating and controlling diverse robots, and are therefore universal and flexible.

Owner:阿凡达(湖南)科技有限公司
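
Steps S300 and S400 of the movement-control method above amount to interpreting a robot script: each action function in the script is looked up in a movement control configuration and expanded into low-level program instructions. The script syntax (one `name(arg, ...)` call per line), the configuration layout and the instruction strings below are all hypothetical.

```python
import re
from typing import Dict, List

CALL = re.compile(r"^\s*([A-Za-z_]\w*)\s*\(([^)]*)\)\s*$")


def compile_script(script: str, config: Dict[str, List[str]]) -> List[str]:
    """Expand each action-function call into program instructions using a per-robot config.

    config maps an action name to instruction templates; {0}, {1}, ... are
    replaced by the call's arguments (hypothetical format).
    """
    program: List[str] = []
    for lineno, line in enumerate(script.splitlines(), start=1):
        if not line.strip() or line.lstrip().startswith("#"):
            continue  # skip blank lines and comments
        match = CALL.match(line)
        if match is None:
            raise SyntaxError(f"line {lineno}: expected name(args)")
        name, raw_args = match.groups()
        if name not in config:
            raise KeyError(f"line {lineno}: unknown action {name!r}")
        args = [a.strip() for a in raw_args.split(",") if a.strip()]
        program.extend(template.format(*args) for template in config[name])
    return program
```

Because the script only names actions, the same script could drive a different robot by swapping `config`, which is the kind of unified interface the abstract describes.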

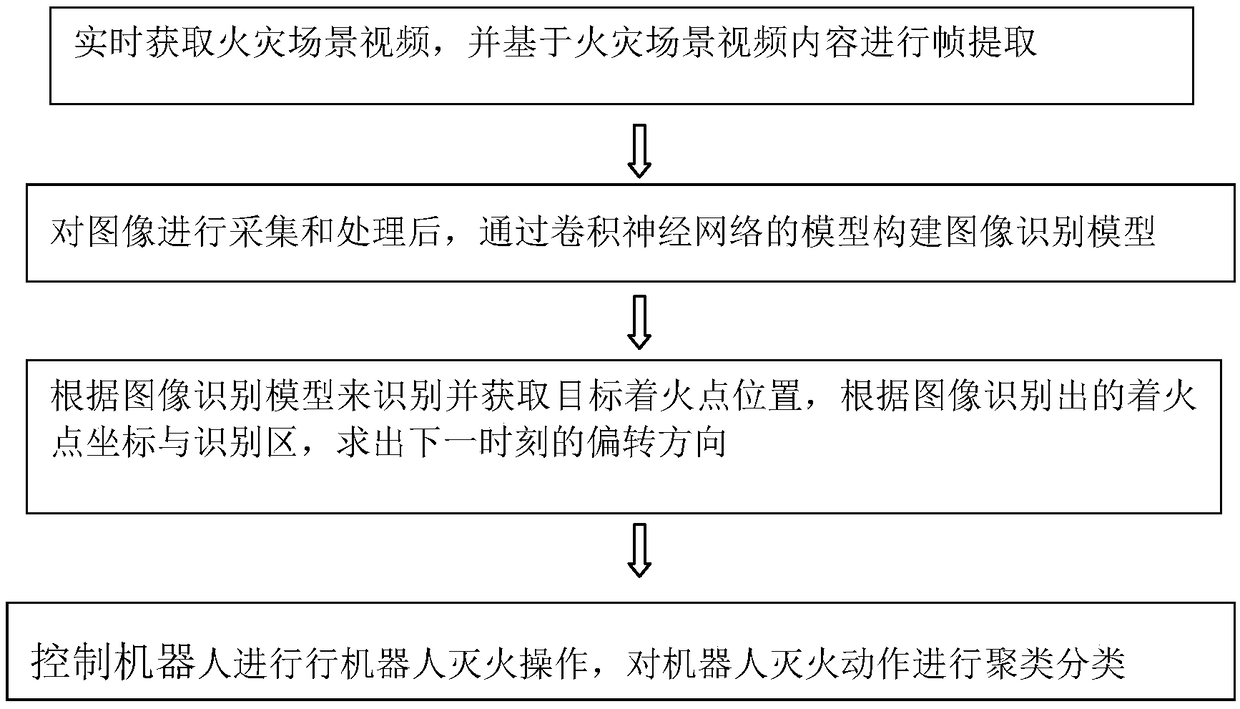

A fire-fighting robot action real-time guidance algorithm for a fire scene

Inactive | CN109447030A | Easy to control, Real-time monitoring of operating conditions, Image analysis, Fire rescue, Algorithm, Steering control

The invention claims a real-time guidance algorithm for fire-fighting robot actions at a fire scene. The algorithm comprises the following steps: acquiring fire-scene video in real time, extracting frames based on the video content, and preprocessing each extracted frame, where preprocessing includes image denoising and foreground/background separation; after the images are collected and processed, building an image recognition model with a convolutional neural network; identifying the position of the target ignition point with the image recognition model and designing different steering controls for different identified target ignition-point positions; carrying out the robot's fire-extinguishing operation; and performing clustering classification of the robot's fire-extinguishing actions.

Owner:重庆知遨科技有限公司 (Chongqing Zhiao Technology Co., Ltd.)
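
The step of designing different steering controls for different target ignition-point positions could, for instance, be a proportional rule on the horizontal position of the detected fire in the frame. The sketch below assumes the CNN detector returns a pixel bounding box; the dead band, gain, command names, and the `steer_towards` function are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SteeringCommand:
    action: str       # "forward", "turn_left" or "turn_right"
    turn_rate: float  # normalized turn rate in [0, max_rate]


def steer_towards(box: Tuple[float, float, float, float], frame_width: float,
                  deadband: float = 0.1, gain: float = 1.0,
                  max_rate: float = 1.0) -> SteeringCommand:
    """Choose a steering command from a detected ignition-point bounding box.

    box is (x_min, y_min, x_max, y_max) in pixels, e.g. from a CNN detector.
    """
    x_min, _, x_max, _ = box
    center_x = 0.5 * (x_min + x_max)
    # Horizontal offset of the target from the image centre, normalized to [-1, 1]
    half_width = frame_width / 2
    offset = (center_x - half_width) / half_width
    if abs(offset) <= deadband:
        return SteeringCommand("forward", 0.0)
    rate = min(gain * abs(offset), max_rate)
    return SteeringCommand("turn_right" if offset > 0 else "turn_left", rate)
```

A dead band around the image centre keeps the robot from oscillating when the fire is already roughly straight ahead.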

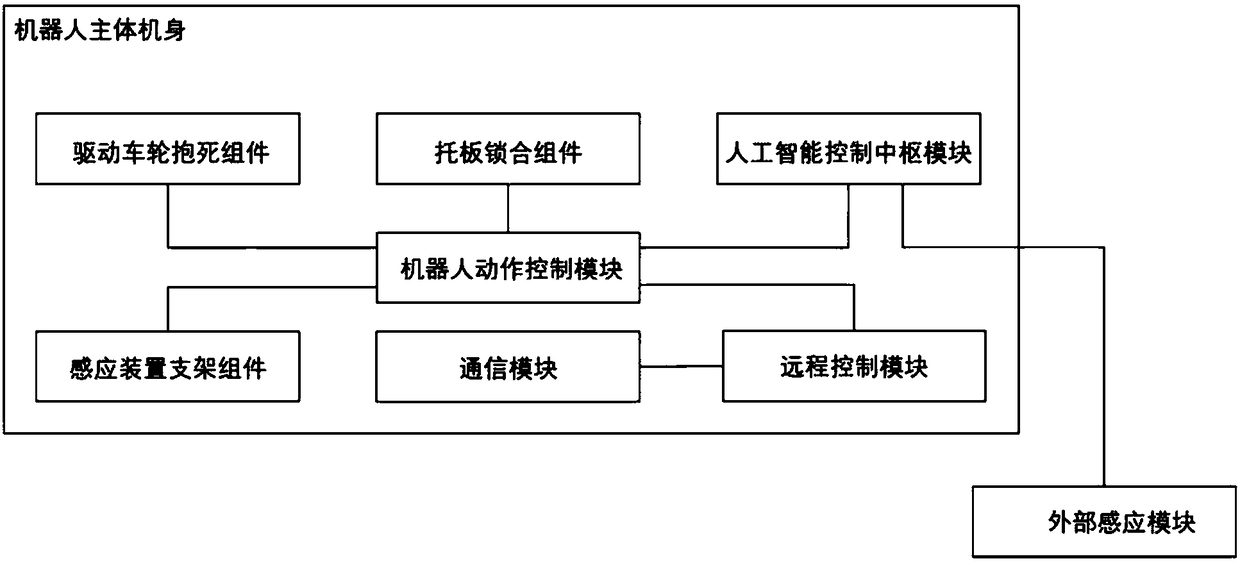

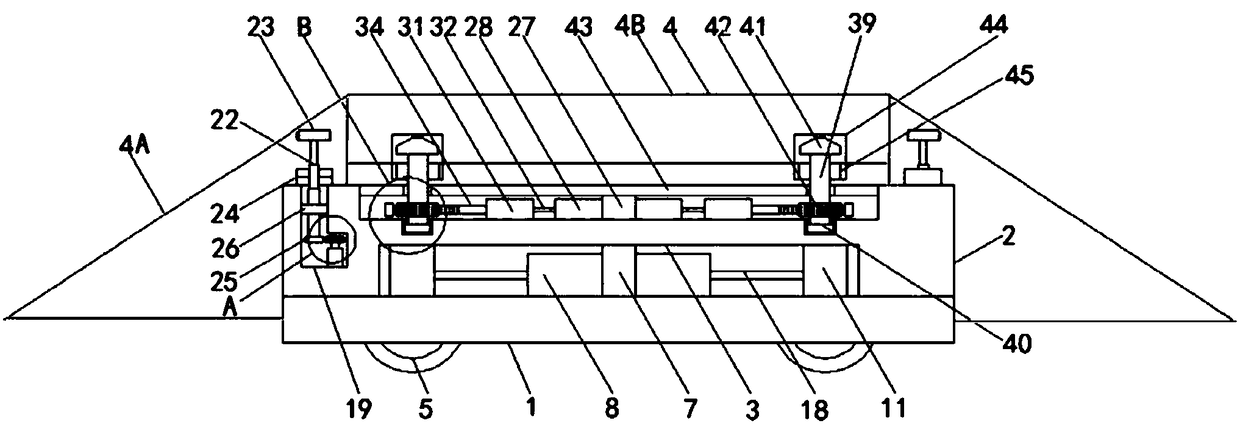

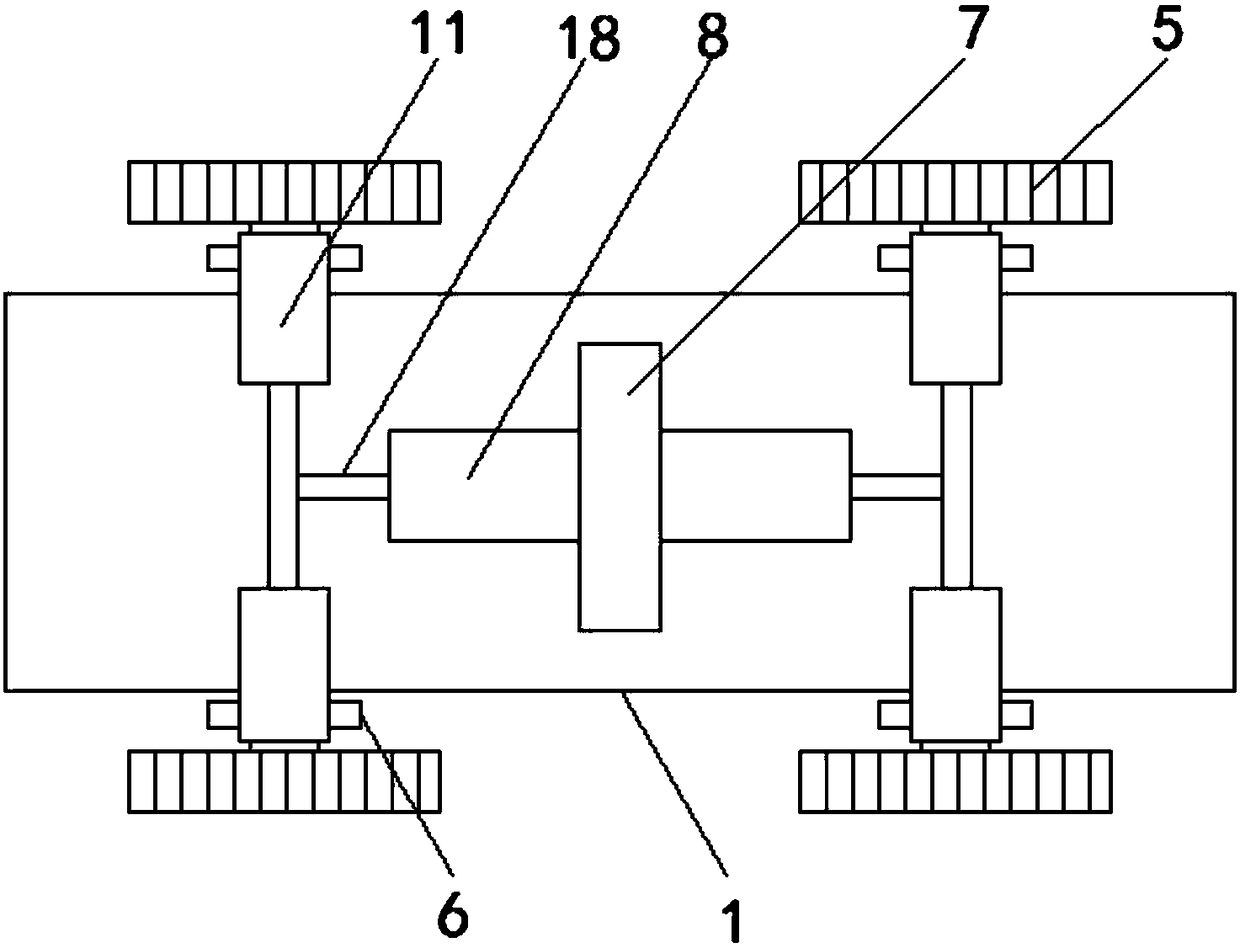

Artificial intelligence robot for vehicle service

Active | CN108166822A | Improve informatization, High degree of intelligence, Parkings, Informatization, Remote control

The invention relates to an artificial intelligence robot for vehicle service. The robot comprises a robot main body, an external sensing module, an artificial intelligence central control module, a remote control module, a robot action control module, and a communication module. Using artificial intelligence recognition and analysis, the parking-service robot can intelligently judge and switch among a variety of travelling and operating modes: it can independently determine a vehicle's parking intention and provide service during parking, and it can automatically search for the vehicle and authenticate the owner's identity when the vehicle is picked up. Through specific improvements to the robot's mechanical structure, it supports a "park anywhere, pick up anywhere" mode of operation within the handover area of a parking lot. The robot significantly raises the degree of informatization and intelligence, makes it more convenient to view vehicle condition in real time, register license plates, and register and authenticate users, and improves user experience.

Owner:TERMINUSBEIJING TECH CO LTD

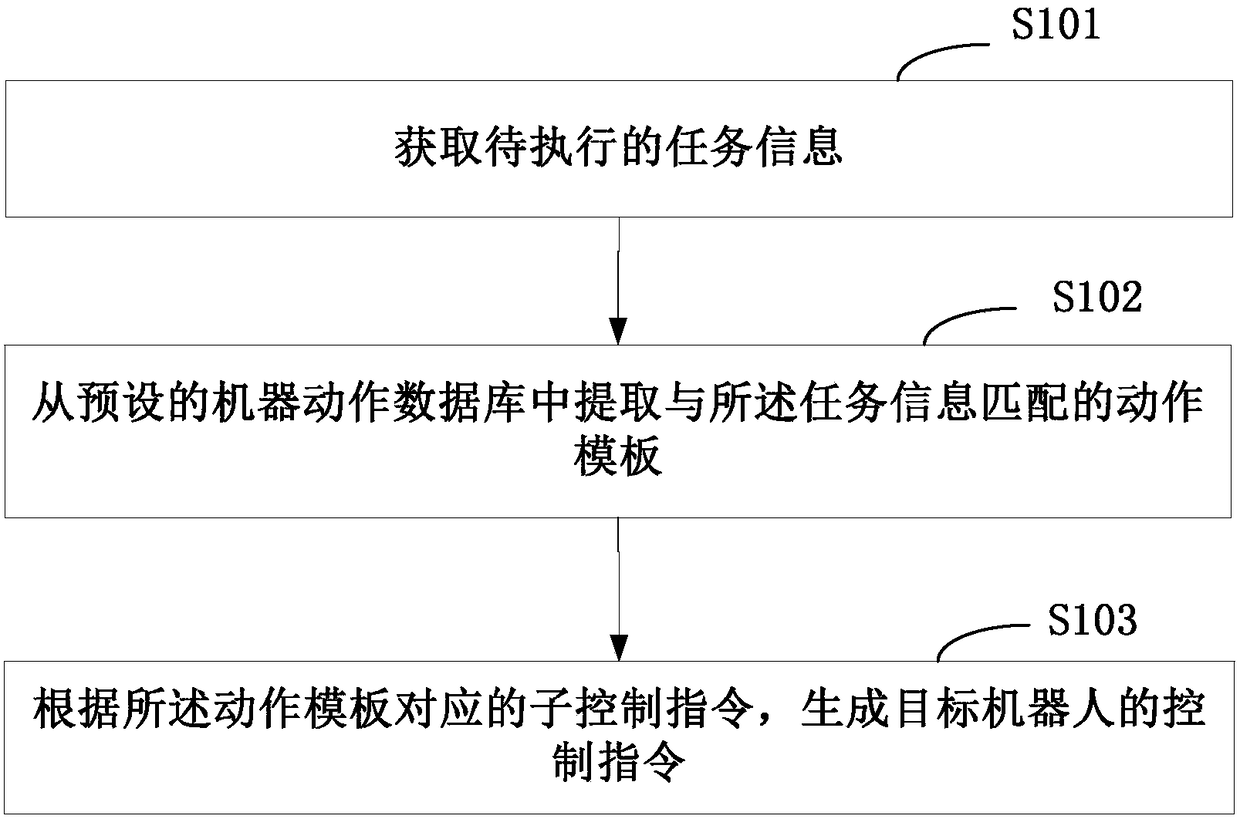

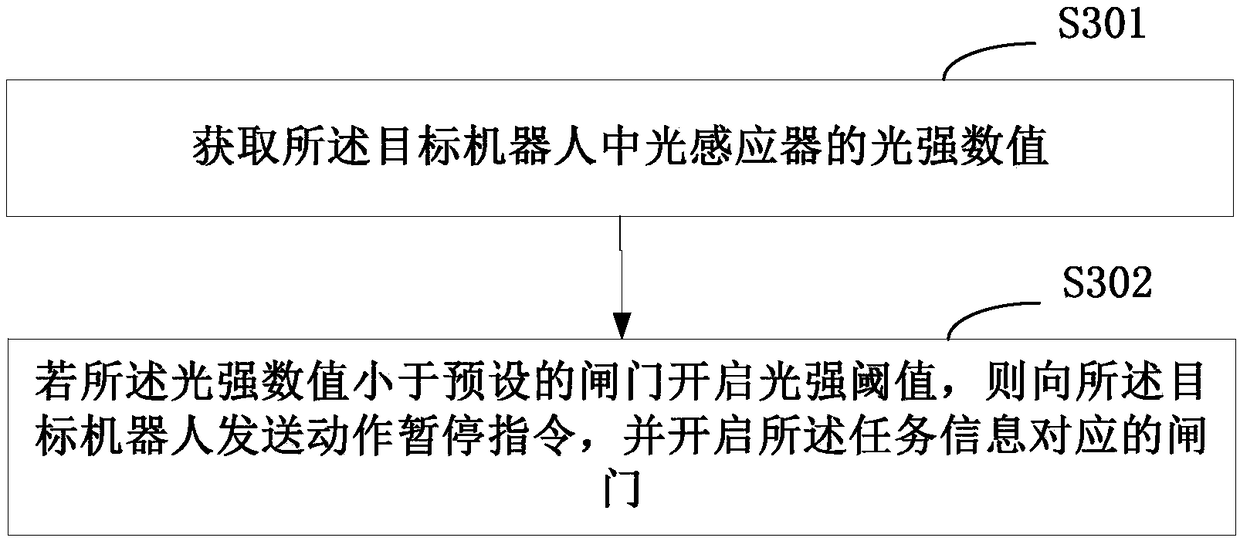

Control method and device of robot

Active | CN109202882A | Reduce the difficulty of operation, Expand the scope of applicable industries, Programme-controlled manipulator, Personalization, Control objective

The invention belongs to the technical field of robotics and provides a robot control method and device. The control method comprises the steps of: acquiring information about the task to be executed; extracting an action template matching the task information from a preset robot action database; and generating a control instruction for the target robot from the sub-control instructions corresponding to the action template, the control instruction being used to make the target robot complete the task corresponding to the task information. Because the matching action template is extracted from the preset robot action database according to the task information, and the target robot's control instruction is generated from that template, the target robot can complete the task. This addresses the shortcomings of existing robot control technology, which is poorly suited to industries with a high degree of personalization and fast update cycles, and which has limited applicability and poor adaptability.

Owner:深圳模德宝科技有限公司 (Shenzhen Modebao Technology Co., Ltd.)
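
Template matching against a preset action database, as described in the abstract above, can be sketched as keyword overlap followed by filling the template's sub-control instructions with task parameters. The database layout, the keyword-overlap scoring, the instruction names, and the `robot_id:INSTRUCTION` output format below are all illustrative assumptions, not details from the patent.

```python
from typing import Dict, List, Optional

# Hypothetical action database: template name -> keywords and sub-instructions
ACTION_DB: Dict[str, dict] = {
    "pick_and_place": {
        "keywords": {"pick", "place", "move"},
        "steps": ["MOVE_TO {source}", "GRIP", "MOVE_TO {target}", "RELEASE"],
    },
    "inspect": {
        "keywords": {"inspect", "scan", "check"},
        "steps": ["MOVE_TO {source}", "CAPTURE_IMAGE"],
    },
}


def match_template(task_text: str, db: Dict[str, dict] = ACTION_DB) -> Optional[str]:
    """Return the template whose keywords overlap most with the task text."""
    words = set(task_text.lower().split())
    best, best_score = None, 0
    for name, template in db.items():
        score = len(words & template["keywords"])
        if score > best_score:
            best, best_score = name, score
    return best


def build_control_instruction(robot_id: str, task_text: str,
                              params: Dict[str, str],
                              db: Dict[str, dict] = ACTION_DB) -> List[str]:
    """Turn the matched template's sub-instructions into robot-specific commands."""
    name = match_template(task_text, db)
    if name is None:
        raise LookupError("no action template matches the task")
    return [f"{robot_id}:{step.format(**params)}" for step in db[name]["steps"]]
```

For instance, `build_control_instruction("arm1", "pick the part and place it", {"source": "A", "target": "B"})` returns `['arm1:MOVE_TO A', 'arm1:GRIP', 'arm1:MOVE_TO B', 'arm1:RELEASE']`.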

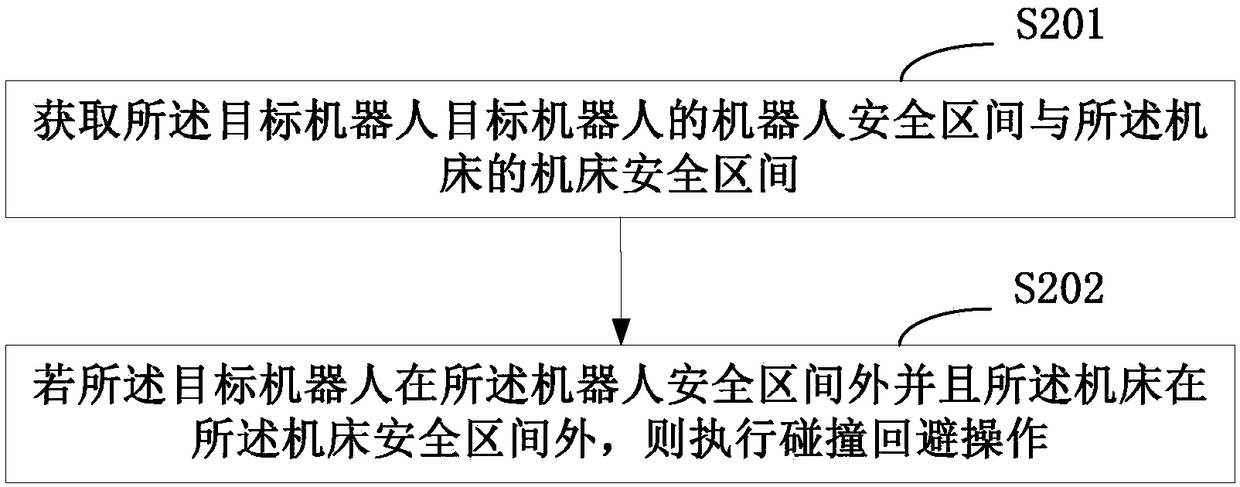

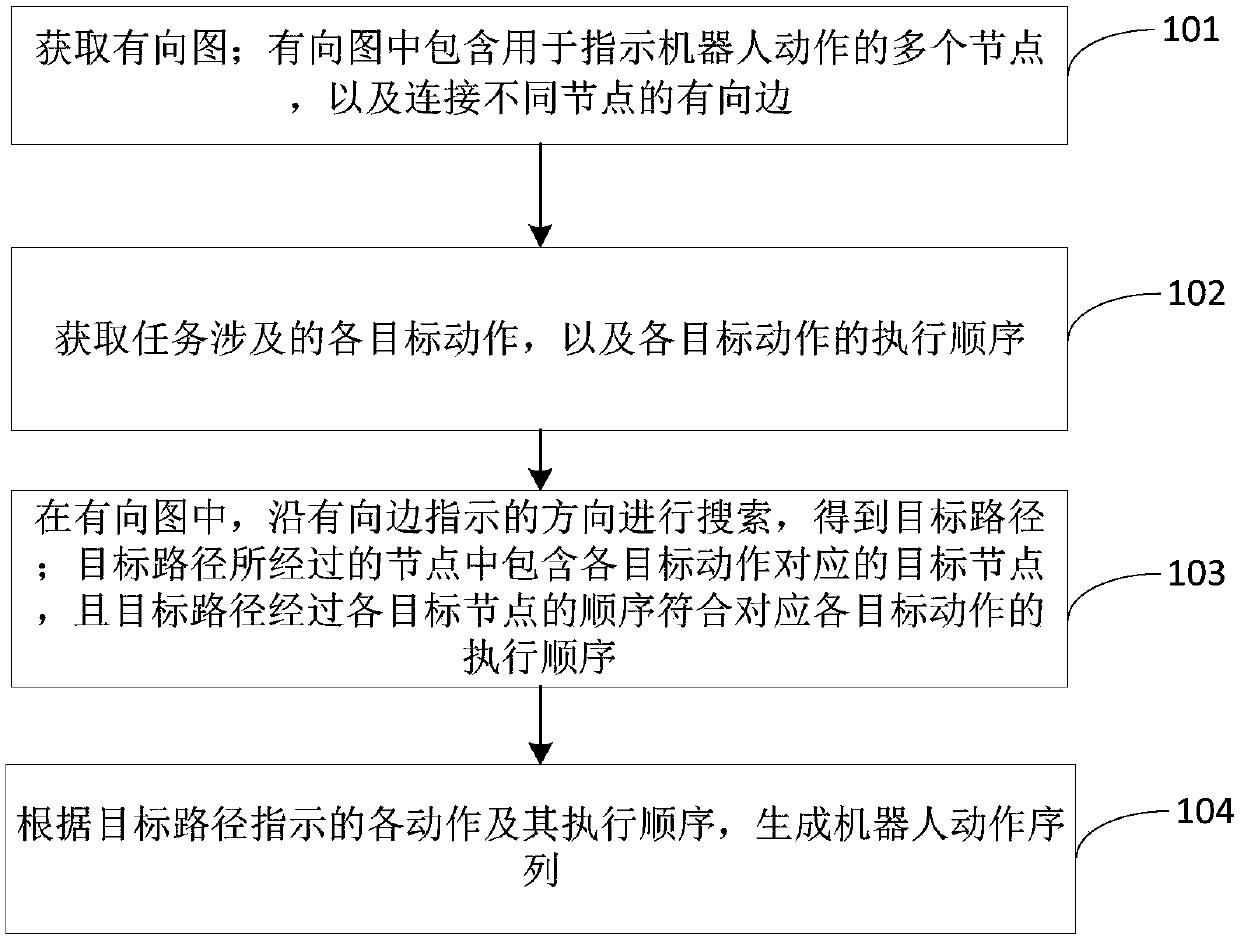

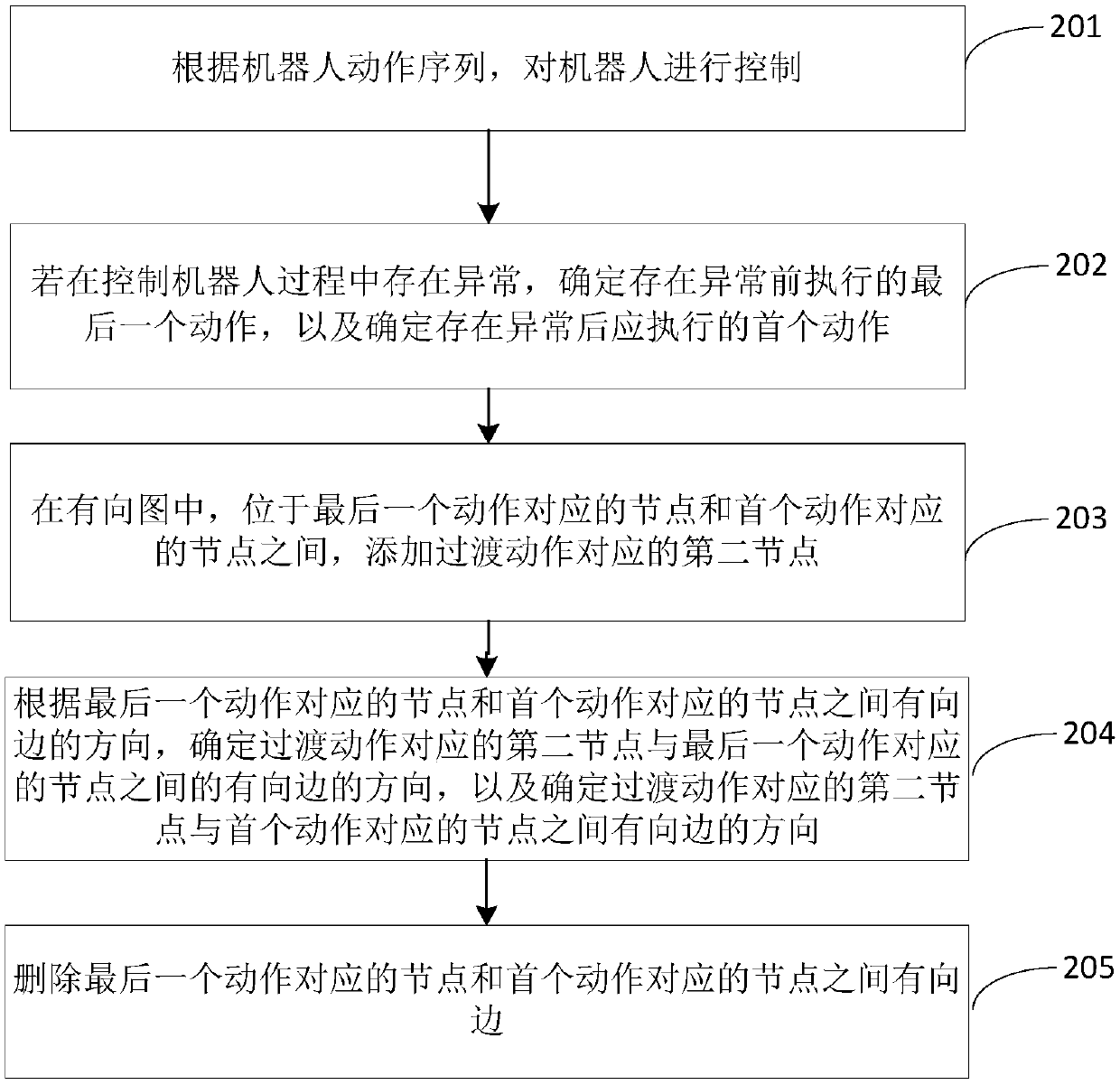

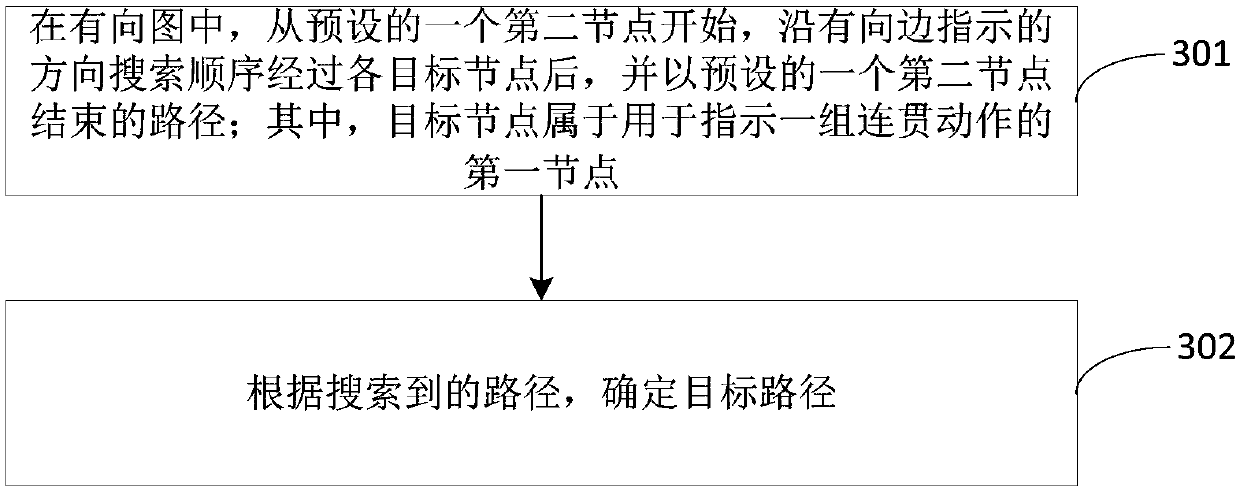

Robot action sequence generation method and device

Active | CN110297697A | Improve convenience, Programme control, Programme-controlled manipulator, Directed graph, Target–action

The invention provides a robot action sequence generation method and device. The method comprises the steps of: obtaining a directed graph whose nodes represent robot actions and whose directed edges connect different nodes; obtaining the target actions related to a task and the execution order of those target actions; searching the directed graph along the directions indicated by its edges to obtain a target path, where the nodes on the target path include the target nodes corresponding to the target actions and the order in which the path passes through those target nodes matches the execution order of the target actions; and generating a robot action sequence from the actions indicated by the target path and their execution order. In this way, the actions the robot needs to complete a task, and their execution order, are determined automatically from the directed graph, which makes robot control more convenient and helps the robot adapt to flexible, changing scenes and tasks.

Owner:BEIJING ORION STAR TECH CO LTD
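
One simple way to realize the directed-graph search described above is to chain breadth-first shortest paths between consecutive target actions, which guarantees that the target nodes appear in the required order. This is an assumed search strategy, not necessarily the one the patent uses, and the example action graph is hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional


def shortest_path(graph: Dict[str, List[str]], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search along directed edges; returns the node list or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, []):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def action_sequence(graph: Dict[str, List[str]], start: str, targets: List[str]) -> List[str]:
    """Chain shortest paths so the target actions are visited in the given order."""
    sequence = [start]
    current = start
    for target in targets:
        segment = shortest_path(graph, current, target)
        if segment is None:
            raise ValueError(f"no path from {current!r} to {target!r}")
        sequence.extend(segment[1:])  # skip the node already in the sequence
        current = target
    return sequence
```

With the hypothetical graph `{"idle": ["stand"], "stand": ["walk", "wave"], "walk": ["stand"], "wave": ["stand"]}`, calling `action_sequence(graph, "idle", ["walk", "wave"])` yields `["idle", "stand", "walk", "stand", "wave"]`: the intermediate `stand` nodes are the transition actions the graph requires between the targets.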

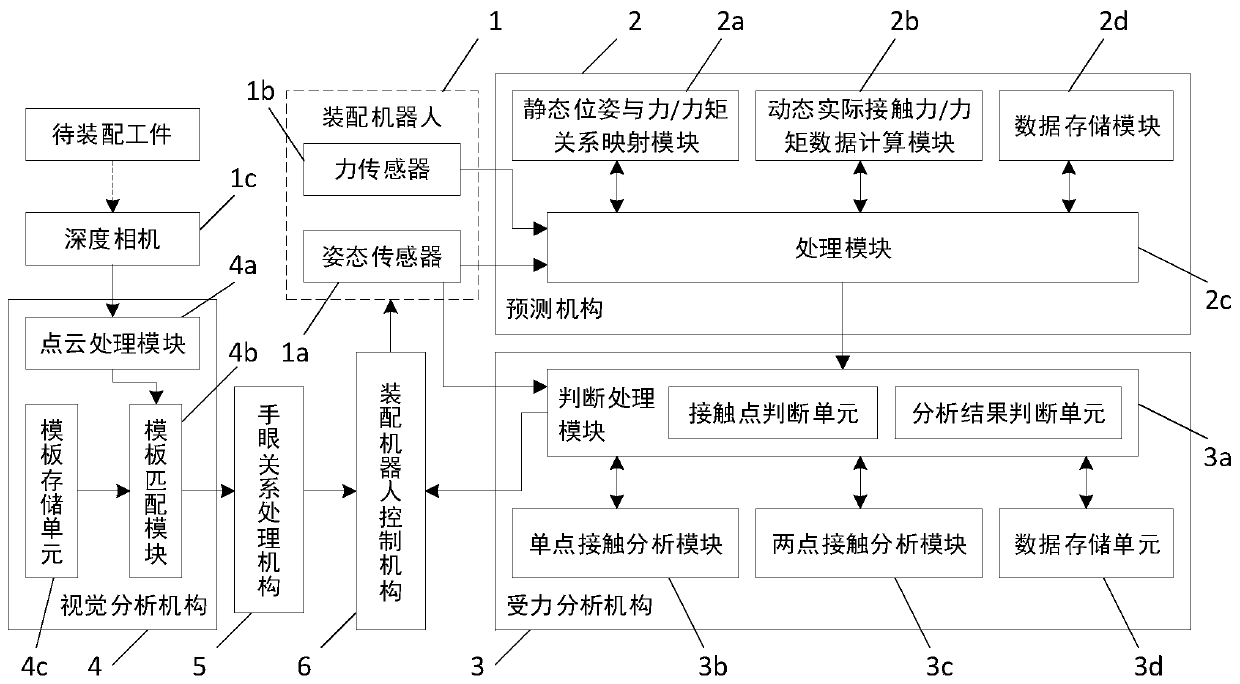

Robot guide system and method based on point cloud data

Active | CN109940606A | Real-time location relationship, Precisely formulated, Programme-controlled manipulator, Point cloud, Simulation

The invention discloses a robot guidance system and method based on point cloud data. A visual analysis mechanism converts depth-camera data into position information for the assembly points, yielding an accurate, real-time positional relationship. Whether or not the positions of the workpieces to be assembled change, precise position information is provided for subsequent robot actions. The positions are calibrated through an eye-hand relationship processing mechanism, so that the assembly robot's control mechanism can plan its actions accurately and perform fast, precise assembly.

Owner:LASER FUSION RES CENT CHINA ACAD OF ENG PHYSICS
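
The position pipeline in the abstract above (depth data to assembly-point position, then eye-hand calibration into the robot frame) can be reduced to two operations: locating the assembly point in the camera frame, here assumed to be the centroid of an already segmented point-cloud region, and applying a 4x4 homogeneous camera-to-base transform assumed to come from a prior hand-eye calibration. The function names and the centroid choice are illustrative assumptions, not the patent's stated method.

```python
from typing import List, Sequence

Point = Sequence[float]
Transform = List[List[float]]  # 4x4 homogeneous matrix, row-major


def centroid(points: List[Point]) -> List[float]:
    """Mean of a segmented point-cloud region, used as the assembly-point estimate."""
    if not points:
        raise ValueError("empty point cloud region")
    n = len(points)
    return [sum(p[axis] for p in points) / n for axis in range(3)]


def camera_to_base(point: Point, T_base_cam: Transform) -> List[float]:
    """Map a camera-frame point into the robot base frame: p_base = T_base_cam * [p; 1]."""
    homog = (point[0], point[1], point[2], 1.0)
    return [sum(T_base_cam[row][col] * homog[col] for col in range(4))
            for row in range(3)]


def assembly_target(region: List[Point], T_base_cam: Transform) -> List[float]:
    """Assembly-point position expressed in the robot base frame."""
    return camera_to_base(centroid(region), T_base_cam)
```

Because the transform is applied to each new measurement, the target in the base frame updates whenever the workpiece moves, matching the abstract's point that positions stay accurate whether or not the workpieces shift.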

Systems, methods, and apparatus for neuro-robotic tracking point selection

Inactive | US8788030B1 | Electroencephalography, Input/output for user-computer interaction, Pattern recognition, Visual servoing

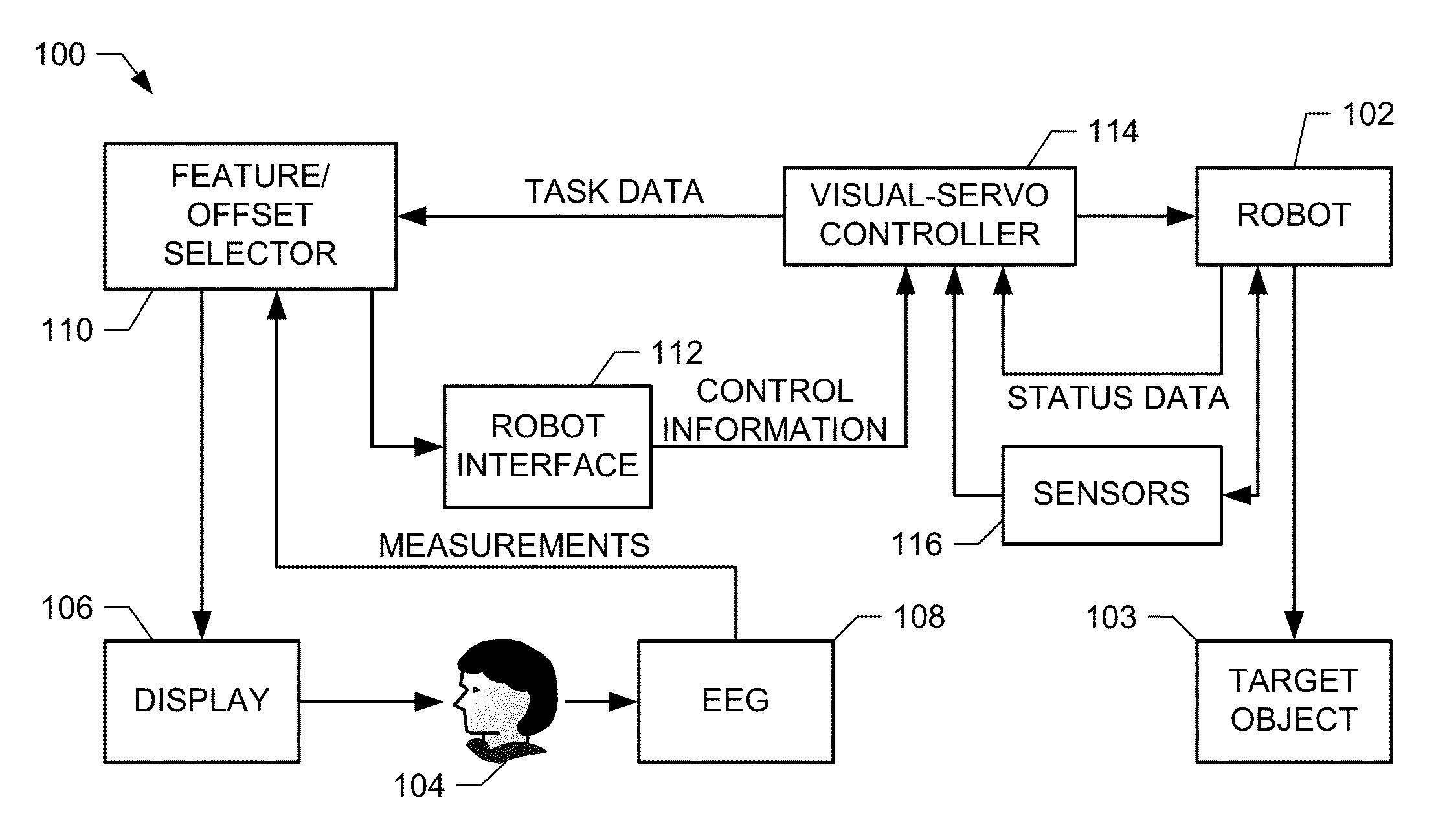

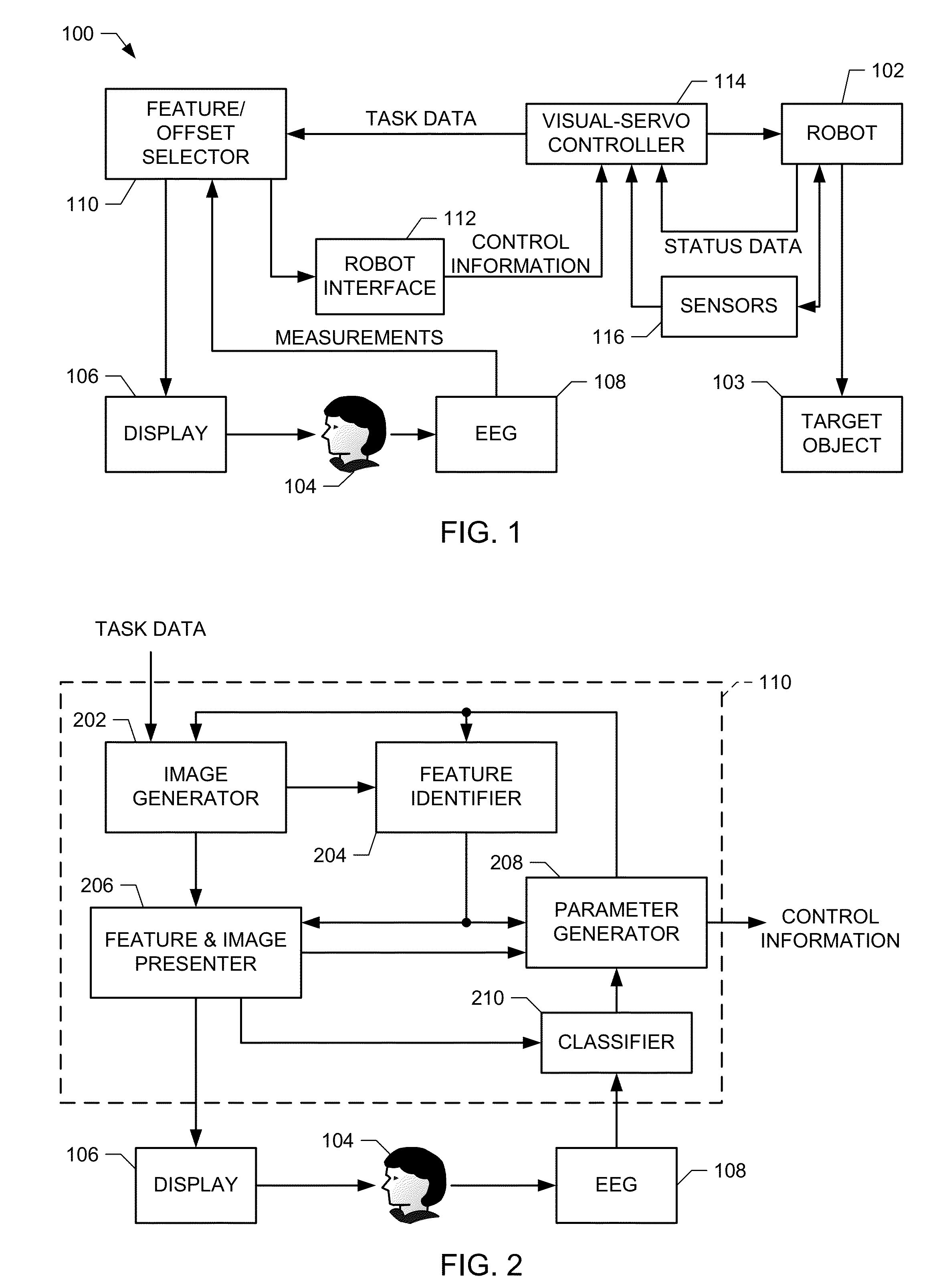

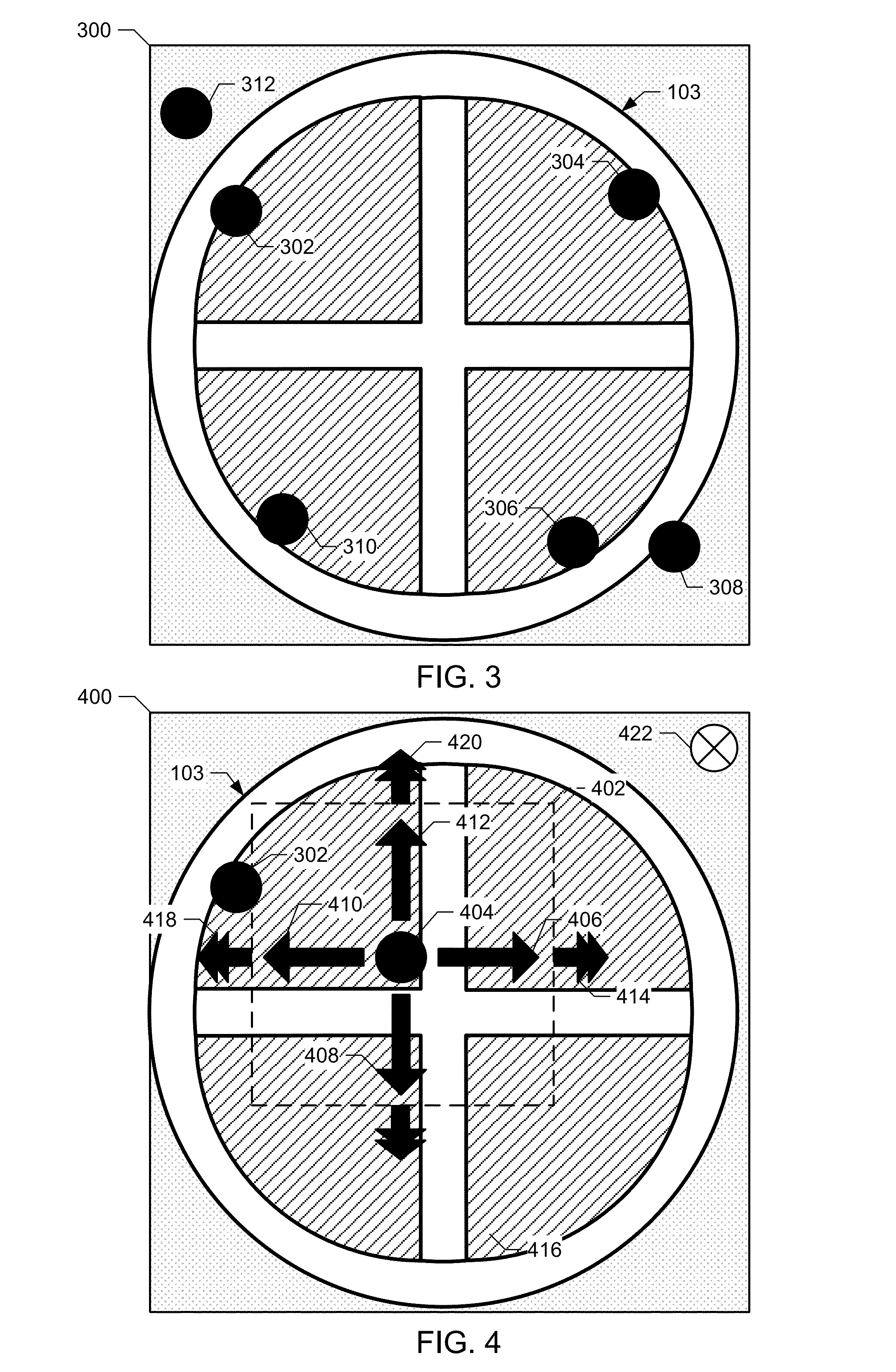

Systems, methods, and apparatus for neuro-robotic tracking point selection are disclosed. A described example robot control system includes a feature and image presenter, a classifier, a visual-servo controller, and a robot interface. The feature and image presenter is to display an image of an object, emphasize one or more potential trackable features of the object, receive a selection of an emphasized feature, and determine an offset from the selected feature as a goal. The classifier is to classify a mental response to the emphasized features and to determine that the mental response corresponds to the selection of one of the emphasized features. The visual-servo controller is to track the emphasized feature corresponding to an identified brain signal. The robot interface is to generate control information to effect a robot action based on the emphasized feature, while the visual-servo controller tracks the emphasized feature as the robot action is being effected.

Owner:HRL LAB
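
The selection-and-tracking loop described above can be sketched as two small pieces: choosing the emphasized feature with the strongest classified brain response, and a proportional image-based visual-servo update toward that feature plus the selected offset. The score dictionary, threshold, gain, and function names are hypothetical; the patent abstract does not specify the classifier or the control law at this level.

```python
from typing import Dict, Optional, Tuple

Pixel = Tuple[float, float]


def select_feature(scores: Dict[str, float], threshold: float = 0.5) -> Optional[str]:
    """Pick the emphasized feature with the highest brain-response score,
    provided the classifier is confident enough (score >= threshold)."""
    if not scores:
        return None
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None


def servo_step(feature_px: Pixel, offset_px: Pixel, current_px: Pixel,
               gain: float = 0.5) -> Pixel:
    """One proportional image-space servo command toward feature + offset."""
    goal = (feature_px[0] + offset_px[0], feature_px[1] + offset_px[1])
    error = (goal[0] - current_px[0], goal[1] - current_px[1])
    return (gain * error[0], gain * error[1])
```

Returning `None` below the threshold lets the system keep presenting features instead of acting on an ambiguous brain response; the servo step is then re-evaluated on every new frame while the robot action is being effected.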

© 2025 PatSnap. All rights reserved.