Multimodal image fusion classification method and system based on adversarial complementary features

A multimodal image fusion classification technology, applied in neural learning methods, biological neural network models, instruments, etc. It addresses problems such as overfitting in deep learning and the failure to effectively mine and fuse complementary information, both of which limit fusion classification accuracy. Its effects are to strengthen information interaction and to address the uneven distribution of small samples.



Examples

Embodiment 1

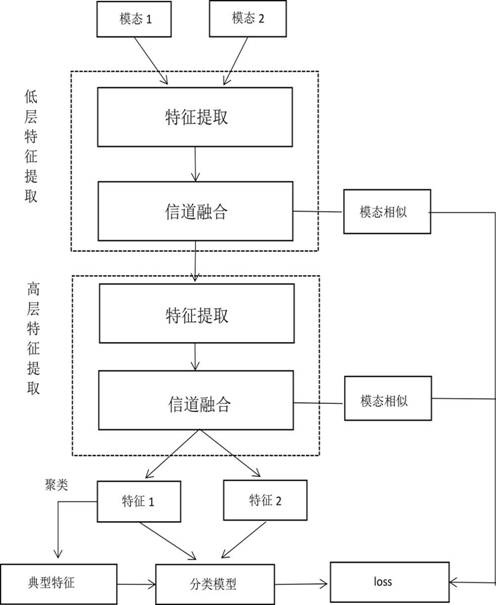

[0028] The present disclosure provides a multimodal image fusion classification method based on adversarial complementary features which, as shown in Figure 1, comprises the following steps:

[0029] Step 1: Collect and preprocess multimodal image data, select the modalities to be fused from the multiple modalities, and input the image data of each modality into the neural network model in groups;

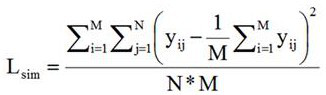

[0030] Step 2: First, perform low-level feature extraction to obtain the key feature information vectors of the images. Then determine whether the first channel fusion can be performed, and at the same time perform the first similarity calculation on the obtained key feature vectors;

[0031] Step 3: Then perform high-level feature extraction on the features obtained from the low-level feature extraction, extract the key feature information vectors of the images again, determine whether the second channel fusion can be performed, and at the same time perform the second similarity calculation;

[0032] Step 4: Pe...
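Steps 2 and 3 each include a similarity calculation on the key feature vectors of the modalities. The visible text does not specify the metric.

The following is a minimal sketch that assumes cosine similarity between same-length key feature vectors of two modalities. The function names `cosine_similarity` and `stage_similarities` are hypothetical and are not taken from the disclosure.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two key feature vectors of equal length.

    Assumption: the disclosure's similarity measure is not stated; cosine
    similarity is used here purely for illustration.
    """
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as carrying no similarity
    return dot / (norm_a * norm_b)


def stage_similarities(low_a: Sequence[float], low_b: Sequence[float],
                       high_a: Sequence[float], high_b: Sequence[float]) -> Dict[str, float]:
    """Hypothetical helper: the first (low-level) and second (high-level)
    similarity calculations between the key feature vectors of two modalities."""
    return {
        "first": cosine_similarity(low_a, low_b),
        "second": cosine_similarity(high_a, high_b),
    }


if __name__ == "__main__":
    # Made-up key feature vectors for two modalities A and B.
    print(stage_similarities([1.0, 0.0, 2.0], [0.5, 1.0, 2.0],
                             [1.0, 0.0], [0.0, 1.0]))
```

Cosine similarity is chosen here only because it is scale-invariant and bounded in [-1, 1]. That keeps the low-level and high-level scores on a common scale. The disclosure may use a different measure.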

Embodiment 2

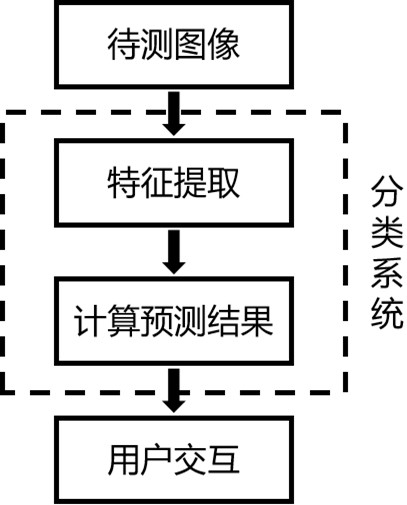

[0061] The present disclosure provides a multimodal image fusion classification system based on adversarial complementary features, including:

[0062] A data acquisition and processing module for collecting and preprocessing multimodal image data;

[0063] A feature extraction module for:
- selecting the modalities to be fused from the plurality of modalities;
- inputting the image data of each modality into a neural network model in groups;
- performing low-level feature extraction to obtain the key feature information vectors of the images;
- loading the features obtained by the low-level feature extraction into another convolutional neural network and performing convolution operations for high-level feature extraction;
- extracting the key feature information vectors of the images again, and obtaining the feature map group of the image group;

[0064] A channel fusion module for determining whether the first channel fusion and the second channel fusion can be performed. If yes, use the bn lay...
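The module description breaks off where it explains how the BN (batch normalization) layer is used, so the exact procedure is unknown.

One published way of using BN scaling factors for channel-level fusion is channel exchanging. A channel whose BN scaling factor |γ| is near zero is treated as uninformative and replaced by the corresponding channel of the other modality.

The sketch below illustrates that idea only as an assumption, not as the disclosure's method. The following are all hypothetical:
- the names `exchange_channels` and `can_fuse`;
- the flattened per-channel layout;
- the threshold value;
- the fusion criterion.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout: each channel of a feature map flattened to a list.
Channel = List[float]


def exchange_channels(
    feats_a: Sequence[Channel],
    feats_b: Sequence[Channel],
    gamma_a: Sequence[float],
    gamma_b: Sequence[float],
    threshold: float = 1e-2,
) -> Tuple[List[Channel], List[Channel]]:
    """Channel-exchange style fusion driven by BN scaling factors (assumed).

    A channel of one modality whose |gamma| is below `threshold` is replaced
    by the same-index channel of the other modality.
    """
    if not (len(feats_a) == len(feats_b) == len(gamma_a) == len(gamma_b)):
        raise ValueError("both modalities need the same number of channels")
    fused_a: List[Channel] = []
    fused_b: List[Channel] = []
    for ca, cb, ga, gb in zip(feats_a, feats_b, gamma_a, gamma_b):
        # Copy the channels so the caller's feature maps are not mutated.
        fused_a.append(list(cb) if abs(ga) < threshold else list(ca))
        fused_b.append(list(ca) if abs(gb) < threshold else list(cb))
    return fused_a, fused_b


def can_fuse(gamma_a: Sequence[float], gamma_b: Sequence[float],
             threshold: float = 1e-2) -> bool:
    """Hypothetical fusion check: fusion is possible when any channel of
    either modality has a BN scaling factor below the threshold."""
    return any(abs(g) < threshold for g in list(gamma_a) + list(gamma_b))


if __name__ == "__main__":
    a = [[1.0, 1.0], [2.0, 2.0]]  # modality A, two channels
    b = [[5.0, 5.0], [6.0, 6.0]]  # modality B, two channels
    gamma_a, gamma_b = [0.5, 0.001], [0.8, 0.9]
    if can_fuse(gamma_a, gamma_b):
        print(exchange_channels(a, b, gamma_a, gamma_b))
```

In a real network, the γ values would come from the BN layer following each modality's convolution block, and the replacement would operate on tensors rather than Python lists.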

