Method for collecting imitation learning data by using virtual reality technology

This technology, in the fields of virtual reality and data collection, applies to imitation learning data collection using virtual reality. It addresses problems such as difficulty in the demonstration stage, low efficiency, and model performance dependence, and it improves model training efficiency while making data collection easier.

Examples

Embodiment 1

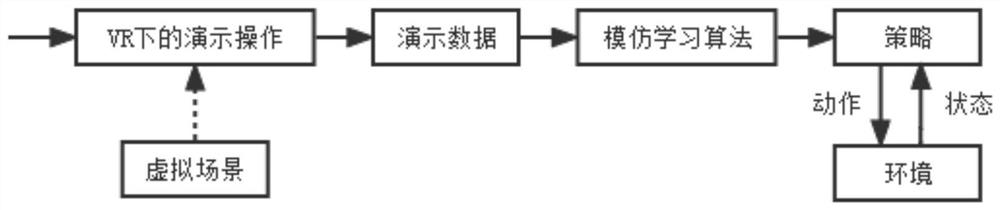

[0034] Referring to Figure 1, a method for imitation learning data collection using virtual reality technology

[0035] includes the following steps:

[0036] Step 1: Acquire scene image data and build a virtual scene in a 3D engine that replicates the real scene;

[0037] Step 2: Set up at least one operable virtual model object as an agent in the virtual scene;

[0038] Step 3: According to the specific goal to be achieved, use the Unity ML-Agents plug-in to write code that implements the agent's state input, reward settings, and action output;

[0039] Step 4: Perform reinforcement learning training: configure the reinforcement learning training parameters, run the training, and check the results;

[0040] Step 5: Perform imitation learning training: configure imitation learning training parameters, use virtual reality trackers to perform human demonstrations, and complete imitation learning data collection;

[0041] Step 6: Carry out imitation learning training o...
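Steps 3 and 5 can be illustrated with a minimal sketch. In ML-Agents itself this logic is written in C# on the Agent class (observations, actions, and rewards), and human demonstrations are typically captured with the Demonstration Recorder component; the Python below only mirrors the idea under stated assumptions. The one-dimensional racket task, the `read_tracker_pose` function standing in for a VR tracker, and the names `ToyRacketAgent`, `DemonstrationRecorder`, and `collect_demo` are all hypothetical and are not part of the patent or of ML-Agents.

```python
import json
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ToyRacketAgent:
    """Hypothetical 1-D stand-in for the virtual scene: move a racket toward a target."""
    racket_x: float = 0.0
    target_x: float = 1.0
    max_step: float = 0.25  # largest move allowed per action

    def collect_observations(self) -> List[float]:
        # State input (Step 3): what the policy sees at each step.
        return [self.racket_x, self.target_x - self.racket_x]

    def apply_action(self, move: float) -> float:
        # Action output (Step 3): clamp the continuous move, then apply it.
        move = max(-self.max_step, min(self.max_step, move))
        self.racket_x += move
        # Reward setting (Step 3): negative distance to the target.
        return -abs(self.target_x - self.racket_x)


def read_tracker_pose(t: int) -> float:
    # Hypothetical VR tracker reading: the demonstrator's hand x-position at step t.
    # A real setup would query the tracker SDK instead.
    return min(1.0, 0.2 * t)


@dataclass
class DemonstrationRecorder:
    """Stores (observation, action, reward) tuples from human demonstrations."""
    steps: List[Tuple[List[float], float, float]] = field(default_factory=list)

    def record(self, obs: List[float], action: float, reward: float) -> None:
        self.steps.append((list(obs), action, reward))

    def to_json(self) -> str:
        return json.dumps([{"obs": o, "action": a, "reward": r} for o, a, r in self.steps])


def collect_demo(n_steps: int) -> DemonstrationRecorder:
    """Step 5 sketch: a human drives the agent via the tracker while steps are recorded."""
    agent = ToyRacketAgent()
    recorder = DemonstrationRecorder()
    prev_hand = read_tracker_pose(0)
    for t in range(1, n_steps + 1):
        obs = agent.collect_observations()
        hand = read_tracker_pose(t)
        action = hand - prev_hand  # map hand displacement to a racket move
        prev_hand = hand
        reward = agent.apply_action(action)
        recorder.record(obs, action, reward)
    return recorder
```

Calling `collect_demo(8).to_json()` yields eight (observation, action, reward) records that could serve as imitation learning data. Mapping tracker displacement directly to the action is a simplifying assumption; a real setup would need calibration between tracker space and scene space.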

Embodiment 2

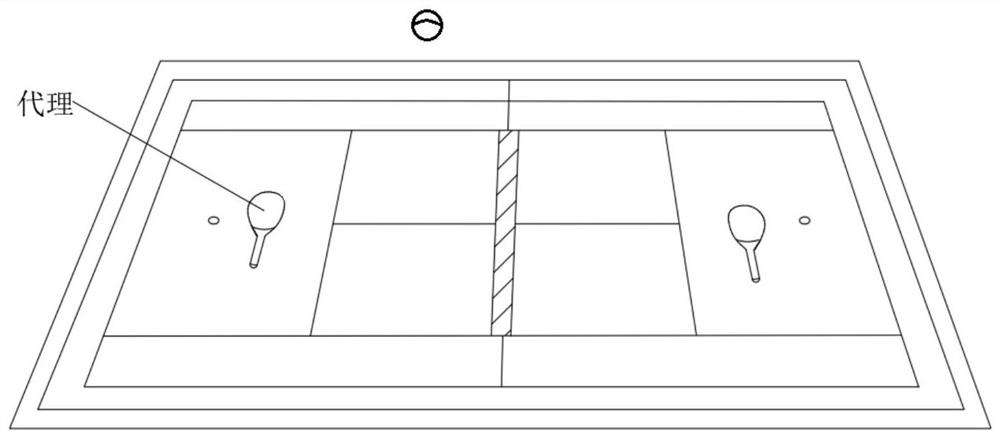

[0045] Referring to Figure 2, and building on Embodiment 1, this embodiment takes a tennis scene as an example and specifically includes the following steps:

[0046] Step 1: Obtain tennis court scene image data and build a virtual tennis court scene in a 3D engine that replicates the real scene;

[0047] Step 2: Set up two operable virtual model objects as agents in the virtual tennis scene, and share policy model parameters according to the agents' rules;

[0048] Specifically, when multiple agents follow the same rules, they use the same policy model; otherwise, different policy models are used.
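The sharing rule in [0048] can be sketched as a lookup keyed by the agents' rules: agents with identical rules receive the same policy object, so a parameter update made through one is seen by all of them. This appears analogous to ML-Agents, where agents with the same Behavior Name are trained as a single policy, but the `Policy`, `Agent`, and `assign_policies` names and the string `rule_key` below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Policy:
    """Hypothetical stand-in for a policy model; it only holds parameters."""
    rule_key: str
    params: List[float] = field(default_factory=lambda: [0.0, 0.0])


@dataclass
class Agent:
    name: str
    rule_key: str  # agents that follow identical rules carry identical keys


def assign_policies(agents: List[Agent]) -> Dict[str, Policy]:
    """Map each agent name to a policy; agents with the same rules share one instance."""
    by_rule: Dict[str, Policy] = {}
    assignment: Dict[str, Policy] = {}
    for agent in agents:
        if agent.rule_key not in by_rule:
            by_rule[agent.rule_key] = Policy(agent.rule_key)
        assignment[agent.name] = by_rule[agent.rule_key]
    return assignment


# Two tennis players following the same (hypothetical) rules share one policy.
shared = assign_policies([Agent("player_a", "tennis_player"), Agent("player_b", "tennis_player")])
shared["player_a"].params[0] = 0.5  # an update through one agent...
print(shared["player_b"].params[0])  # ...is visible to the other
```

Sharing one object rather than copying parameters keeps the two players' policies identical by construction, which suits a symmetric game such as tennis.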

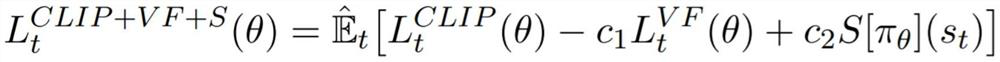

[0049] It should be noted that the policy model uses the Proximal Policy Optimization (PPO) algorithm, where:

[0050] The ratio of the action probability under the current policy to the action probability under the previous policy, $r_t(\theta) = \pi_\theta(a_t \mid s_t) / \pi_{\theta_{\text{old}}}(a_t \mid s_t)$, is used to constrain the objective function so that large policy updates do not occur.
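The constraint described in [0050] corresponds to the standard PPO clipped surrogate objective, $\frac{1}{N}\sum_t \min\big(r_t A_t,\ \operatorname{clip}(r_t, 1-\epsilon, 1+\epsilon) A_t\big)$, where $r_t$ is the new-to-old action probability ratio and $A_t$ is an advantage estimate. The source does not state $\epsilon$ or how advantages are computed; $\epsilon = 0.2$ below is a commonly used default and an assumption, and the function name is hypothetical.

```python
import math
from typing import Sequence


def ppo_clipped_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    epsilon: float = 0.2,  # assumed clip range; not specified in the source
) -> float:
    """Mean PPO clipped surrogate objective (to be maximized)."""
    if not advantages or not (len(logp_new) == len(logp_old) == len(advantages)):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With a ratio of 1.5 and an advantage of 1.0, the term is capped at 1.2, so the update gains nothing from pushing the ratio further; with an advantage of -1.0 the unclipped -1.5 is kept, which is the pessimistic bound PPO relies on.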

[0051] Cropped...
