168 results about "Imitation learning" patented technology

Imitation learning is a form of learning in which an individual observes an arbitrary behavior of a demonstrator and replicates it.

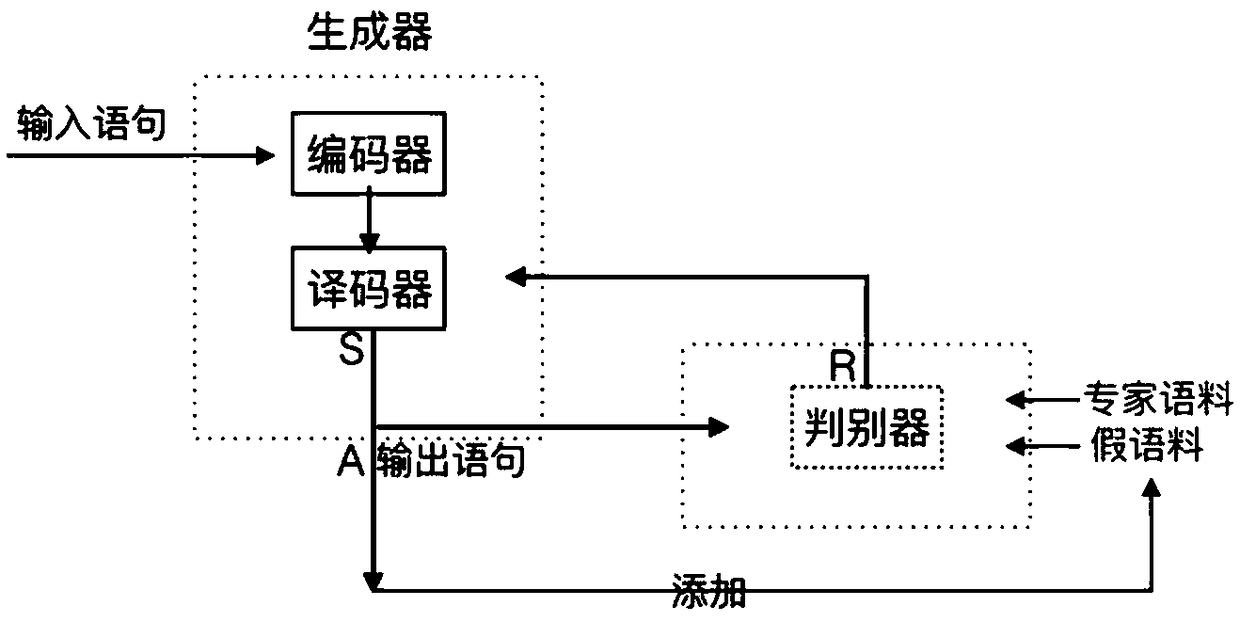

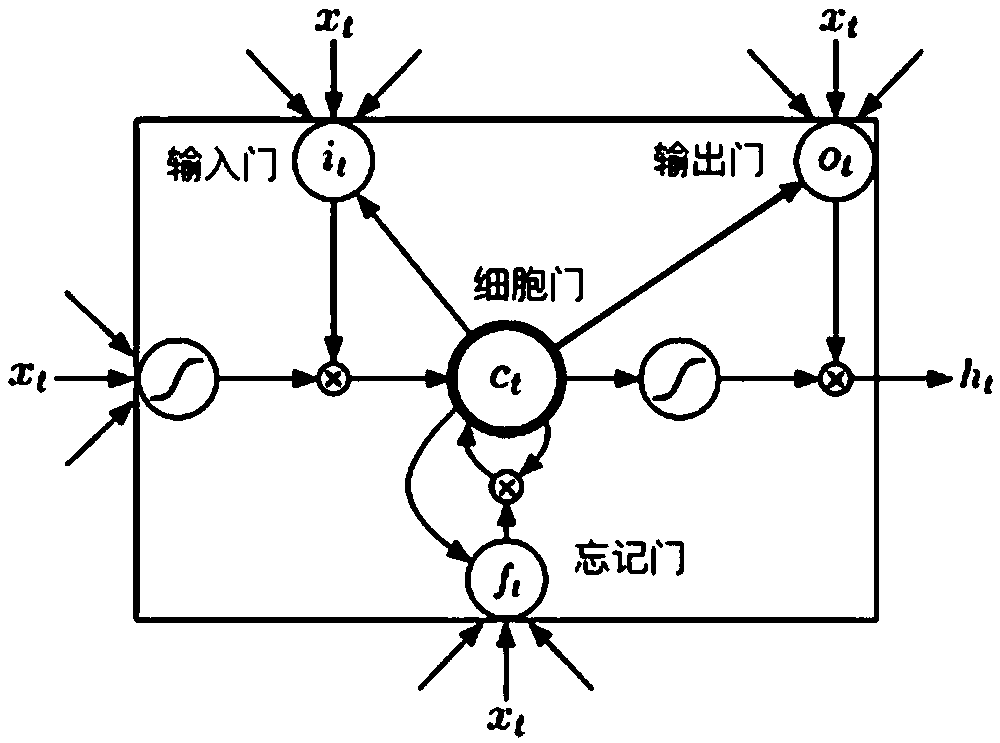

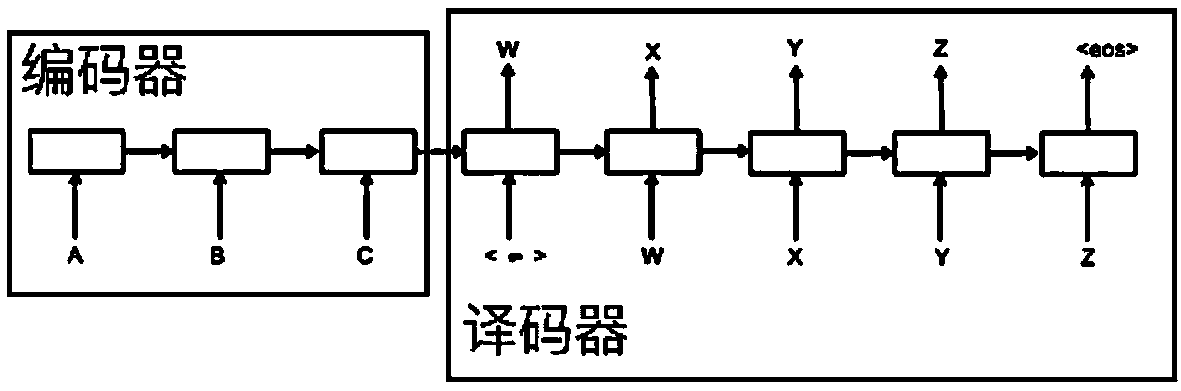

Learning by imitation dialogue generation method based on generative adversarial networks

Active | CN108734276A | Increase diversity | Avoid prone to overfitting problems | Neural architectures | Neural learning methods | Discriminator | Generative adversarial network

The invention relates to an imitation-learning dialogue generation method based on generative adversarial networks. The method comprises the following steps: 1) building an expert corpus of dialogue statements; 2) building the generative adversarial network, in which the generator comprises an encoder-decoder pair; 3) building a false corpus; 4) performing initial classification training of the discriminator; 5) feeding an input statement into the generator and training the generator's encoder and decoder through a reinforcement learning architecture; 6) adding the output statement generated in step 5) to the false corpus and continuing to train the discriminator; 7) alternating between training the generator and training the discriminator, following the training mode of the generative adversarial network, until both have converged. Compared with the prior art, the method generates statements that are more similar to human ones, improves the training of the dialogue generation model, and addresses the excessively high frequency of generic answers.

Owner:TONGJI UNIV

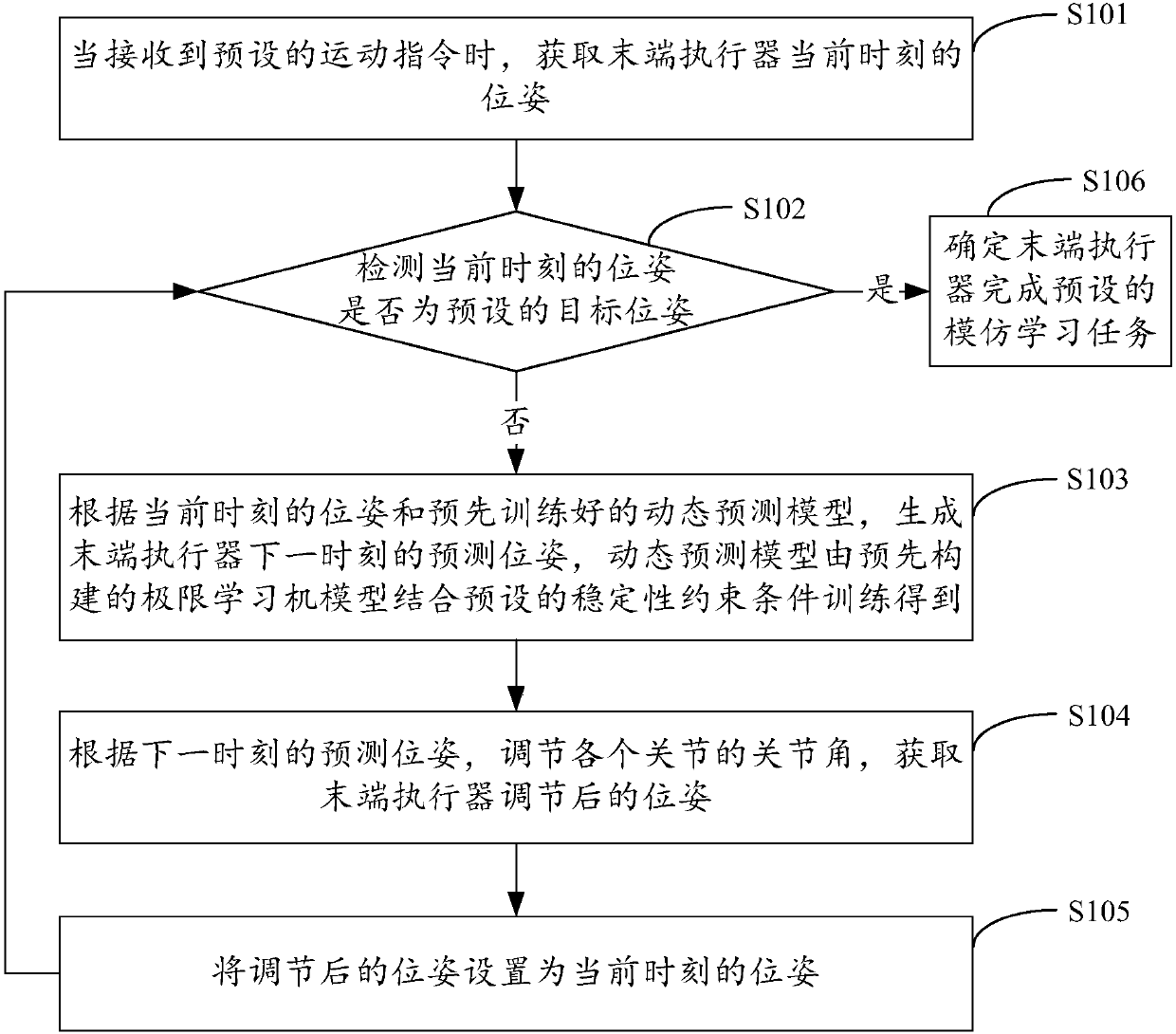

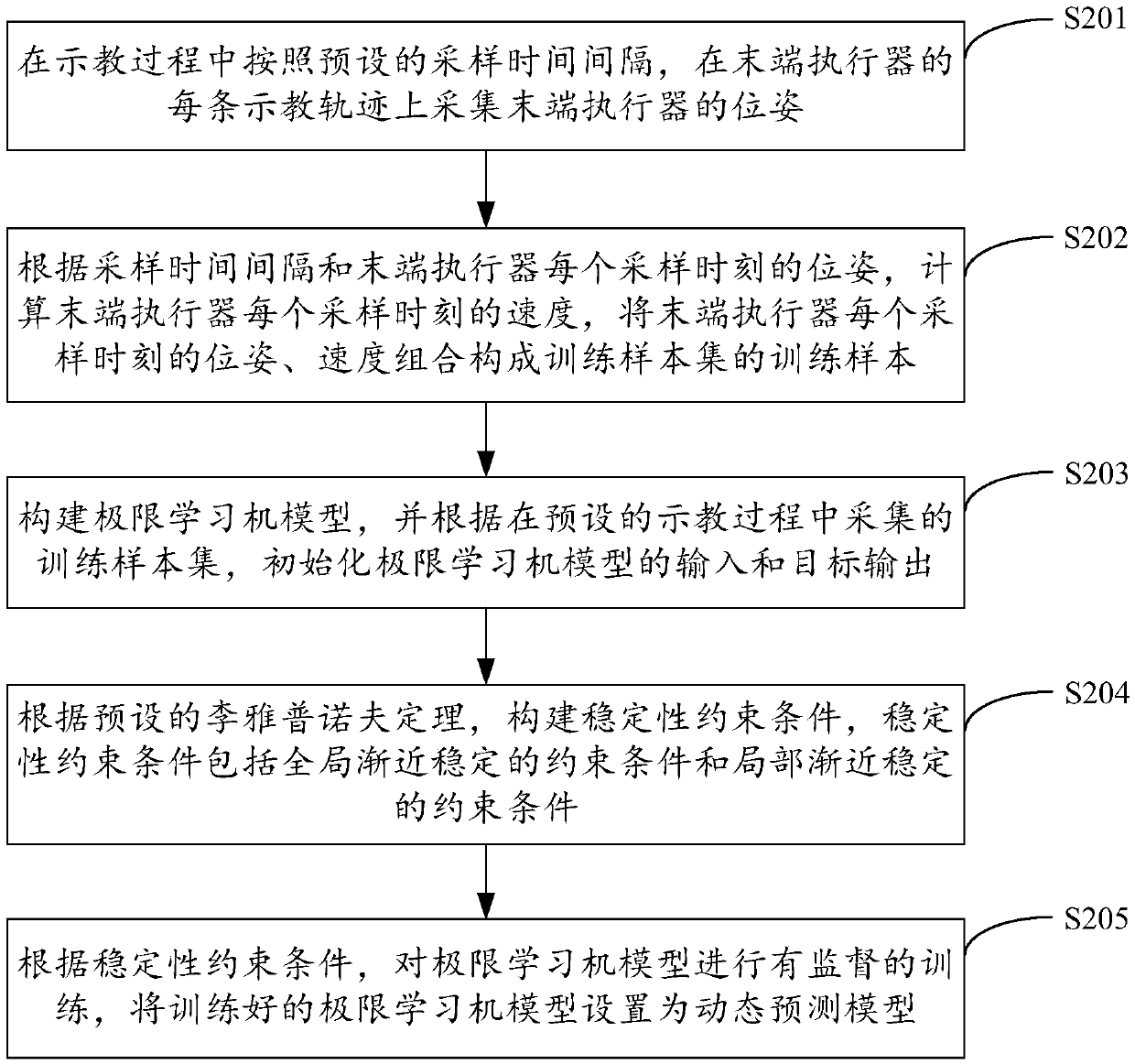

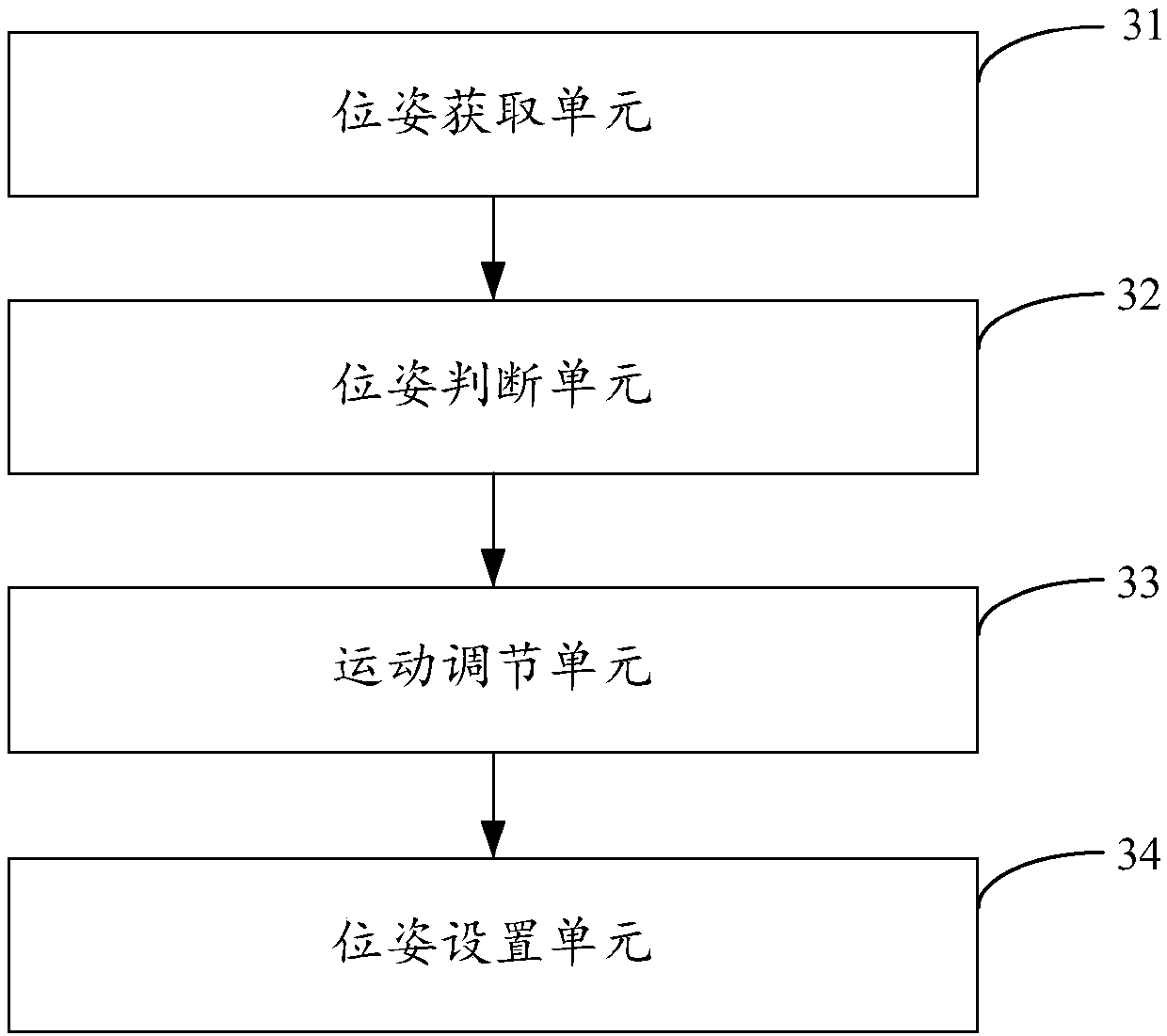

Robot imitation learning method and device, robot and storage medium

Active | CN108115681A | Guaranteed stability | Guaranteed reproducibility | Programme-controlled manipulator | Learning machine | Simulation

The invention is applicable to the field of robots and intelligent control, and provides a robot imitation learning method and device, a robot and a storage medium. The method comprises: acquiring the pose of the end-effector at the current time when a movement instruction is received, and checking whether that pose is the target pose; if it is, determining that the end-effector has finished the preset imitation learning task; otherwise, generating the predicted pose of the end-effector at the next time from the pose at the current time and a dynamic prediction model, and adjusting the joint angles according to the predicted pose; then setting the adjusted pose of the end-effector as the pose at the current time and returning to the step of checking whether the pose at the current time is the target pose. The dynamic prediction model is obtained by training an extreme learning machine model under preset stability constraints, which ensures the stability, repeatability and model training speed of robot imitation learning and effectively makes the robot's motion more human-like.

Owner:SHENZHEN INST OF ADVANCED TECH
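
The closed loop described in the abstract above (check the pose, predict the next pose, adjust, repeat) can be illustrated with a minimal sketch. The function `predict_next_pose` is a hypothetical stand-in for the trained dynamic prediction model: it simply moves a fixed fraction of the way toward the target so that the loop converges, and it is not the extreme learning machine model of the patent. Poses are plain coordinate tuples, and the joint-angle adjustment is omitted.

```python
import math

def predict_next_pose(pose, target, gain=0.5):
    """Hypothetical stand-in for the learned dynamic prediction model.

    Moves a fixed fraction of the remaining distance toward the target,
    which makes the motion converge (a toy analogue of a stability constraint).
    """
    return tuple(p + gain * (t - p) for p, t in zip(pose, target))

def run_imitation_task(start, target, tol=1e-3, max_steps=100):
    """Repeat check -> predict -> adjust until the target pose is reached."""
    pose = start
    path = [pose]
    for _ in range(max_steps):
        if math.dist(pose, target) <= tol:      # target pose reached: task finished
            return path, True
        pose = predict_next_pose(pose, target)  # joint-angle adjustment omitted
        path.append(pose)
    return path, math.dist(pose, target) <= tol

path, finished = run_imitation_task((0.0, 0.0, 0.0), (1.0, 2.0, 3.0))
```

In a real system the predicted pose would be converted to joint angles through inverse kinematics before the next check.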

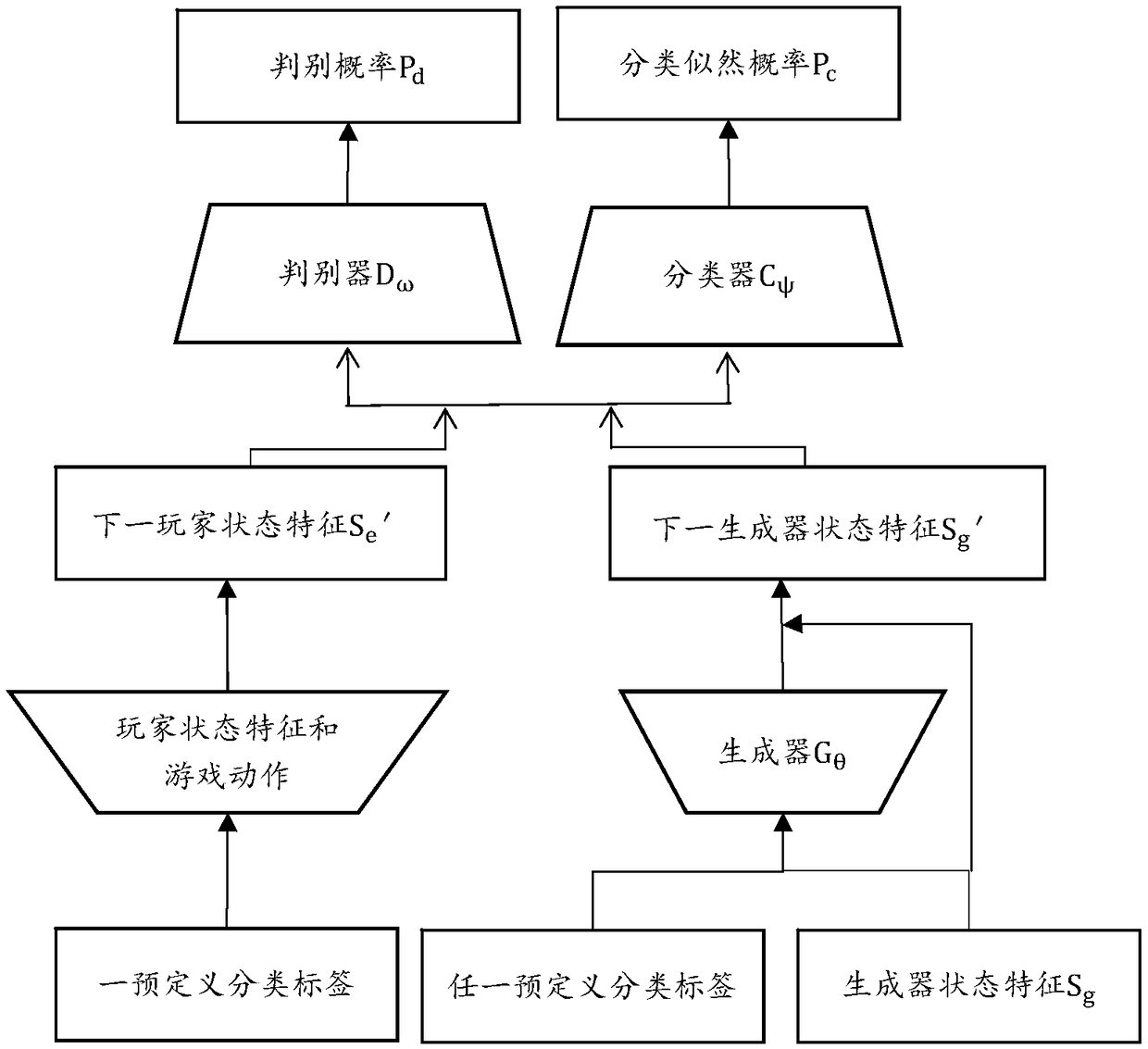

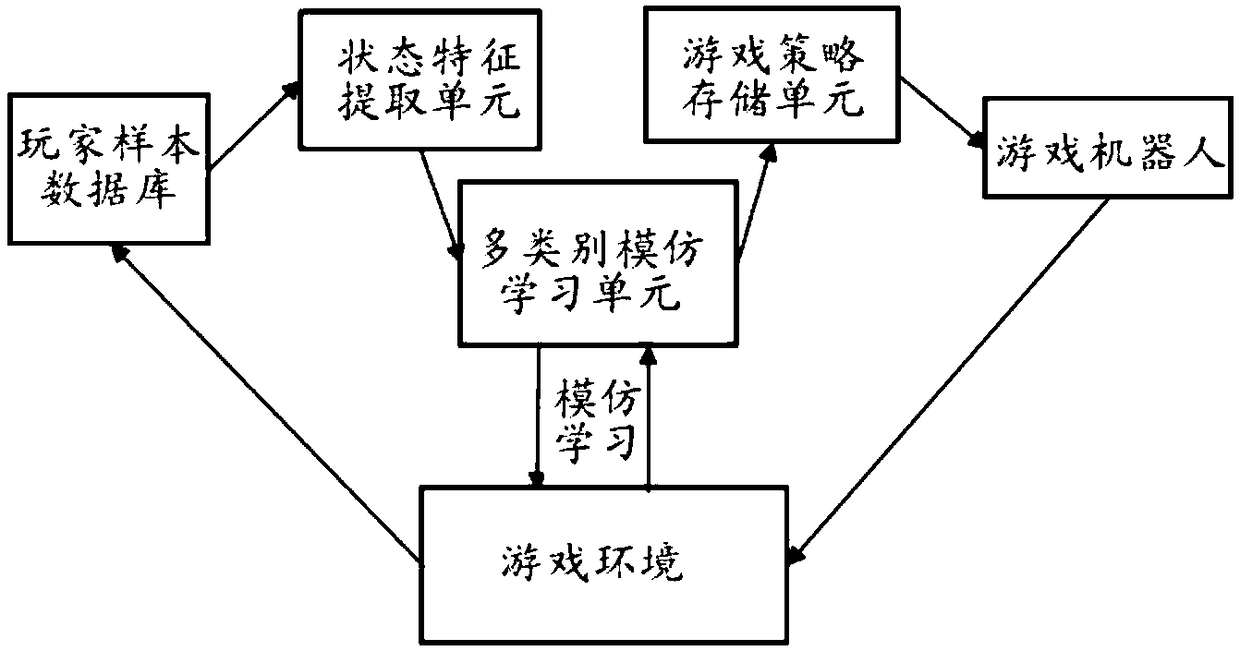

End-to-end game robot generation method and system based on multi-class imitation learning

Active | CN108724182A | Scientific learning style | Smart learning | Programme-controlled manipulator | Technical grade | Human–computer interaction

The invention relates to an end-to-end game robot generation method and system based on multi-class imitation learning, which produces game robots whose playing level is comparable to that of players of different technical grades. The method comprises the following steps: establishing a player sample database; and forming an adversarial network from a policy generator, a policy discriminator and a policy sorter, in which the policy generator performs imitation learning and obtains game policies similar to the game behaviors of players of different technical grades, from which the game robots are generated. The policy generator, the policy discriminator and the policy sorter are multilayer neural networks. The method and system yield multiple classes of game robots, and the robot of each class simulates a game policy close to that of players of the corresponding class.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

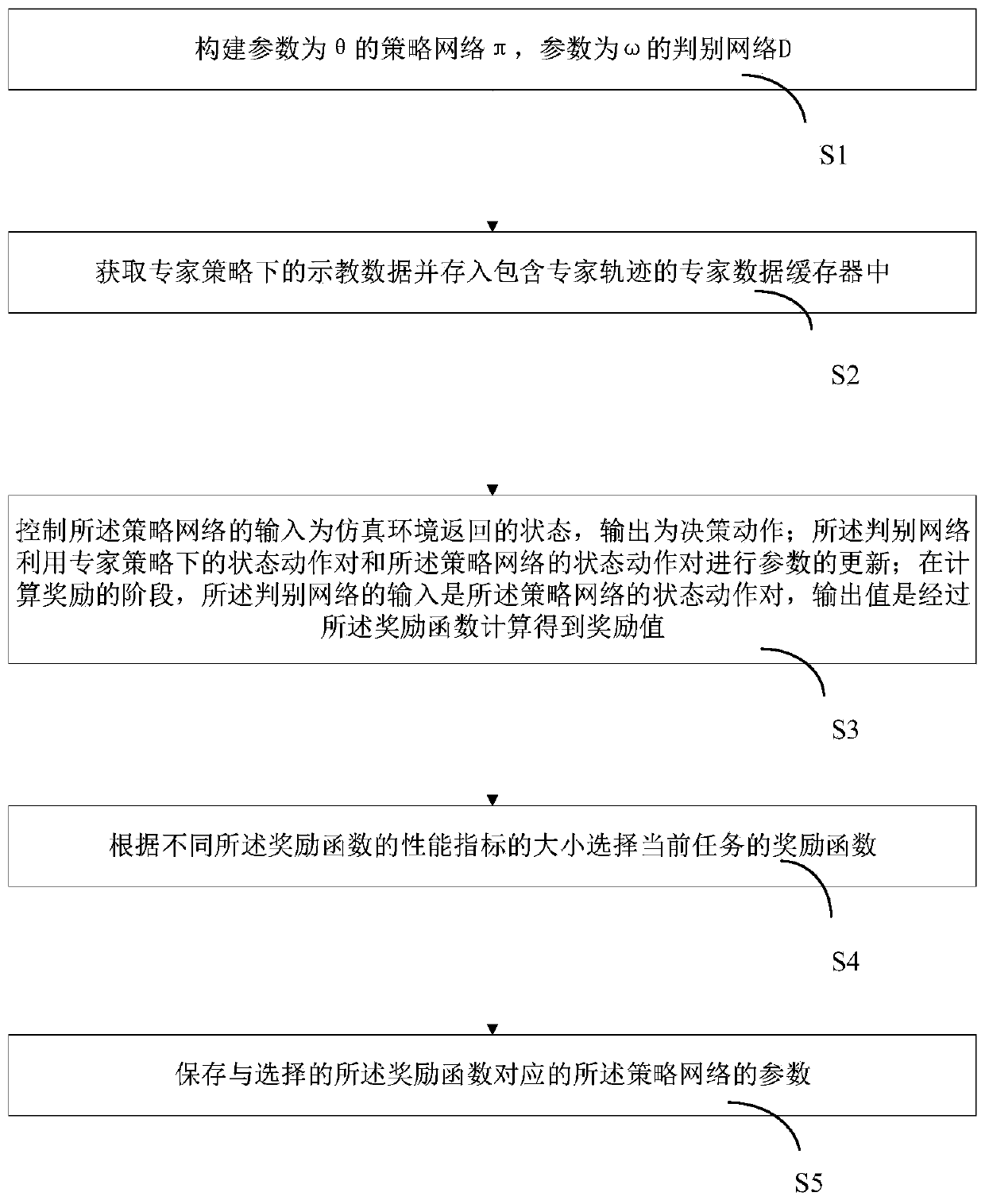

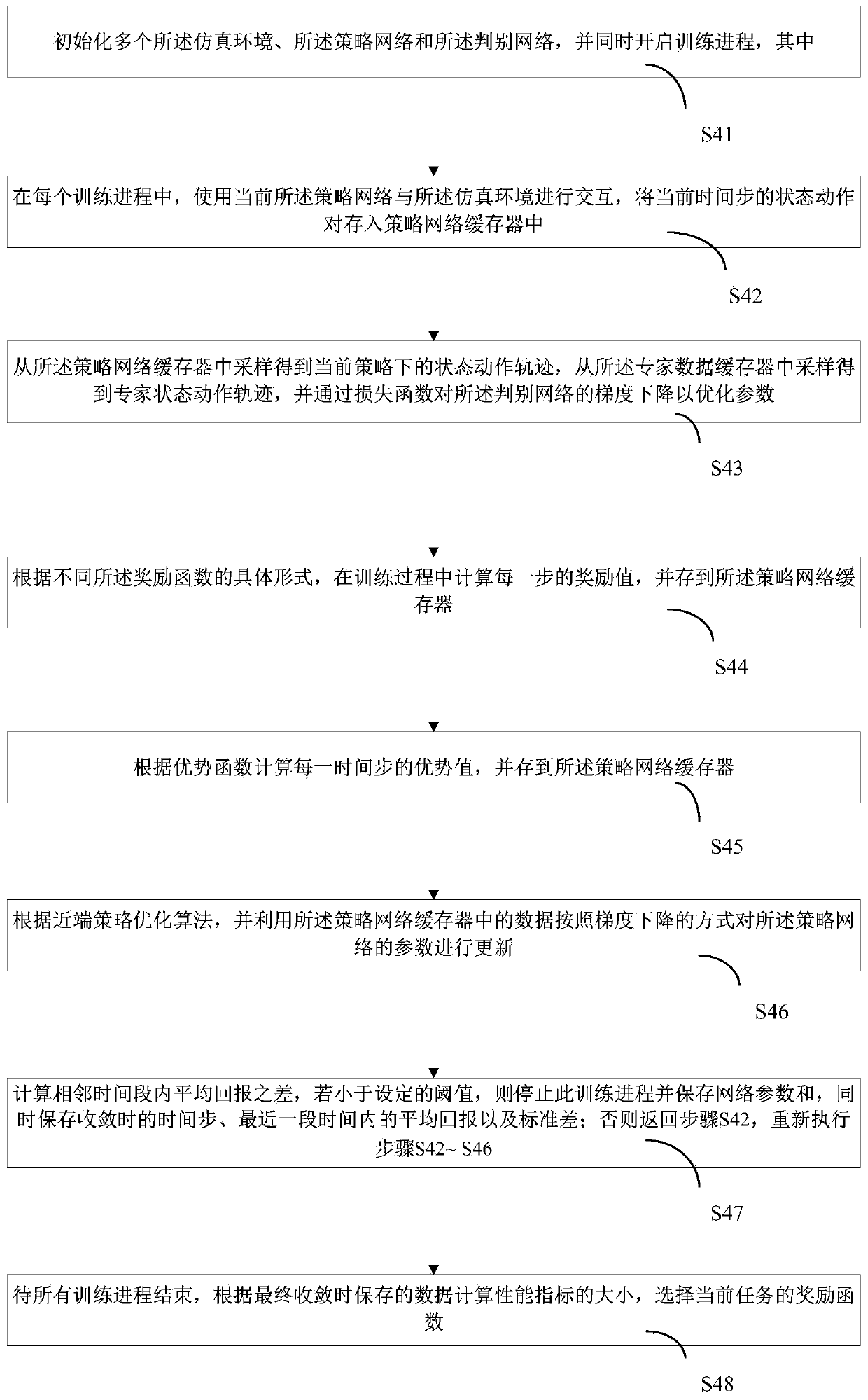

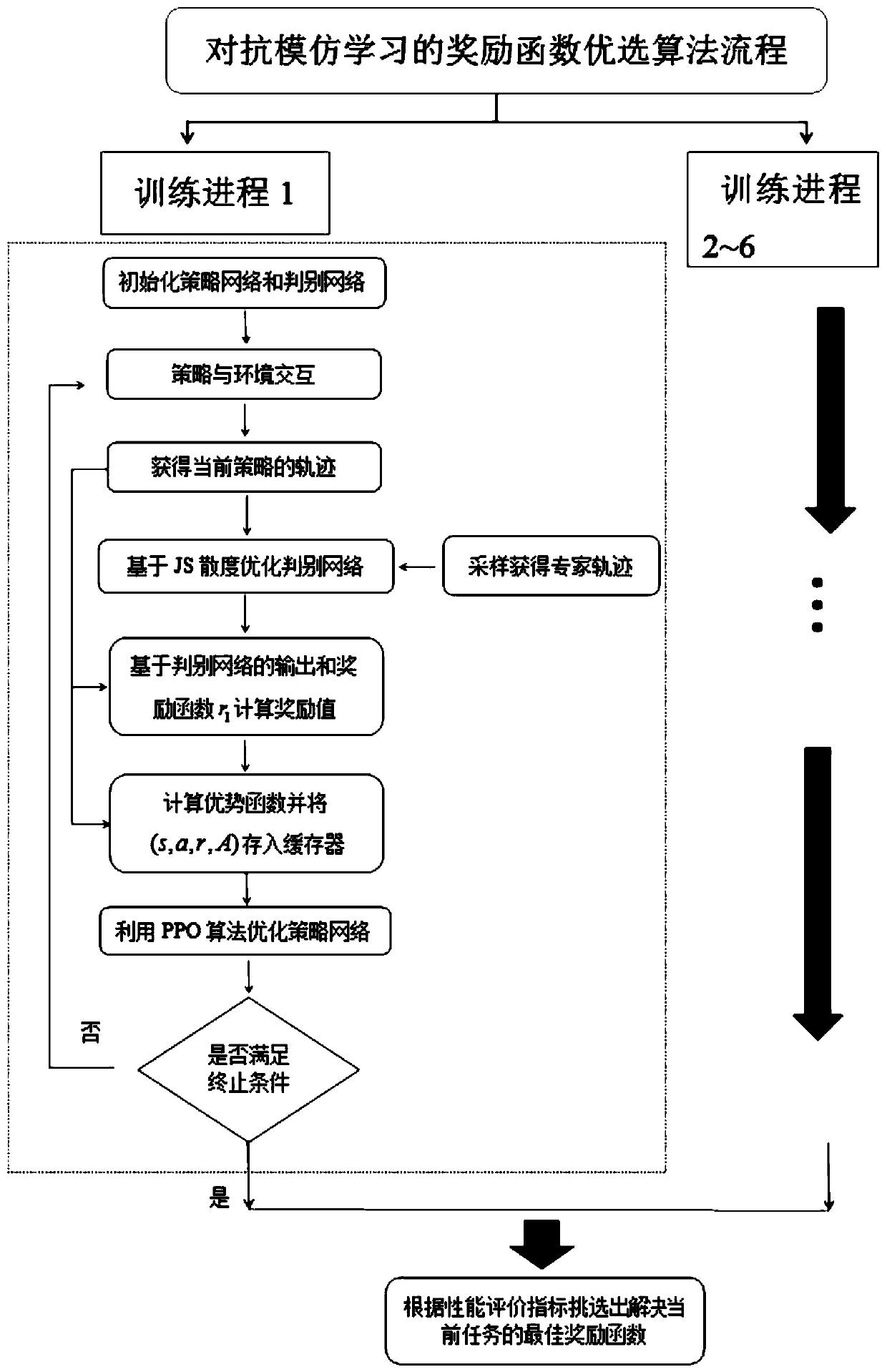

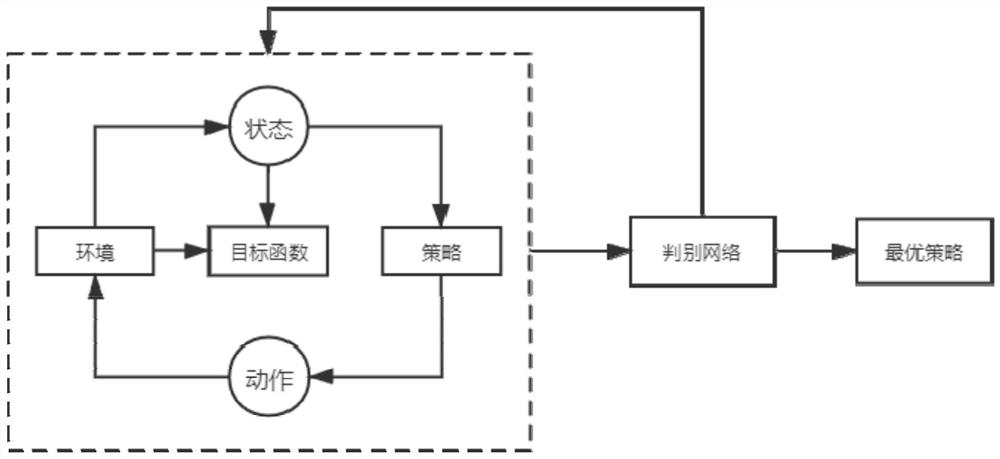

Method for selecting reward function in adversarial imitation learning

The invention provides a method for selecting reward functions in adversarial imitation learning. The method comprises the following steps: constructing a strategy network with parameter theta, a discrimination network with parameter w, and at least two reward functions; obtaining teaching data under an expert strategy and storing it in an expert data buffer containing expert trajectories, wherein the input of the strategy network is the state returned by the simulation environment and its output is a decision action; updating the parameters of the discrimination network using state-action pairs from the expert strategy and state-action pairs from the strategy network; in the reward calculation stage, feeding a state-action pair of the strategy network into the discrimination network, whose output value is the reward computed by the reward function; selecting the reward function for the current task according to the performance indexes of the different reward functions; and storing the parameters of the strategy network corresponding to the selected reward function. The agent learns under the guidance of different reward functions, and the optimal reward function for a specific task scenario is then selected according to the performance evaluation index.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
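
As a rough illustration of the selection step in the abstract above, the sketch below defines three candidate reward functions of the discriminator output and picks the one with the best performance index. The three forms are common in the adversarial imitation learning literature; the abstract does not say which ones the patent uses. The sketch assumes `d` is the discriminator's probability that a state-action pair came from the expert, and the performance scores are made-up placeholder numbers.

```python
import math

# Candidate reward functions of the discriminator output d = D(s, a), 0 < d < 1.
# Assumption: d is the probability that the pair was produced by the expert.
REWARD_FUNCTIONS = {
    "log_d": lambda d: math.log(d),                          # always negative
    "neg_log_one_minus_d": lambda d: -math.log(1.0 - d),     # always positive
    "log_ratio": lambda d: math.log(d) - math.log(1.0 - d),  # signed
}

def select_reward_function(performance):
    """Return the name of the reward function with the highest performance index."""
    return max(performance, key=performance.get)

# Hypothetical performance indexes, e.g. the mean episode return reached by
# the strategy network trained under each reward function.
performance = {"log_d": 120.0, "neg_log_one_minus_d": 180.0, "log_ratio": 150.0}
best = select_reward_function(performance)
reward = REWARD_FUNCTIONS[best](0.8)
```

The parameters of the strategy network trained under `best` would then be the ones stored.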

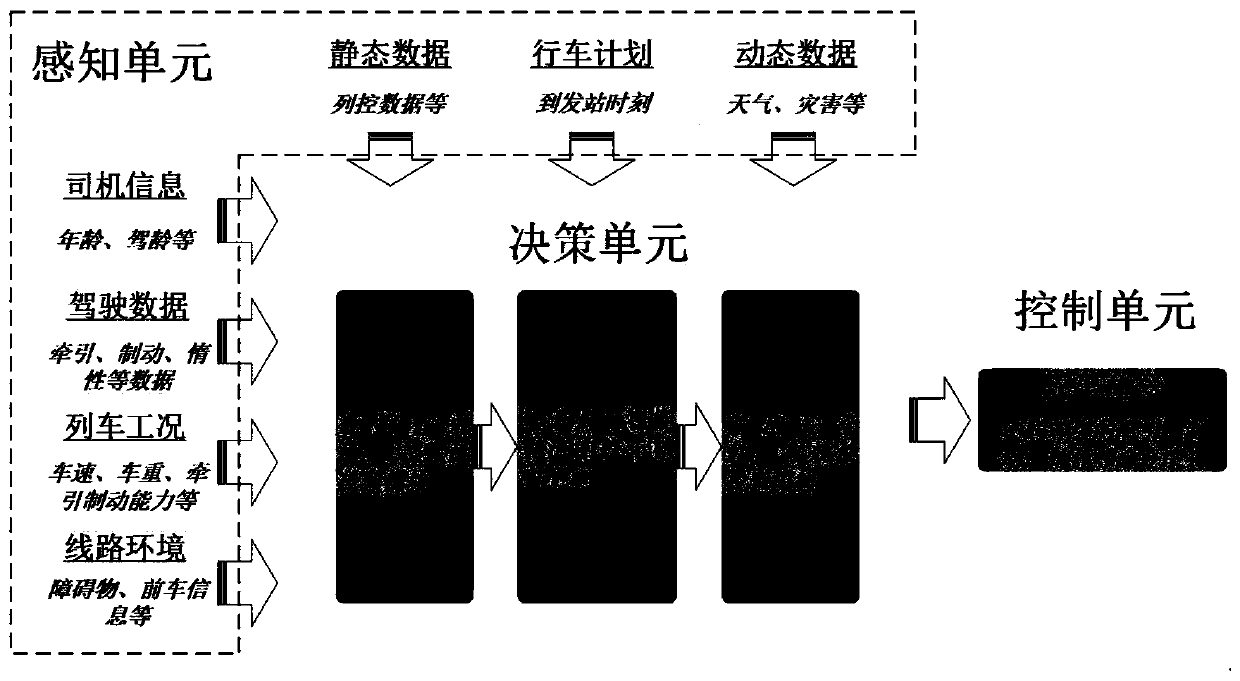

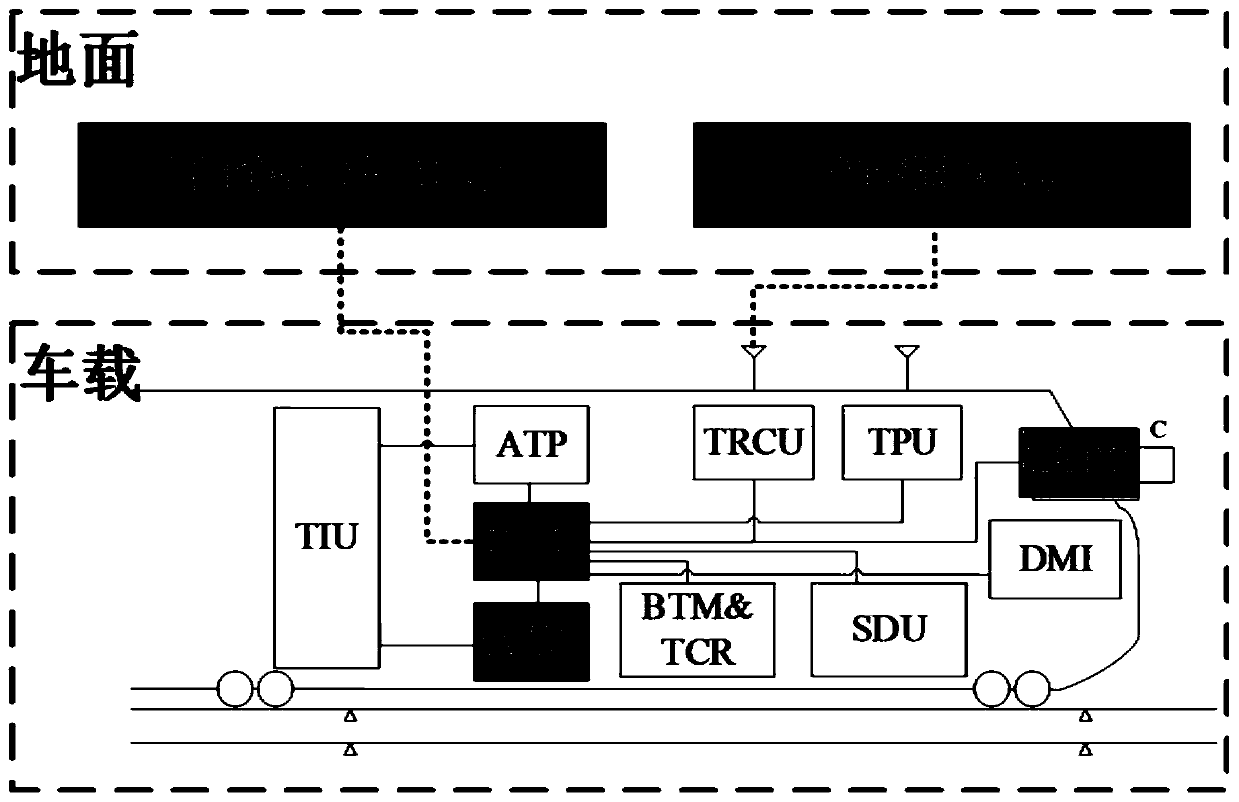

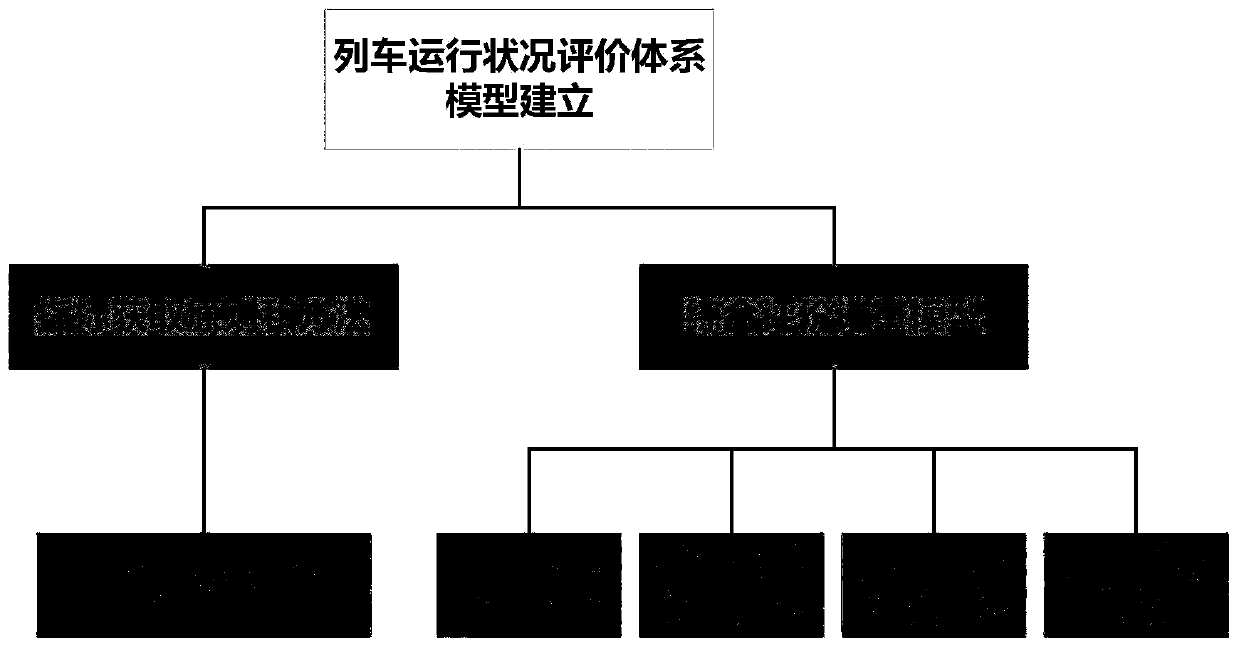

High-speed railway train automatic driving system based on artificial intelligence technology

The invention discloses a high-speed railway train automatic driving system based on an artificial intelligence technology. The system is a novel high-speed railway train automatic driving system based on deep reinforcement learning and imitation learning. By constructing comprehensive train operation condition evaluation indexes and supplementing and enhancing acquisition of big data related to train operation, a train control strategy model which is based on deep reinforcement learning and imitation learning and can perform comprehensive perception and make correct and reasonable decisions is trained, and the comprehensive quality of train operation is further improved.

Owner:SIGNAL & COMM RES INST OF CHINA ACAD OF RAILWAY SCI +3

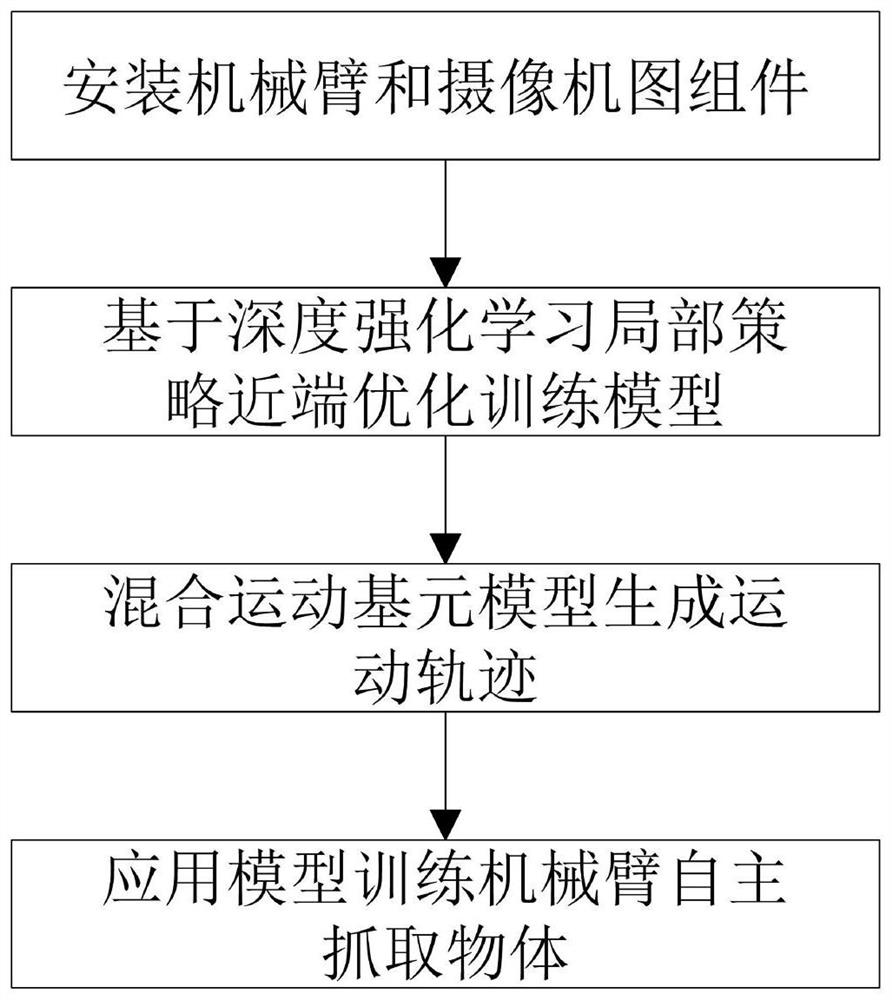

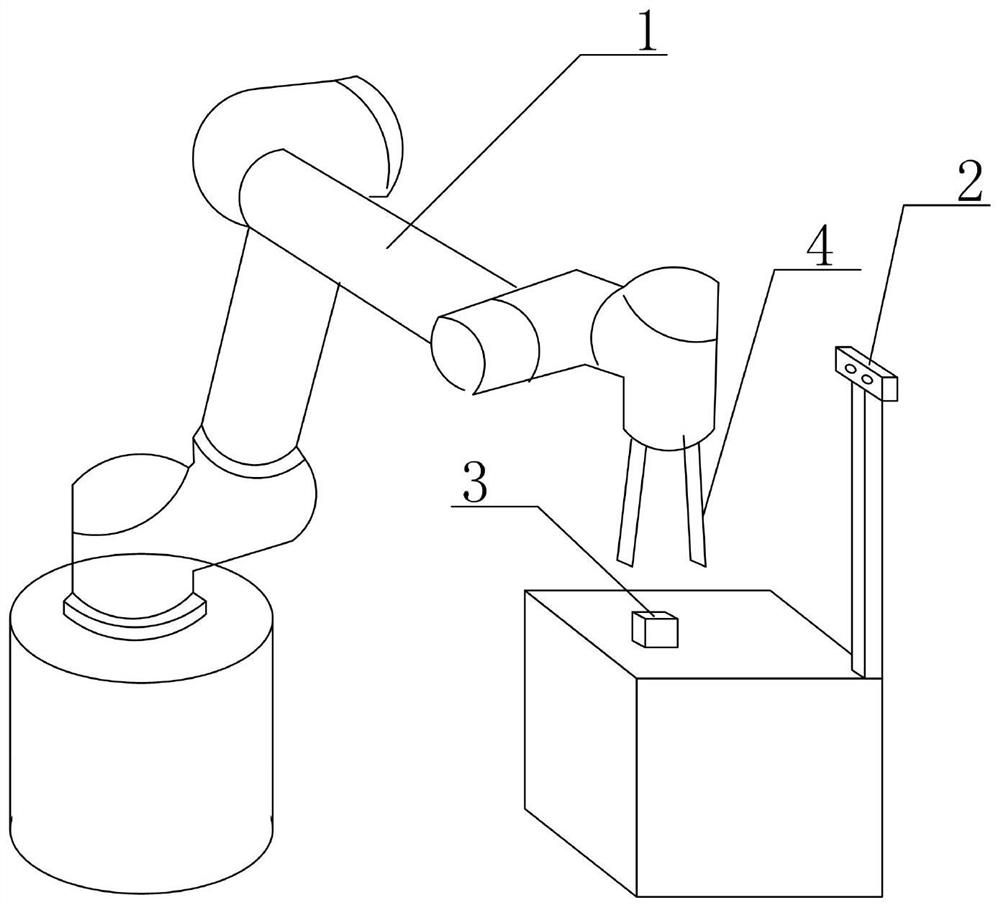

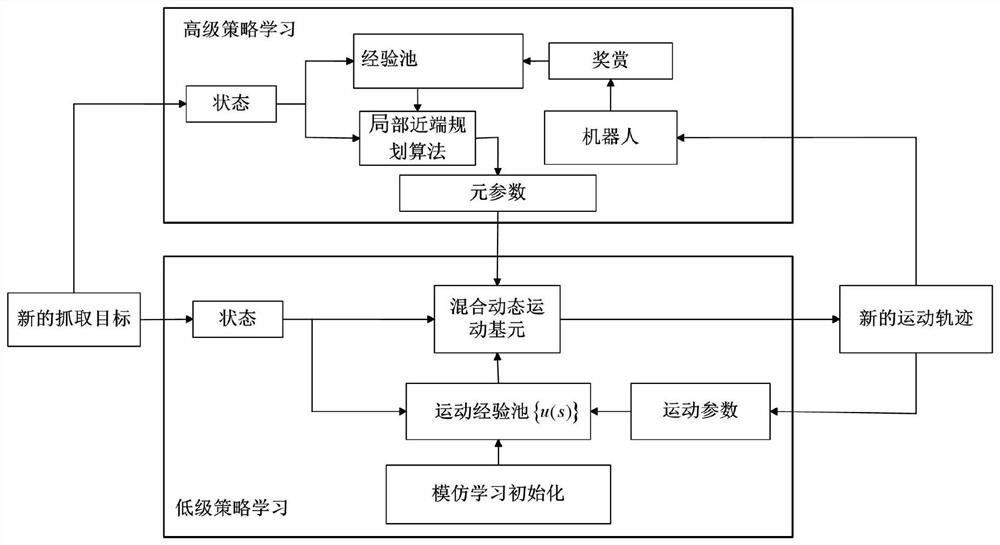

Mechanical arm autonomous grabbing method based on deep reinforcement learning and dynamic movement primitives

Active | CN111618847A | Solve the problem of uneven joint movement | Adaptable | Programme-controlled manipulator | Pattern recognition | Camera image

The invention discloses a mechanical arm autonomous grabbing method based on deep reinforcement learning and dynamic movement primitives. The method includes the following steps: first, a camera image assembly is installed so that the recognition area is not occluded, and images of the grabbing target area are preprocessed and sent to a deep reinforcement learning agent as state information; second, a proximal policy optimization training model is established on the basis of the state and the principles of deep reinforcement learning; third, a new hybrid movement primitive model is established by fusing dynamic movement primitives with imitation learning; and fourth, the mechanical arm is trained on the basis of these models to grab objects autonomously. The method effectively addresses the unsmooth joint movement of mechanical arms trained with traditional deep reinforcement learning: by combining with the dynamic movement primitive algorithm, the problem of learning the primitive parameters is converted into a reinforcement learning problem, and deep reinforcement learning training then enables the mechanical arm to complete the autonomous grabbing task.

Owner:NANTONG UNIVERSITY

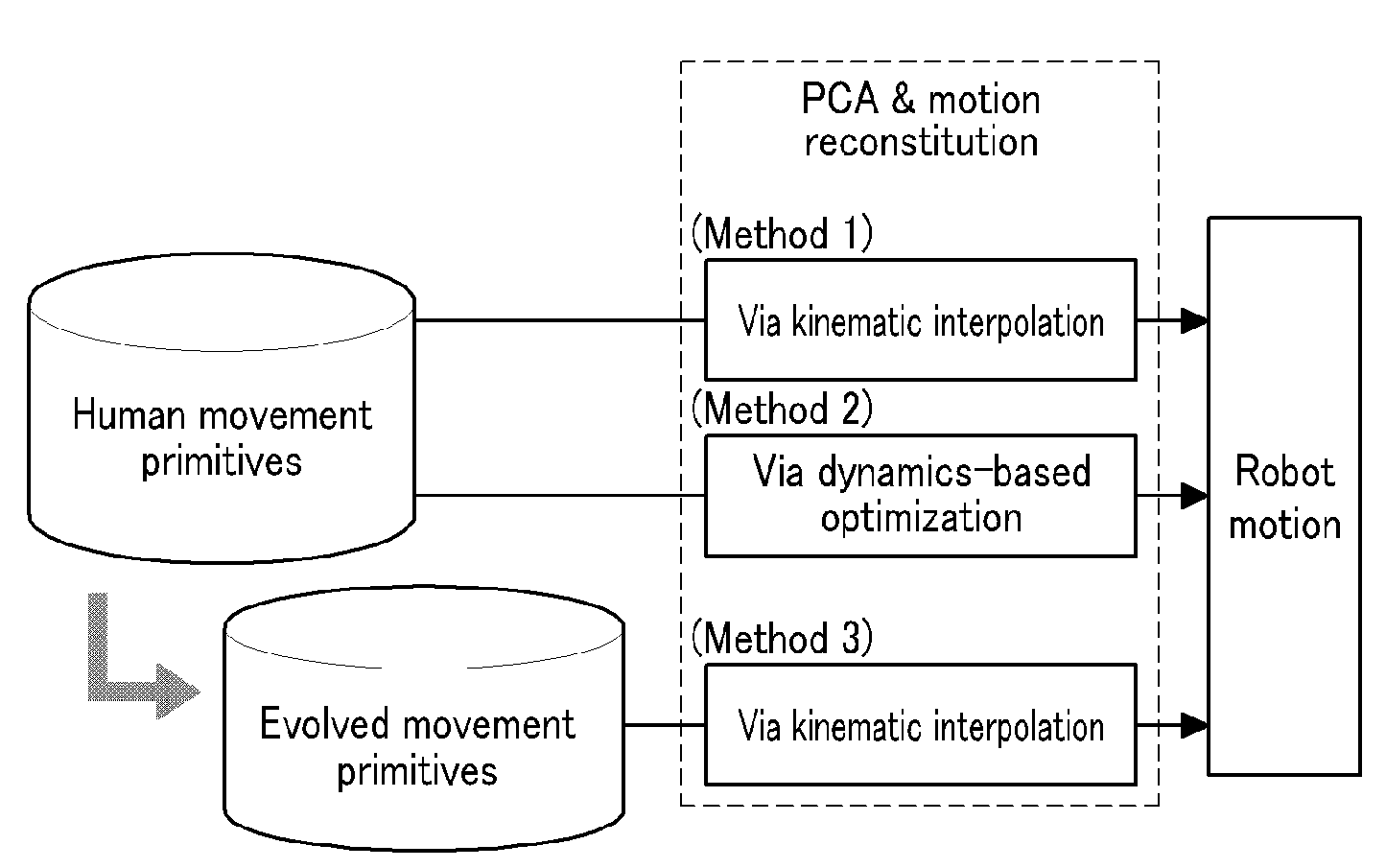

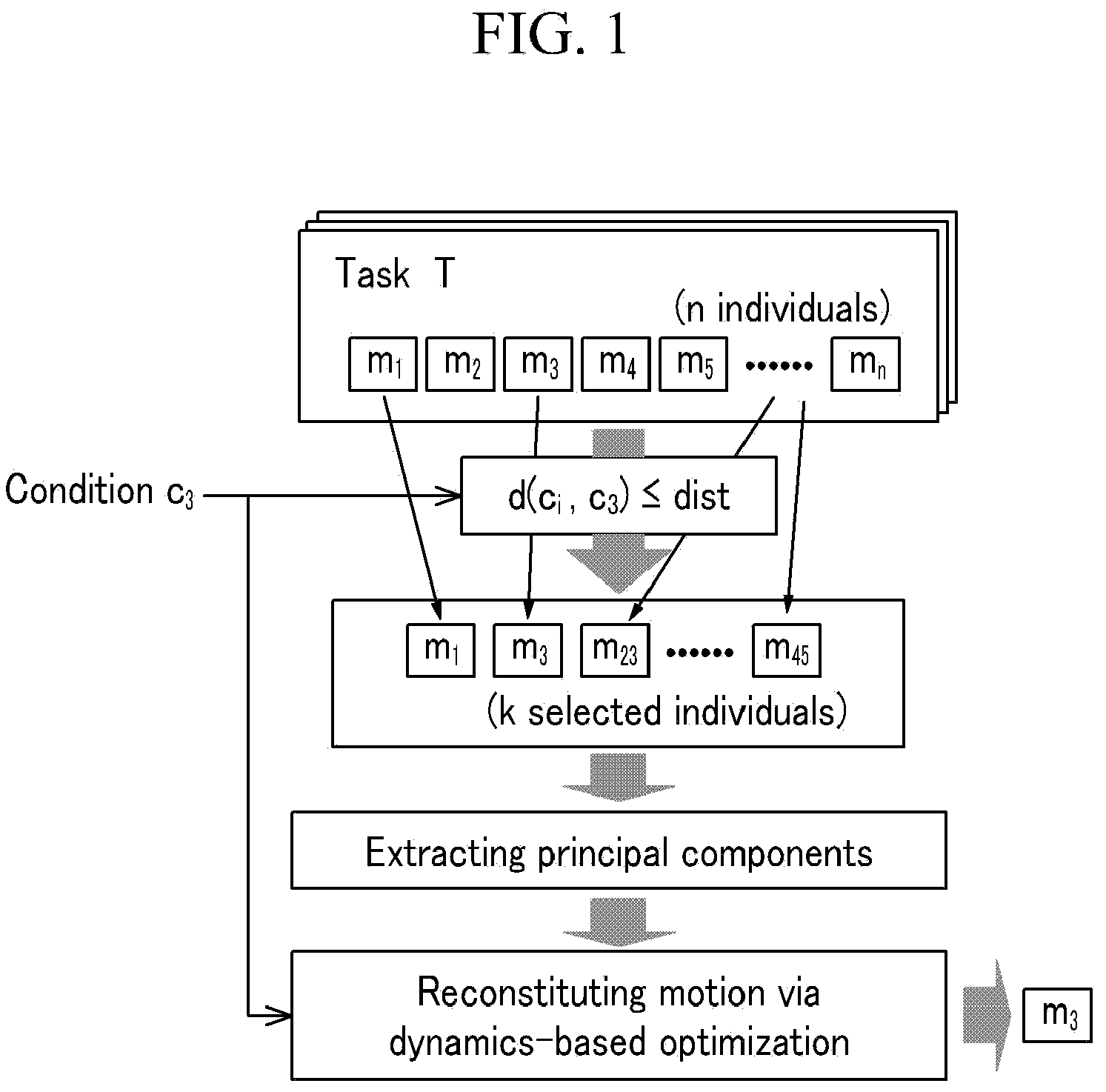

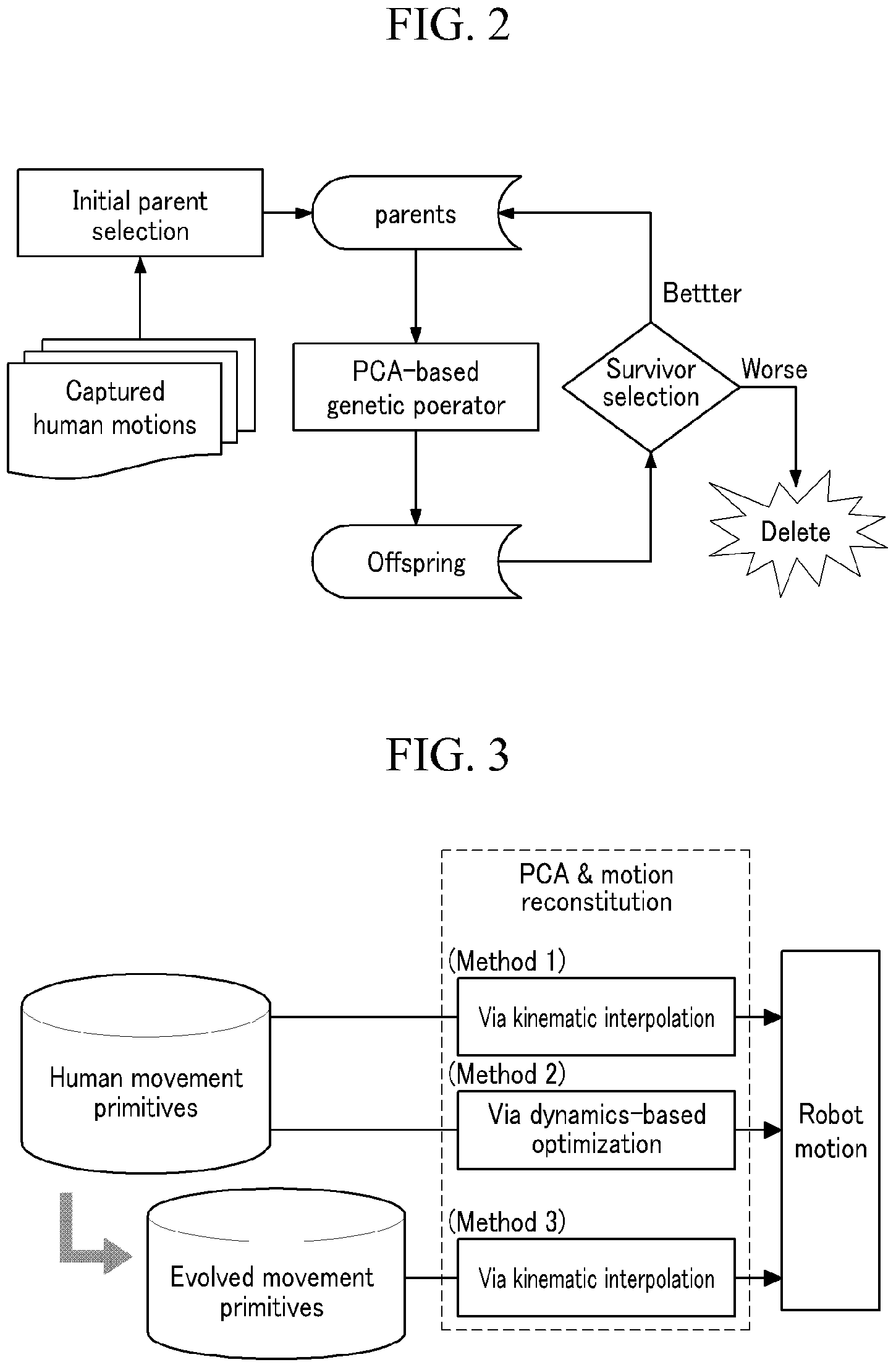

Method for controlling motion of a robot based upon evolutionary computation and imitation learning

Inactive | US20100057255A1 | Great motion | Easily applied to robot | Programme-controlled manipulator | Computer control | Pattern recognition | Evolutionary computation

The present invention relates to a method for controlling motions of a robot using evolutionary computation, the method including constructing a database by collecting patterns of human motion, evolving the database using a genetic operator that is based upon PCA and dynamics-based optimization, and creating motion of a robot in real time using the evolved database. According to the present invention, with the evolved database, a robot may learn human motions and control optimized motions in real time.

Owner:KOREA INST OF SCI & TECH

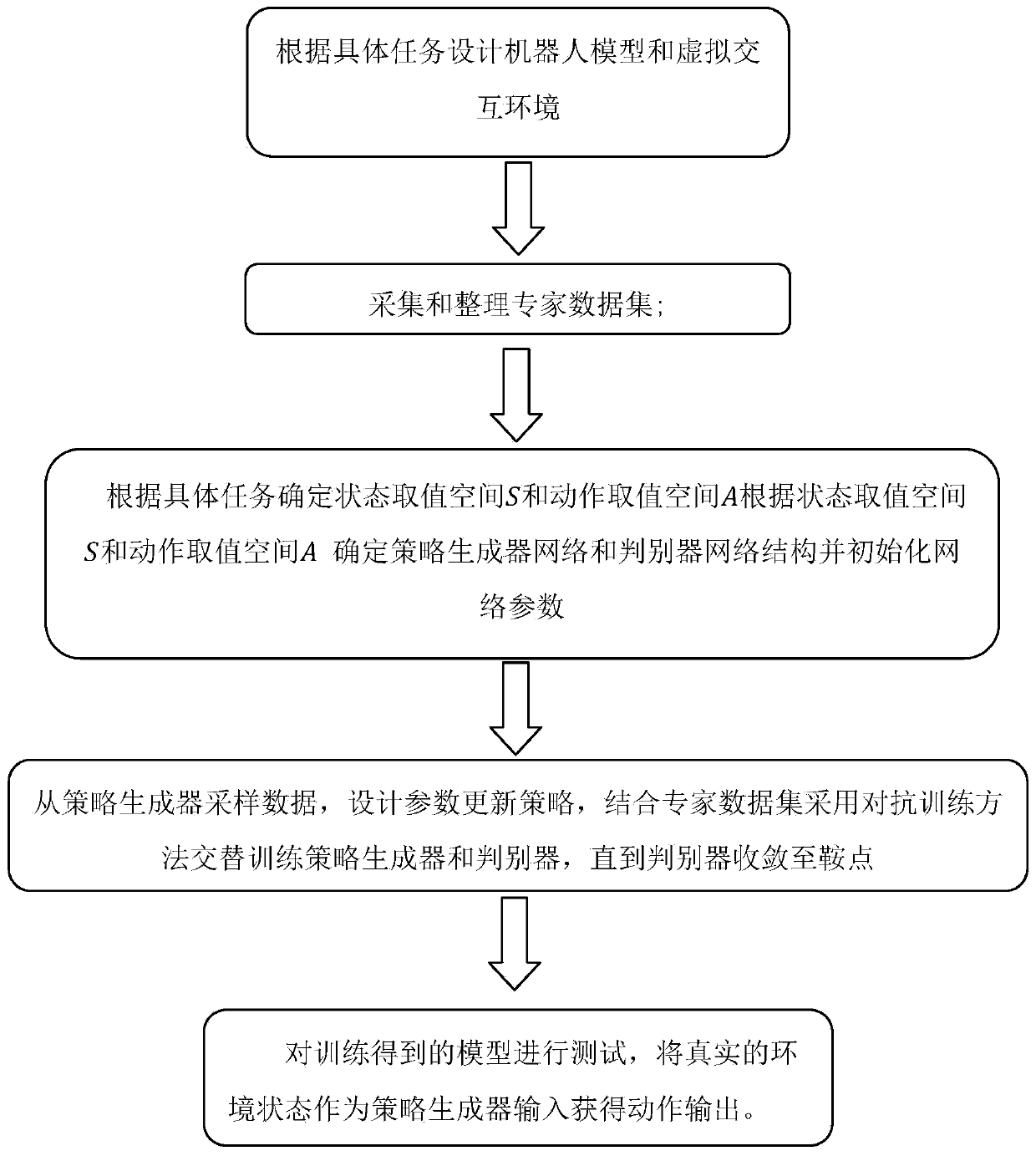

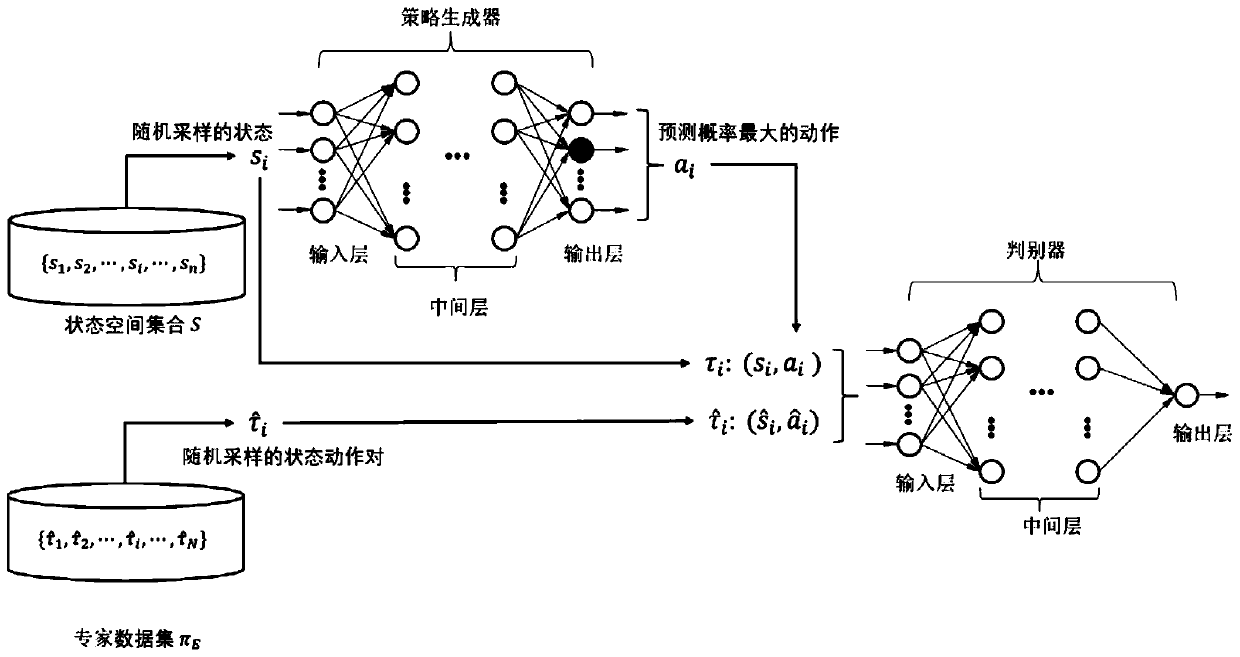

Robot imitation learning method based on virtual scene training

Pending | CN110991027A | Training model fast | Late migration is fast | Artificial life | Design optimisation/simulation | Data set | Algorithm

The invention discloses a robot imitation learning method based on virtual scene training. The method comprises the following steps: designing a robot model and a virtual interaction environment according to a specific task; collecting and organizing an expert data set; determining a state value space S and an action value space A according to the specific task, and determining the network structures of the strategy generator and the discriminator according to S and A; sampling data from the strategy generator, designing a parameter updating strategy, and alternately training the strategy generator and the discriminator with the expert data set using adversarial training until the discriminator converges to a saddle point; and testing the network model composed of the trained strategy generator and discriminator, taking the real environment state as the input of the strategy generator to obtain the action output. In this method the value return function is learned through the discriminator, which bypasses the many complex, computation-heavy intermediate steps of inverse reinforcement learning and makes the learning process simpler and more efficient.

Owner:SOUTH CHINA UNIV OF TECH

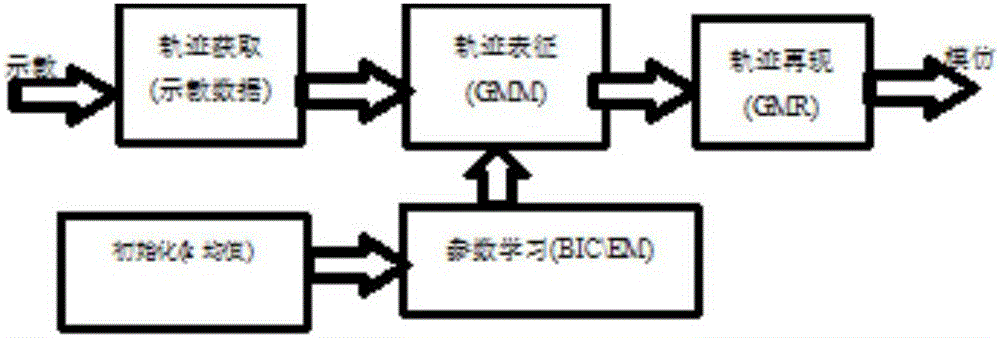

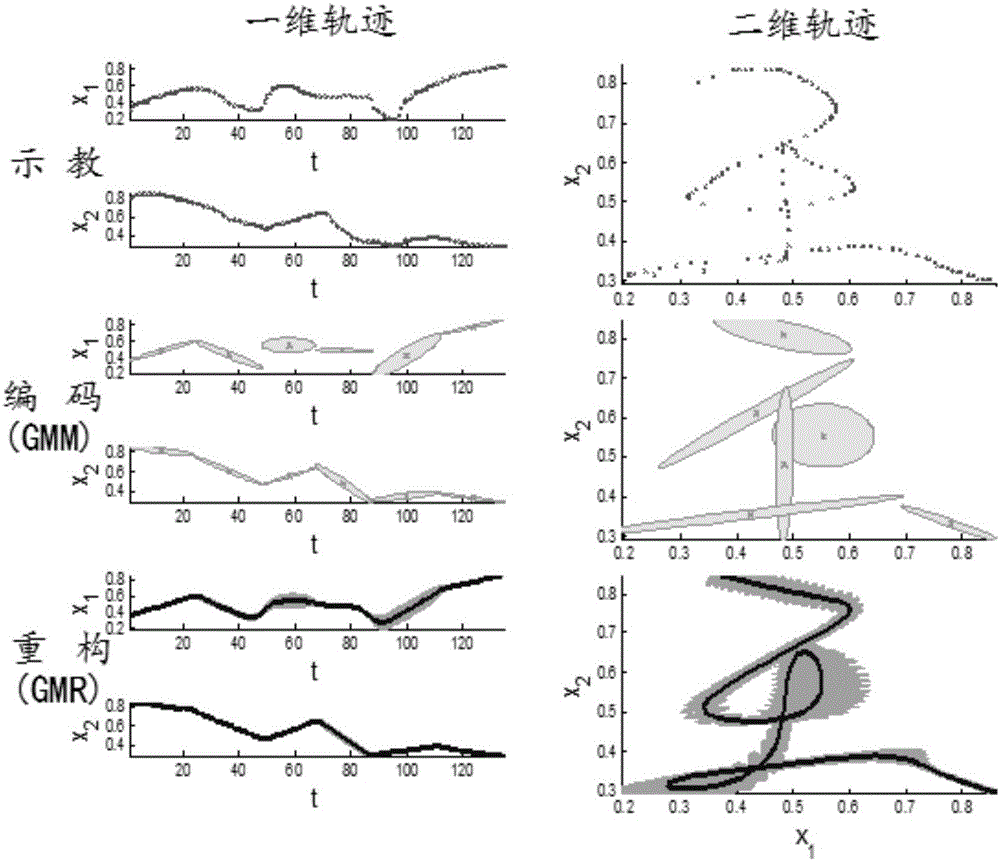

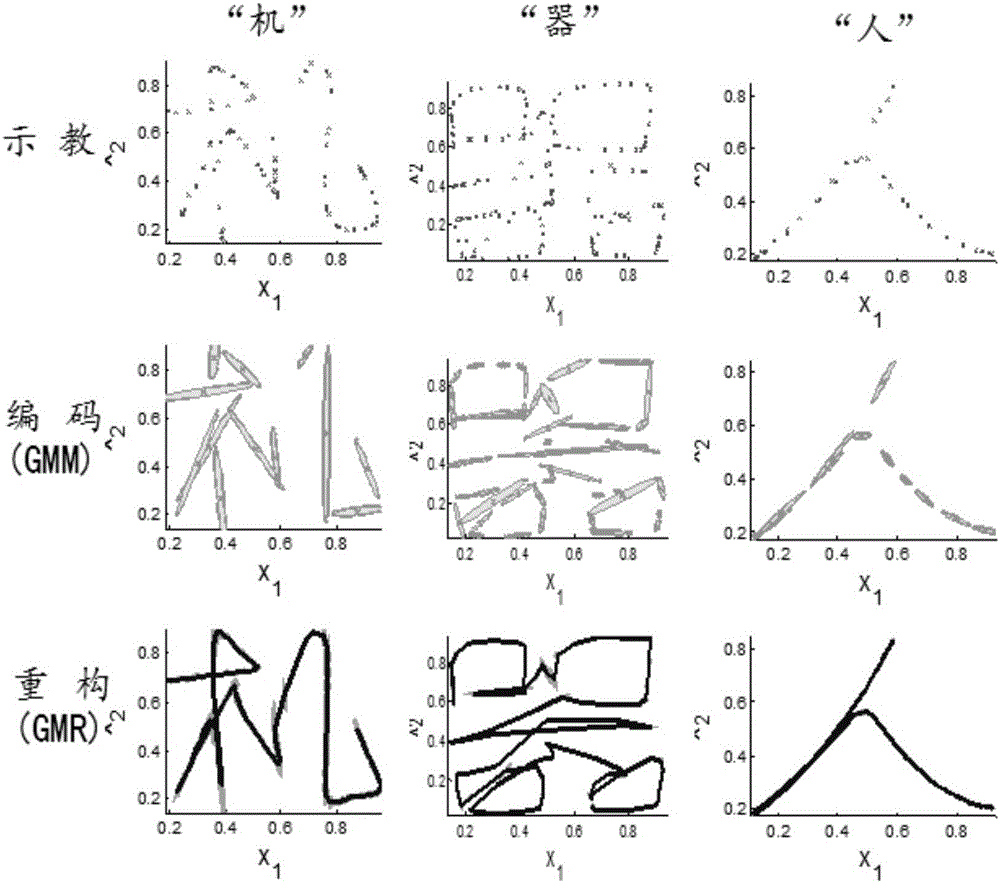

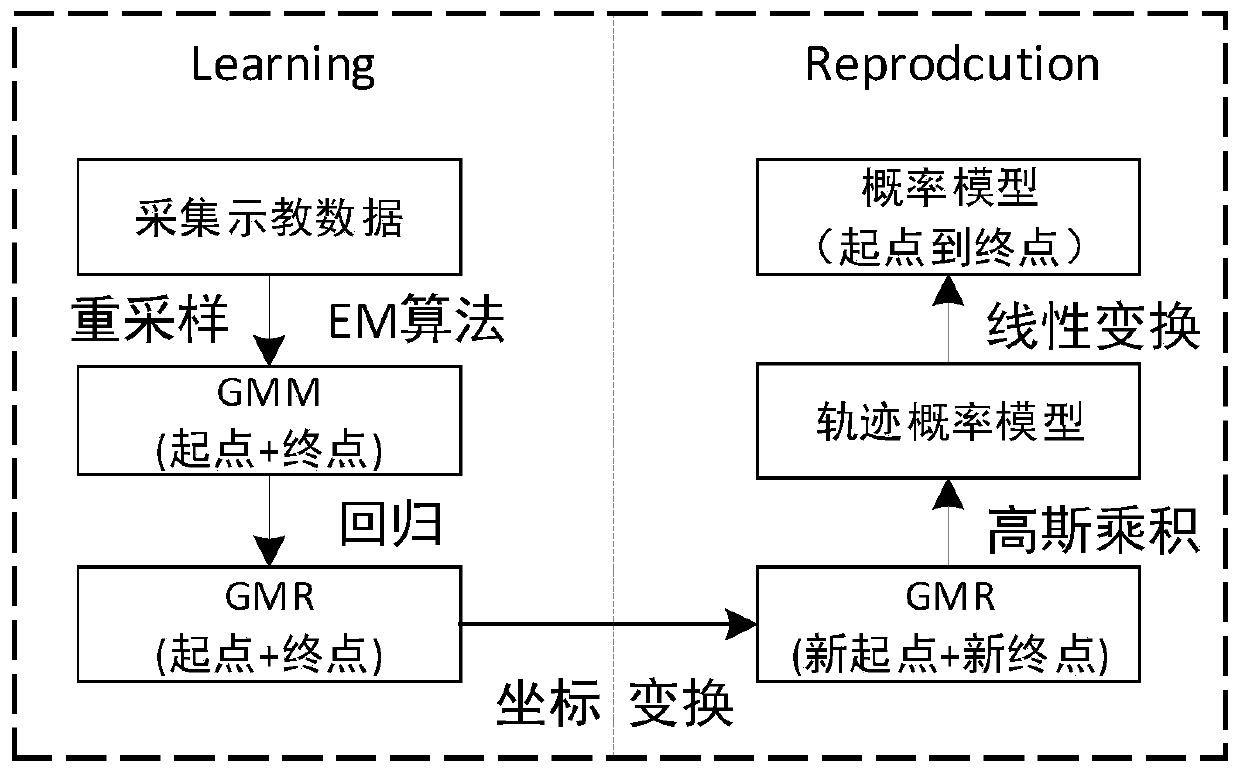

Robot Chinese character writing learning method based on track imitation

The invention relates to a Chinese character writing learning method based on track imitation, which belongs to the fields of artificial intelligence and robot learning. The method introduces imitation learning based on track matching into the learning of a robot writing skill: demonstration data are encoded with a Gaussian mixture model, track characteristics are extracted, the data are reconstructed through Gaussian mixture regression, and a generalized output of the track is obtained, so that a writing skill for track-continuous Chinese characters can be learned. Interference during writing is handled by using multiple demonstrations, which improves the noise tolerance of the method. The basic Gaussian mixture model is also extended to multiple tasks: a complicated Chinese character is divided into several parts, track coding and reconstruction are performed on each part, and the method is applied to generate discrete tracks, thereby realizing the writing of track-discontinuous Chinese characters. The method achieves a strong generalization effect in Chinese character writing.

Owner:BEIJING UNIV OF TECH
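
The reconstruction step named in the abstract above, Gaussian mixture regression, can be sketched for the simplest case of one time input and one position output. The sketch assumes the mixture parameters have already been fitted to the demonstrations (the fitting step is omitted), and the two components below are illustrative values, not data from the patent.

```python
import math

def gaussian_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def gmr(t, components):
    """Gaussian mixture regression: E[x | t] for a joint mixture over (t, x).

    Each component is a dict with weight `pi`, means `mu_t` and `mu_x`,
    time variance `var_t`, and time/position covariance `cov_tx`.
    """
    # Responsibility of each component for the query time t.
    resp = [c["pi"] * gaussian_pdf(t, c["mu_t"], c["var_t"]) for c in components]
    total = sum(resp)
    # Blend the per-component conditional means by their responsibilities.
    return sum(
        (r / total) * (c["mu_x"] + c["cov_tx"] / c["var_t"] * (t - c["mu_t"]))
        for r, c in zip(resp, components)
    )

# Illustrative two-component model of a pen coordinate over time.
stroke = [
    {"pi": 0.5, "mu_t": 0.0, "mu_x": 0.0, "var_t": 1.0, "cov_tx": 0.0},
    {"pi": 0.5, "mu_t": 2.0, "mu_x": 4.0, "var_t": 1.0, "cov_tx": 0.0},
]
trajectory = [gmr(t / 10.0, stroke) for t in range(0, 21)]
```

For a two-dimensional pen track the same conditional-mean formula applies with vector means and matrix covariances.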

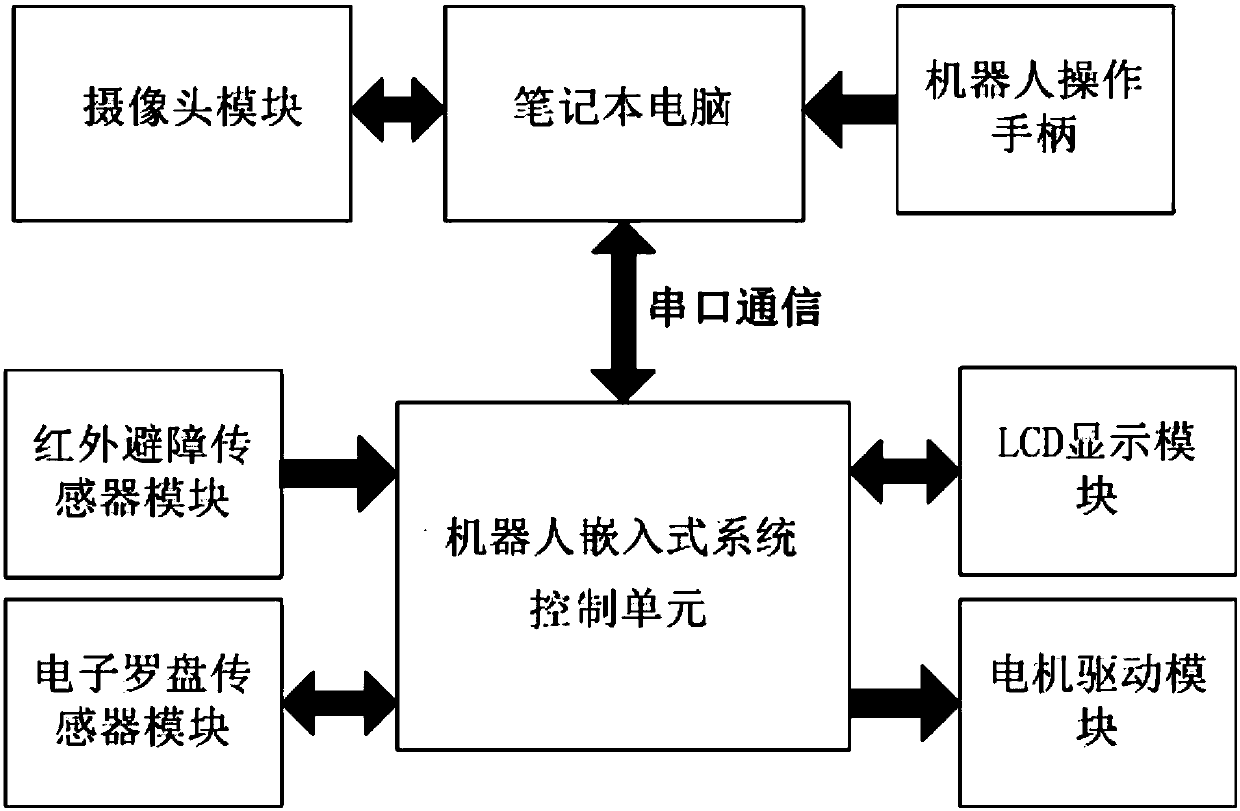

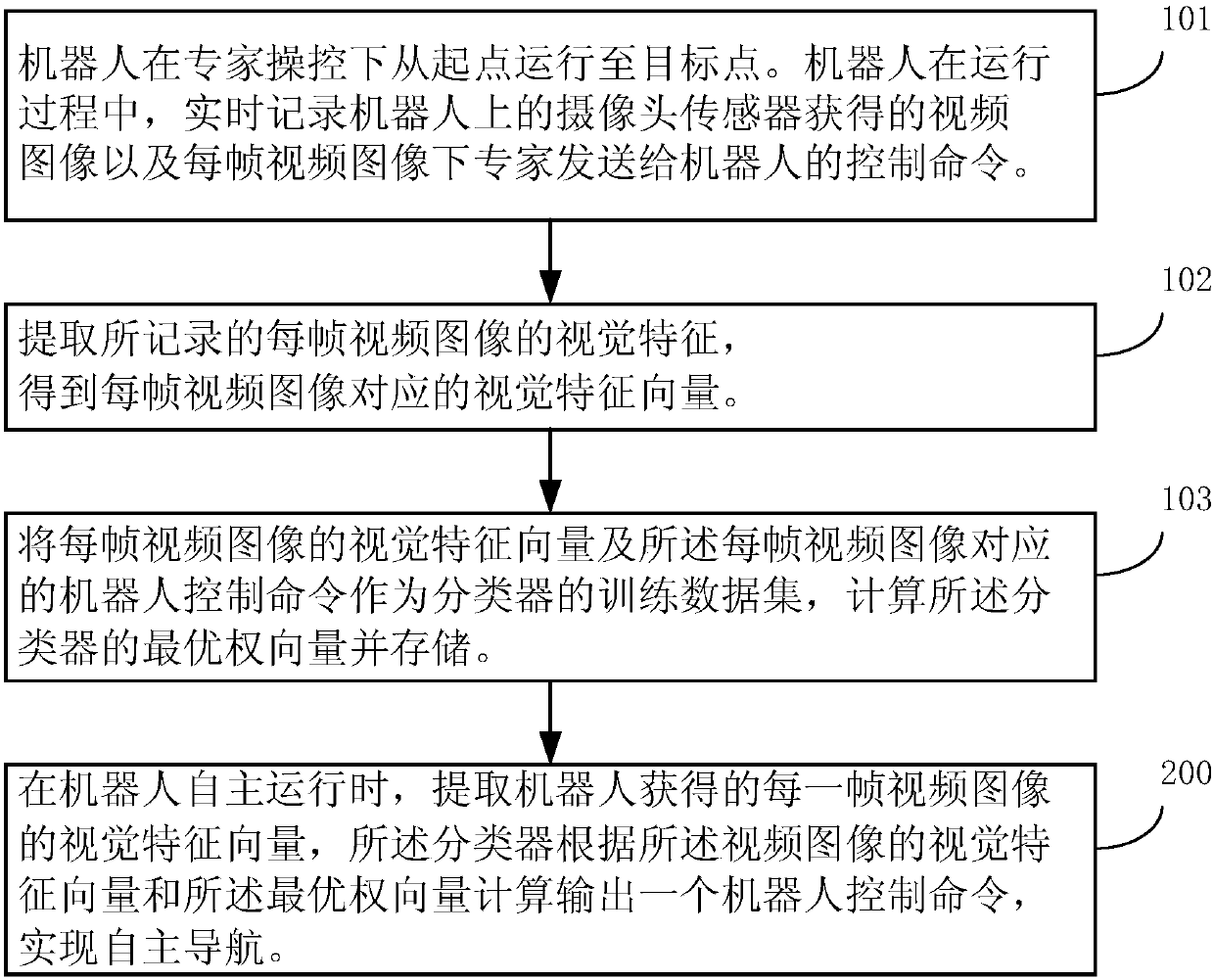

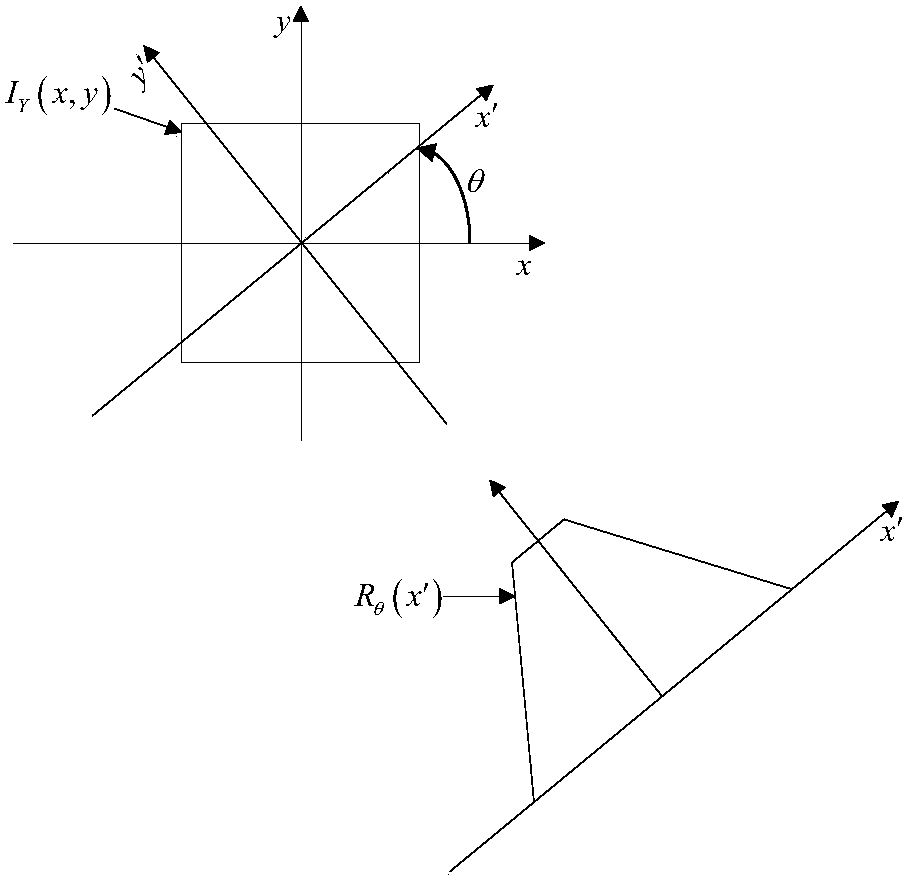

Robot navigation method based on machine vision and machine learning

Inactive | CN105760894A | Realize autonomous navigation | Navigational calculation instruments | Character and pattern recognition | Humanoid robot nao | Data set

The invention discloses a robot navigation method comprising a robot imitation learning step and an autonomous navigation step. The imitation learning step comprises: recording each video frame obtained by a camera together with the corresponding control command; extracting a visual feature vector for each video frame; using the visual feature vectors and control commands as the training data set of a classifier; and calculating an optimal vector of the classifier. The autonomous navigation step comprises: extracting the visual feature vector of each video frame; and having the classifier calculate and output control commands from the visual feature vectors and the optimal vector, thereby realizing autonomous navigation. The method does not need complex environment models or robot motion control models; the robot's perception of the environment depends mainly on visual feature information, so the method suits both indoor and outdoor navigation and can be applied to mobile robots, humanoid robots, mechanical arms, unmanned aerial vehicles, etc.

Owner:HARBIN WEIFANG INTELLIGENT SCI & TECH DEV
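
The two phases in the abstract above, training a classifier on recorded (feature vector, command) pairs and then using it to output commands, can be sketched with a simple perceptron. This is only an illustration: the abstract does not name the classifier, the two-command setup is a simplification, and the feature vectors below are made-up two-dimensional values rather than real visual features.

```python
def train_perceptron(samples, epochs=20):
    """Imitation phase: fit a weight vector from (feature_vector, label) pairs.

    Labels are +1 or -1, one per recorded control command.
    """
    dim = len(samples[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        for x, y in samples:
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * score <= 0:  # misclassified: move the boundary toward x
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
    return w, b

COMMANDS = {1: "turn_left", -1: "turn_right"}  # hypothetical command names

def navigate(features, w, b):
    """Navigation phase: map a frame's feature vector to a control command."""
    score = sum(wi * xi for wi, xi in zip(w, features)) + b
    return COMMANDS[1 if score > 0 else -1]

# Made-up demonstrations: features recorded while the operator steered.
demos = [((1.0, 0.2), 1), ((0.9, 0.1), 1), ((-1.0, 0.3), -1), ((-0.8, -0.2), -1)]
w, b = train_perceptron(demos)
command = navigate((0.5, 0.0), w, b)
```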

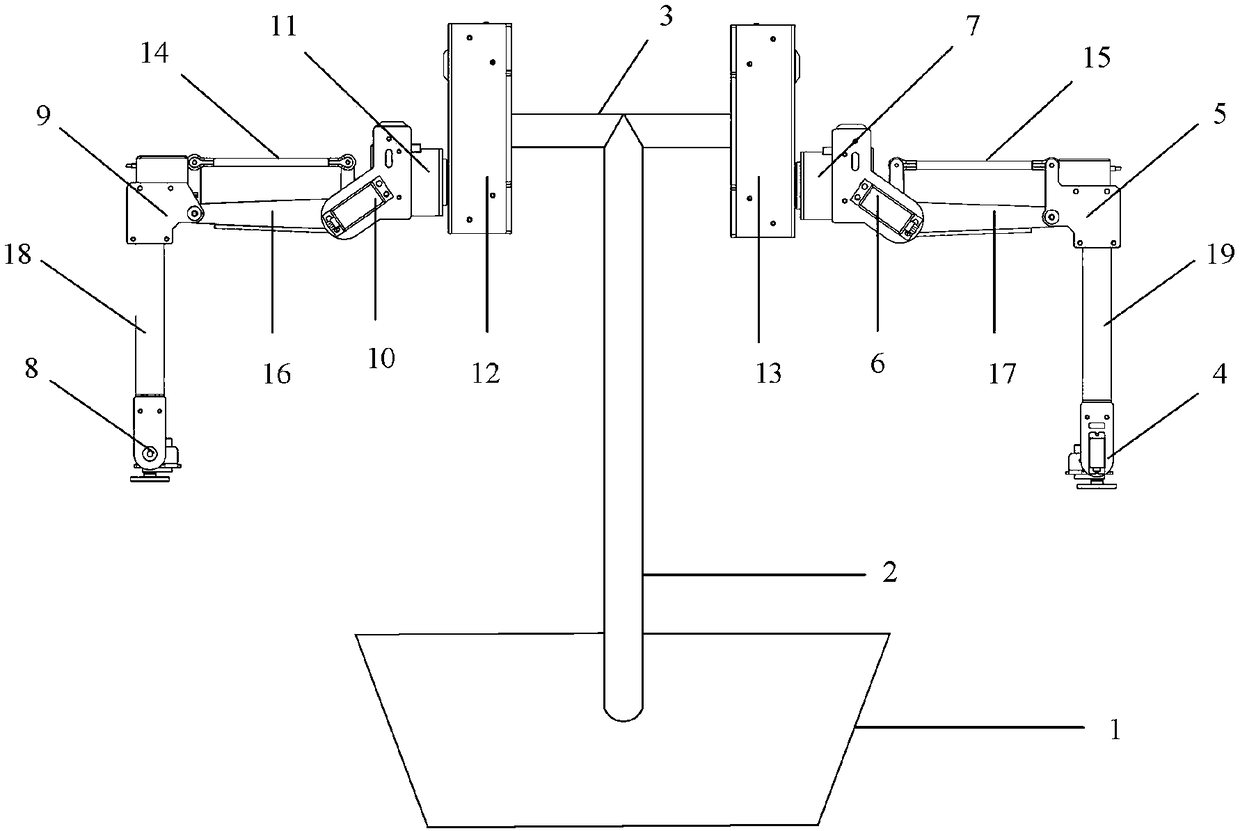

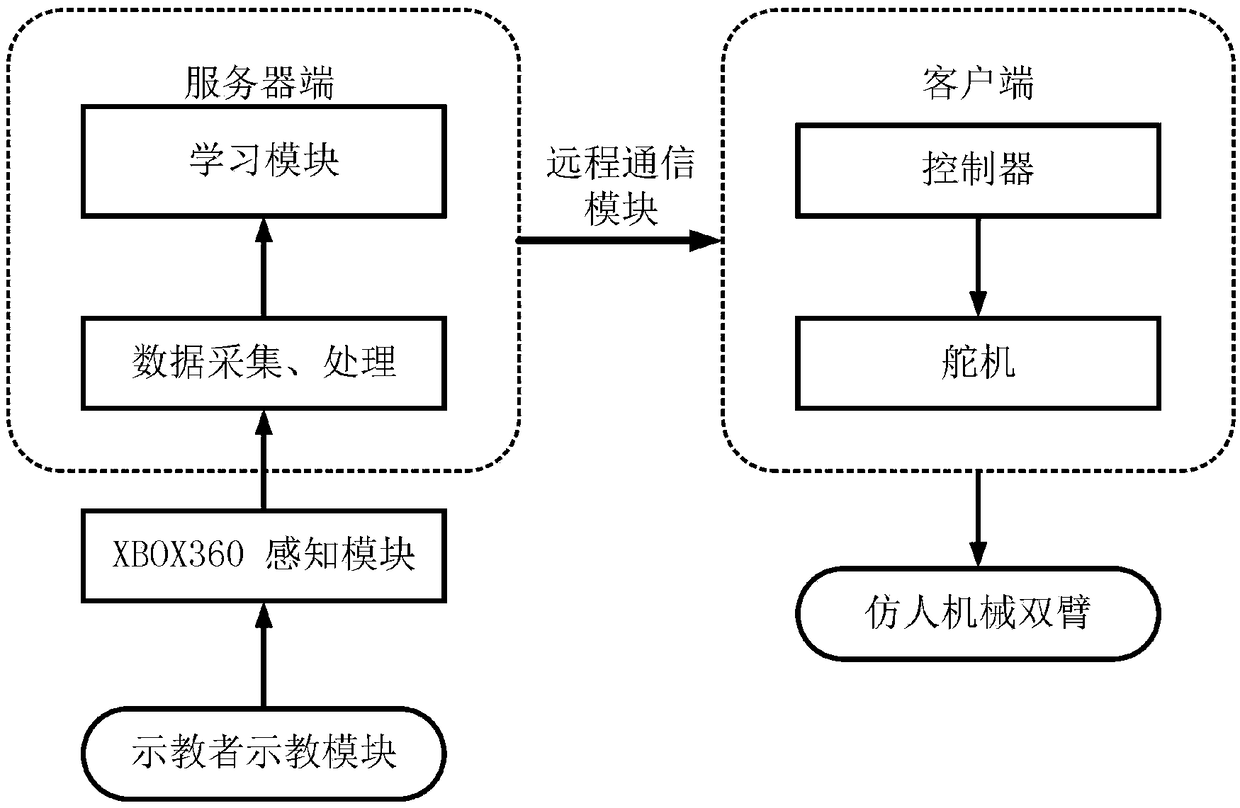

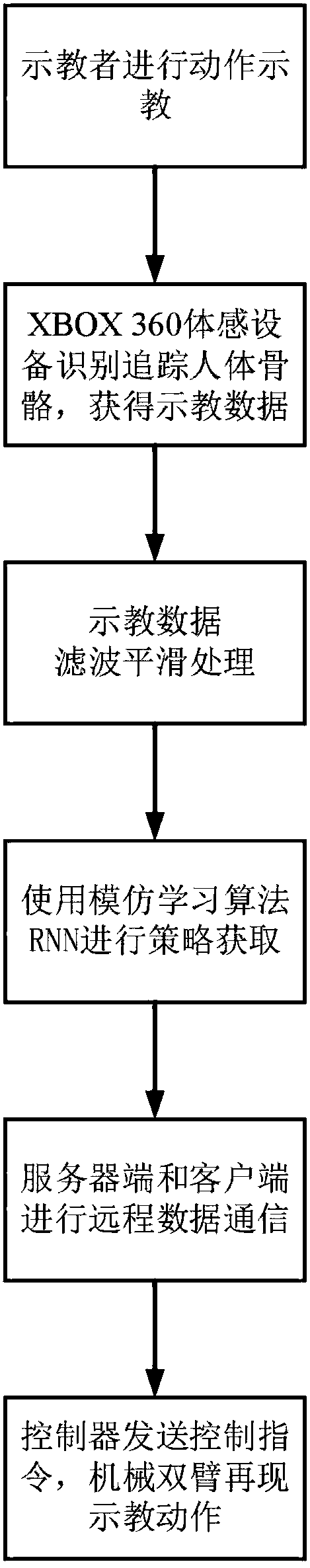

Remote machine two-arm system with imitation learning mechanism and method

Active | CN108284436A | Easy to operate | Large operating space | Programme-controlled manipulator | Gripping heads | Simulation | Engineering

The invention discloses a remote machine two-arm system with an imitation learning mechanism and a method. The system comprises a demonstrator teaching module, a self-designed action executing module composed of two humanoid machine arms and a digital servo controller, an XBOX360 motion-sensing perception module, a remote host computer communication module and an imitation learning algorithm module. Each module has an independent power supply. Demonstrators perform demonstration actions in front of the XBOX360 sensor, which collects their action data; the data is processed on a local host computer (server side); learning is performed through an imitation learning algorithm; and the server side communicates in real time with a remote host computer (client side). The remote client side then sends the data to the controller in real time through a serial port, and the controller uses the received data to guide the two machine arms to imitate and learn the demonstrators' teaching actions. The system and method improve the intelligence of machine arm operation and greatly improve the operating efficiency of machine arms in dangerous spaces, which gives them practical application value.

Owner:BEIJING UNIV OF TECH

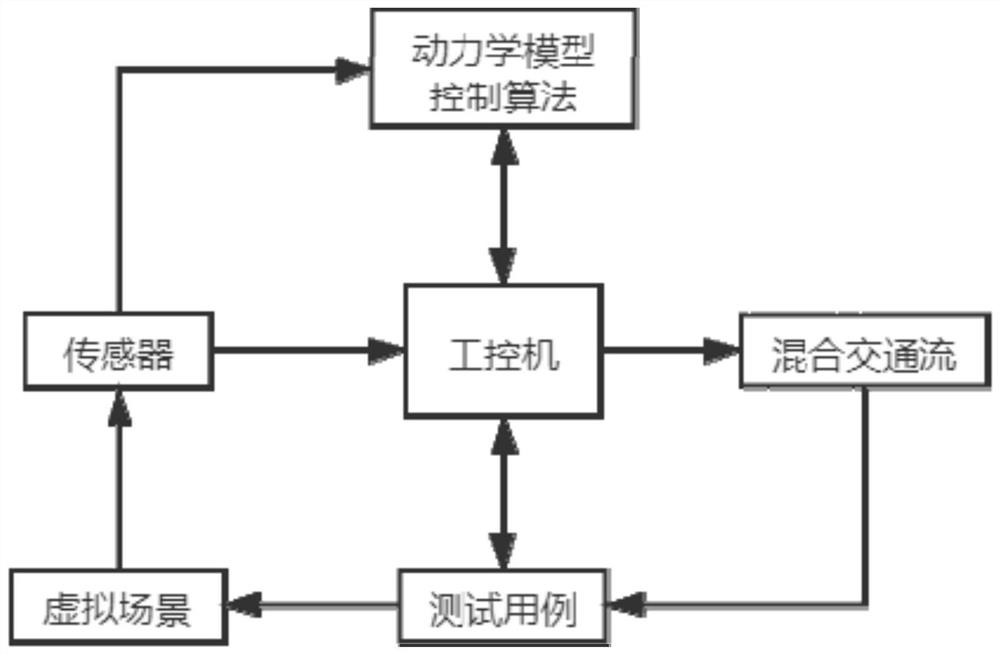

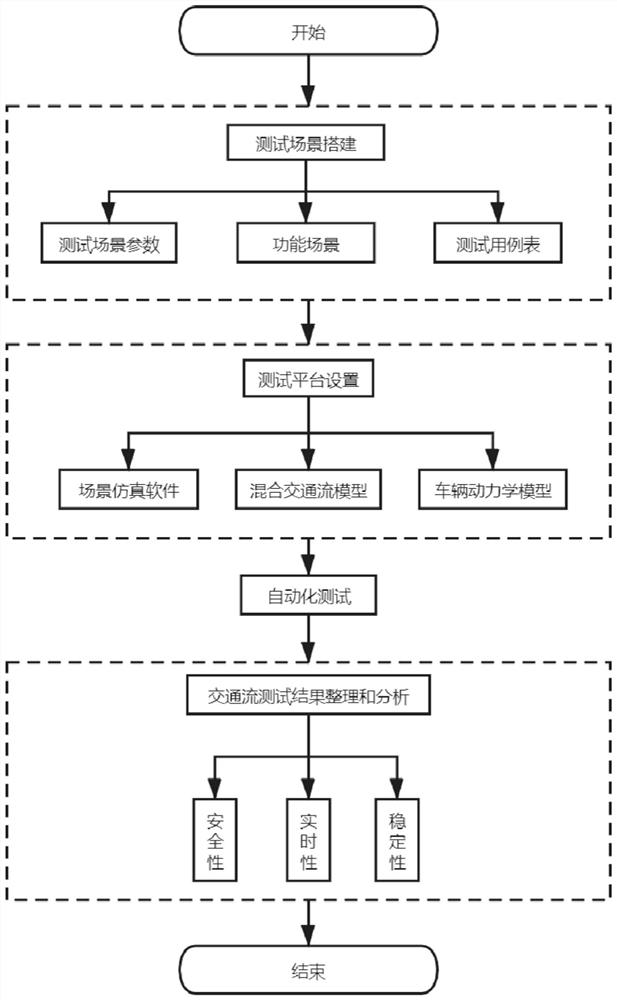

Intelligent automobile in-loop simulation test method based on mixed traffic flow model

Active | CN113010967A | Reduce the number of tests | Improve test efficiency | Geometric CAD | Design optimisation/simulation | Combined test | Traffic flow modeling

The invention provides an intelligent vehicle in-loop simulation test method based on a mixed traffic flow model. The method comprises: building a mixed traffic flow model with a generative adversarial network and an Actor-Critic network; solving the driving strategy of traffic flow vehicles with a proximal policy optimization algorithm and interacting with the environment to form vehicle driving tracks; and using a discrimination model to distinguish the generated track from the actual track and the retrograde motion and to provide a reward signal for the traffic flow environment. The values of multiple influence factors of the mixed traffic flow model are combined using a combined test method, which reduces the number of tests and explores how interactions between the factors affect the test. With the traffic flow model generation method based on generative adversarial imitation learning, a vehicle can make decisions similar to those in actual traffic flow, and the combined test case generation method based on the greedy algorithm improves test efficiency. Empirical analysis shows that the method yields a good improvement.

Owner:JILIN UNIV

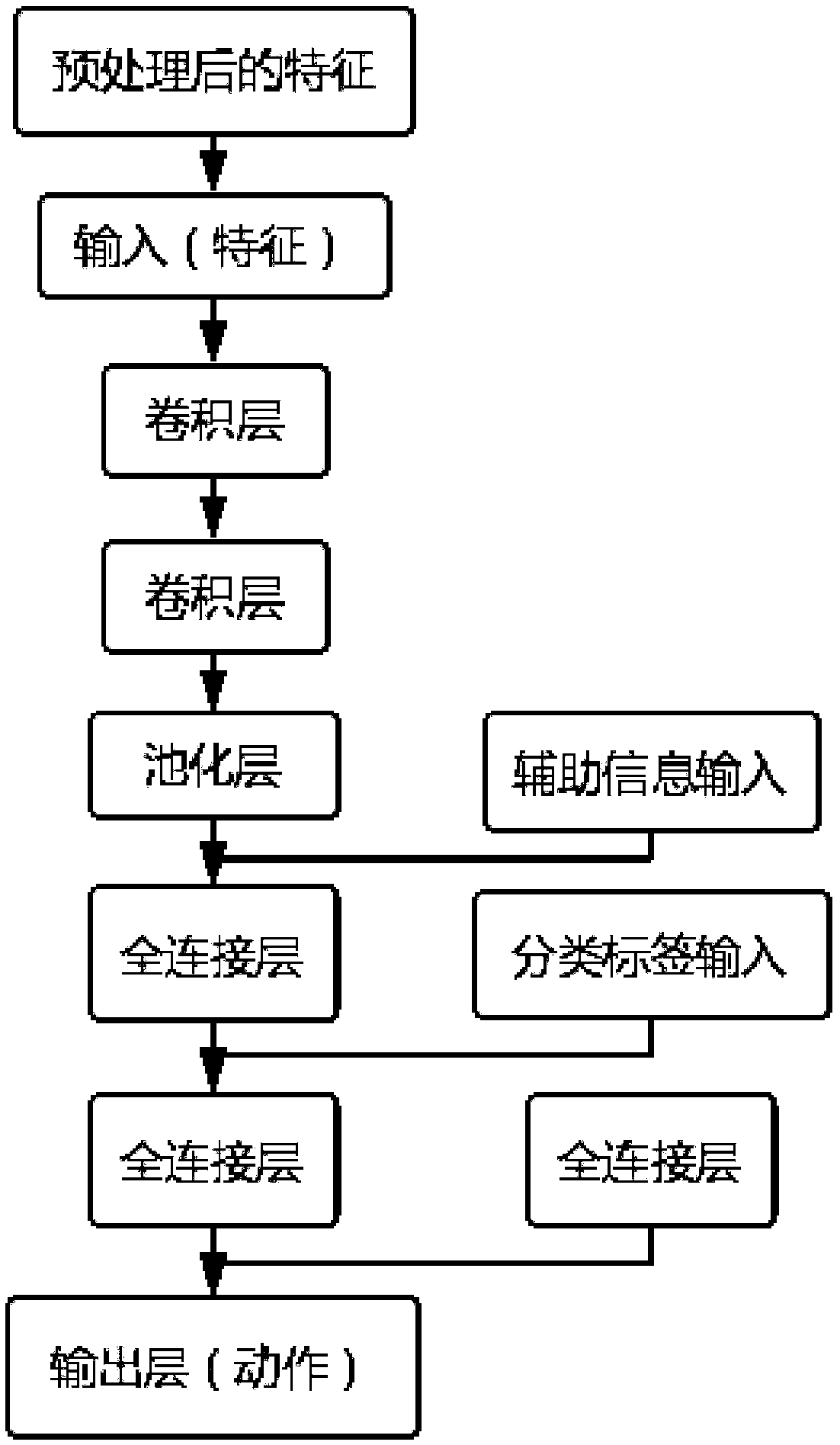

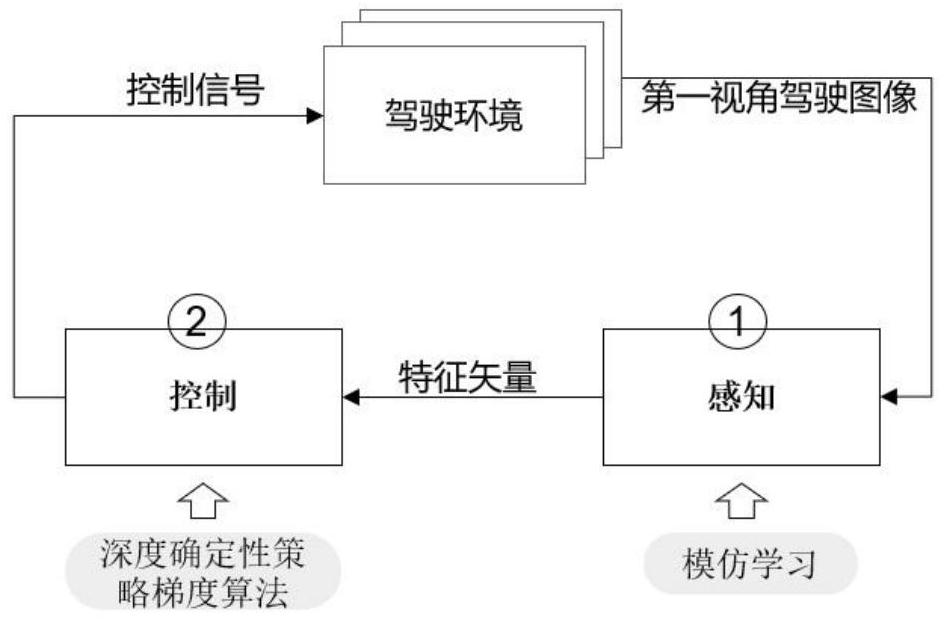

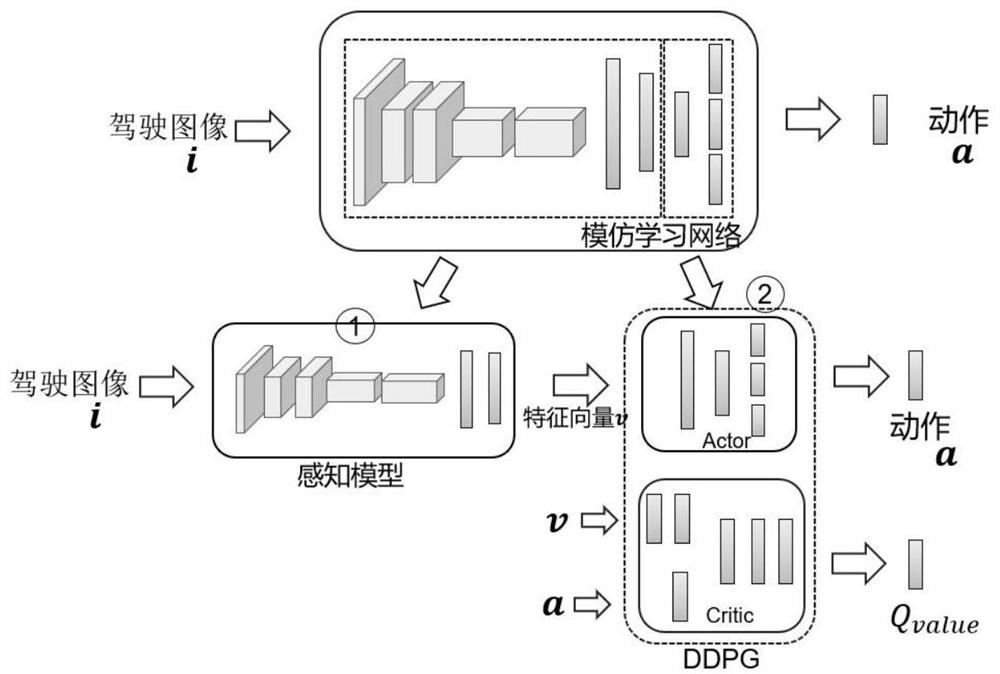

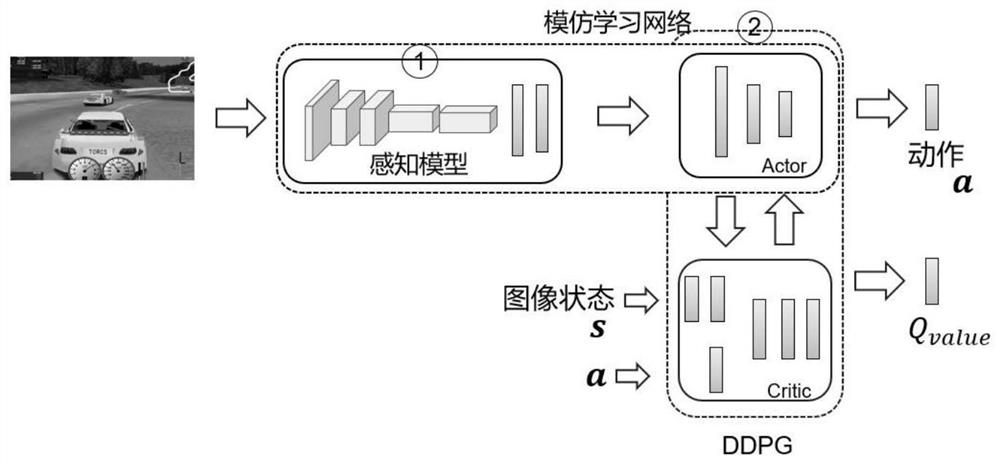

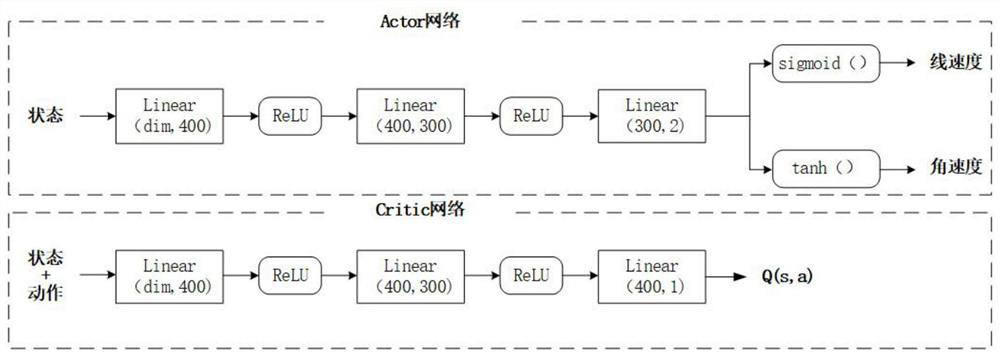

Vision-based deep imitation reinforcement learning driving strategy training method

Active | CN112232490A | Easy to handle | Fast convergence | Internal combustion piston engines | Neural architectures | Steering angle | Simulation

The invention discloses a vision-based deep imitation reinforcement learning driving strategy training method. The method comprises the steps of constructing an imitation learning network; training the imitation learning network; splitting the trained imitation learning network to obtain a sensing module; constructing a DDPG network to obtain a control module; assembling the deep imitation reinforcement learning model from the sensing module and the control module; and training the deep imitation reinforcement learning model. The imitation learning network comprises five convolution layers and four fully connected layers: the convolution layers extract features, and the fully connected layers predict the steering angle and the accelerator and brake opening degrees. In addition, a reward function is set in the training process of the deep imitation reinforcement learning model to ensure comfort and safety when driving through curves.

Owner:DALIAN UNIV

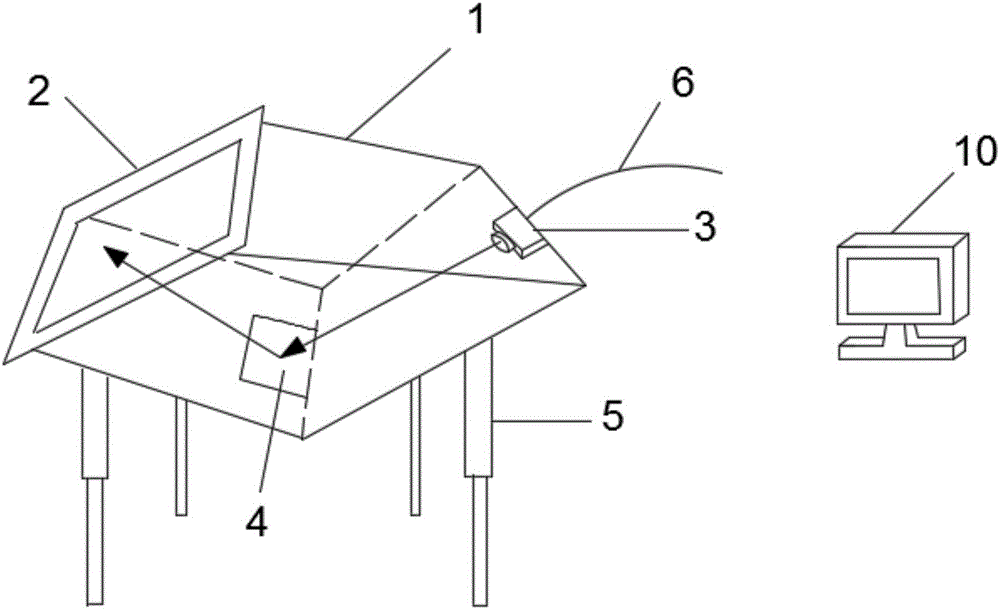

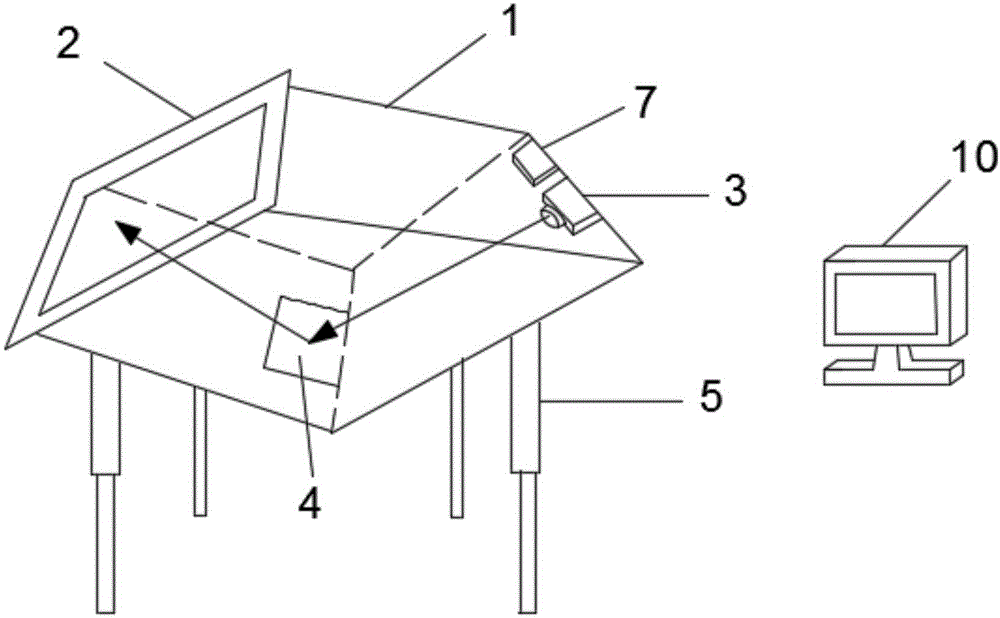

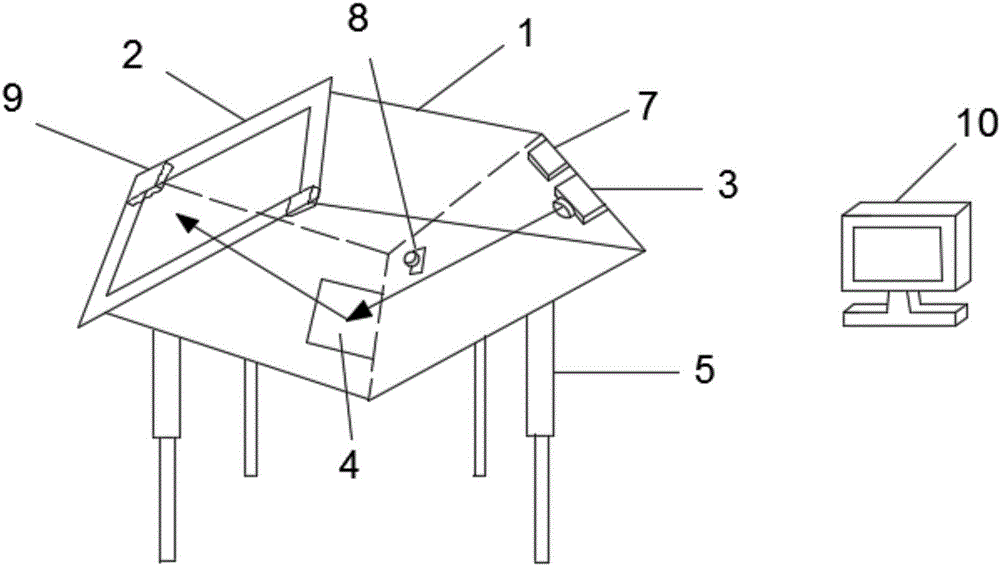

Micro projection imitation display apparatus and display method

Active | CN106373455A | Solve the problem that only static copying can be achieved | Avoid direct rays | Teaching apparatus | Holographic screen | Computer graphics (images)

The invention proposes an imitation display apparatus capable of dynamic calligraphy or painting imitation, and a display method thereof. A holographic screen and micro projection technology are employed, so that dynamic imitation of calligraphy or paintings is realized through dynamic projected demonstration; this solves the problem that prior-art imitation learning apparatuses can only realize static imitation. With holographic screen imaging, the sharpness, contrast and color reproduction are high and the display effect is good. The desk body differs in shape from a conventional desk: its side surface is rhombus-shaped, so that the projected light keeps a certain angle to the user's line of sight, direct light is avoided, and the eyes are protected. The invention also proposes an imitation projection display method, in which the user's imitation learning information is compared with preset standard information and the user's learning result is evaluated by an intelligent apparatus, which helps the user learn and improve.

Owner:陈新德

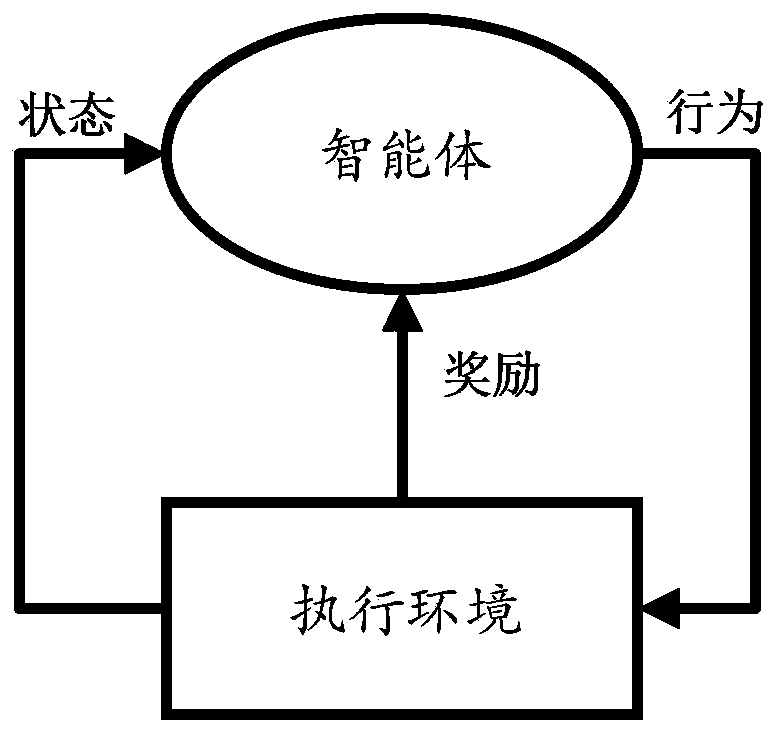

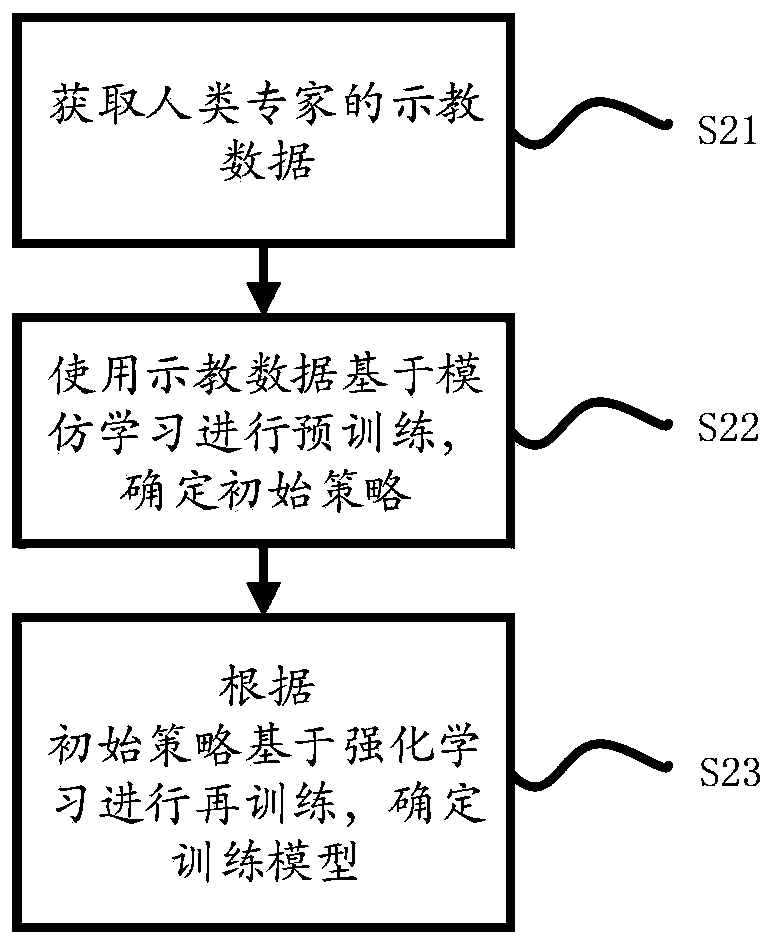

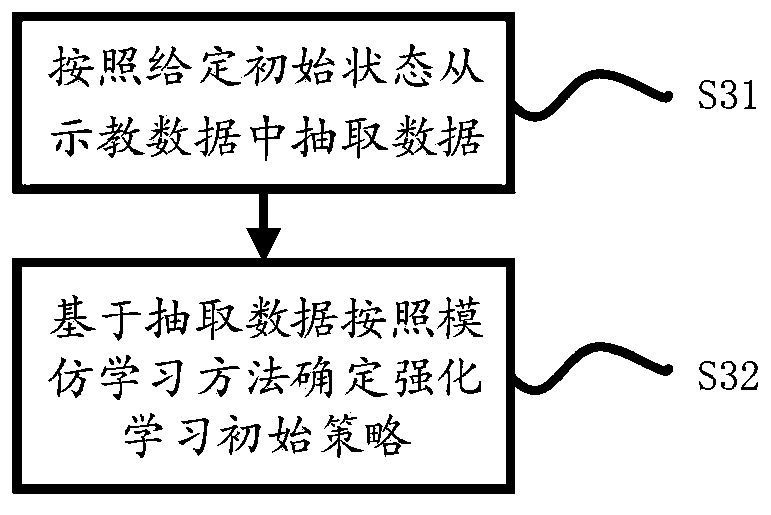

Hierarchical reinforcement learning training method and device based on imitation learning

Pending | CN111144580A | Narrow down the search space | Improve training efficiency | Machine learning | Computer simulations | Imitation learning | Reinforcement learning

The invention discloses a hierarchical reinforcement learning training method and device based on imitation learning, and an electronic device. The method comprises the steps of obtaining teaching data from a human expert; pre-training based on imitation learning with the teaching data to determine an initial strategy; and, starting from the initial strategy, retraining based on reinforcement learning to determine a training model. The teaching data is used for pre-training and retraining, so prior knowledge and strategies are used effectively, the search space is reduced, and training efficiency is improved.

Owner:INFORMATION SCI RES INST OF CETC
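
The two-stage idea in the abstract above (pre-train from expert demonstrations, then retrain with reinforcement learning) can be shown on a toy problem. The sketch below is deliberately flat rather than hierarchical and uses tabular Q-learning on a five-state chain; the environment, the bonus value and all hyperparameters are assumptions chosen for illustration, not details from the patent.

```python
import random

N_STATES, GOAL = 5, 4          # chain of states 0..4, reward on reaching state 4
ACTIONS = ("left", "right")

def step(state, action):
    """Toy environment: move along the chain; reaching GOAL ends the episode."""
    nxt = max(0, state - 1) if action == "left" else state + 1
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL

def pretrain_from_demos(demos, bonus=1.0):
    """Stage 1 (imitation): give the expert's action in each state a head start."""
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(N_STATES)}
    for state, action in demos:
        q[state][action] = bonus
    return q

def finetune_q_learning(q, episodes=300, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    """Stage 2 (reinforcement learning): Q-learning starting from the initial strategy."""
    rng = random.Random(seed)
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap on episode length
            if rng.random() < eps:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            target = reward + (0.0 if done else gamma * max(q[nxt].values()))
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q

def greedy_policy(q):
    """Best action in every non-terminal state."""
    return {s: max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)}

expert_demos = [(s, "right") for s in range(GOAL)]  # made-up expert data
policy = greedy_policy(finetune_q_learning(pretrain_from_demos(expert_demos)))
```

Because the initial values already favor the expert's actions, early exploration concentrates on promising trajectories, which is the search-space reduction the abstract refers to.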

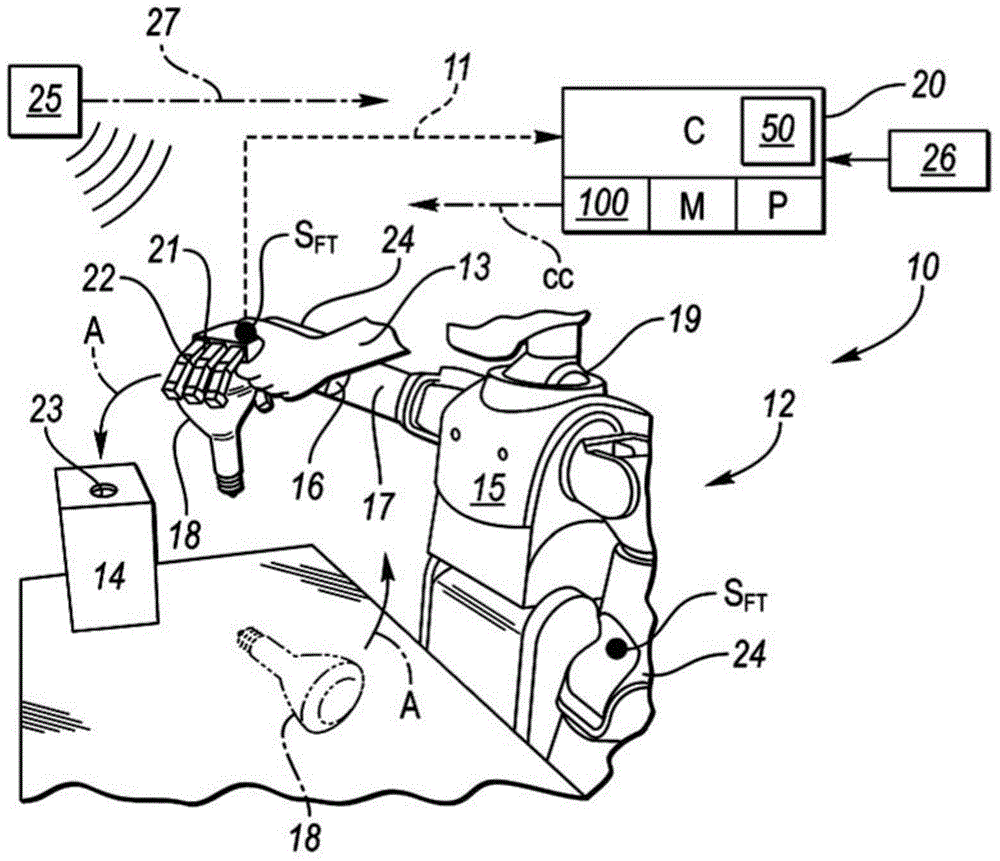

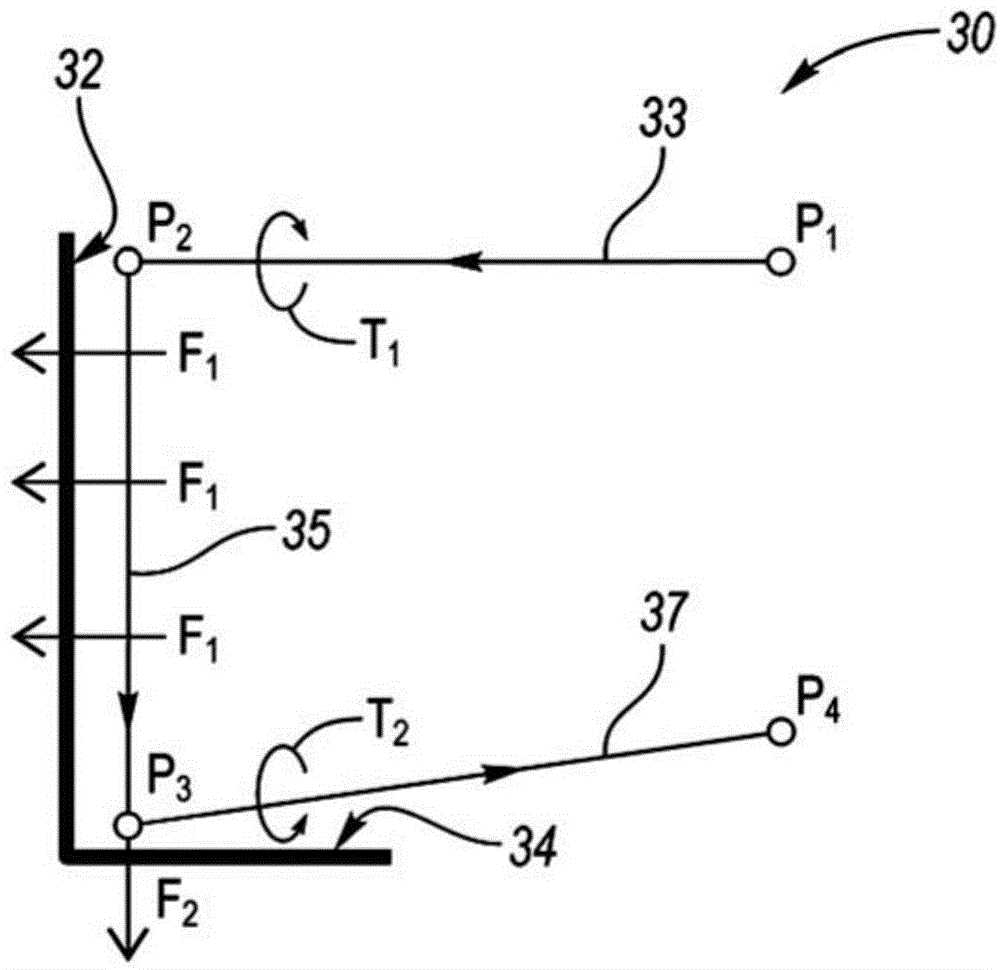

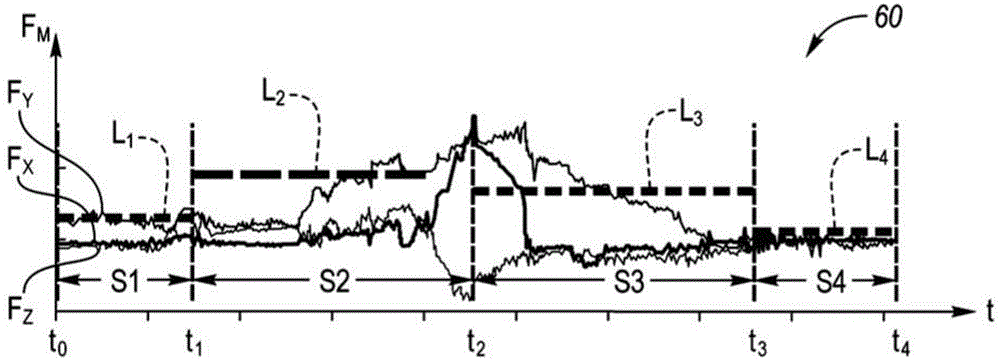

Rapid robotic imitation learning of force-torque tasks

Active | CN105082132A | Instructions are simple and consistent | Programme control | Programme-controlled manipulator | Robotic systems | Simulation

A method of training a robot to autonomously execute a robotic task includes moving an end effector through multiple states of a predetermined robotic task to demonstrate the task to the robot in a set of n training demonstrations. The method includes measuring training data, including at least the linear force and the torque via a force-torque sensor while moving the end effector through the multiple states. Key features are extracted from the training data, which is segmented into a time sequence of control primitives. Transitions between adjacent segments of the time sequence are identified. During autonomous execution of the same task, a controller detects the transitions and automatically switches between control modes. A robotic system includes a robot, force-torque sensor, and a controller programmed to execute the method.

Owner:GM GLOBAL TECH OPERATIONS LLC

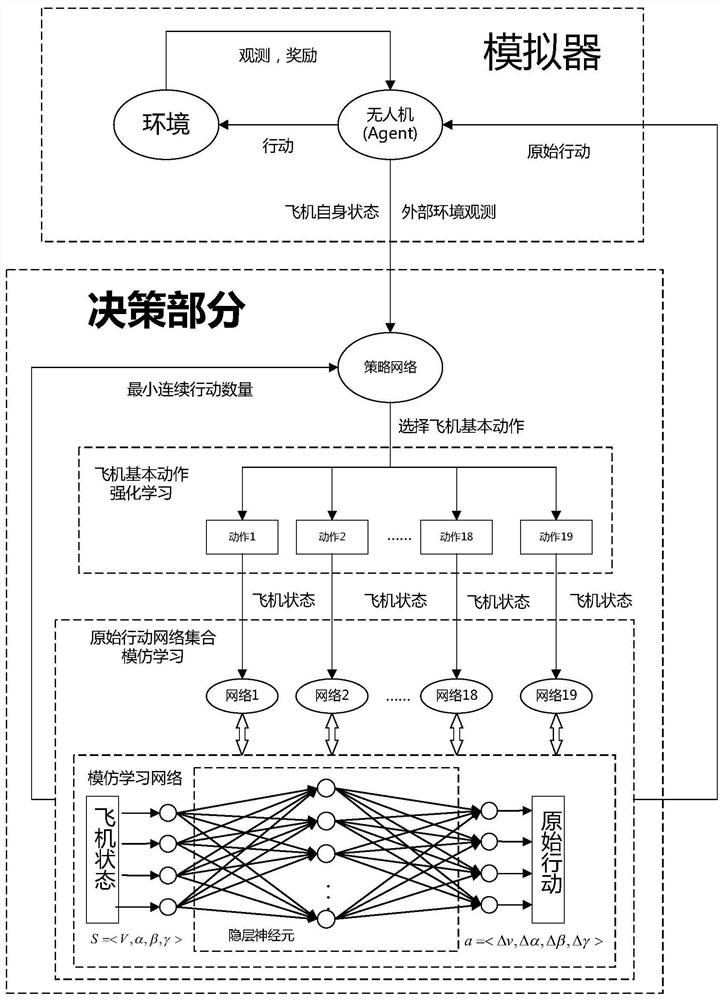

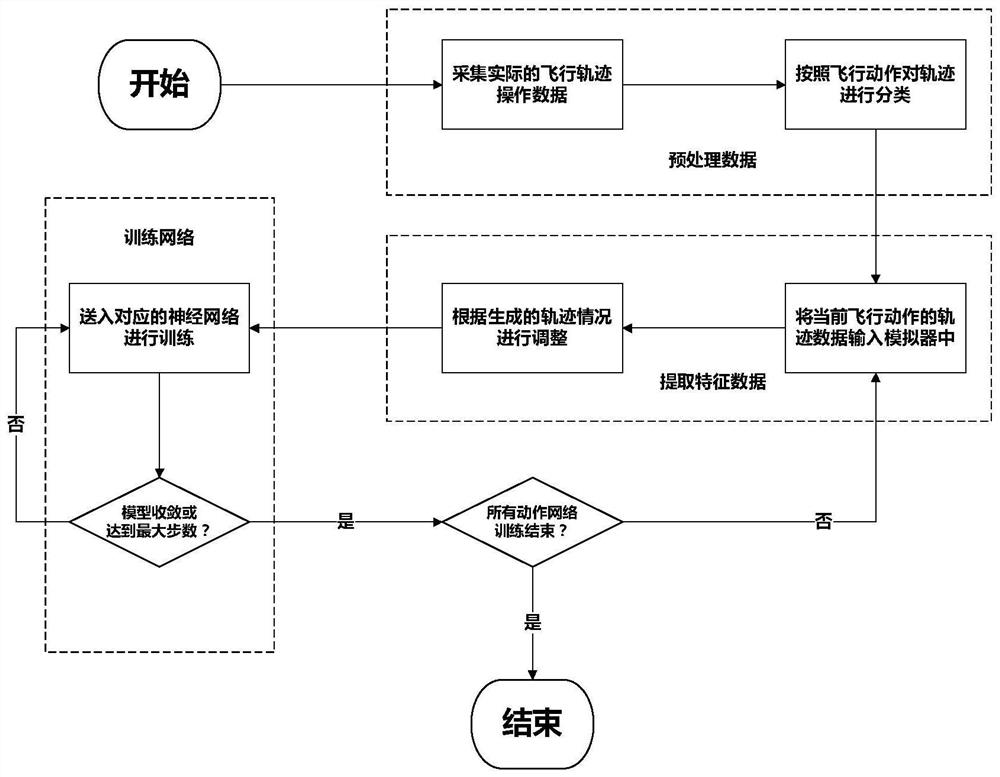

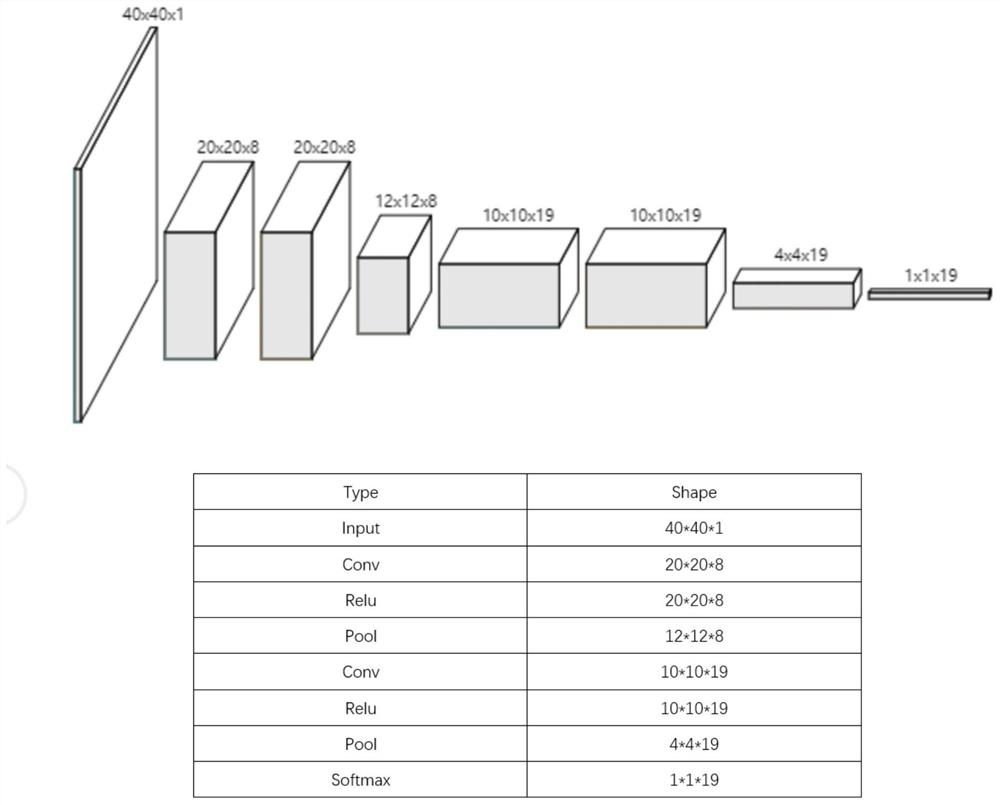

Unmanned aerial vehicle flight control method based on imitation learning and reinforcement learning algorithms

Active | CN112162564A | Stable flight | High similarity | Attitude control | Position/course control in three dimensions | Simulation | Reinforcement learning algorithm

The invention discloses an unmanned aerial vehicle flight control method based on imitation learning and reinforcement learning algorithms. The method comprises the following steps: creating an unmanned aerial vehicle flight simulation environment (simulator); defining a set of basic flight actions; classifying the trajectory data according to the basic flight actions; for each basic flight action, using imitation learning to learn the parameters of a network that maps the basic flight action to original actions; counting the minimum number of consecutive actions for each basic action; constructing an upper-layer reinforcement learning network, and adding the minimum number of consecutive actions as a penalty p for inconsistent aircraft actions; in the simulator, obtaining the current observation and reward, and selecting the corresponding basic flight action with a pDQN algorithm; inputting the state information of the aircraft into the imitation learning neural network corresponding to that basic flight action, which outputs the original action for the simulator; inputting the obtained original action into the simulator to obtain the observation and reward at the next moment; and training with the pDQN algorithm until the upper-layer strategy network converges.

Owner:NANJING UNIV

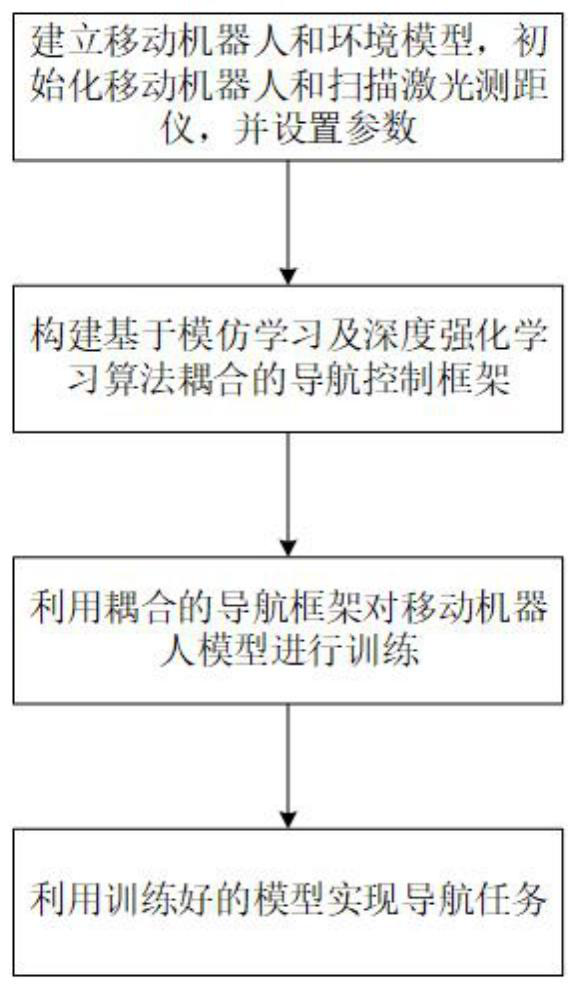

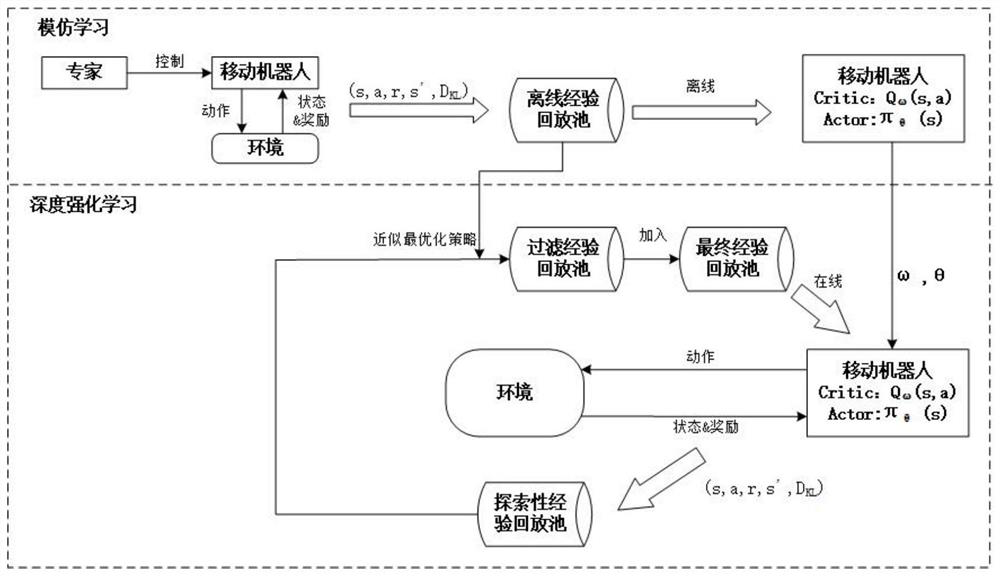

Mobile robot navigation method based on imitation learning and deep reinforcement learning

Inactive | CN112433525A | Reduce dependence | Optimal Control Strategy | Neural architectures | Neural learning methods | Reinforcement learning algorithm | Imitation learning

The invention provides a mobile robot navigation method based on imitation learning and deep reinforcement learning. The method comprises the following steps: step 1, establishing an environment model of a mobile robot; step 2, constructing a navigation control framework that couples imitation learning with a deep reinforcement learning algorithm, and training the mobile robot model with the coupled navigation framework; and step 3, carrying out the navigation task with the trained model.

Owner:NANJING UNIV OF SCI & TECH

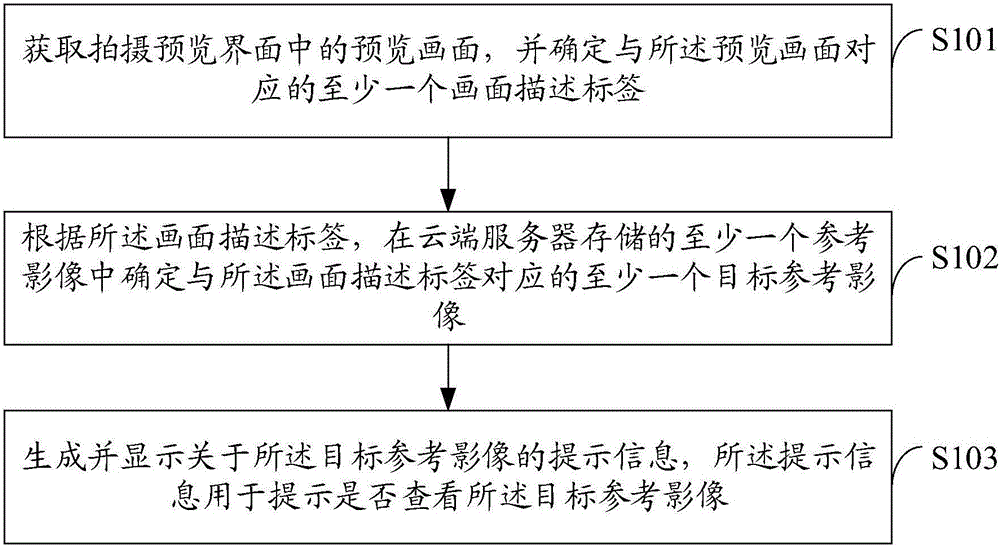

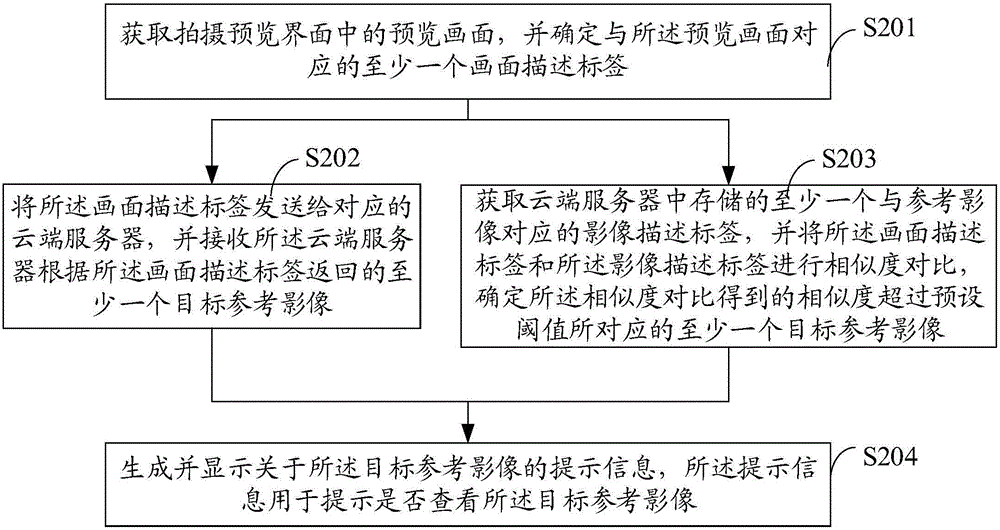

Shooting processing method and terminal

Inactive | CN106250421A | Improve shooting skills | Improve practicality | Special data processing applications | Reference image | Image description

Embodiments of the invention disclose a shooting processing method and a terminal. The method comprises the steps of obtaining a preview picture in a shooting preview interface, and determining at least one picture description tag corresponding to the preview picture; determining at least one target reference image corresponding to the picture description tag in at least one reference image stored in a cloud server, wherein the target reference image comprises at least one target image description tag, and the similarity obtained by performing similarity comparison on the picture description tag with the target image description tag exceeds a preset threshold; and generating and displaying prompt information about the target reference image, wherein the prompt information is used for prompting whether the target reference image is viewed or not. According to the embodiments of the method and the terminal, a user can be assisted to shoot a perfect image by gradually improving shooting skills through continuous imitation learning.

Owner:SHENZHEN GIONEE COMM EQUIP
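
The matching step in the abstract above (keep reference images whose tag similarity with the preview exceeds a preset threshold) can be sketched as follows. The abstract does not specify the similarity measure; Jaccard similarity over tag sets is used here as an assumption, and the file names, tags and threshold are made up.

```python
def jaccard(tags_a, tags_b):
    """Similarity of two tag collections: shared tags over all distinct tags."""
    a, b = set(tags_a), set(tags_b)
    return len(a & b) / len(a | b) if a | b else 0.0

def find_target_references(preview_tags, reference_images, threshold=0.5):
    """Return names of reference images whose tag similarity exceeds the threshold."""
    return [
        name for name, tags in reference_images.items()
        if jaccard(preview_tags, tags) > threshold
    ]

# Hypothetical reference images stored on the server, keyed by file name.
references = {
    "sunset_beach.jpg": ["sunset", "beach", "sea"],
    "city_night.jpg": ["city", "night"],
    "beach_portrait.jpg": ["beach", "portrait", "sea", "sunset"],
}
matches = find_target_references(["sunset", "beach", "sea"], references)
```

The terminal would then show a prompt for each name in `matches`.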

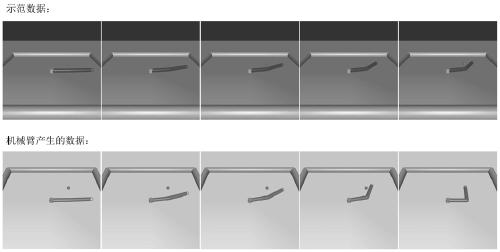

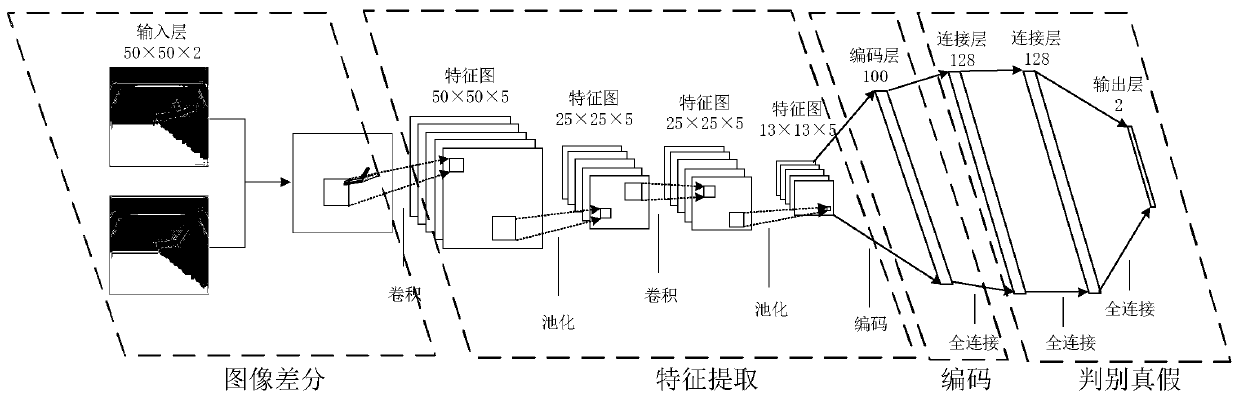

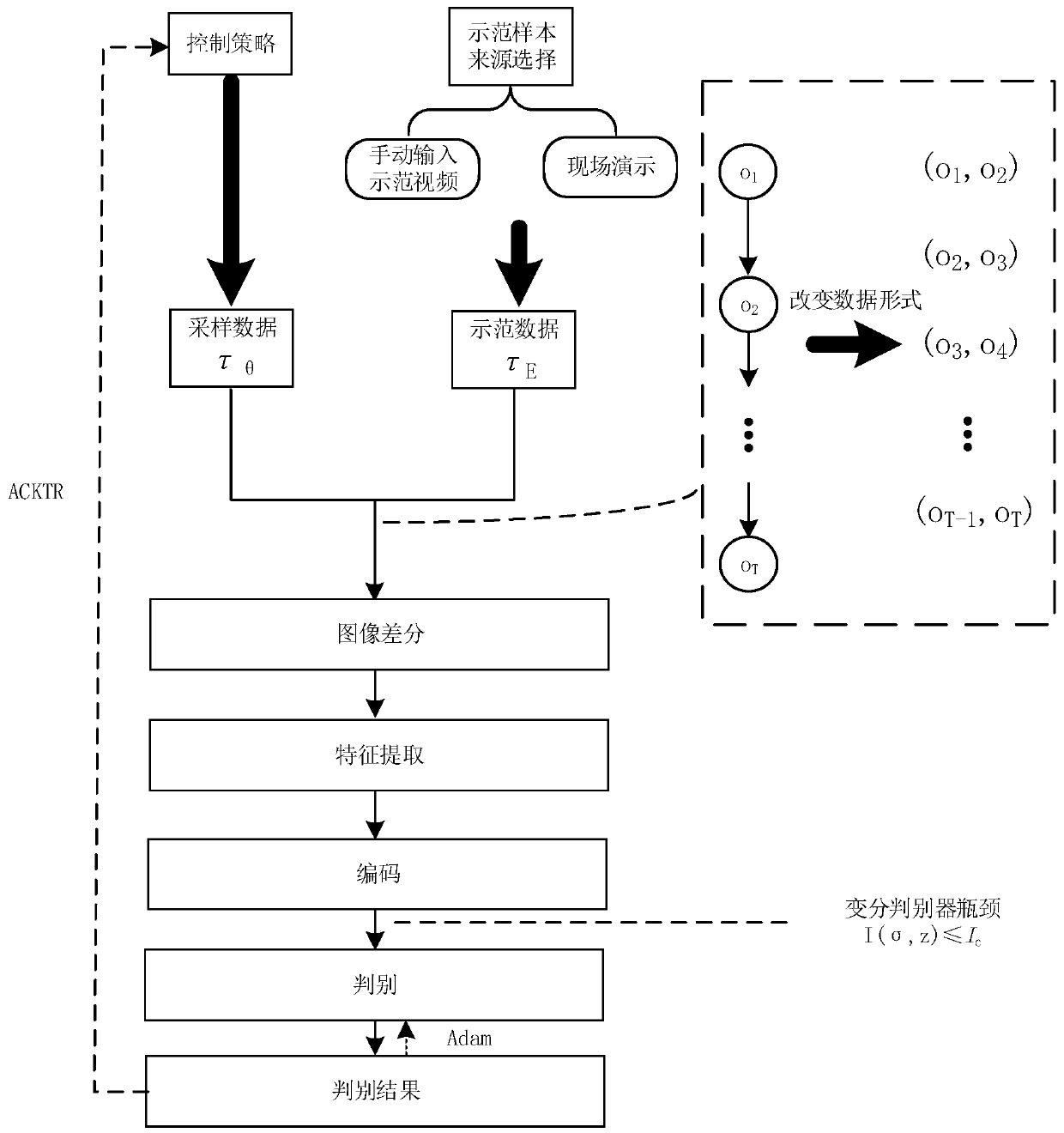

Mechanical arm action learning method and system based on third-person imitation learning

Active | CN111136659A | Break the balance of the game | Game balance maintenance | Programme-controlled manipulator | Third party | Automatic control

The invention discloses a mechanical arm action learning method and system based on third-person imitation learning. The method and system are used for automatic control of a mechanical arm, so that the arm can learn by itself how to complete a control task by watching a third-party demonstration. Samples are provided in video form, which avoids the large number of sensors that would otherwise be needed to obtain state information. An image difference method is used in the discriminator module so that it can ignore the appearance of the learning object and the environment background, which allows third-party demonstration data to be used for imitation learning and greatly reduces the cost of acquiring samples. A variational discriminator bottleneck is used in the discriminator module to restrain the accuracy with which the discriminator distinguishes demonstrations generated by the mechanical arm, so that the training of the discriminator module and the control strategy module is better balanced. The demonstration action of a user can be imitated quickly, operation is simple and flexible, and the requirements on the environment and the demonstrators are low.

Owner:NANJING UNIV

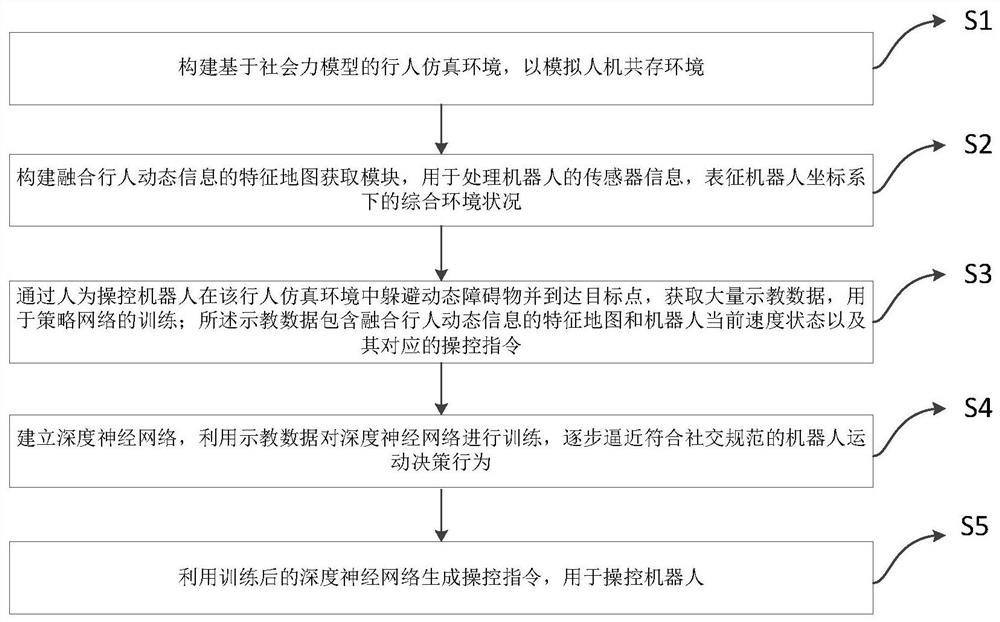

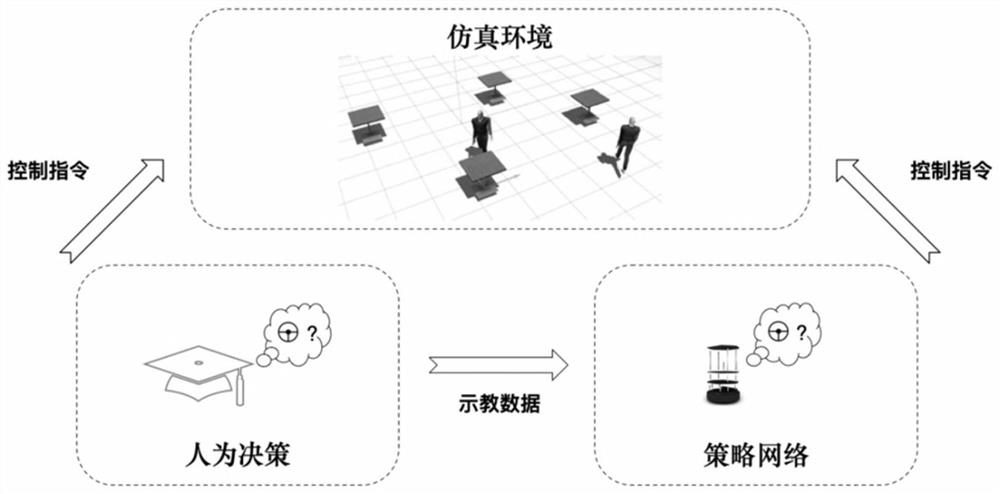

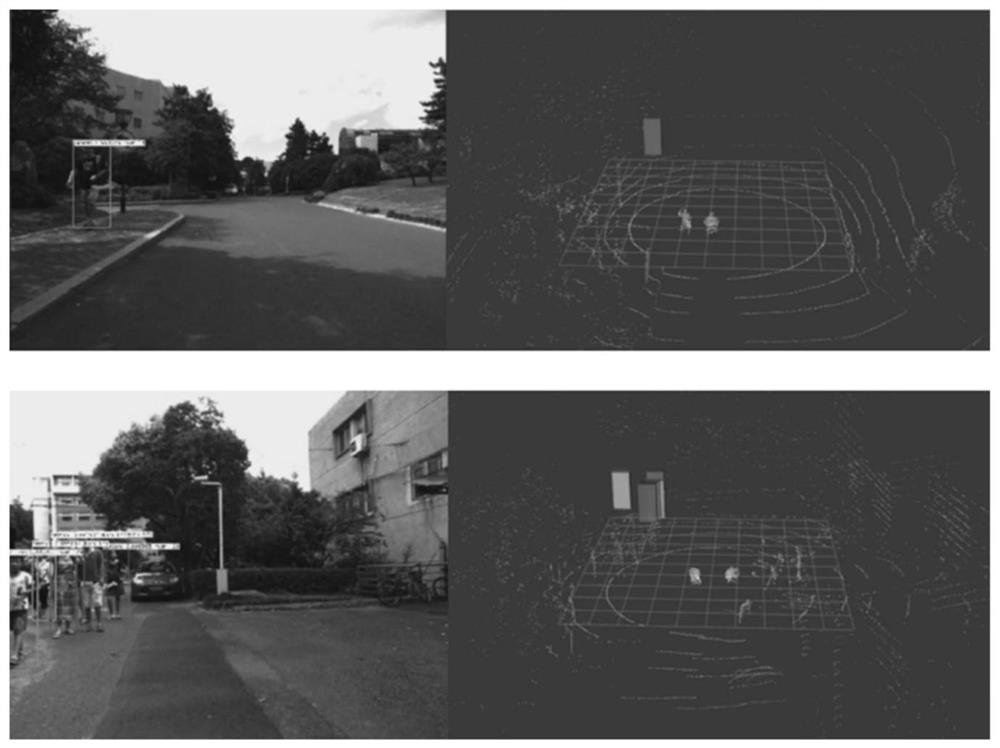

Imitation learning social navigation method based on feature map fused with pedestrian information

Active | CN112965081A | Expand the feasible area | Reasonable and efficient perception | Internal combustion piston engines | Navigational calculation instruments | Point cloud | Rgb image

The invention discloses an imitation learning social navigation method based on a feature map fused with pedestrian information. By introducing imitation learning, the method guides a robot to imitate the movement habits of experts and plans navigation that conforms to social norms; this improves planning efficiency, addresses the problem of the robot becoming locked, and helps the robot integrate better into environments shared by humans and machines. The method comprises the steps of: acquiring the time-sequence motion state of pedestrians through pedestrian detection and tracking in a sequence of RGB images aligned with a three-dimensional point cloud; then combining two-dimensional laser data with a social force model to obtain a local feature map marked with pedestrian dynamic information; and finally building a deep network that takes the local feature map, the current speed of the robot and the relative position of the target as input and outputs a robot control instruction, training it with expert teaching data as supervision, and obtaining a navigation strategy that conforms to social norms.

Owner:ZHEJIANG UNIV

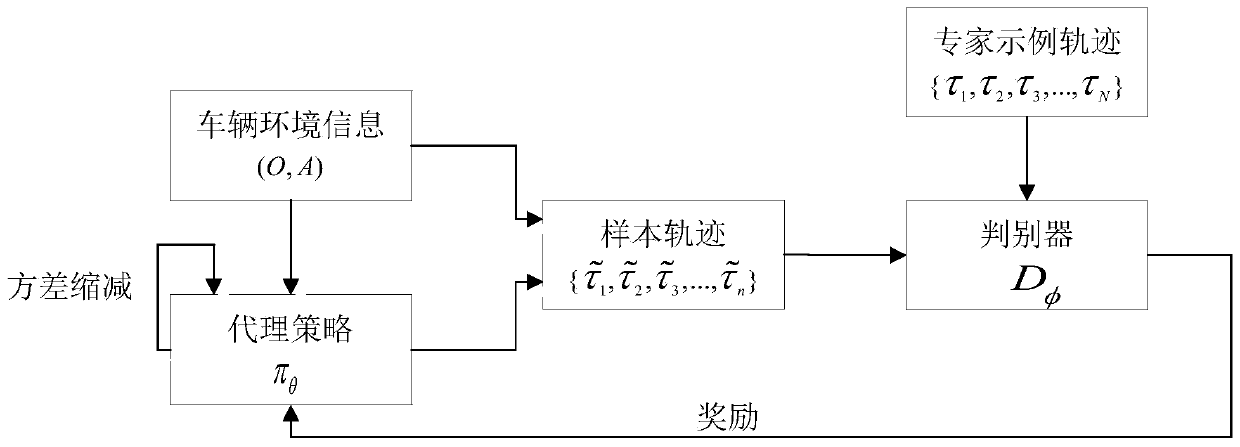

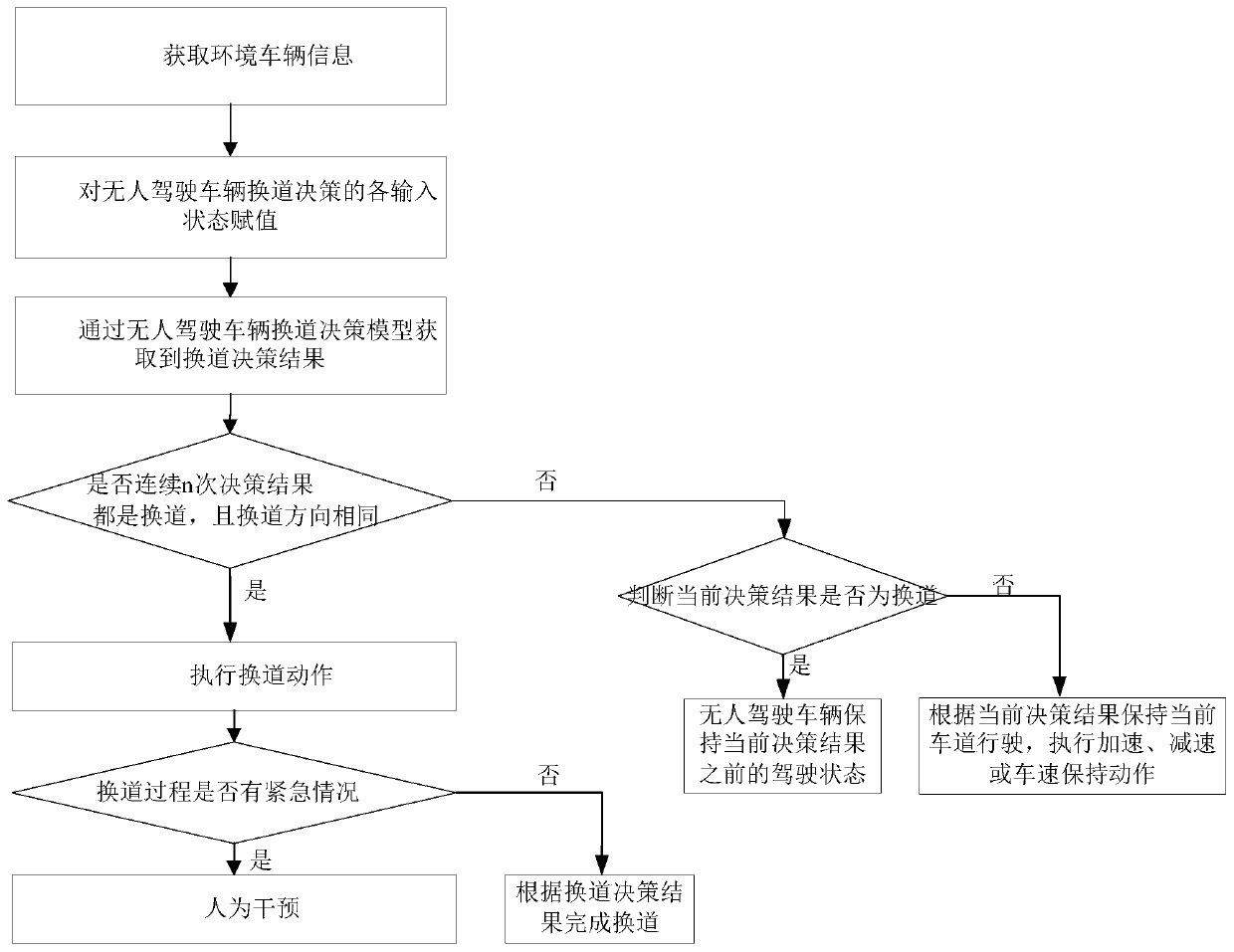

Unmanned vehicle lane changing decision-making method and system based on adversarial imitation learning

Active | CN111483468A | Improve accuracy | Improve robustness | Internal combustion piston engines | Decision model | Engineering

The invention discloses an unmanned vehicle lane changing decision-making method and system based on adversarial imitation learning. The method comprises: describing the unmanned vehicle lane changing decision-making task as a partially observable Markov decision process; training on examples provided by professional driving teaching with an adversarial imitation learning method to obtain an unmanned vehicle lane changing decision-making model; and, while the vehicle is driving unmanned, obtaining the lane change decision from that model by using the currently obtained environmental vehicle information as its input. The lane changing strategy is learned from examples provided by professional driving teaching through adversarial imitation learning, so no task reward function needs to be designed manually and a direct mapping from the vehicle state to the lane changing decision can be established. This effectively improves the correctness, robustness and adaptivity of the unmanned vehicle's lane changing decisions under dynamic traffic flow conditions.

Owner:GUANGZHOU UNIVERSITY

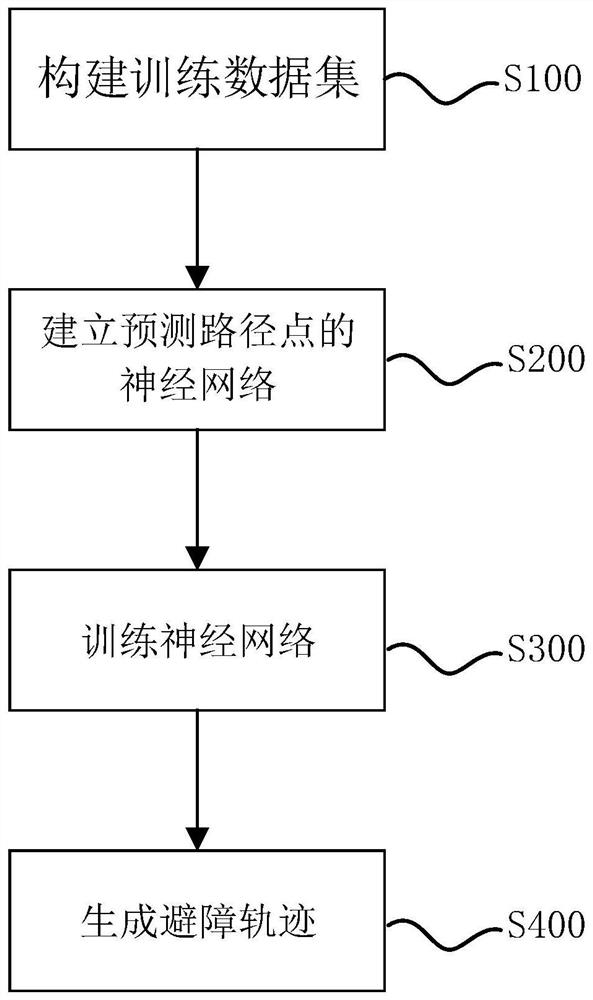

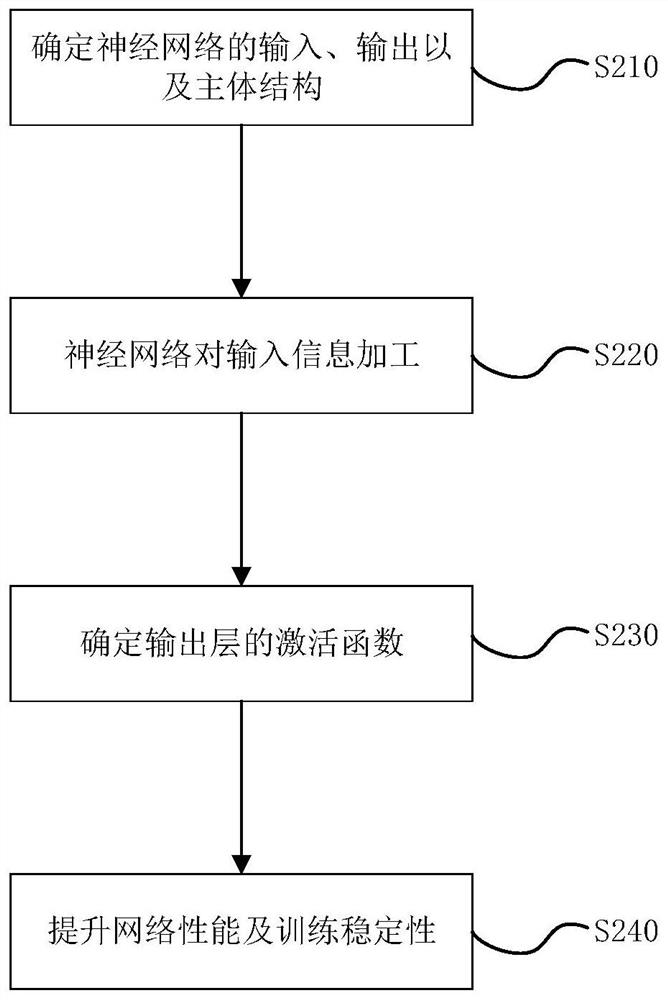

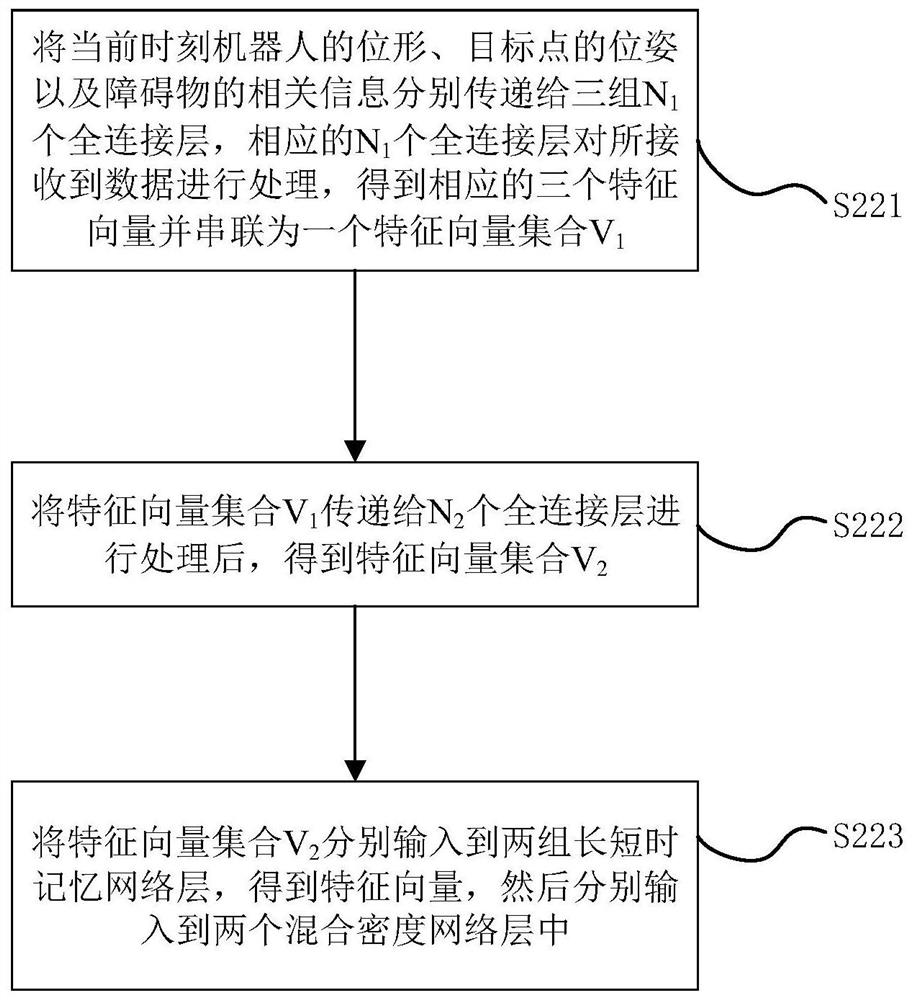

Robot obstacle avoidance trajectory planning method based on imitation learning and robot

Active | CN111702754A | Reduce demand | Complete obstacle avoidance trajectory | Programme-controlled manipulator | Robot motion planning | Data set

The invention relates to the field of robot motion planning, and discloses a robot obstacle avoidance trajectory planning method based on imitation learning and a robot. The method comprises the steps of constructing a training data set, establishing a neural network that predicts path points, training the neural network, and generating an obstacle avoidance trajectory. By learning from taught trajectories, the method can plan the robot's obstacle avoidance trajectory without knowing complete obstacle information.

Owner:STATE GRID ANHUI ULTRA HIGH VOLTAGE CO +1
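
The four steps in the abstract above (build a data set, define a path-point predictor, train it, roll it out into a trajectory) can be sketched as follows. This is a toy illustration only: a linear least-squares model stands in for the neural network, the taught trajectories are synthetic and contain no obstacles, and every name (`make_demo`, `rollout`, the 0.2 step rate) is a hypothetical choice, not the patent's design.

```python
import numpy as np

def make_demo(start, goal, steps=15, rate=0.2):
    """Synthetic 'taught' trajectory: each path point moves 20% closer to the goal."""
    points = [np.asarray(start, dtype=float)]
    for _ in range(steps):
        points.append(points[-1] + rate * (goal - points[-1]))
    return np.array(points)

# Step 1: construct the training set of (current point, goal) -> next point.
rng = np.random.default_rng(0)
inputs, targets = [], []
for _ in range(20):
    start, goal = rng.uniform(-1, 1, 2), rng.uniform(-1, 1, 2)
    demo = make_demo(start, goal)
    for current, nxt in zip(demo[:-1], demo[1:]):
        inputs.append(np.concatenate([current, goal]))
        targets.append(nxt)

# Steps 2 and 3: fit the predictor (linear stand-in for the neural network).
weights, *_ = np.linalg.lstsq(np.array(inputs), np.array(targets), rcond=None)

# Step 4: generate a trajectory by feeding each predicted point back in.
def rollout(start, goal, steps=30):
    path = [np.asarray(start, dtype=float)]
    for _ in range(steps):
        path.append(np.concatenate([path[-1], goal]) @ weights)
    return np.array(path)

path = rollout(np.array([0.0, 0.0]), np.array([1.0, 0.5]))
```

With real demonstrations that bend around obstacles, the same rollout structure would reproduce the avoidance behaviour only to the extent the trained network has captured it.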

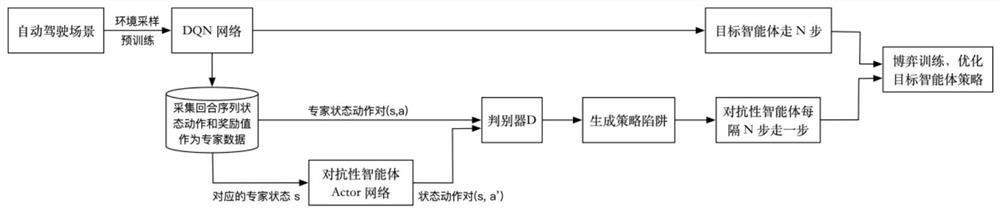

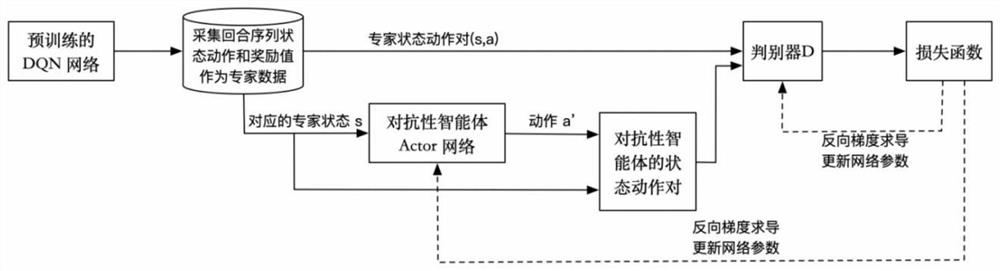

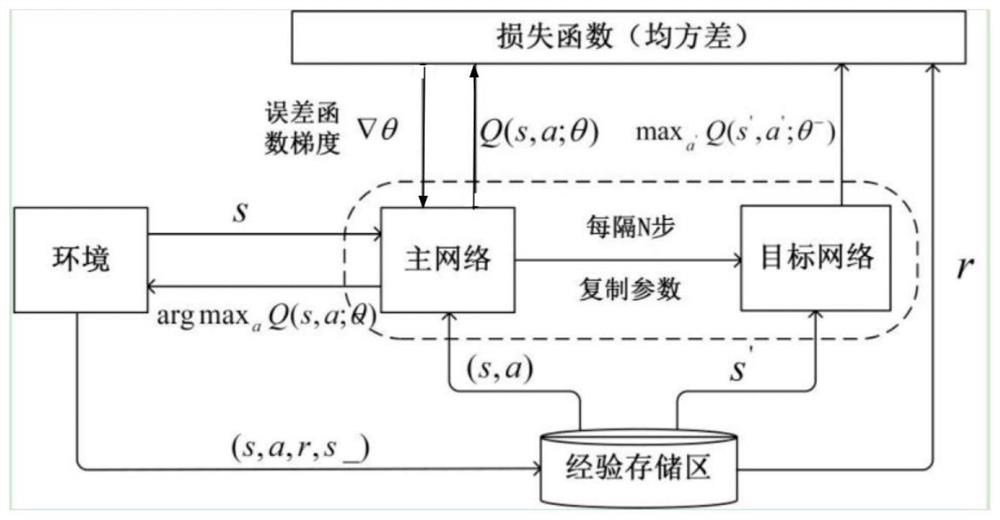

Deep reinforcement learning strategy optimization defense method and device based on imitation learning

Pending | CN112884131A | Improve robustness | Forecasting | Neural architectures | Generative adversarial network | Engineering

The invention discloses a deep reinforcement learning strategy optimization defense method and device based on imitation learning. The method comprises the steps of: building an automatic driving simulation environment for a deep reinforcement learning agent, constructing a target agent based on a deep Q network, and training the target agent with reinforcement learning to optimize the parameters of the deep Q network; using the parameter-optimized deep Q network to generate a sequence of state-action pairs of the target agent over T time steps as expert data, where the action in each state-action pair is the action with the minimum Q value; constructing an adversarial agent based on a generative adversarial network and performing imitation learning on it, that is, taking the states in the expert data as the input of the generative adversarial network and the expert data as labels to supervise and optimize the network's parameters; and performing adversarial training on the target agent with the states generated by the adversarial agent, then optimizing the parameters of the deep Q network again, thereby achieving the strategy optimization defense for deep reinforcement learning.

Owner:ZHEJIANG UNIV OF TECH
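
The expert-data step in the abstract above records, for T time steps, each state together with the action whose Q value is minimal. A minimal sketch of that bookkeeping follows, assuming a small Q table in place of the trained deep Q network and a made-up transition function `toy_step`; both are hypothetical stand-ins.

```python
import numpy as np

def generate_expert_pairs(q_values, step_fn, start_state, horizon):
    """Collect `horizon` (state, action) pairs, picking the minimum-Q action each step."""
    pairs = []
    state = start_state
    for _ in range(horizon):
        # The abstract specifies the action with the minimum Q value.
        action = int(np.argmin(q_values[state]))
        pairs.append((state, action))
        state = step_fn(state, action)
    return pairs

# Hypothetical Q table: 3 states x 2 actions (stand-in for the deep Q network).
q_table = np.array([[0.2, 0.9],
                    [0.7, 0.1],
                    [0.5, 0.4]])

def toy_step(state, action):
    """Hypothetical deterministic environment transition."""
    return (state + action + 1) % 3

pairs = generate_expert_pairs(q_table, toy_step, start_state=0, horizon=4)
```

These pairs would then serve as the labels for the imitation-learning stage of the adversarial agent.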

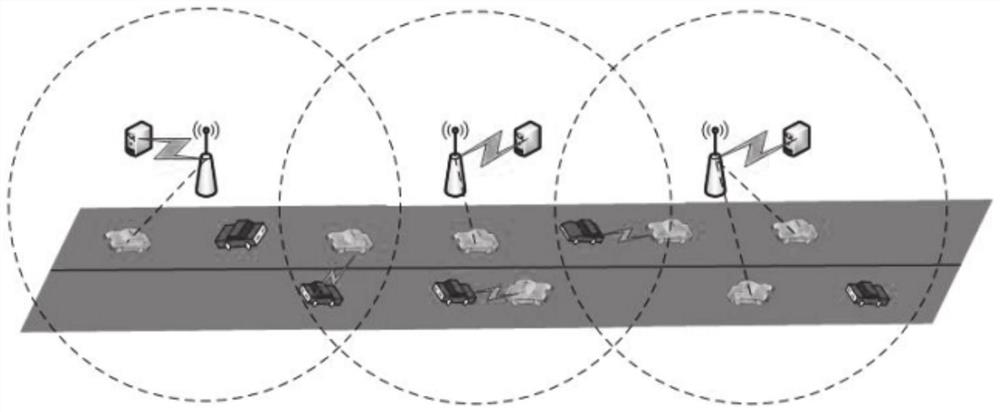

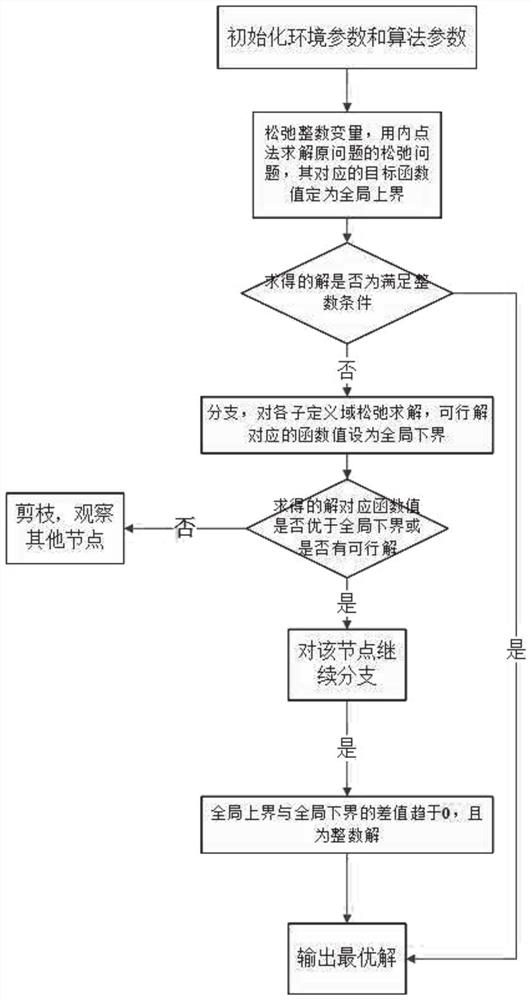

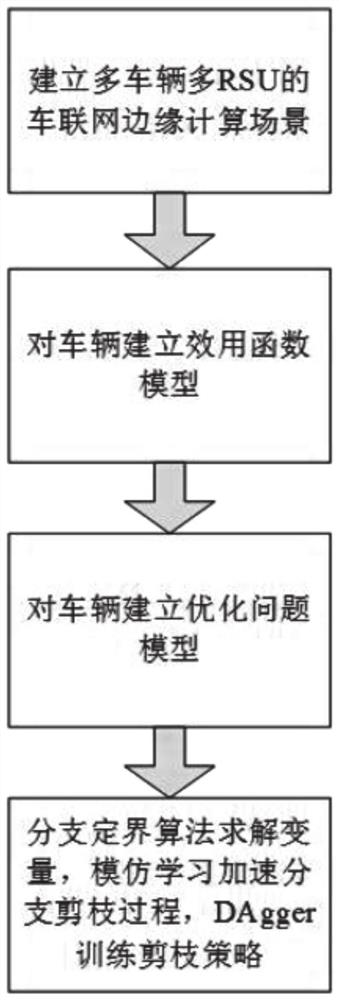

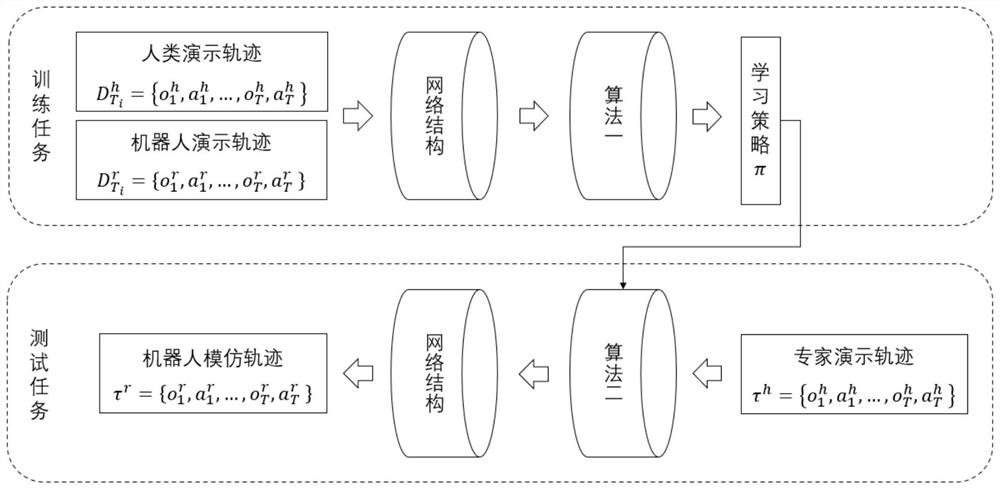

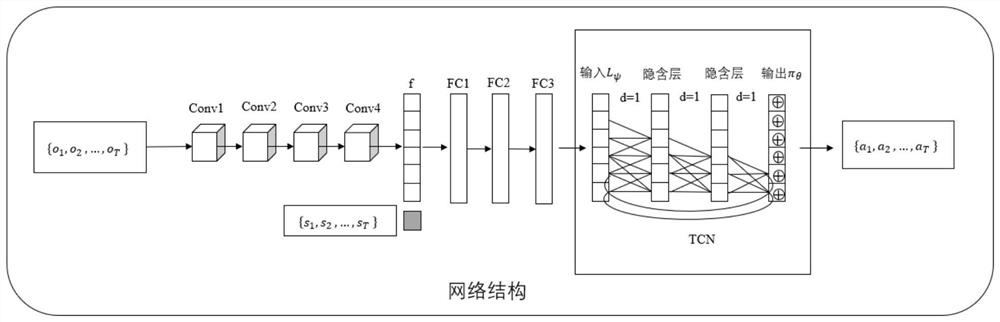

Internet of Vehicles mobile edge computing task unloading method and system based on learning pruning

Active | CN112512013A | Utility optimization | Flexible design | Particular environment based services | Vehicle-to-vehicle communication | Simulation | Mobile edge computing

The invention provides an Internet of Vehicles mobile edge computing task unloading method and system based on learning pruning, and belongs to the technical field of Internet of Vehicles communication. The method comprises the following steps: calculating vehicle parameters in an Internet of Vehicles mobile edge computing scene; calculating unloading parameters from the vehicle parameters; constructing a task unloading utility model from the unloading parameters; and solving for the optimal solution of the utility model with a branch and bound algorithm combined with an imitation learning method, so that the task unloading mode is selected, and the computing resources obtained by bidding are determined, in a utility-optimizing manner. Taking vehicle mobility into account, a vehicle utility function for the Internet of Vehicles scene is established, so that the vehicle's choice of unloading mode and the computing resources it obtains by bidding are both utility-optimized. When a task is unloaded to a service vehicle, vehicles travelling in different directions are distinguished in the selection, so that the transmission rate is increased. The branch and bound method is combined with a learning-based pruning strategy to accelerate branch pruning and reduce complexity.

Owner:SHANDONG NORMAL UNIV
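
The abstract above combines branch and bound with a learned pruning strategy. The sketch below shows where such a policy plugs into a branch and bound search, using a 0/1 knapsack as a hypothetical stand-in for the task unloading utility model. The `prune_policy` here is a hand-written threshold rule; in an imitation-learning setting it would instead be a classifier trained to imitate an oracle that knows which nodes lead to the optimum. Unlike bound-based pruning, a learned policy can discard the optimal branch, so it trades optimality guarantees for speed.

```python
def fractional_bound(values, weights, capacity, index, value, weight):
    """Optimistic bound: fill the remaining capacity greedily, last item fractional."""
    bound, room = value, capacity - weight
    for v, w in zip(values[index:], weights[index:]):
        if w <= room:
            bound, room = bound + v, room - w
        else:
            return bound + v * room / w
    return bound

def branch_and_bound(values, weights, capacity, prune_policy=None):
    """Depth-first search; items must be sorted by value/weight ratio, descending.

    Returns (best utility found, number of nodes visited).
    """
    best, visited = 0, 0
    stack = [(0, 0, 0)]  # (next item index, utility so far, resource used so far)
    while stack:
        index, value, weight = stack.pop()
        visited += 1
        best = max(best, value)
        if index == len(values):
            continue
        bound = fractional_bound(values, weights, capacity, index, value, weight)
        if bound <= best:
            continue  # exact pruning: this subtree cannot beat the incumbent
        if prune_policy is not None and prune_policy(bound, best):
            continue  # learned (heuristic) pruning: may sacrifice optimality
        stack.append((index + 1, value, weight))  # branch: skip the item
        if weight + weights[index] <= capacity:
            stack.append((index + 1, value + values[index], weight + weights[index]))
    return best, visited

# Hypothetical tasks: utility and resource demand, sorted by utility per unit resource.
values, weights, capacity = [60, 100, 120], [10, 20, 30], 50

exact = branch_and_bound(values, weights, capacity)
# Stand-in for a trained policy: prune nodes whose bound is under 1.2x the incumbent.
pruned = branch_and_bound(values, weights, capacity,
                          prune_policy=lambda bound, best: bound < 1.2 * best)
```

Comparing `exact` and `pruned` shows the intended effect: the policy can only reduce the number of visited nodes, at the risk of returning a lower utility.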

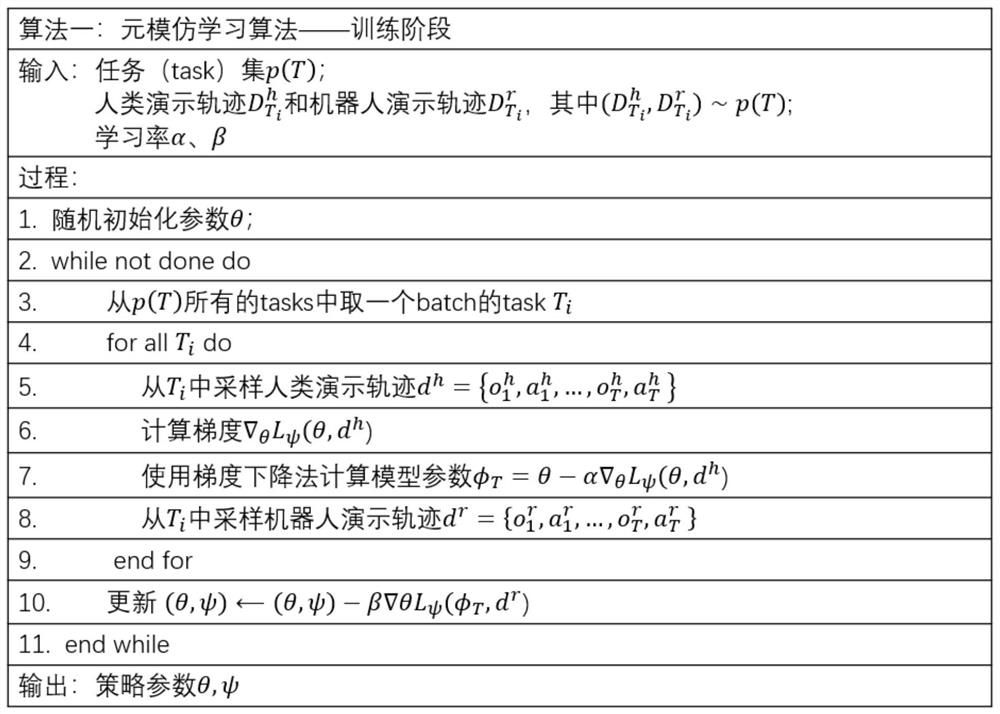

Robot demonstration teaching method based on meta-imitation learning

Pending | CN111983922A | Fast generalization | Quick teach | Programme-controlled manipulator | Artificial life | Network structure | Machine

The invention discloses a robot demonstration teaching method based on meta-imitation learning and relates to the technical field of machine learning. The method comprises the steps of: obtaining a robot demonstration teaching task set; constructing a network structure model and obtaining a self-adaptive target loss function; in the meta-training stage, learning and optimizing the loss function together with its initialization values and parameters using algorithm I; in the meta-test stage, learning the trajectory demonstrated by the expert using algorithm II to obtain a learning strategy; and, taking the expert demonstration trajectory as input and applying the learning strategy, generating a robot imitation trajectory with the network structure model and mapping that trajectory to robot actions using the robot's state information. With this method, a small number of expert demonstrations is enough to generalize quickly to a new scene without task-specific engineering, and the robot can learn task-independent strategies from the expert demonstrations by itself, so that a trajectory is generated and one-shot demonstration and rapid teaching are realized.

Owner:GUANGZHOU INST OF ADVANCED TECH CHINESE ACAD OF SCI

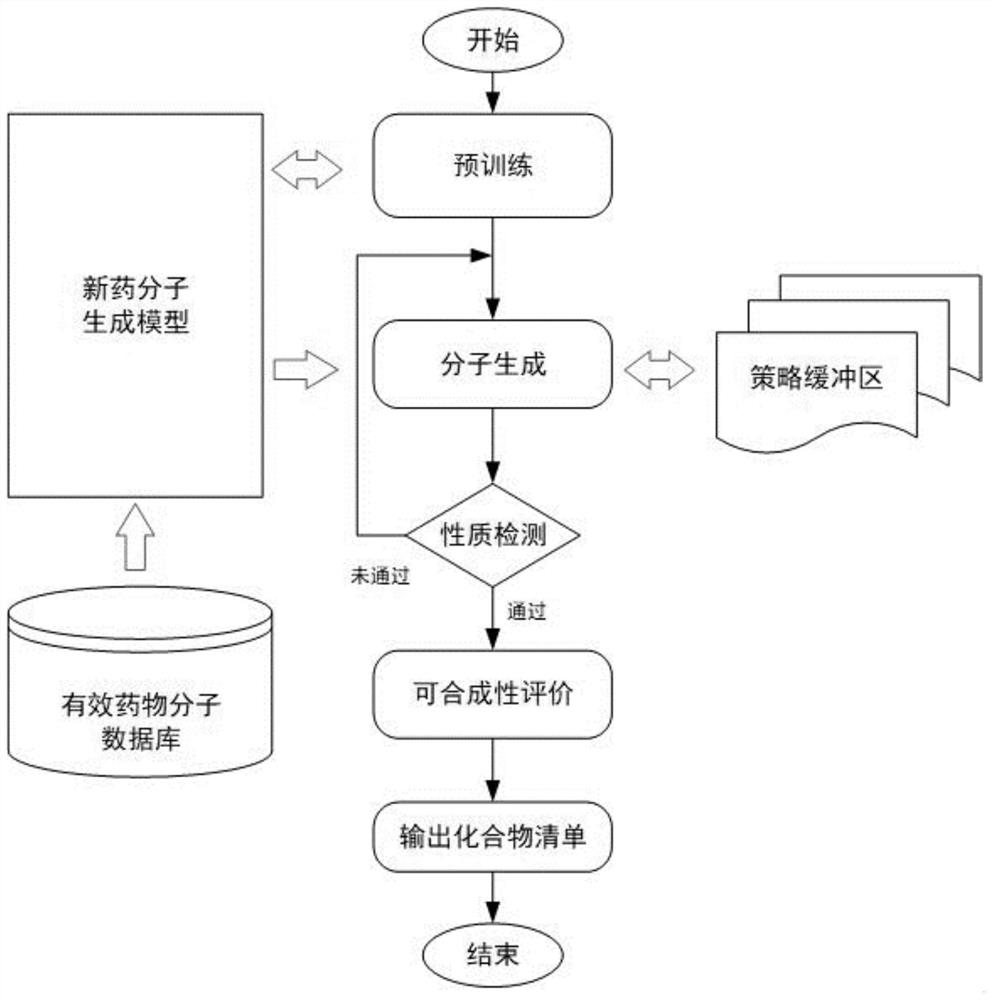

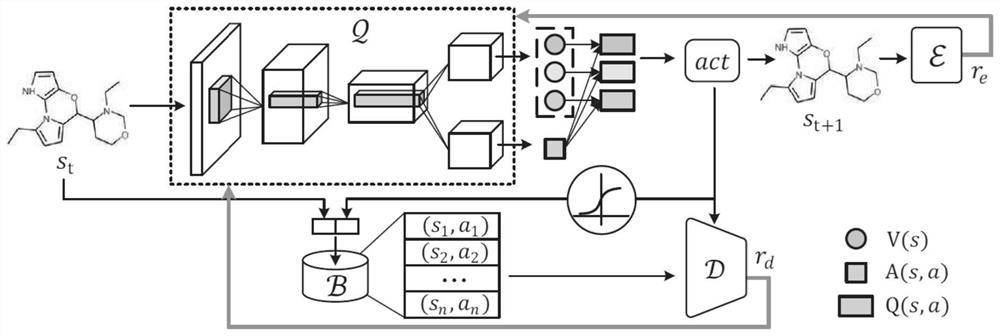

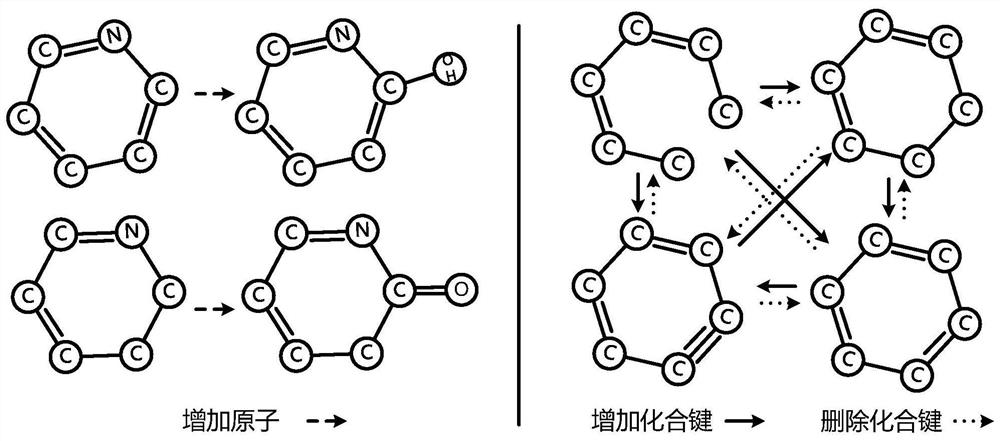

Drug molecule generation method based on adversarial imitation learning

Pending | CN112820361A | Biochemical Property Optimization | Improve stability | Molecular design | Chemical machine learning | Pharmaceutical drug | Biochemistry

The invention discloses a drug molecule generation method in which drug molecules are generated on the basis of adversarial imitation learning and multi-task reinforcement learning. The method comprises the following steps: constructing a library of effective drug molecules; establishing an improved drug molecule generation model by designing and implementing a multi-task reinforcement learning module and an adversarial imitation learning module; pre-training the model; executing the drug molecule generation process; and producing the candidate drug molecules. The technical scheme effectively promotes optimization of the biochemical properties of drug molecules, improves the stability of model training, and yields better drug molecules.

Owner:PEKING UNIV

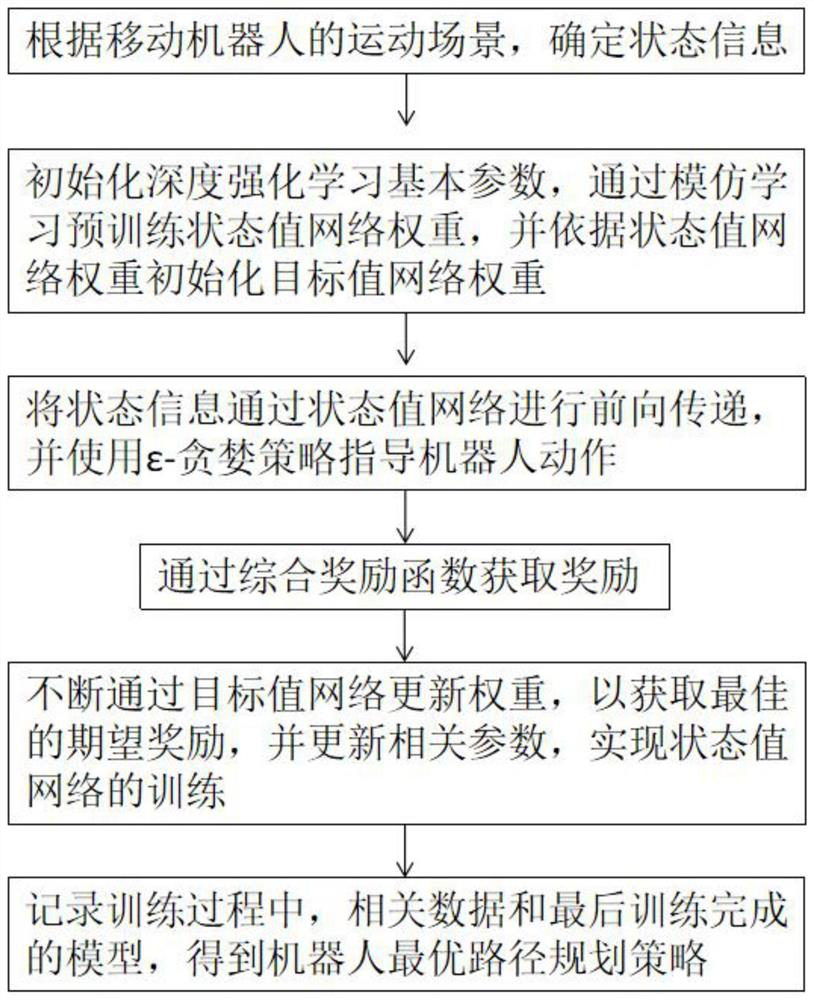

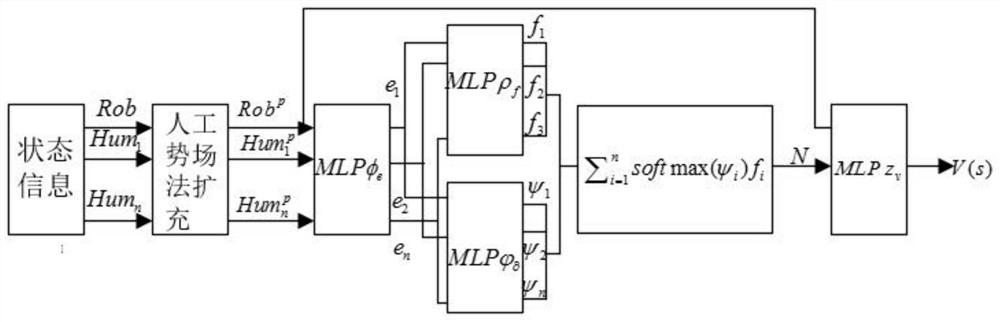

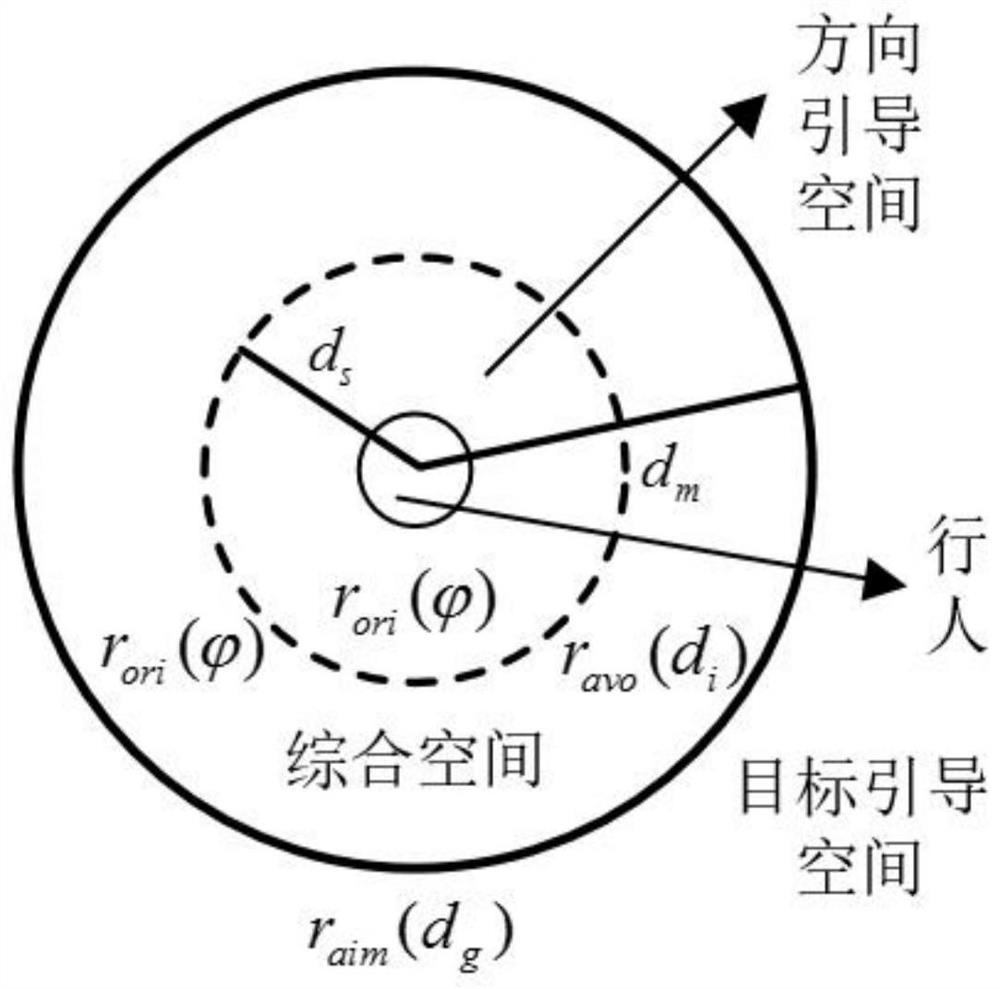

Mobile robot path planning method based on deep reinforcement learning

Active | CN112904848A | Extended Status Information | Enhanced interaction | Position/course control in two dimensions | Simulation | Engineering

The invention discloses a mobile robot path planning method based on deep reinforcement learning. The method comprises the steps of: S1, determining state information according to the motion scene of the mobile robot; S2, initializing the basic parameters of deep reinforcement learning and pre-training the weights of a state value network through imitation learning; S3, propagating the state information forward through the state value network and using an epsilon-greedy strategy to guide the robot's actions; S4, obtaining rewards through a comprehensive reward function; S5, continuously updating the weights through the target value network and updating the related parameters; and S6, recording the relevant training data and the finally trained model to obtain the robot's optimal navigation strategy. The method targets path planning in pedestrian environments in the public service field. The state value network is designed using an artificial potential field method and an attention mechanism, so that the state information of the robot and of pedestrians is effectively expanded and the interaction between their state information is promoted.

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY
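
Step S3 above uses an epsilon-greedy strategy on top of a state value network. A minimal sketch of that selection rule follows, assuming the network has already produced one value per candidate action (for example the value of the state each action would lead to). The function name, the candidate values and the use of Python's `random.Random` are illustrative assumptions, not details from the patent.

```python
import random

def epsilon_greedy(candidate_values, epsilon, rng):
    """Pick a random action with probability epsilon, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(candidate_values))  # explore
    # Exploit: index of the largest value (first one on ties).
    return max(range(len(candidate_values)), key=candidate_values.__getitem__)

rng = random.Random(0)
# Hypothetical value-network outputs for four candidate motion commands.
values = [0.12, 0.55, 0.31, 0.08]

greedy_action = epsilon_greedy(values, epsilon=0.0, rng=rng)   # always exploits
explore_action = epsilon_greedy(values, epsilon=1.0, rng=rng)  # always explores
```

In training, epsilon is typically decayed over time so that the robot explores early and relies on the value network later; the schedule is not specified in the abstract.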

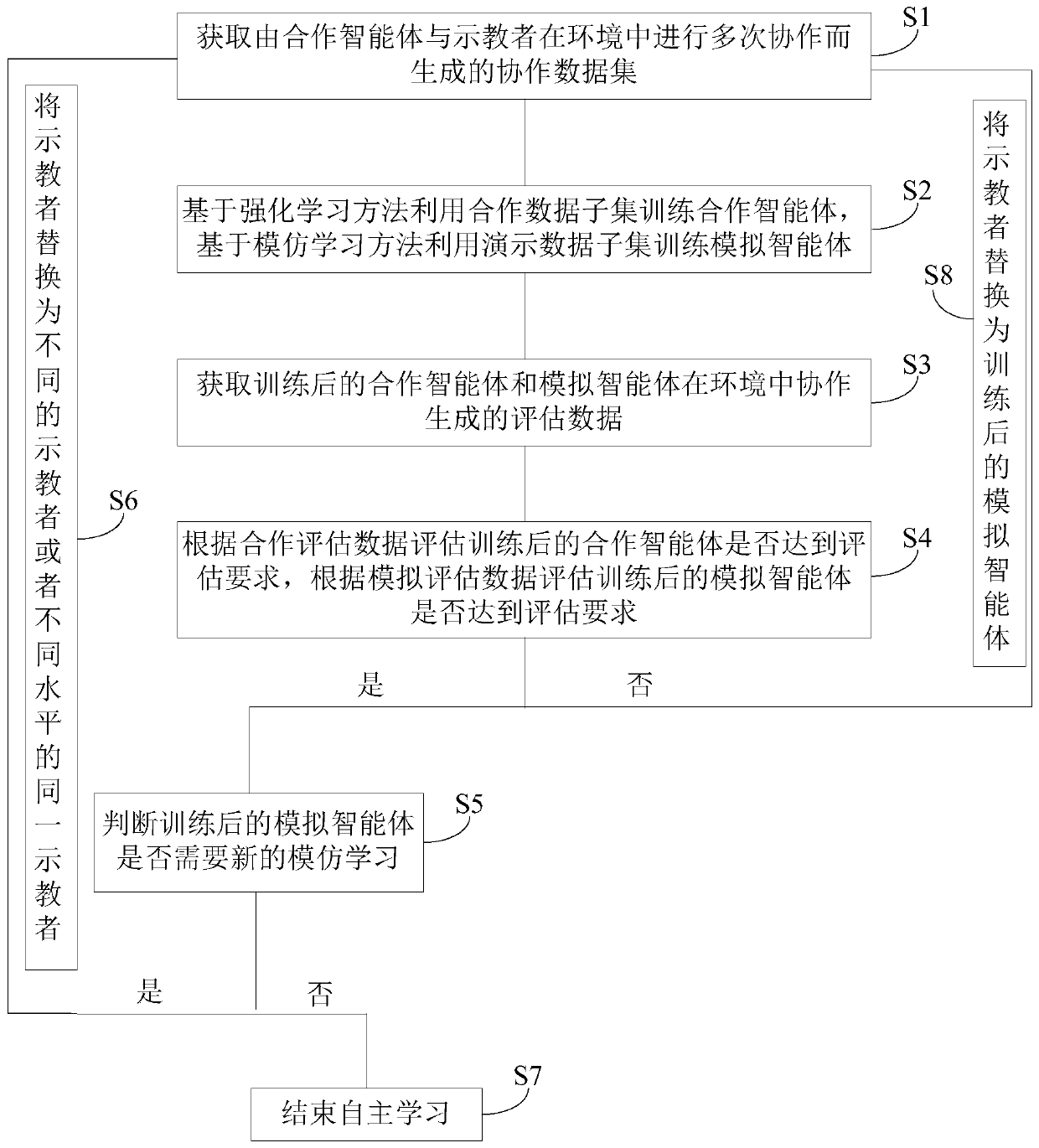

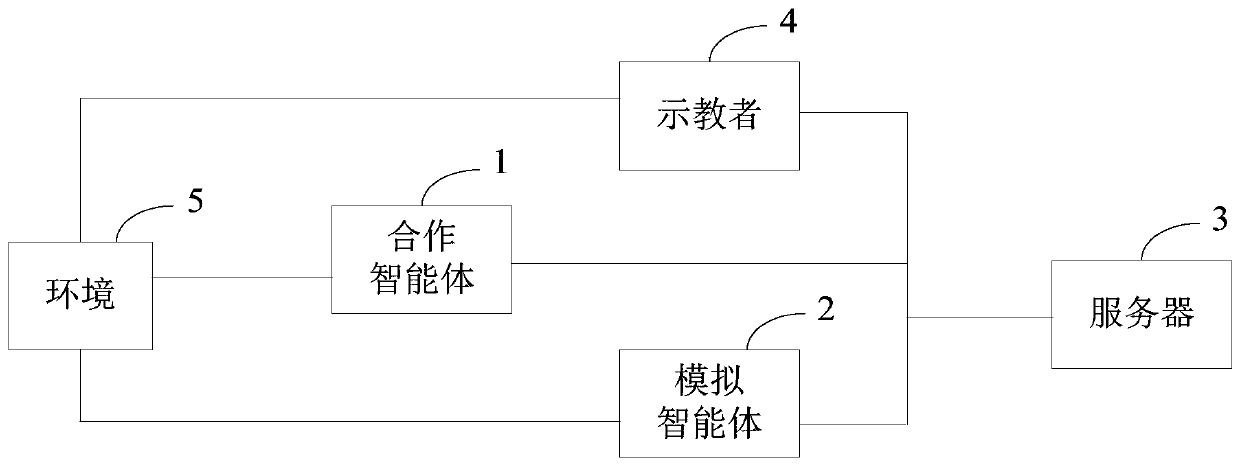

Autonomous learning method and system of agent for man-machine cooperative work

Active | CN109858574A | Same collaboration effect | Efficient use of | Character and pattern recognition | Neural architectures | Data set | Simulation

The invention belongs to the technical field of artificial intelligence and discloses an autonomous learning method and system of an agent for man-machine cooperative work. The method comprises the steps of: obtaining a cooperation data set and training a cooperation agent and a simulation agent on it; assessing, from the assessment data generated when the trained cooperation agent and simulation agent cooperate in the environment, whether the two agents meet the assessment requirements; judging whether the trained simulation agent needs new imitation learning if the assessment requirements are met; and ending the autonomous learning of the trained cooperation agent if the assessment requirements are not met. The system comprises a cooperation agent, a simulation agent and a server. With this scheme, the agent can adapt to dynamic changes of the environment and obtain the same performance in similar environments, and the demonstration behaviors of different teachers can be simulated, so that the trained agent adapts to changes in its teachers and teachers with different operating skill levels can achieve the same cooperation effect.

Owner:启元世界(北京)信息技术服务有限公司

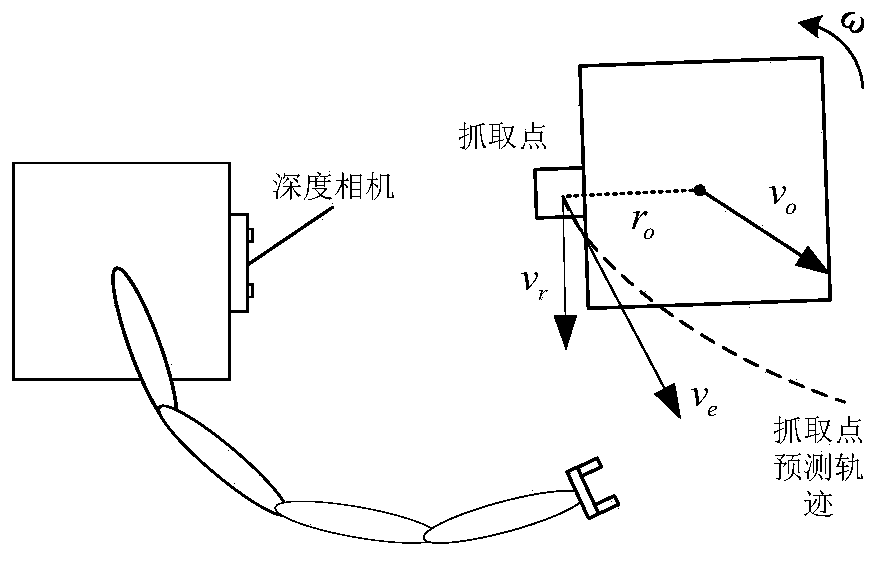

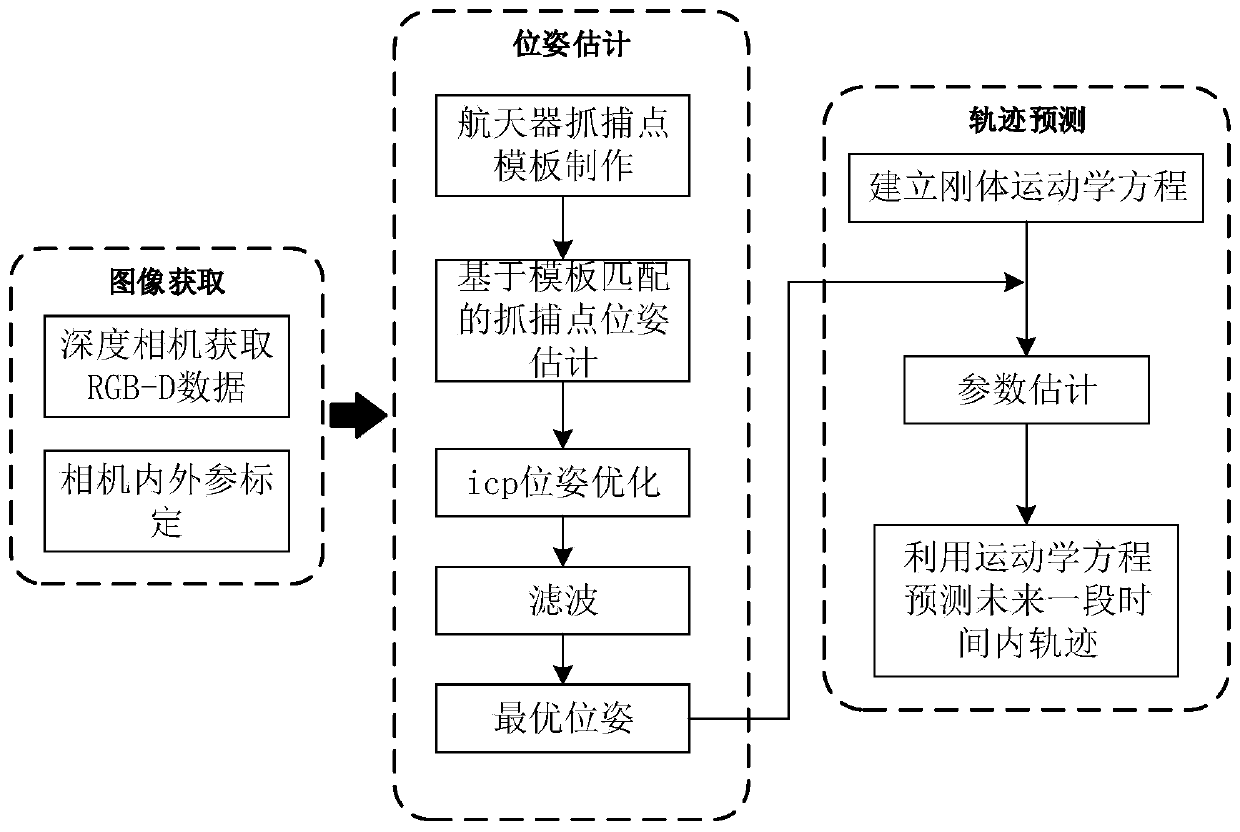

Free floating target capturing method based on 3D vision and imitation learning

Active | CN111325768A | High speed | Improve accuracy | Image analysis | Machine learning | Target capture | Trajectory planning

The invention discloses a free floating target capturing method based on 3D vision and imitation learning. The method has three main parts: first, the position and posture of the target capture point are acquired in real time with a depth camera; second, the motion state is estimated from historical data with Kalman filtering, and the free-floating trajectory over a future period is predicted from the motion information; and finally, the capture opportunity and the pose of the capture point at that moment are determined, capture data from a human demonstrator are collected, a skill model is established, and the skill model is transferred to the robot to perform the capture. The pose estimation, trajectory prediction and mechanical arm trajectory planning in this process are all based on vision and imitation learning, so that autonomous capture of a free floating target is achieved and capture requirements can be well met.

Owner:WUHAN UNIV
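
The second part of the method above estimates the motion state with Kalman filtering and extrapolates the target's future track. The sketch below shows that idea in one dimension with a constant-velocity model. The motion model, the noise settings `q` and `r`, the sampling interval and the synthetic measurements are all assumptions made for illustration; the patent does not specify them, and a real system would track full 6-DoF pose.

```python
import numpy as np

def kalman_track(measurements, dt, q=1e-6, r=1e-4):
    """Filter noisy positions with a constant-velocity model; return state and F."""
    F = np.array([[1.0, dt], [0.0, 1.0]])   # state transition for [position, velocity]
    H = np.array([[1.0, 0.0]])              # only position is measured
    Q = q * np.eye(2)                       # process noise covariance (assumed)
    R = np.array([[r]])                     # measurement noise covariance (assumed)
    x = np.array([measurements[0], 0.0])    # start at first measurement, zero velocity
    P = np.eye(2)
    for z in measurements[1:]:
        # Predict step.
        x = F @ x
        P = F @ P @ F.T + Q
        # Update step.
        innovation = z - H @ x
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ innovation
        P = (np.eye(2) - K @ H) @ P
    return x, F

def predict_track(x, F, steps):
    """Extrapolate future positions by repeatedly applying the motion model."""
    positions = []
    for _ in range(steps):
        x = F @ x
        positions.append(x[0])
    return np.array(positions)

# Synthetic target drifting at 0.2 m/s from 0.5 m, observed with 1 cm noise.
dt = 0.1
t = np.arange(0.0, 3.0, dt)
rng = np.random.default_rng(1)
measured = 0.5 + 0.2 * t + rng.normal(0.0, 0.01, t.size)

state, F = kalman_track(measured, dt)
future = predict_track(state, F, steps=5)
```

The predicted positions in `future` are what a capture planner would use to choose the capture moment and the pose of the capture point.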
