Free-floating target capture method based on 3D vision and imitation learning

This target-capture and vision technology, applied in the field of space robots, can solve problems such as poor precision, high cost, and gaps, and achieves improved speed and accuracy, high autonomy, and simplicity.

Embodiment Construction

[0057] The cooperative transport system provided by the present invention is described in detail below with reference to the accompanying drawings. The description explains the present invention and does not limit it.

[0058] First, the principle of the method of the present invention is introduced. The method comprises the following steps:

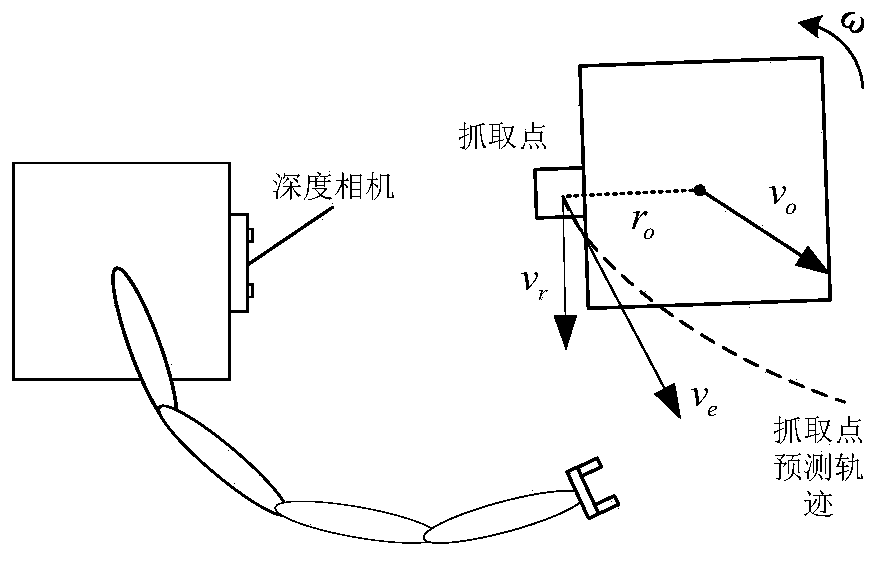

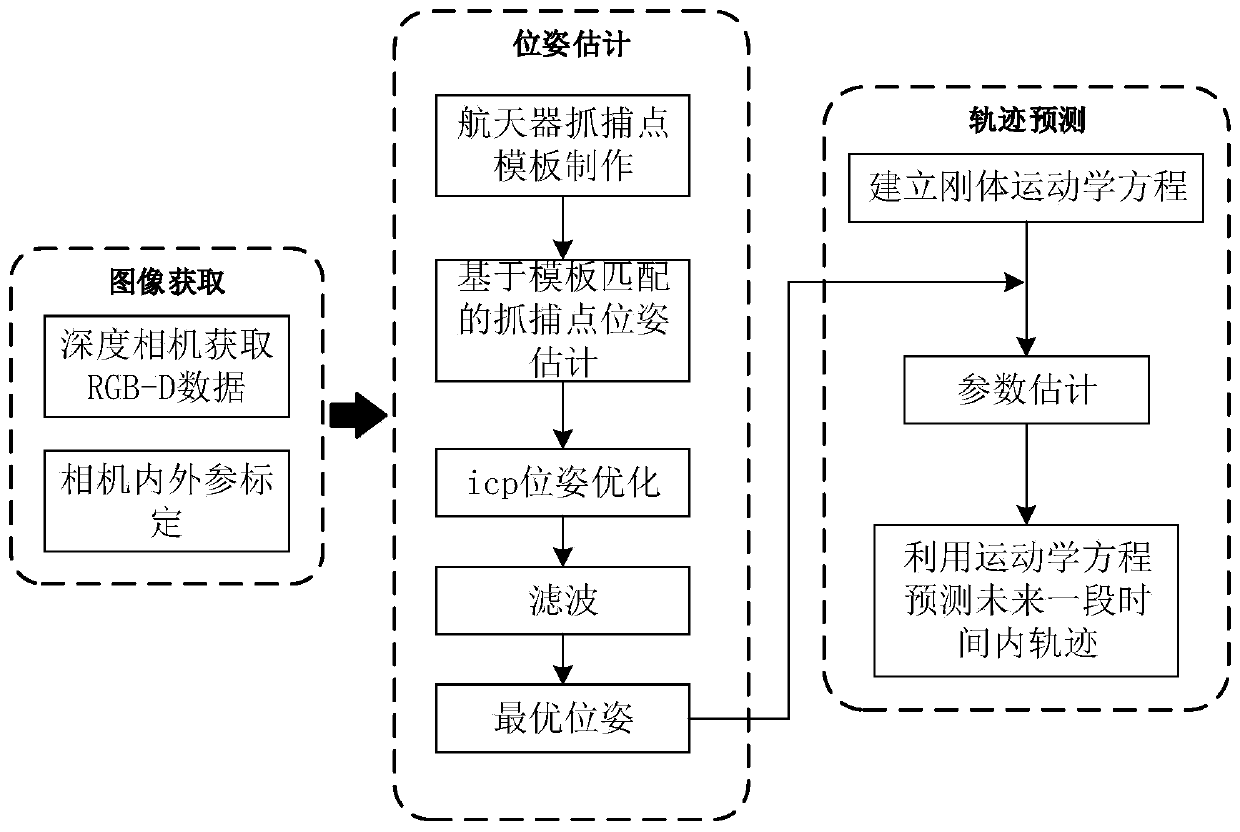

[0059] Step 1: Vision-based pose estimation, which feeds back the position and attitude of the target in real time;

[0060] Step 2: Trajectory prediction, which uses the position and attitude trajectory recorded over a past period of time to dynamically predict the position and attitude trajectory over a future period of time;

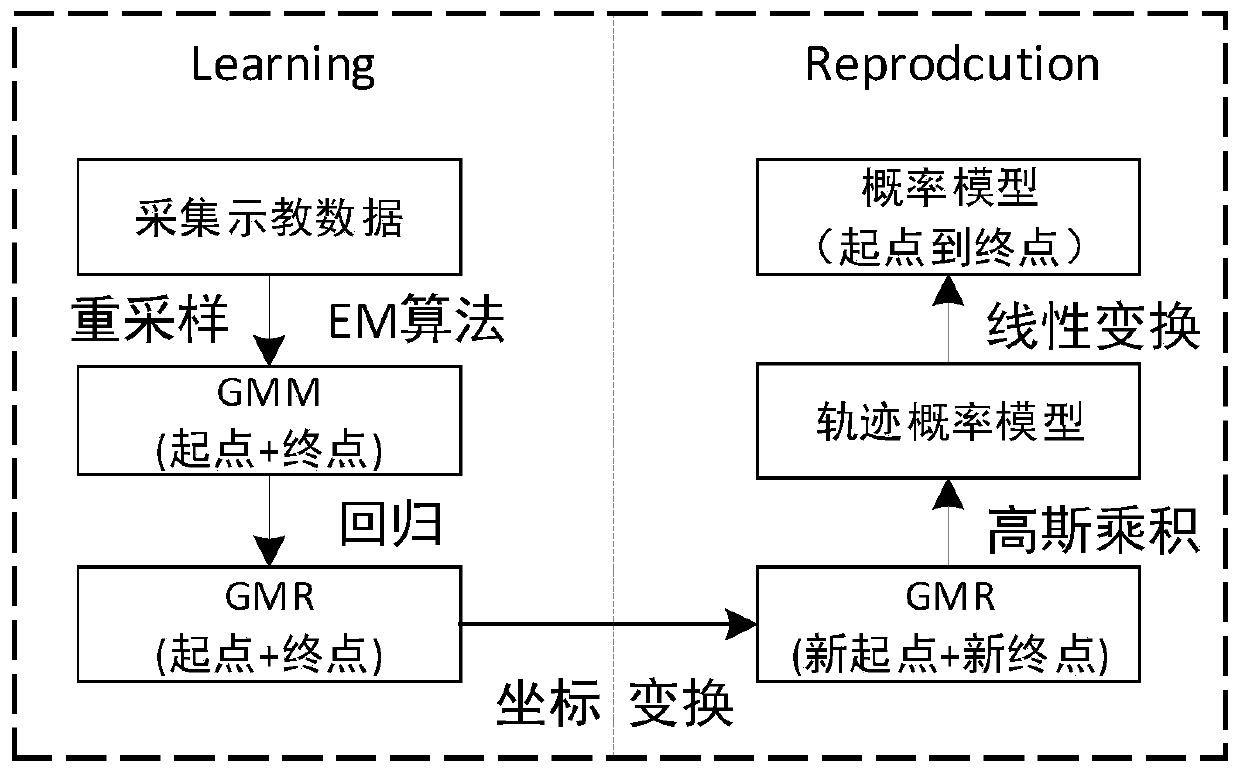

[0061] Step 3: Trajectory planning based on imitation learning: collect human capture data, build a skill model, transfer it to the robot, and determine the appropriate capture timing according to the trajectory predicted in step 2.
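The data flow between steps 2 and 3 can be illustrated with a minimal sketch. It is not the method of the invention: the source does not state which prediction model or skill model is used. The sketch assumes a constant-velocity target fitted by least squares, predicts position only (attitude is omitted), and replaces the learned skill model with a simple reach test. All names (`fit_line`, `predict_positions`, `pick_capture_time`, `base`, `reach`) are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Sample = Tuple[float, Vec3]  # (time in seconds, position in metres)


def fit_line(ts: Sequence[float], xs: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of x = a + b * t; returns (a, b)."""
    n = len(ts)
    t_mean = sum(ts) / n
    x_mean = sum(xs) / n
    var = sum((t - t_mean) ** 2 for t in ts)
    if var == 0.0:
        raise ValueError("need at least two distinct timestamps")
    b = sum((t - t_mean) * (x - x_mean) for t, x in zip(ts, xs)) / var
    return x_mean - b * t_mean, b


def predict_positions(ts: Sequence[float], positions: Sequence[Vec3],
                      horizon: float, dt: float) -> List[Sample]:
    """Extrapolate the position history over `horizon` seconds.

    Assumption: constant velocity, each axis fitted independently.
    """
    coeffs = [fit_line(ts, [p[k] for p in positions]) for k in range(3)]
    steps = int(round(horizon / dt))
    predicted: List[Sample] = []
    for i in range(1, steps + 1):
        t = ts[-1] + i * dt
        x, y, z = (a + b * t for a, b in coeffs)
        predicted.append((t, (x, y, z)))
    return predicted


def pick_capture_time(predicted: Sequence[Sample], base: Vec3,
                      reach: float) -> Optional[Sample]:
    """Return the first predicted sample within `reach` of the robot base.

    Stand-in for the capture-timing decision of step 3; the learned
    skill model described in the source is not reproduced here.
    """
    for t, p in predicted:
        if math.dist(p, base) <= reach:
            return t, p
    return None


# Hypothetical history: target drifting toward the robot along x.
history_t = [0.0, 1.0, 2.0]
history_p = [(3.0, 0.0, 0.0), (2.5, 0.0, 0.0), (2.0, 0.0, 0.0)]
future = predict_positions(history_t, history_p, horizon=4.0, dt=1.0)
capture = pick_capture_time(future, base=(0.0, 0.0, 0.0), reach=1.2)
print(capture)
```

In a real system the history would come from the pose estimator of step 1, and the reach test would be replaced by the skill model learned from human capture data.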

[0062] Optionally, the capture system is based on the ROS (Robot Operating System) platf...
