50 results about "Inverse reinforcement learning" patented technology

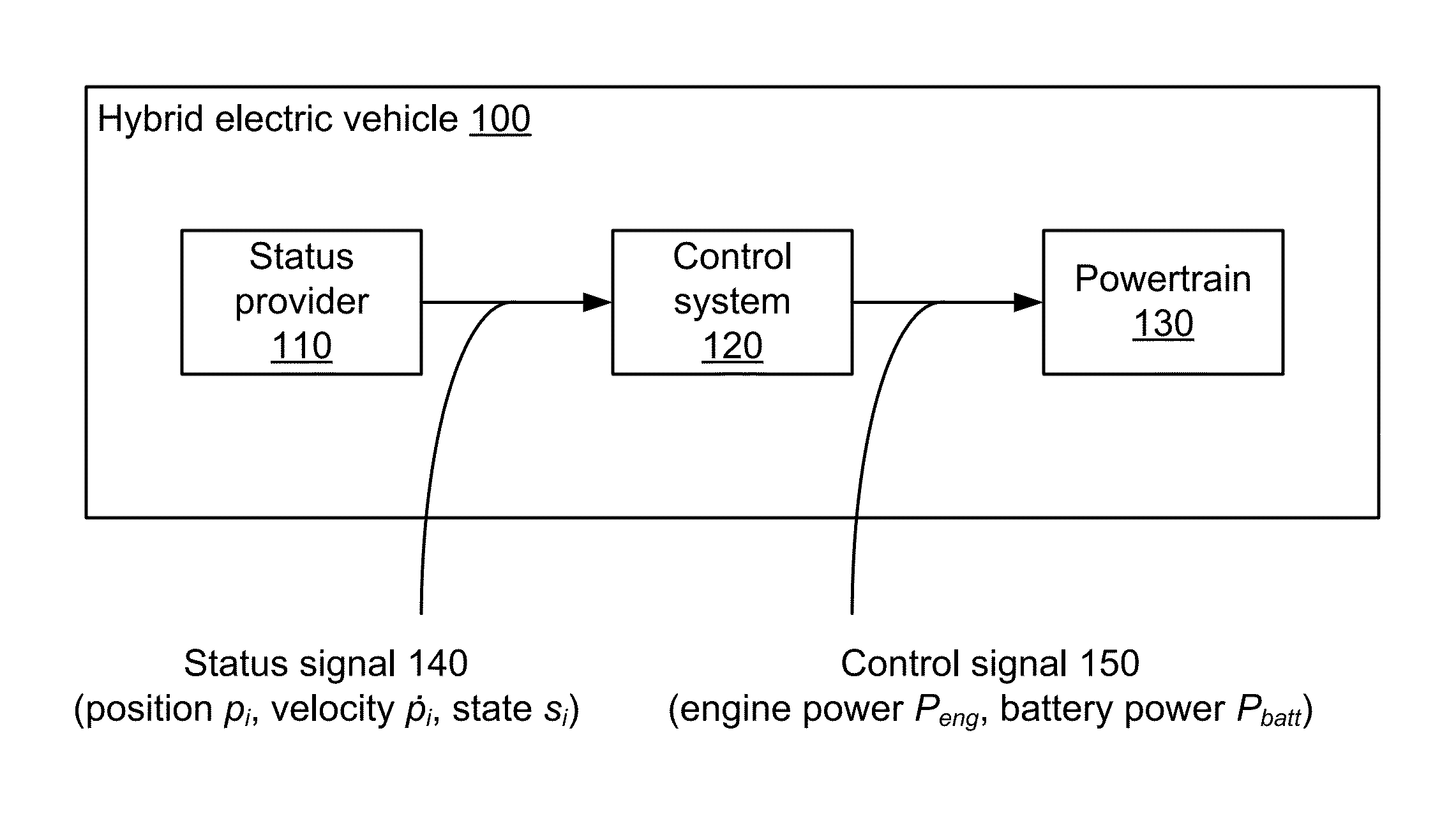

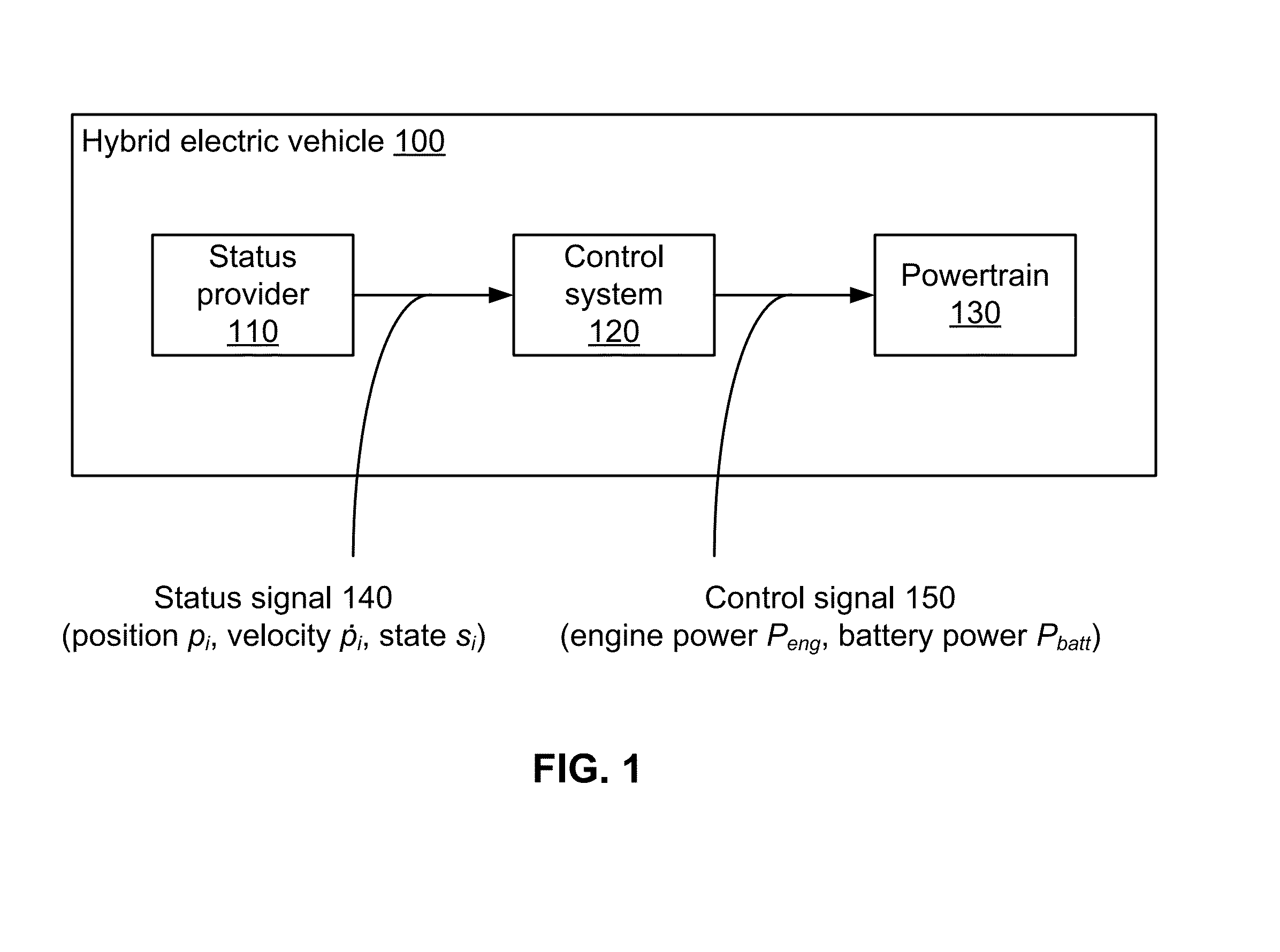

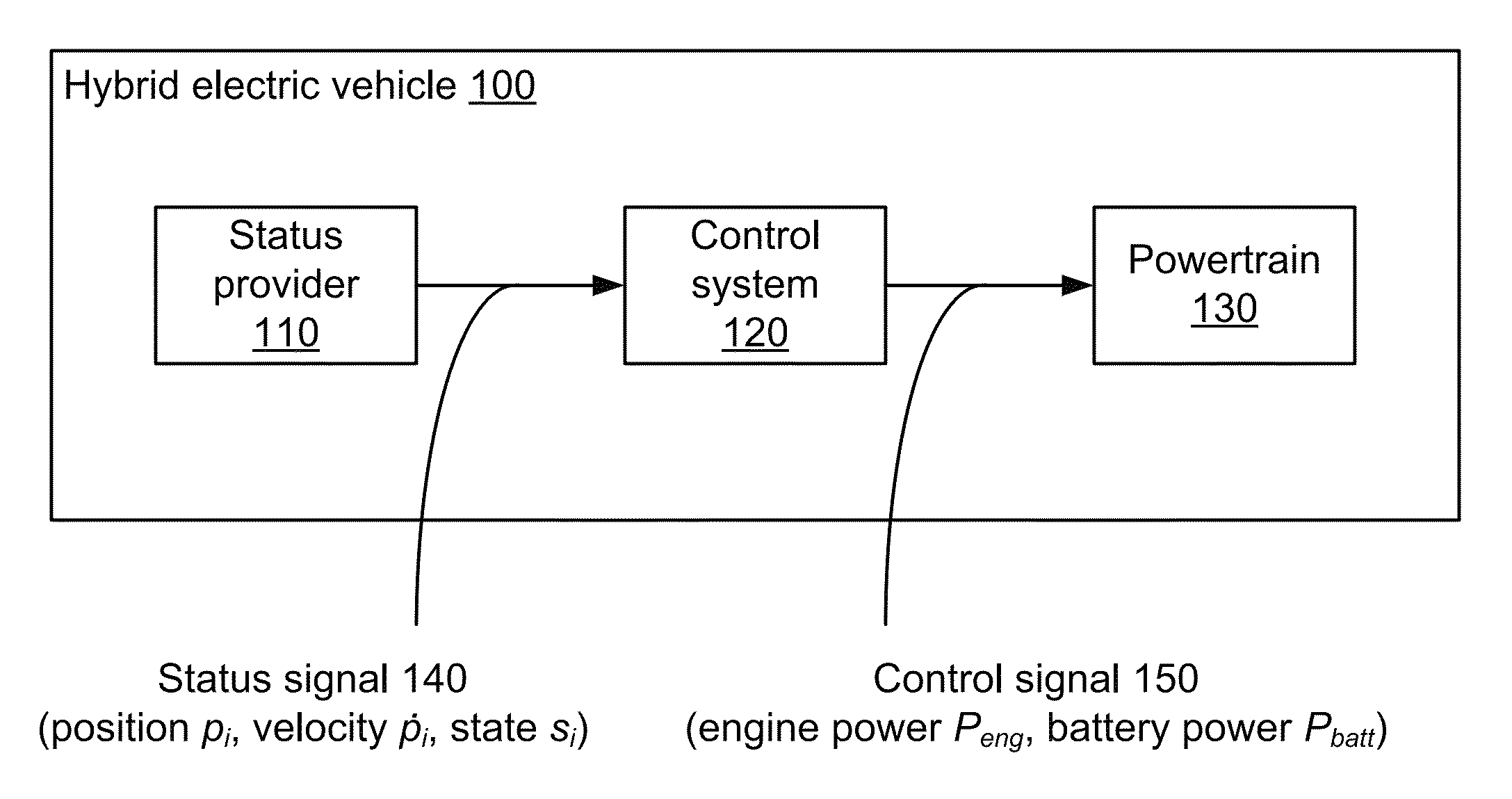

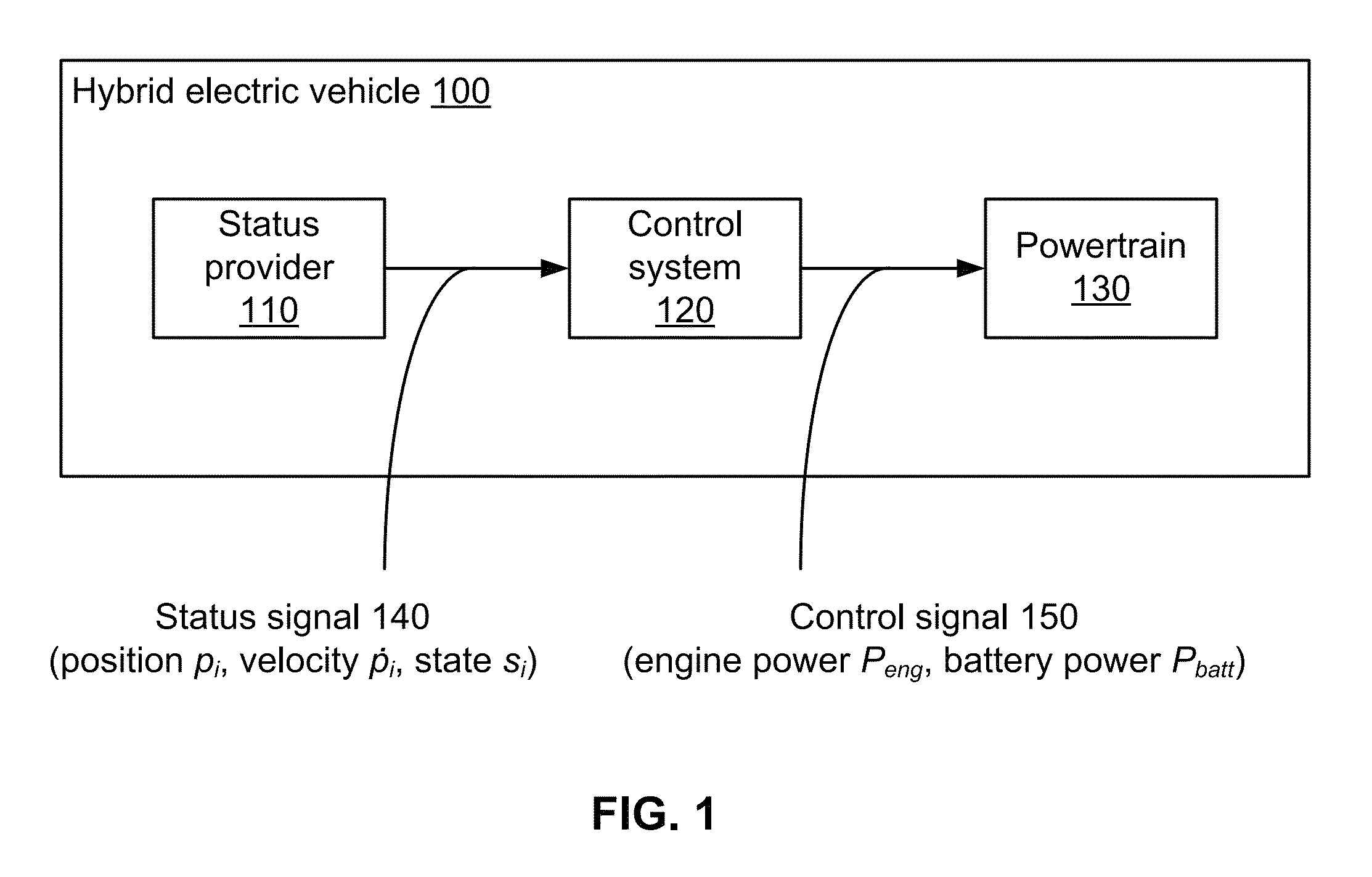

Hybrid Vehicle Fuel Efficiency Using Inverse Reinforcement Learning

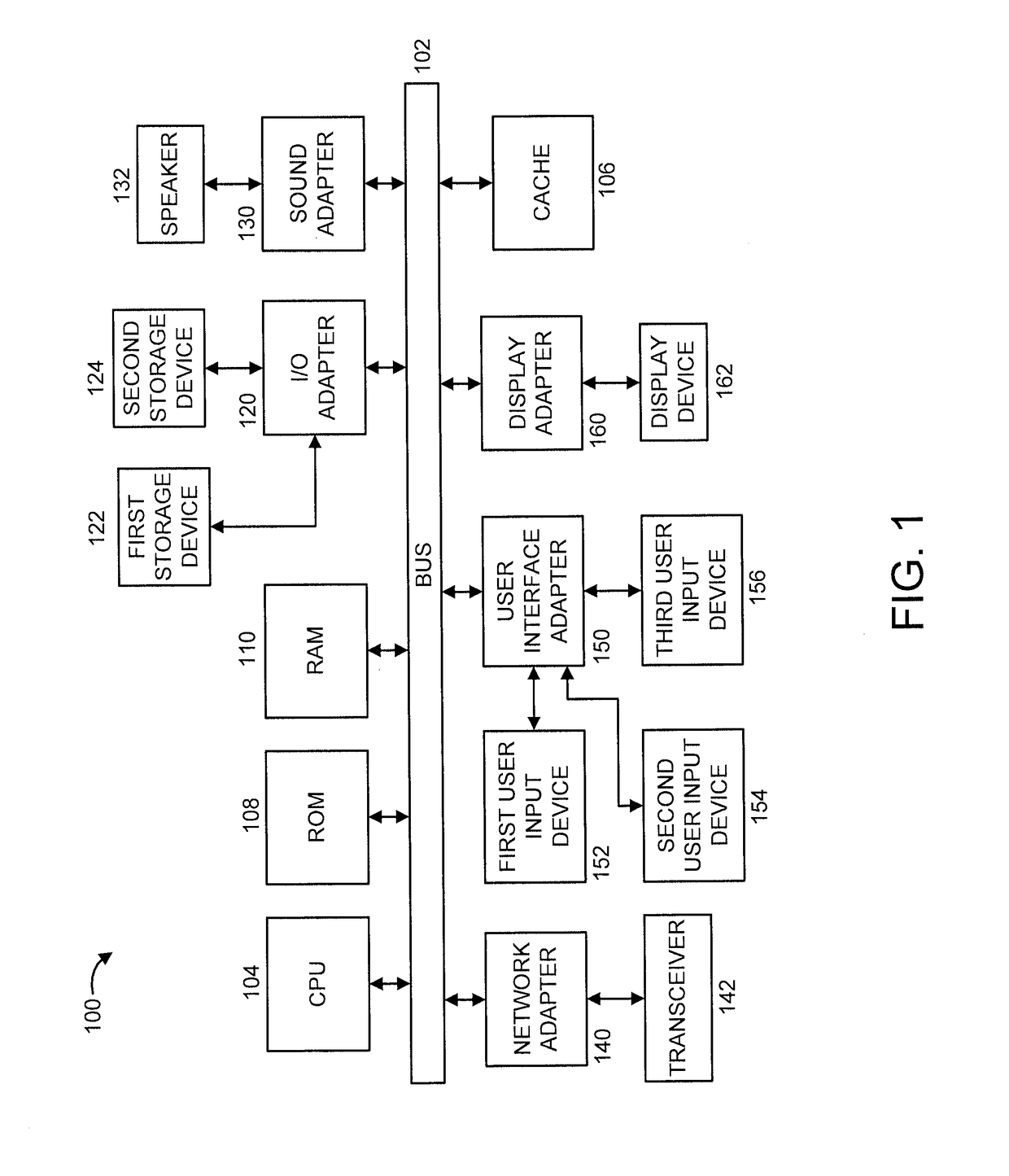

Inactive | US20140018985A1 | Hybrid vehicles | Digital data processing details | Fuel efficiency | Electric vehicle

A powertrain of a hybrid electric vehicle (HEV) is controlled. A first value α1 and a second value α2 are determined. α1 represents a proportion of an instantaneous power requirement (Preq) supplied by an engine of the HEV. α2 controls a recharging rate of a battery of the HEV. A determination is performed, based on α1 and α2, regarding how much engine power to use (Peng) and how much battery power to use (Pbatt). Peng and Pbatt are sent to the powertrain.

Owner:HONDA MOTOR CO LTD
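As an illustration of the abstract above, the following minimal sketch shows one way two such values could map a power request onto engine and battery power. The formula, the function name `split_power`, and the `p_charge_max` parameter are assumptions made for illustration; the abstract does not state how Peng and Pbatt are computed from α1 and α2.

```python
from dataclasses import dataclass


@dataclass
class PowerSplit:
    p_eng: float   # engine power (kW)
    p_batt: float  # battery power (kW); negative means the battery is being charged


def split_power(p_req: float, alpha1: float, alpha2: float,
                p_charge_max: float = 10.0) -> PowerSplit:
    """Hypothetical power split (an assumption, not the patented formula).

    alpha1: fraction of the instantaneous request p_req supplied by the engine.
    alpha2: fraction of an assumed maximum charging power p_charge_max that the
            engine produces on top of its share, to recharge the battery.
    """
    if not (0.0 <= alpha1 <= 1.0 and 0.0 <= alpha2 <= 1.0):
        raise ValueError("alpha1 and alpha2 are assumed to lie in [0, 1]")
    p_eng = alpha1 * p_req + alpha2 * p_charge_max
    # The battery covers whatever the engine does not; surplus engine power charges it.
    p_batt = p_req - p_eng
    return PowerSplit(p_eng=p_eng, p_batt=p_batt)
```

Under these assumptions, `split_power(40.0, 0.5, 0.0)` splits the request evenly between engine and battery, while `split_power(40.0, 1.0, 0.5)` has the engine cover the full request and send the surplus to the battery.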

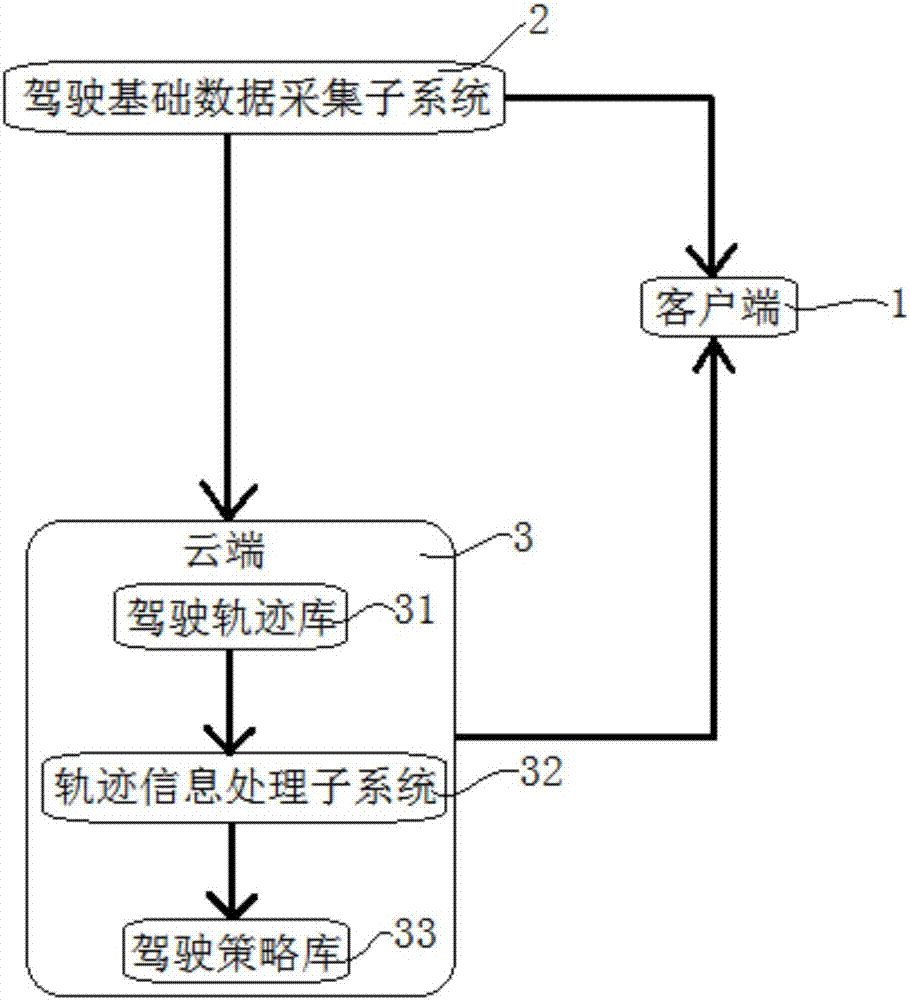

Automatic driving system and method based on relative-entropy deep and inverse reinforcement learning

Inactive | CN107544516A | Realize autonomous driving | Personalized autopilot | Position/course control in two dimensions | Reinforcement learning algorithm | Data mining

The invention relates to an automatic driving system based on relative-entropy deep and inverse reinforcement learning. The system comprises a client, a driving basic data collection sub-system and a storage module, wherein the client displays a driving strategy; the driving basic data collection sub-system collects road information; the storage module is connected with the client and the driving basic data collection sub-system and stores the road information collected by the driving basic data collection sub-system. The driving basic data collection sub-system collects the road information and transmits the road information to the client and the storage module; the storage module receives the road information, stores a piece of continuous road information into a historical route, conducts analysis and calculation according to the historical route so as to simulate the driving strategy, and transmits the driving strategy to the client so that a user can select the driving strategy; the client receives the road information and implements automatic driving according to the selection of the user. In the automatic driving system, the relative-entropy deep and inverse reinforcement learning algorithm is adopted, so that automatic driving under the model-free condition is achieved.

Owner:POLIXIR TECH LTD

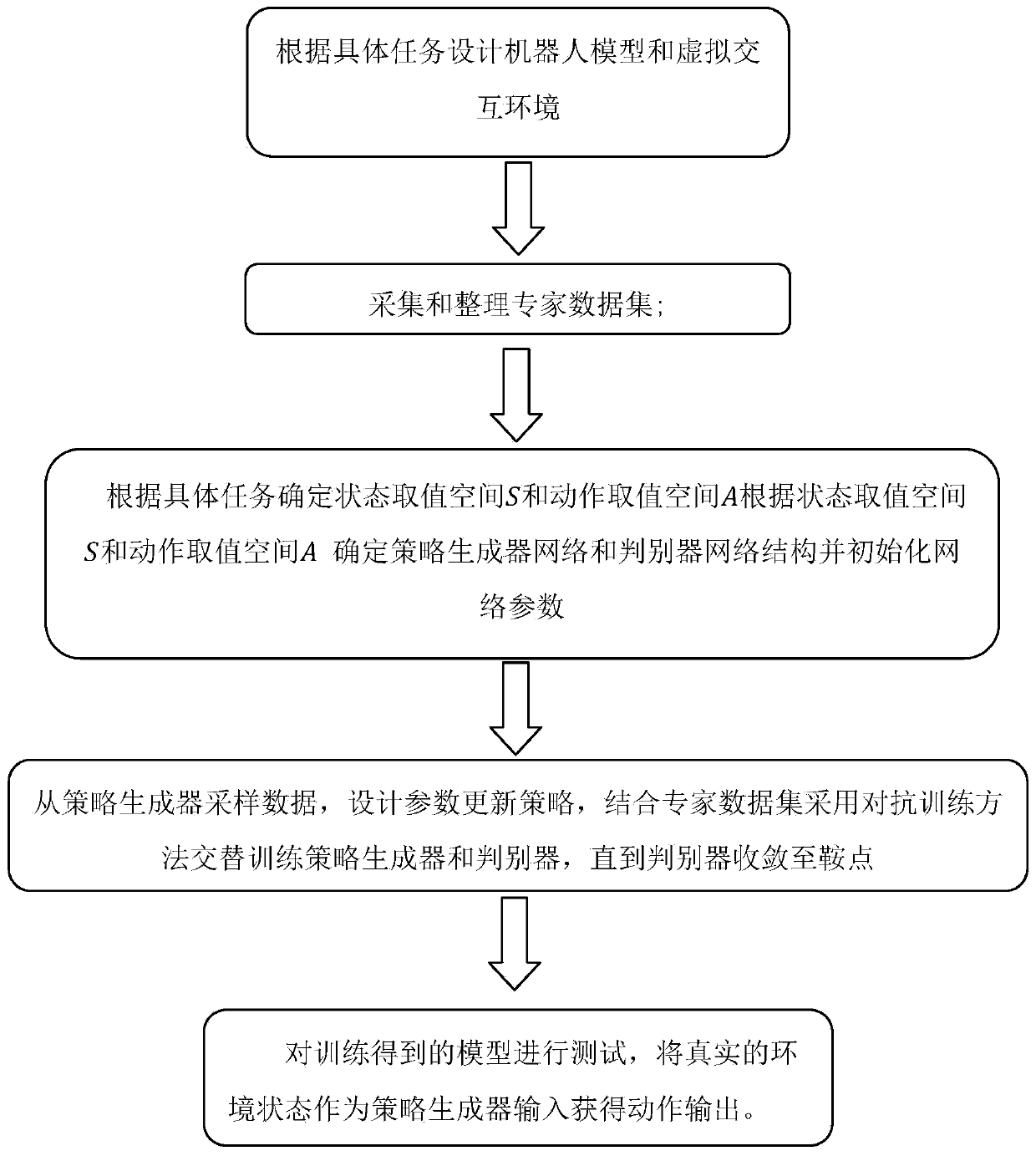

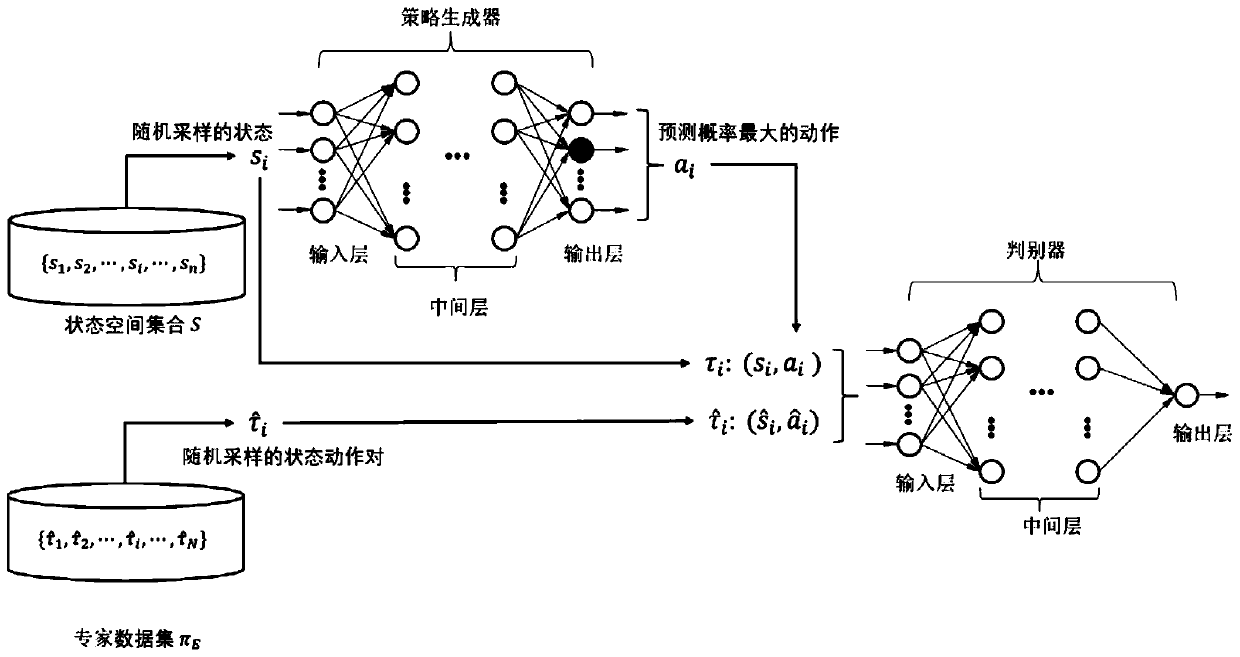

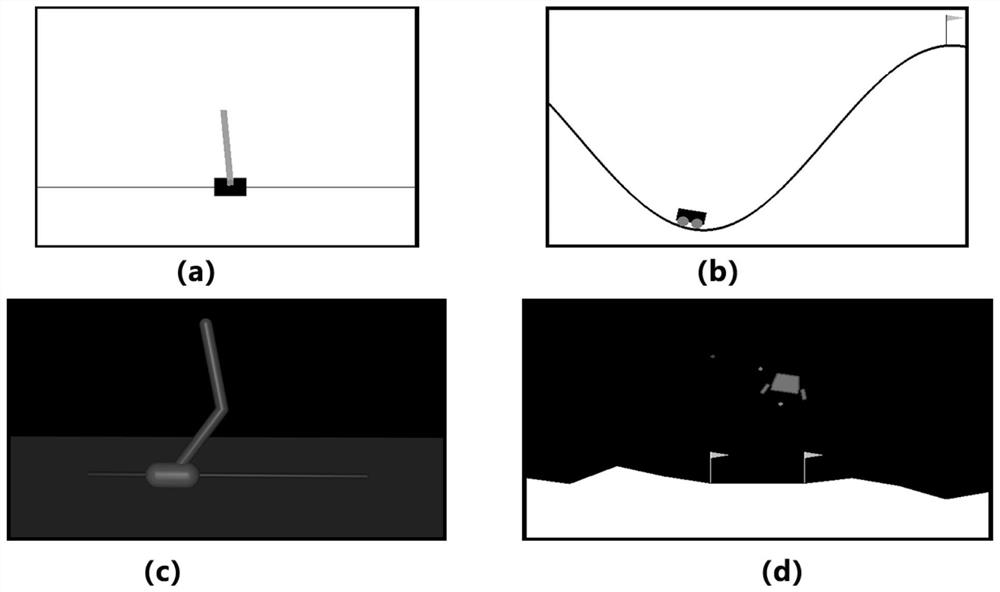

Robot imitation learning method based on virtual scene training

Pending | CN110991027A | Training model fast | Late migration is fast | Artificial life | Design optimisation/simulation | Data set | Algorithm

The invention discloses a robot imitation learning method based on virtual scene training. The method comprises the following steps: designing a robot model and a virtual interaction environment according to a specific task; collecting and arranging an expert data set; determining a state value space S and an action value space A according to the specific task, and determining structures of the network of a strategy generator and the network of a discriminator according to the state value space S and the action value space A; sampling data from the strategy generator, designing a parameter updating strategy, and alternately training the strategy generator and the discriminator by combining the expert data set and adopting an adversarial training method until the discriminator converges to a saddle point; and testing a network model composed of the strategy generator and the discriminator obtained by training, and taking a real environment state as input of the strategy generator so as to obtain action output. According to the method, a value return function is judged and learned; a large number of complex intermediate steps of inverse reinforcement learning with high calculation amount are bypassed; and the learning process is simpler and more efficient.

Owner:SOUTH CHINA UNIV OF TECH

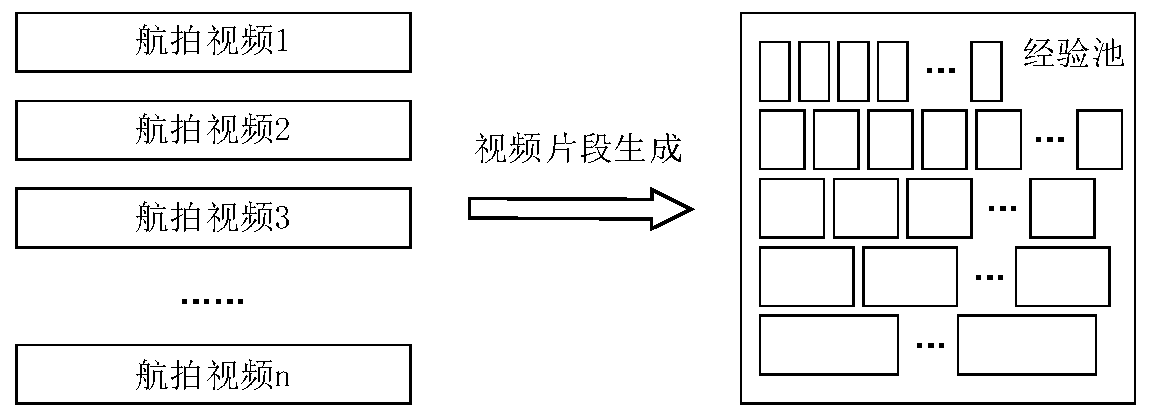

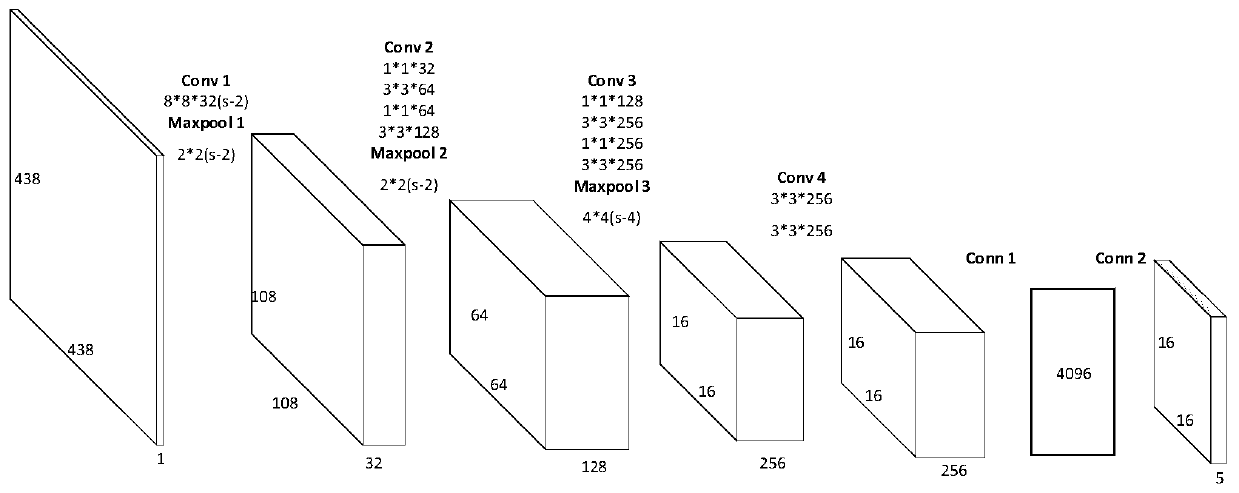

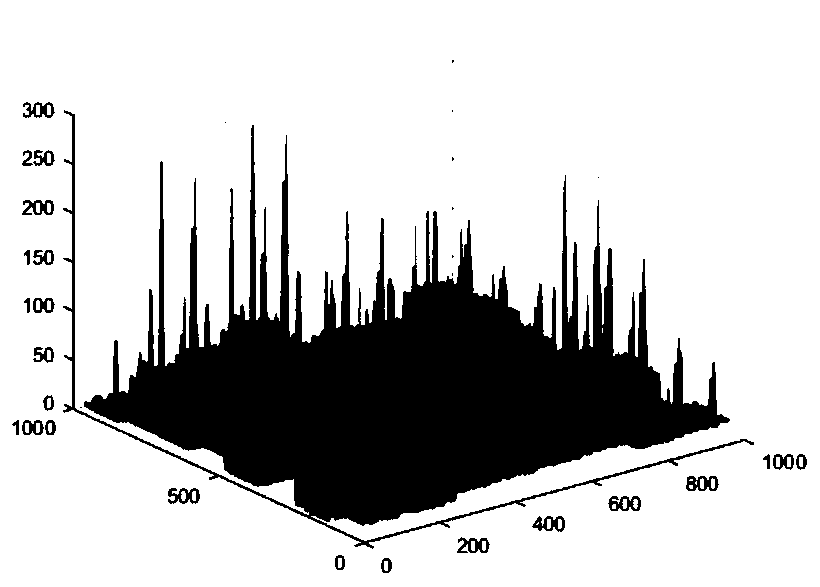

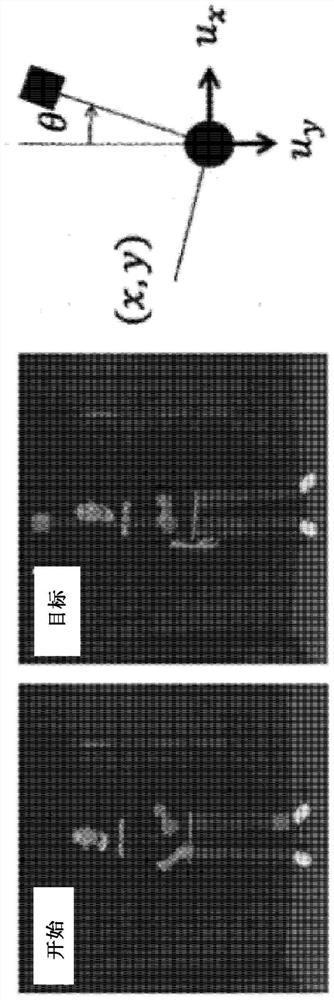

Deep inverse reinforcement learning-based target detection method in unmanned aerial vehicle aerial video

Active | CN110321811A | Meet the detection speed requirements | Ensure monotonicity | Internal combustion piston engines | Scene recognition | Learning based | Aerial video

The invention relates to a moving target detection technology, in particular to a deep inverse reinforcement learning-based target detection method in an unmanned aerial vehicle aerial video, which is characterized by at least comprising the following steps: 1, establishing a deep inverse reinforcement learning model; 2, performing model strategy iteration and algorithm implementation; 3, selecting and optimizing key parameters of the model; and 4, outputting a moving small target detection result. According to the target detection method in the unmanned aerial vehicle aerial video, complex tasks can be solved, and delayed reward returns can be avoided.

Owner:INST OF ELECTRONICS ENG CHINA ACAD OF ENG PHYSICS +1

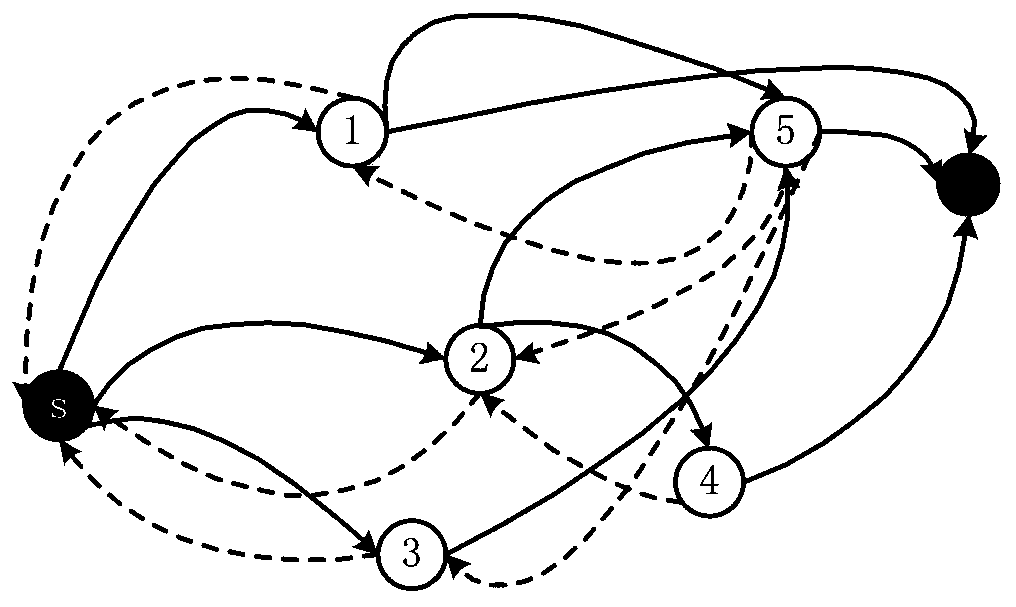

New rule creation using MDP and inverse reinforcement learning

Active | US20180293514A1 | Mathematical models | Autonomous decision making process | State dependent | Inverse reinforcement learning

A method is provided for rule creation that includes receiving (i) an MDP model with a set of states, a set of actions, and a set of transition probabilities, (ii) a policy that corresponds to rules for a rule engine, and (iii) a set of candidate states that can be added to the set of states. The method includes transforming the MDP model to include a reward function using an inverse reinforcement learning process on the MDP model and on the policy. The method includes finding a state from the candidate states, and generating a refined MDP model with the reward function by updating the transition probabilities related to the state. The method includes obtaining an optimal policy for the refined MDP model with the reward function, based on the reward policy, the state, and the updated probabilities. The method includes updating the rule engine based on the optimal policy.

Owner:IBM CORP
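The abstract above ends by obtaining an optimal policy for an MDP once a reward function is available. A minimal sketch of that last step, using standard value iteration on a small tabular MDP, is shown below. The array layout (`P[a, s, s']`, state-only reward `R[s]`), the discount factor, and the function name are assumptions for illustration; the abstract does not say which solver is used.

```python
import numpy as np


def value_iteration(P, R, gamma=0.9, tol=1e-8, max_iter=10_000):
    """Standard value iteration on a tabular MDP.

    P: array of shape (A, S, S), P[a, s, s2] = probability of moving s -> s2 under action a.
    R: array of shape (S,), reward received in each state (assumed state-only).
    Returns the value function V (S,) and a greedy policy (S,) of action indices.
    """
    V = np.zeros(R.shape[0])
    for _ in range(max_iter):
        Q = R[None, :] + gamma * (P @ V)   # Q[a, s], shape (A, S)
        V_new = Q.max(axis=0)
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    Q = R[None, :] + gamma * (P @ V)
    return V, Q.argmax(axis=0)
```

On a three-state chain where action 0 stays in place, action 1 moves right, and only the last state is rewarded, the greedy policy moves right until the rewarded state is reached.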

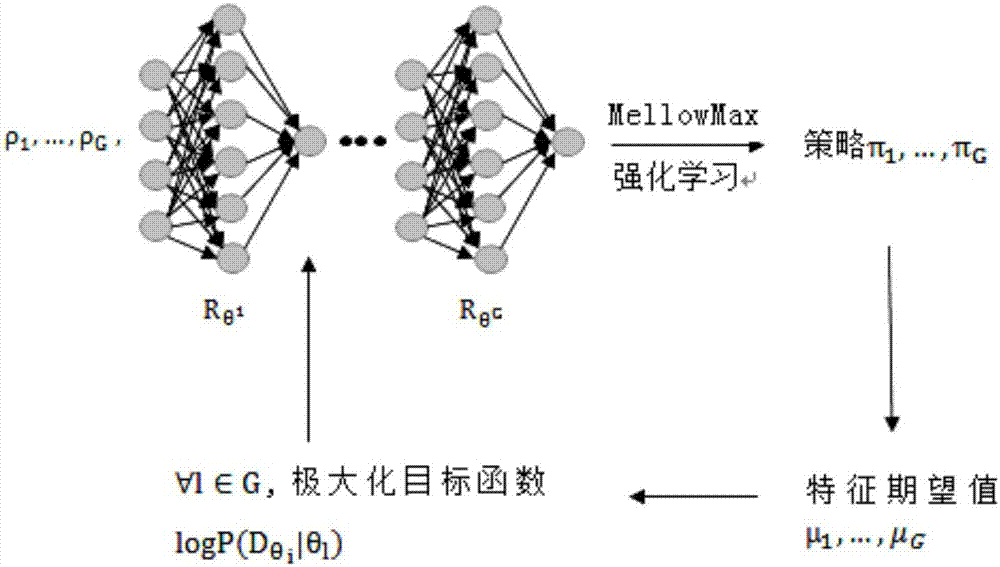

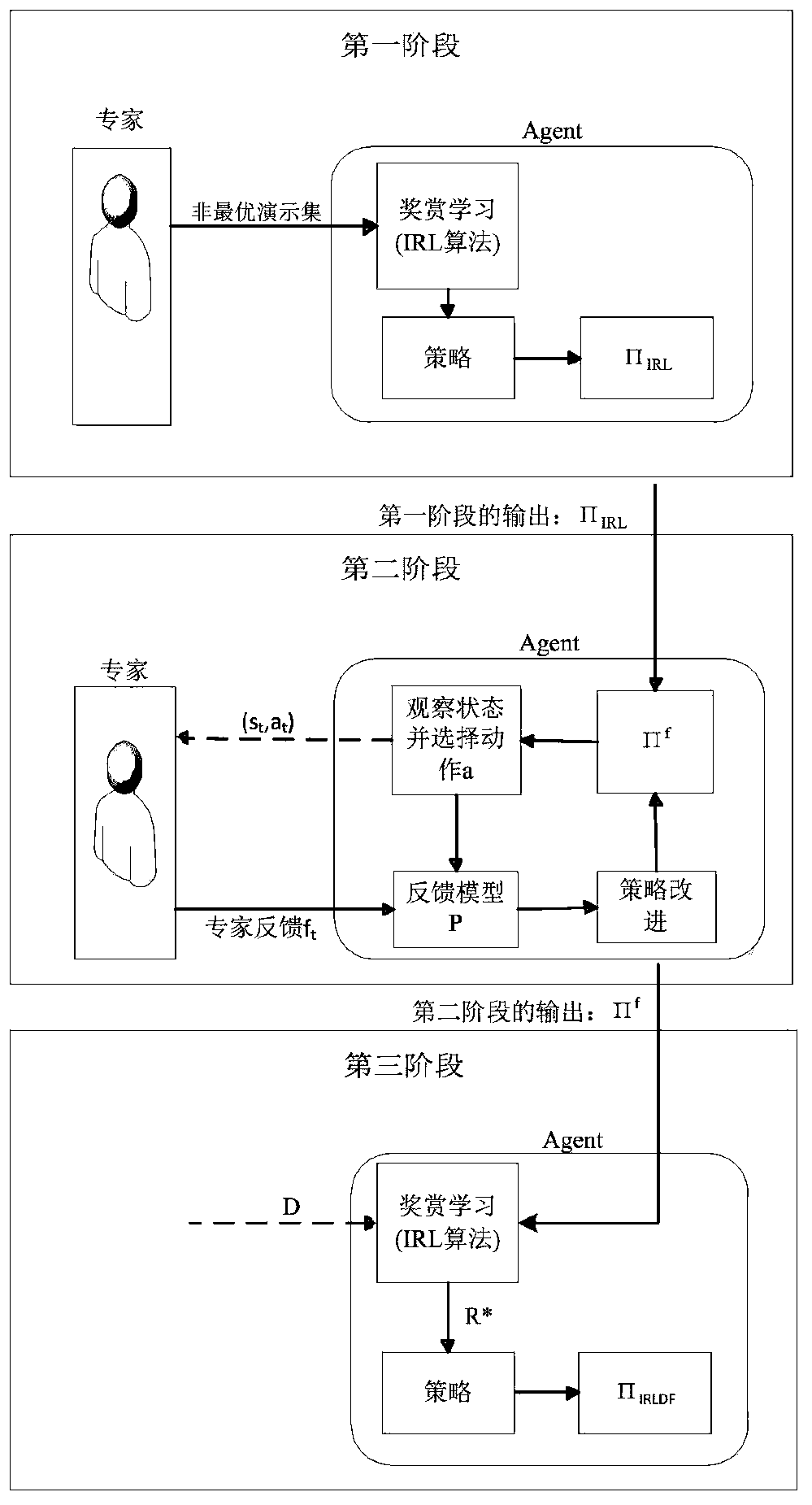

Improved Bayesian inverse reinforcement learning method based on combined feedback

Inactive | CN109978012A | The result is obvious | Computing models | Character and pattern recognition | Study methods | It policy

The invention discloses an improved Bayesian inverse reinforcement learning method based on combined feedback, providing an interactive learning method that combines expert feedback and demonstration. In learning from feedback (LfF), an expert evaluates the behavior of a learner and gives feedback with different rewards so as to improve the strategy of the learner. In learning from demonstration (LfD), the agent attempts to learn its policy by observing expert demonstrations. The algorithm is divided into three learning stages: learning from non-optimal demonstrations; learning from feedback; and learning from demonstration and feedback together. To reduce the number of states and actions requiring iteration, the agent's strategy is improved through iteration of the graphical Bayesian rule to enhance the learned reward function, which increases the speed of finding the optimal action.

Owner:BEIJING UNIV OF TECH

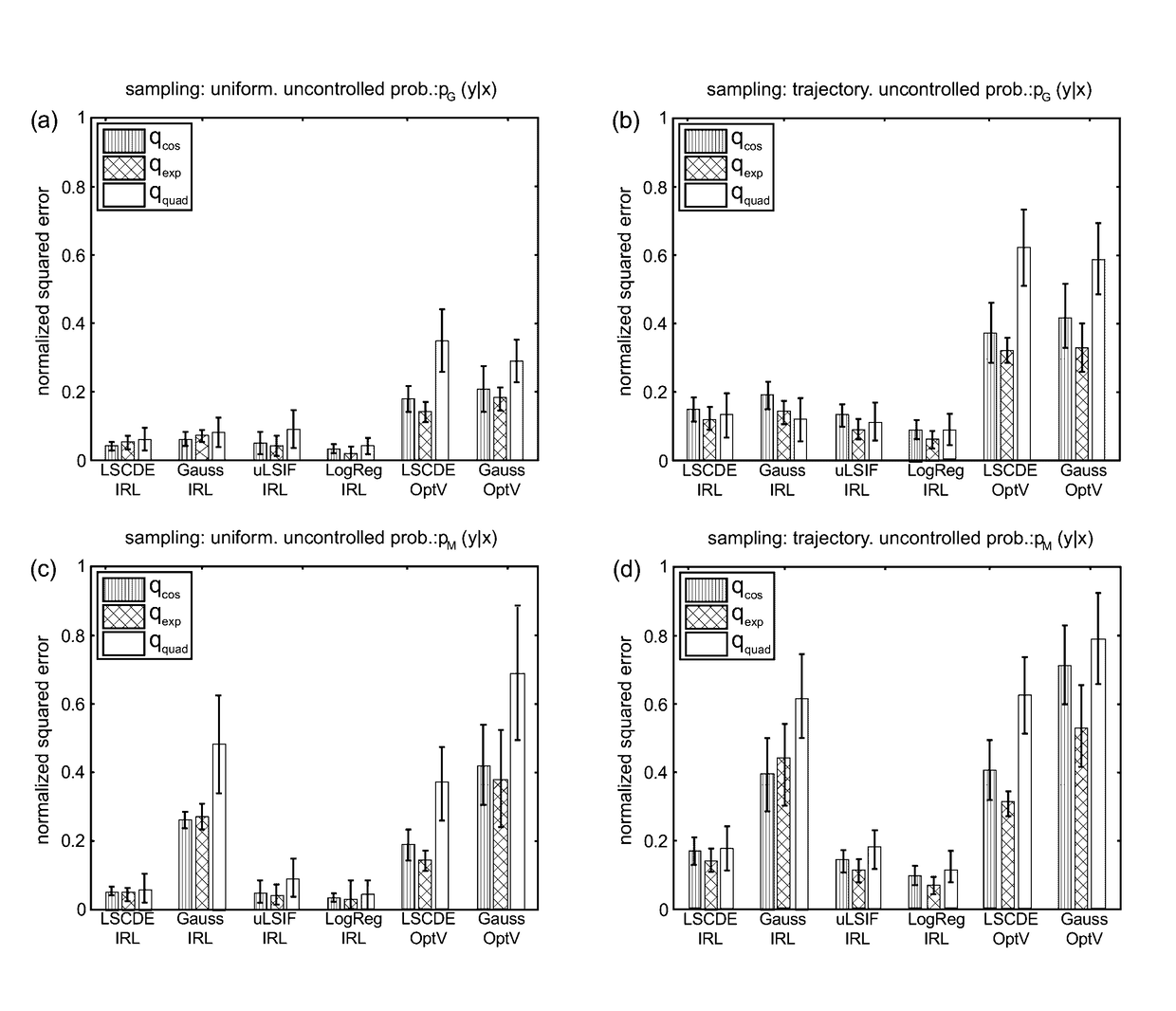

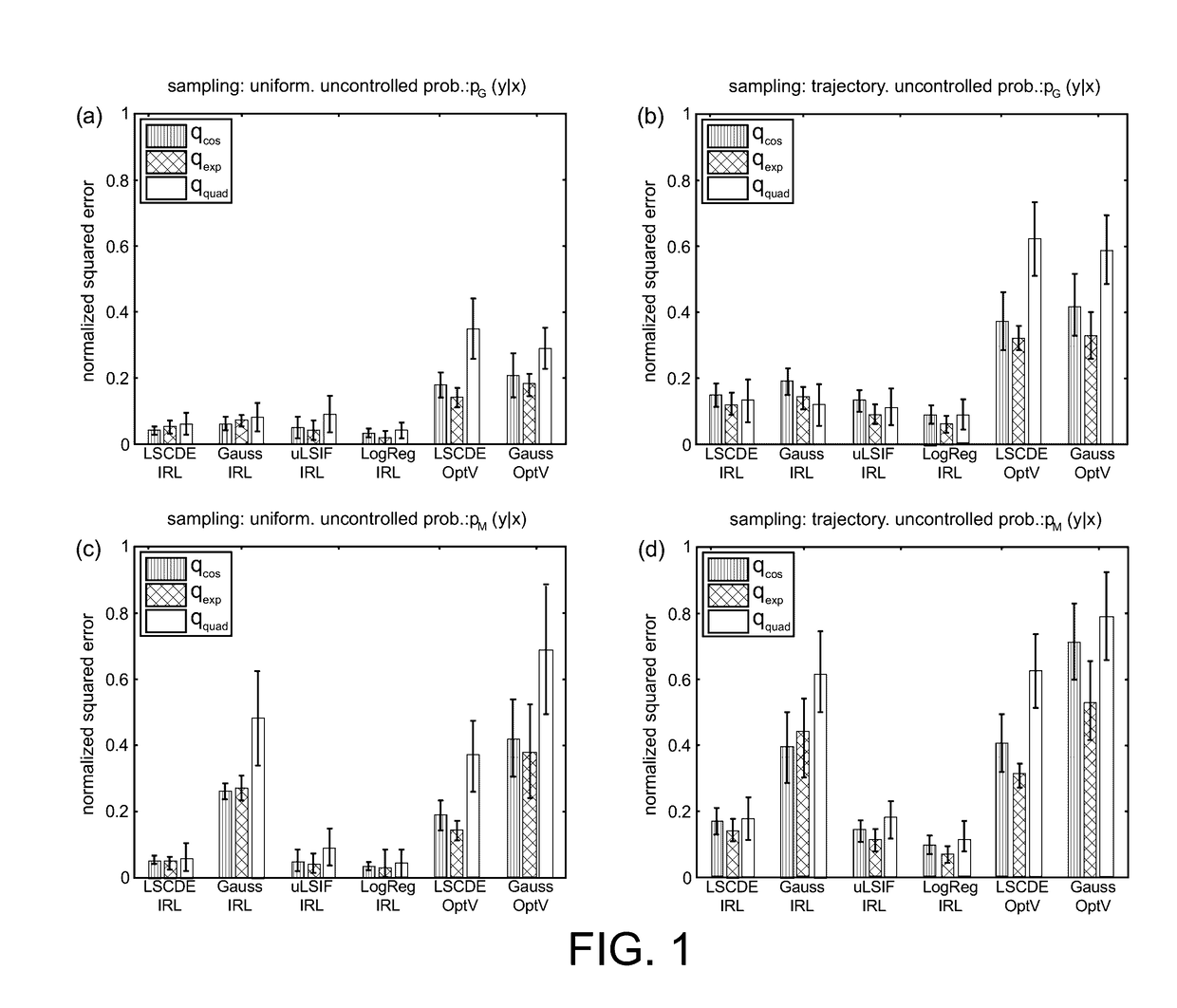

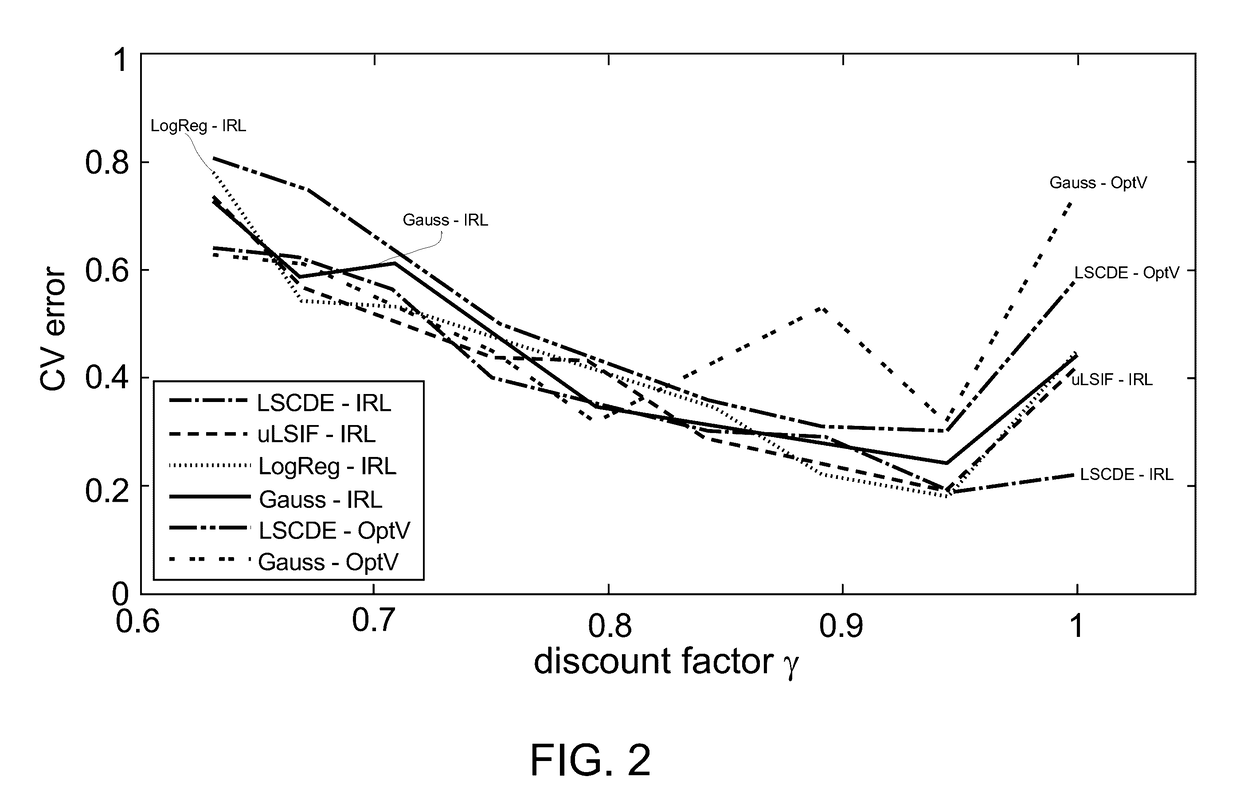

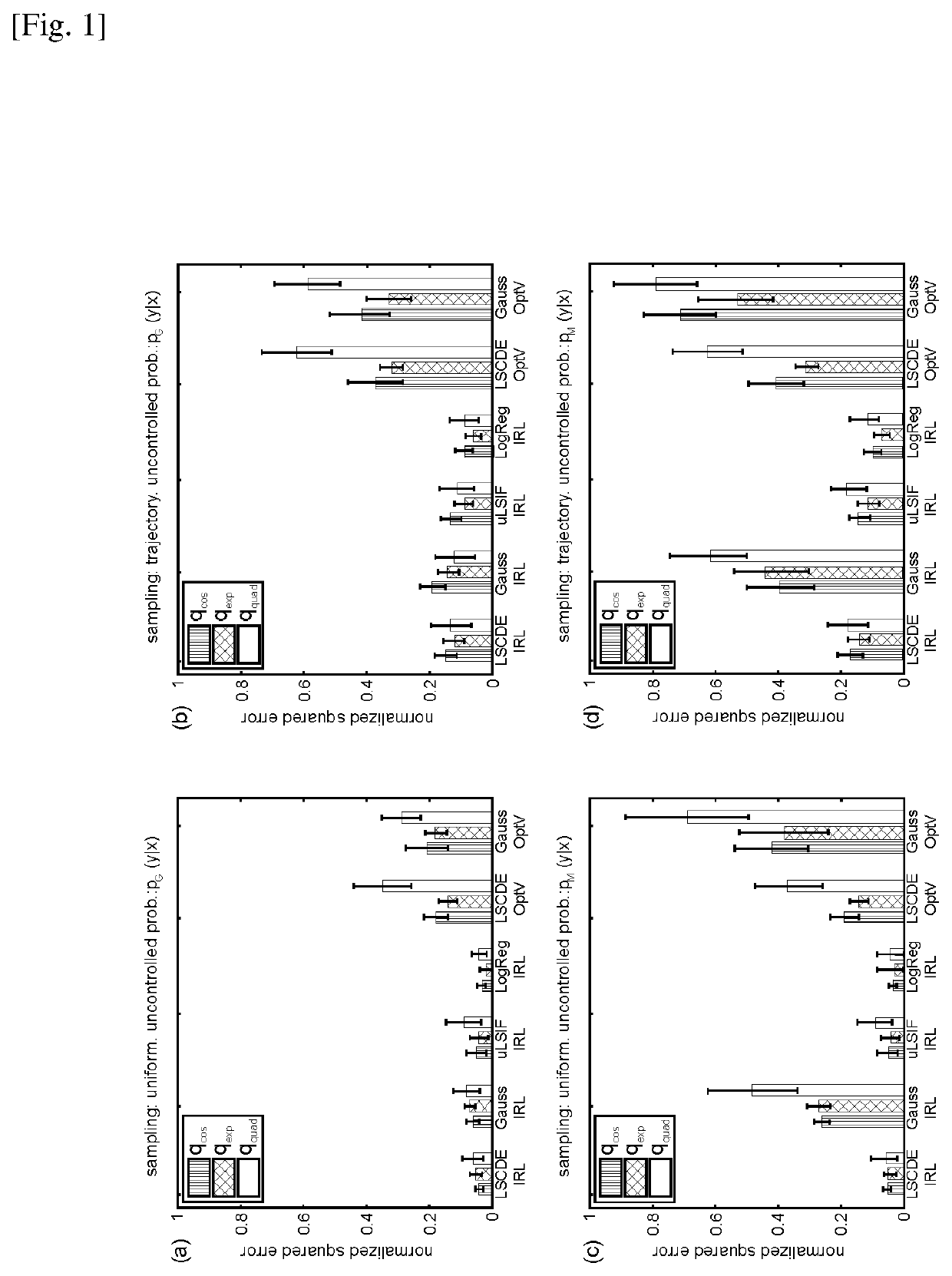

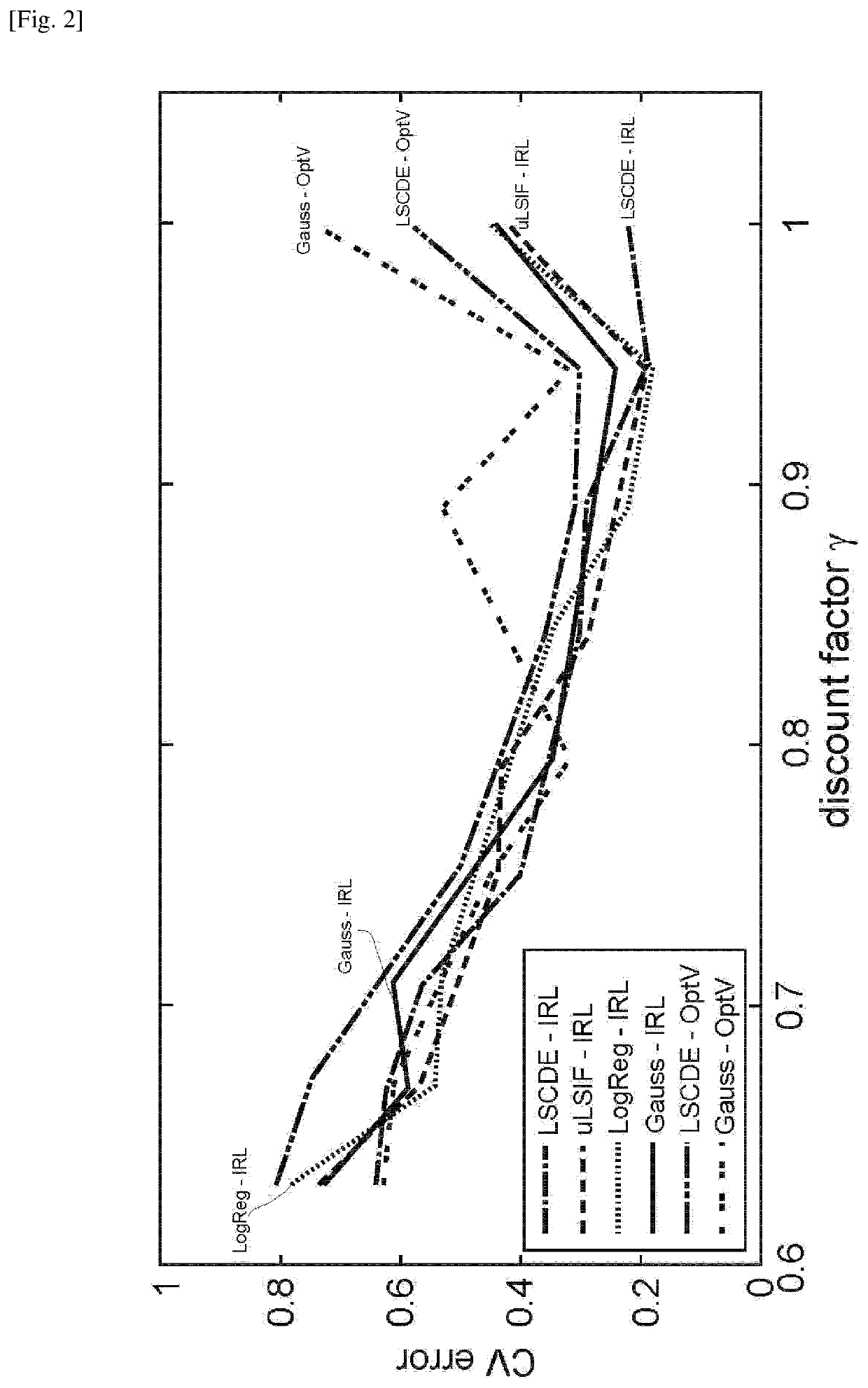

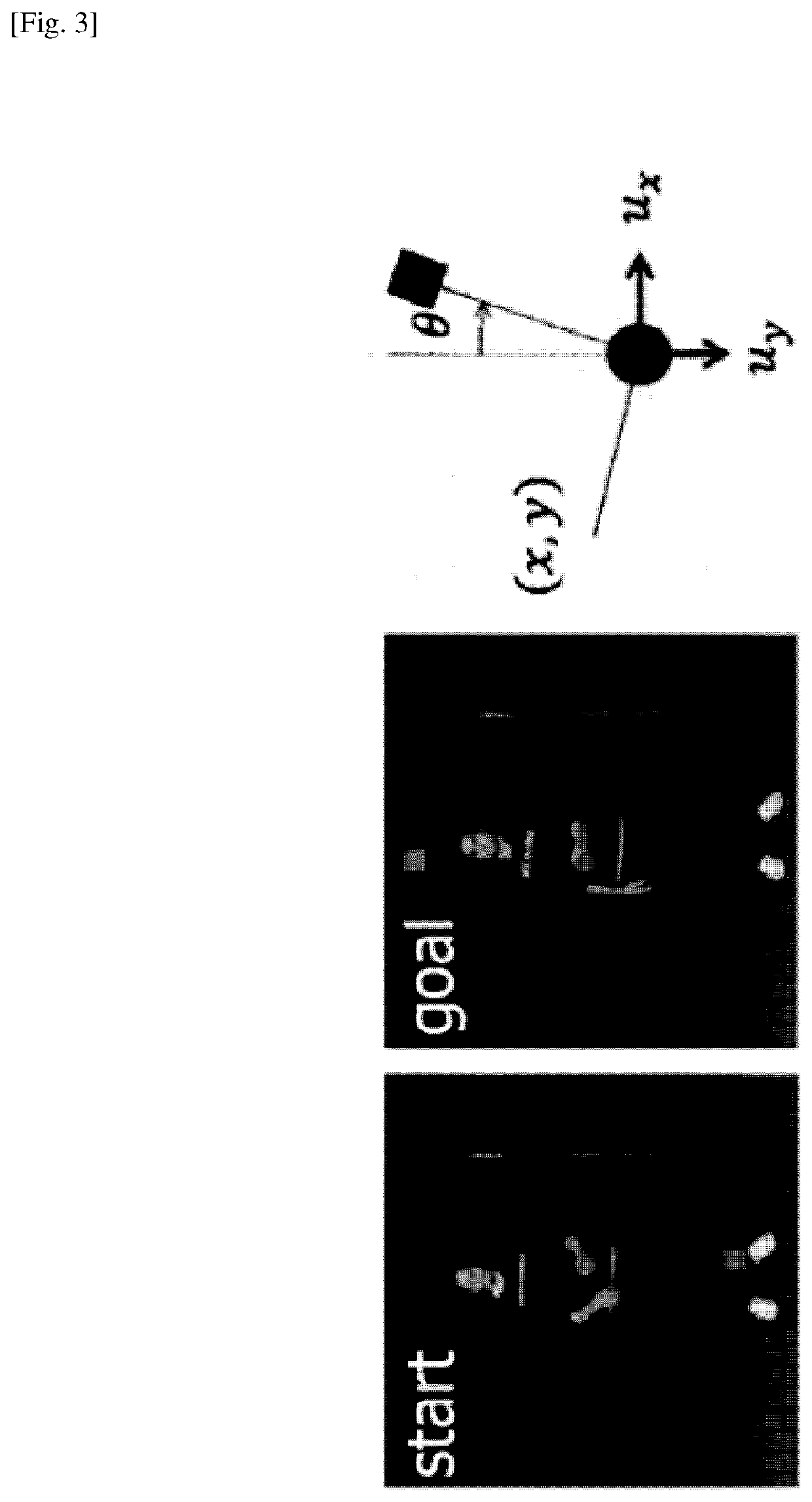

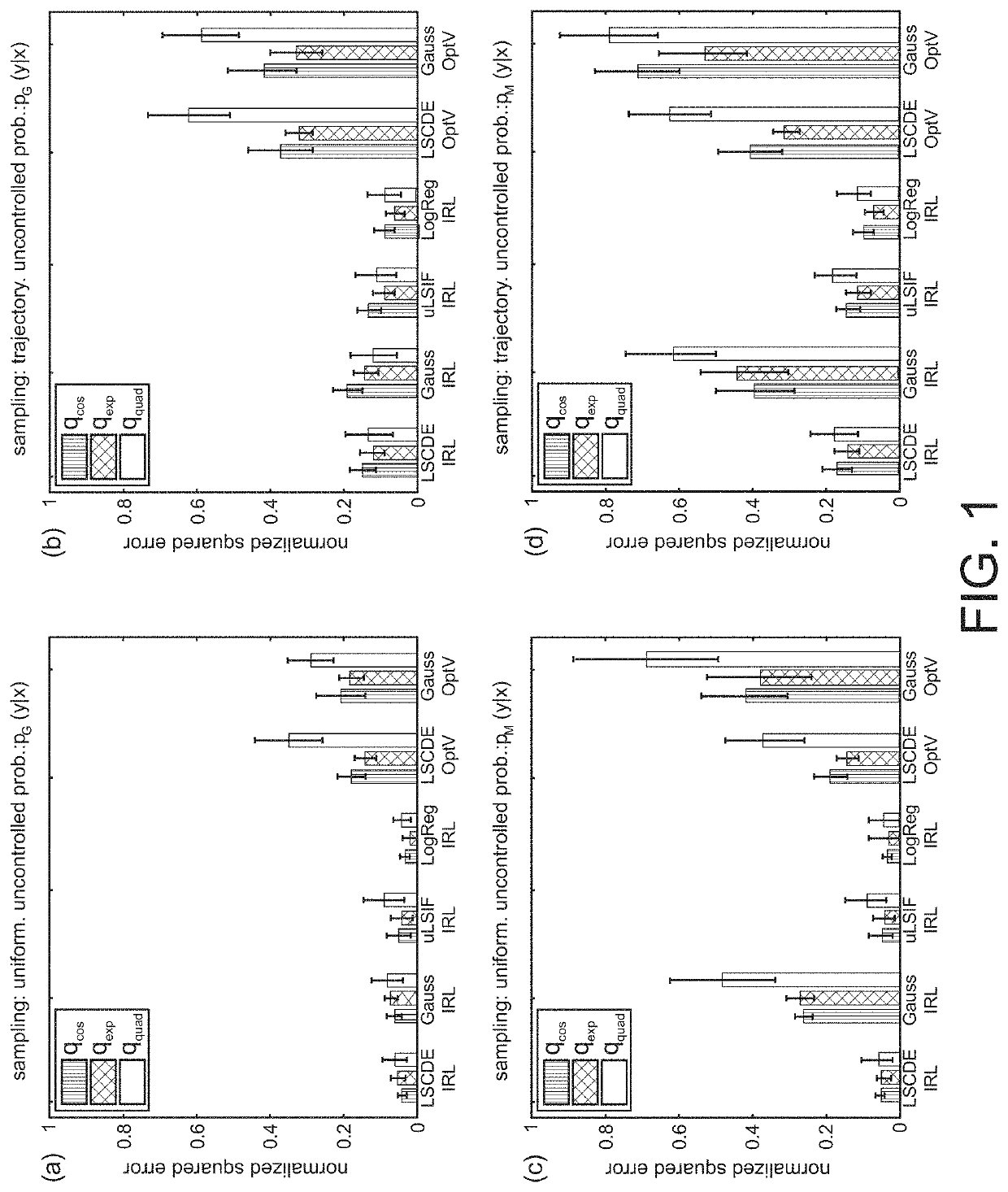

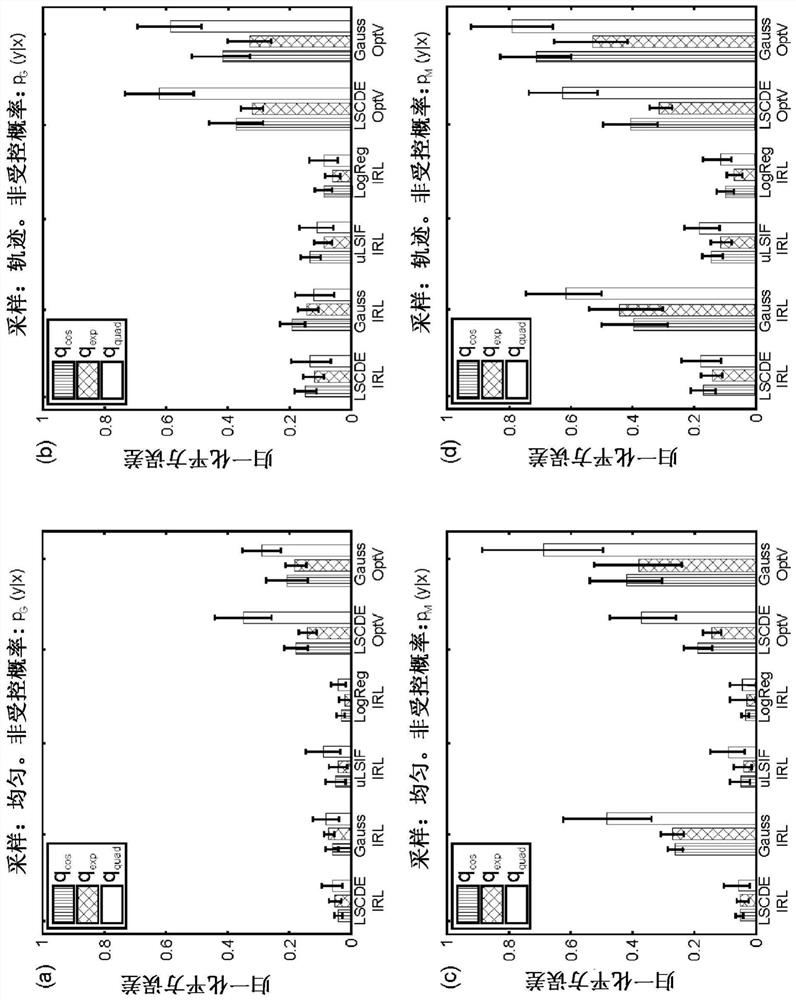

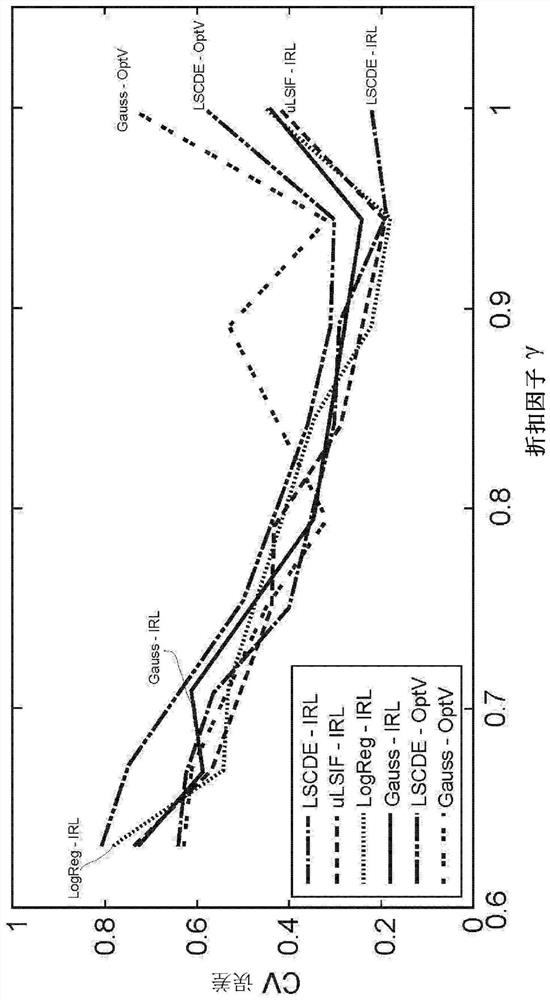

Direct inverse reinforcement learning with density ratio estimation

Active | US20170147949A1 | Efficient execution | Effectively and efficiently perform | Probabilistic networks | Machine learning | Density ratio estimation | State variable

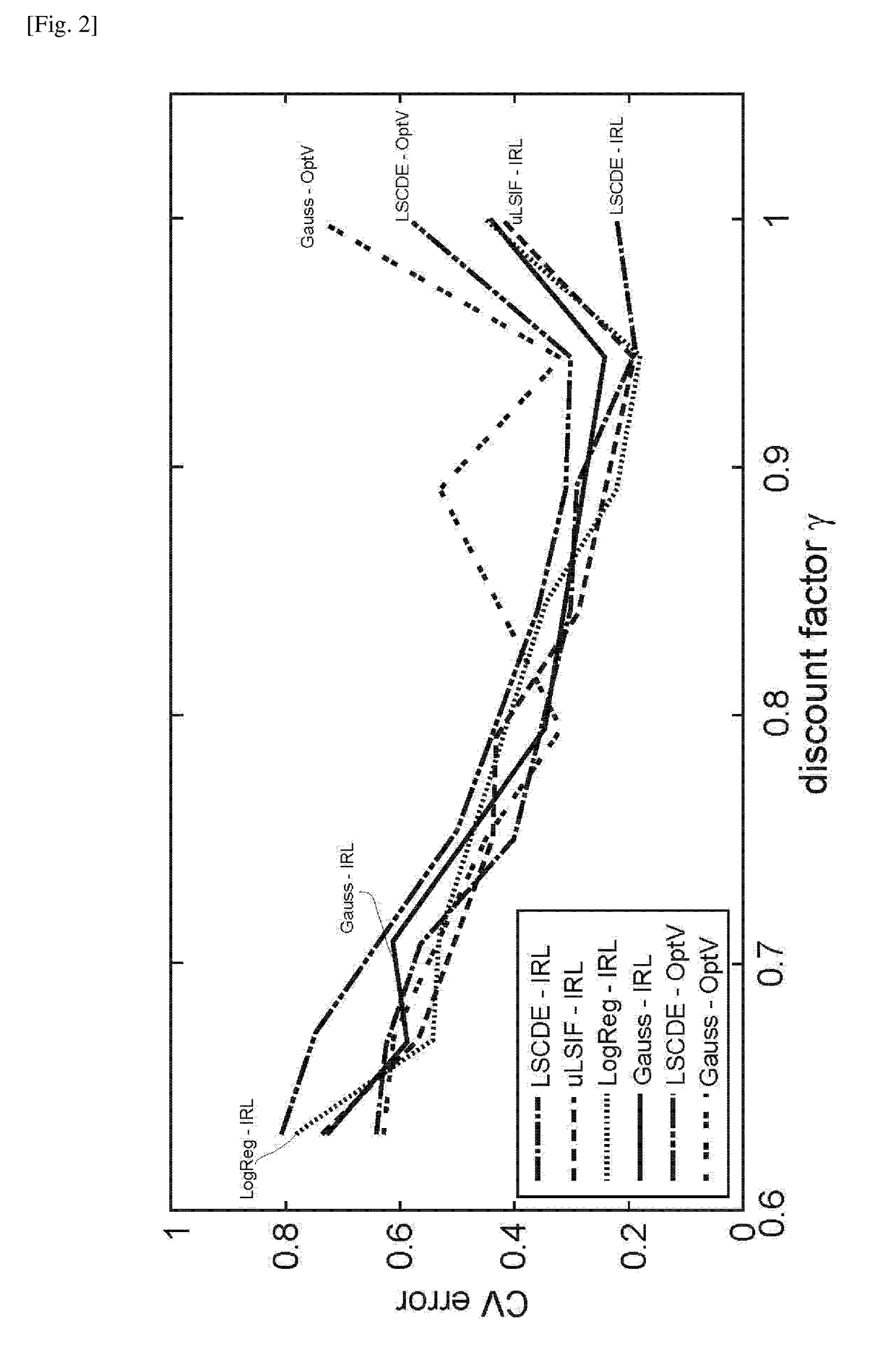

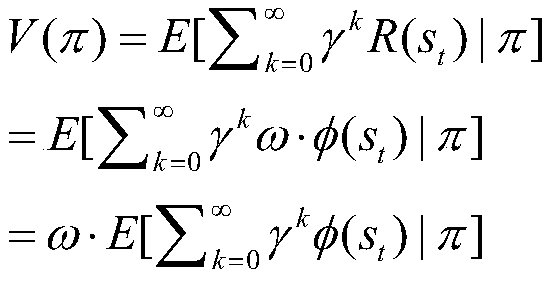

A method of inverse reinforcement learning for estimating reward and value functions of behaviors of a subject includes: acquiring data representing changes in state variables that define the behaviors of the subject; applying a modified Bellman equation given by Eq. (1) to the acquired data:

$$
\begin{aligned}
r(x) + \gamma V(y) - V(x) &= \ln\frac{\pi(y \mid x)}{b(y \mid x)} && (1) \\
&= \ln\frac{\pi(x, y)}{b(x, y)} - \ln\frac{\pi(x)}{b(x)}, && (2)
\end{aligned}
$$

where r(x) and V(x) denote a reward function and a value function, respectively, at state x, and γ represents a discount factor, and b(y|x) and π(y|x) denote state transition probabilities before and after learning, respectively; estimating a logarithm of the density ratio π(x) / b(x) in Eq. (2); estimating r(x) and V(x) in Eq. (2) from the result of estimating a log of the density ratio π(x,y) / b(x,y); and outputting the estimated r(x) and V(x).

Owner:OKINAWA INST OF SCI & TECH SCHOOL
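The step from Eq. (1) to Eq. (2) in the abstract above is not spelled out. A short derivation follows, assuming π(x, y) and b(x, y) denote joint densities over a state x and its successor y, and π(x) and b(x) the corresponding marginals. This reading of the notation is an interpretation of the abstract, not a statement taken from the patent text.

```latex
\begin{aligned}
\pi(y \mid x) &= \frac{\pi(x, y)}{\pi(x)}, \qquad
b(y \mid x) = \frac{b(x, y)}{b(x)} \\[4pt]
\ln\frac{\pi(y \mid x)}{b(y \mid x)}
  &= \ln\!\left(\frac{\pi(x, y)}{\pi(x)} \cdot \frac{b(x)}{b(x, y)}\right) \\
  &= \ln\frac{\pi(x, y)}{b(x, y)} - \ln\frac{\pi(x)}{b(x)}
\end{aligned}
```

Under this reading, the conditional log-ratio on the right-hand side of Eq. (1) splits into two unconditional log density ratios, each of which can be estimated separately from samples, as the abstract describes.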

New rule creation using MDP and inverse reinforcement learning

Active | US20180293512A1 | Mathematical models | Autonomous decision making process | State dependent | Inverse reinforcement learning

A method is provided for rule creation that includes receiving (i) an MDP model with a set of states, a set of actions, and a set of transition probabilities, (ii) a policy that corresponds to rules for a rule engine, and (iii) a set of candidate states that can be added to the set of states. The method includes transforming the MDP model to include a reward function using an inverse reinforcement learning process on the MDP model and on the policy. The method includes finding a state from the candidate states, and generating a refined MDP model with the reward function by updating the transition probabilities related to the state. The method includes obtaining an optimal policy for the refined MDP model with the reward function, based on the reward policy, the state, and the updated probabilities. The method includes updating the rule engine based on the optimal policy.

Owner:IBM CORP

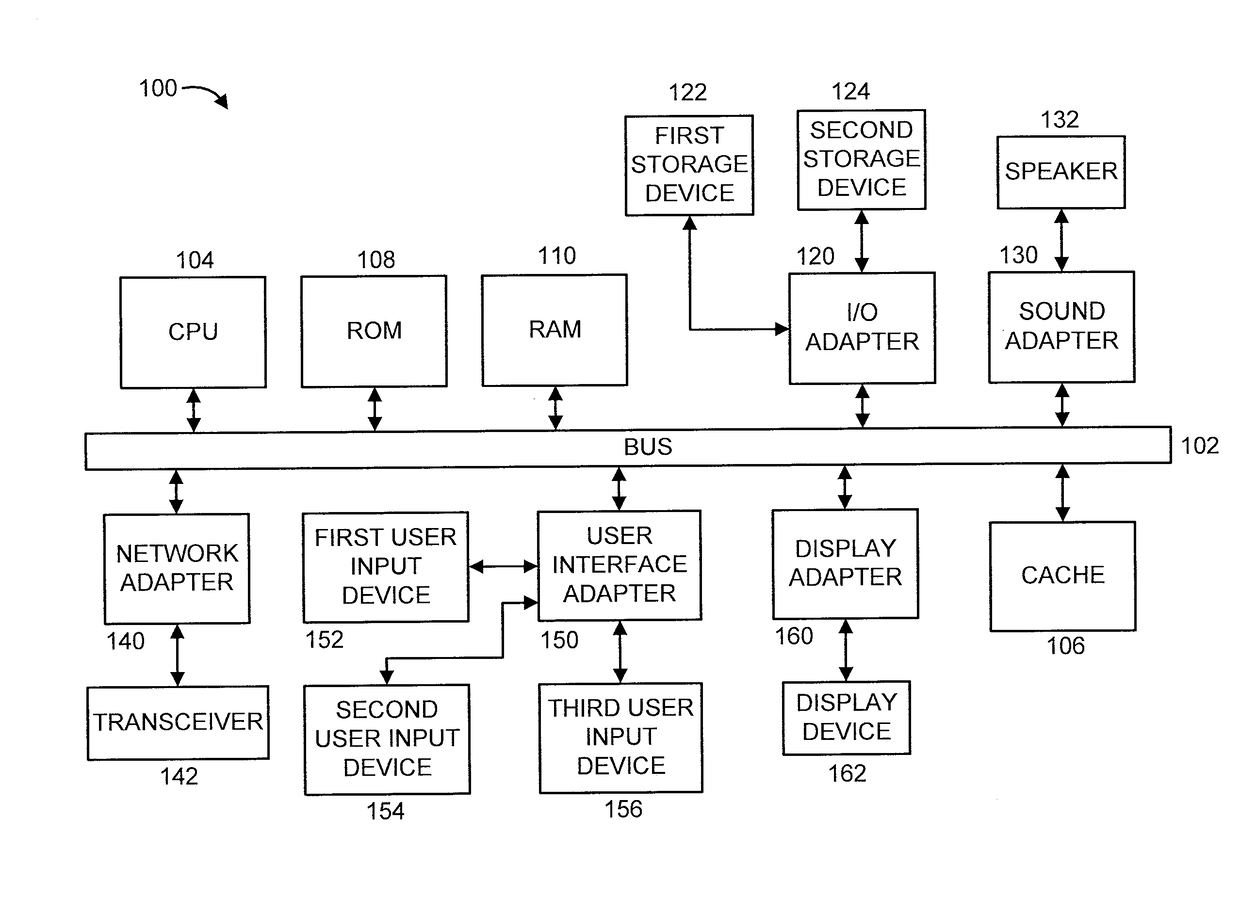

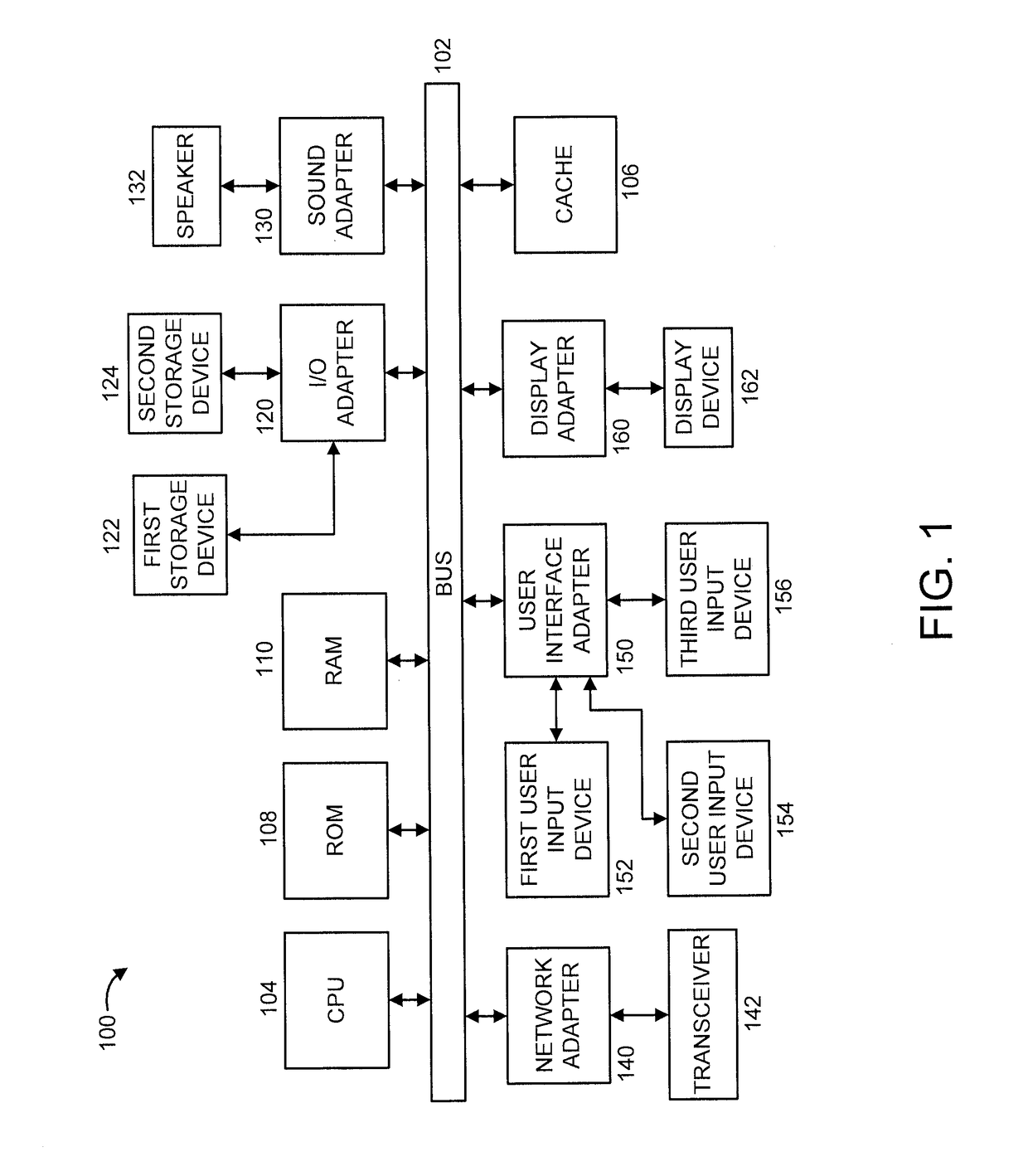

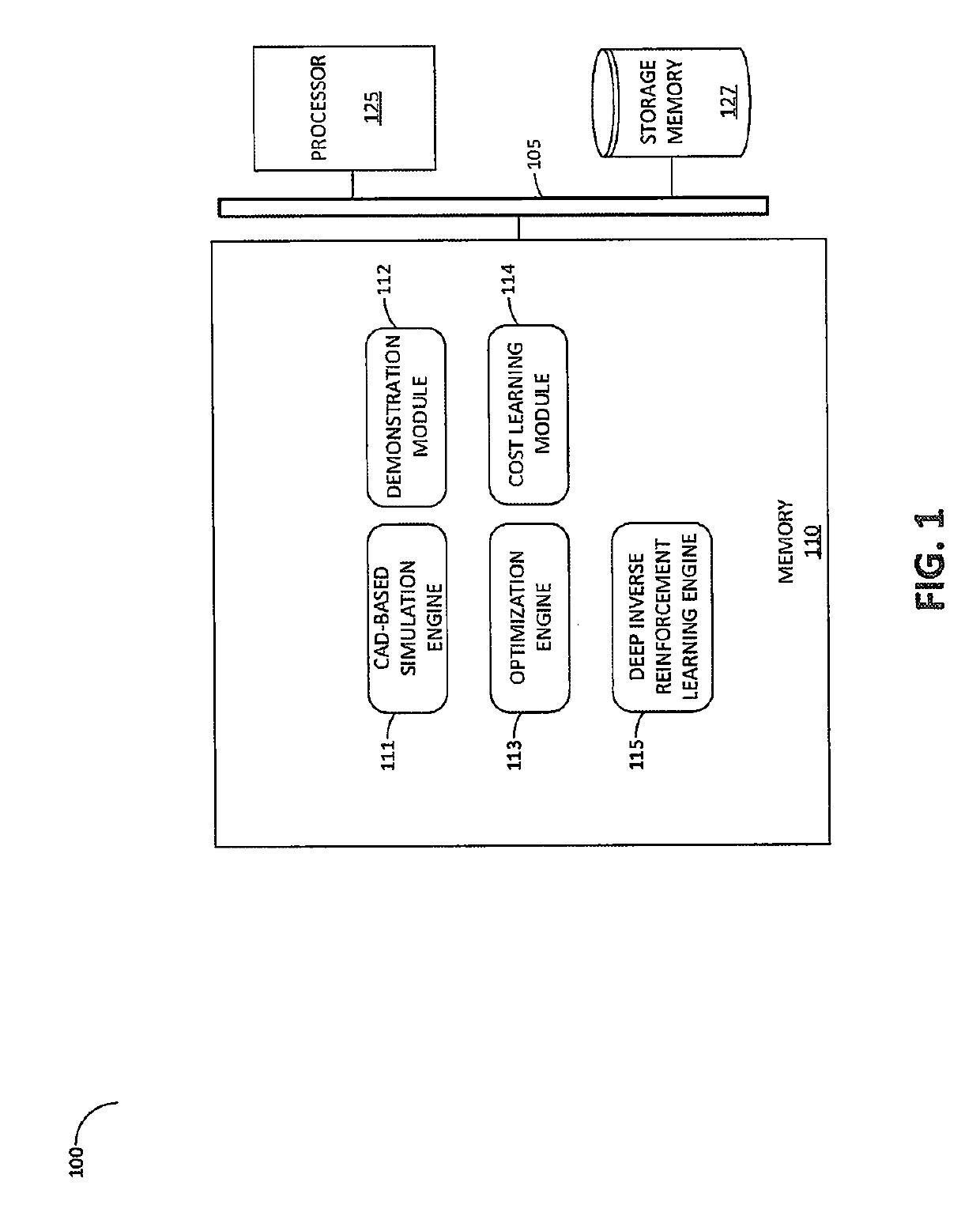

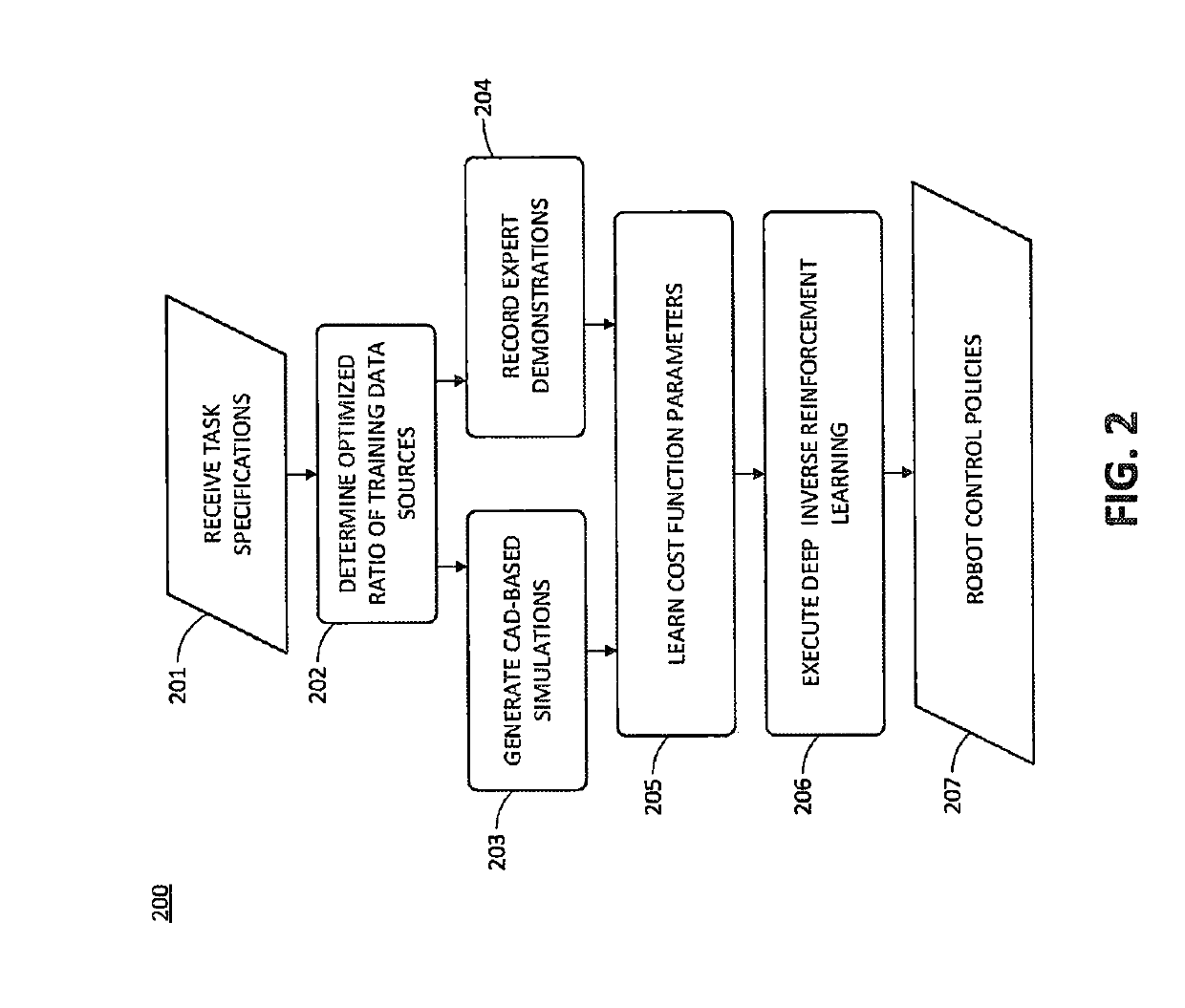

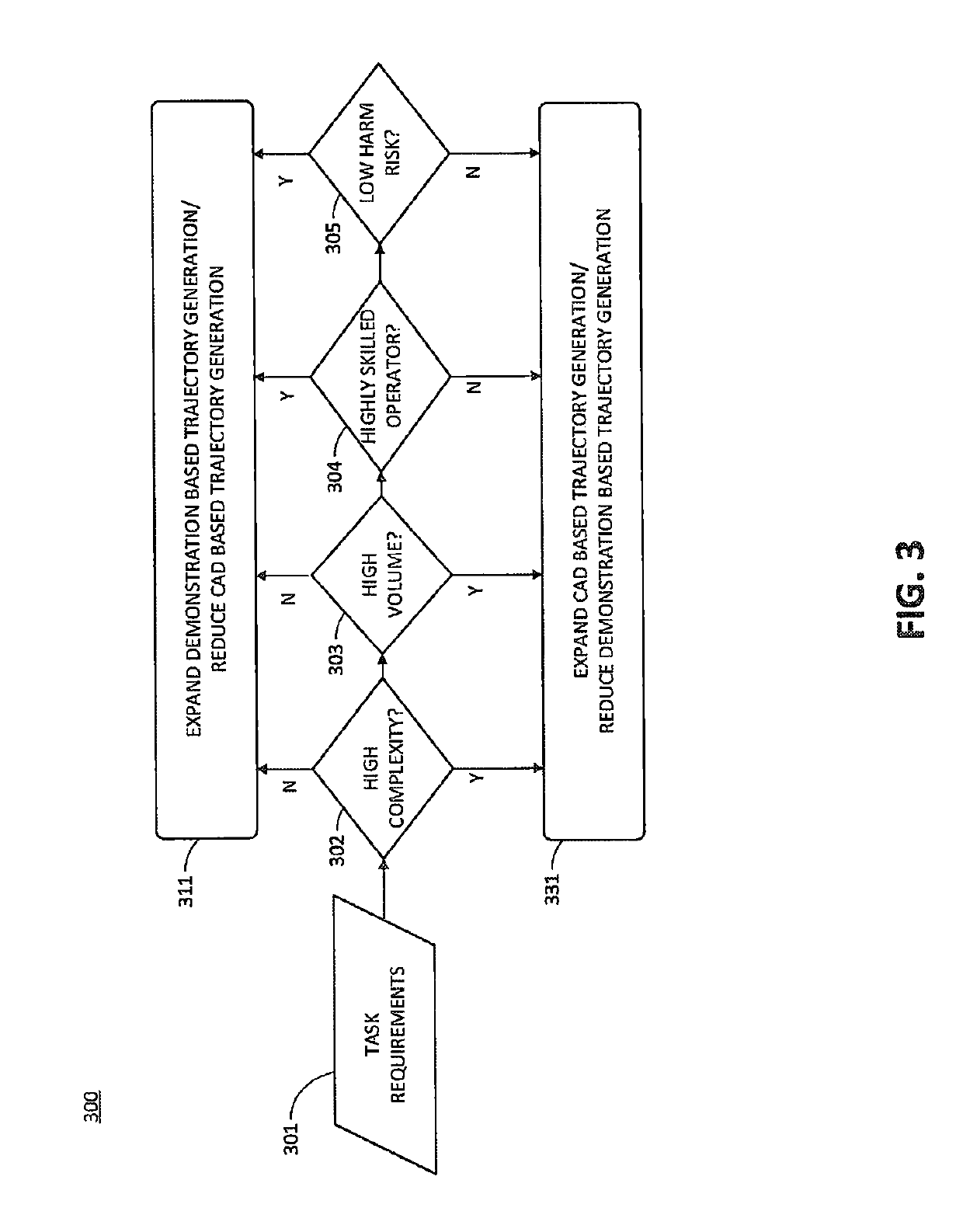

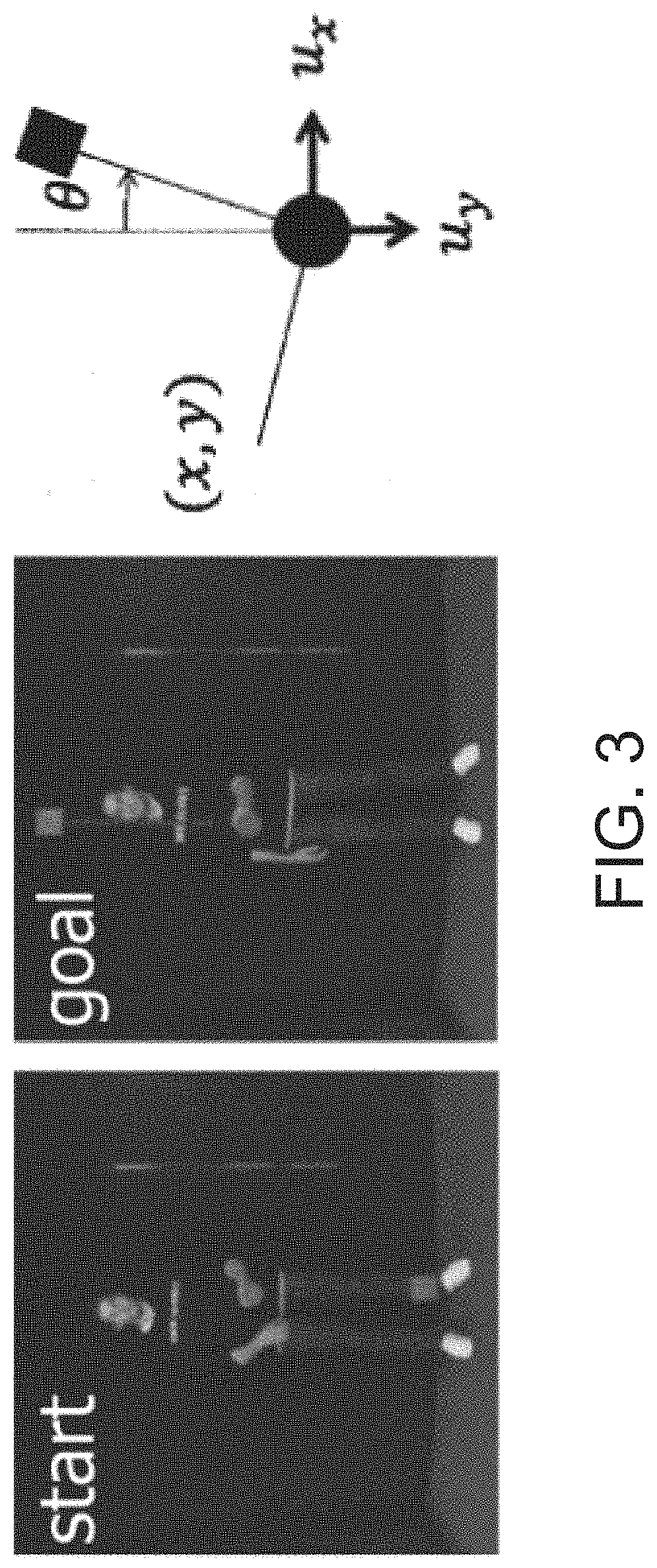

Method and system for automatic robot control policy generation via CAD-based deep inverse reinforcement learning

Active | US20190091859A1 | Reduce human intervention | Programme control | Programme-controlled manipulator | Simulation | Robot control

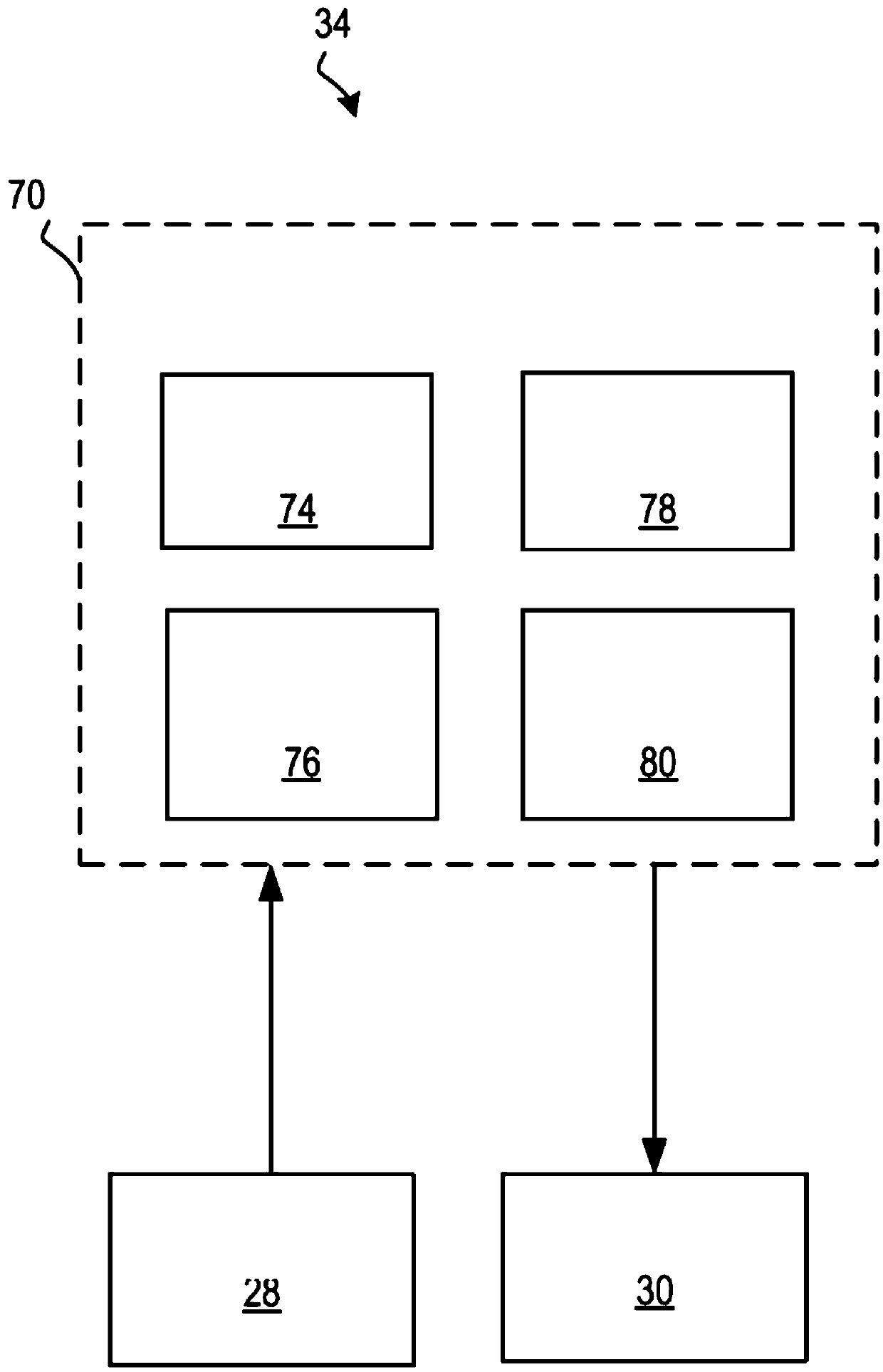

Systems and methods for automatic generation of robot control policies include a CAD-based simulation engine for simulating CAD-based trajectories for the robot based on cost function parameters, a demonstration module configured to record demonstrative trajectories of the robot, an optimization engine for optimizing a ratio of CAD-based trajectories to demonstrative trajectories based on computation resource limits, a cost learning module for learning cost functions by adjusting the cost function parameters using a minimized divergence between probability distribution of CAD-based trajectories and demonstrative trajectories; and a deep inverse reinforcement learning engine for generating robot control policies based on the learned cost functions.

Owner:SIEMENS AG

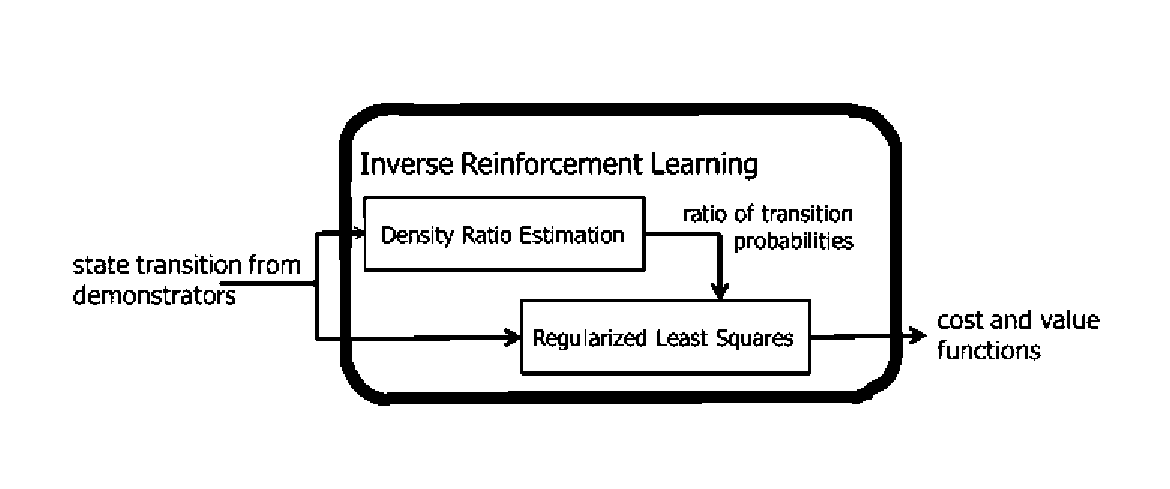

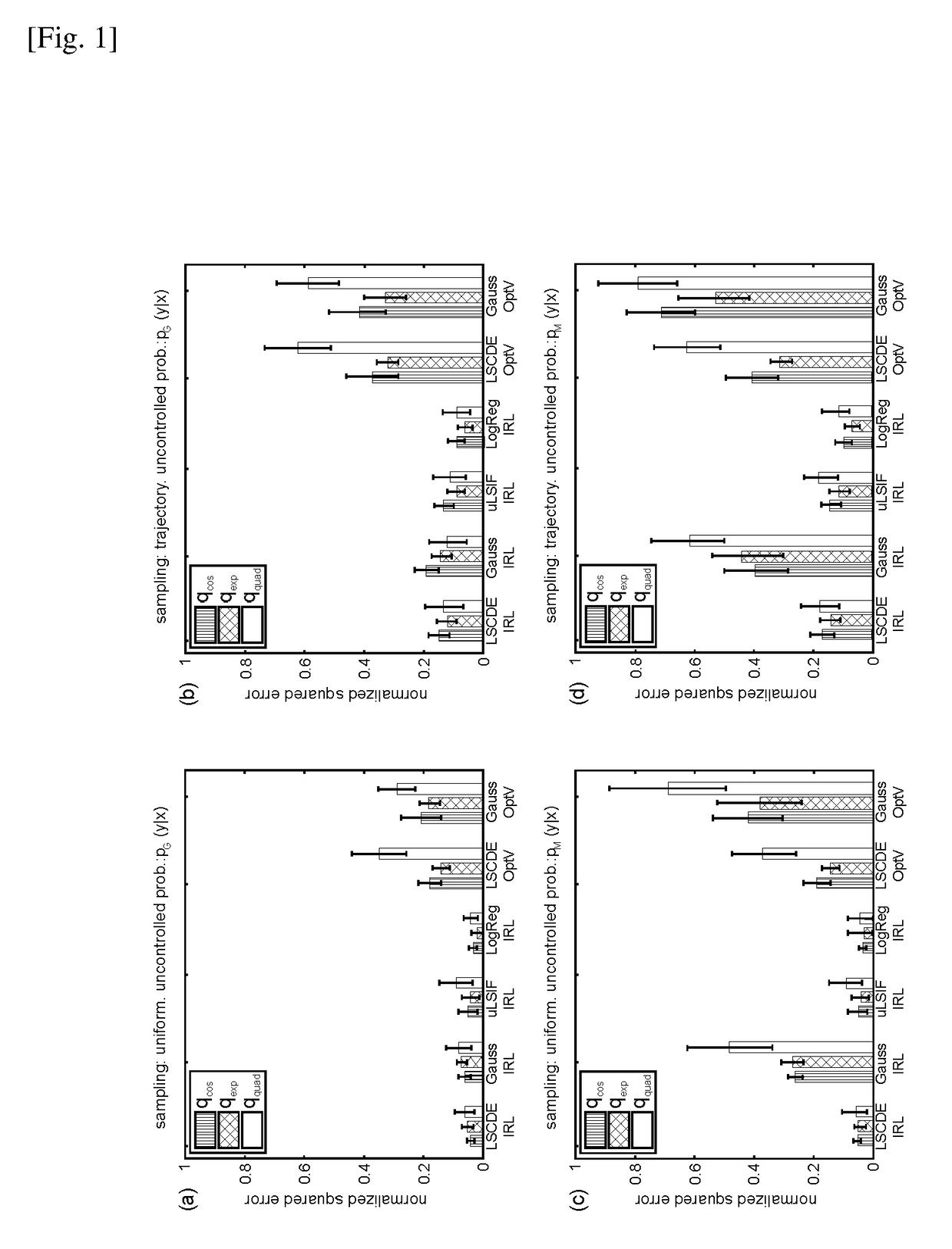

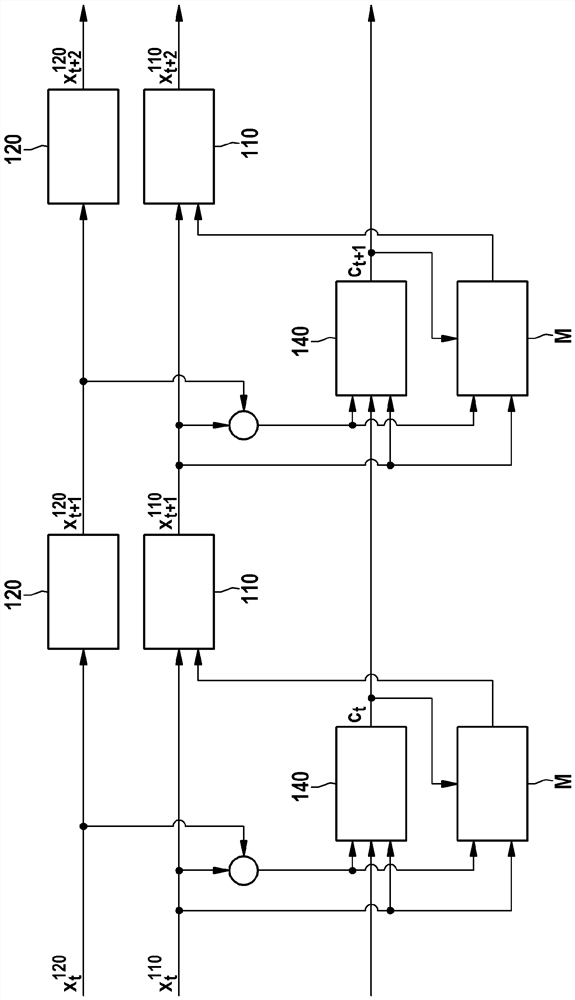

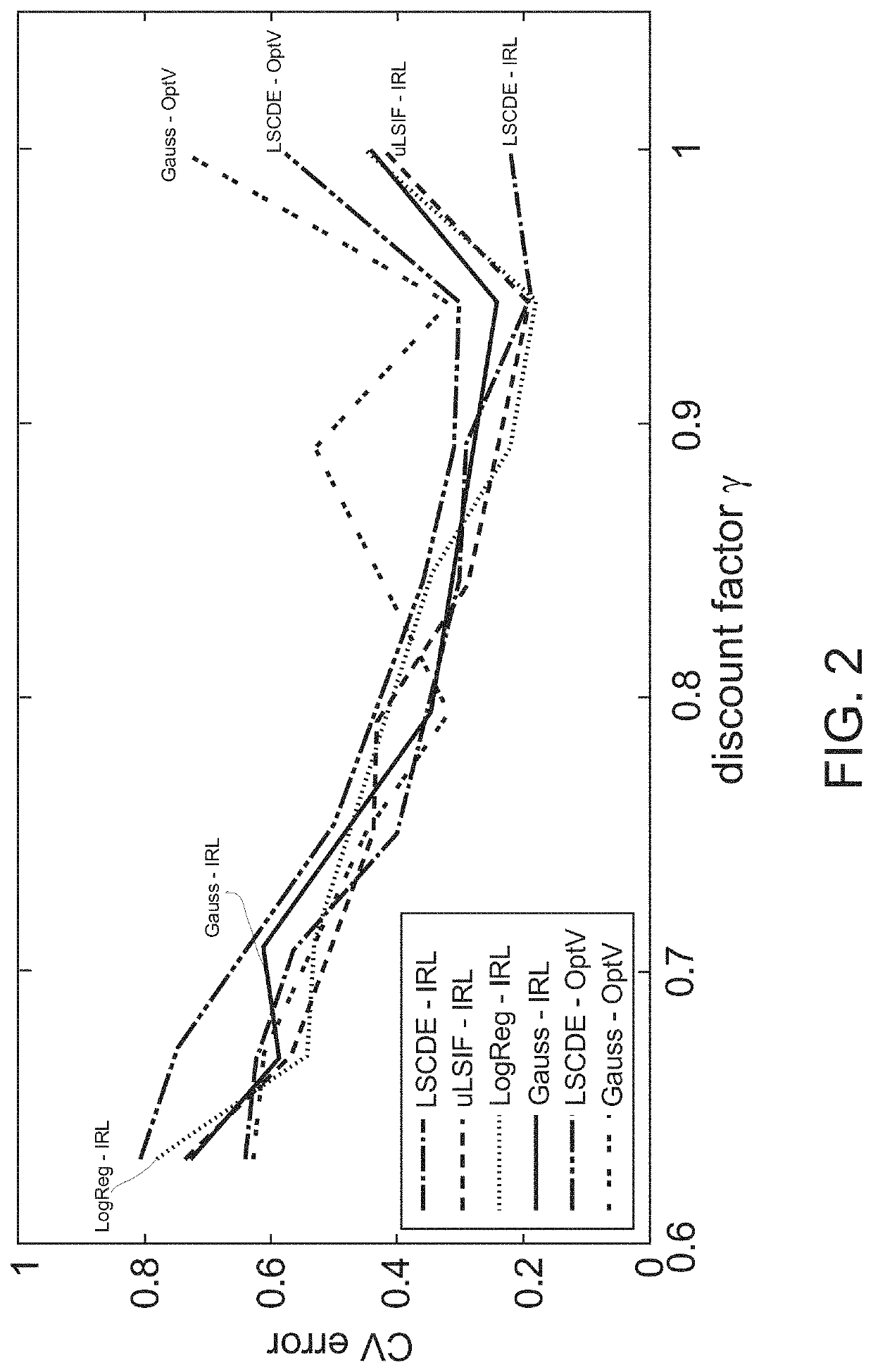

Inverse reinforcement learning by density ratio estimation

Active | US20170213151A1 | Efficient execution | Effectively and efficiently perform | Probabilistic networks | Machine learning | Density ratio estimation | State variable

A method of inverse reinforcement learning for estimating cost and value functions of behaviors of a subject includes acquiring data representing changes in state variables that define the behaviors of the subject; applying a modified Bellman equation given by Eq. (1) to the acquired data:

$$
q(x) + g V(y) - V(x) = -\ln\frac{\pi(y \mid x)}{p(y \mid x)} \qquad (1)
$$

where q(x) and V(x) denote a cost function and a value function, respectively, at state x, g represents a discount factor, and p(y|x) and π(y|x) denote state transition probabilities before and after learning, respectively; estimating a density ratio π(y|x) / p(y|x) in Eq. (1); estimating q(x) and V(x) in Eq. (1) using the least squares method in accordance with the estimated density ratio π(y|x) / p(y|x), and outputting the estimated q(x) and V(x).

Owner:OKINAWA INST OF SCI & TECH SCHOOL
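The abstract above fits q(x) and V(x) to Eq. (1) by least squares once the density ratio has been estimated. The sketch below illustrates only that fitting step on a small discrete state space, assuming the log density ratio is already available as a table `log_ratio[x, y]`. The tabular setting, the function name, and the use of `numpy.linalg.lstsq` are assumptions for illustration and not the patent's procedure.

```python
import numpy as np


def fit_cost_and_value(log_ratio, g=0.9):
    """Least-squares fit of q(x) + g*V(y) - V(x) = -log_ratio[x, y].

    log_ratio: (n, n) array, assumed to hold ln(pi(y|x) / p(y|x)) for n discrete states.
    Returns (q, V), each of shape (n,). V is only determined up to an additive
    constant (with q shifted to compensate); lstsq returns the minimum-norm solution.
    """
    n = log_ratio.shape[0]
    rows, targets = [], []
    for x in range(n):
        for y in range(n):
            row = np.zeros(2 * n)
            row[x] += 1.0        # coefficient of q(x)
            row[n + y] += g      # coefficient of V(y)
            row[n + x] -= 1.0    # coefficient of V(x)
            rows.append(row)
            targets.append(-log_ratio[x, y])
    A = np.array(rows)
    b = np.array(targets)
    solution, *_ = np.linalg.lstsq(A, b, rcond=None)
    return solution[:n], solution[n:]
```

Because adding a constant c to V and (1 - g)·c to q leaves the left-hand side of Eq. (1) unchanged, only differences in V are identifiable in this tabular sketch.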

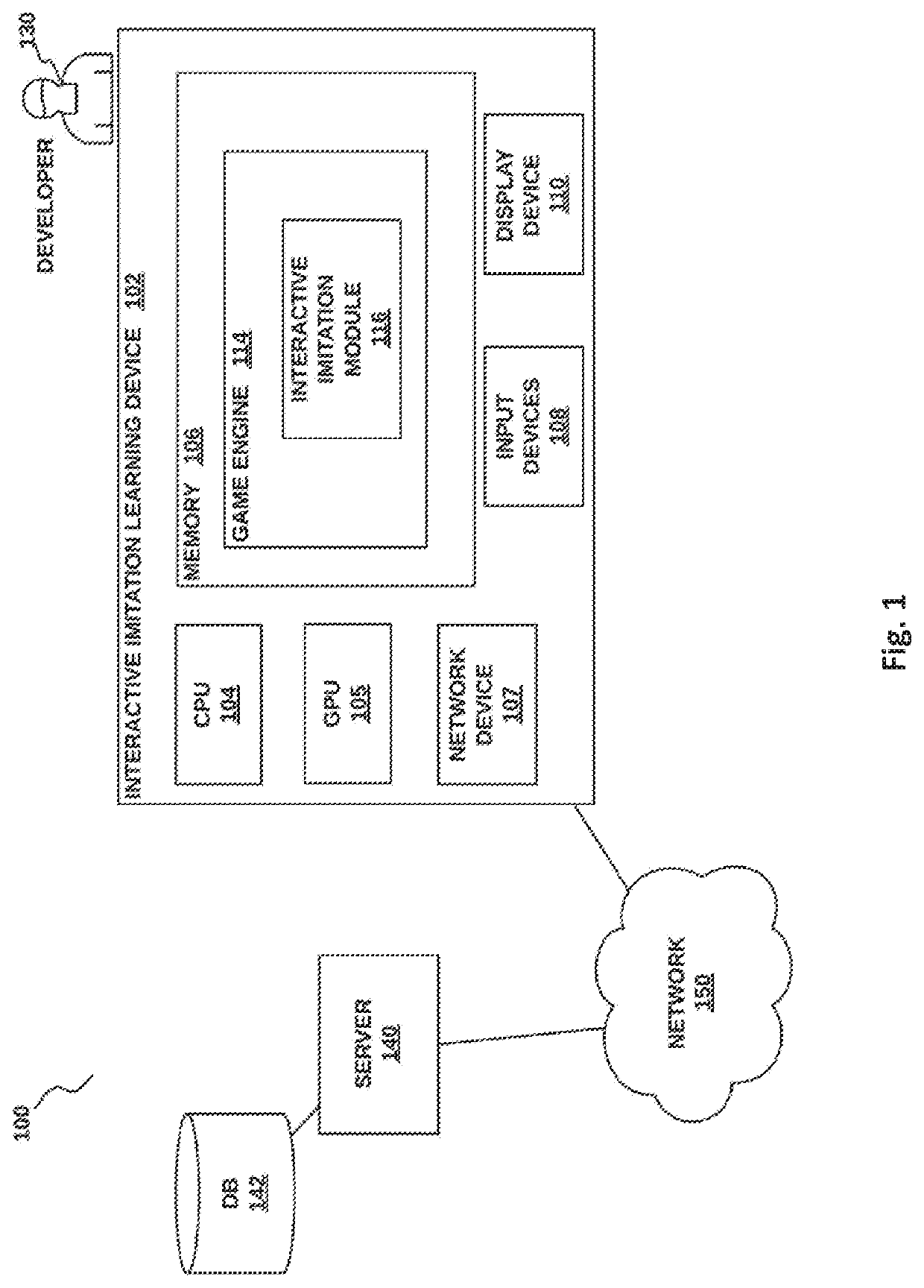

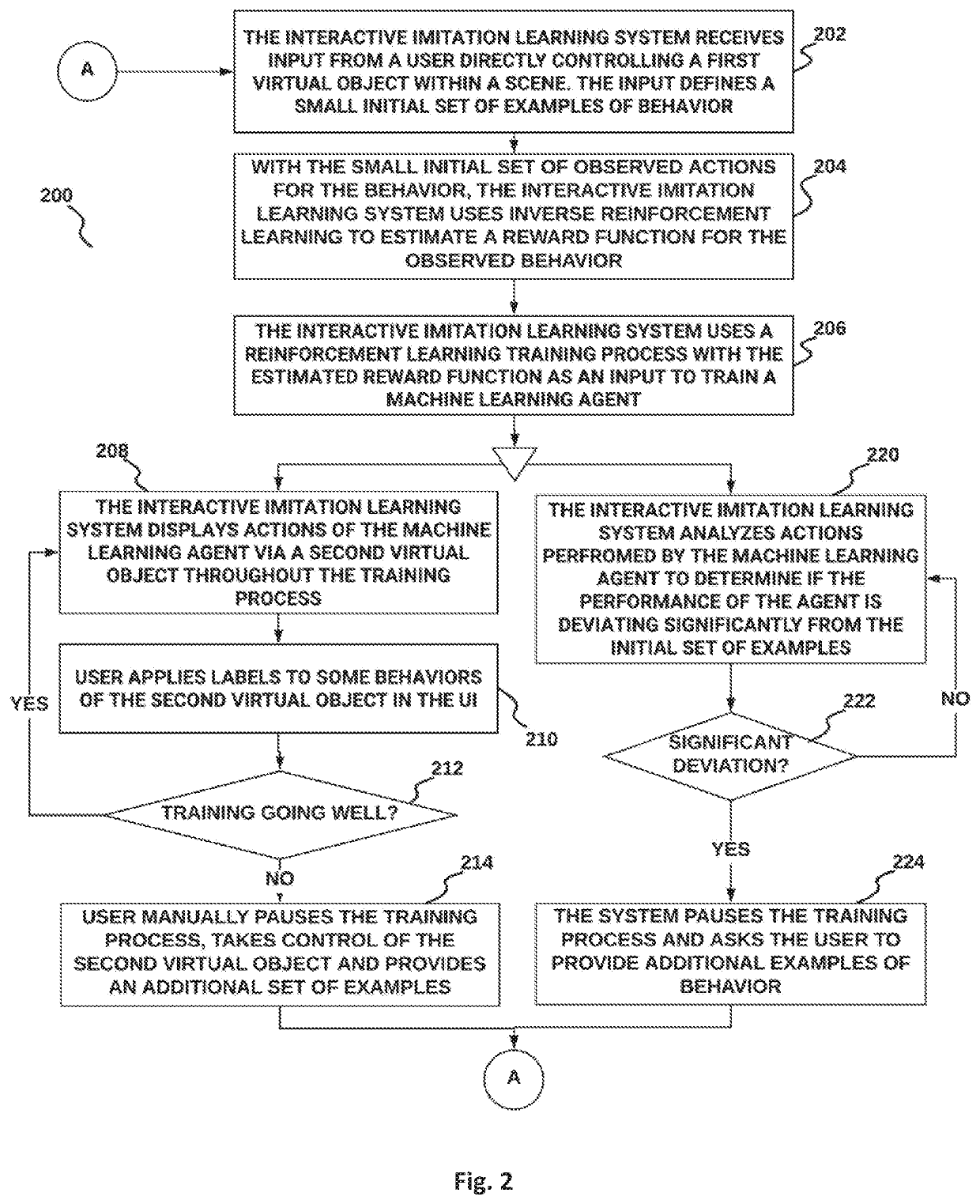

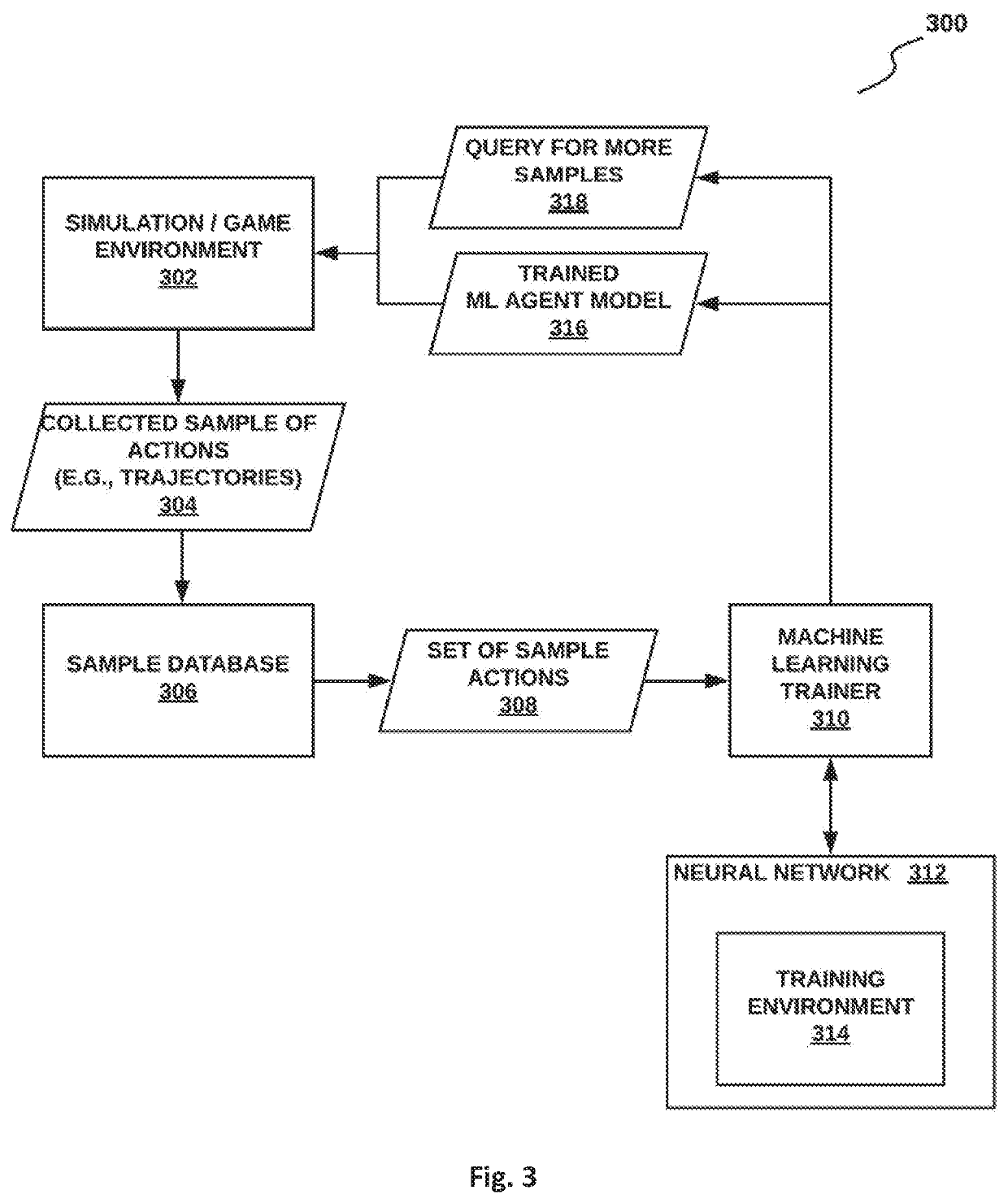

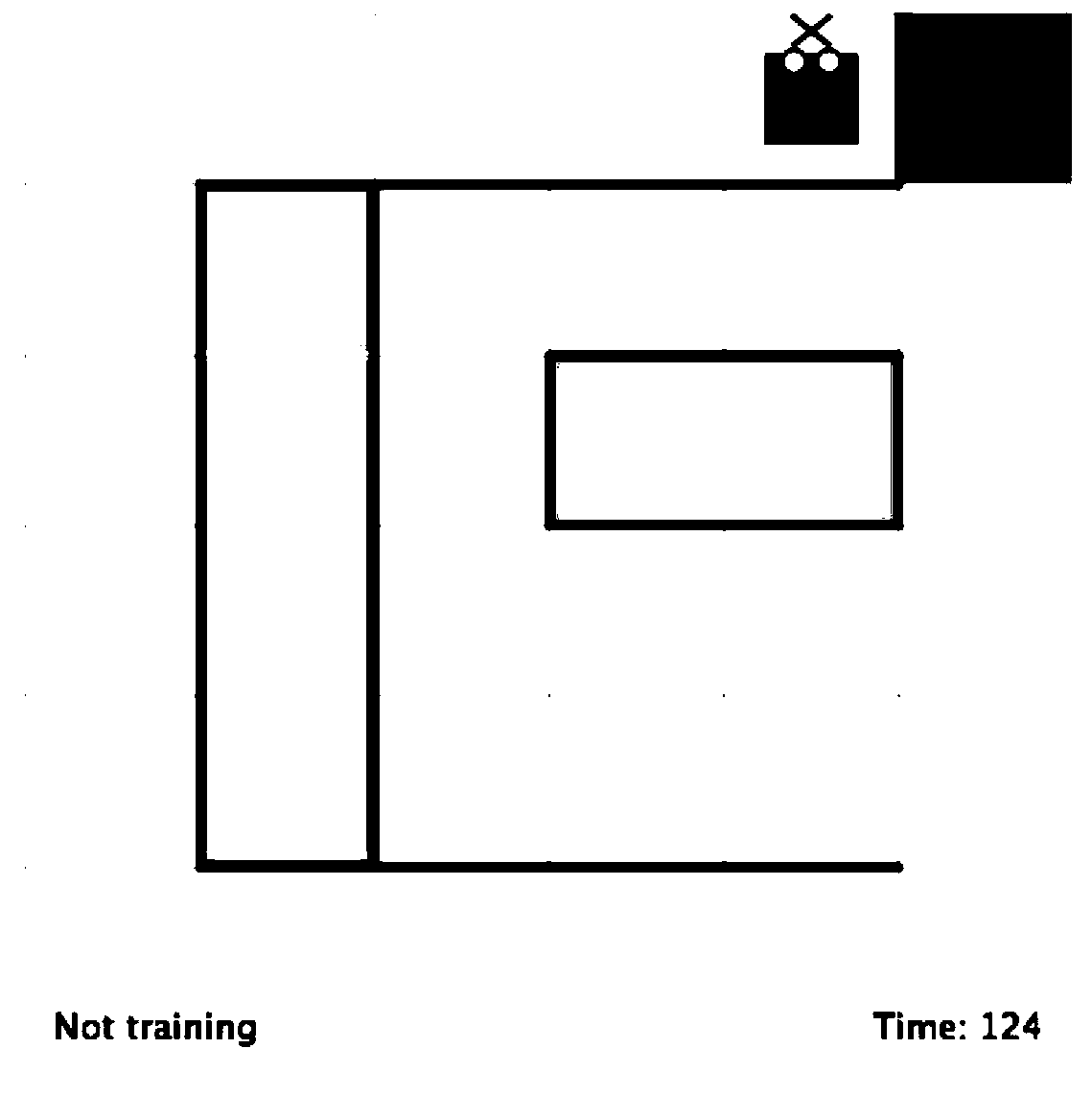

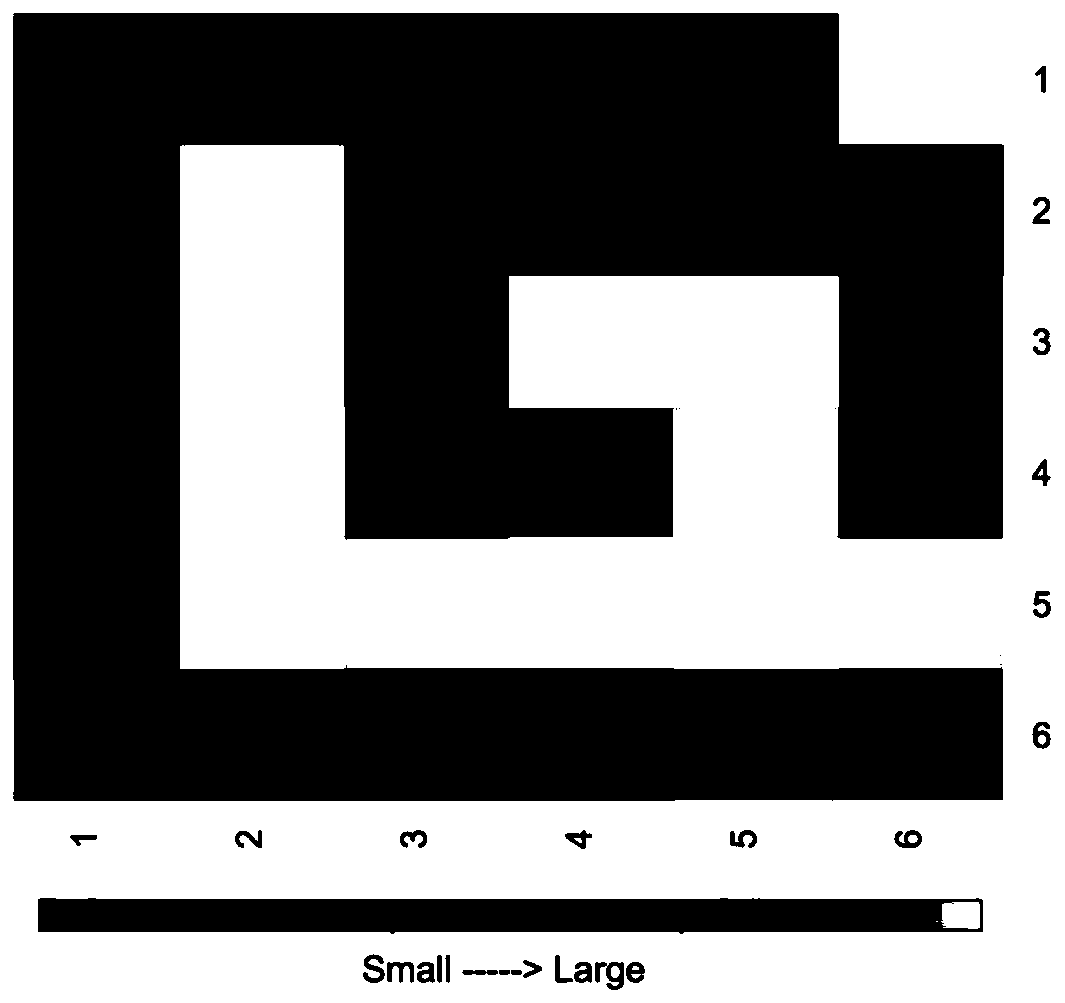

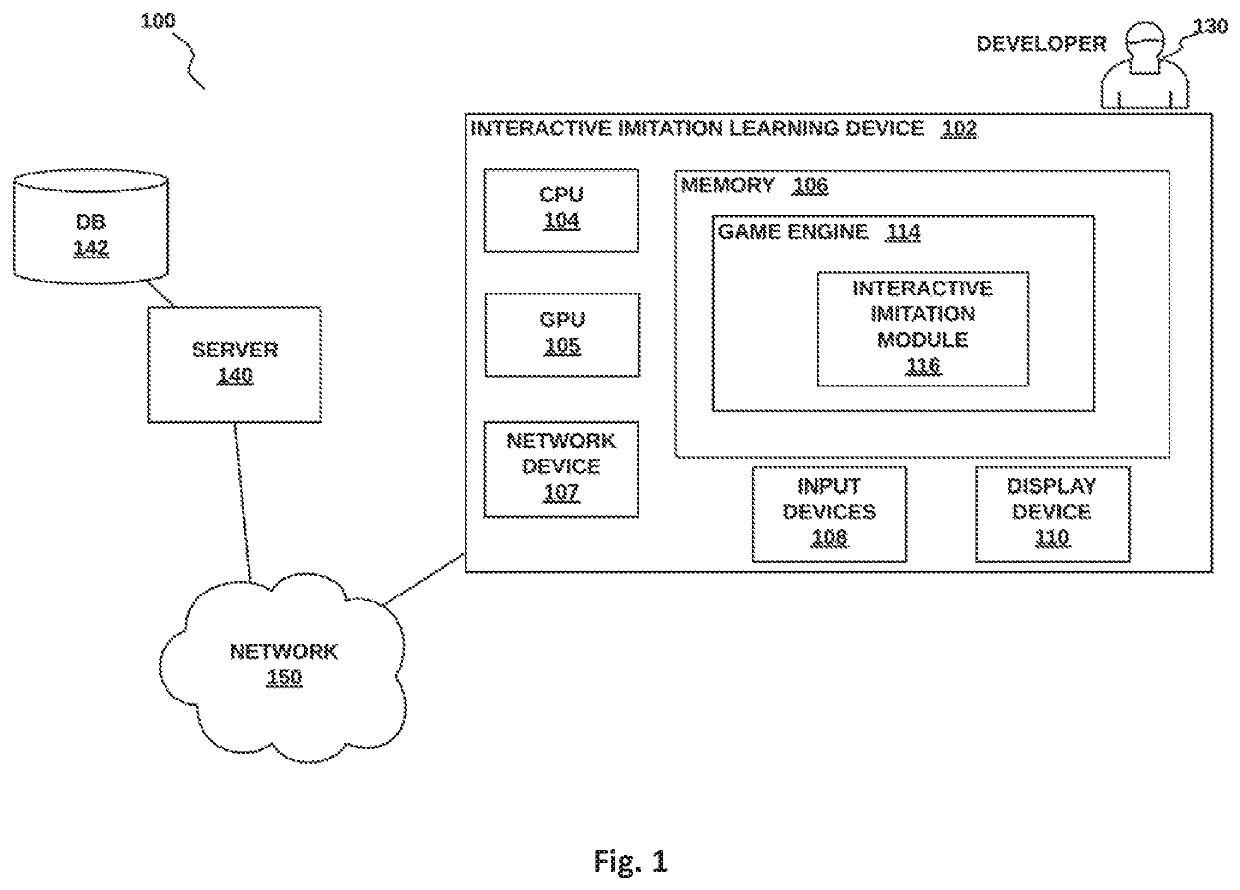

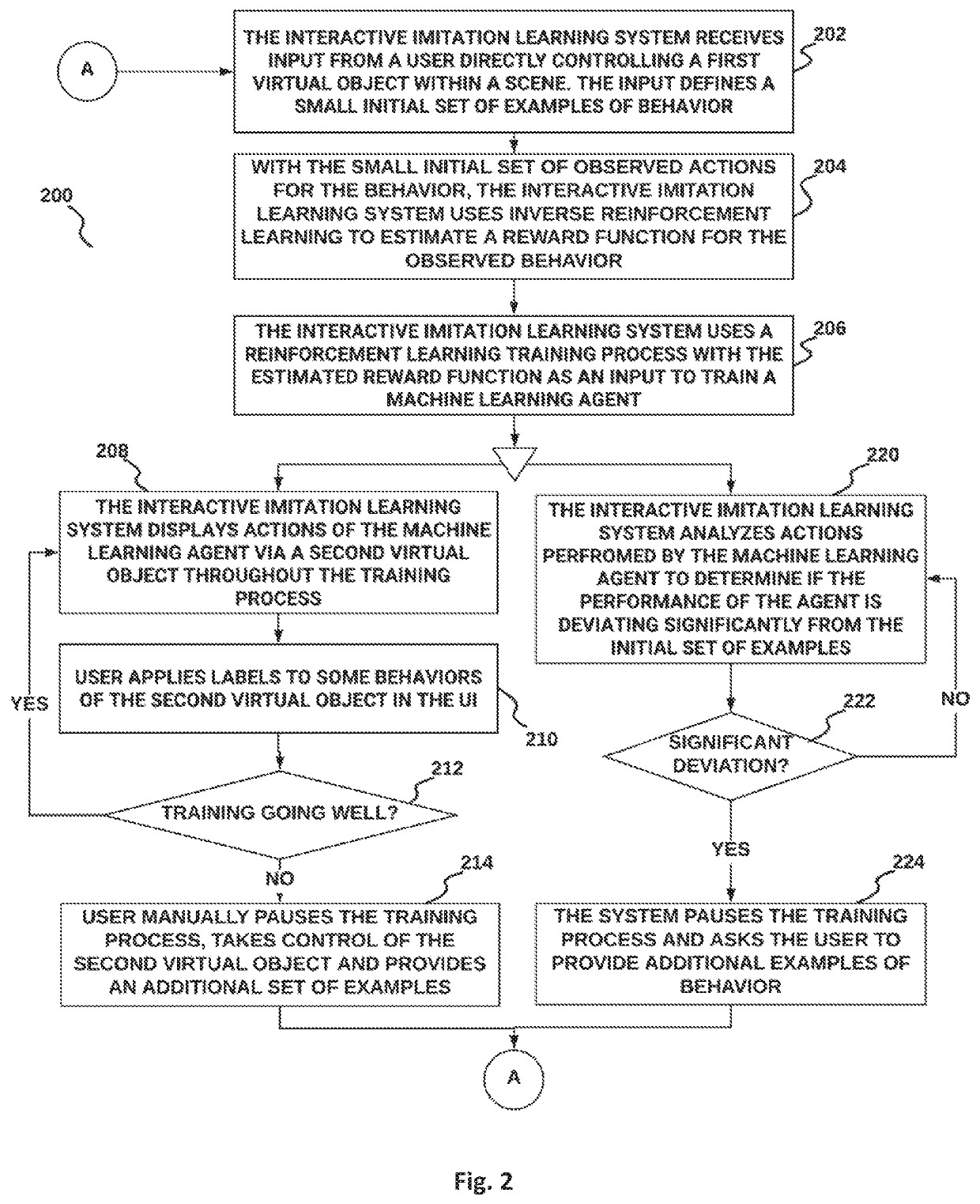

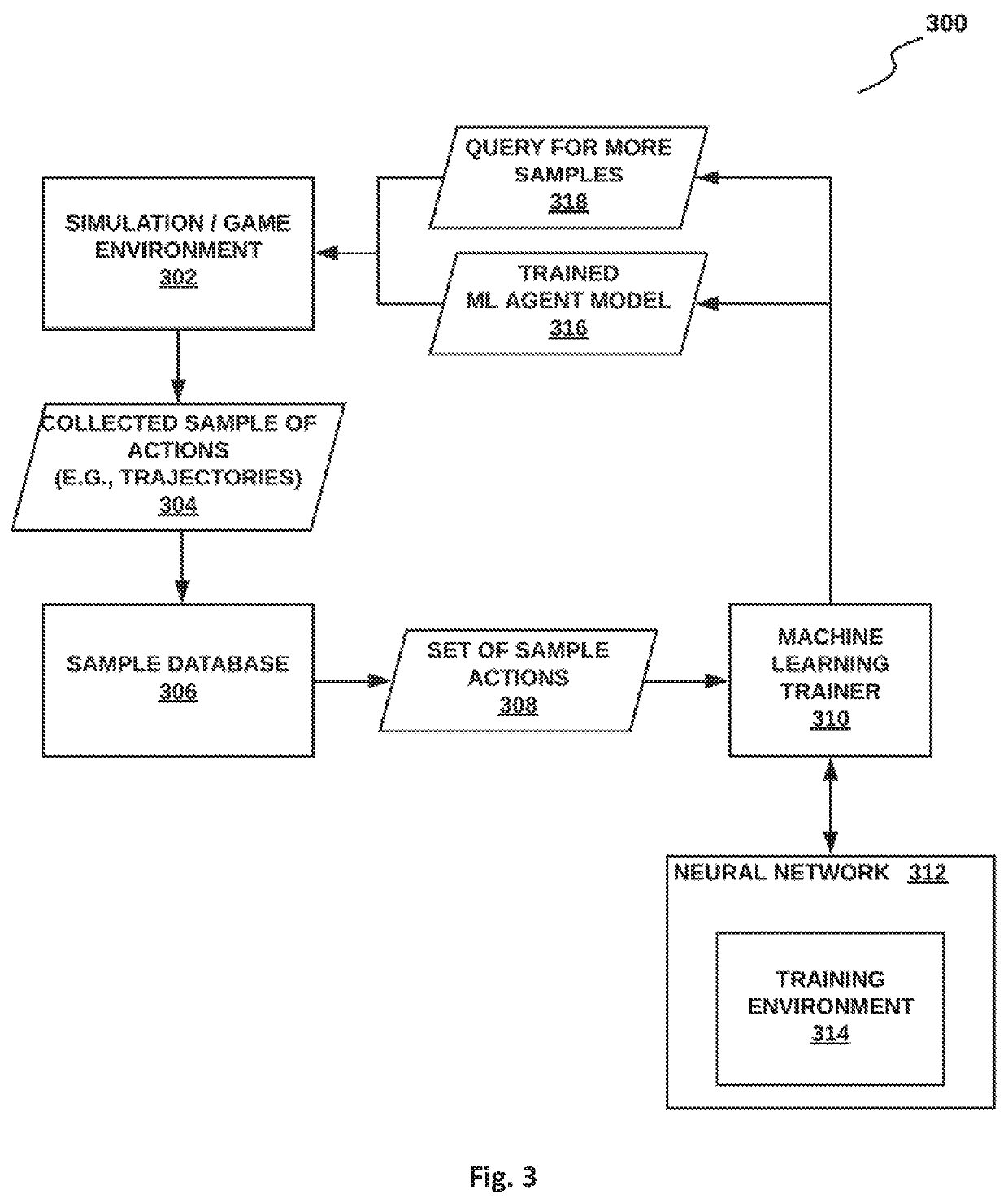

Method and system for interactive imitation learning in video games

In example embodiments, a method of interactive imitation learning is disclosed. An input is received from an input device. The input includes data describing a first set of example actions defining a behavior for a virtual character. Inverse reinforcement learning is used to estimate a reward function for the set of example actions. The reward function and the set of example actions are used as input to a reinforcement learning model to train a machine learning agent to mimic the behavior in a training environment. Based on a measure of failure of the training of the machine learning agent reaching a threshold, the training of the machine learning agent is paused to request a second set of example actions from the input device. The second set of example actions is used in addition to the first set of example actions to estimate a new reward function.

Owner:UNITY IPR APS

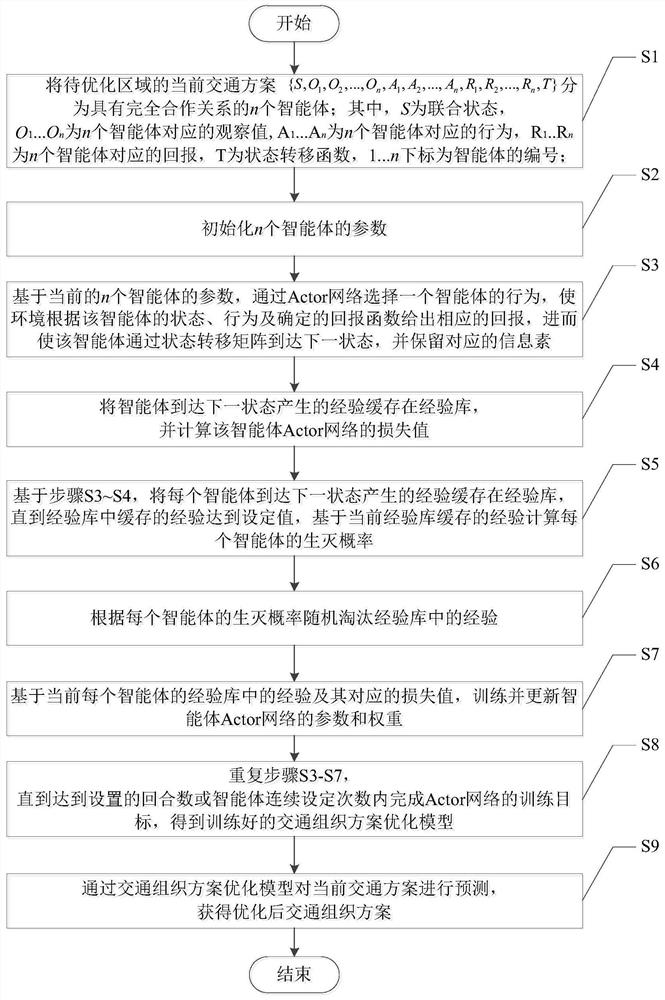

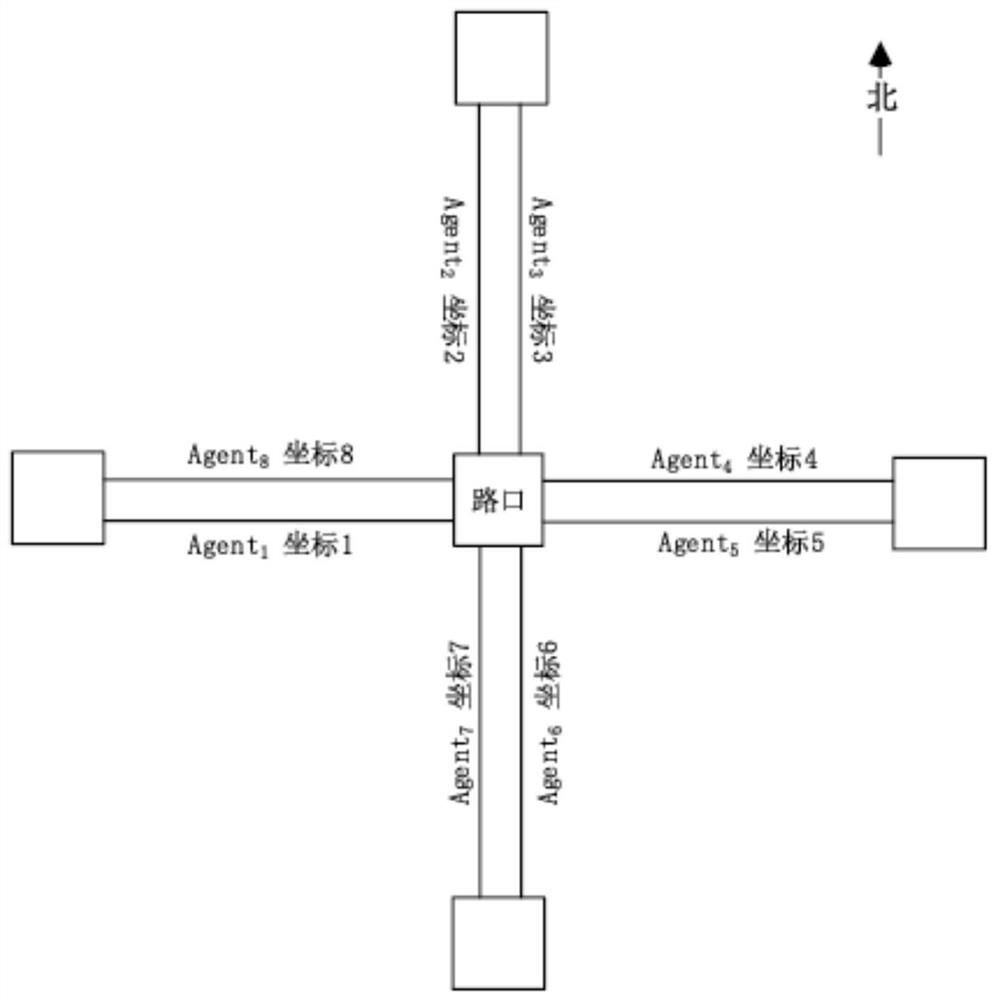

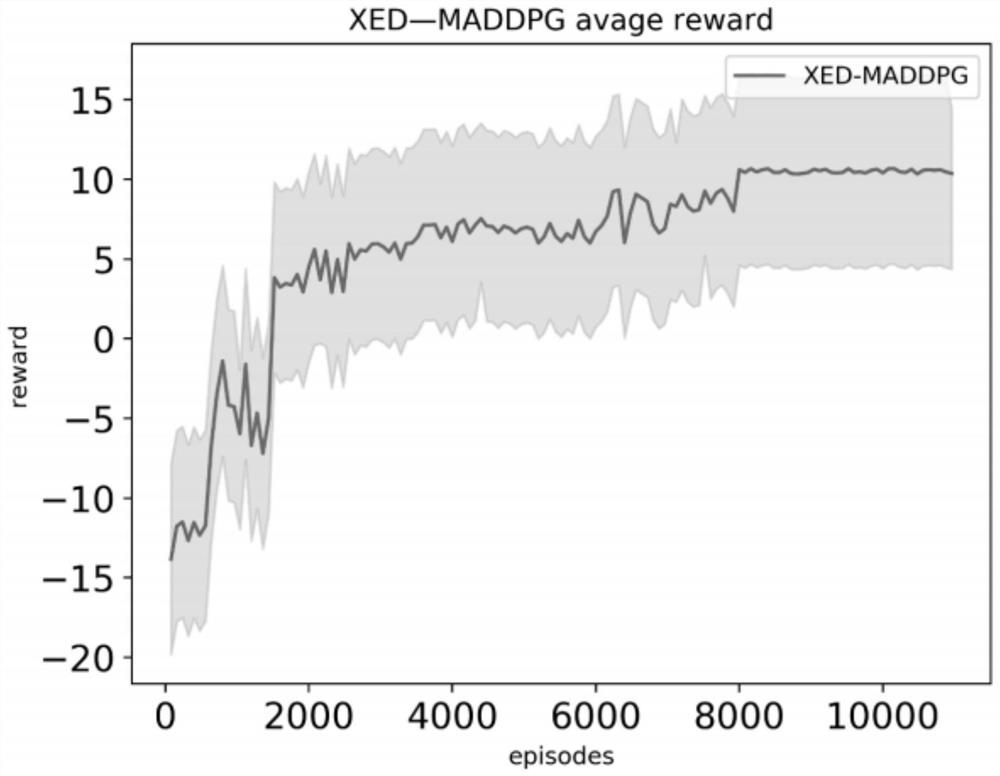

Traffic organization scheme optimization method based on multi-agent reinforcement learning

Active | CN112949933A | Easy to adapt | Improve efficiency | Internal combustion piston engines | Forecasting | Birth and death process | Smart city

The invention discloses a traffic organization scheme optimization method based on multi-agent reinforcement learning, and the method comprises the steps: improving an Actor network in MADDPG, improving an experience library in a Critic network based on a birth and death process, employing the maximum traffic flow at an early peak as an agent return index, training a maximum entropy inverse reinforcement learning model by using trajectory data to serve as a multi-agent return mechanism, and designing a return function of reinforcement learning based on the return mechanism. According to the method, the current urban traffic organization scheme is optimized, the current traffic data are analyzed to find out the cause of traffic jam, the method can well adapt to and quickly find out the optimal scheme, traffic guidance suggestions are provided for traffic police experts, and a foundation is laid for a smart city.

Owner:CHENGDU UNIV OF INFORMATION TECH +1

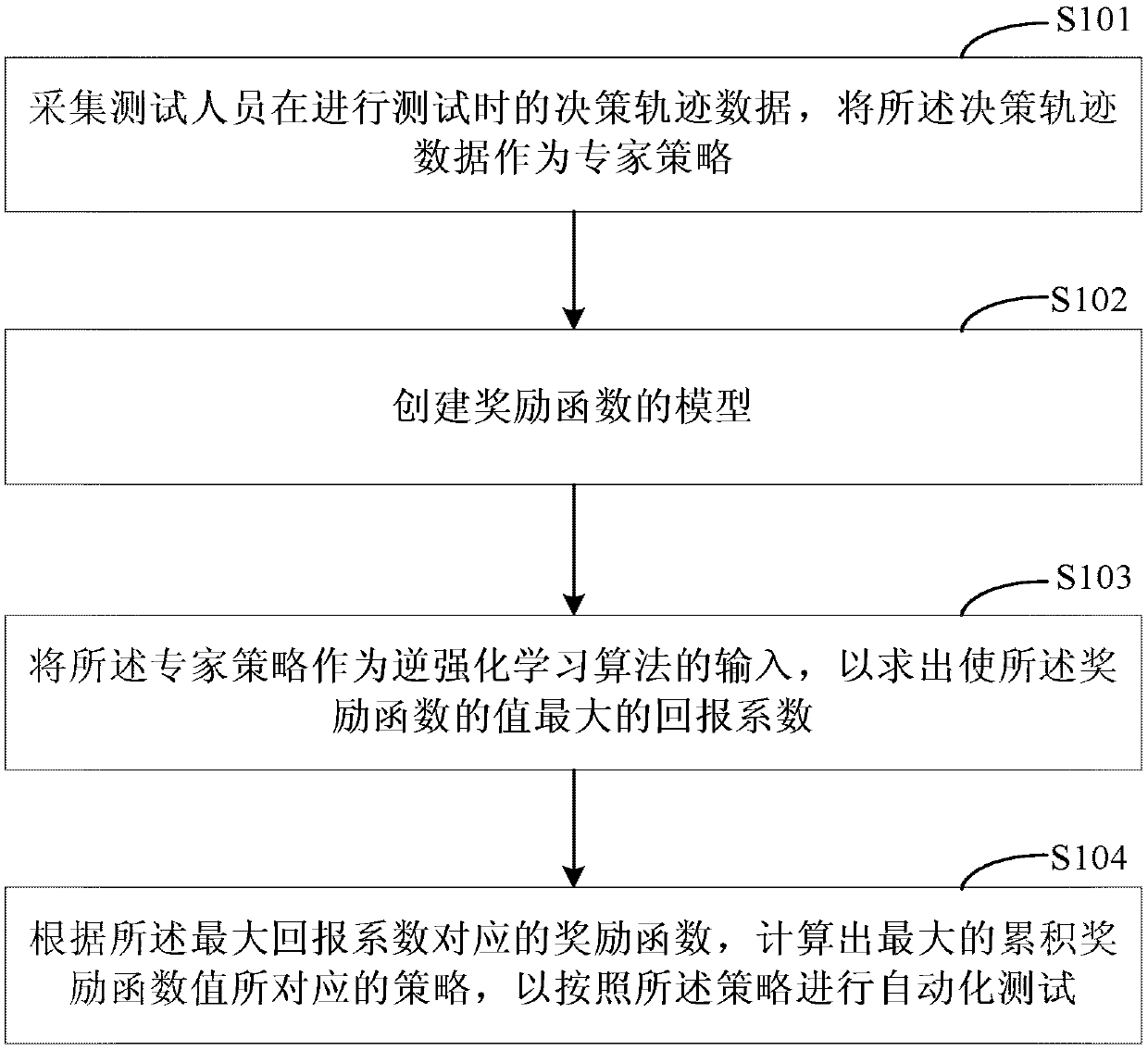

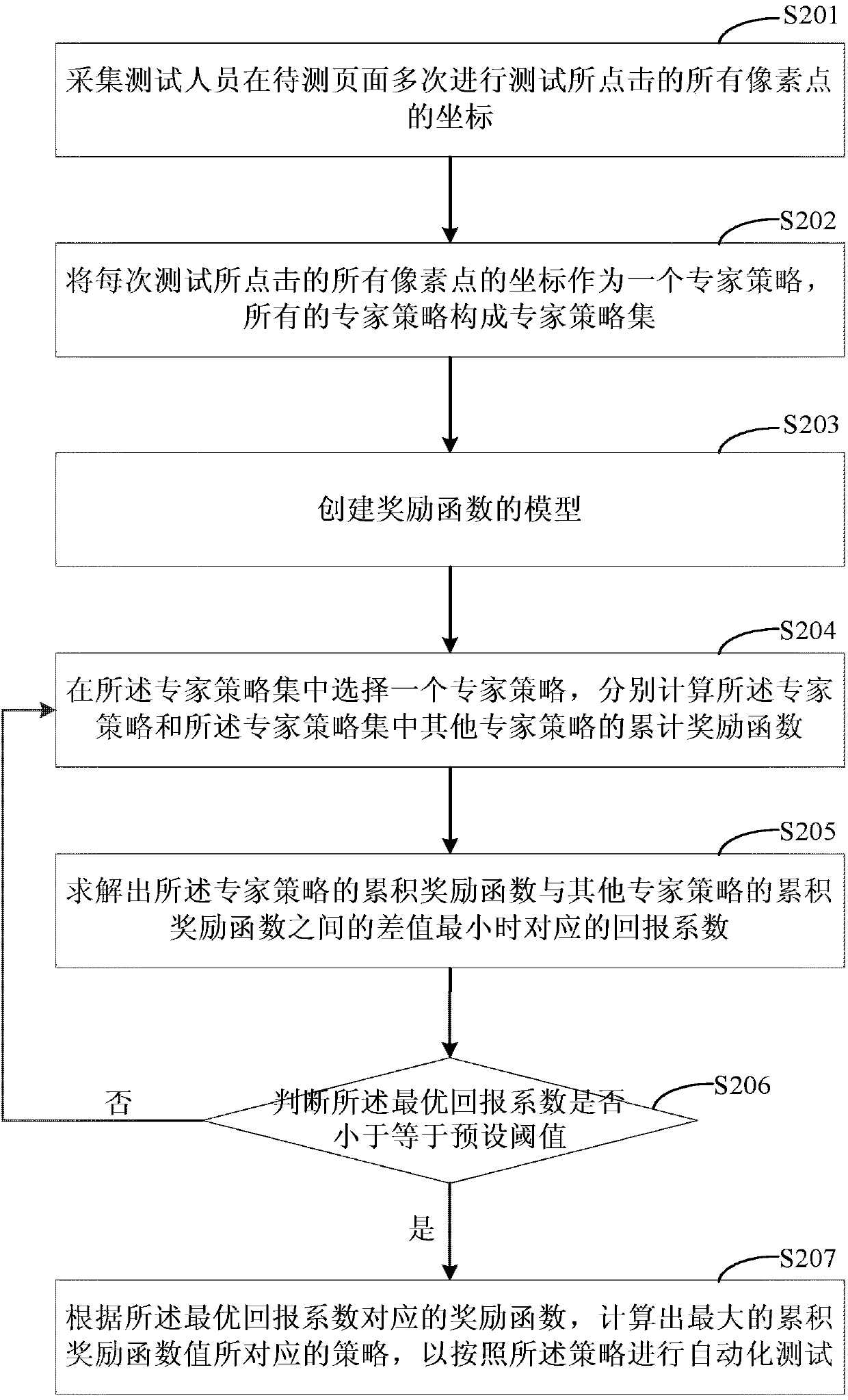

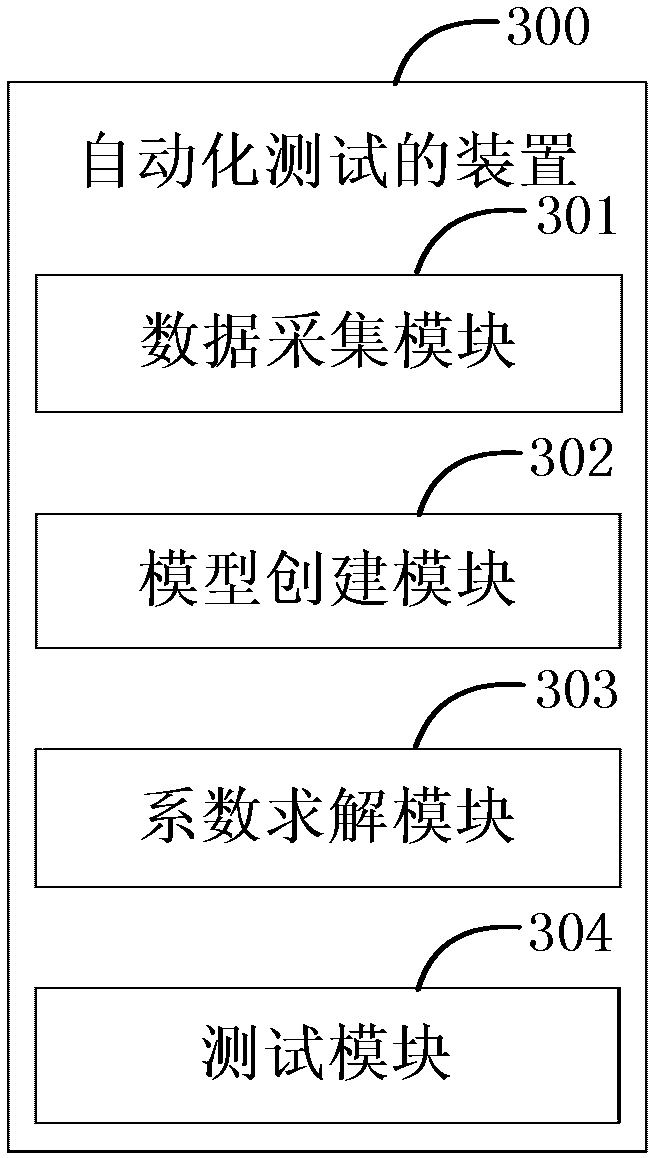

An automatic testing method and device

Active | CN109710507A | Save test resources | Has the ability to generalize | Software testing/debugging | Algorithm | Simulation

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

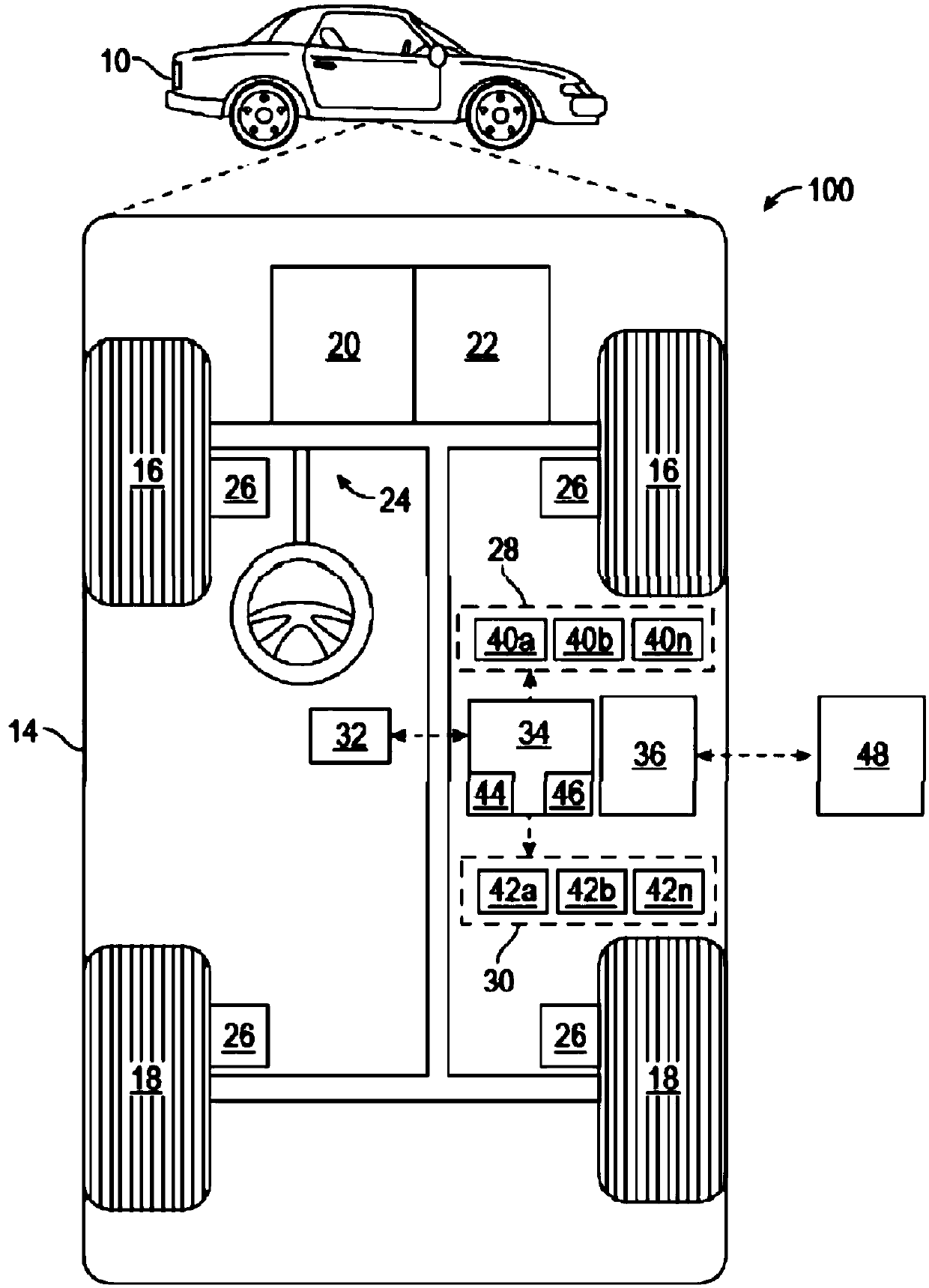

Hybrid vehicle fuel efficiency using inverse reinforcement learning

Inactive | US9090255B2 | Hybrid vehicles | Instruments for road network navigation | Fuel efficiency | Electric vehicle

A powertrain of a hybrid electric vehicle (HEV) is controlled. A first value α1 and a second value α2 are determined. α1 represents a proportion of an instantaneous power requirement (Preq) supplied by an engine of the HEV. α2 controls a recharging rate of a battery of the HEV. A determination is performed, based on α1 and α2, regarding how much engine power to use (Peng) and how much battery power to use (Pbatt). Peng and Pbatt are sent to the powertrain.

Owner:HONDA MOTOR CO LTD

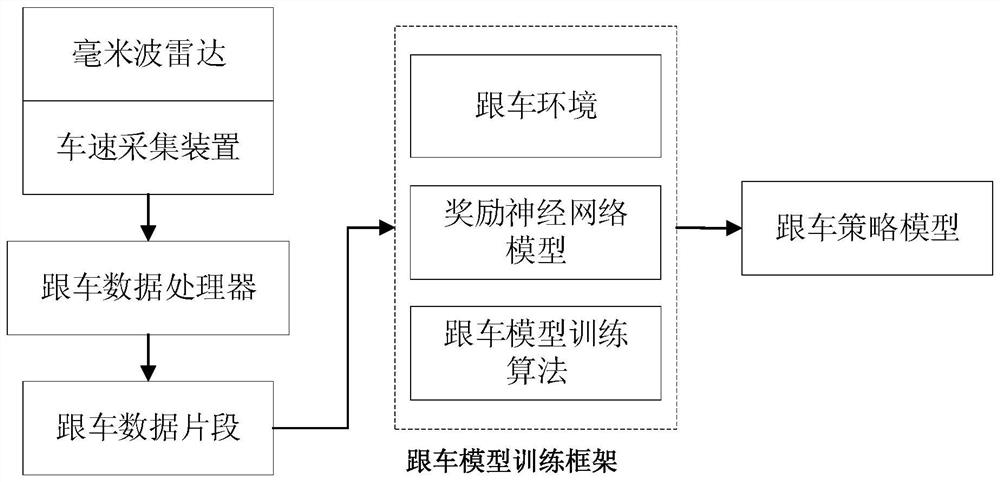

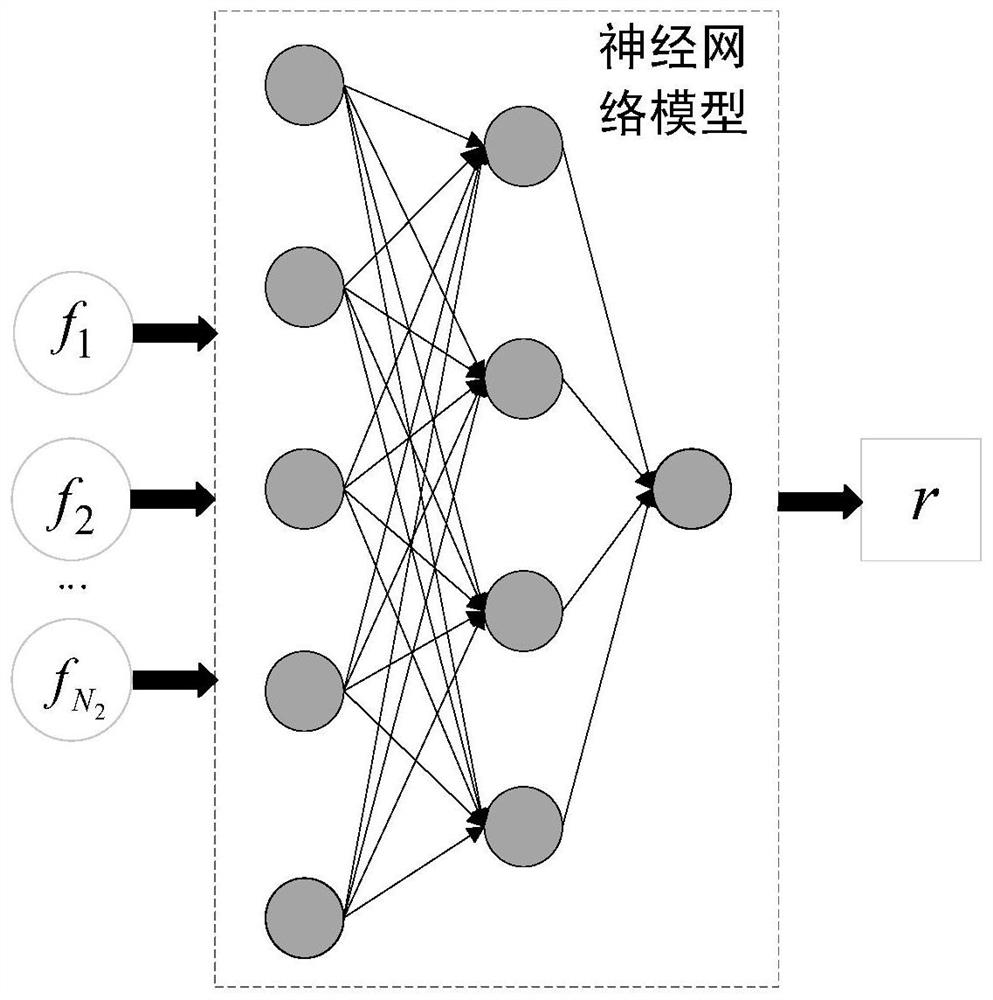

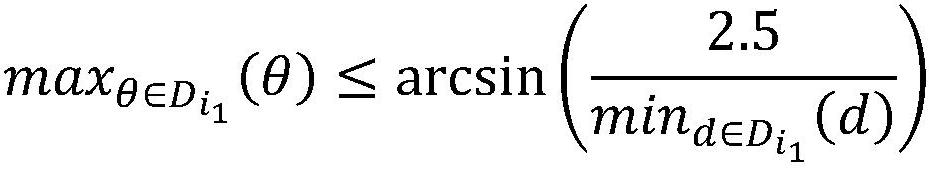

Vehicle following system and method for simulating driving style based on deep inverse reinforcement learning

Active | CN112172813A | Simple structure | Easy to implement | External condition input parameters | In vehicle | Wave radar

The invention belongs to the technical field of intelligent driving, and discloses a vehicle following system and method for simulating a driving style based on deep inverse reinforcement learning. The vehicle following system comprises a millimeter-wave radar which collects the distance between the vehicle and a front vehicle, the lateral distance between the vehicle and the front vehicle, the relative speed and the azimuth angle; a vehicle speed collection device which collects the speed of the vehicle; and a vehicle-mounted industrial personal computer. A vehicle following data processor in the vehicle-mounted industrial personal computer processes the information acquired by the millimeter-wave radar and the vehicle speed collection device, extracts the vehicle following data fragments required for vehicle following model training, and trains on those fragments to obtain a vehicle following strategy model. The vehicle following system is simple in structure. A reward function is learned from the driver's historical vehicle following data through the deep inverse reinforcement learning method, and the driver's vehicle following strategy is solved through the reward function and the reinforcement learning method. The obtained vehicle following model can simulate the driving styles of different drivers, capture the driver's preferences in the vehicle following process, and generate human-like vehicle following behavior.

Owner:CHANGAN UNIV

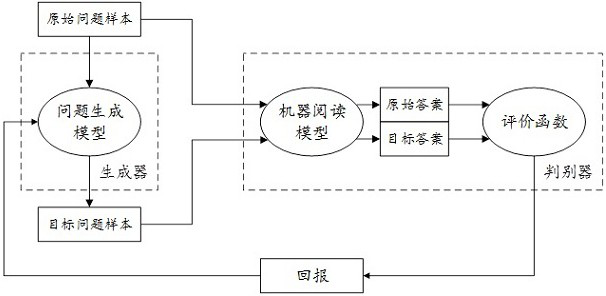

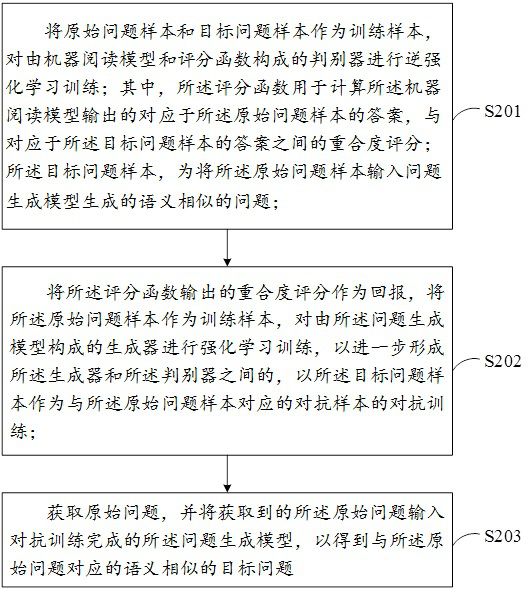

Problem generation method and device

Active | CN111737439A | Reduce consumption | Accelerated training | Digital data information retrieval | Machine learning | Discriminator | Question generation

The invention discloses a problem generation method and device, and the method comprises the steps: taking an original problem sample and a target problem sample as training samples, and carrying out the inverse reinforcement learning training of a discriminator composed of a machine reading model and a scoring function, wherein the target problem sample is a semantic similarity problem generated by inputting the original problem sample into a problem generation model; and taking the overlap ratio score output by the scoring function as a return; taking the original problem sample as a training sample, and carrying out reinforcement learning training on a generator formed by the problem generation model, so as to further form adversarial training between the generator and the discriminator and taking the target problem sample as an adversarial sample corresponding to the original problem sample, wherein the problem generation model after adversarial training can be used for generating target problems with similar semantics.

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD

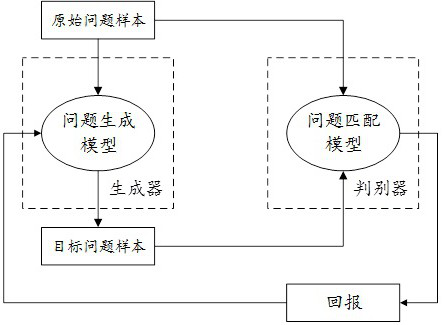

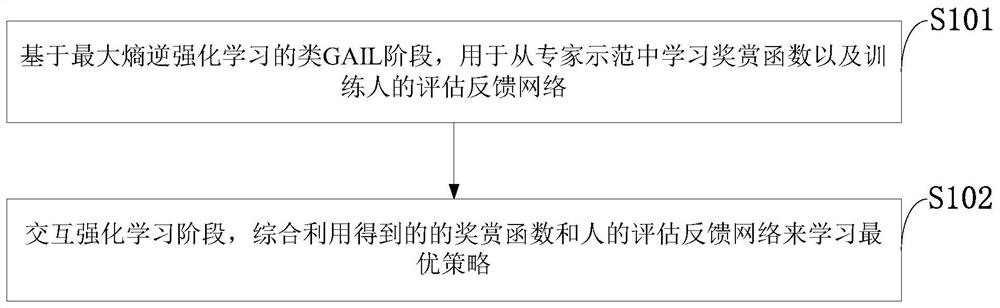

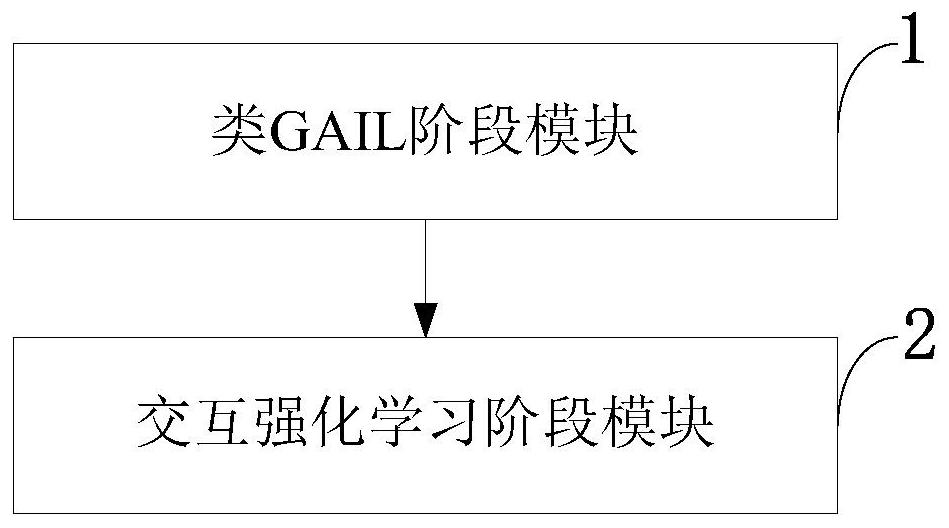

Generative adversarial interactive imitation learning method and system, storage medium and application

Pending | CN113379027A | Improve stability | Easy to learn | Neural architectures | Neural learning methods | Interactive Learning | Imitation learning

The invention belongs to the technical field of artificial intelligence, and discloses a generative adversarial interactive imitation learning method and system, a storage medium and an application. The method combines generative adversarial imitation learning with an interactive learning framework to form generative adversarial interactive imitation learning (GA2IL). GA2IL is composed of two stages: (1) a GAIL-like stage based on maximum entropy inverse reinforcement learning; and (2) an interactive reinforcement learning stage. Whether the given expert demonstration is optimal or suboptimal, the GA2IL agent can surpass the performance of the expert demonstration and obtain an optimal or near-optimal strategy. The stability of the strategy can be improved, and the GA2IL agent can be extended to large-scale complex tasks.

Owner:OCEAN UNIV OF CHINA

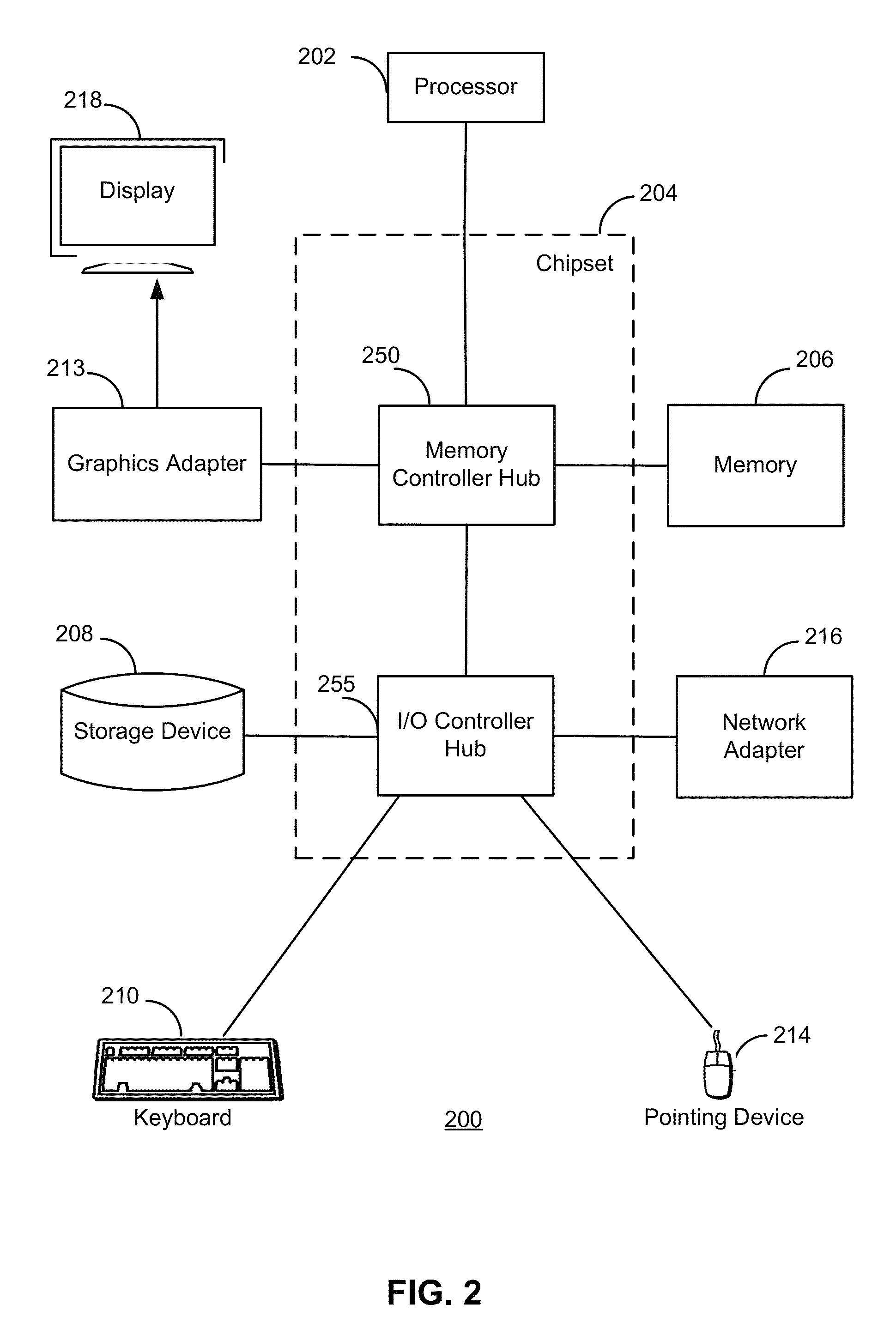

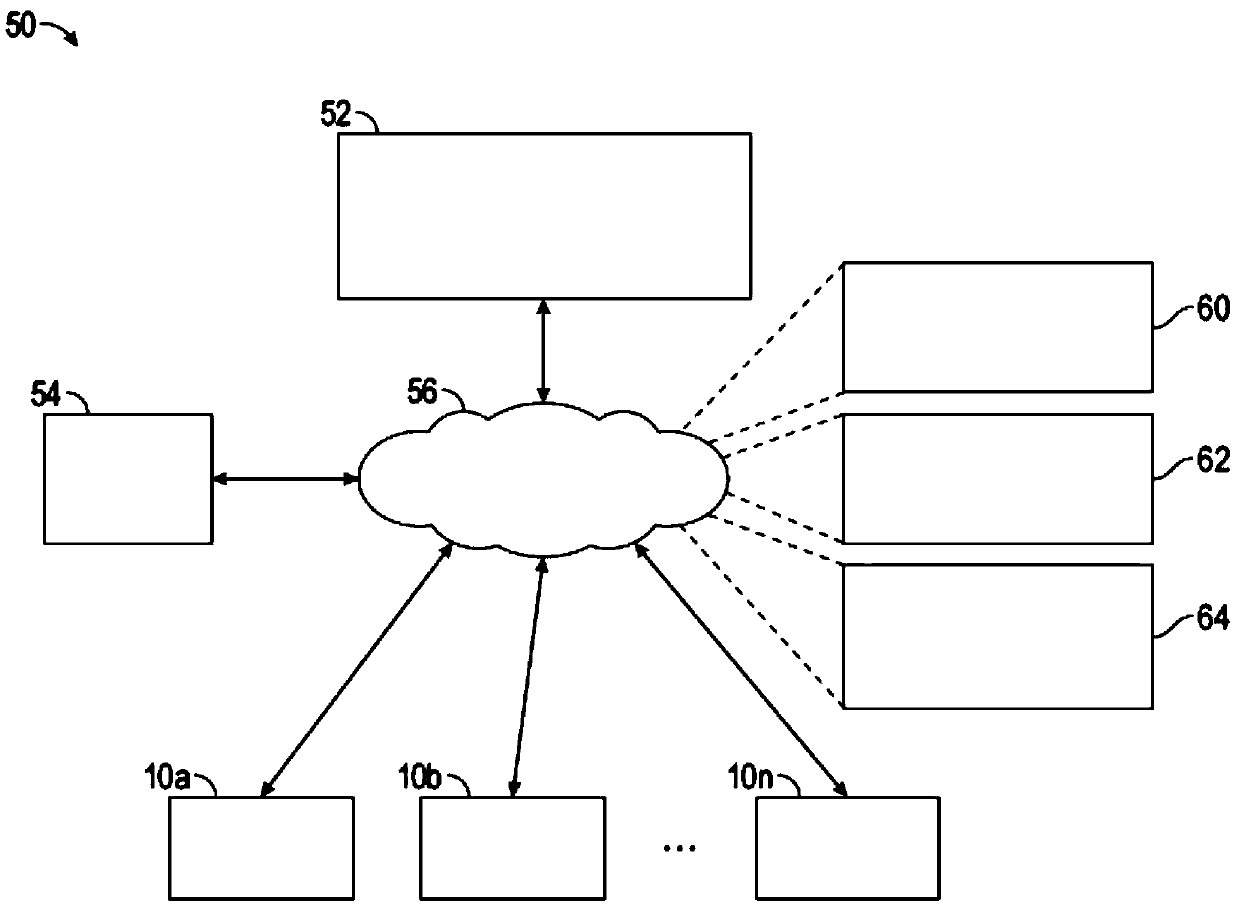

Autonomous vehicle operations with automated assistance

Systems and methods are provided for controlling a vehicle. In one embodiment, a vehicle includes: one or more onboard sensing devices, one or more onboard actuators, and a controller that, by a processor, obtains sensor data from the one or more sensing devices, identifies an assistance event based at least in part on the sensor data, determines an automated response to the assistance event based at least in part on the sensor data and an autonomous assistance policy, and autonomously operates the one or more actuators onboard the vehicle in accordance with the automated response. In exemplary embodiments, the autonomous assistance policy is determined using inverse reinforcement learning to optimize a reward function and mimic a remote human assistor.

Owner:GM GLOBAL TECH OPERATIONS LLC

A method of interactive reinforcement learning from demonstration and human assessment feedback

The invention discloses a method of interactive reinforcement learning from demonstration and human assessment feedback. IRL-TAMER is formed by combining inverse reinforcement learning (IRL) with the TAMER framework. The method has the beneficial effect that the intelligent agent can effectively learn from human rewards and demonstration through the learning method.

Owner:OCEAN UNIV OF CHINA

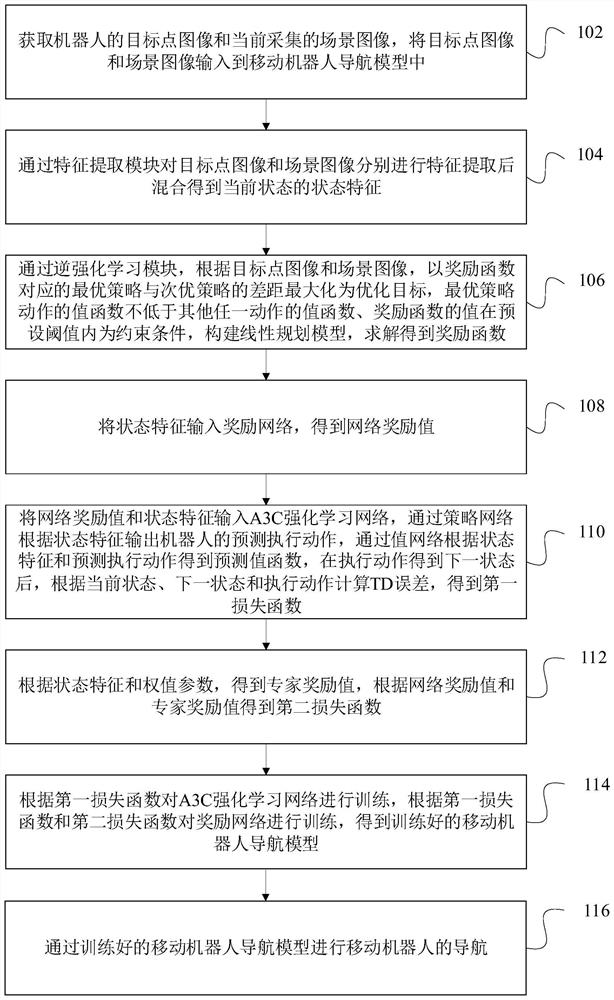

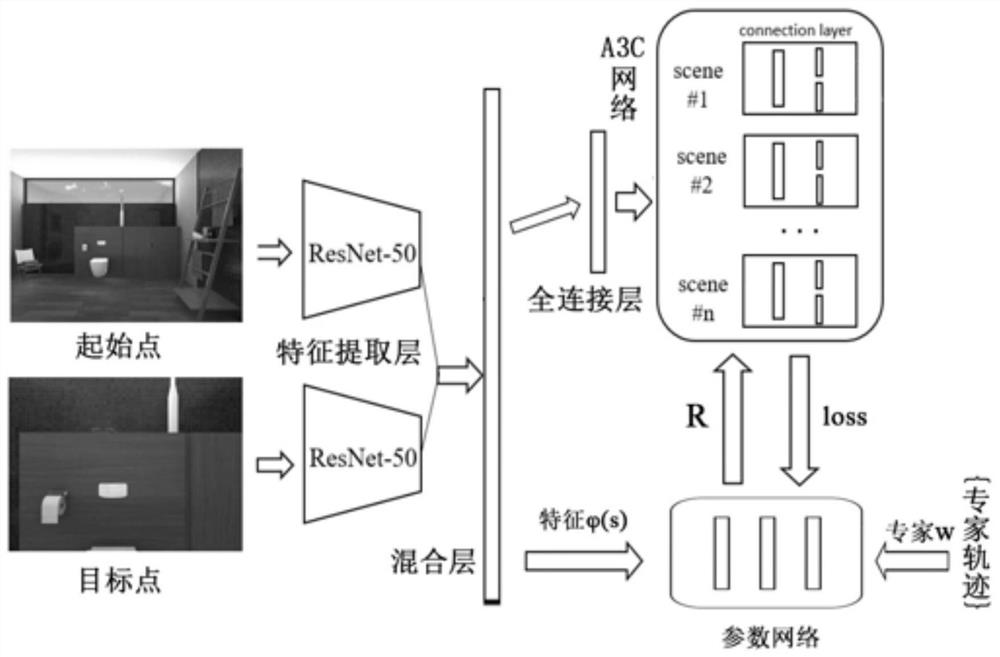

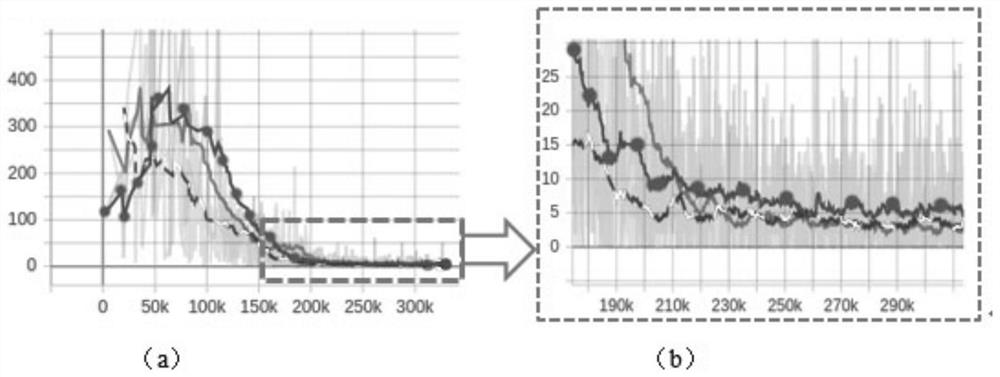

Mobile robot navigation method and device, computer equipment and storage medium

Active | CN113609786A | Small amount of calculation | Improve accuracy | Internal combustion piston engines | Design optimisation/simulation | Feature extraction | Simulation

The invention relates to a mobile robot navigation method and device, computer equipment and a storage medium. The method comprises the following steps of: extracting features of a target point image and a scene image through a feature extraction module to obtain state features of a current state; resolving a preset expert track through an inverse reinforcement learning module to obtain a reward function; outputting a predicted execution action of a robot through a strategy network in an A3C reinforcement learning network, obtaining a predicted value function through a value network, and after the execution action obtains a next state, calculating a TD error according to the current state, the next state and the execution action to obtain a first loss function; obtaining an expert reward value according to the state features and a weight parameter, and obtaining a second loss function according to the network reward value and the expert reward value; and training the A3C reinforcement learning network and a reward network to obtain a trained mobile robot navigation model for navigation. According to the invention, the accuracy and efficiency of indoor navigation of the robot can be improved, and the generalization ability is high.

Owner:NAT UNIV OF DEFENSE TECH
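The navigation abstract above derives a first loss from a TD error and a second loss from the gap between a network reward and an expert reward computed from state features and a weight parameter. A minimal sketch of those two quantities follows. The squared-error form of both losses, the linear expert reward, the use of the network reward inside the TD target, and all function names are assumptions for illustration; the abstract does not give the exact loss definitions.

```python
import numpy as np


def td_error(reward, v_s, v_next, gamma=0.99, done=False):
    """One-step temporal-difference error: r + gamma * V(s') - V(s)."""
    target = reward + (0.0 if done else gamma * v_next)
    return target - v_s


def expert_reward(features, weights):
    """Assumed linear expert reward: dot product of weights and state features."""
    return float(np.dot(weights, features))


def navigation_losses(network_reward, features, weights, v_s, v_next, gamma=0.99):
    """Return (first_loss, second_loss) under the squared-error assumption."""
    delta = td_error(network_reward, v_s, v_next, gamma)
    first_loss = delta ** 2
    second_loss = (network_reward - expert_reward(features, weights)) ** 2
    return first_loss, second_loss
```

In an actor-critic setup such as A3C, the TD error would typically also scale the policy gradient; this sketch covers only the two scalar losses named in the abstract.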

Inverse reinforcement learning by density ratio estimation

Active | US10896382B2 | Efficient execution | Effectively and efficiently perform | Probabilistic networks | Machine learning | Density ratio estimation | State variable

A method of inverse reinforcement learning for estimating cost and value functions of behaviors of a subject includes acquiring data representing changes in state variables that define the behaviors of the subject; applying a modified Bellman equation given by Eq. (1) to the acquired data:

$$
q(x) + g V(y) - V(x) = -\ln\frac{\pi(y \mid x)}{p(y \mid x)} \qquad (1)
$$

where q(x) and V(x) denote a cost function and a value function, respectively, at state x, g represents a discount factor, and p(y|x) and π(y|x) denote state transition probabilities before and after learning, respectively; estimating a density ratio π(y|x) / p(y|x) in Eq. (1); estimating q(x) and V(x) in Eq. (1) using the least squares method in accordance with the estimated density ratio π(y|x) / p(y|x), and outputting the estimated q(x) and V(x).

Owner:OKINAWA INST OF SCI & TECH SCHOOL

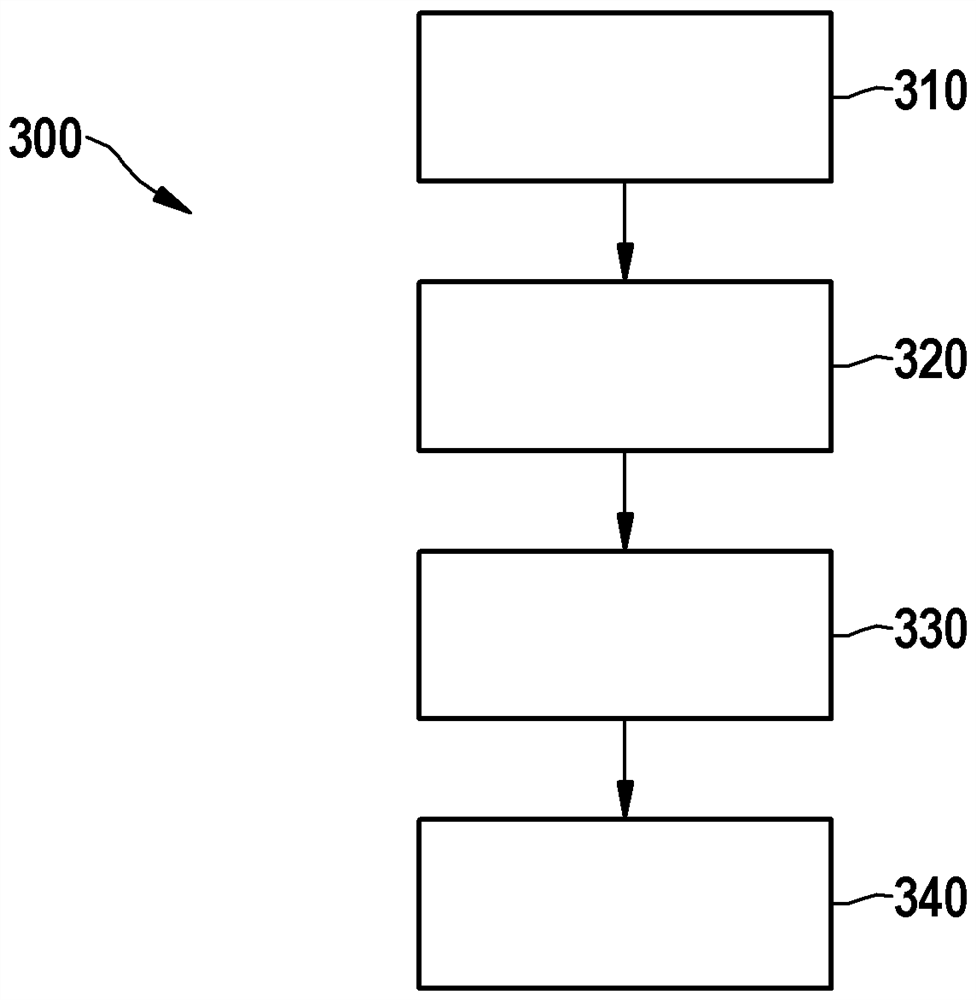

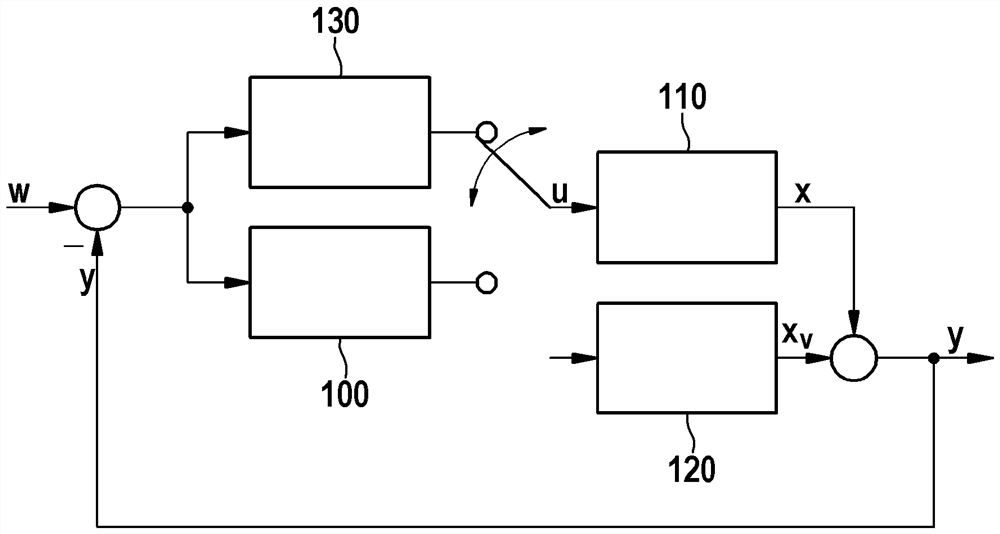

Method for determining control parameters for a control system

The invention relates to a method (200) for using machine learning to determine control parameters (θj) for a control system (100), in particular a control system (100) of a motor vehicle (110), in particular a control system (100) for controlling a driving operation of the motor vehicle (110). The method (200) includes: providing (210) travel trajectories (D); deriving (220) reward functions (Rj) from the travel trajectories (D), using an inverse reinforcement learning method; deriving (230) driver type-specific clusters (Cj) based on the reward functions (Rj); determining (240) control parameters (θj) for a particular driver type-specific cluster (Cj).

Owner:ROBERT BOSCH GMBH
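The clustering step in the abstract above groups learned reward functions into driver types. One possible sketch, assuming each reward function Rj is summarized by a weight vector and that plain k-means is used for the grouping, is shown below. Both the vector representation and k-means are assumptions swapped in for illustration; the abstract does not name a clustering algorithm.

```python
import numpy as np


def cluster_reward_weights(W, k, n_iter=50, seed=0):
    """Plain k-means over reward weight vectors.

    W: (n_drivers, n_features) array, one assumed reward weight vector per trajectory set.
    Returns (labels, centers): cluster index per row of W and the k cluster centroids.
    """
    W = np.asarray(W, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise the centroids with k distinct rows of W.
    centers = W[rng.choice(len(W), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Assign each weight vector to its nearest centroid.
        distances = np.linalg.norm(W[:, None, :] - centers[None, :, :], axis=2)
        labels = distances.argmin(axis=1)
        # Move each centroid to the mean of its members (skip empty clusters).
        for j in range(k):
            members = W[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return labels, centers
```

A cluster centroid could then serve as the representative reward for one driver type when tuning that type's control parameters, though how the parameters are derived from a cluster is not described in the abstract.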

Direct inverse reinforcement learning with density ratio estimation

Active | US10896383B2 | Efficient execution | Effectively and efficiently perform | Character and pattern recognition | Probabilistic networks | Density ratio estimation | State variable

A method of inverse reinforcement learning for estimating reward and value functions of behaviors of a subject includes: acquiring data representing changes in state variables that define the behaviors of the subject; applying a modified Bellman equation given by Eq. (1) to the acquired data:

$$
\begin{aligned}
r(x) + \gamma V(y) - V(x) &= \ln\frac{\pi(y \mid x)}{b(y \mid x)} && (1) \\
&= \ln\frac{\pi(x, y)}{b(x, y)} - \ln\frac{\pi(x)}{b(x)}, && (2)
\end{aligned}
$$

where r(x) and V(x) denote a reward function and a value function, respectively, at state x, and γ represents a discount factor, and b(y|x) and π(y|x) denote state transition probabilities before and after learning, respectively; estimating a logarithm of the density ratio π(x) / b(x) in Eq. (2); estimating r(x) and V(x) in Eq. (2) from the result of estimating a log of the density ratio π(x,y) / b(x,y); and outputting the estimated r(x) and V(x).

Owner:OKINAWA INST OF SCI & TECH SCHOOL

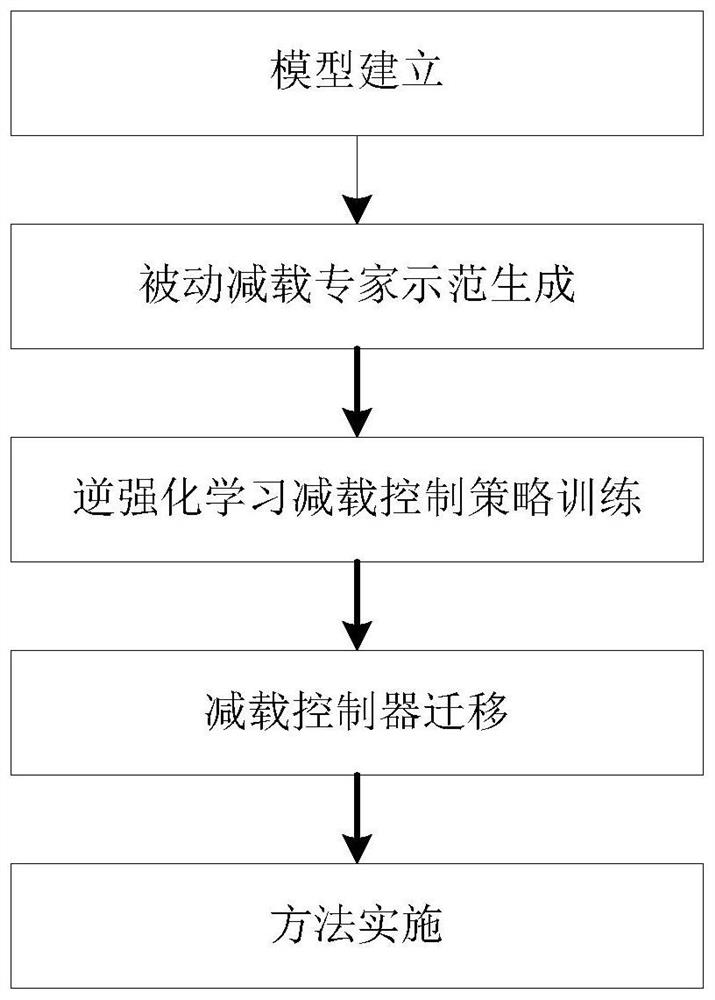

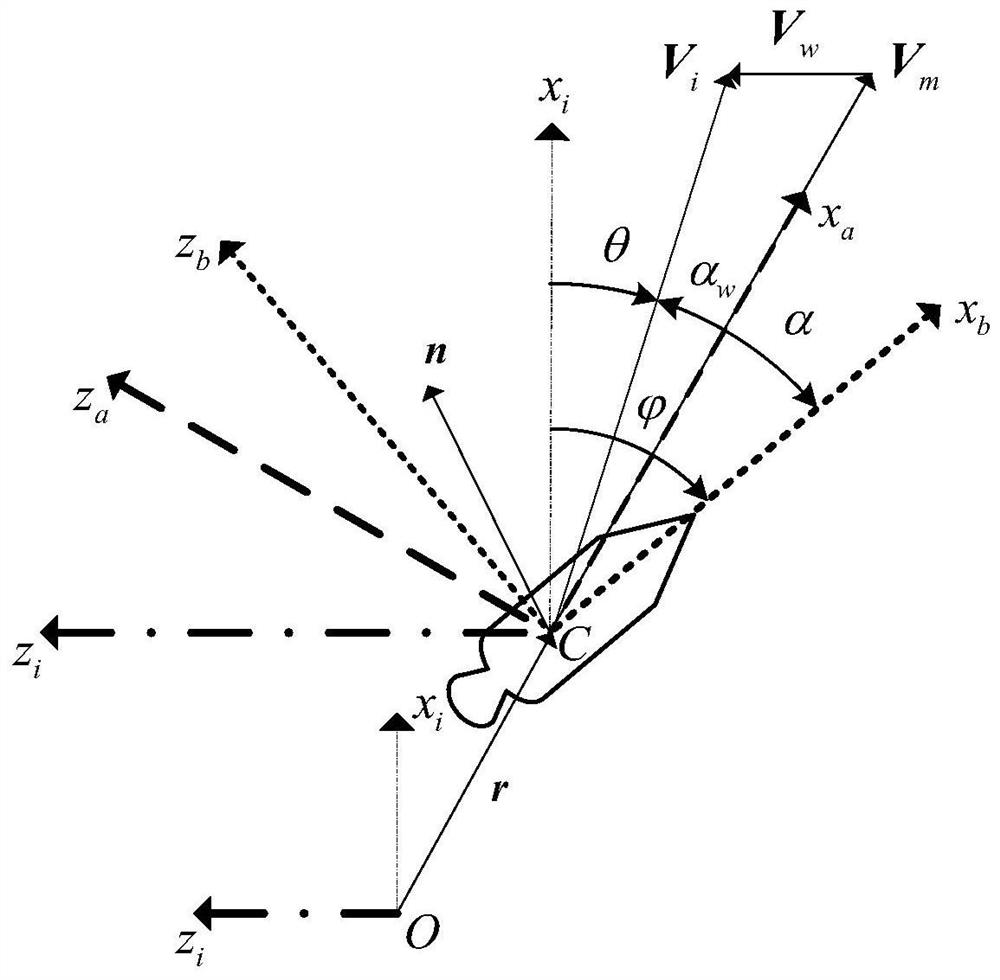

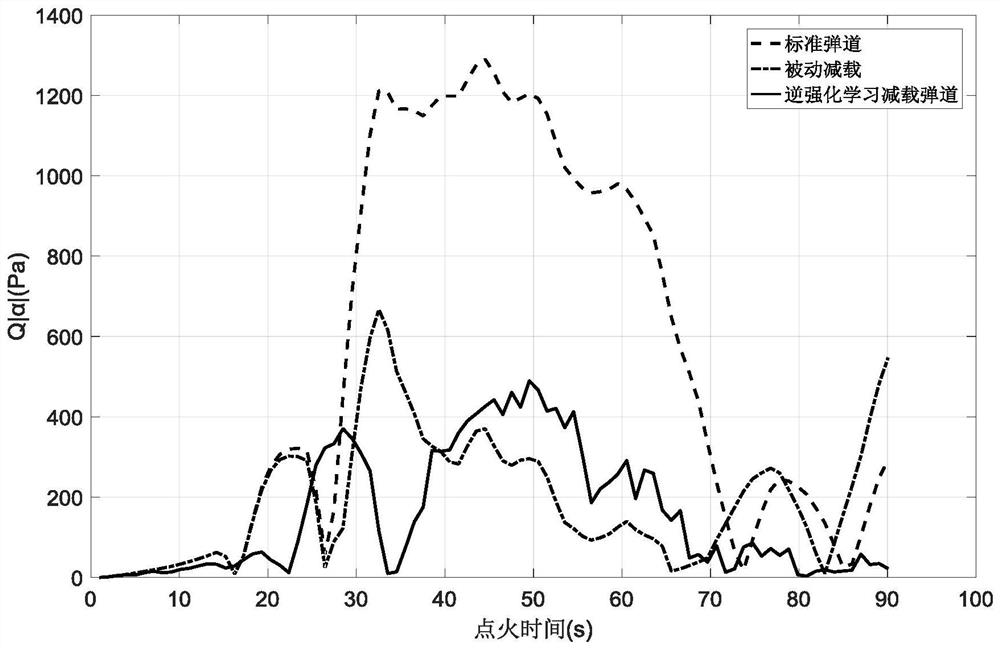

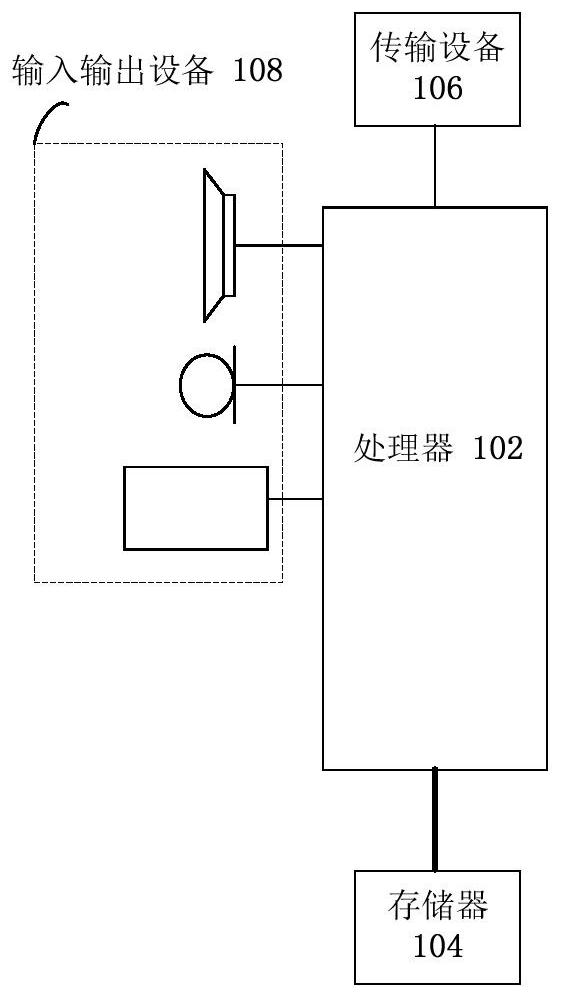

Carrier rocket load shedding control method based on inverse reinforcement learning

Pending | CN113504723A | Resolve dependencies | Solve the accuracy problem | Adaptive control | Sustainable transportation | Load Shedding | Guidance control

The invention provides a carrier rocket load shedding control method based on inverse reinforcement learning. The method comprises the following steps: 1, establishing a carrier rocket dynamic model considering a wind field condition; 2, generating a passive load shedding expert demonstration; 3, training an inverse reinforcement learning load shedding control strategy; and 4, migrating the load shedding controller, that is, the load shedding control strategy network parameters obtained through training are fixed, a closed loop is formed through the input and output interfaces of the carrier rocket dynamics, and the trained network serves as the load shedding controller. Through the above steps, the method can achieve load shedding control of the carrier rocket, solves the problem that the existing method depends on precise wind field information and cannot guarantee the guidance precision, and achieves better stability and universality. The guidance control method is scientific and good in manufacturability and has wide application and popularization value.

Owner:BEIHANG UNIV

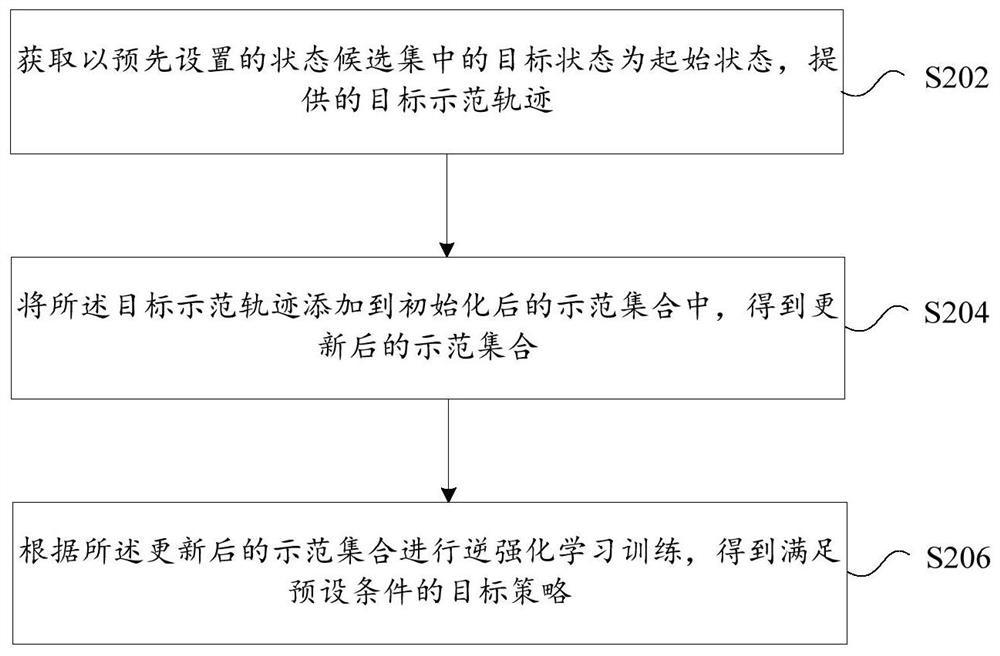

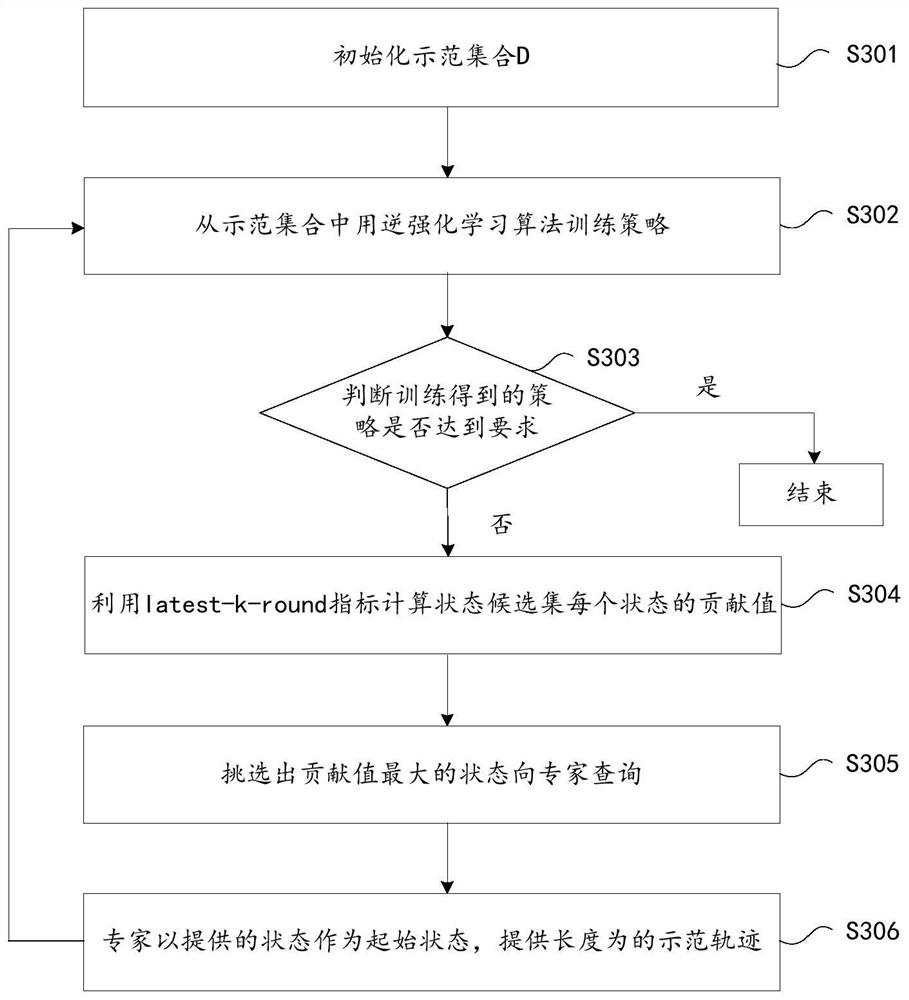

Inverse reinforcement learning processing method and device, storage medium and electronic device

The embodiment of the invention provides an inverse reinforcement learning processing method and device, a storage medium and an electronic device, and the method comprises the steps of obtaining a target demonstration track which is provided by taking a target state in a preset state candidate set as an initial state; adding the target demonstration track into the initialized demonstration set to obtain an updated demonstration set; and performing inverse reinforcement learning training according to the updated demonstration set to obtain a target strategy meeting a preset condition. The invention addresses the high cost, in related technologies, of collecting a large number of expert demonstrations for inverse reinforcement learning: the most valuable state (the target state with the maximum contribution value) is selected from the state candidate set as the initial state; a demonstration track taking the target state as its initial state is obtained; and the demonstration set is updated and a strategy is trained on the updated demonstration set, thereby reducing the demonstration cost.

Owner:ZTE CORP +1

Method and system for interactive imitation learning in video games

Owner:UNITY IPR APS

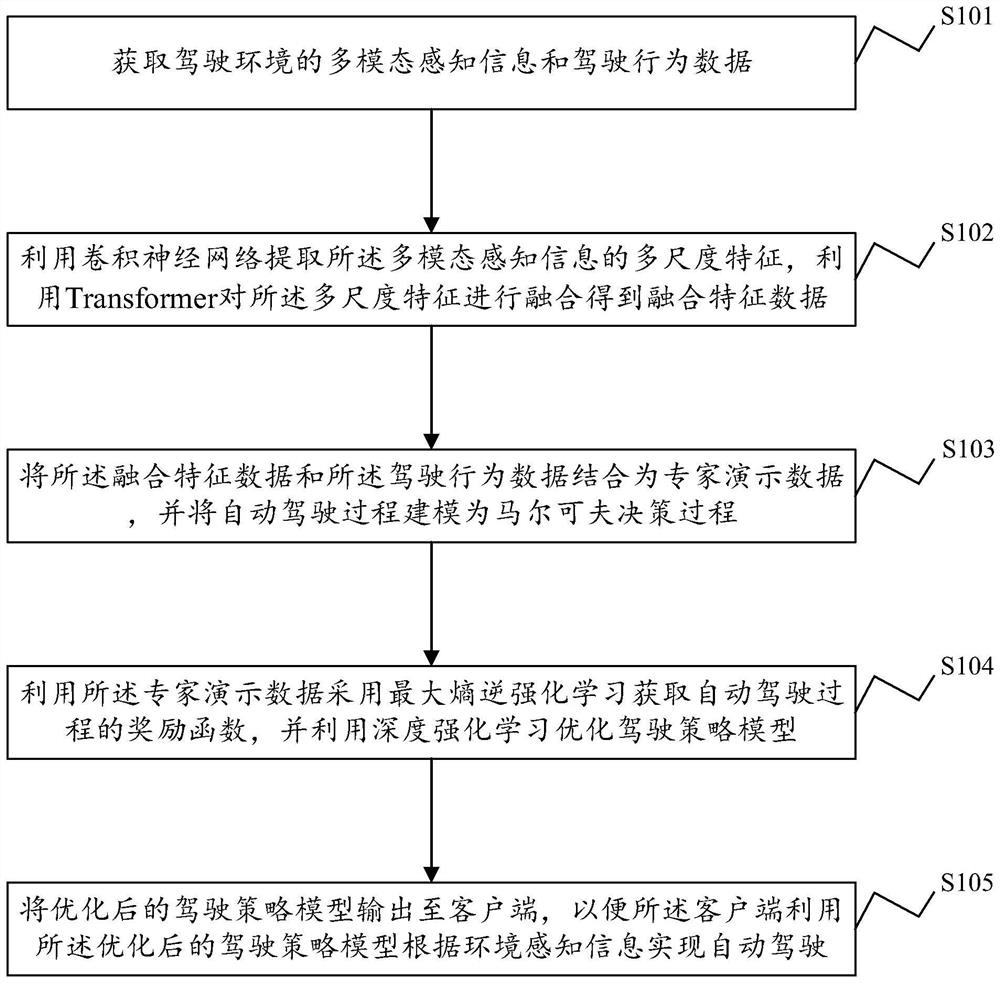

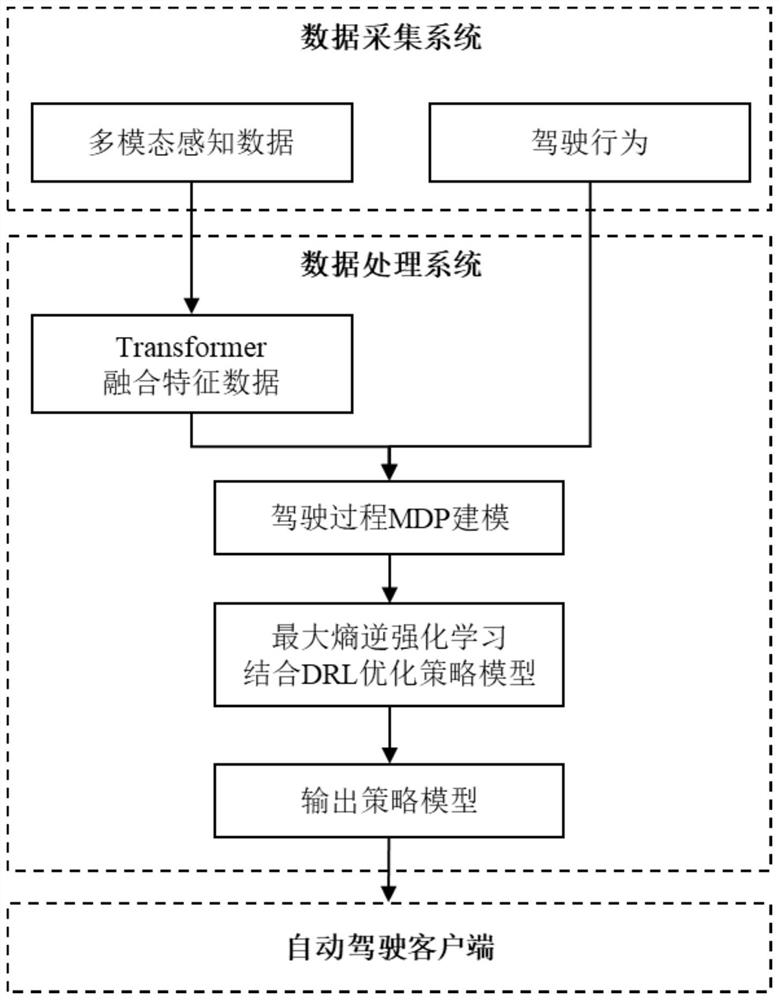

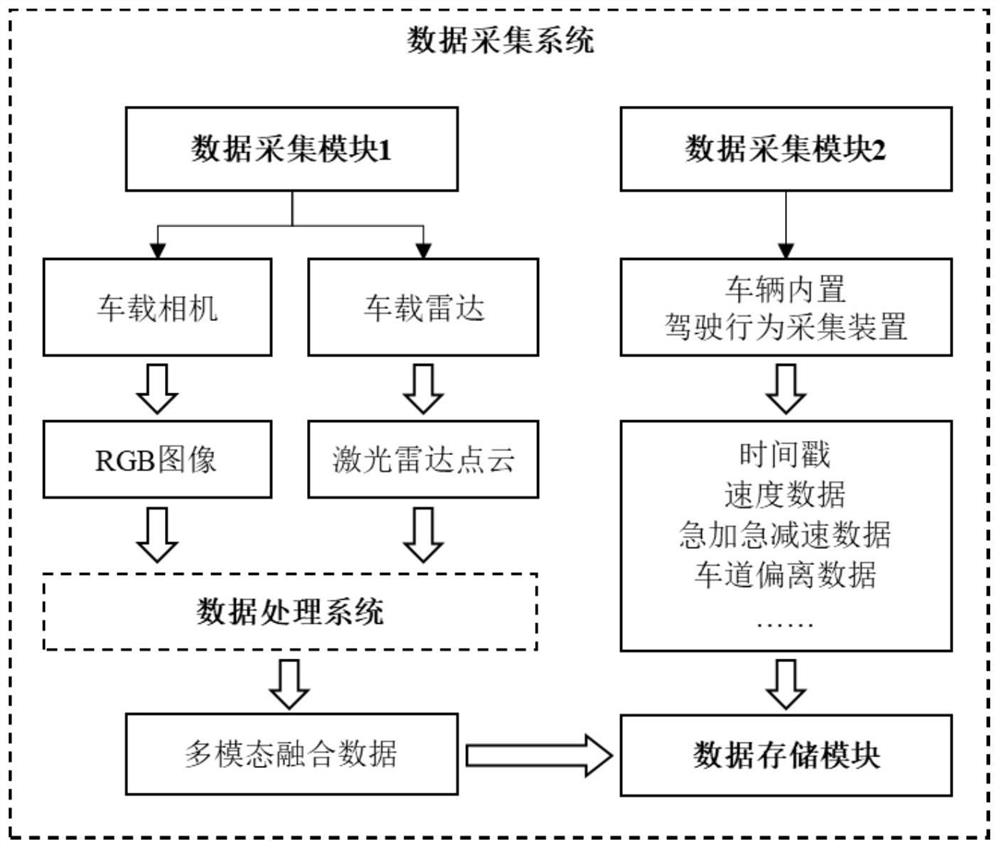

Automatic driving method and device, electronic equipment and storage medium

Active | CN114194211A | Realize autonomous driving | Avoid loss | Control devices | Internal combustion piston engines | Multi modality | Inverse reinforcement learning

The invention discloses an automatic driving method and device, electronic equipment and a computer readable storage medium. The method comprises the steps of: acquiring multi-modal perception information and driving behavior data of a driving environment; extracting multi-scale features of the multi-modal perception information by using a convolutional neural network, and fusing the multi-scale features by using a Transformer to obtain fused feature data; combining the fused feature data and the driving behavior data into expert demonstration data, and modeling the automatic driving process as a Markov decision process; obtaining a reward function of the automatic driving process by using the expert demonstration data and maximum entropy inverse reinforcement learning, and optimizing a driving strategy model by using deep reinforcement learning; and outputting the optimized driving strategy model to the client, so that the client realizes automatic driving by using the optimized driving strategy model according to the environmental perception information. The reliability of automatic driving perception data is ensured, and the reasonability of decision planning in the automatic driving process is improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

Method and system for interactive imitation learning in video games

In example embodiments, a method of interactive imitation learning is disclosed. An input is received from an input device. The input includes data describing a first set of example actions defining a behavior for a virtual character. Inverse reinforcement learning is used to estimate a reward function for the set of example actions. The reward function and the set of example actions are used as input to a reinforcement learning model to train a machine learning agent to mimic the behavior in a training environment. Based on a measure of failure of the training of the machine learning agent reaching a threshold, the training of the machine learning agent is paused to request a second set of example actions from the input device. The second set of example actions is used in addition to the first set of example actions to estimate a new reward function.

Owner:UNITY TECH APS

Server-free computing resource allocation method based on maximum entropy inverse reinforcement learning

Pending | CN114416343A | Benefit maximization | Resource allocation | Machine learning | Online learning | Data mining

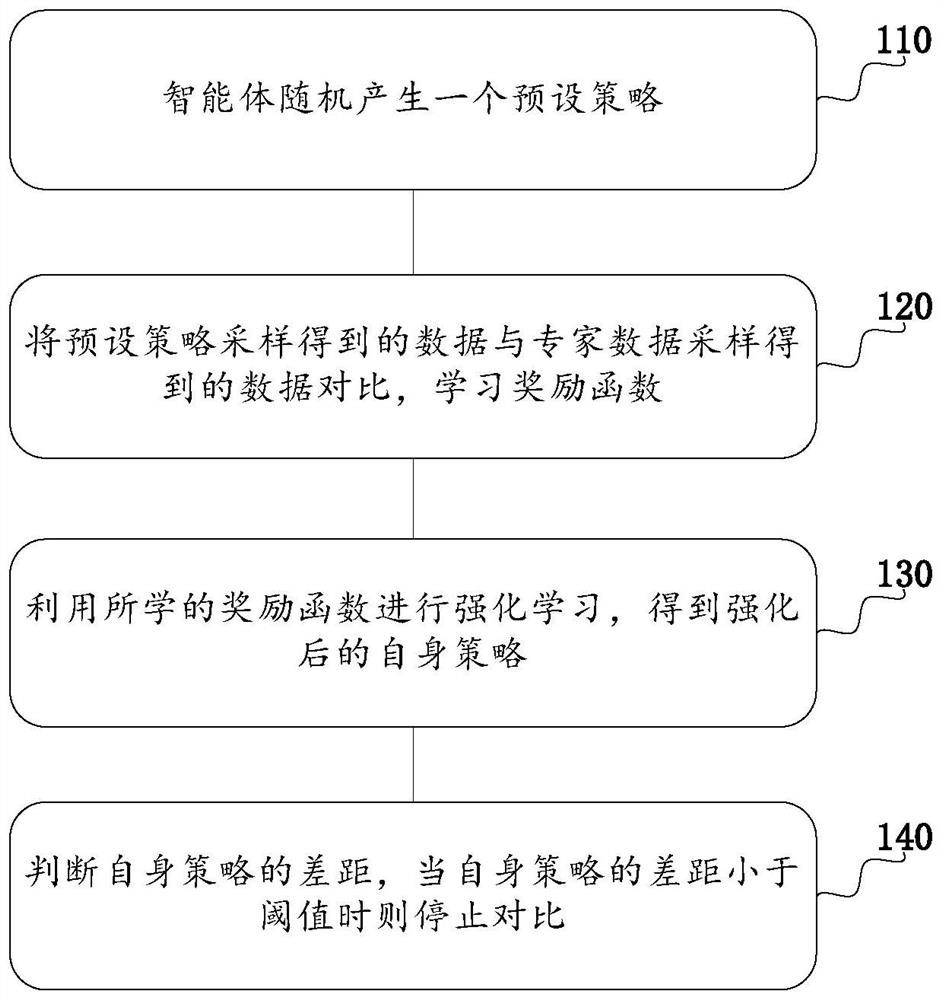

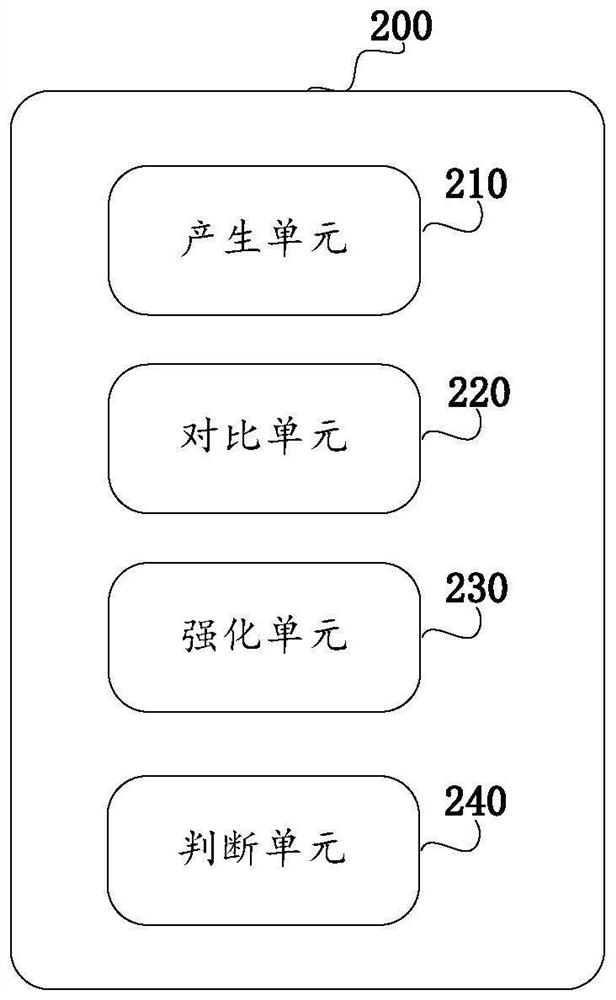

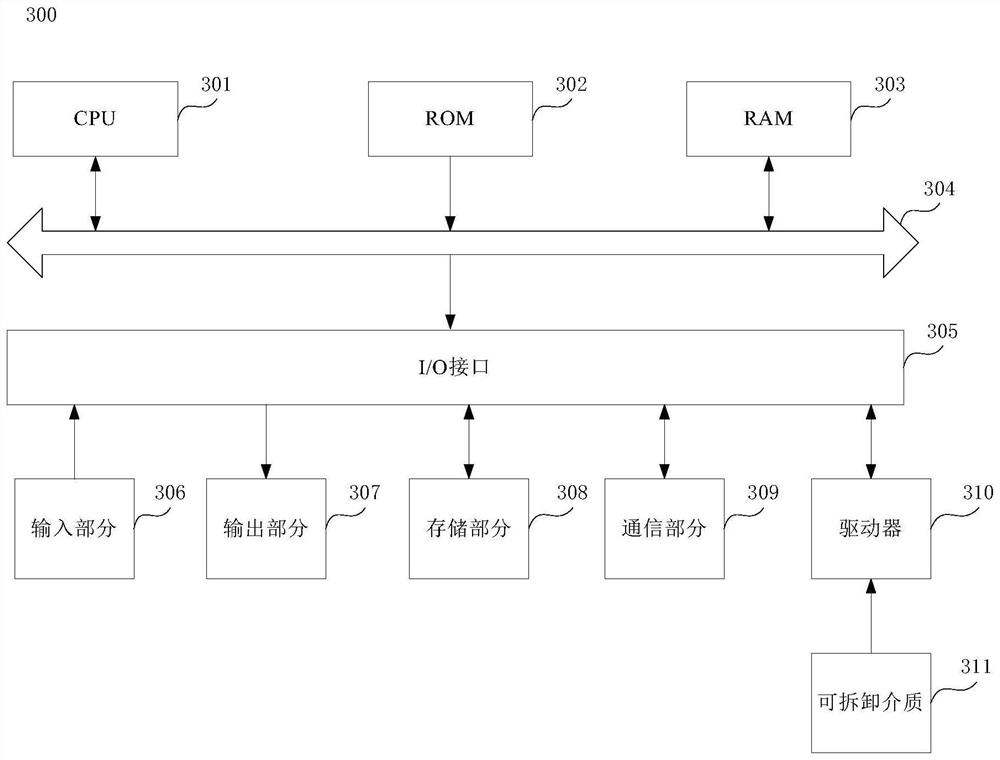

The invention discloses a server-free computing resource allocation method, device and equipment based on maximum entropy inverse reinforcement learning, and a storage medium thereof. The method comprises the steps of: an intelligent agent randomly generating a preset strategy; comparing the data obtained by sampling the preset strategy with the data obtained by sampling the expert data, and learning a reward function; carrying out reinforcement learning by utilizing the learned reward function to obtain a reinforced self-strategy; and judging the difference between its own strategies, and stopping the comparison when that difference is smaller than a threshold value. Once basic training is completed, the scheme is a completely online learning method, and no further training is needed. The parameters can be dynamically adjusted, and the space of price and income is fully explored, so that the benefits of the user and the platform are maximized.

Owner:SHENZHEN INST OF ADVANCED TECH
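The loop in the abstract above (start from a random strategy, compare it with expert data to update a reward function, re-derive the strategy, stop when the strategy changes by less than a threshold) can be sketched in a deliberately simplified one-step setting. Here each action has a feature vector, the reward is linear in those features, and the maximum entropy policy is a softmax over rewards. The one-step setting, the exact (non-sampled) policy feature expectations, and all names are assumptions for illustration and not the patent's resource-allocation model.

```python
import numpy as np


def softmax(z):
    z = z - z.max()          # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()


def maxent_irl_one_step(features, expert_actions, lr=0.5,
                        threshold=1e-6, max_iter=10_000, seed=0):
    """Maximum entropy IRL for a single-decision problem.

    features: (n_actions, n_features) array of action features.
    expert_actions: 1-D integer array of actions chosen by the expert.
    Returns (theta, policy): reward weights and the induced softmax policy.
    """
    rng = np.random.default_rng(seed)
    theta = rng.normal(scale=0.1, size=features.shape[1])  # random initial reward
    mu_expert = features[expert_actions].mean(axis=0)      # expert feature expectation
    policy = softmax(features @ theta)
    for _ in range(max_iter):
        mu_policy = policy @ features                       # policy feature expectation
        theta = theta + lr * (mu_expert - mu_policy)        # log-likelihood gradient step
        new_policy = softmax(features @ theta)
        change = np.abs(new_policy - policy).max()
        policy = new_policy
        if change < threshold:                              # strategy stopped changing
            break
    return theta, policy
```

With one-hot action features, matching feature expectations is the same as matching the expert's action frequencies, which gives a simple sanity check for the sketch.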

Direct Inverse Reinforcement Learning Using Density Ratio Estimation

Active | CN108885721B | Effective and Efficient Inverse Reinforcement Learning | Mathematical models | Machine learning | Density ratio estimation | State variable

A method of inverse reinforcement learning for estimating the reward and value functions of the behavior of an object, the method comprising: obtaining data representing changes in state variables that define the behavior of the object; applying the modified Bellman equation given by formula (1) to the acquired data, where r(x) and V(x) represent the reward function and the value function in state x, respectively, γ represents the discount factor, and b(y|x) and π(y|x) represent the state transition probabilities before and after learning, respectively; estimating the logarithm of the density ratio π(x) / b(x) in formula (2); estimating r(x) and V(x) in formula (2) from the estimated density ratio π(x,y) / b(x,y); and outputting the estimated r(x) and V(x).

Owner:OKINAWA INST OF SCI & TECH SCHOOL
