92 results about "Learning agent" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

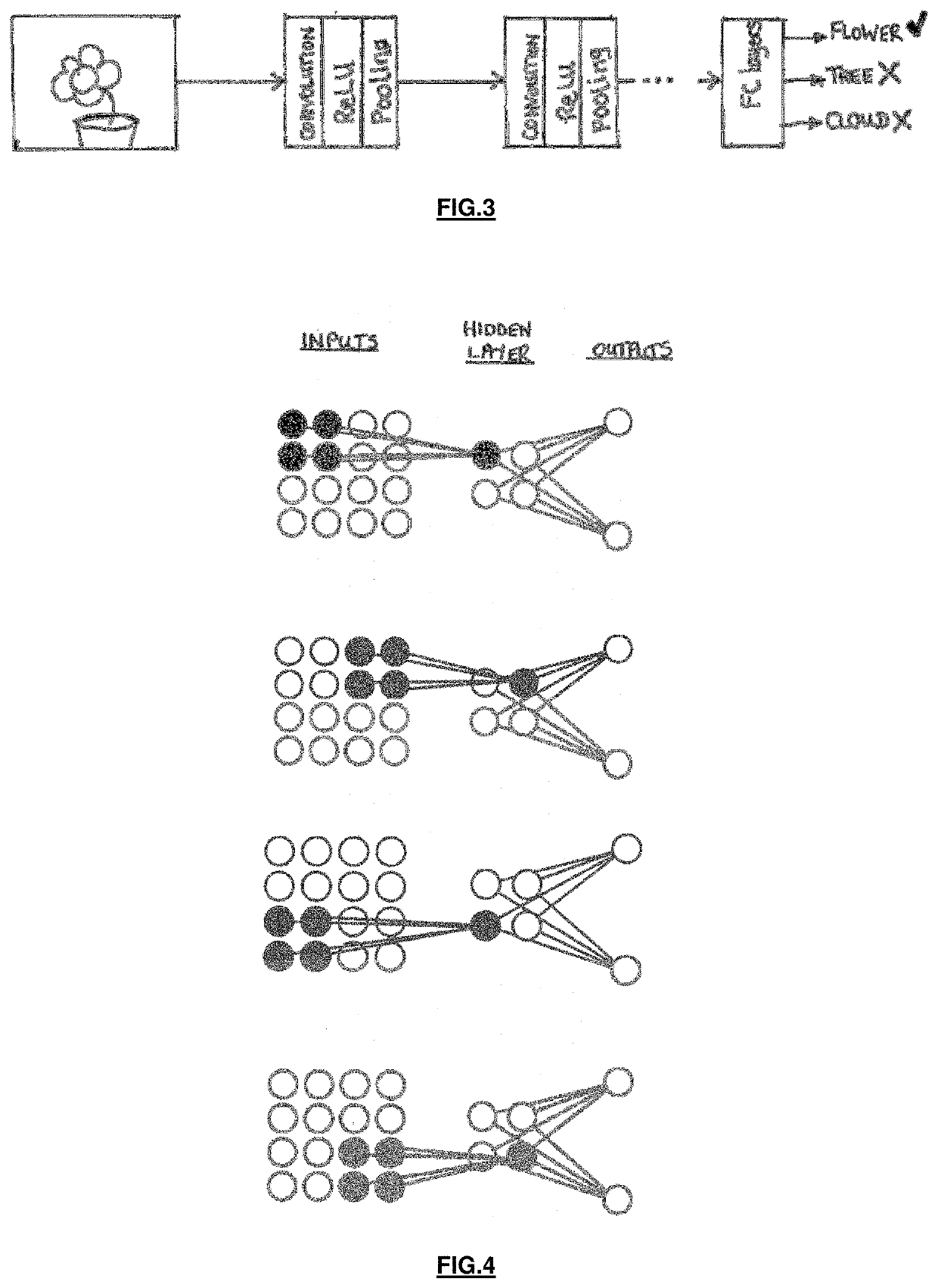

A deep learning agent is any autonomous or semi-autonomous AI-driven system that uses deep learning to perform, and improve at, its tasks. Examples of systems (agents) that can use deep learning include chatbots, self-driving cars, expert systems, facial recognition programs, and robots.

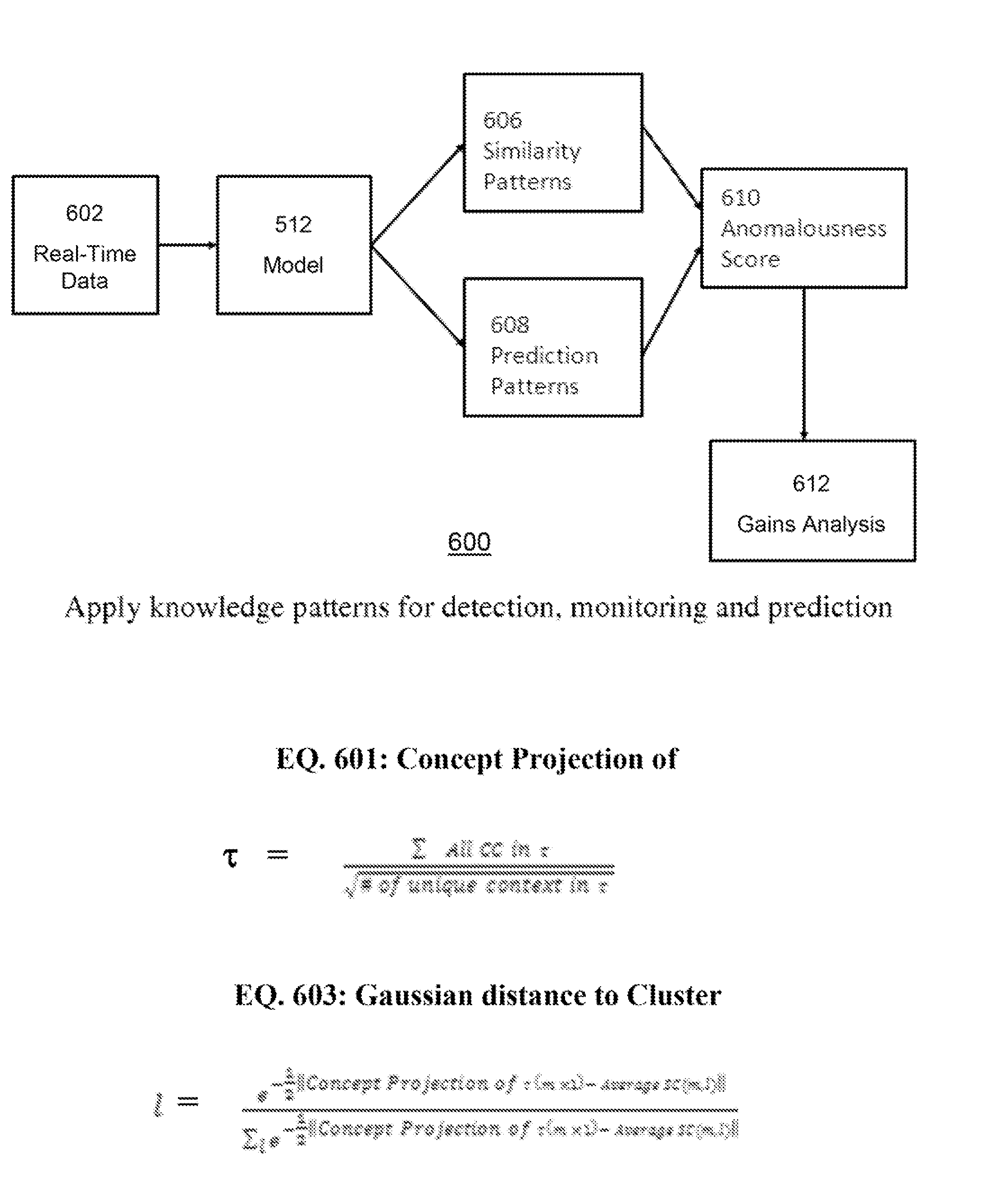

System and method for knowledge pattern search from networked agents

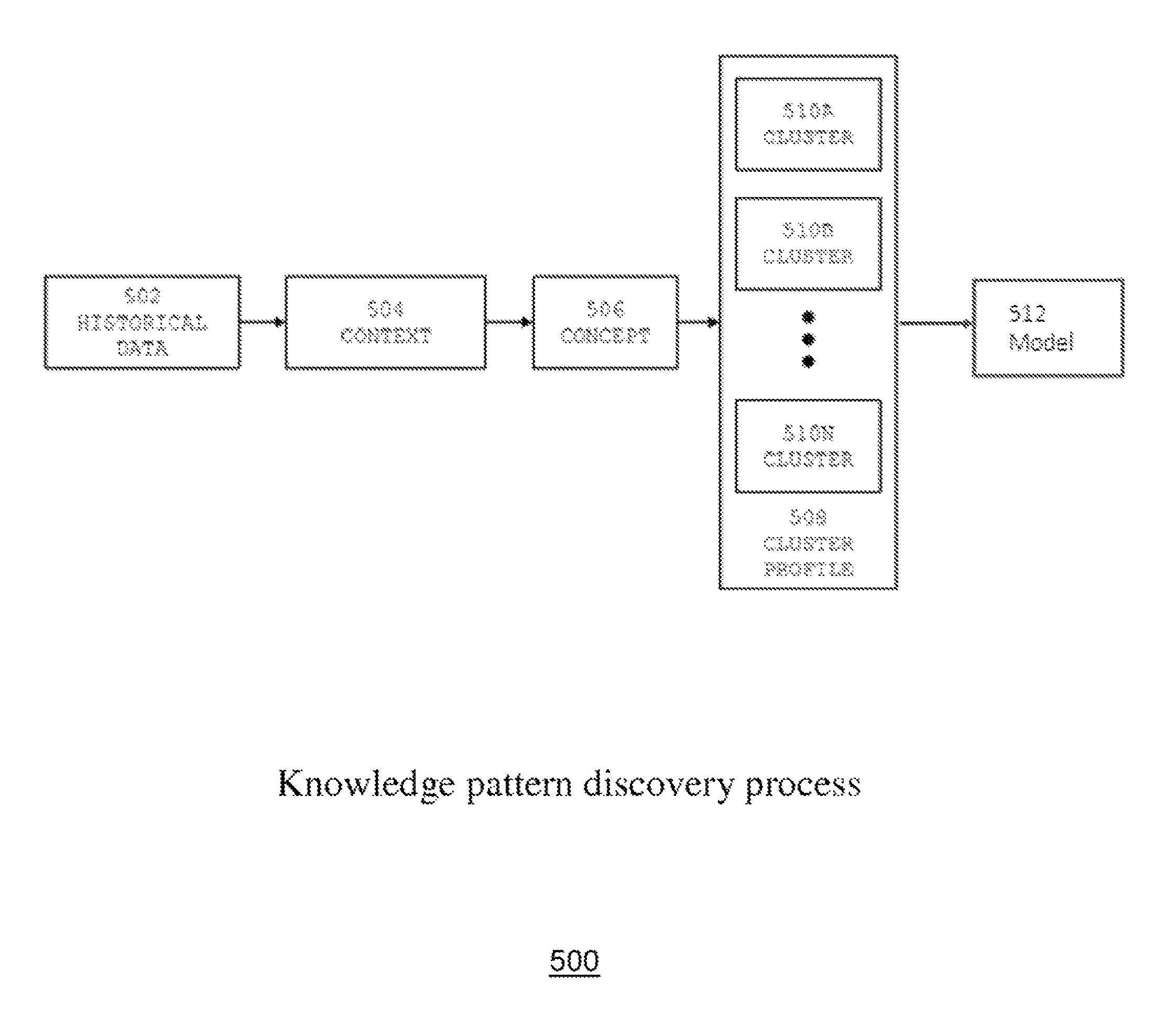

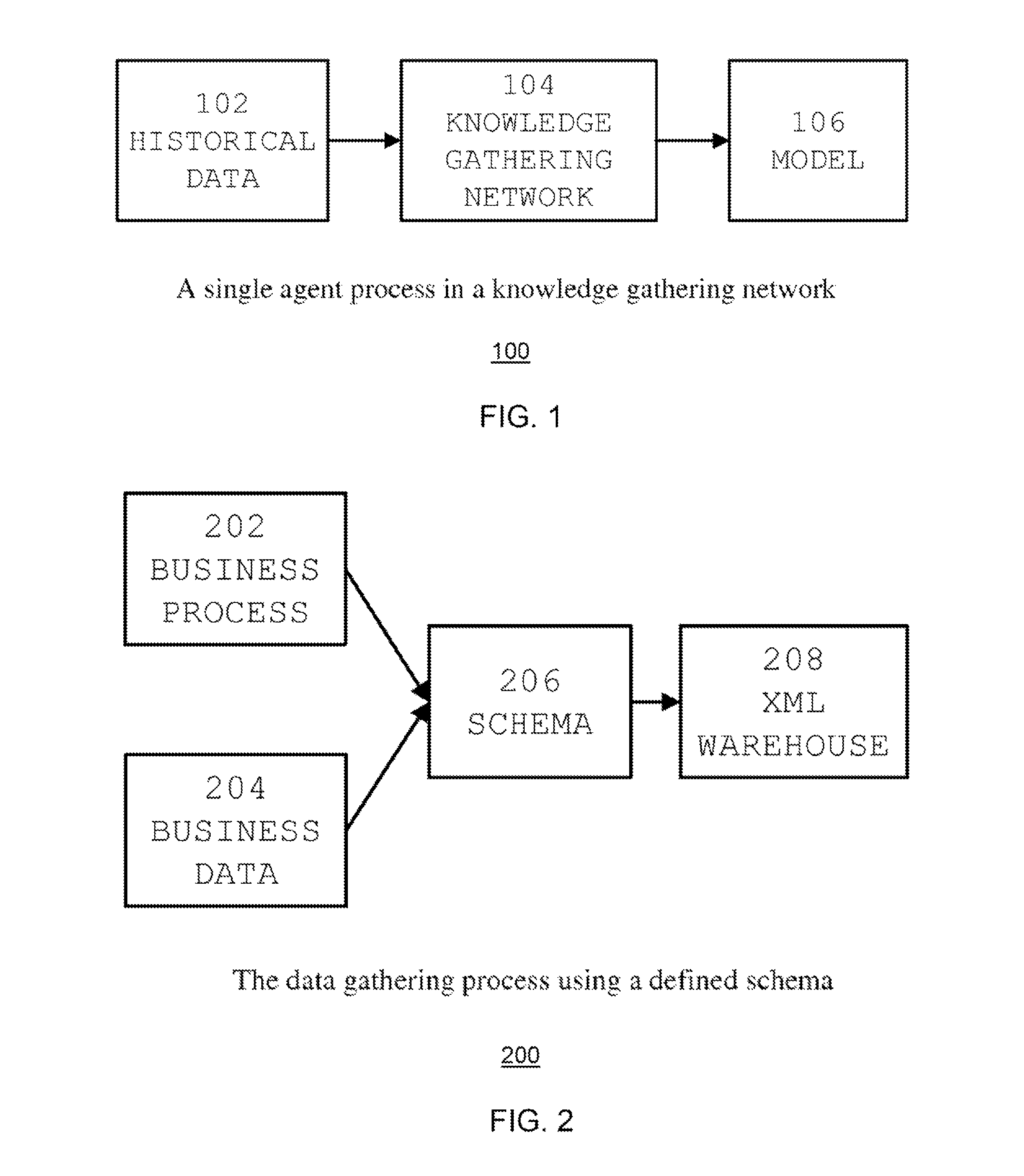

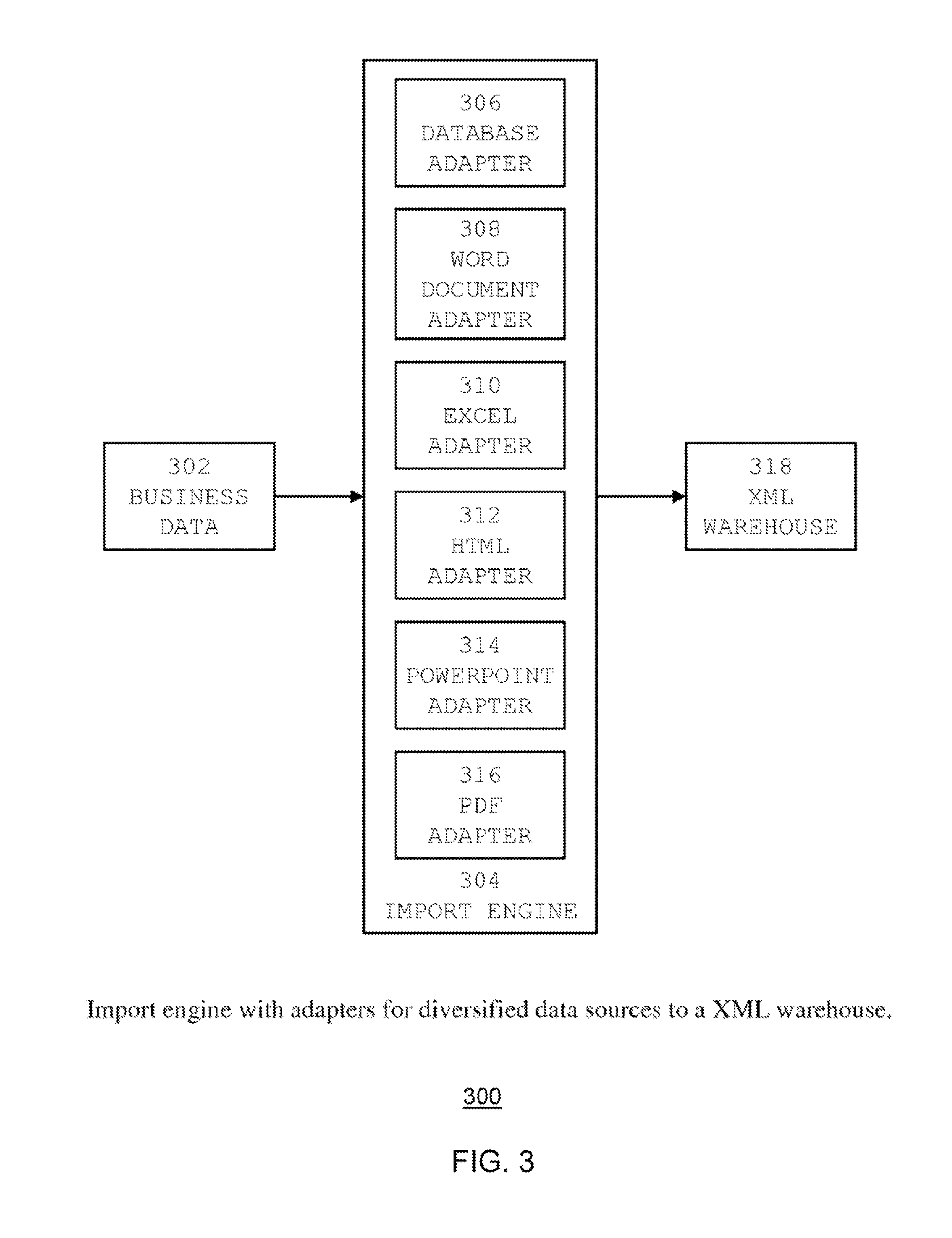

One or more systems and methods for knowledge pattern search from networked agents are disclosed in various embodiments of the invention. A system and a related method can use a knowledge pattern discovery process, which involves analyzing historical data and contextualizing, conceptualizing, clustering, and modeling the data to identify patterns and discover information of interest. This process may involve constructing a pattern-identifying model on a computer system by applying a context-concept-cluster (CCC) data analysis method, and visualizing the resulting information through a computer system interface. In one embodiment of the invention, once the pattern-identifying model is constructed, real-time data can be gathered by multiple learning agent devices and then analyzed by the pattern-identifying model to identify patterns for gains analysis and to derive an anomalousness score. This system can be useful for knowledge discovery applications in various industries, including business, competitive intelligence, and academic research.

Owner:ZHAO YING +1
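
The abstract does not define the context-concept-cluster (CCC) method or how the anomalousness score is computed. As a rough illustration only, the sketch below assumes the clustering step is plain k-means over numeric feature vectors from historical data, and that a new observation from a learning agent device is scored by its distance to the nearest historical cluster centre. The functions `kmeans` and `anomalousness` are hypothetical names, not part of the disclosure.

```python
import math


def kmeans(points, k, iterations=20):
    """Plain k-means over equal-length tuples; returns k centroids.

    Centroids are initialised deterministically from evenly spaced input points.
    """
    centroids = [points[i * len(points) // k] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            # Assign each historical point to its nearest centroid.
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Recompute each centroid as its cluster mean (keep the old one if empty).
        centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids


def anomalousness(point, centroids):
    """Score a new observation by its distance to the nearest historical cluster centre."""
    return min(math.dist(point, c) for c in centroids)
```

With two well-separated historical clusters, an observation lying inside one of them scores close to zero, while an observation far from both scores high; a real system would also need a threshold or calibration step, which the abstract does not describe.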

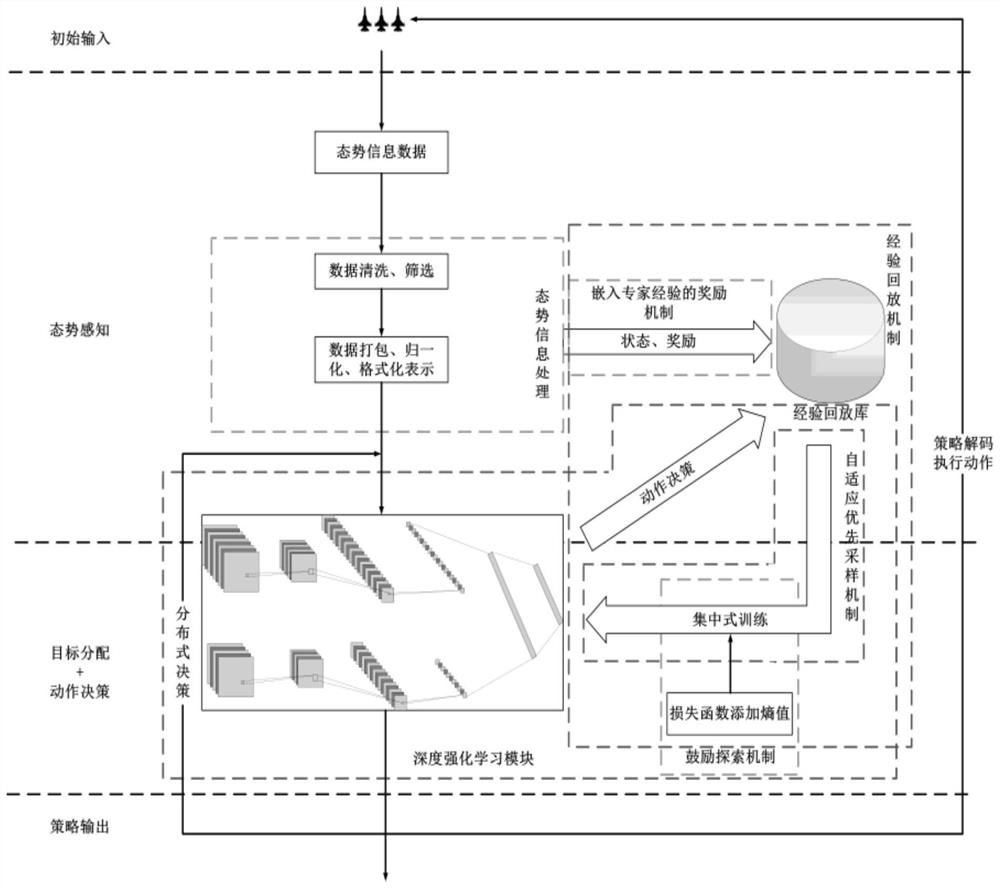

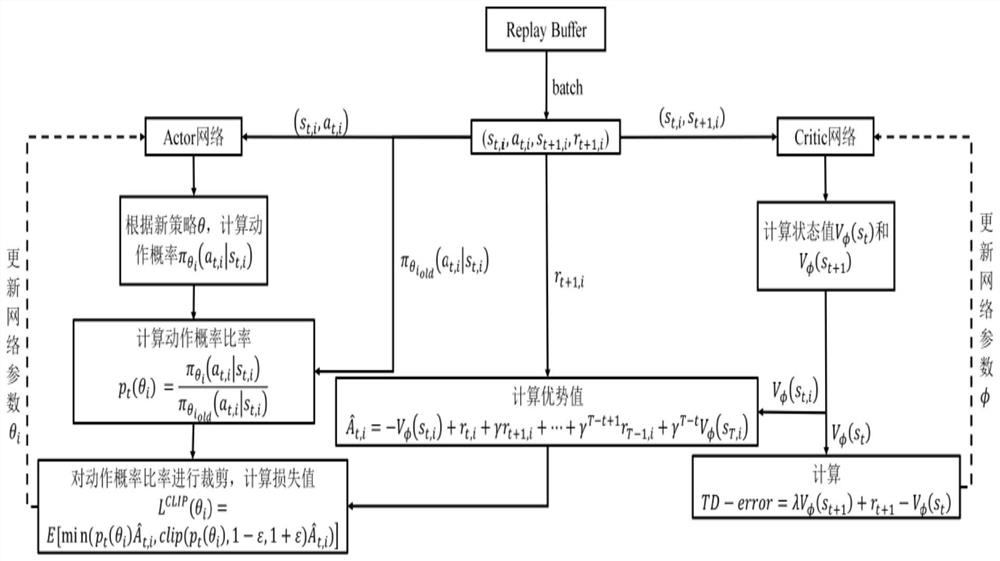

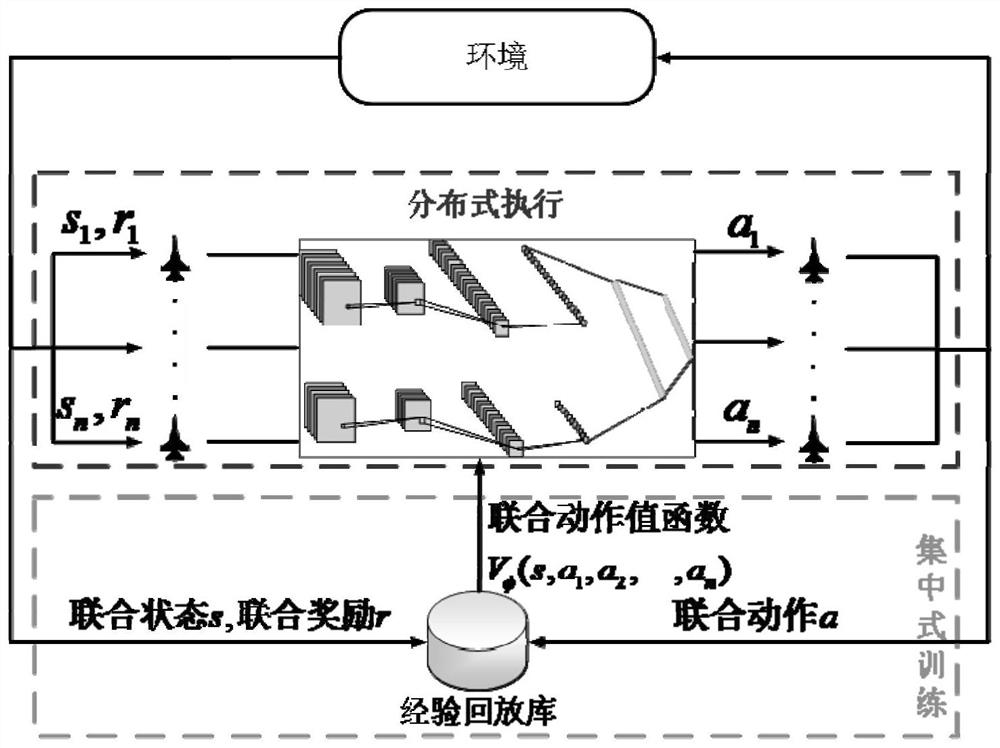

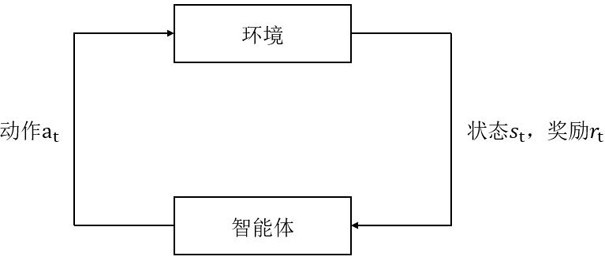

Multi-machine collaborative air combat planning method and system based on deep reinforcement learning

Status: Active | Publication: CN112861442A | Tags: Solve hard-to-converge problems; Make up for the shortcomings of poor exploratory; Design optimisation/simulation; Neural architectures; Engineering; Network model

In the multi-aircraft cooperative air combat planning method and system based on deep reinforcement learning provided by the invention, each combat aircraft is treated as an agent, a reinforcement learning agent model is constructed, and a network model is trained with a centralized-training, distributed-execution architecture. This overcomes the network model's weak exploration, which arises because the actions of different entities are hard to distinguish during multi-aircraft cooperation. Embedding expert experience in the reward value removes the prior-art need for extensive expert-experience support. Through an experience-sharing mechanism, all agents share one set of network parameters and one experience replay buffer, which addresses the fact that each agent's policy depends not only on its own policy and feedback from the environment but also on the behavior of, and cooperative relationships with, other agents. Samples whose advantage values have large absolute values are given a higher sampling probability, so that samples with extremely large or extremely small rewards influence neural network training and the algorithm converges faster. Adding a policy-entropy term improves the agents' exploration.

Owner:NAT UNIV OF DEFENSE TECH
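
Two of the mechanisms described above are standard enough to sketch: sampling replay-buffer entries with a probability that grows with the absolute advantage, and adding a policy-entropy bonus to the actor objective. This is a minimal illustration, not the patented method; the exponent `alpha`, the offset `eps`, and the entropy weight `beta` are assumptions borrowed from prioritized experience replay and entropy-regularized policy gradients.

```python
import math
import random


def sampling_probabilities(advantages, alpha=0.6, eps=1e-6):
    """P(i) is proportional to (|A_i| + eps) ** alpha, so samples with a large
    |advantage| (extremely good or bad outcomes) are replayed more often."""
    priorities = [(abs(a) + eps) ** alpha for a in advantages]
    total = sum(priorities)
    return [p / total for p in priorities]


def sample_batch(buffer, advantages, batch_size, rng=random):
    """Draw a batch, with replacement, from the shared replay buffer."""
    return rng.choices(buffer, weights=sampling_probabilities(advantages), k=batch_size)


def policy_entropy(action_probs):
    """H(pi) = -sum_a pi(a) * log pi(a)."""
    return -sum(p * math.log(p) for p in action_probs if p > 0)


def actor_loss(log_prob, advantage, action_probs, beta=0.01):
    """Policy-gradient loss to minimise; subtracting beta * H favours more exploratory policies."""
    return -(log_prob * advantage) - beta * policy_entropy(action_probs)
```

Because all agents share one buffer and one set of parameters in the described design, a single `sample_batch` call could serve every agent; the `eps` offset keeps zero-advantage samples from never being drawn.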

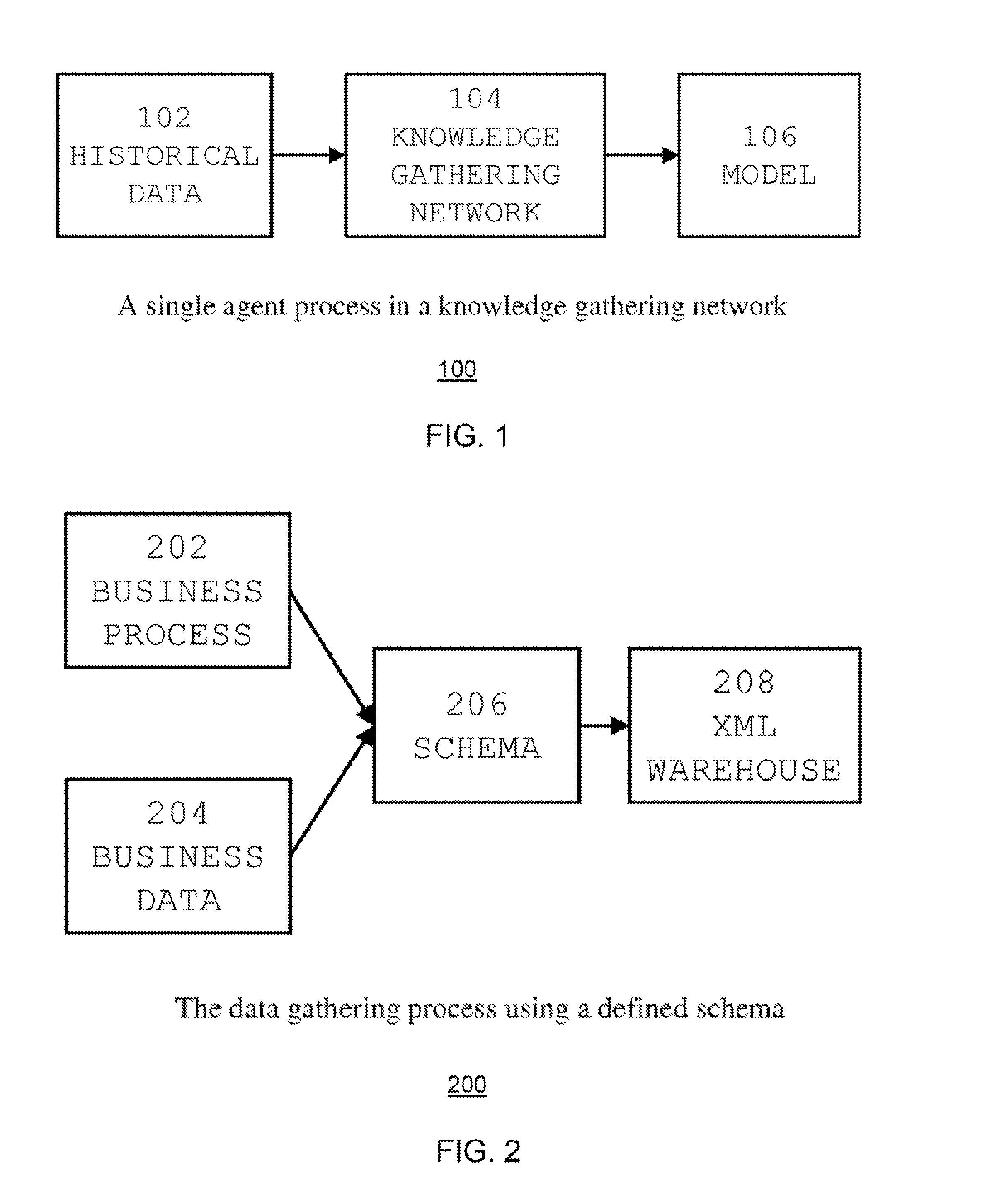

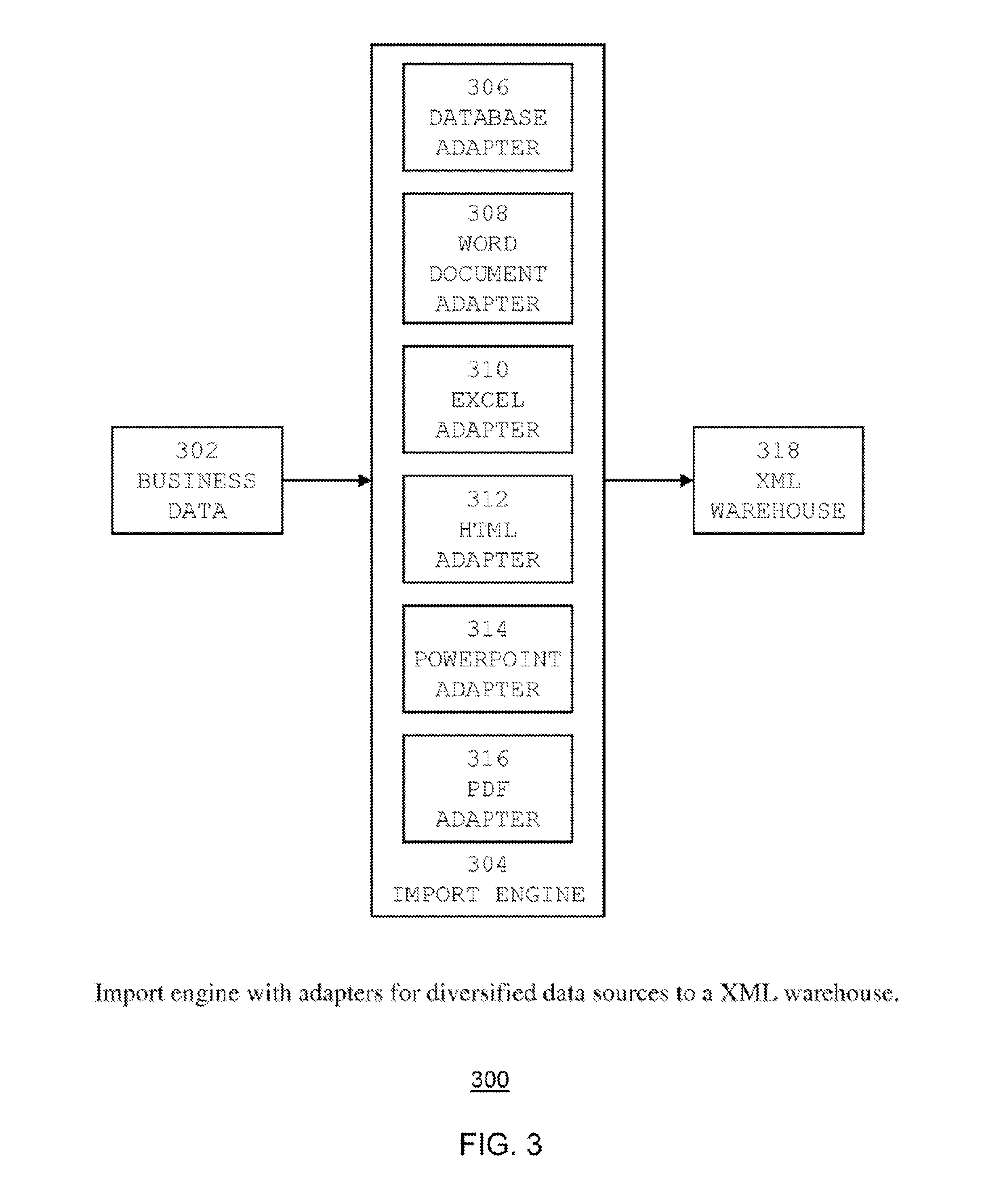

Knowledge discovery agent system and method

Status: Active | Publication: US20060112029A1 | Tags: Return on investment; Increase value; Chaos models; Non-linear system models; Computerized system; Software agent

A software agent system is provided that keeps learning as it uses natural language processors to overcome limited semantic awareness, and that improves communication between disparate computer systems. The software provides intelligent middleware and advanced learning agents that extend machine agent capabilities beyond simple, fixed tasks, thereby producing cost savings in future hardware and software platforms.

Owner:DIGITAL REASONING SYST

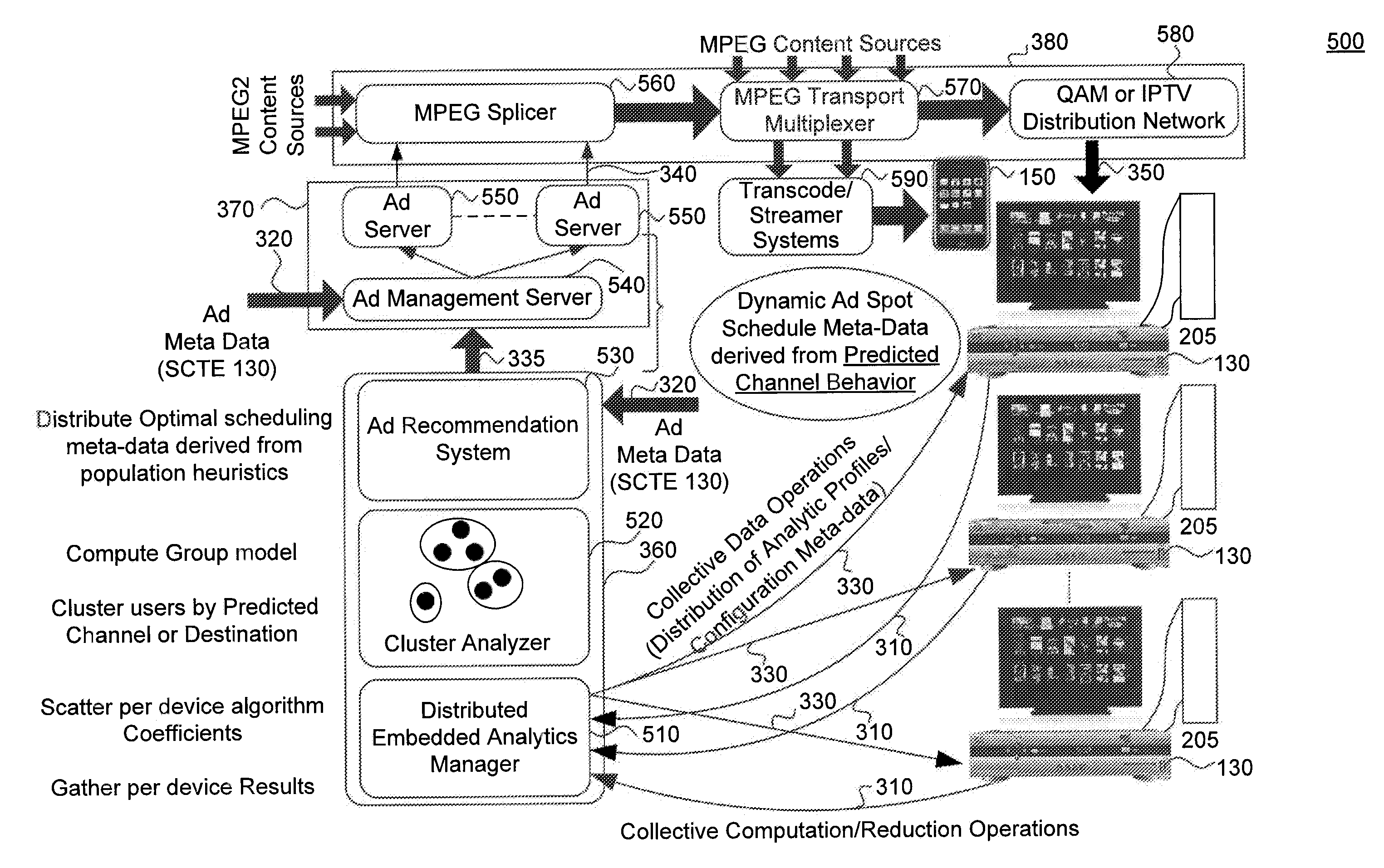

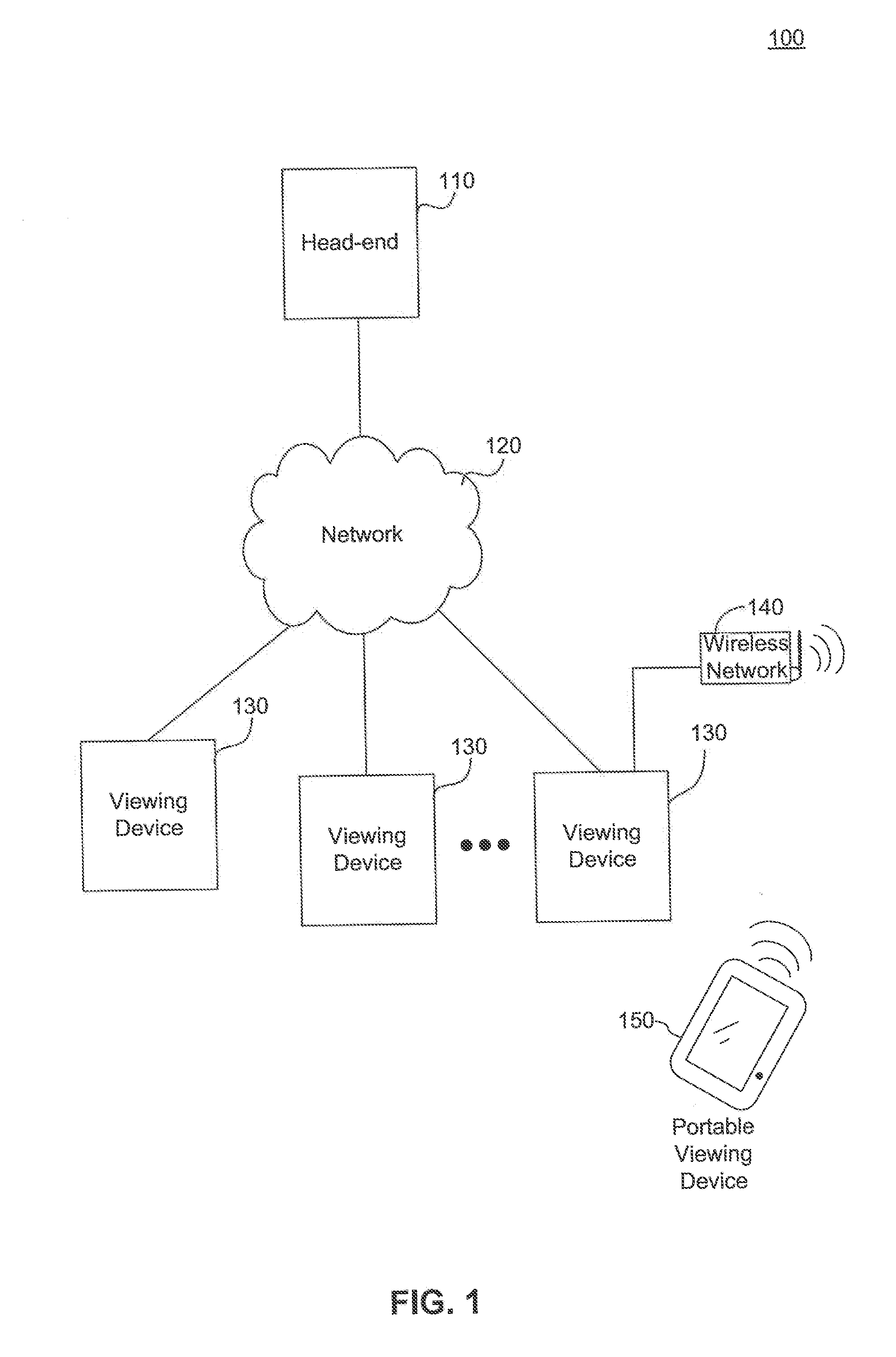

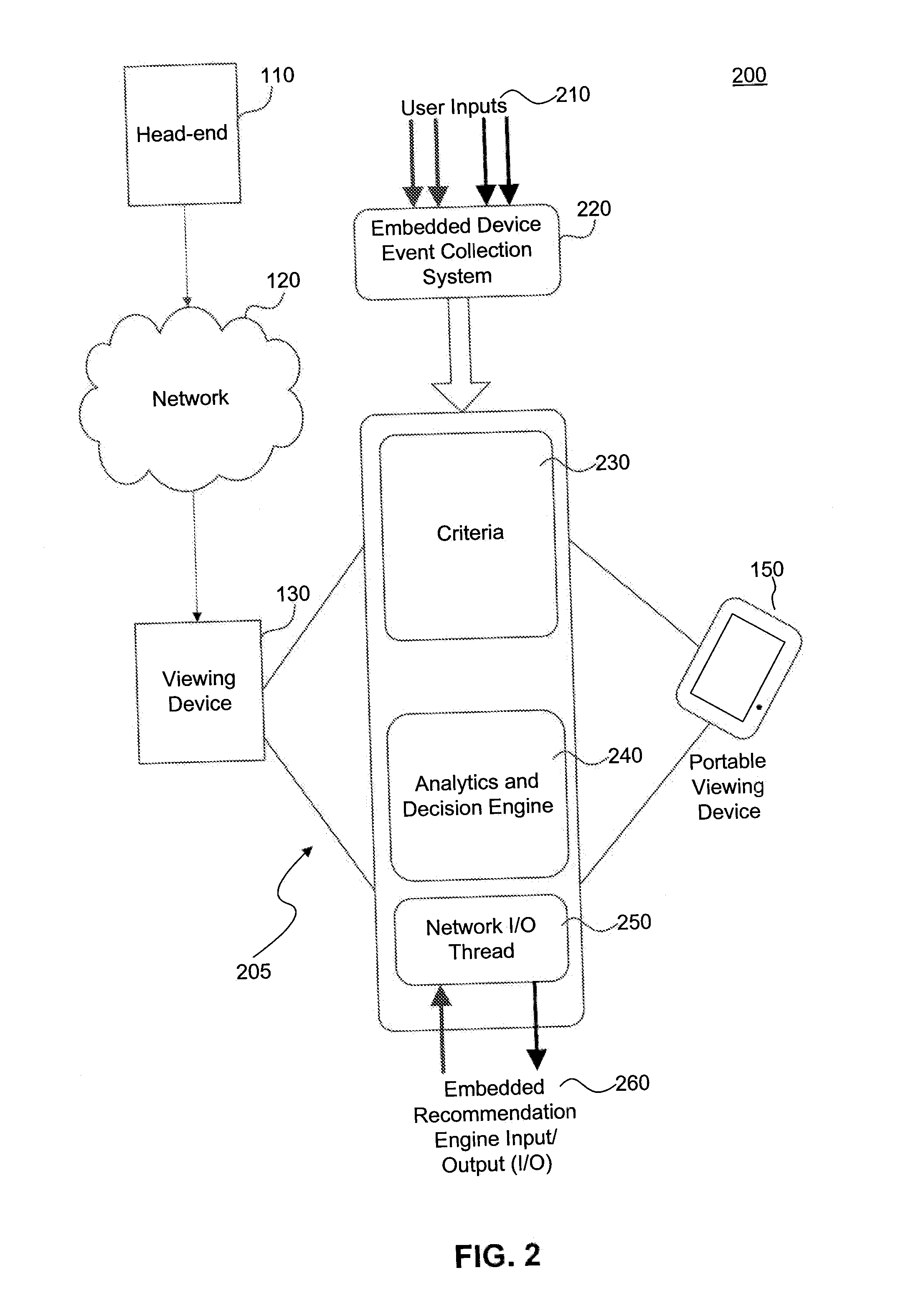

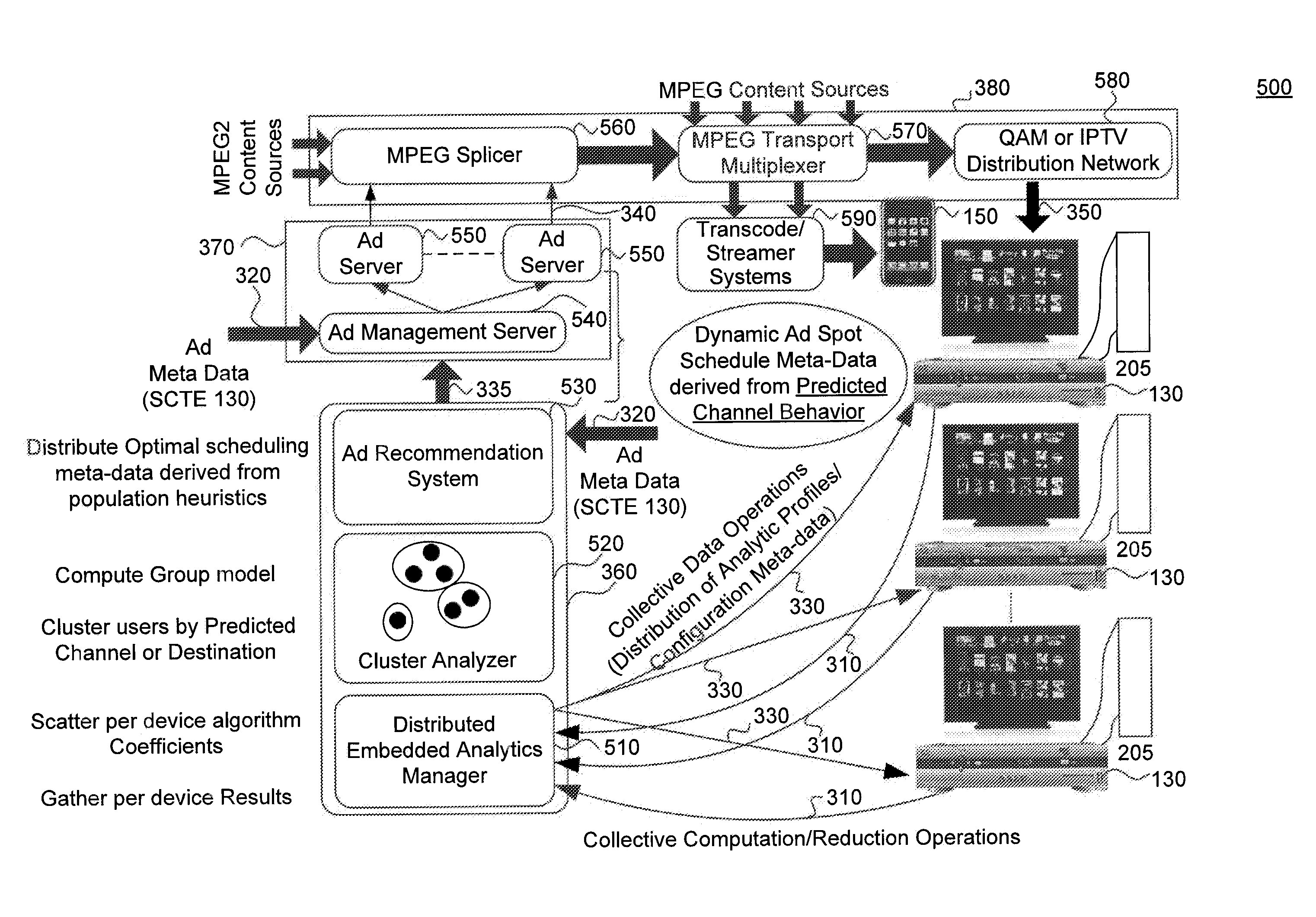

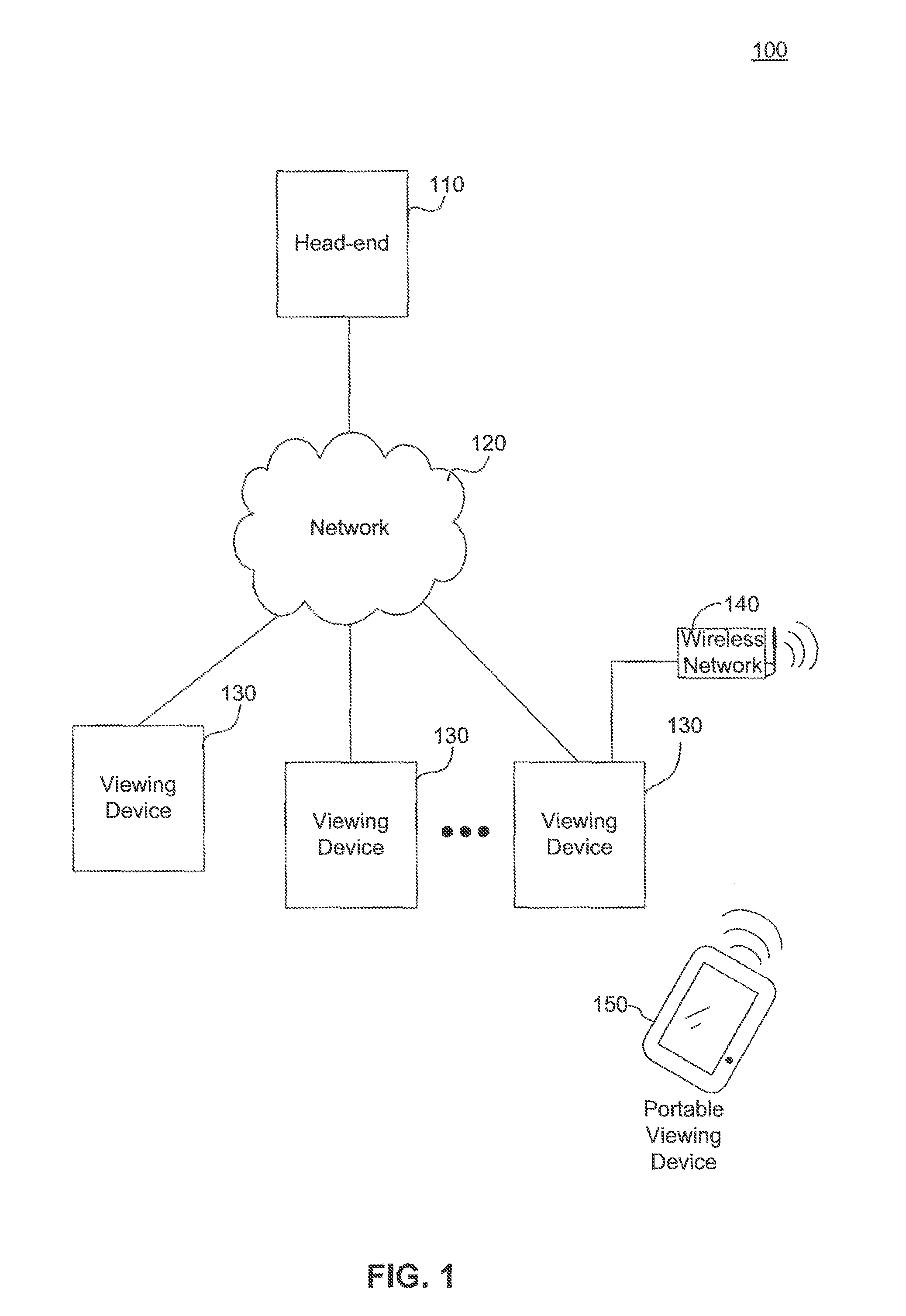

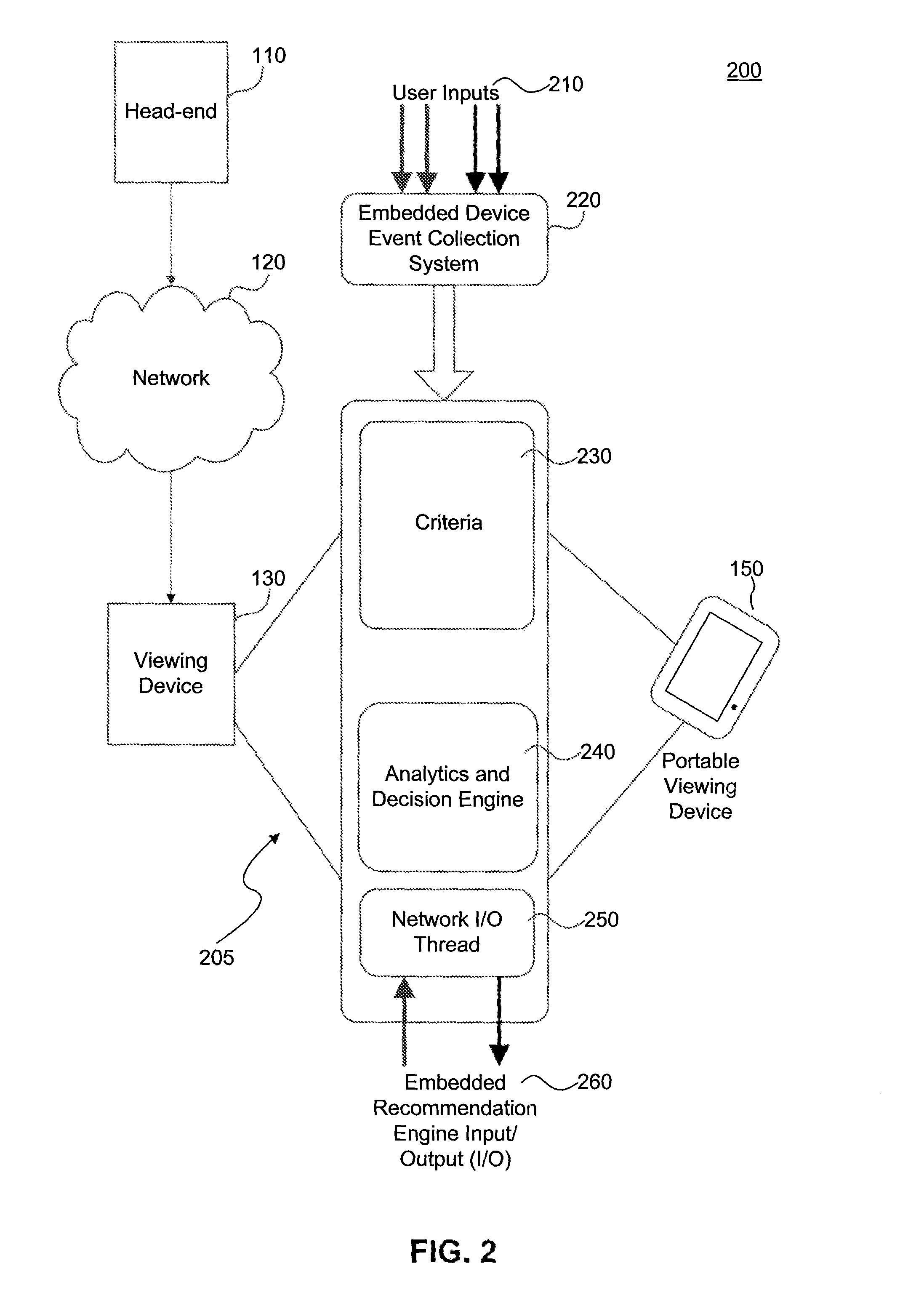

Predictive Content Placement on a Managed Services Systems

Status: Active | Publication: US20120227064A1 | Tags: Analogue secrecy/subscription systems; Broadcast information monitoring; Set top box; Service system

A distributed stochastic learning agent analyzes the viewing and/or interactive-service behavior patterns of users of a managed services system. The agent may run on embedded and/or distributed devices such as set-top boxes, portable video devices, and interactive consumer electronic devices. Content may be delivered with services such as video and/or interactive applications at the future time at which a subscriber is most likely to be watching a video or using an interactive service. For example, user impressions can be maximized for content such as advertisements, and content may be scheduled in real time to maximize viewership across all video and/or interactive services.

Owner:CSC HLDG
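
The abstract does not say how the stochastic learning agent estimates viewing likelihood. A minimal sketch, assuming each device logs the (weekday, hour) slots in which its subscriber watched, and that the probability of viewing in a slot is estimated by simple frequency counts aggregated across devices, might look like this. The slot representation and the functions `local_counts` and `best_slot` are hypothetical, and frequency counting is a simplified stand-in for whatever stochastic model the patent uses.

```python
from collections import Counter


def local_counts(viewing_log):
    """Per-device step: count how often the subscriber watched in each (weekday, hour) slot."""
    return Counter(viewing_log)


def best_slot(device_counts, weeks_observed, candidate_slots):
    """Aggregate the devices' counts and return the candidate slot with the highest
    estimated viewing probability, plus the estimates for every candidate."""
    total = Counter()
    for counts in device_counts:
        total.update(counts)  # adds counts from each device
    num_devices = len(device_counts)

    def probability(slot):
        # Fraction of (device, week) pairs in which the slot was watched.
        return total[slot] / (num_devices * weeks_observed)

    estimates = {slot: probability(slot) for slot in candidate_slots}
    return max(candidate_slots, key=probability), estimates
```

Keeping the counting on each device and sending only aggregated counts upstream mirrors the distributed deployment described above, although the abstract does not state what each device actually transmits.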

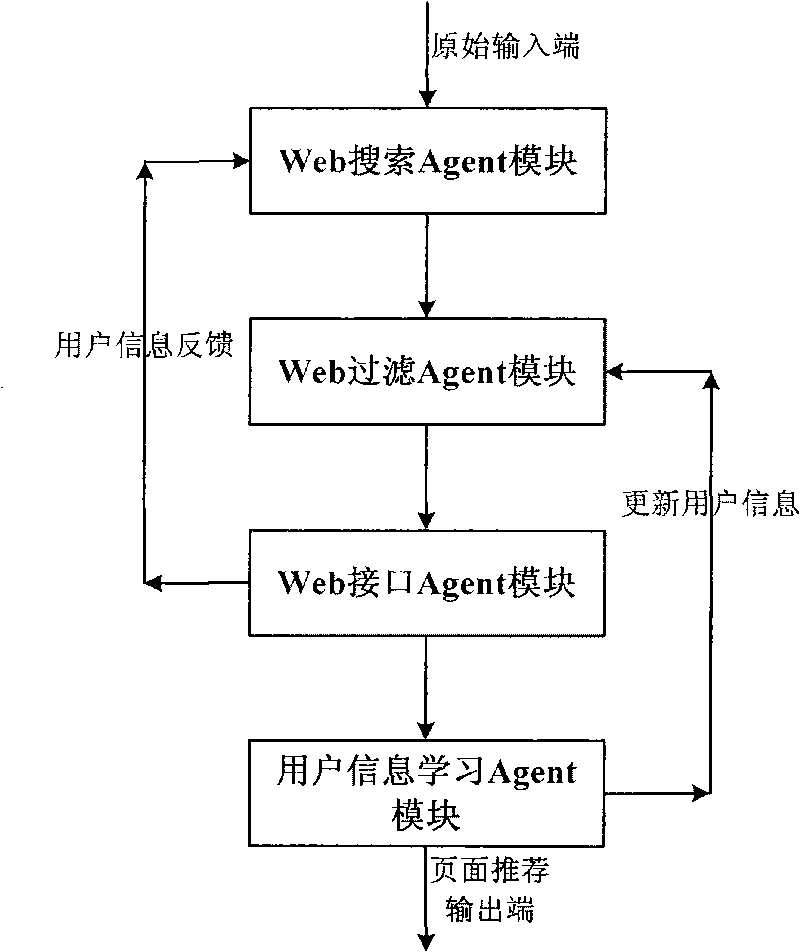

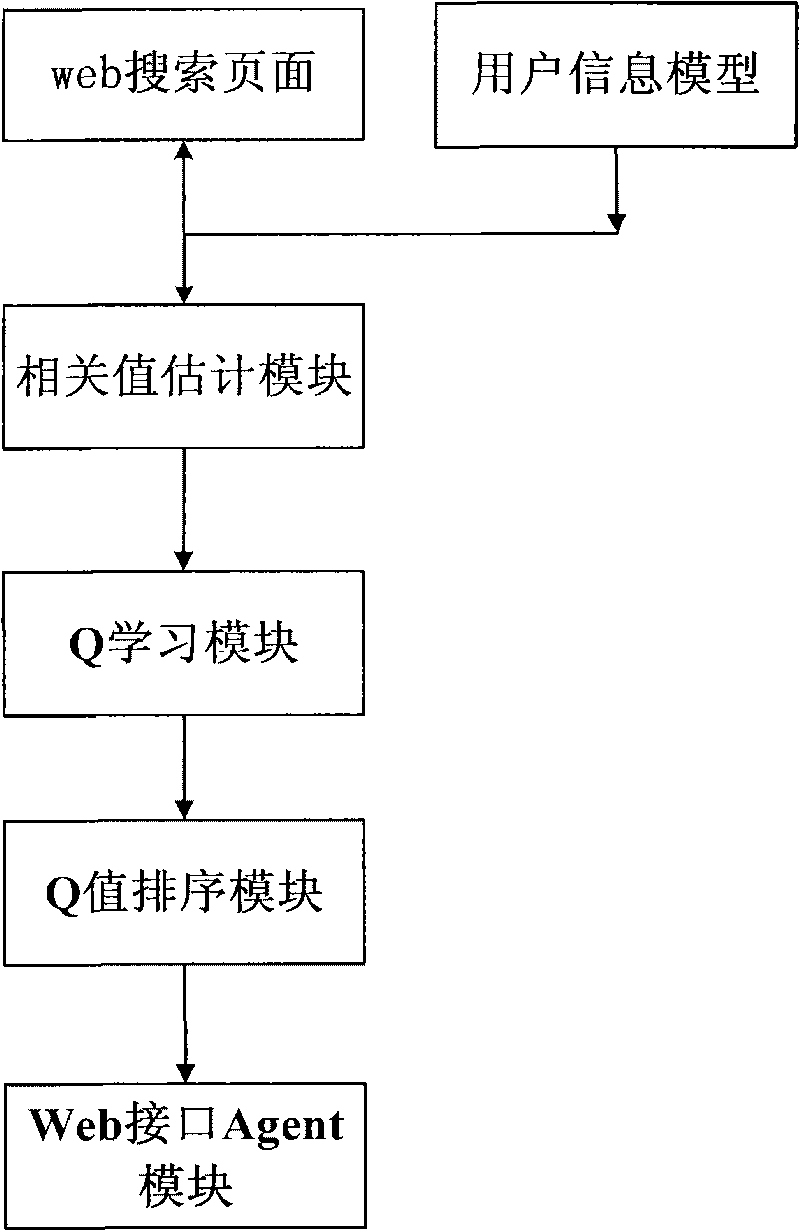

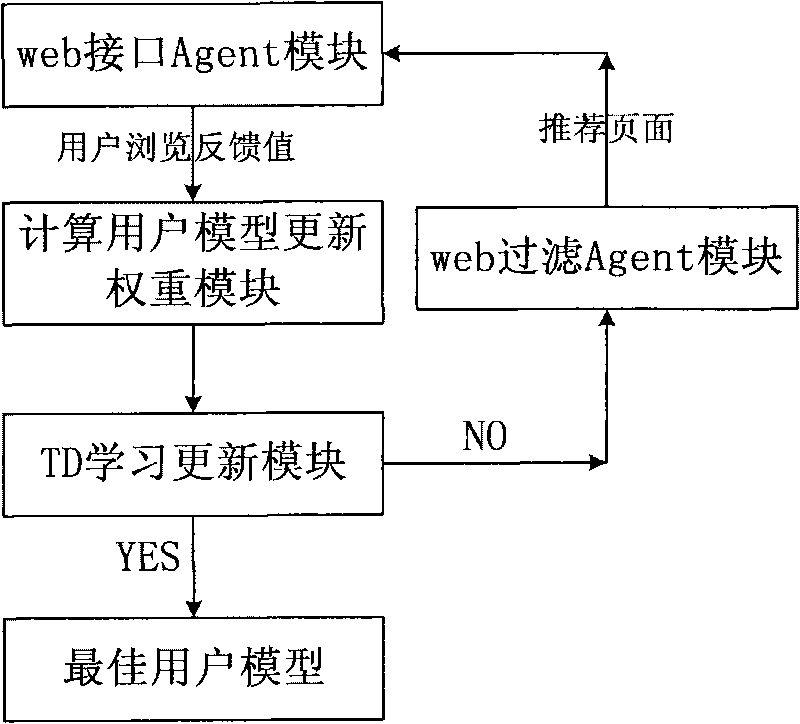

Web active retrieval system based on reinforcement learning

Status: Inactive | Publication: CN101751437A | Tags: Improve accuracy; Easy to use; Special data processing applications; Statistical analysis; Content analytics

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

System and methods for intrinsic reward reinforcement learning

Status: Active | Publication: US20170364829A1 | Tags: Maximize its knowledge; Significant change; Artificial life; Design optimisation/simulation; Algorithm; Theoretical computer science

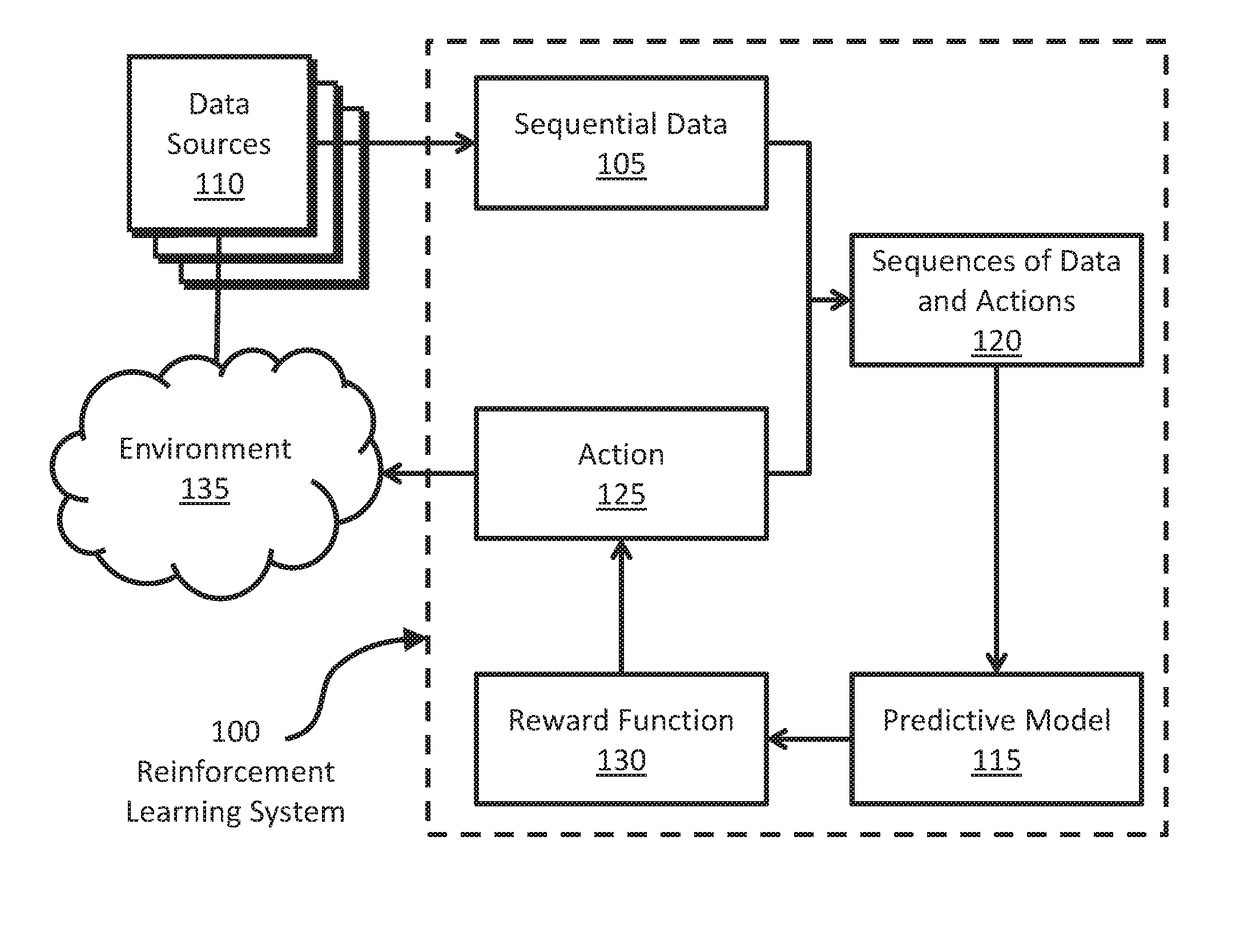

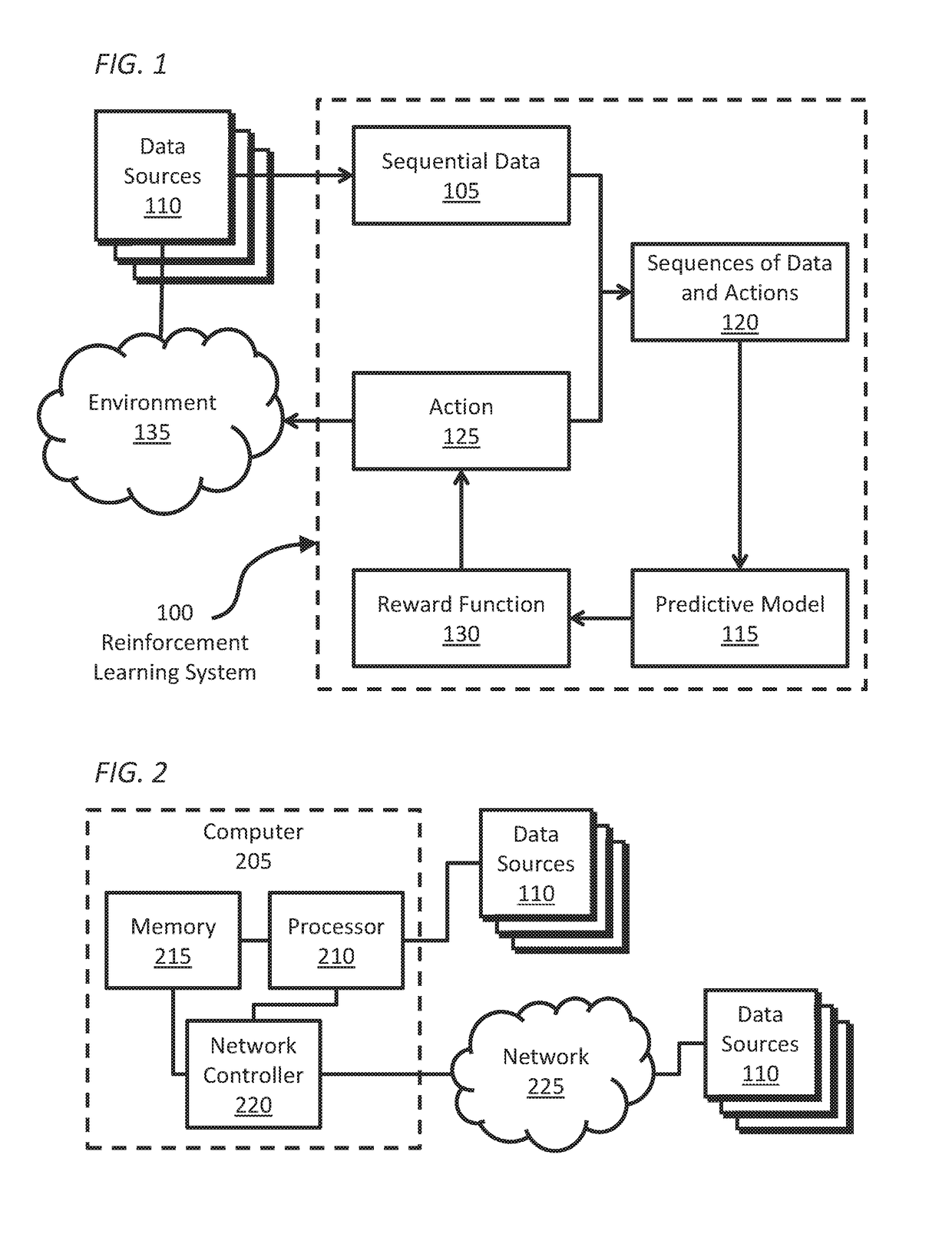

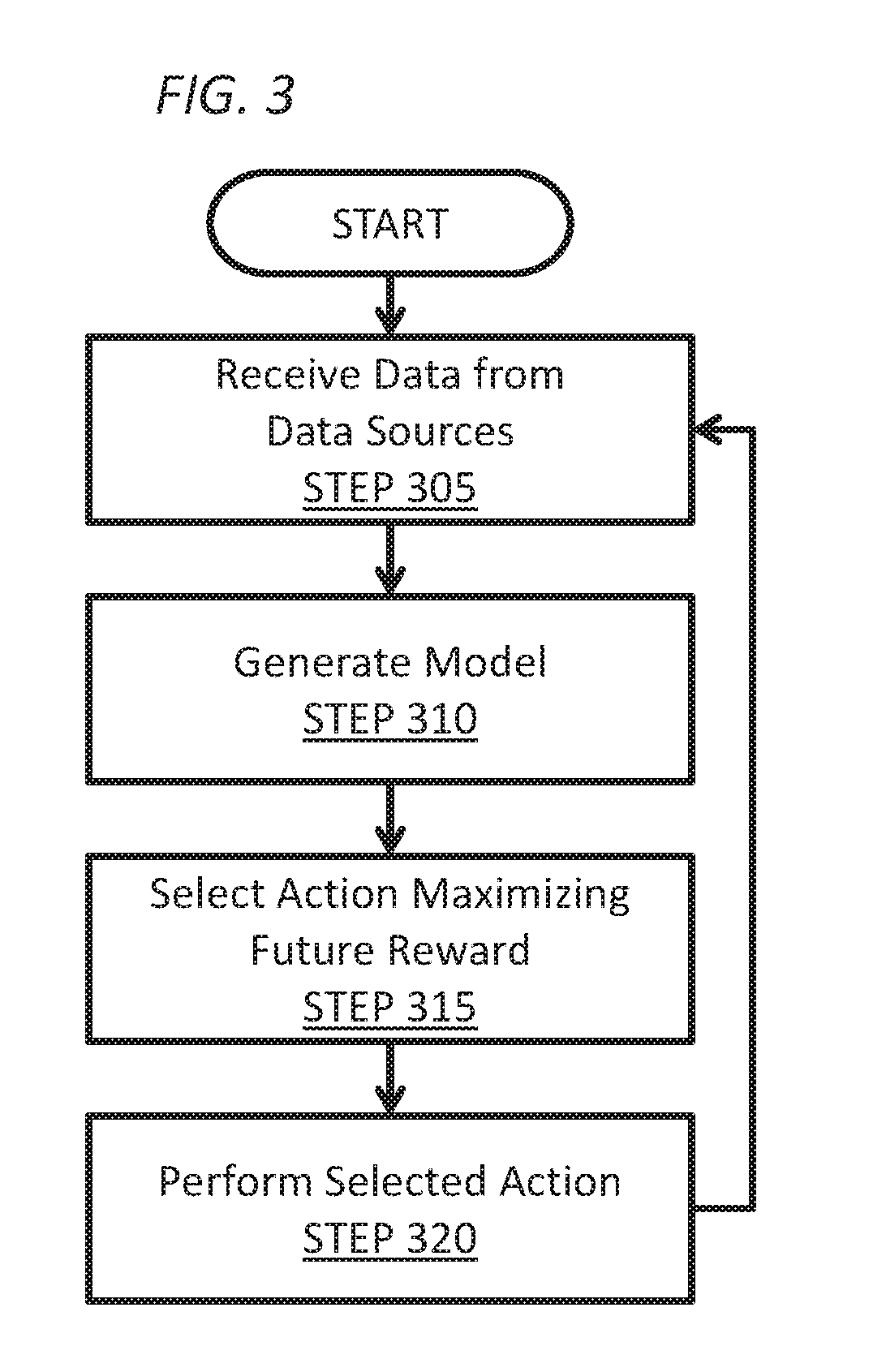

A learning agent is disclosed that receives data in sequence from one or more sequential data sources, generates a model of sequences of data and actions, and selects the action that maximizes the expected future value of a reward function. The reward function depends at least partly on a measure of the change in complexity of the model, a measure of the complexity of the change in the model, or both. The measure of the change in complexity of the model may be based on, for example, the change in description length of the first part of a two-part code describing one or more sequences of received data and actions, the change in description length of a statistical distribution modelling those sequences, the description length of the change in the first part of the two-part code, or the description length of the change in the statistical distribution.

Owner:FYFFE GRAHAM
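
Description length is often approximated in practice by compressed size. The sketch below assumes that proxy: the model is a table of (context, next symbol) counts over the combined data/action sequence, its "first part" description length is the zlib-compressed size in bytes of the serialized table, and the intrinsic reward is the change in that size caused by a new observation. These are illustrative assumptions, not the disclosed encoding, and `update_model` and `intrinsic_reward` are hypothetical names.

```python
import zlib


def description_length(model):
    """Proxy for the first part of a two-part code: compressed size, in bytes,
    of the serialised model."""
    return len(zlib.compress(repr(sorted(model.items())).encode()))


def update_model(model, history, symbol, order=2):
    """Model = counts of (context, next symbol) pairs; returns an updated copy."""
    new_model = dict(model)
    key = (tuple(history[-order:]), symbol)
    new_model[key] = new_model.get(key, 0) + 1
    return new_model


def intrinsic_reward(model, history, symbol):
    """Reward = change in the model's description length caused by the new observation."""
    return description_length(update_model(model, history, symbol)) - description_length(model)
```

Under this proxy, observations that force the model to grow (new contexts or transitions) earn more reward than observations the model already accounts for, which is the intuition behind rewarding changes in model complexity.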

Distributed learning preserving model security

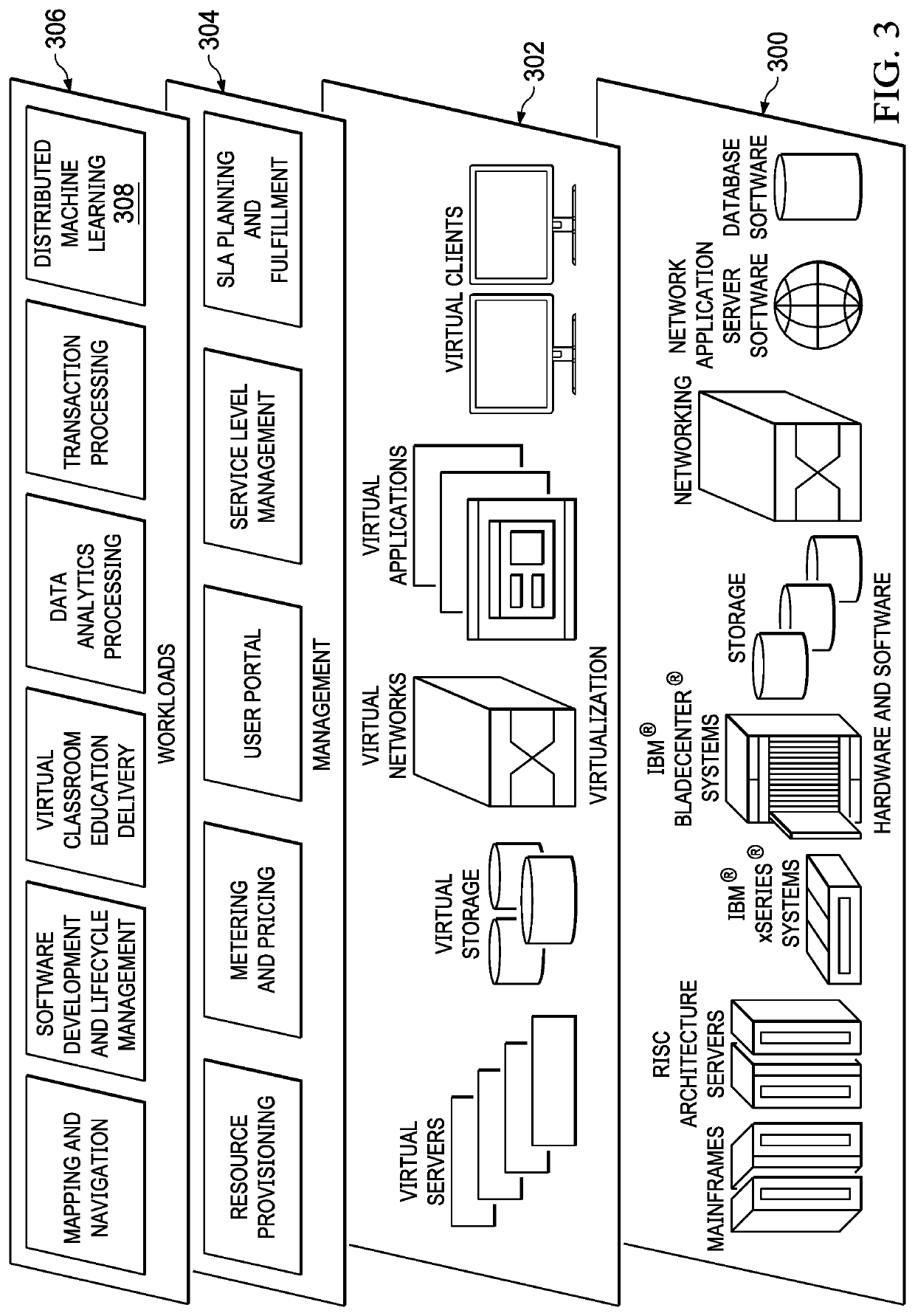

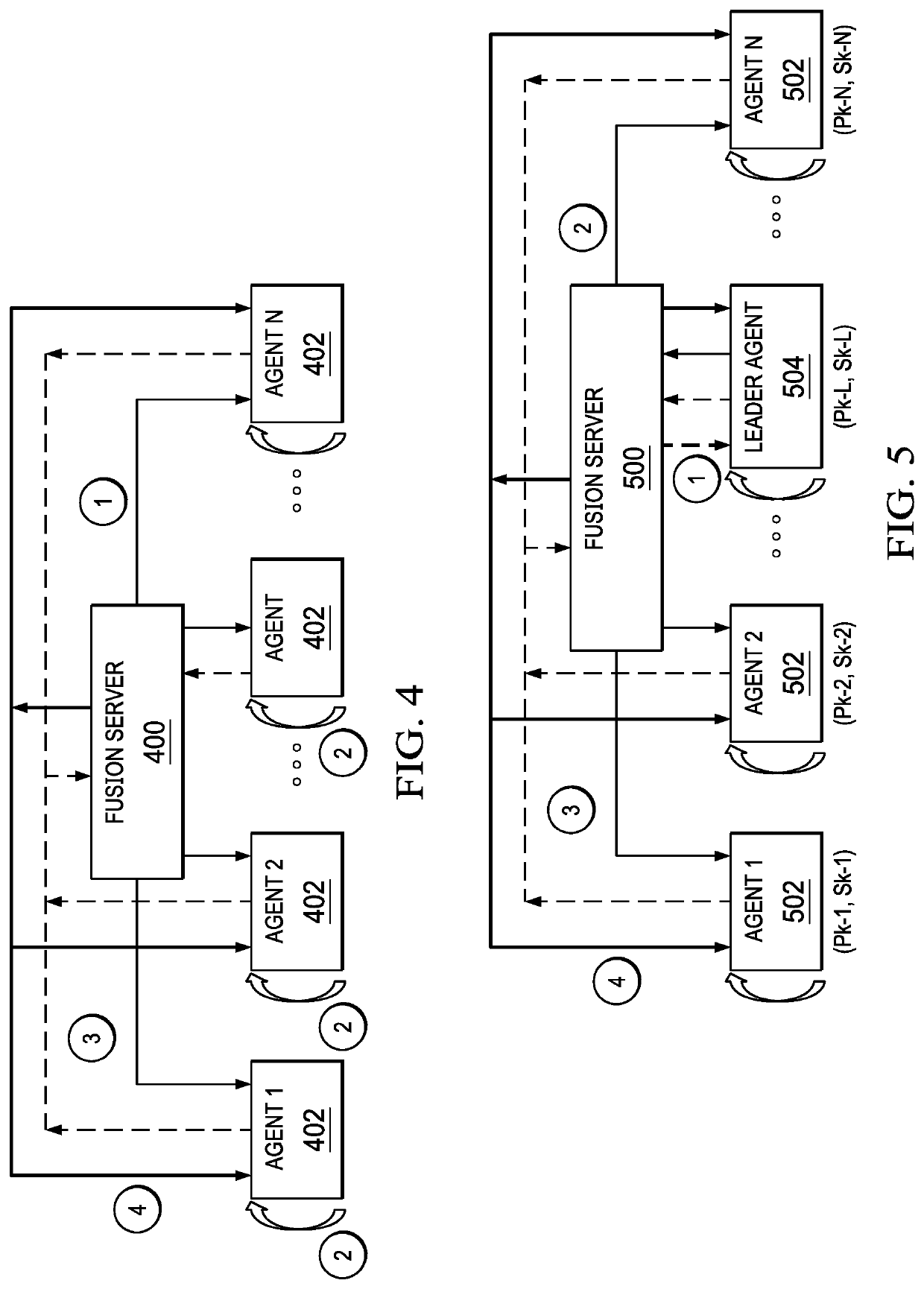

Distributed machine learning employs a central fusion server that coordinates the distributed learning process. Preferably, each of a set of learning agents, which are typically distributed from one another, initially obtains the initial parameters of a model from the fusion server. Each agent trains using a dataset local to the agent. The parameters that result from this local training (for the current iteration) are then passed back to the fusion server securely, using a partially homomorphic encryption scheme. The fusion server fuses the parameters from all the agents and shares the result with the agents for the next iteration. Because the model parameters are secured by the encryption scheme, the privacy of the training data is protected, even from the fusion server itself.

Owner:IBM CORP
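
The abstract does not name a specific partially homomorphic scheme. The sketch below assumes Paillier encryption, which is additively homomorphic: multiplying ciphertexts adds the underlying plaintexts, so the fusion server can sum encrypted parameters without decrypting them. It also assumes toy key sizes, fixed-point encoding of float parameters, and that the agents share the private key while the server sees only ciphertexts. All function names are hypothetical.

```python
import math
import secrets

# Toy Paillier key pair from two Mersenne primes. Real systems need primes of
# 1024+ bits each; these sizes are for illustration only.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # valid shortcut because the generator is g = N + 1 (Python 3.8+)
SCALE = 10**6         # fixed-point scale for float parameters


def encrypt(m: int) -> int:
    """c = g**m * r**N mod N**2 with g = N + 1, i.e. (1 + m*N) * r**N mod N**2."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return (1 + m * N) % N2 * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """m = L(c**LAM mod N**2) * MU mod N, where L(x) = (x - 1) // N."""
    return (pow(c, LAM, N2) - 1) // N * MU % N


def encode(w: float) -> int:
    # Map a signed float into Z_N using fixed-point scaling.
    return round(w * SCALE) % N


def decode(m: int) -> float:
    # Residues above N/2 represent negative numbers.
    return (m - N if m > N // 2 else m) / SCALE


def agent_update(weights):
    """Agent side: encrypt locally trained parameters before sending them."""
    return [encrypt(encode(w)) for w in weights]


def fuse(encrypted_updates):
    """Server side: multiply ciphertexts element-wise, which sums the plaintexts."""
    fused = encrypted_updates[0]
    for update in encrypted_updates[1:]:
        fused = [a * b % N2 for a, b in zip(fused, update)]
    return fused


def agent_average(fused, num_agents):
    """Agent side: decrypt the fused sum and divide by the number of agents."""
    return [decode(decrypt(c)) / num_agents for c in fused]


if __name__ == "__main__":
    local = [[0.5, -1.25], [0.25, -0.75], [0.75, 0.5]]
    print(agent_average(fuse([agent_update(w) for w in local]), len(local)))  # [0.5, -0.5]
```

Averaging is done after decryption because Paillier supports only addition (and multiplication by plaintext integers) on ciphertexts; the sum must also stay below N/2 in fixed-point form for `decode` to recover its sign.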

Systems and Methods for Implementing a Machine-Learning Agent to Retrieve Information in Response to a Message

Status: Active | Publication: US20110022552A1 | Tags: Digital data processing details; Multiple digital computer combinations; Continual improvement process; Data source

Owner:CARNEGIE MELLON UNIV

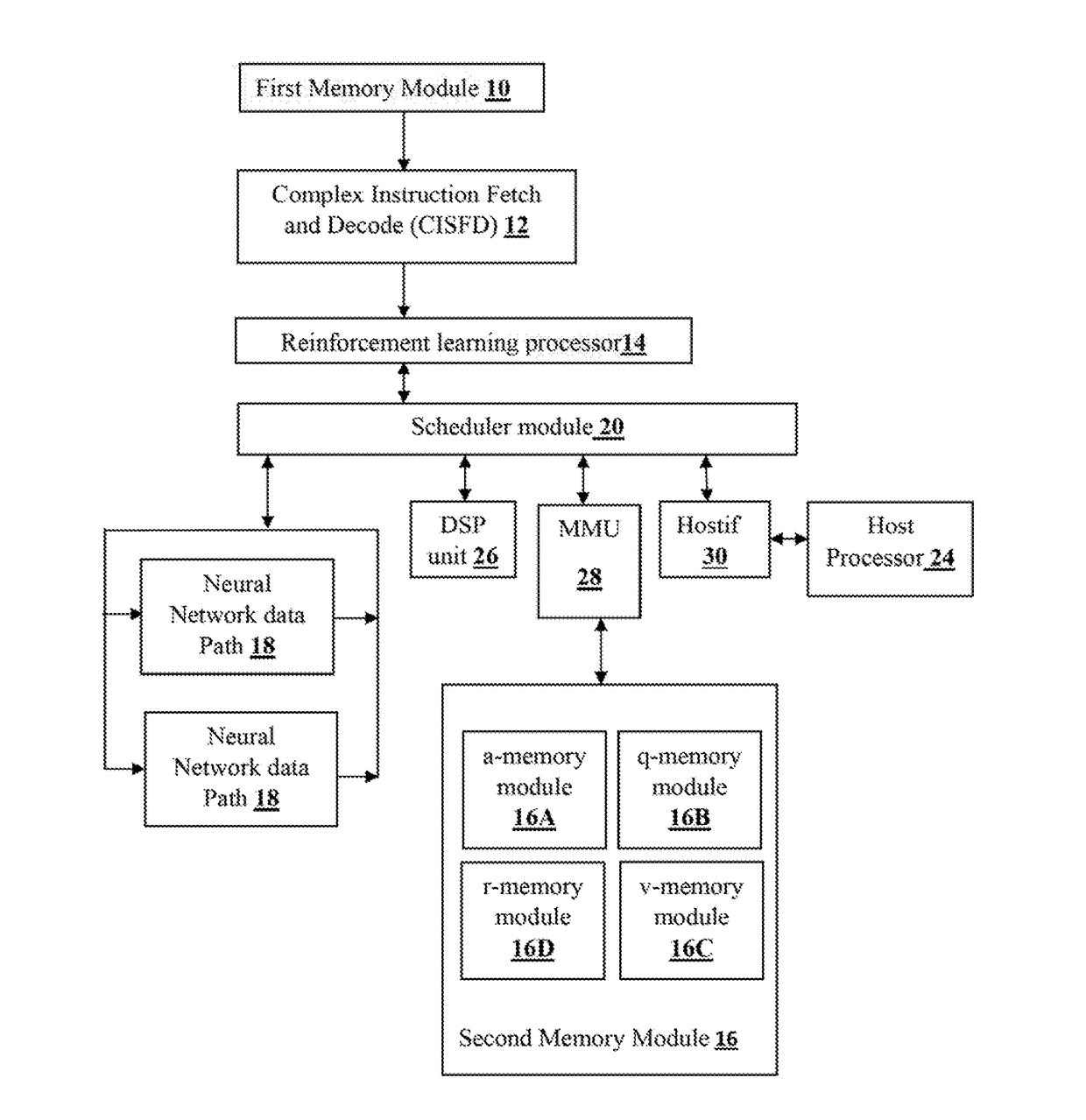

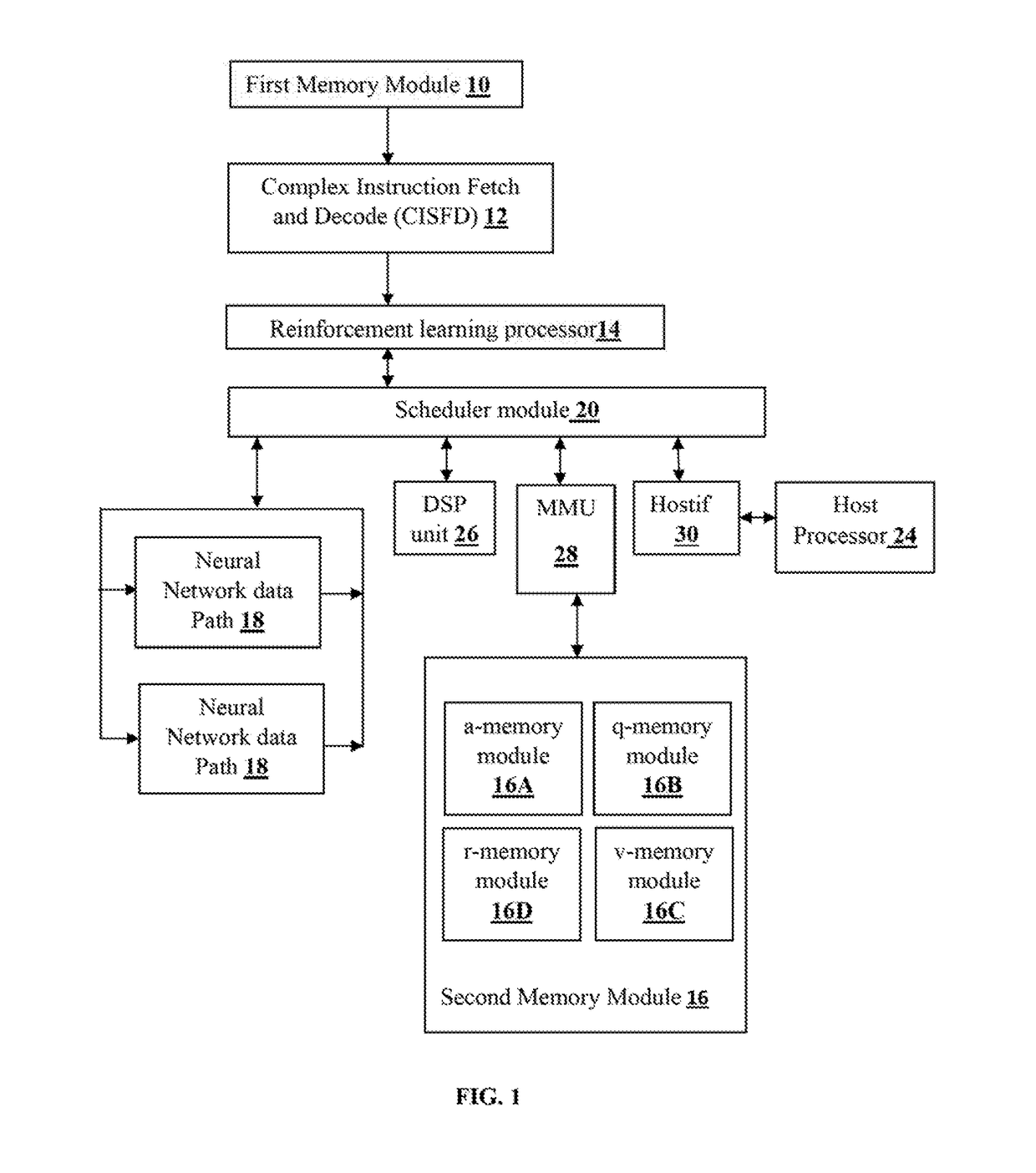

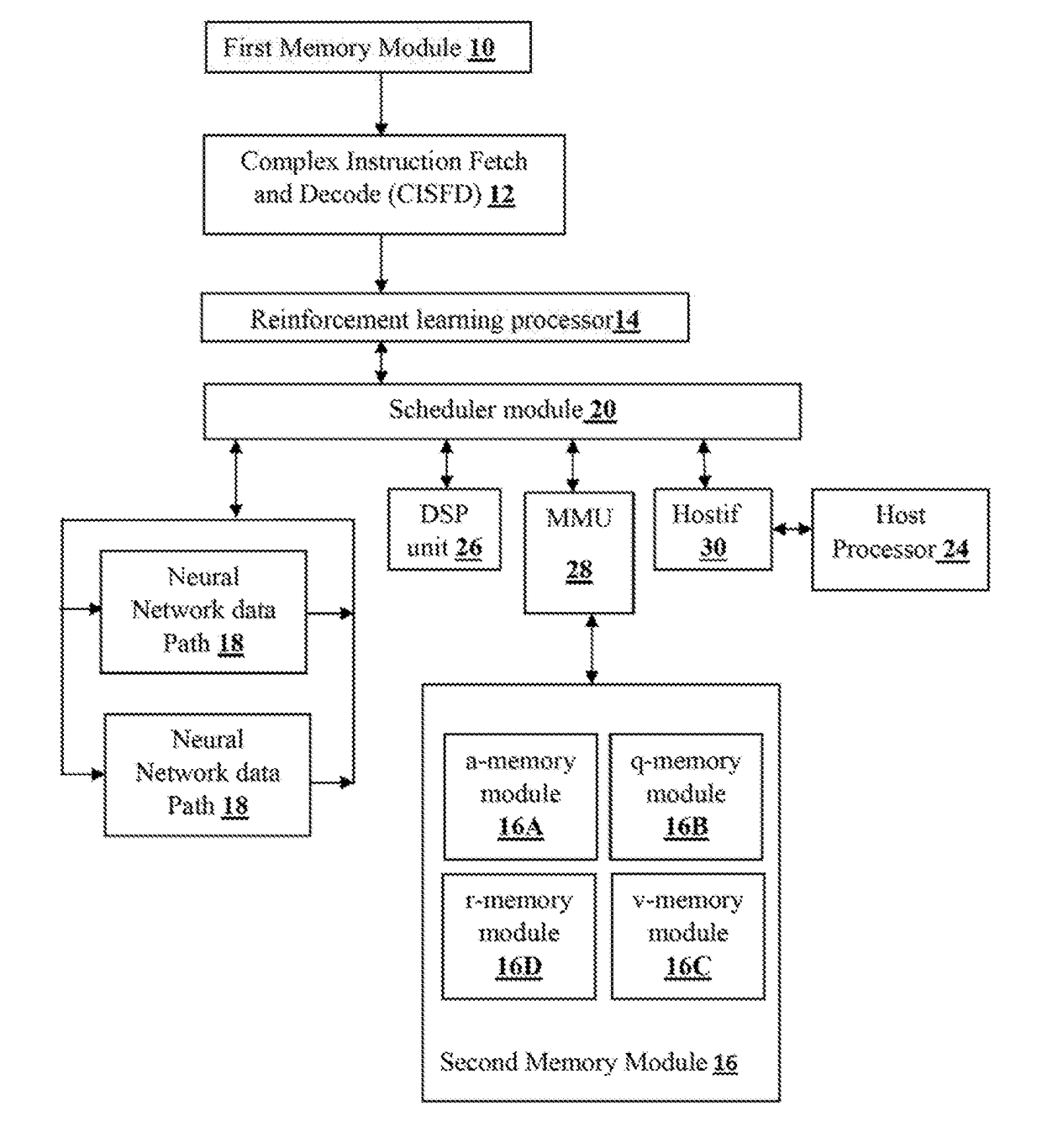

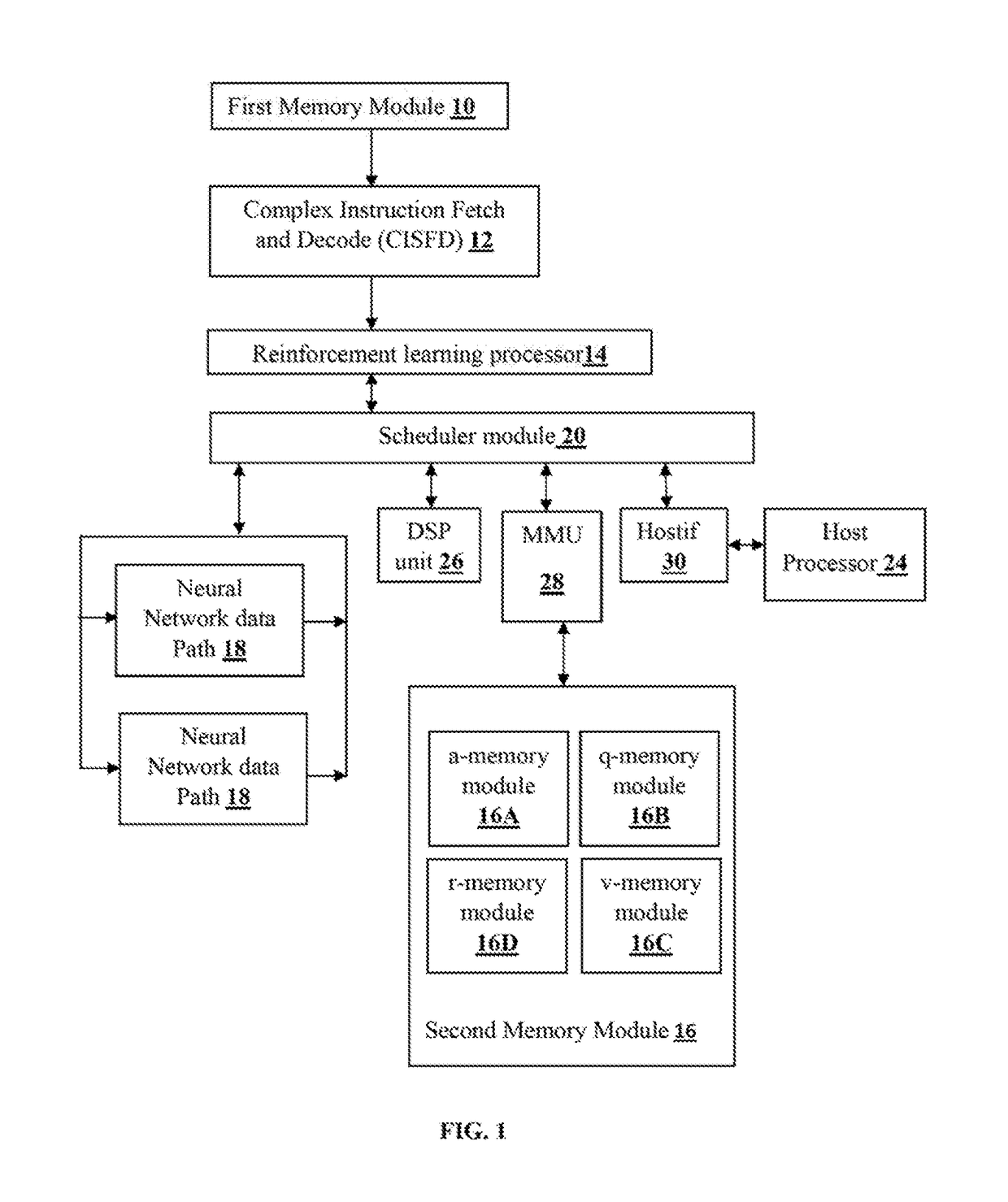

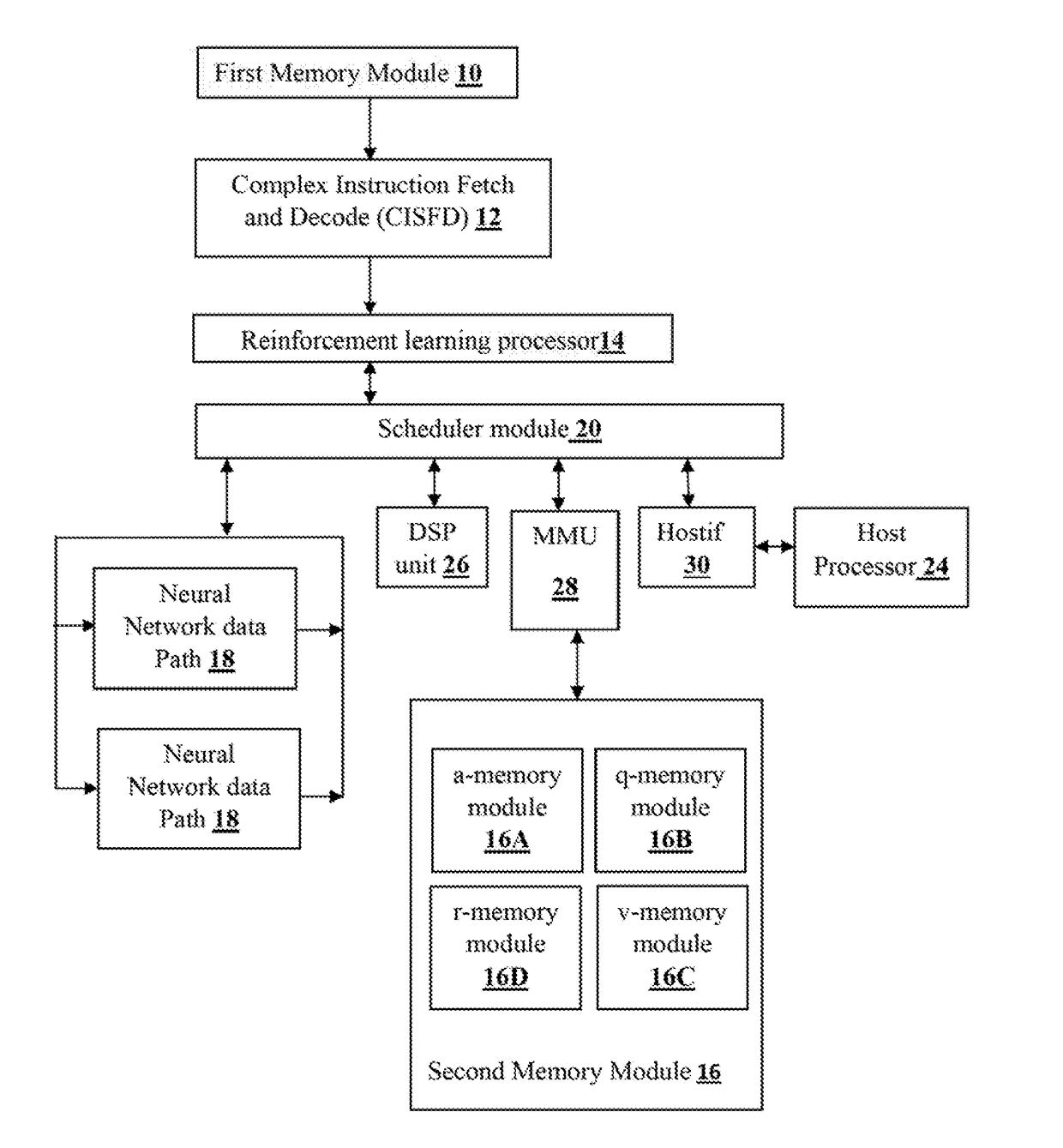

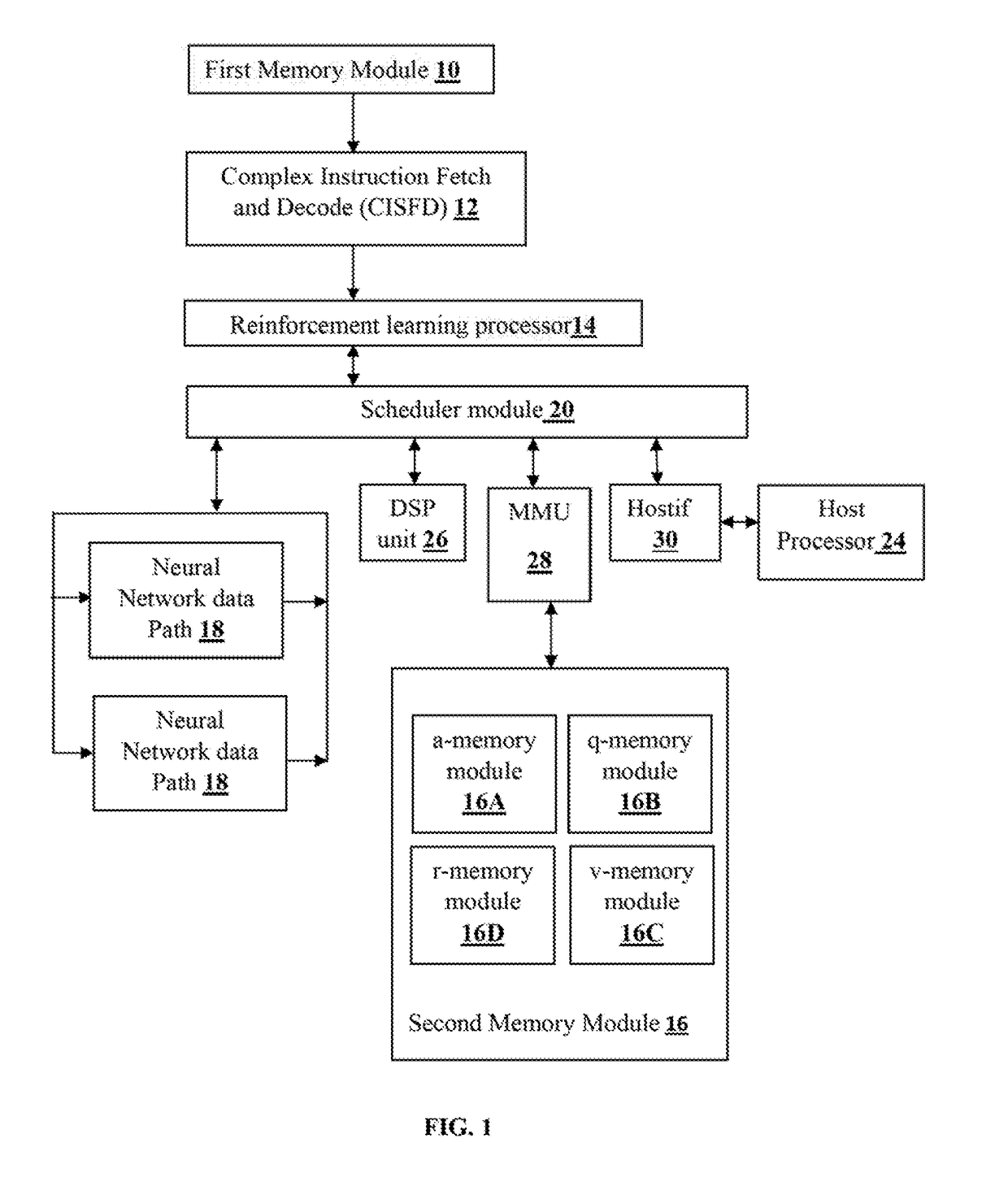

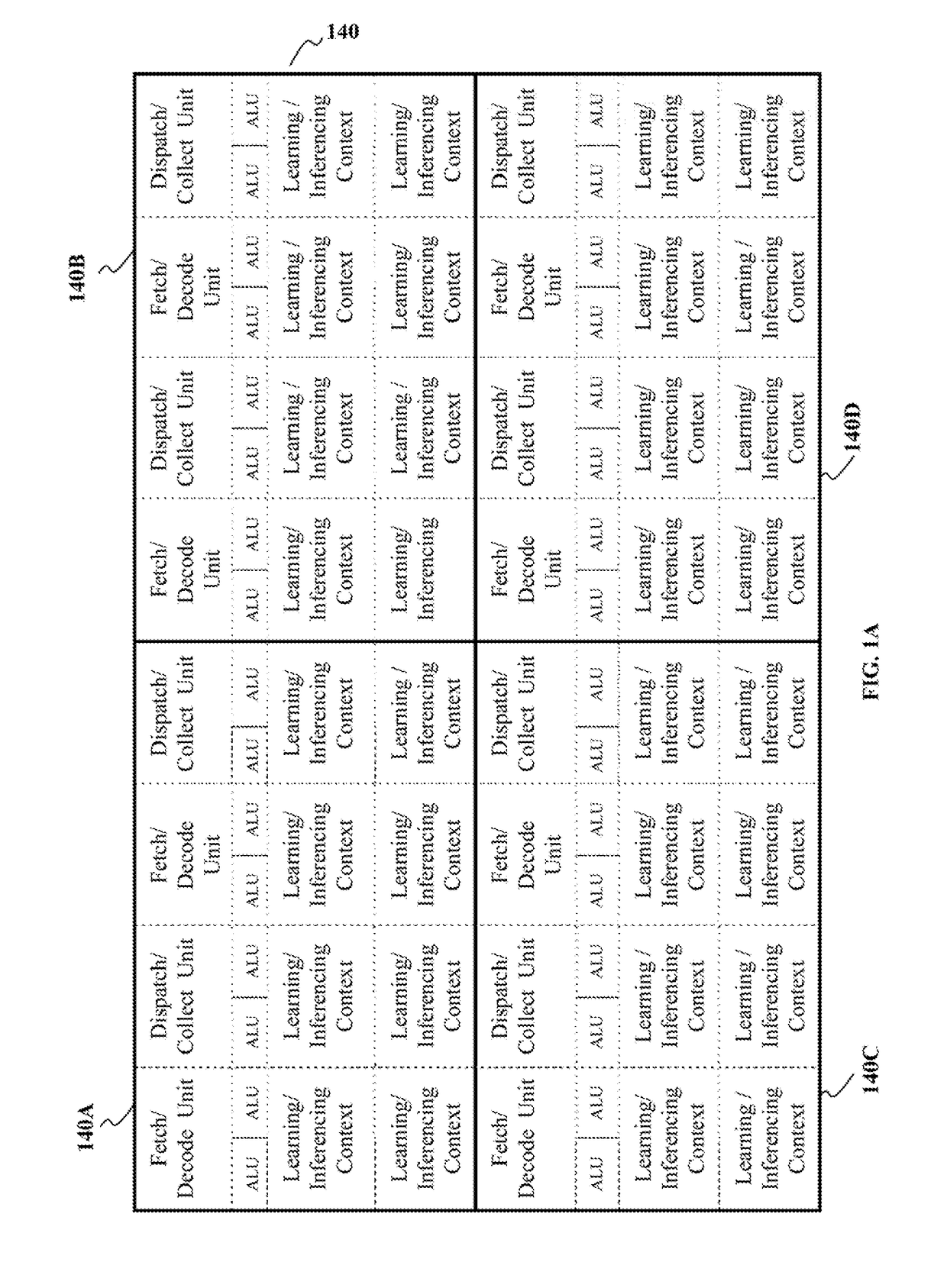

Method and system for implementing reinforcement learning agent using reinforcement learning processor

Status: Active | Publication: US20180260700A1 | Tags: Efficient solution; Efficient and effective implementation; Mathematical models; Artificial life; Theoretical computer science; Operand

The embodiments herein disclose a system and method for implementing reinforcement learning agents using a reinforcement learning processor. An application-domain specific instruction set (ASI) for implementing reinforcement learning agents and reward functions is created. Further, instructions are created by including at least one of the reinforcement learning agent ID vectors, the reinforcement learning environment ID vectors, and length of vector as an operand. The reinforcement learning agent ID vectors and the reinforcement learning environment ID vectors are pointers to a base address of an operations memory. Further, at least one of said reinforcement learning agent ID vector and reinforcement learning environment ID vector is embedded into operations associated with the decoded instruction. The instructions retrieved by agent ID vector indexed operation are executed using a second processor, and applied onto a group of reinforcement learning agents. The operations defined by the instructions are stored in an operations storage memory.

Owner:ALPHAICS CORP

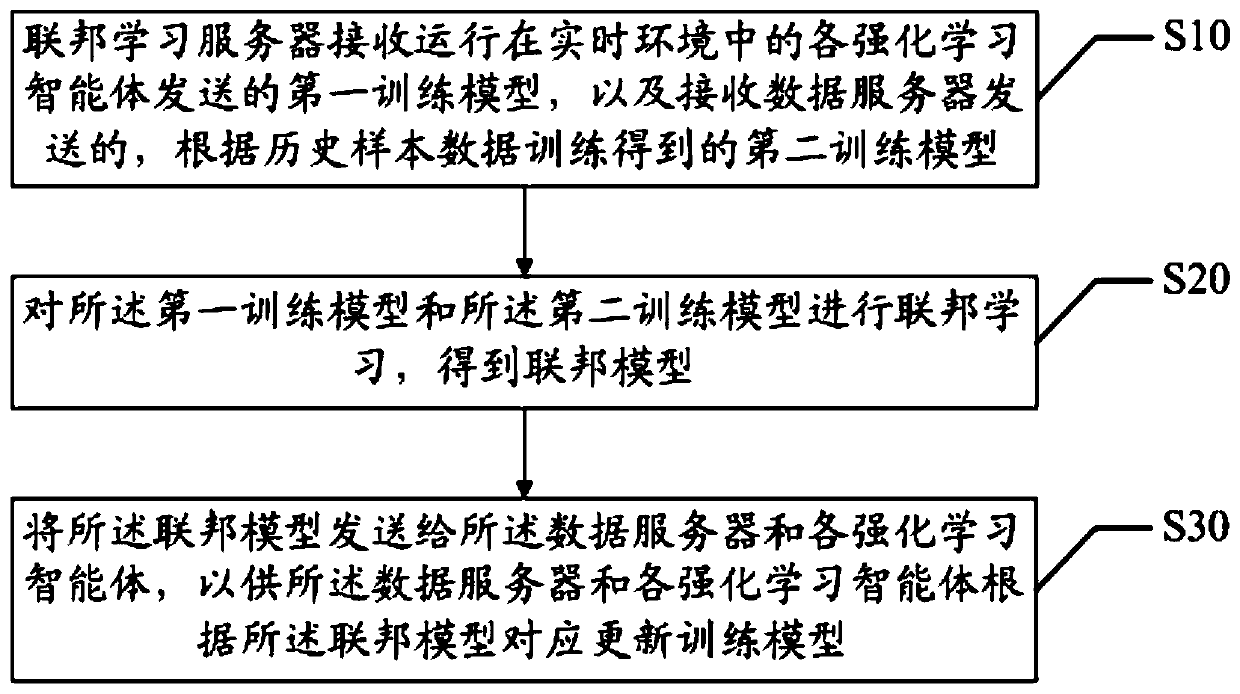

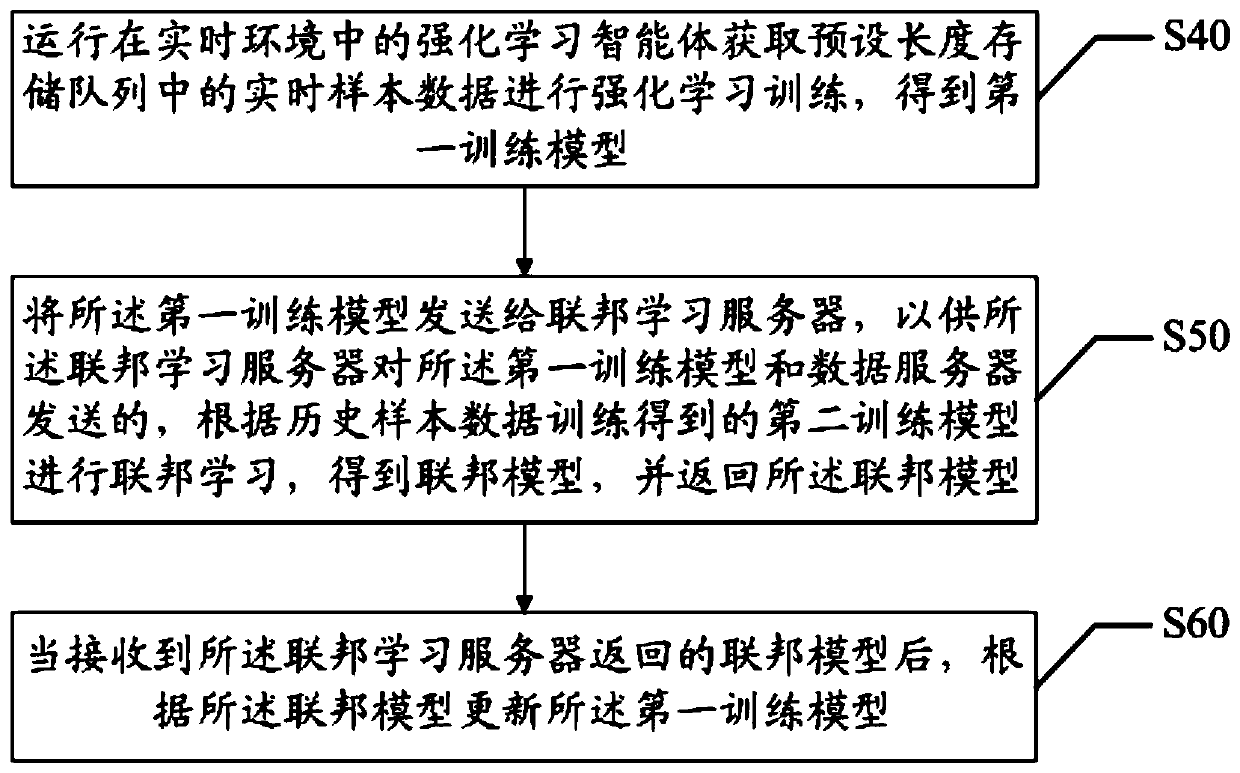

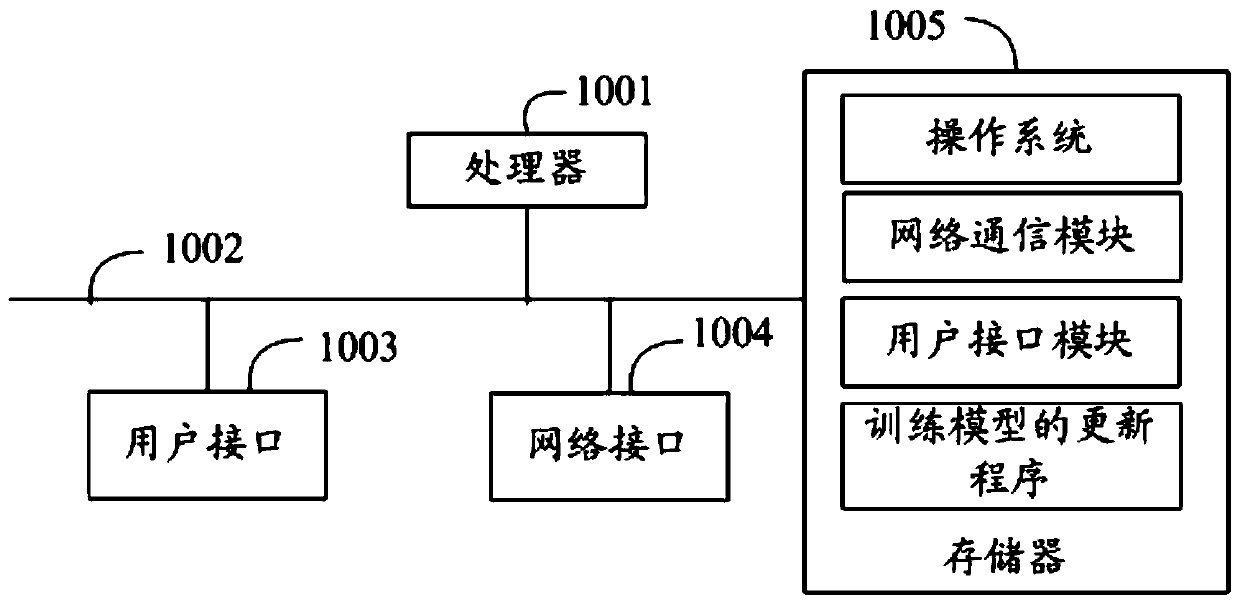

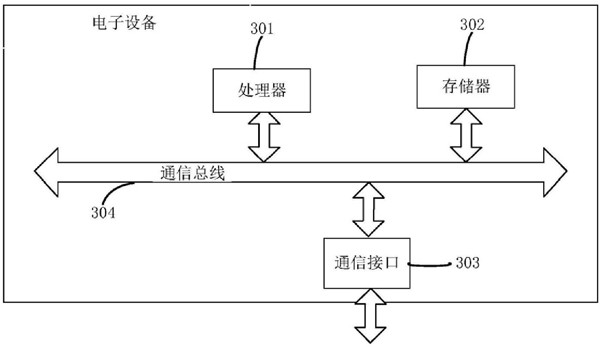

Training model updating method and system, intelligent agent, server and storage medium

The invention discloses a training model updating method and system, an intelligent agent, a server, and a storage medium in the field of financial technology. The method comprises the following steps: a federated learning server receives a first training model from each reinforcement learning agent running in a real-time environment, and receives a second training model from a data server, trained on historical sample data; federated learning is performed on the first and second training models to obtain a federated model; and the federated model is sent to the data server and to each reinforcement learning agent, which then update their own training models accordingly. The method is said to improve the accuracy of the training model's results (that is, its ability to extract knowledge from samples) as well as its stability.

Owner:WEBANK (CHINA)
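
The abstract does not specify the fusion rule used in the federated learning step. A common choice is federated averaging, a weighted element-wise average of parameter vectors; the sketch below assumes that rule, with each model weighted by a hypothetical count such as the number of samples it was trained on. The real-time agents' first models and the data server's second model are treated alike.

```python
def federated_average(models, weights):
    """Weighted element-wise average of equal-length parameter vectors.

    models:  the agents' first training models plus the data server's second model
    weights: one non-negative weight per model (e.g. a hypothetical sample count)
    """
    total = sum(weights)
    return [
        sum(w * params[i] for params, w in zip(models, weights)) / total
        for i in range(len(models[0]))
    ]
```

For example, two agents with parameters `[1.0, 2.0]` and `[3.0, 4.0]` (weight 1 each) and a data-server model `[5.0, 6.0]` (weight 2) fuse to `[3.5, 4.5]`; under the described flow, that vector would then be sent back to every agent and to the data server.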

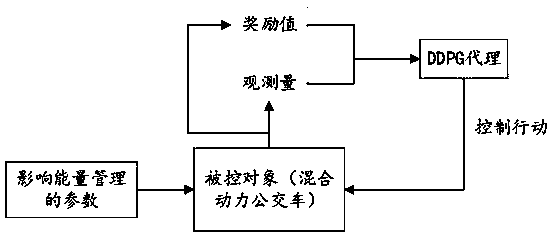

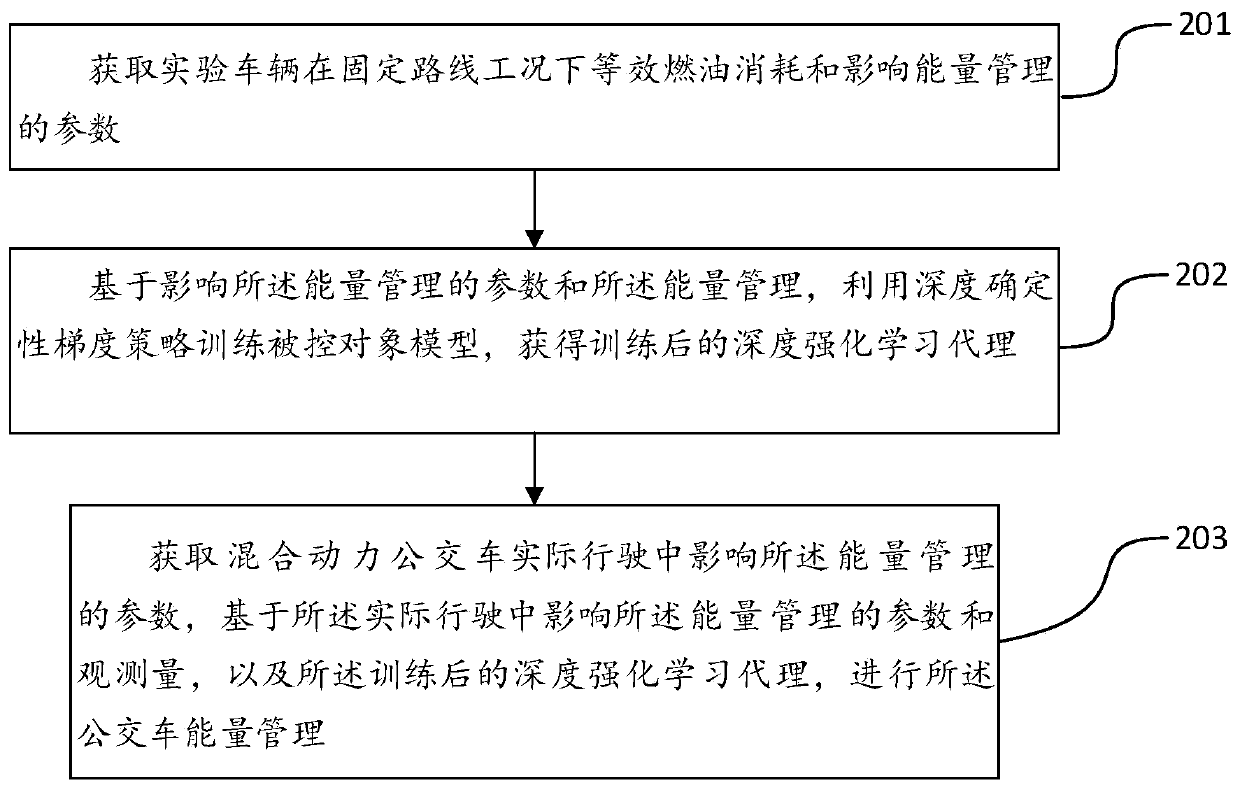

Hybrid power bus energy management method, hybrid bus energy management method equipment and storage medium

Status: Active | Publication: CN111267830A | Tags: Reduce consumption; Effective control; Hybrid vehicles; Other vehicle parameters; Transit bus; Bus

The invention discloses a hybrid bus energy management method, equipment, and a storage medium. The method comprises: obtaining the parameters that influence the energy management of an experimental vehicle under fixed-route bus operating conditions; training a model on these parameters and the observed quantities to obtain a trained deep reinforcement learning agent; and obtaining the same parameters and observed quantities during actual driving of the bus, then performing energy management of the hybrid bus on the fixed route based on them and the trained deep reinforcement learning agent. With this technical scheme, energy management of the hybrid bus can be controlled more effectively and energy consumption is reduced.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

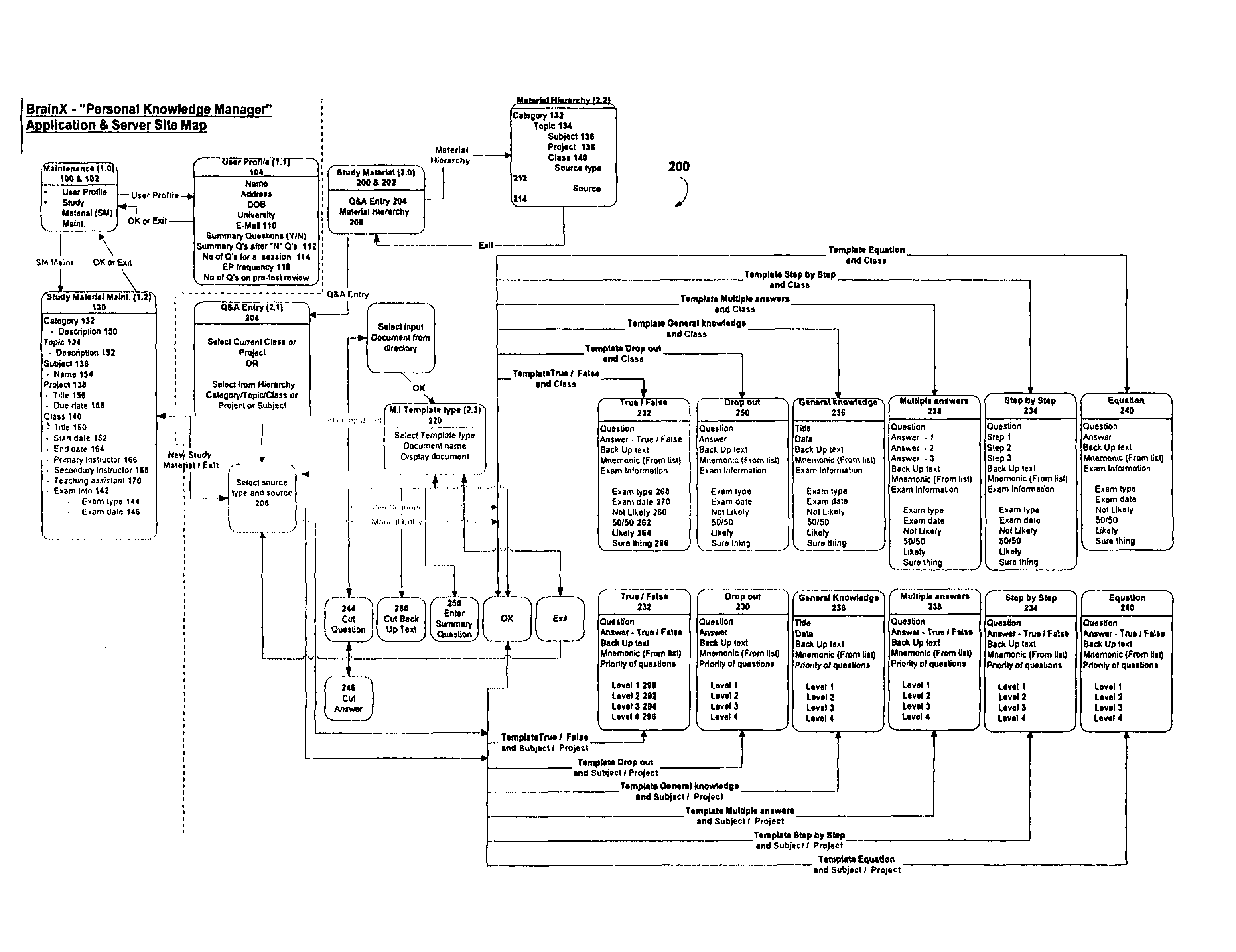

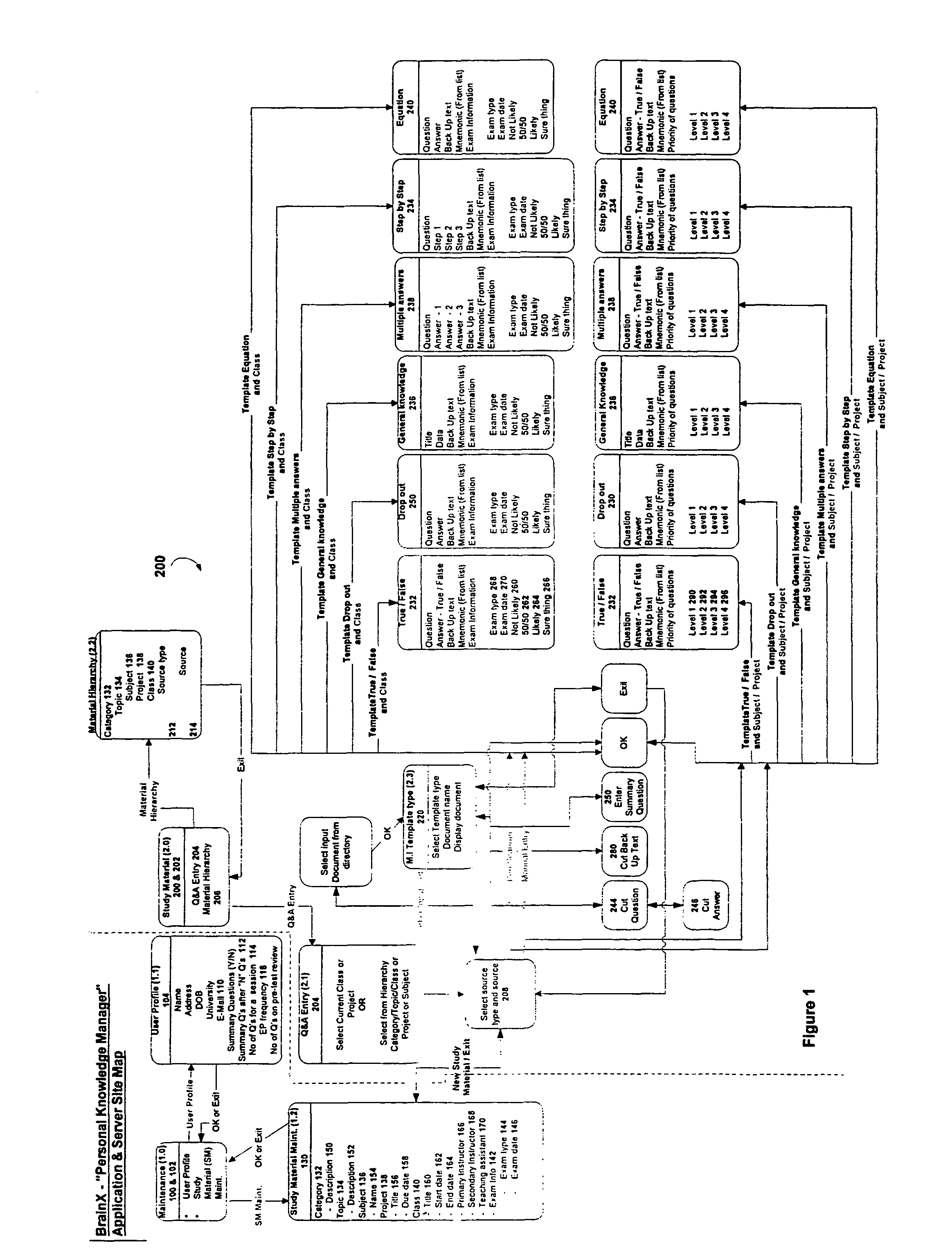

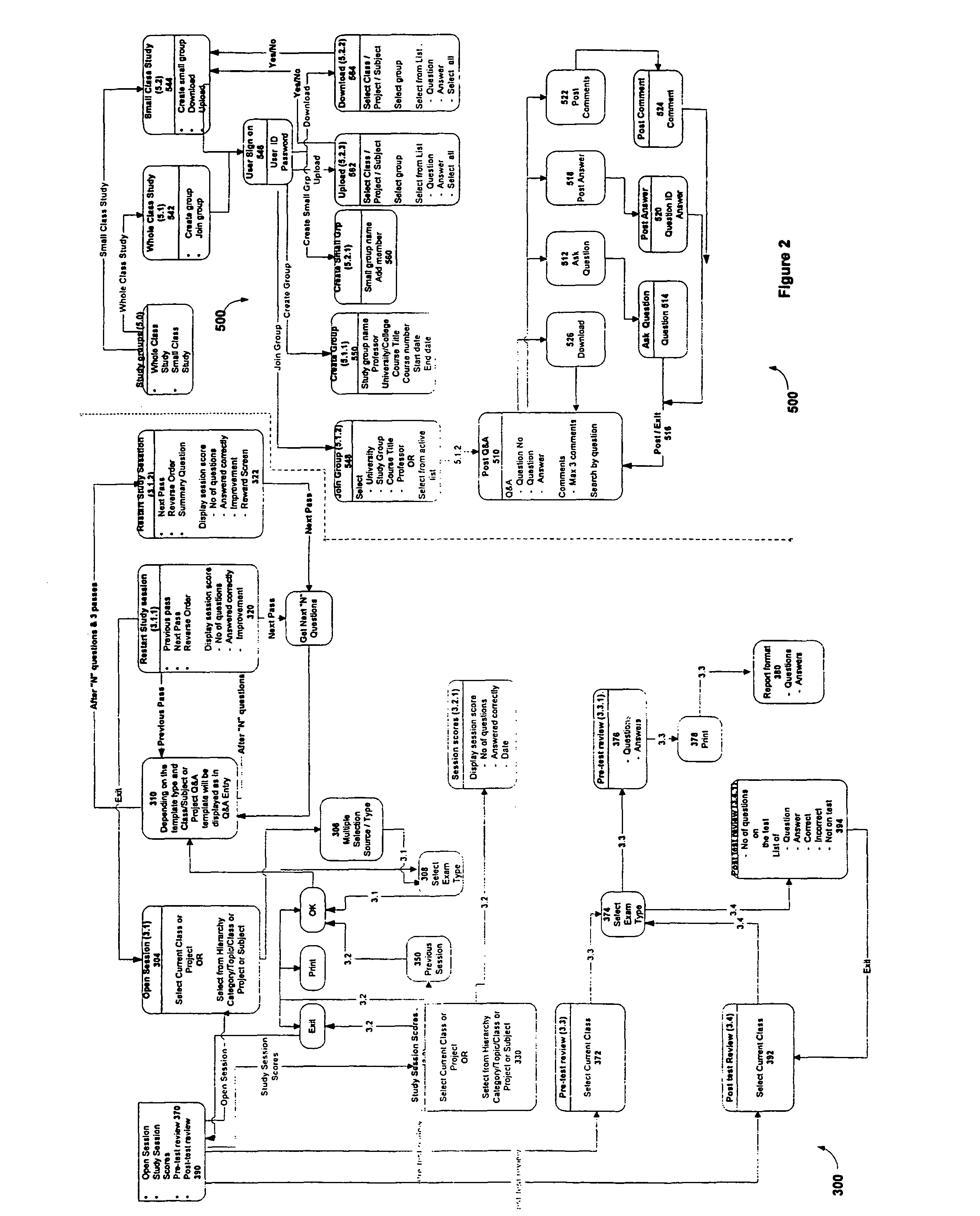

Interactive computer networked study aid and guide

Status: Inactive | Publication: US7918666B1 | Tags: Better understanding; Efficiently presented; Electrical appliances; Mechanical appliances; Information processing; Interactive Learning

An interactive study aid provides study questions selected by the student or another source (208). Preferably in conjunction with machine-implemented or other information processing, the interactive study aid of the present invention lets a student acquire, present/study, and collaborate on study material (202) information. Study material (202) information may be acquired from soft-text resources such as digital books or online resources. A pen scanner may be used to capture hard-copy information, making pertinent portions available to the interactive study aid. Study material information may also be entered by hand. After entering the study material, the student can indicate how likely it is to appear on a test or how important the information is to the student. Study sessions orchestrated by an Intelligent Learning Agent guide the student toward the material he or she is most interested in, whether for tested/evaluated coursework for a grade or for self-study and personal interest.

Owner:BRAINX COM

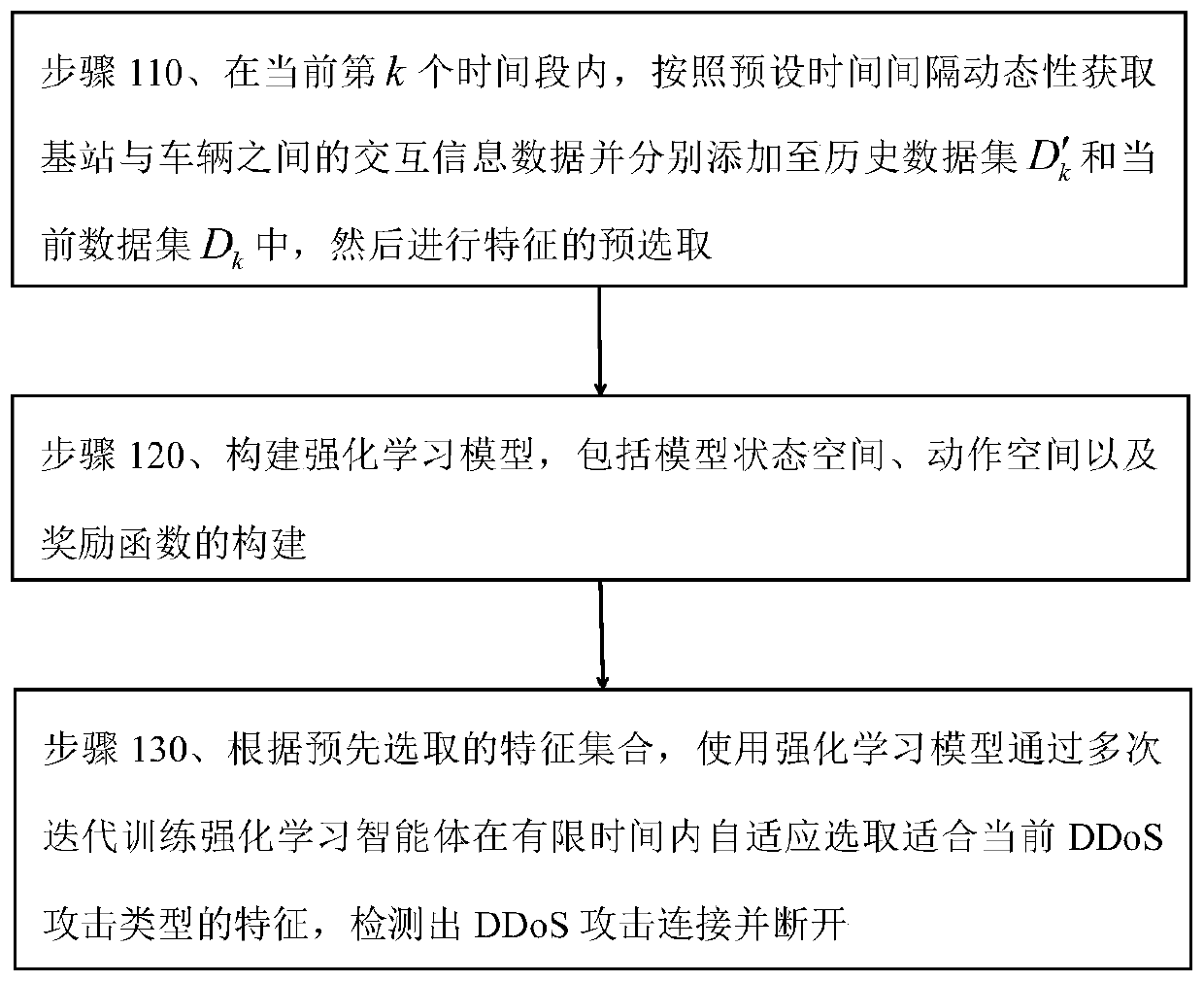

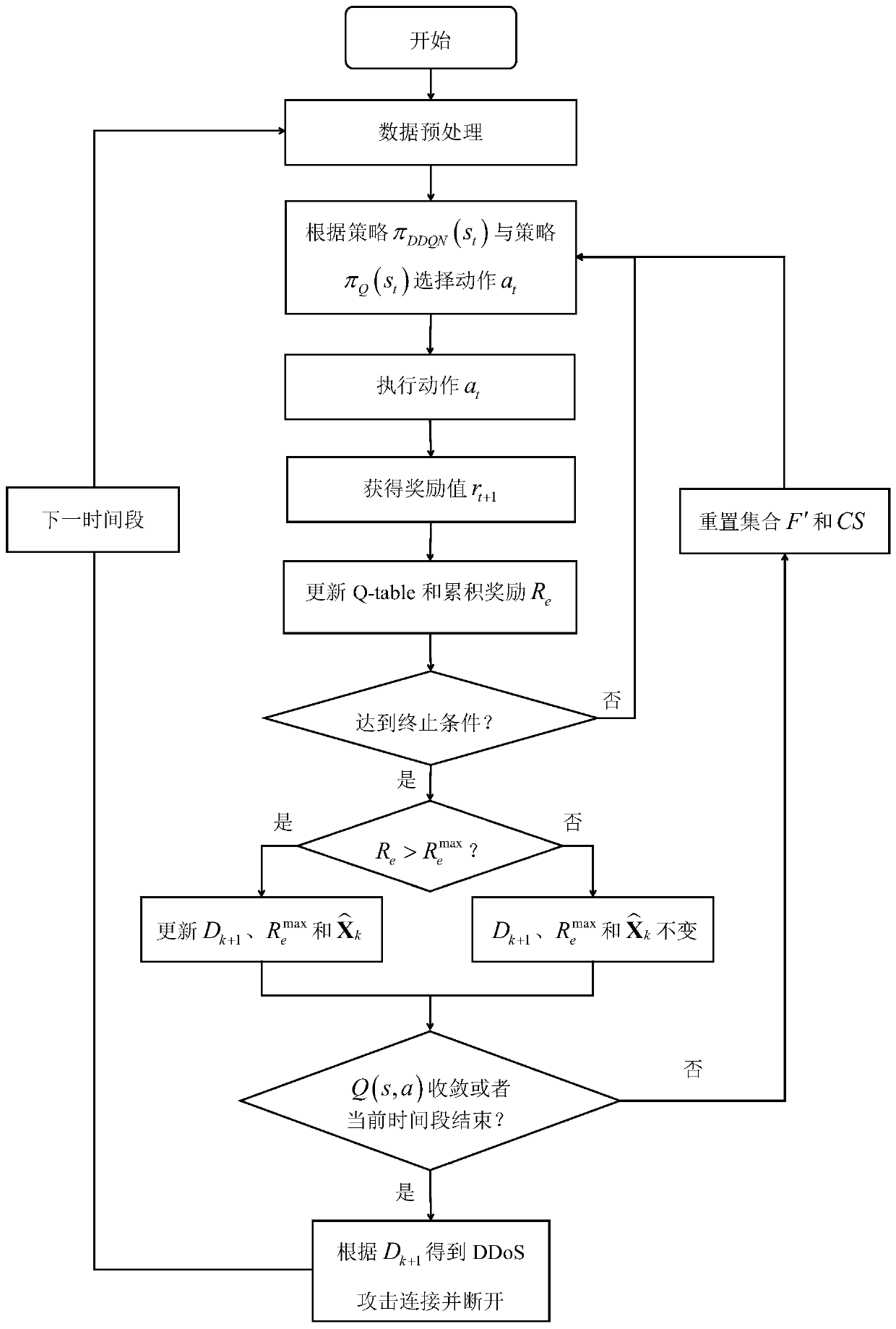

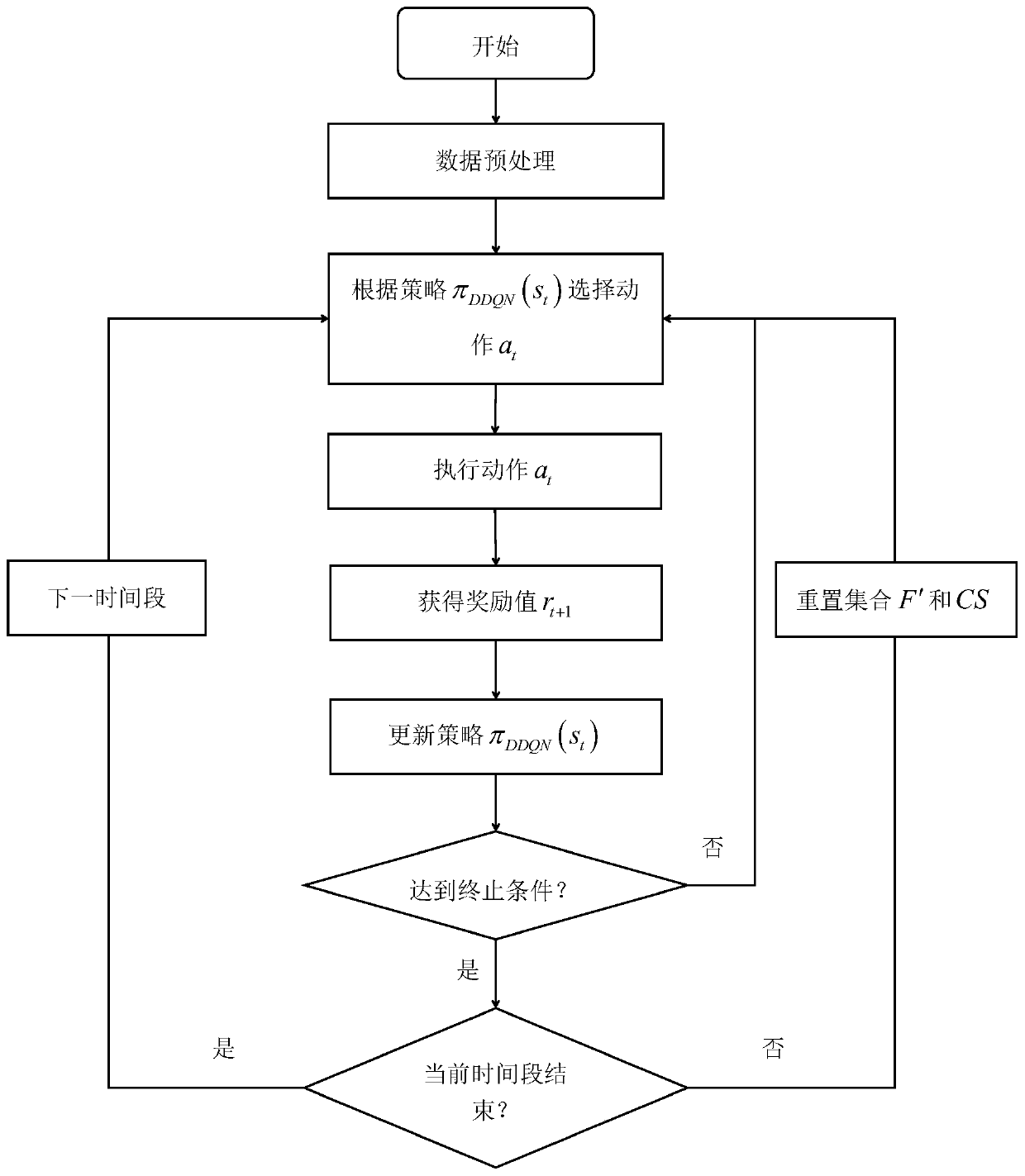

Feature adaptive reinforcement learning DDoS attack elimination method and system

The embodiment of the invention discloses a feature-adaptive reinforcement learning method and system for eliminating DDoS attacks. An improved, simplified feature subset is extracted from the collected historical data; a reinforcement learning model is built from the latent, predictable spatio-temporal patterns of traffic flow in the Internet of Vehicles; and a Q-learning agent is trained with this model to select features suited to the current DDoS attack type, while a DDQN agent is trained asynchronously to obtain a policy π_DDQN(s_t) that guides the Q-learning agent's action selection. By adaptively learning attack features, the method detects unknown types of DDoS attack in the Internet of Vehicles with little prior knowledge and without depending on labeled data, meeting the Internet of Vehicles' requirements for low latency and high accuracy.

Owner:DONGHUA UNIV
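
The interplay between the Q-learning agent and the DDQN agent rests on two standard update targets. The sketch below shows tabular Q-learning alongside the Double DQN target, in which the online network chooses the next action and the target network evaluates it. Here dictionaries stand in for neural networks, states are hypothetical attack-type labels, actions are candidate features, and the learning rate `alpha` and discount `gamma` are illustrative values; how the patent couples the two agents is not reproduced.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Tabular Q-learning: Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))."""
    target = reward + gamma * max(q[next_state].values())
    q[state][action] += alpha * (target - q[state][action])


def double_dqn_target(q_online, q_target, reward, next_state, gamma=0.9):
    """Double DQN target: r + gamma * Q_target(s', argmax_a' Q_online(s', a'))."""
    next_values = q_online[next_state]
    best_action = max(next_values, key=next_values.get)
    return reward + gamma * q_target[next_state][best_action]
```

Decoupling action selection from action evaluation is what lets Double DQN reduce the overestimation bias of the plain `max` in Q-learning: if the online table prefers feature `f2` in the next state while the target table values `f1` higher, the Double DQN target uses the target table's (lower) value for `f2`.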

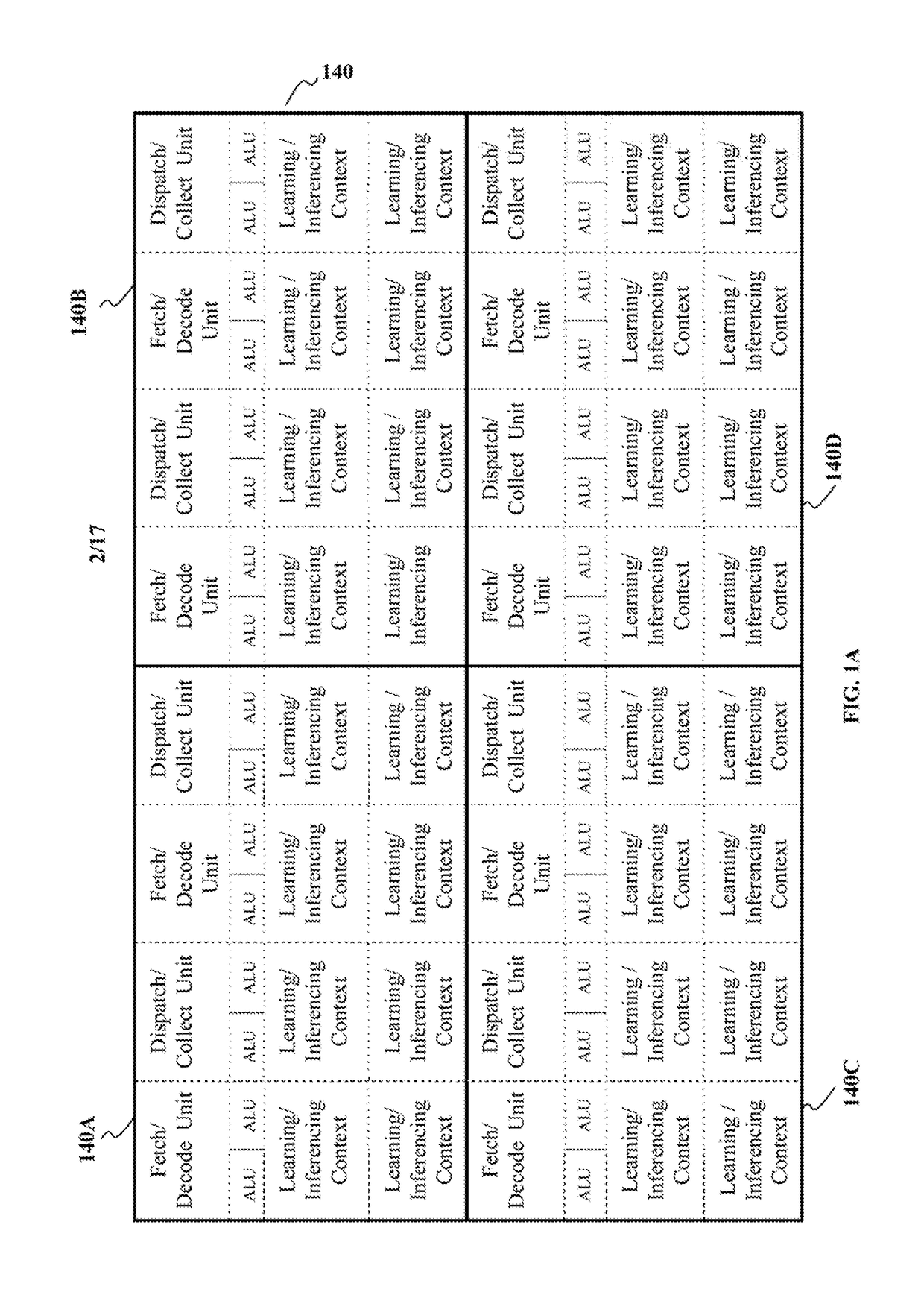

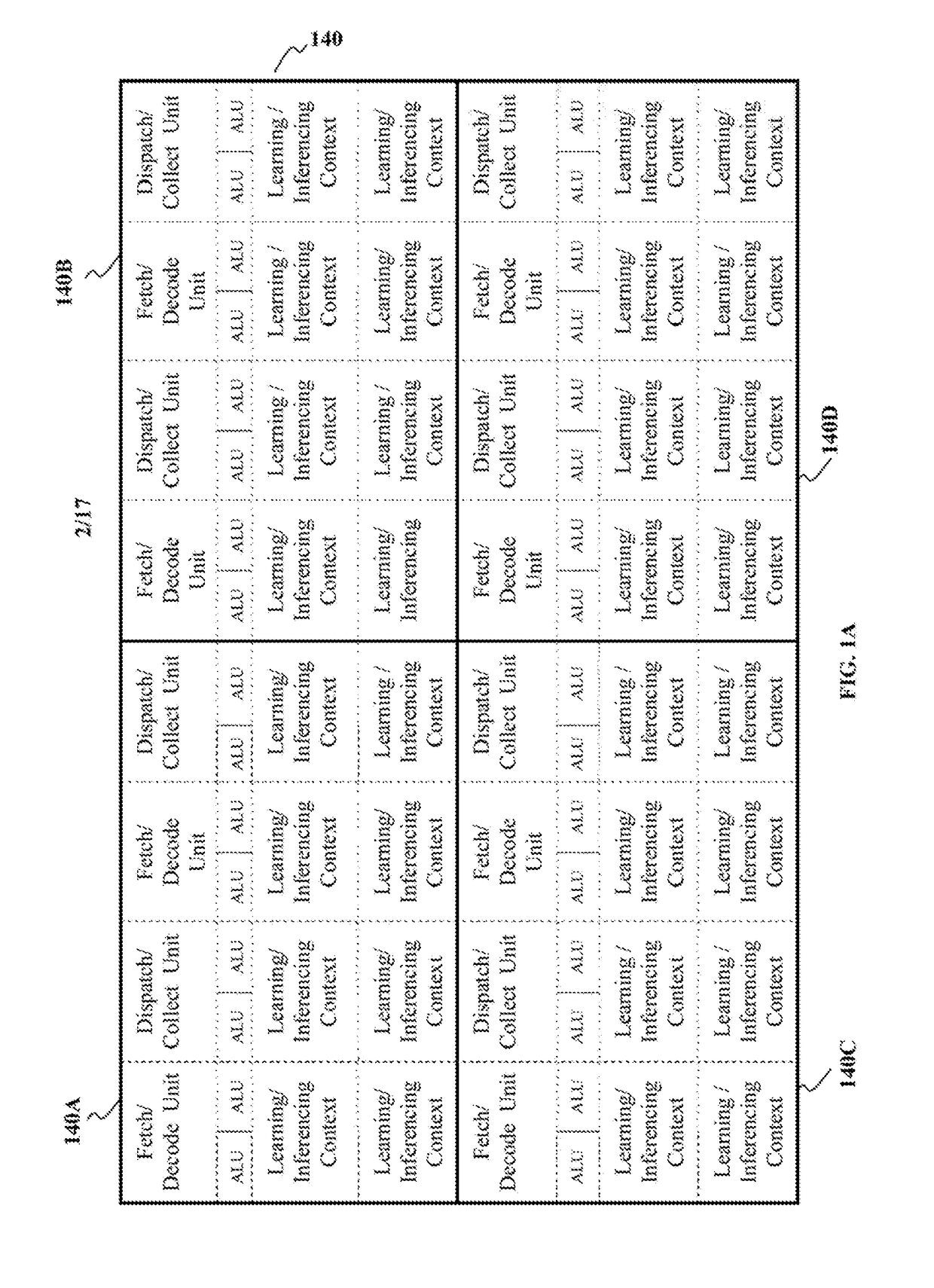

System and method for training artificial intelligence systems using a sima based processor

Status: Active | Publication: US20180260692A1 | Tags: Efficient and effective implementation; Mathematical models; Multiprogramming arrangements; Algorithm; Operand

A reinforcement learning processor specifically configured to train reinforcement learning agents in AI systems by implementing an application-specific instruction set is disclosed. The application-specific instruction set incorporates ‘Single Instruction Multiple Agents (SIMA)’ instructions. SIMA-type instructions are designed to be executed simultaneously on a plurality of reinforcement learning agents that interact with corresponding reinforcement learning environments, and are configured to receive either a reinforcement learning agent ID or a reinforcement learning environment ID as the operand. The reinforcement learning processor is designed for parallelism in reinforcement learning operations: it executes a plurality of threads associated with an operation or task in parallel.

Owner:ALPHAICS CORP
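
SIMA is a hardware instruction-set feature, so software can only approximate it. The sketch below is a hypothetical software analogue: one "instruction" (a tabular Q-learning update) is applied to every agent selected by an environment-ID operand. The `Agent` class, `agents_in_env`, and `sima_q_update` are illustrative names, not AlphaICs APIs; on the processor the per-agent lanes would run in parallel, whereas this loop runs them one after another.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    agent_id: int
    env_id: int
    q: dict = field(default_factory=dict)  # (state, action) -> value


def agents_in_env(agents, env_id):
    """Resolve an environment-ID operand into the IDs of the agents in that environment."""
    return [a.agent_id for a in agents.values() if a.env_id == env_id]


def sima_q_update(agents, agent_ids, transitions, alpha=0.1, gamma=0.9, actions=(0, 1)):
    """Apply one Q-learning update 'instruction' to every agent named in agent_ids.

    transitions maps agent_id -> (state, action, reward, next_state).
    """
    for agent_id in agent_ids:  # hardware would execute these lanes simultaneously
        agent = agents[agent_id]
        s, a, r, s_next = transitions[agent_id]
        best_next = max(agent.q.get((s_next, b), 0.0) for b in actions)
        old = agent.q.get((s, a), 0.0)
        agent.q[(s, a)] = old + alpha * (r + gamma * best_next - old)
```

Each agent updates only its own table, so the lanes are independent; that independence is the property that makes a single-instruction, multiple-agent execution model possible.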

System and method for training artificial intelligence systems using a sima based processor

Status: Active | Publication: US20180260691A1 | Tags: Reduce training time; Easy to learn; Mathematical models; Multiprogramming arrangements; Algorithm; Operand

A reinforcement learning processor specifically configured to train reinforcement learning agents in AI systems by implementing an application-specific instruction set is disclosed. The application-specific instruction set incorporates ‘Single Instruction Multiple Agents (SIMA)’ instructions. SIMA-type instructions are designed to be executed simultaneously on a plurality of reinforcement learning agents that interact with corresponding reinforcement learning environments, and are configured to receive either a reinforcement learning agent ID or a reinforcement learning environment ID as the operand. The reinforcement learning processor is designed for parallelism in reinforcement learning operations: it executes a plurality of threads associated with an operation or task in parallel.

Owner:ALPHAICS CORP

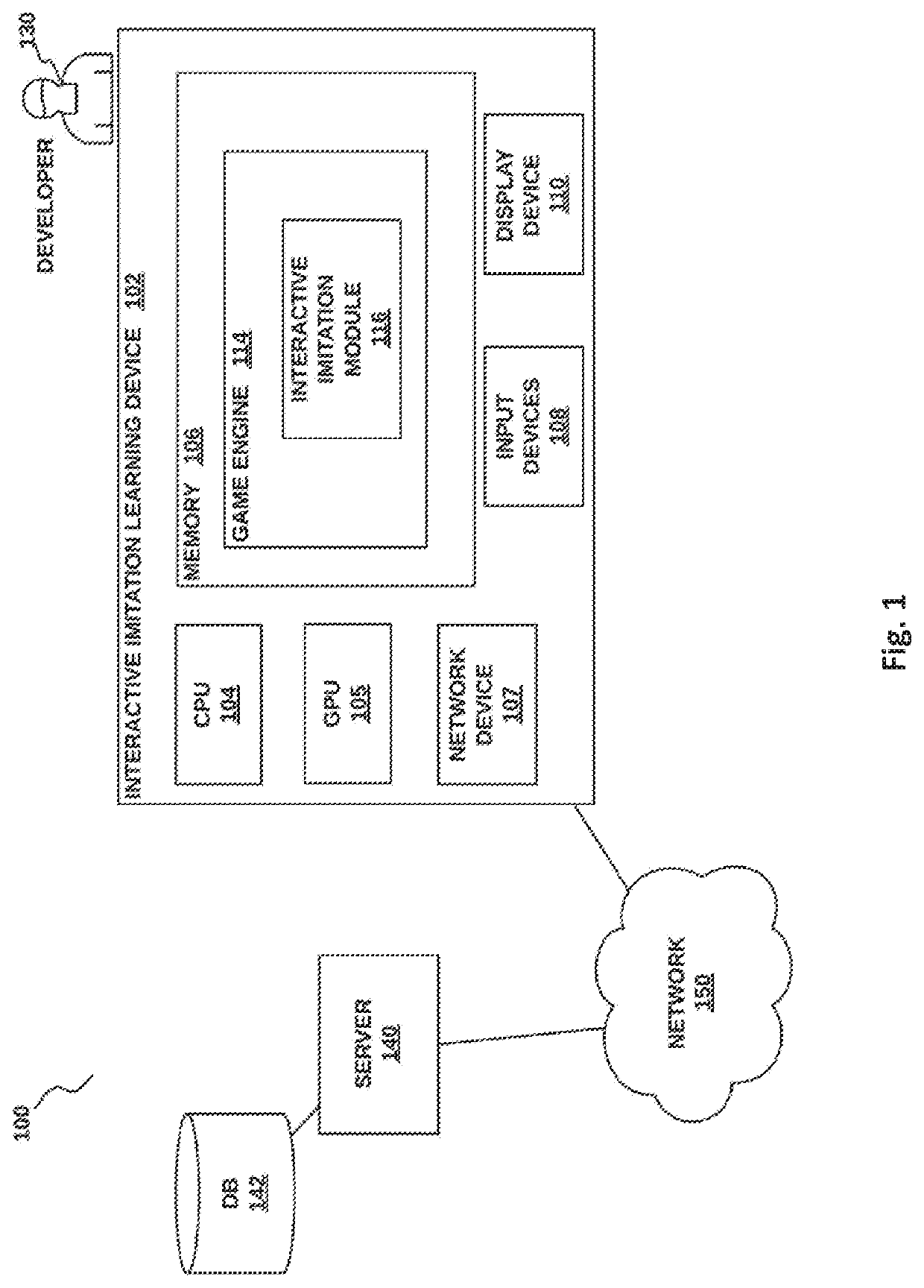

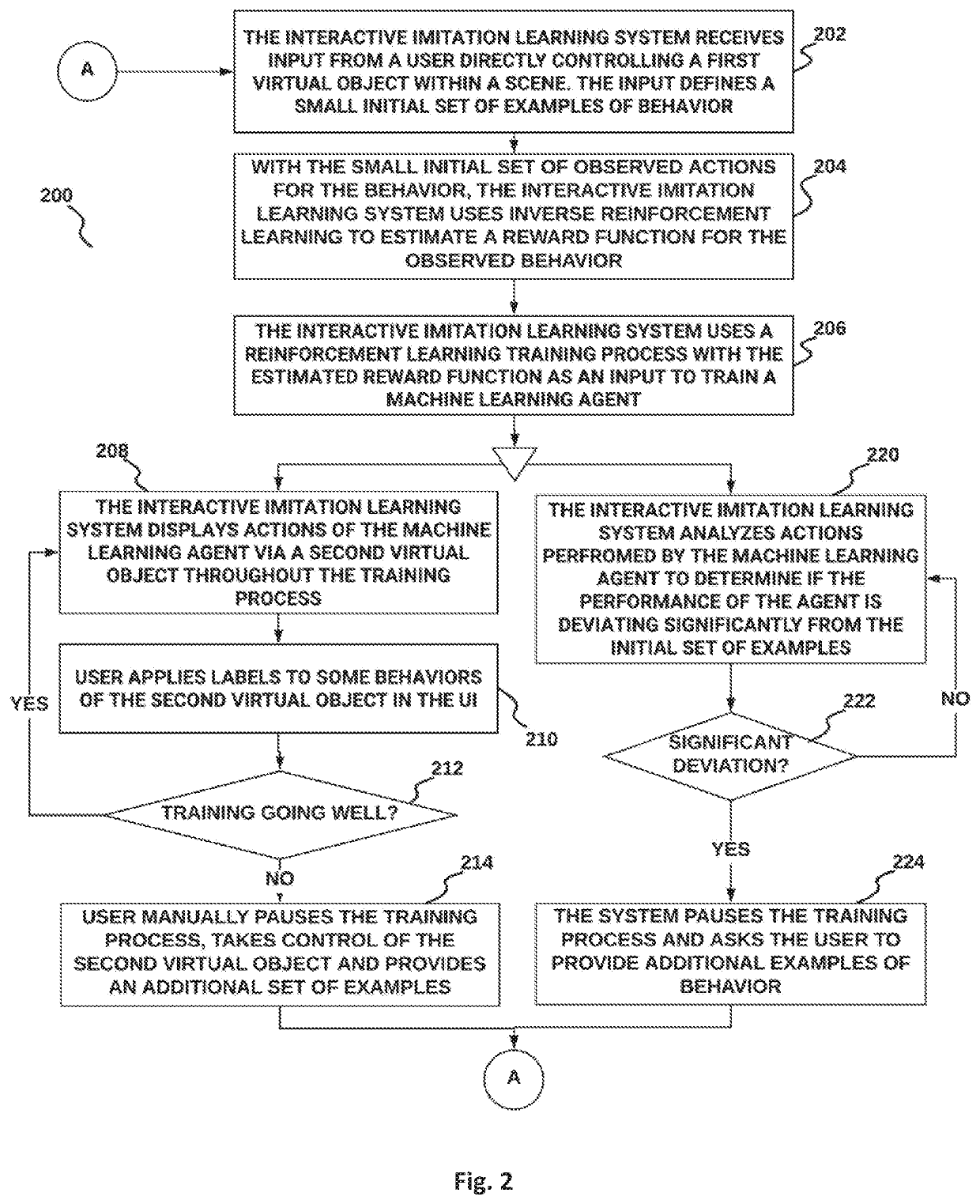

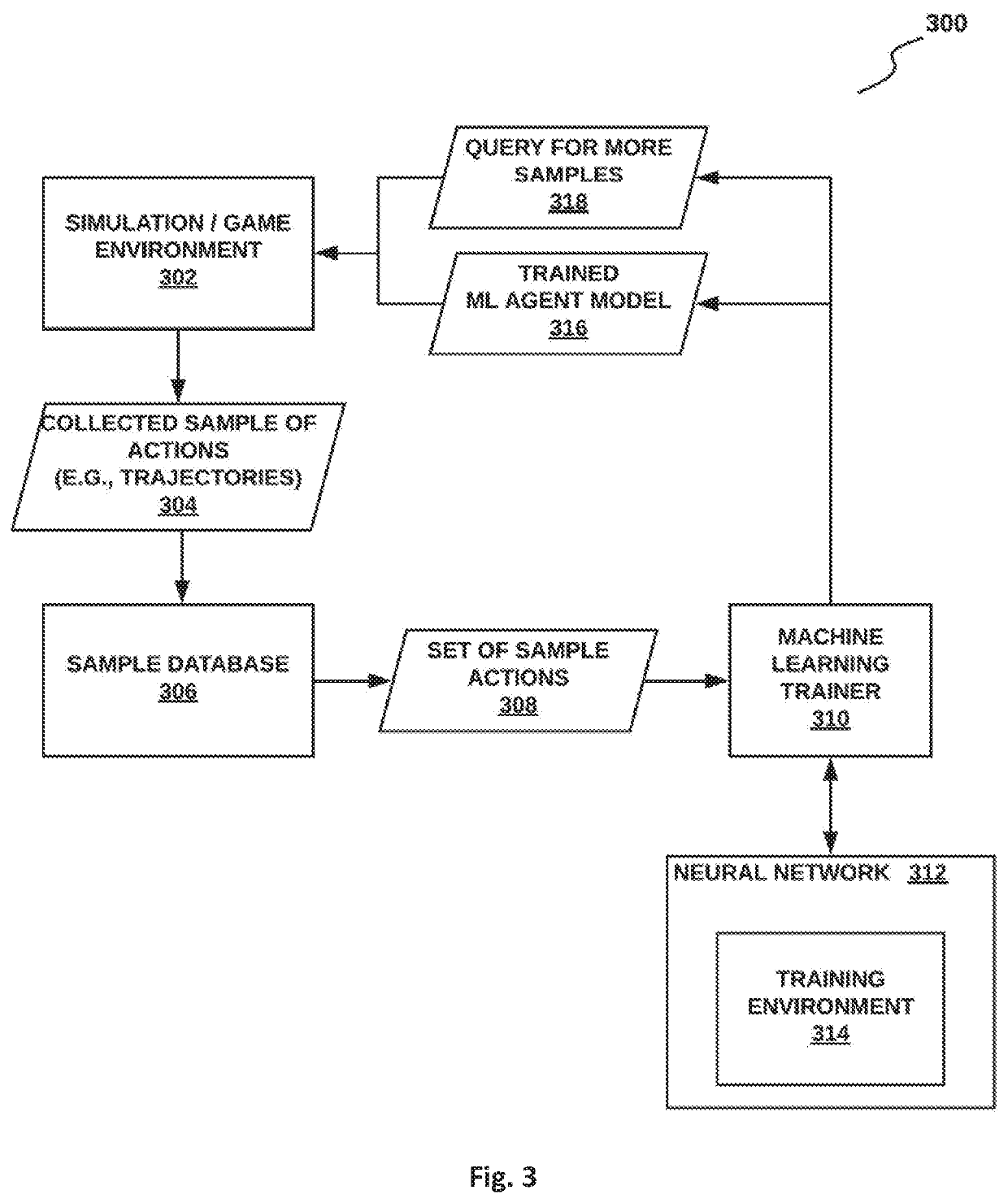

Method and system for interactive imitation learning in video games

In example embodiments, a method of interactive imitation learning is disclosed. An input is received from an input device; the input includes data describing a first set of example actions that define a behavior for a virtual character. Inverse reinforcement learning is used to estimate a reward function for the set of example actions. The reward function and the set of example actions are used as input to a reinforcement learning model to train a machine learning agent to mimic the behavior in a training environment. When a measure of failure of the agent's training reaches a threshold, training is paused and a second set of example actions is requested from the input device. The second set is then used together with the first set to estimate a new reward function.

Owner:UNITY IPR APS
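
The control flow in this abstract can be sketched as a loop that pauses to request more demonstrations whenever training fails. In the sketch below, `request_demos`, `estimate_reward` (the inverse reinforcement learning step), and `train_step` (which trains and returns a failure measure) are hypothetical callables supplied by the caller; the threshold, the round limit, and the rule that a failure measure at or above the threshold triggers a pause are assumptions.

```python
def interactive_imitation_learning(request_demos, estimate_reward, train_step,
                                   failure_threshold=0.5, max_rounds=5):
    """Loop described in the abstract; all callables are hypothetical stand-ins.

    request_demos()             -> list of example actions from the input device
    estimate_reward(demos)      -> reward function estimated by inverse RL
    train_step(reward_fn, demos) -> failure measure of the current training run
    max_rounds must be at least 1.
    """
    demos = list(request_demos())
    for _ in range(max_rounds):
        reward_fn = estimate_reward(demos)
        failure = train_step(reward_fn, demos)
        if failure < failure_threshold:
            return reward_fn, demos
        # Failure reached the threshold: pause training, ask for more examples,
        # and re-estimate the reward from the combined demonstrations next round.
        demos.extend(request_demos())
    return reward_fn, demos
```

Re-estimating the reward from the union of all demonstrations, rather than from the newest set alone, follows the abstract's statement that the second set is used in addition to the first.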

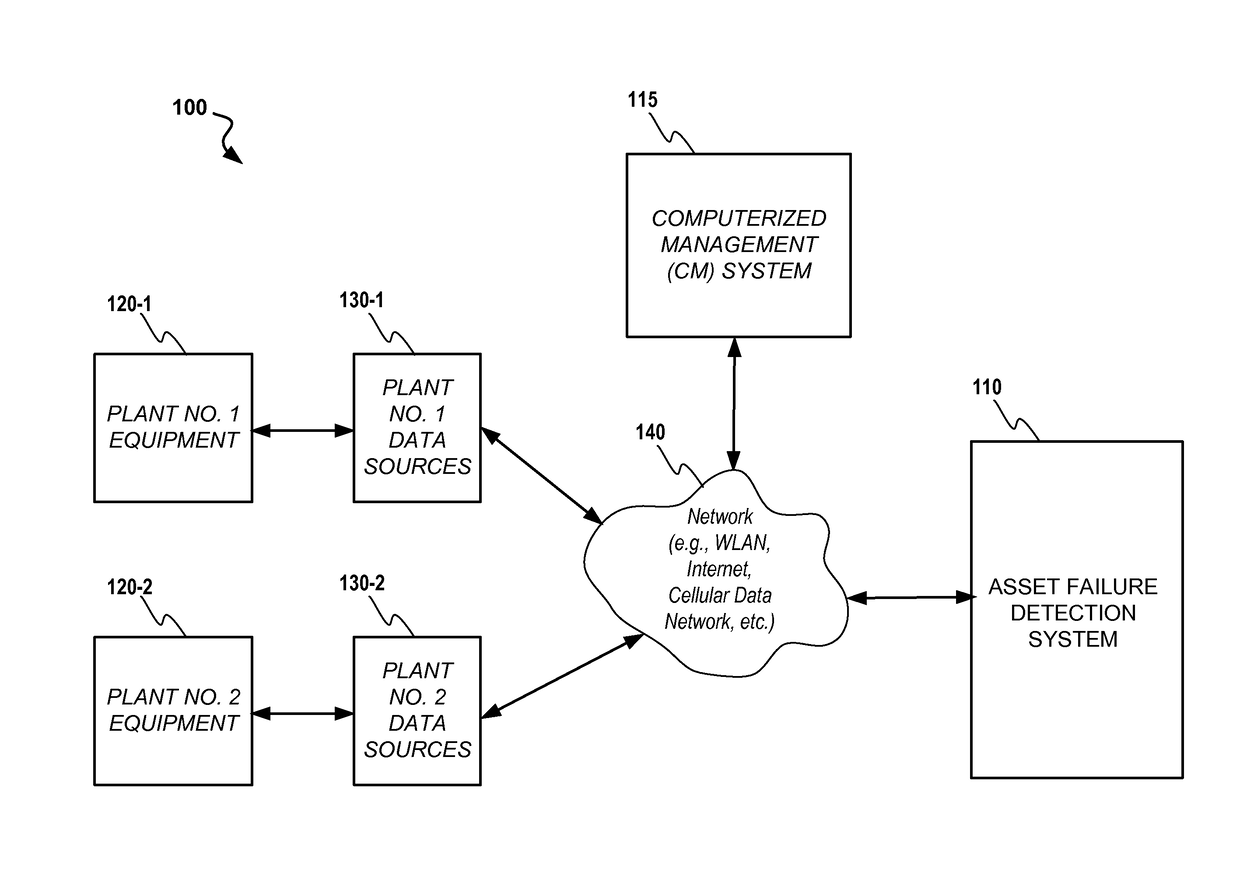

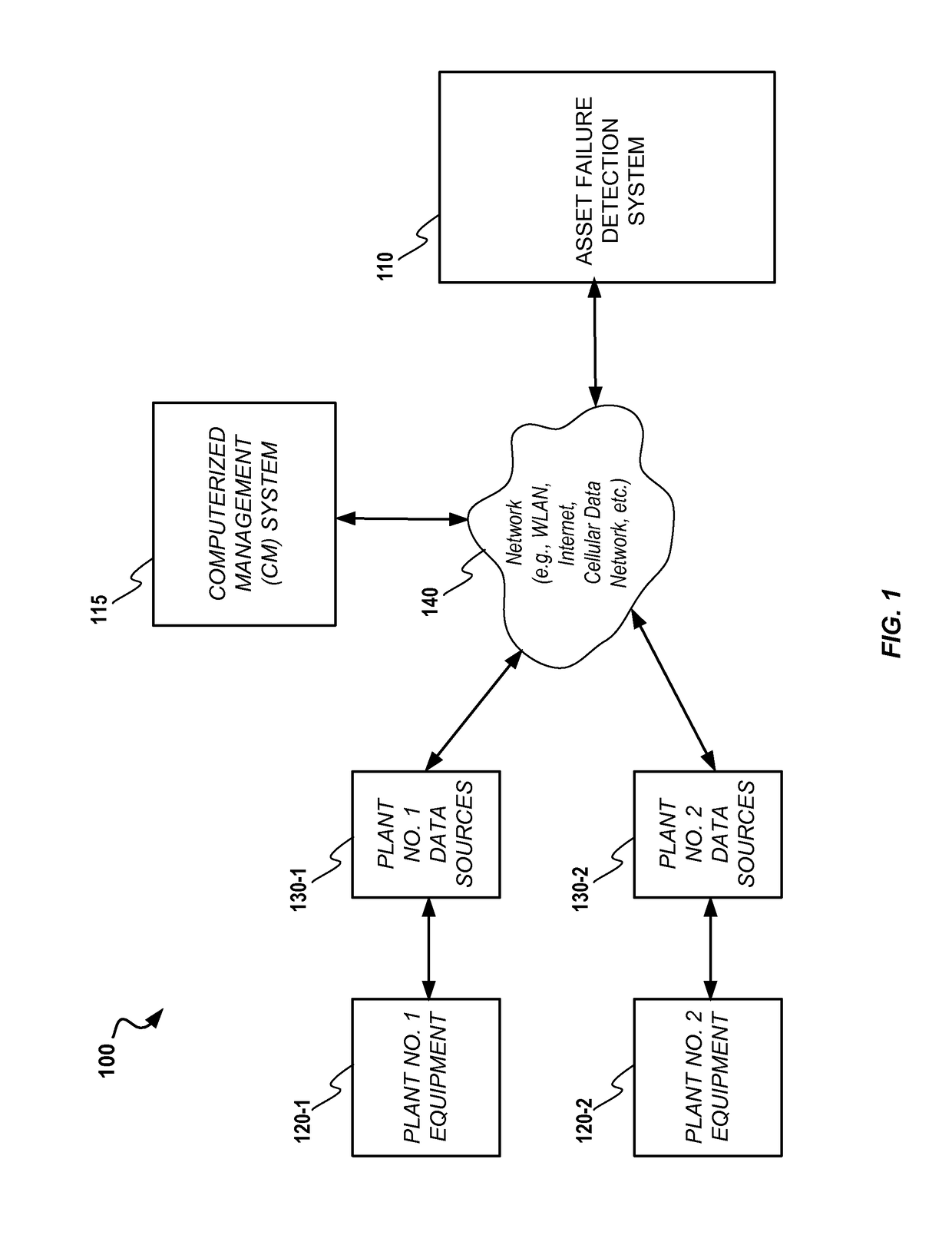

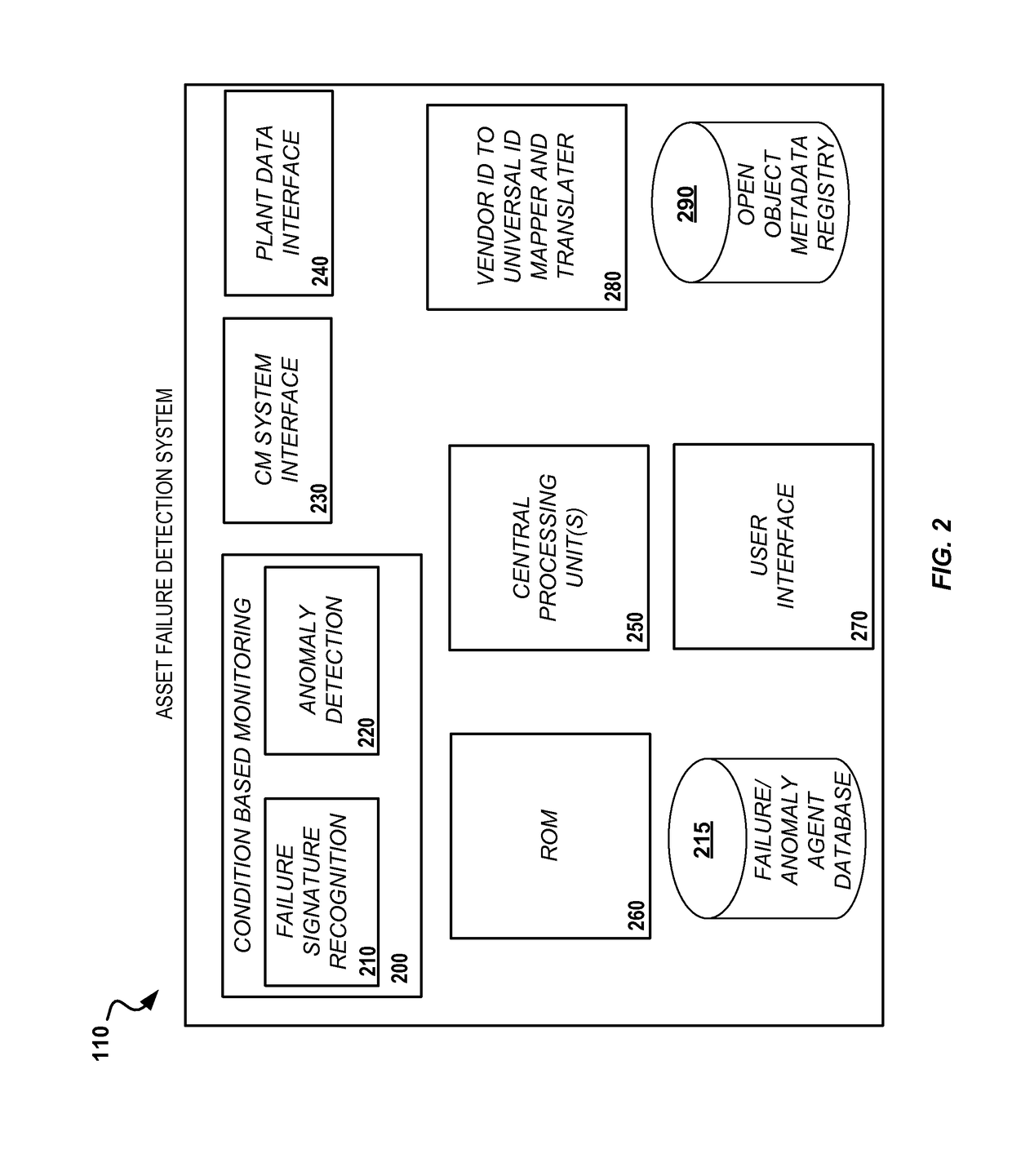

System and Methods for Automated Plant Asset Failure Detection

Status: Active | Publication: US20170083830A1 | Tags: Testing/monitoring control systems; Non-redundant fault processing; Information analysis; Device failure

A system for performing failure signature recognition training for at least one unit of equipment. The system includes a memory and a processor coupled to the memory. The processor is configured by computer code to receive sensor data relating to the unit of equipment and to receive failure information relating to equipment failures. The processor is further configured to analyze the sensor data in view of the failure information in order to develop at least one learning agent for performing failure signature recognition with respect to the at least one unit of equipment.

Owner:ASPENTECH CORP

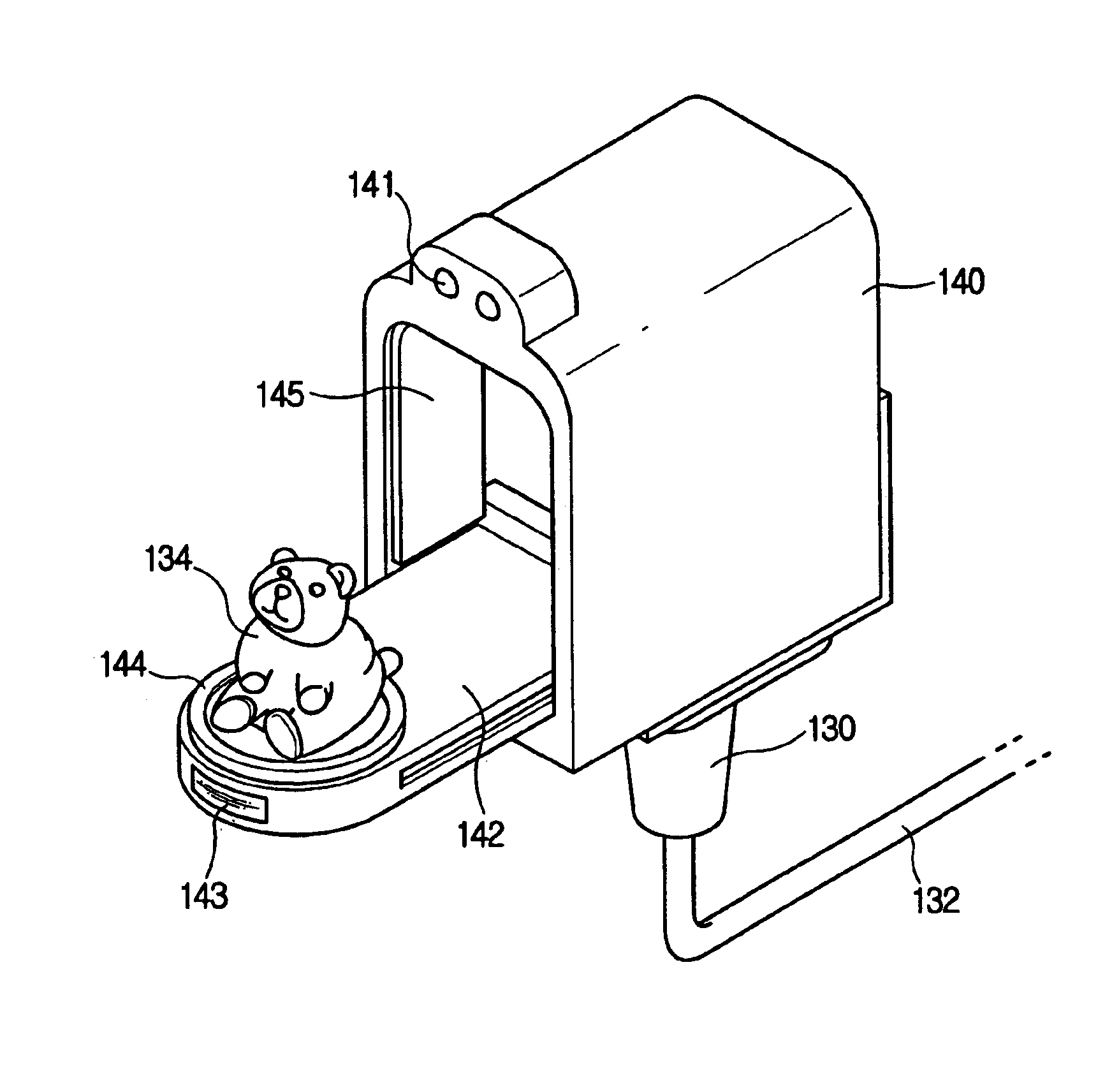

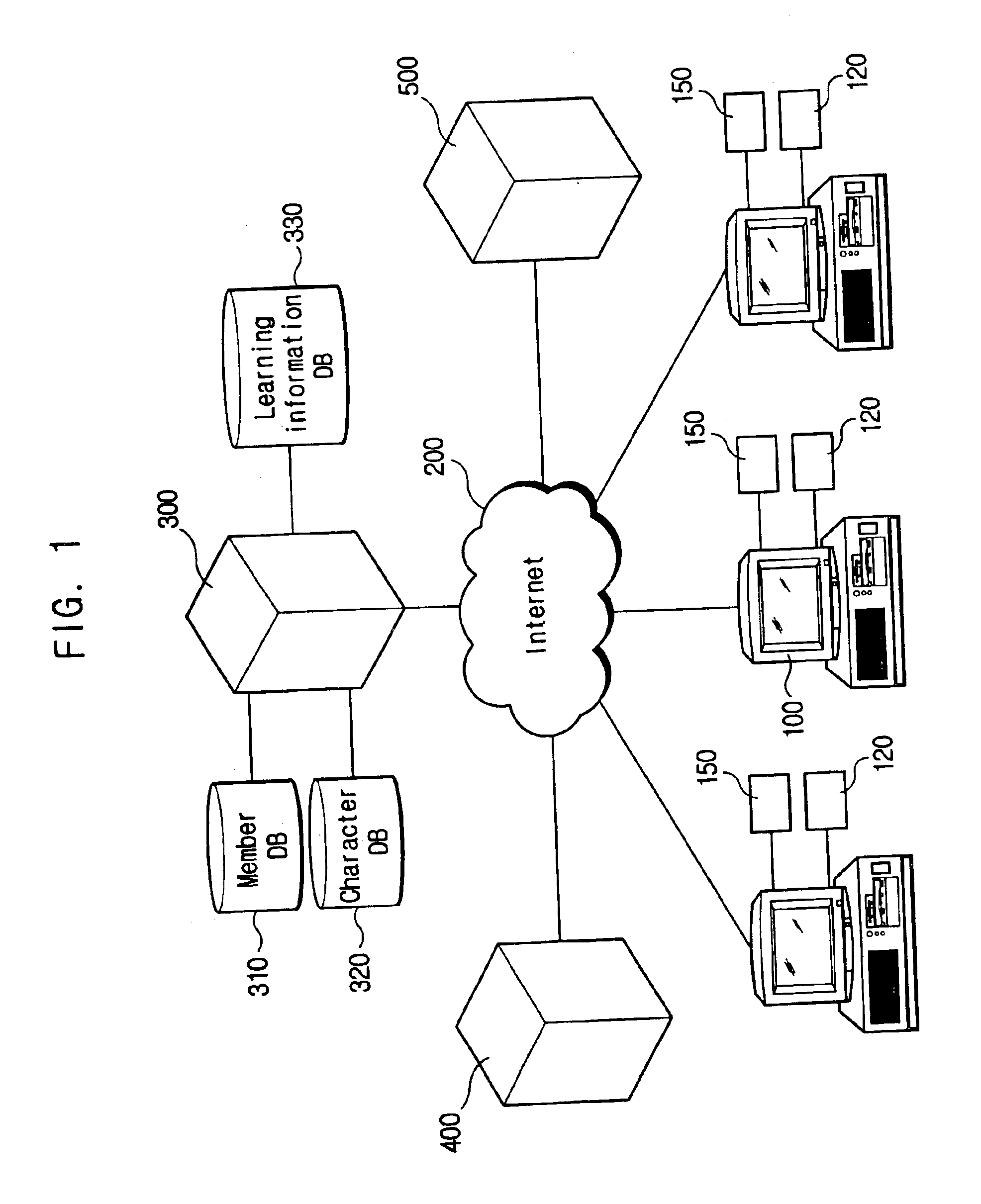

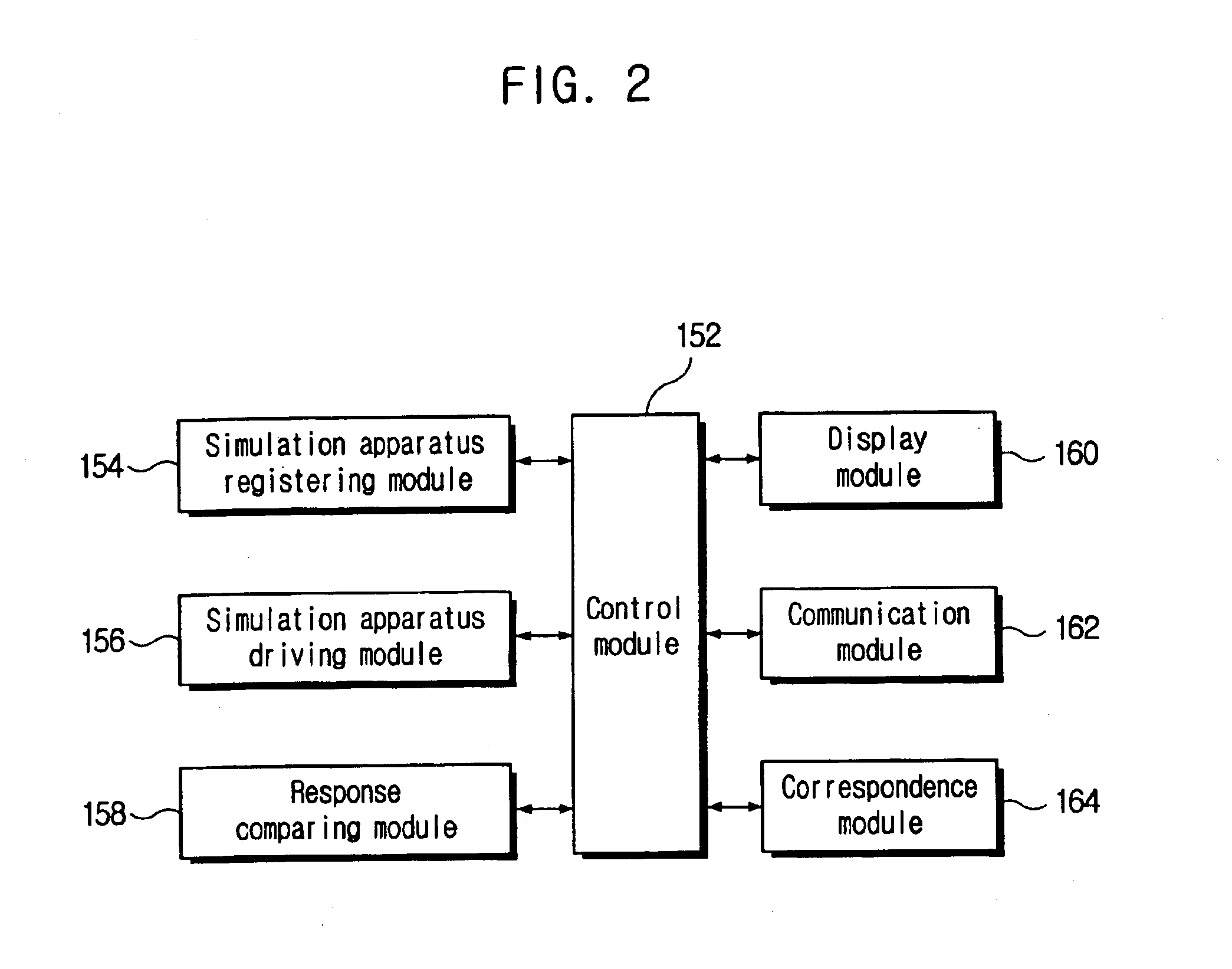

Monitor top typed simulation system and method for studying based on internet

Status: Inactive | Publication: US6988896B2 | Tags: Increase curiosity; Improve learning efficiency; Dolls; Data processing applications; Learning based; The Internet

Disclosed is a computer interactive learning system and method. The method is applied to an Internet simulation learning system comprising a learner client, to which at least one computer-controllable learner aid device is electrically connected and on which a learning agent is installed, and a learning server connected to the learner client over the Internet. The method comprises the steps of: operating the learning agent; downloading, from the learning server, learning information and character information corresponding to the learner aid device; registering each learner aid device as a specific character based on the downloaded character information; having the learner respond to content that the learning agent provides based on the learning information; evaluating the learner's response; and operating the learner aid device to perform a corresponding action depending on the evaluation result.

Owner:CHO IN HYUNG

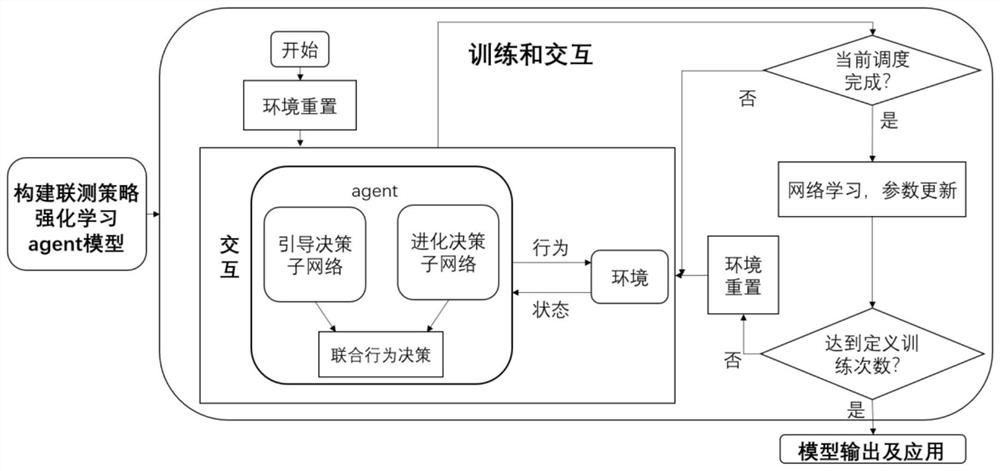

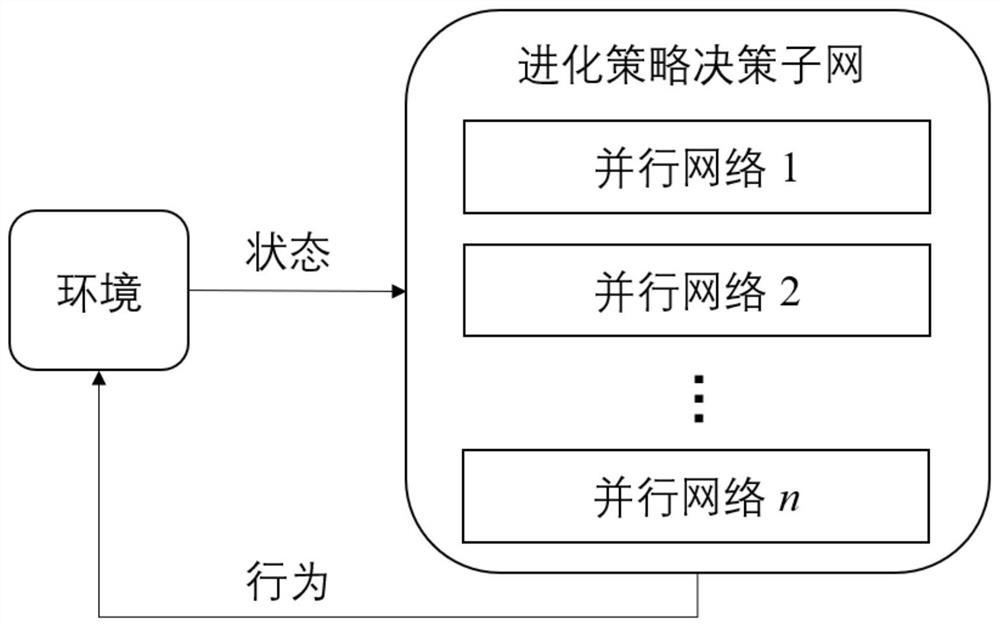

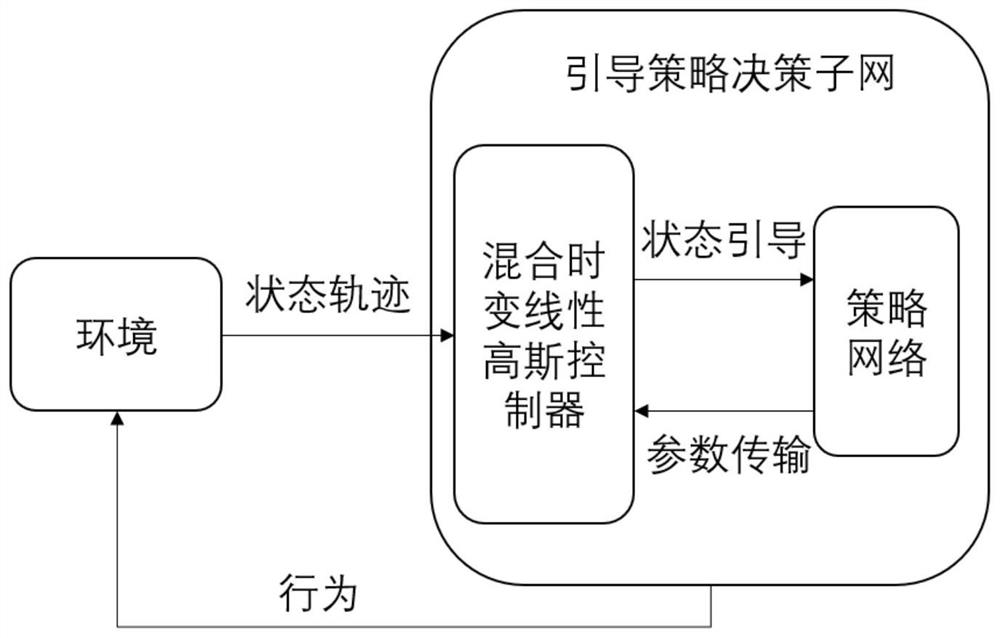

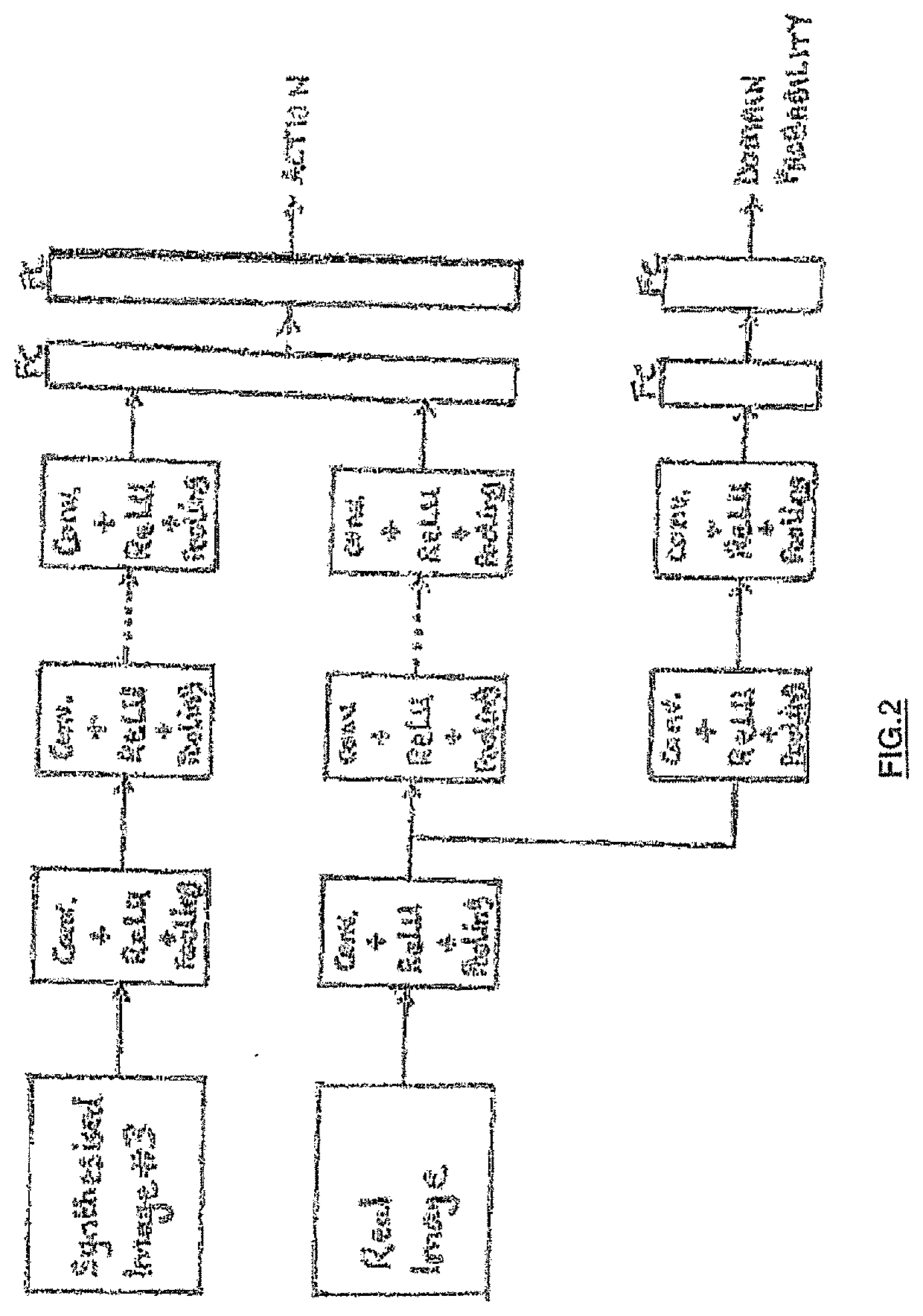

Multi-target cloud workflow scheduling method based on joint reinforcement learning strategy

Status: Active | Publication: CN112685165A | Tags: Improve practicality; Increase dominance; Program initiation/switching; Machine learning; Engineering; Cloud workflow

The invention discloses a multi-objective cloud workflow scheduling method based on a joint reinforcement learning strategy. The method builds a joint strategy model for reinforcement learning agents by extending the attributes and methods of workflow requests and cloud resources, making the scheduling model better suited to real workflow application scenarios. During action selection, the method jointly considers the scheduling process, each decision sub-network, and historical decision information, so that the selected action is more reasonable; this further improves the dominance and diversity of the non-dominated solution set generated by the algorithm and improves the method's practicality.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
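
The non-dominated solution set mentioned in this abstract is the Pareto front of candidate schedules. Assuming each schedule is scored on objectives that are all to be minimized, such as a hypothetical (makespan, cost) pair, the front can be computed as below. This illustrates only the dominance test, not the joint reinforcement learning strategy that generates the candidates.

```python
def dominates(a, b):
    """a dominates b if it is no worse in every objective and strictly better in
    at least one (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(solutions):
    """Return the Pareto front: the solutions not dominated by any other solution."""
    return [s for s in solutions if not any(dominates(other, s) for other in solutions)]
```

For example, among the (makespan, cost) pairs `(10, 5.0)`, `(8, 7.0)`, `(12, 4.0)`, `(11, 6.0)`, and `(8, 7.5)`, the last two are dominated, by `(10, 5.0)` and `(8, 7.0)` respectively, so the front is the first three. A solution never dominates itself, so no self-exclusion check is needed.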

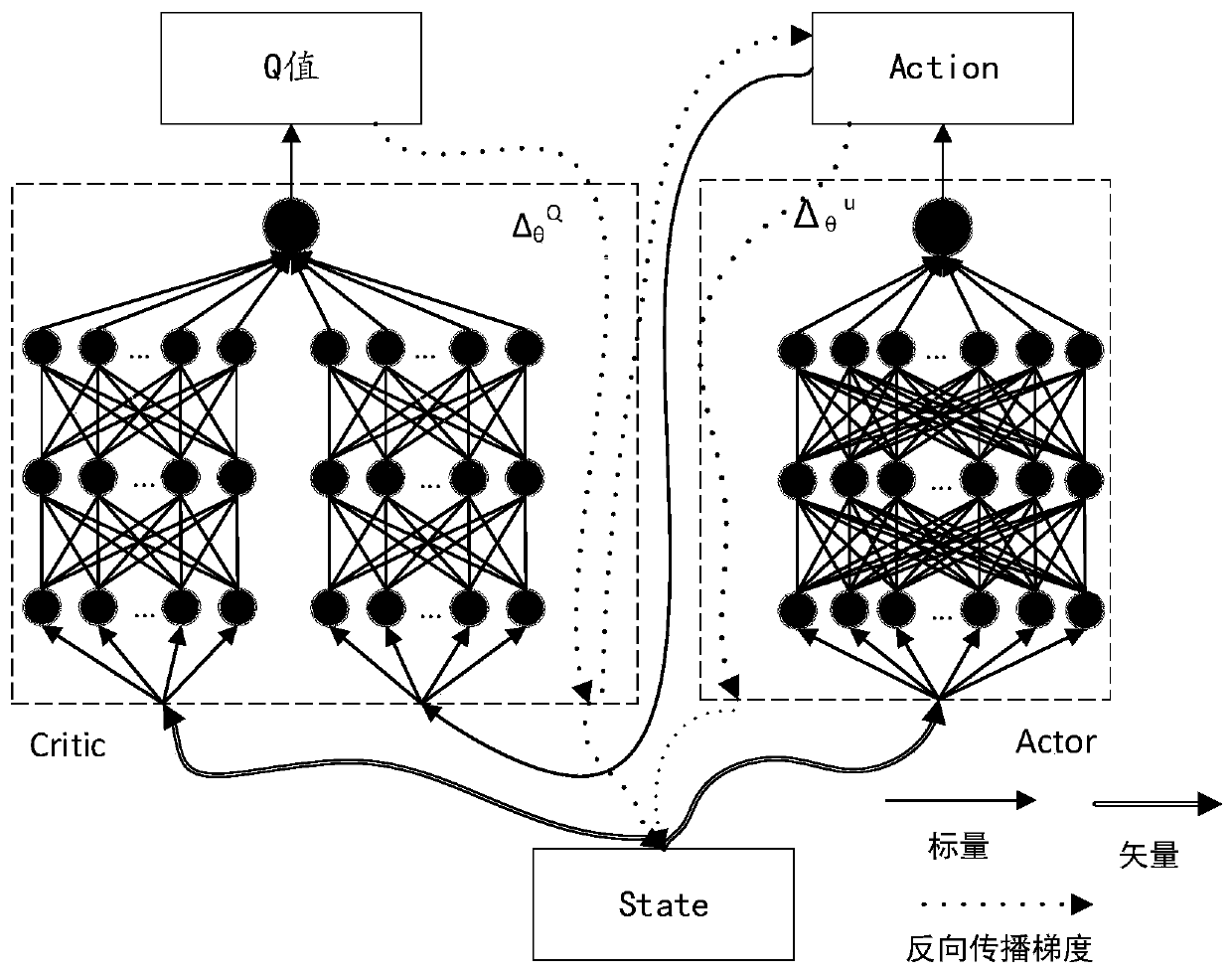

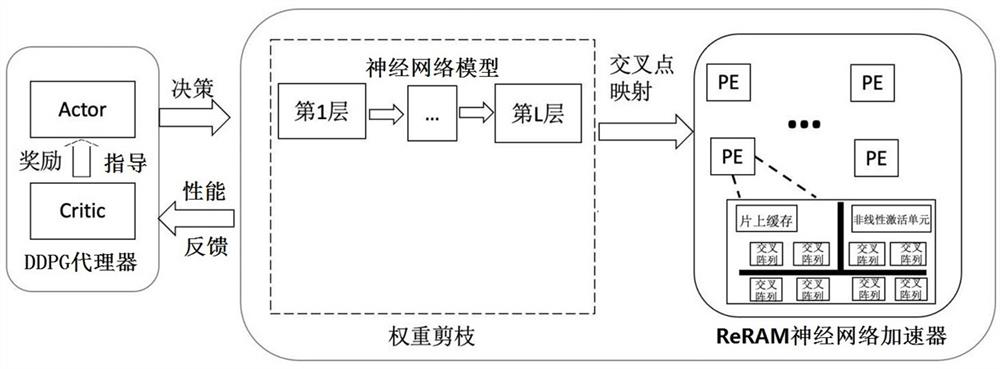

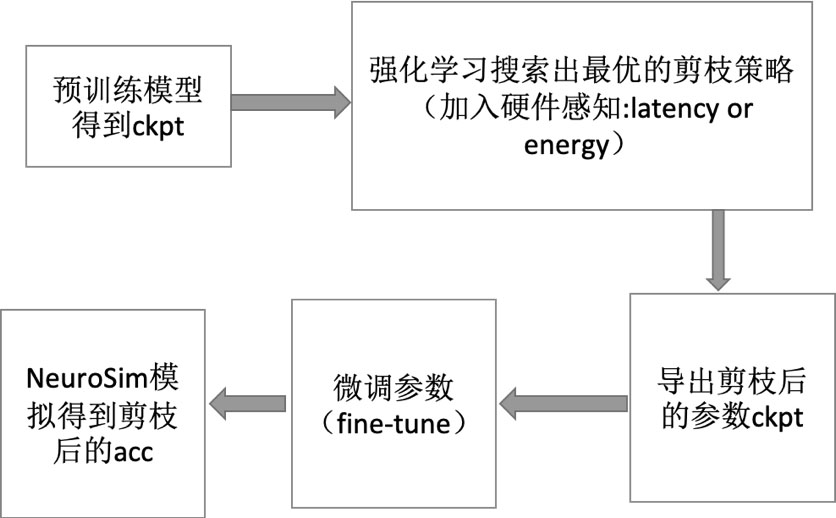

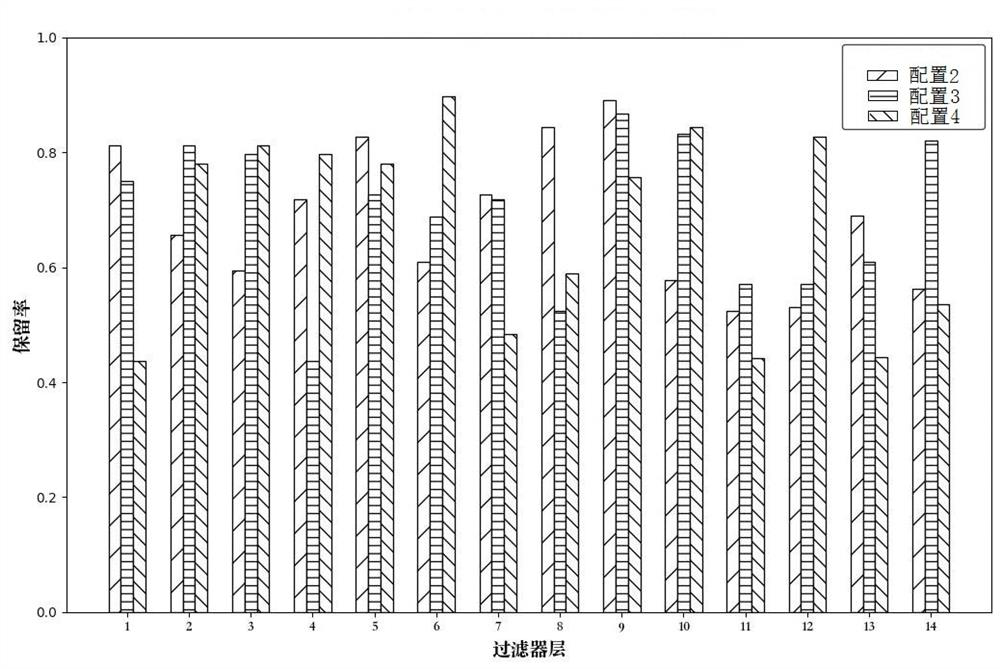

Adjustable hardware aware pruning and mapping framework based on ReRAM neural network accelerator

The invention provides an adjustable hardware-aware pruning and mapping framework based on a ReRAM neural network accelerator. The framework comprises a DDPG agent and the ReRAM neural network accelerator. The DDPG agent consists of an action-decision module (Actor) and an evaluation module (Critic); the Actor makes pruning decisions for the neural network. The ReRAM neural network accelerator maps the model produced under the Actor's pruning decision and feeds the resulting performance parameters back to the Critic as a signal; the performance parameters comprise the simulator's energy consumption, latency, and model accuracy. The Critic updates the reward function value from this feedback and guides the Actor's pruning decision in the next stage. By using a reinforcement learning DDPG agent to find the most efficient pruning scheme that best matches the hardware and user requirements, the method improves latency and energy consumption on the hardware while preserving accuracy.

Owner:ZHEJIANG LAB +1

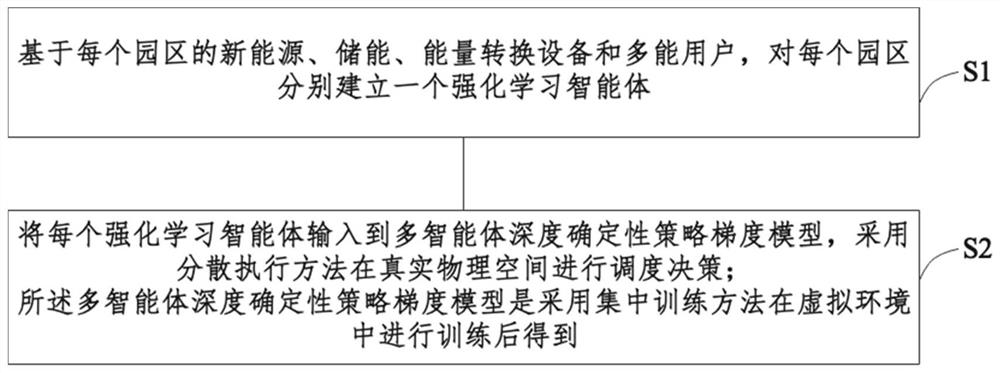

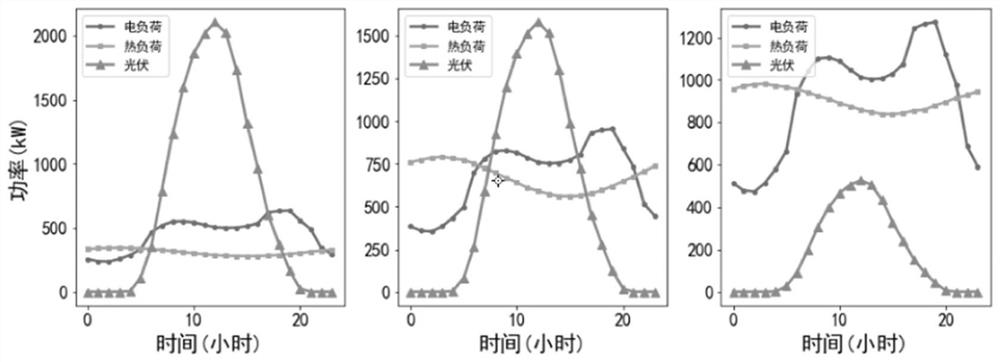

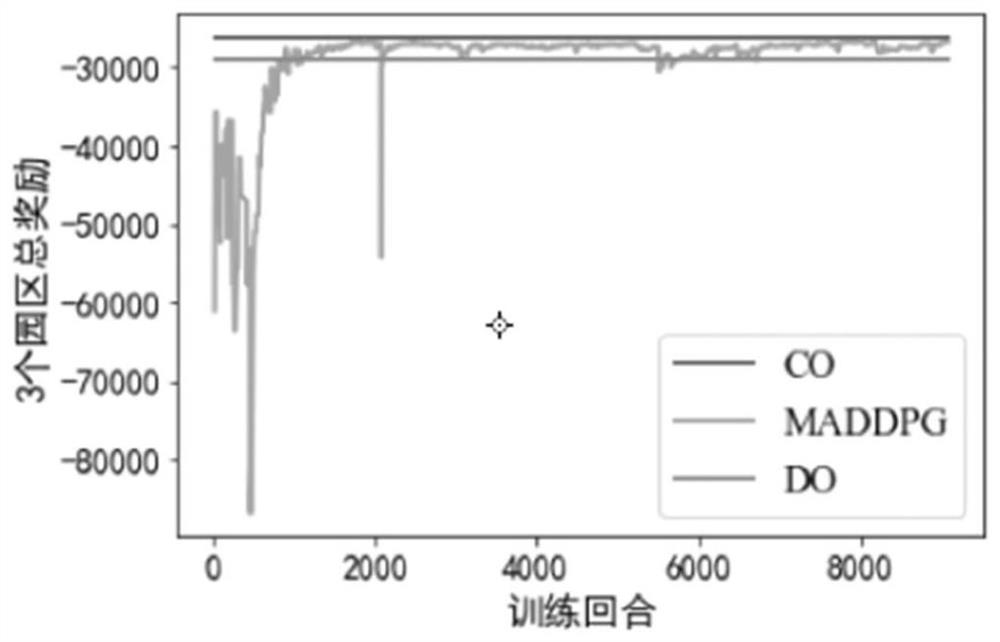

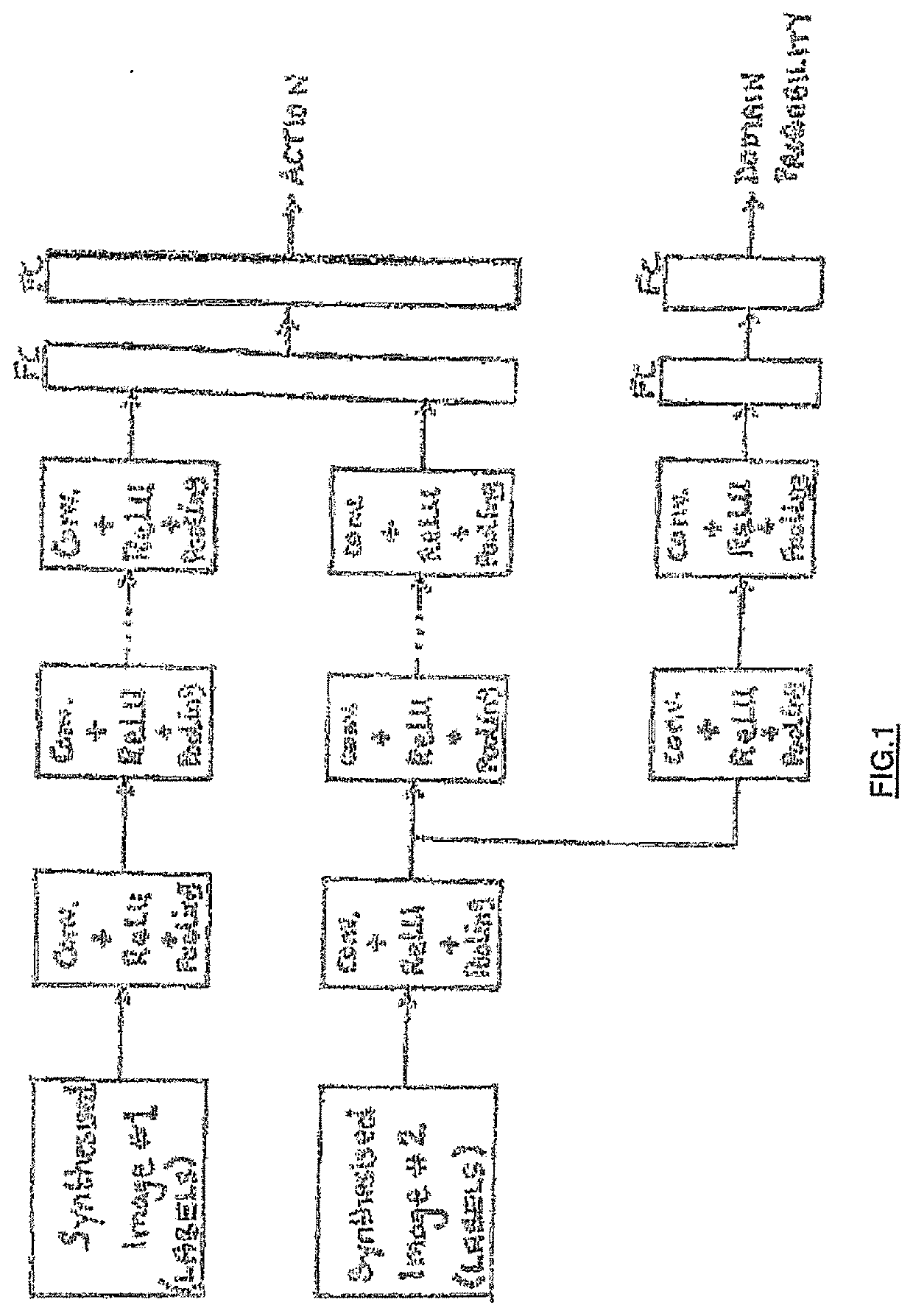

Multi-park comprehensive energy scheduling method and system

Status: Active | Publication: CN113378456A | Tags: Privacy protection; Low running cost; Mathematical models; Forecasting; Physical space; Energy scheduling

The embodiment of the invention provides a multi-park integrated energy scheduling method and system. The method builds a reinforcement learning agent for each park based on that park's renewable energy sources, energy storage, energy conversion equipment, and multi-energy users; feeds each agent into a multi-agent deep deterministic policy gradient (MADDPG) model, which is trained in a virtual environment using centralized training; and makes scheduling decisions in the real physical space using decentralized execution. The method does not depend on accurate prediction of uncertainties, protects the privacy of each park, and reduces each park's operating cost.

Owner:QINGHAI UNIVERSITY +1

Method, learning apparatus, and medical imaging apparatus for registration of images

Status: Active | Publication: US20200202507A1 | Tags: Ability of the domain classifier to discriminate between the synthesized structure and the imaged structure is reduced; Reduce capacity; Image enhancement; Reconstruction from projection; Anatomical structures; Medical imaging

A method is disclosed for training a computer system to determine a transformation between coordinate frames of image data representing an imaged subject. The method trains a learning agent, according to a machine learning algorithm, to determine the transformation between the respective coordinate frames of several different views of an anatomical structure simulated using a 3D model. The views are labeled images. The learning agent includes a domain classifier comprising a feature map generated by the learning agent during training. The classifier is configured to output a classification indicating whether image data is synthesized or real. Training includes using unlabeled real image data to train the computer system to determine a transformation between the coordinate frame of a synthesized view of the imaged structure and a view of the structure within a real image, while deliberately reducing the domain classifier's ability to discriminate between a synthesized image and a real image of the structure.

Owner:SIEMENS HEALTHCARE GMBH +1

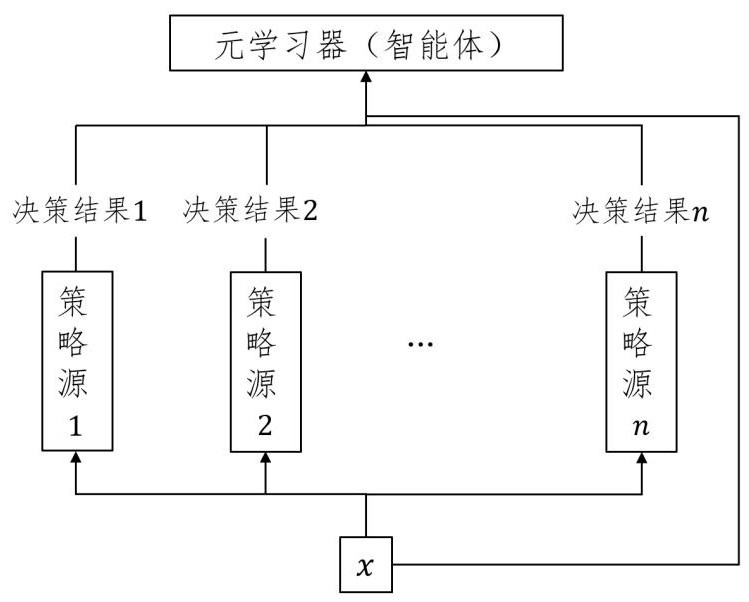

Knowledge strategy selection method and device based on reinforcement learning

Status: Inactive | Publication: CN112990485A | Tags: Prevent overfitting; Avoid fit problems; Machine learning; Neural architectures; Optimal decision; Decision system

The invention discloses a knowledge strategy selection method and device based on reinforcement learning, organized into a lower layer and an upper layer. In the lower layer, n decision-making systems from different sources serve as first-level strategy sources; each receives the input and generates its own decision, yielding n different decisions. In the upper layer, a meta-learning agent trained with a reinforcement learning algorithm fuses the n decisions and outputs a final decision; for each input, the meta-learning agent explores the first-level decisions and selects the relatively optimal one. The method and device are said to produce higher-quality decisions and stronger generalization.

Owner:NAT INNOVATION INST OF DEFENSE TECH PLA ACAD OF MILITARY SCI
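
The upper-layer selection can be illustrated with a simpler stand-in for the reinforcement learning meta-agent: an epsilon-greedy contextual bandit that learns, for each input context, which first-level source tends to produce the best decision. The class name `MetaSelector`, the discrete context key, and the reward signal are assumptions for illustration; the patent's actual meta-learning algorithm is not specified in the abstract.

```python
import random
from collections import defaultdict


class MetaSelector:
    """Epsilon-greedy meta-agent that learns, per input context, which of n
    first-level decision sources to follow."""

    def __init__(self, n_sources, epsilon=0.1, seed=0):
        self.n = n_sources
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value = defaultdict(lambda: [0.0] * n_sources)  # context -> mean reward per source
        self.count = defaultdict(lambda: [0] * n_sources)

    def select(self, context, decisions):
        """Return (index of the chosen source, that source's decision)."""
        if self.rng.random() < self.epsilon:
            i = self.rng.randrange(self.n)  # explore a random source
        else:
            values = self.value[context]
            i = max(range(self.n), key=values.__getitem__)  # exploit the best so far
        return i, decisions[i]

    def update(self, context, source, reward):
        """Incremental mean of the reward observed after following `source`."""
        self.count[context][source] += 1
        v = self.value[context]
        v[source] += (reward - v[source]) / self.count[context][source]
```

In use, the meta-agent calls `select` with the n first-level decisions for an input, acts on the chosen one, and calls `update` with the observed outcome; setting `epsilon` to 0 after training makes selection purely greedy.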

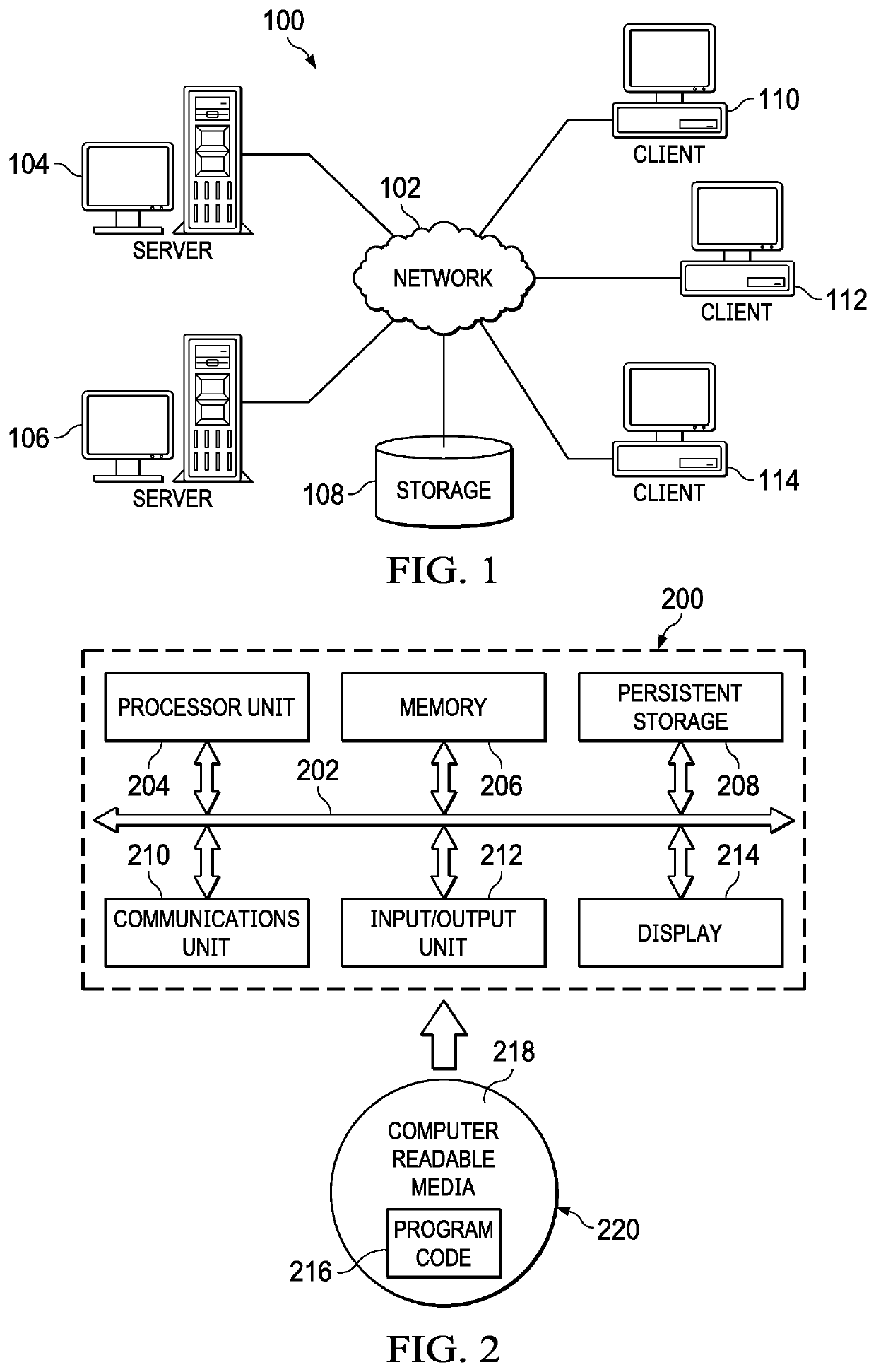

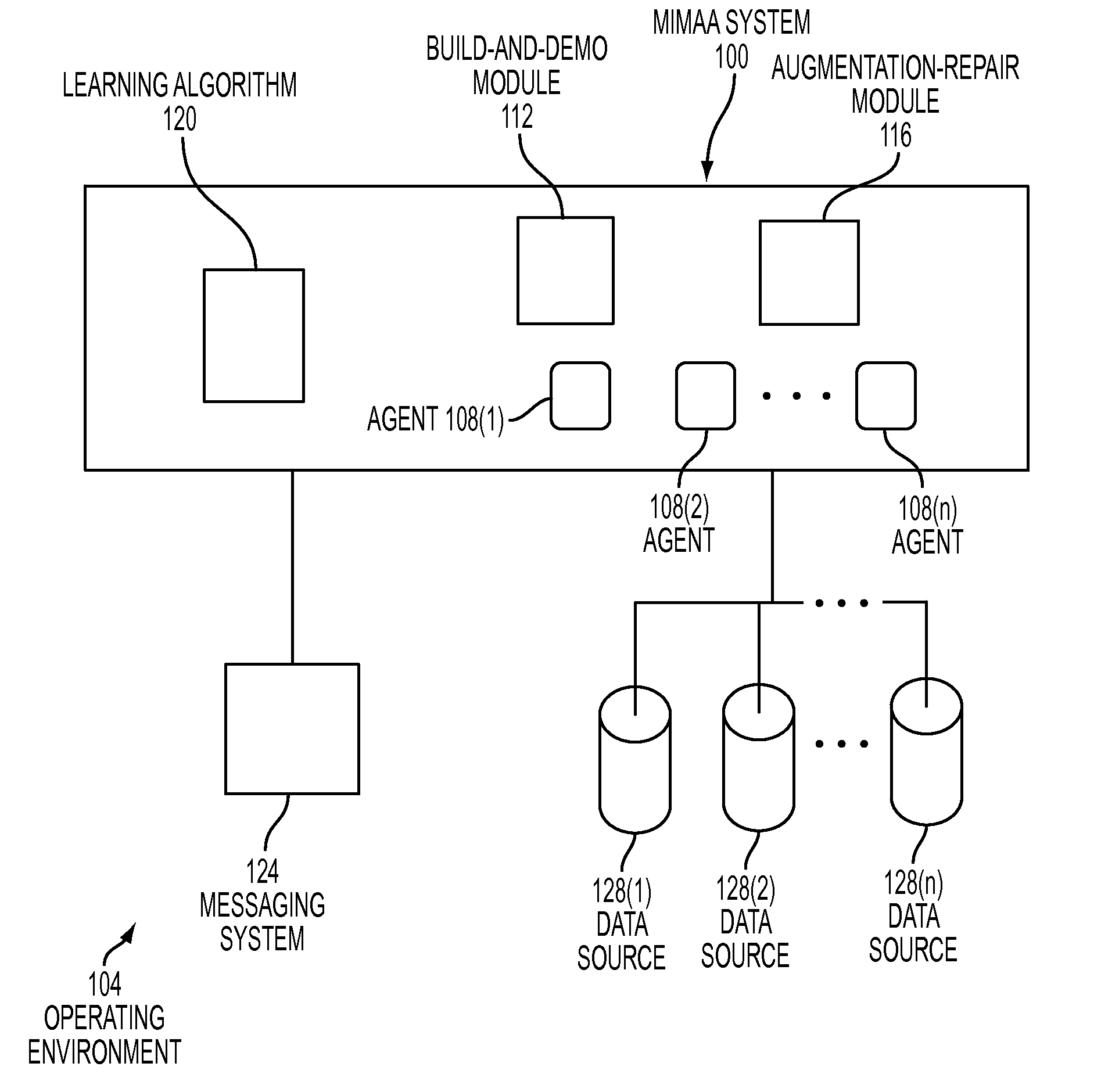

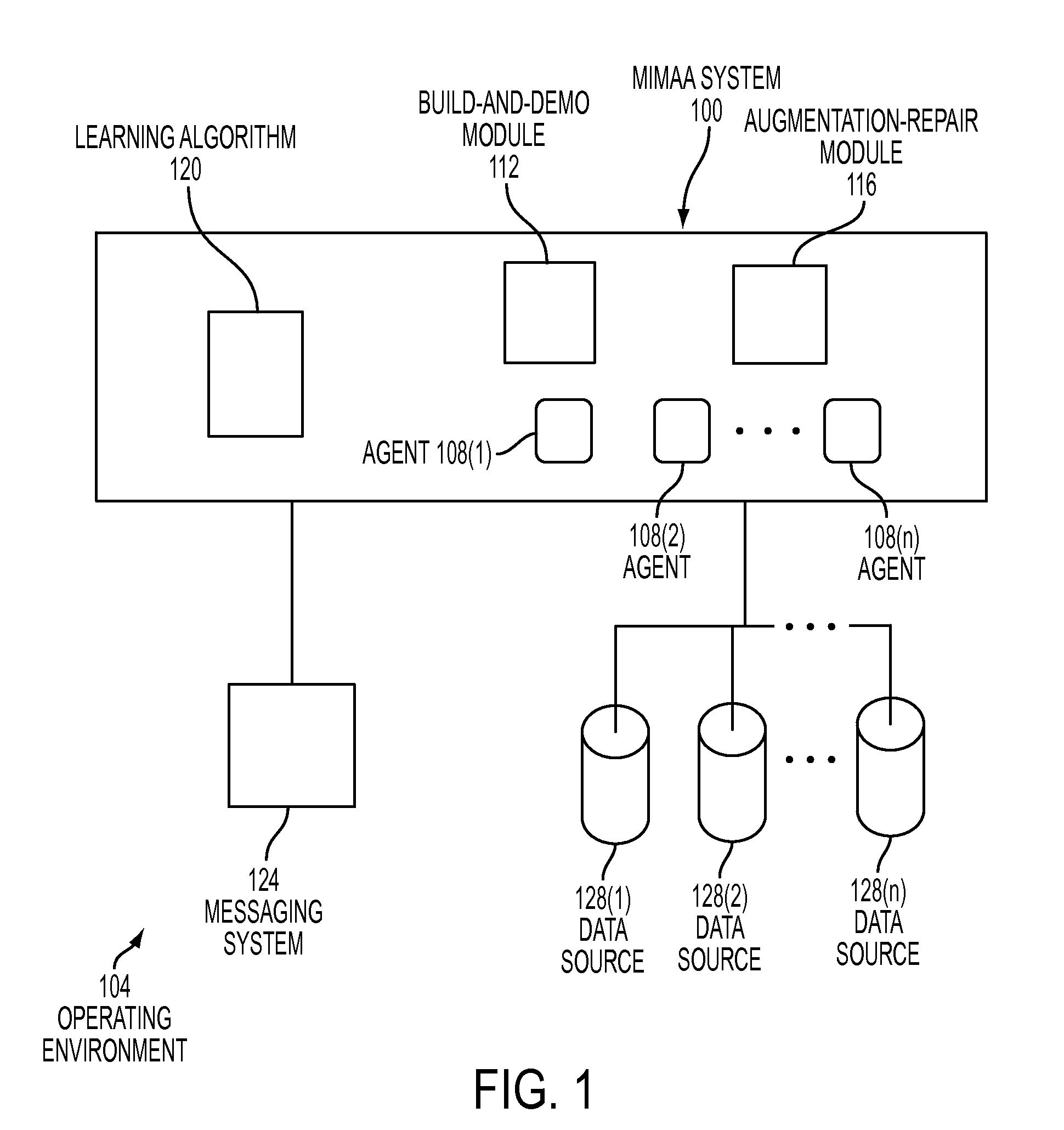

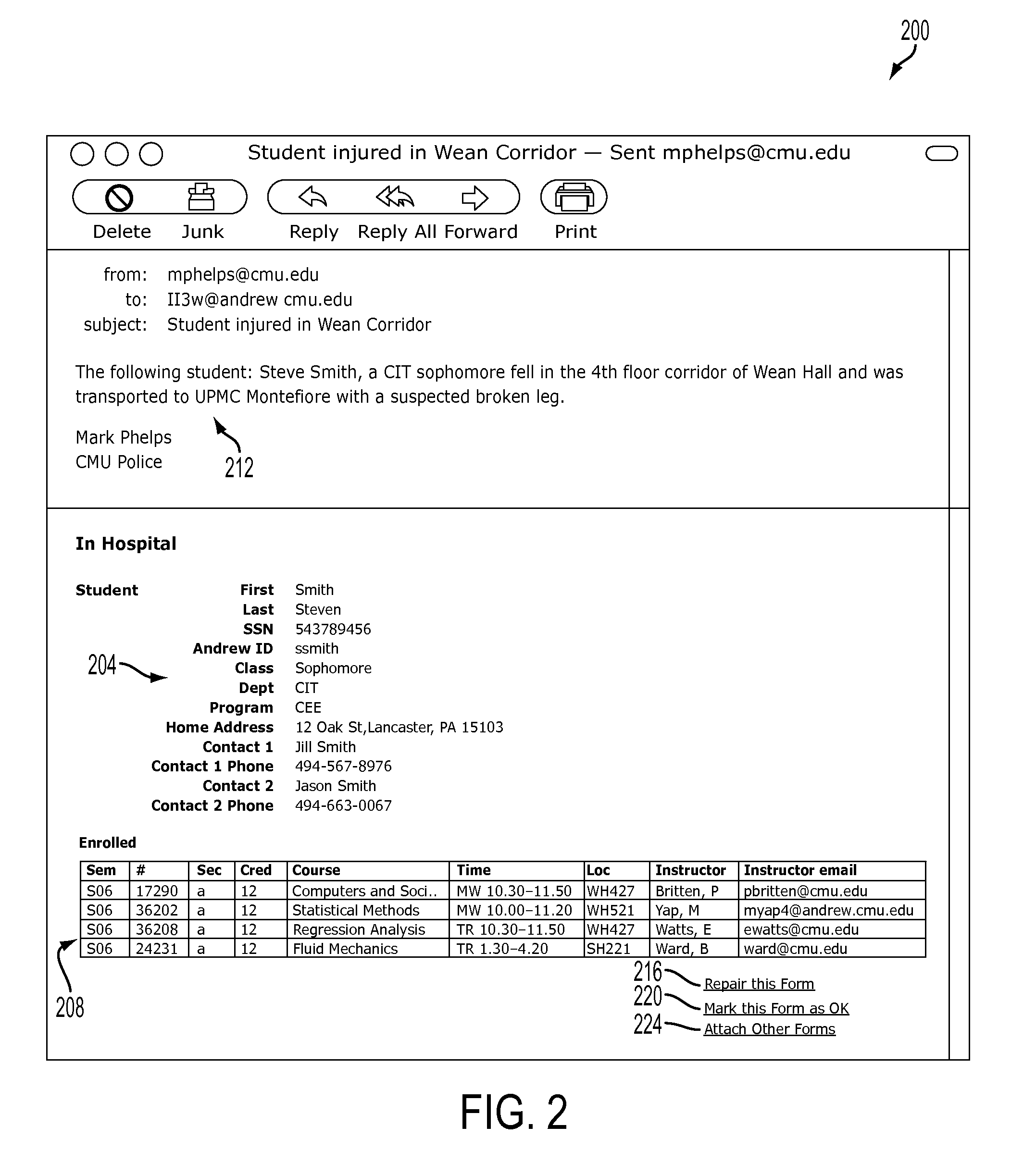

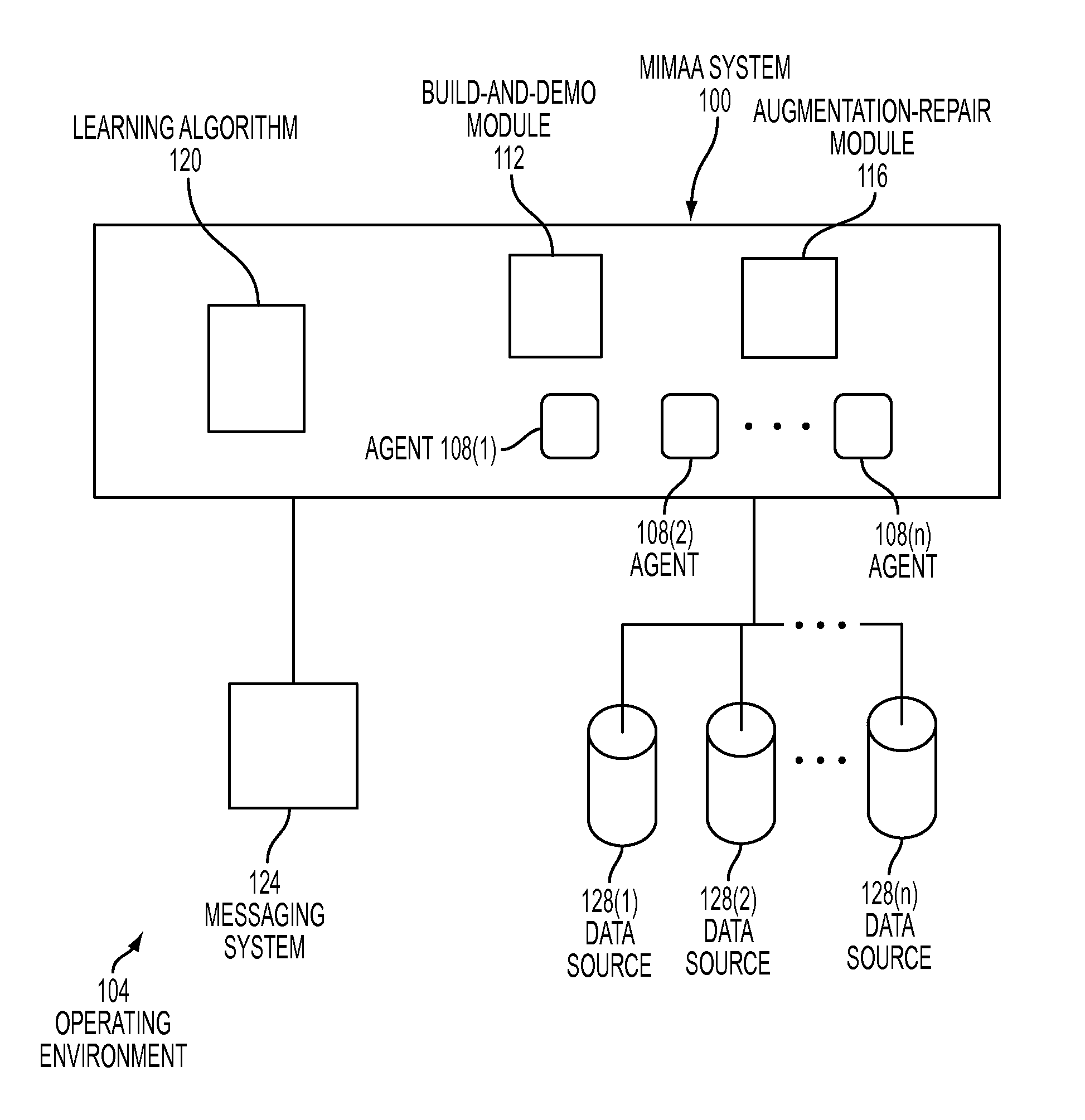

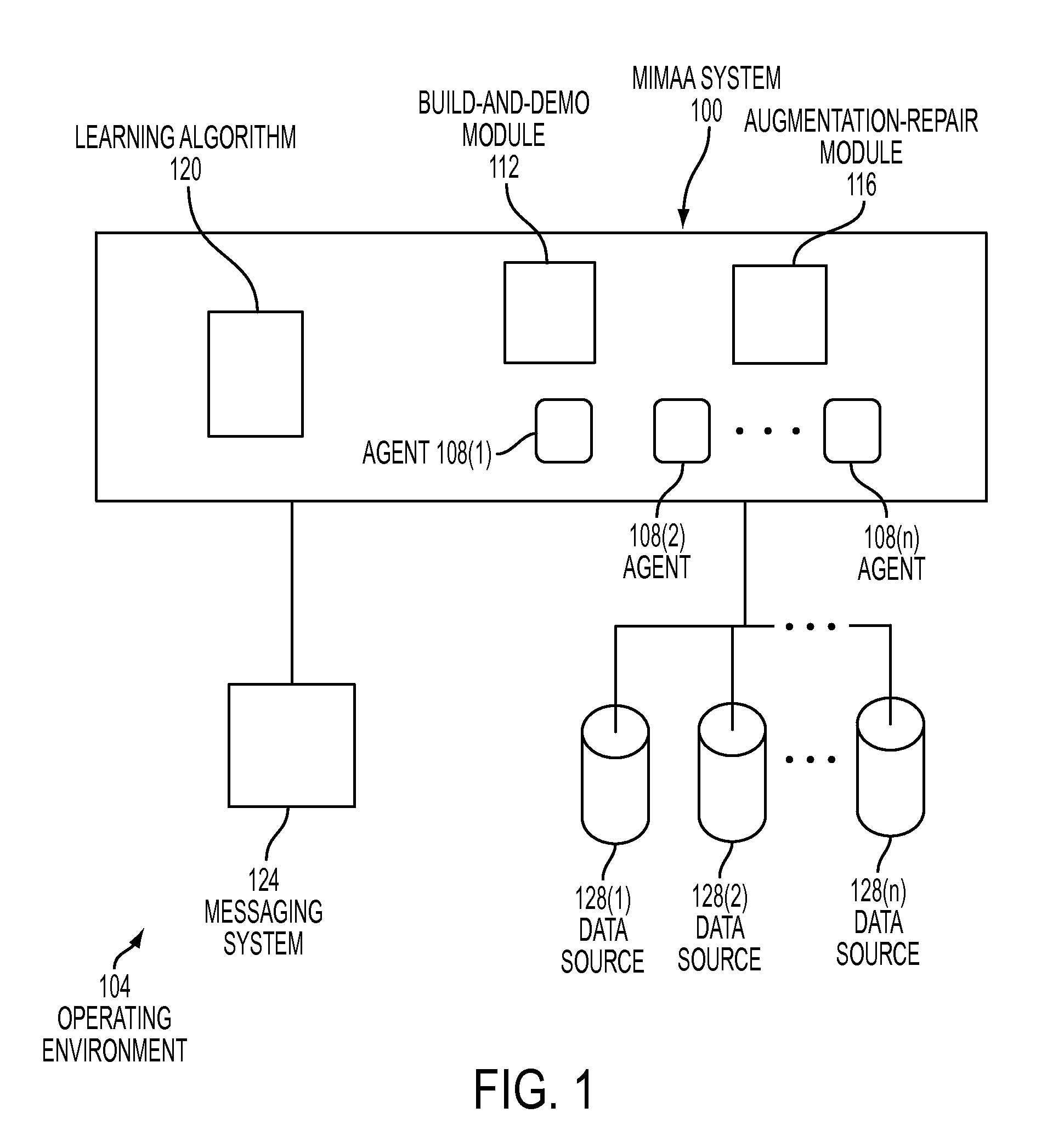

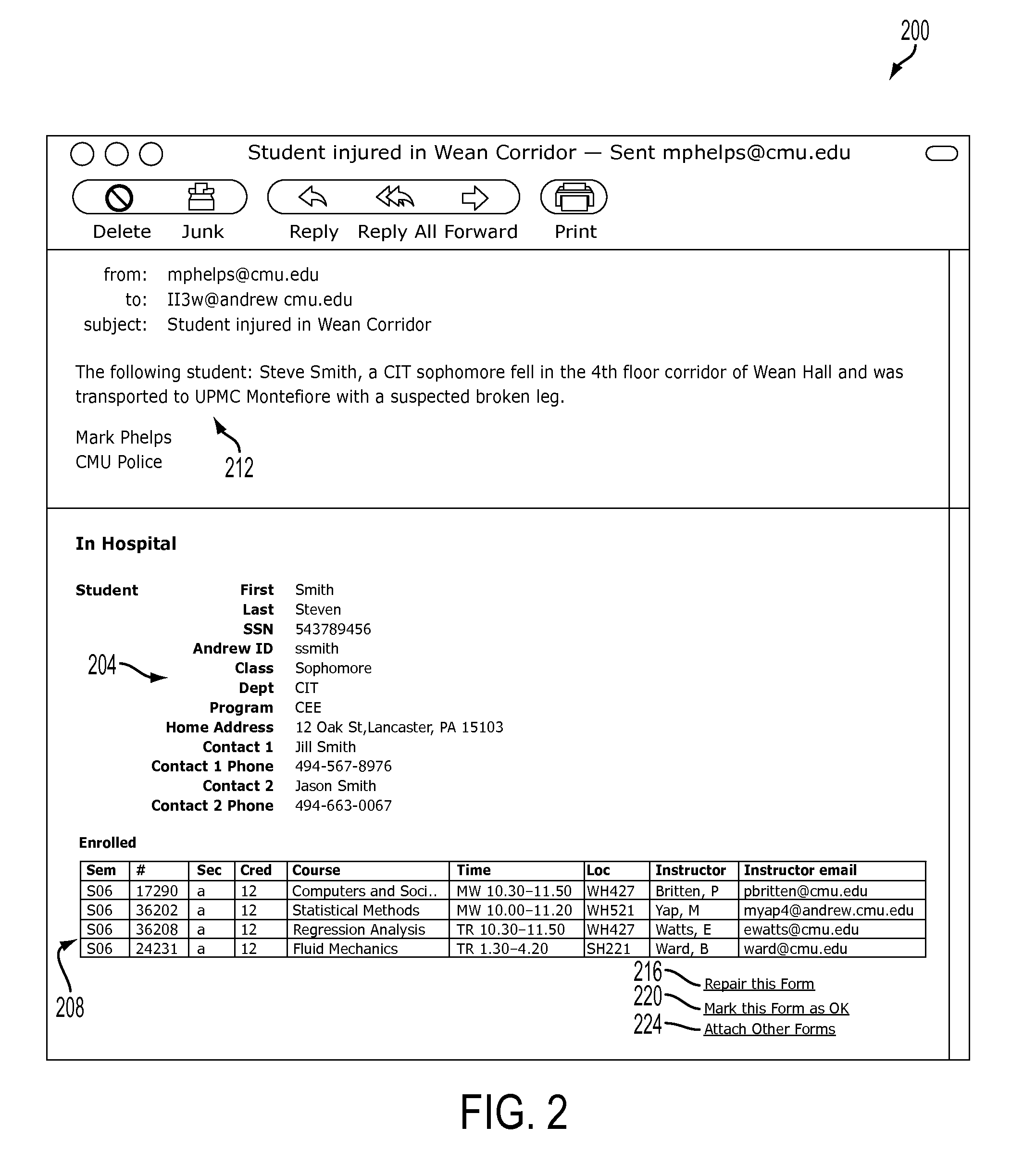

Systems and methods for implementing a machine-learning agent to retrieve information in response to a message

Mixed-initiative, message-augmenting agent systems and methods are disclosed that give users tools for responding to messages, such as email messages, that contain requests for information or otherwise require responses built from information retrieved from one or more data sources. The systems and methods let users train machine-learning agents to retrieve and present information in responses to similar messages, so that the agents can eventually generate responses automatically with minimal user involvement. Embodiments let users build message-augmenting forms containing the information needed to respond to messages, and demonstrate to the machine-learning agents where to retrieve pertinent information for populating the forms. Embodiments also let users modify and repair automatically generated forms, continually improving the agents' knowledge.

Owner:CARNEGIE MELLON UNIV

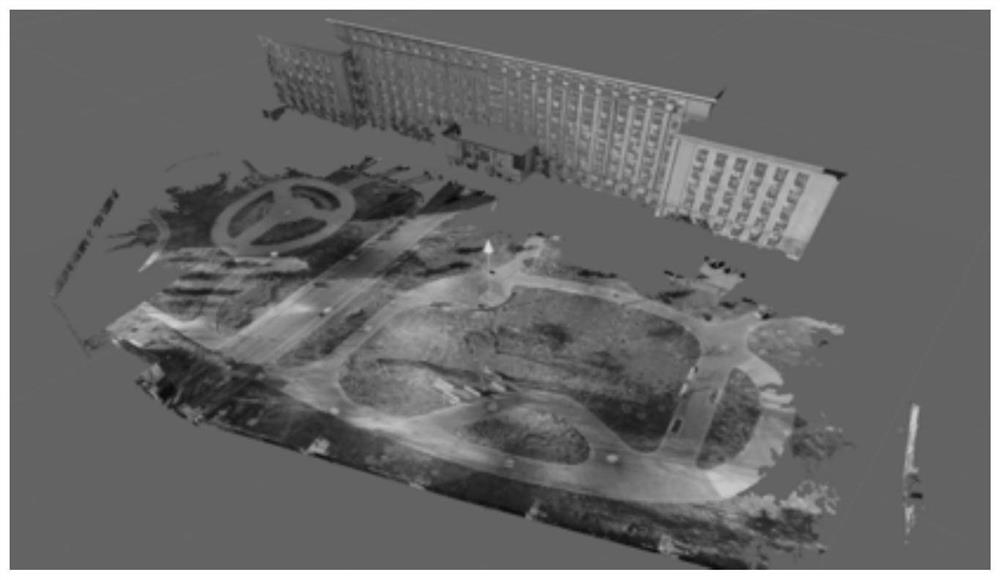

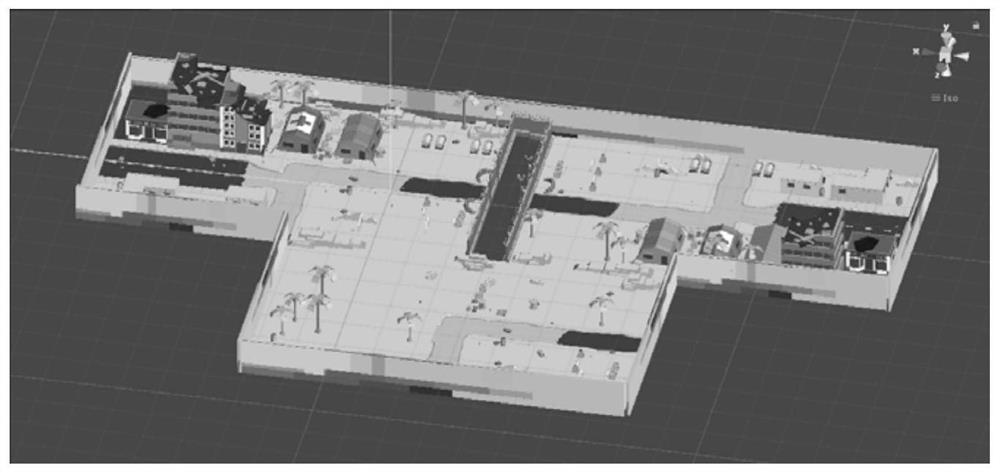

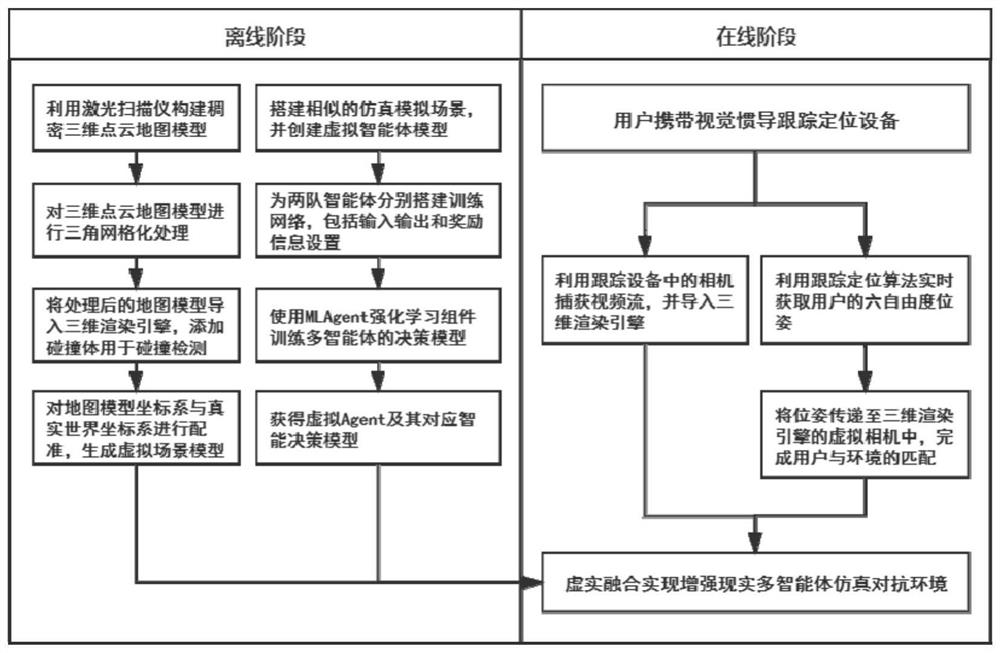

Augmented reality multi-agent cooperative confrontation implementation method based on reinforcement learning

Status: Inactive | Publication: CN113435564A | Tags: Collaboration strategies are flexible and changeable; Solve the problem of poor human-computer interaction experience; Details involving 3D image data; Artificial life; Engineering; Human machine interaction

The invention discloses a method for implementing a multi-agent confrontation simulation environment in augmented reality. A deep reinforcement learning network is combined with curriculum learning to predict the behavior of all agents and make decisions, and the trained reinforcement learning agent model is then migrated into the augmented reality environment. This addresses the poor human-machine interaction experience caused by the virtual agents following a single cooperation strategy in augmented reality confrontation simulations, and makes the cooperative confrontation strategies between a real user and the virtual agents flexible and varied.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

Predictive content placement on a managed services systems

Status: Active | Publication: US8826313B2 | Tags: Digital computer details; Analogue secrecy/subscription systems; Set top box; Service system

A distributed stochastic learning agent analyzes the viewing and/or interactive-service behavior patterns of users of a managed services system. The agent may run on embedded and/or distributed devices such as set-top boxes, portable video devices, and interactive consumer electronic devices. Content may be delivered with services such as video and/or interactive applications at the future time at which a subscriber is most likely to be watching a video or using an interactive service. For example, user impressions can be maximized for content such as advertisements, and content may be scheduled in real time to maximize viewership across all video and/or interactive services.

Owner:CSC HLDG

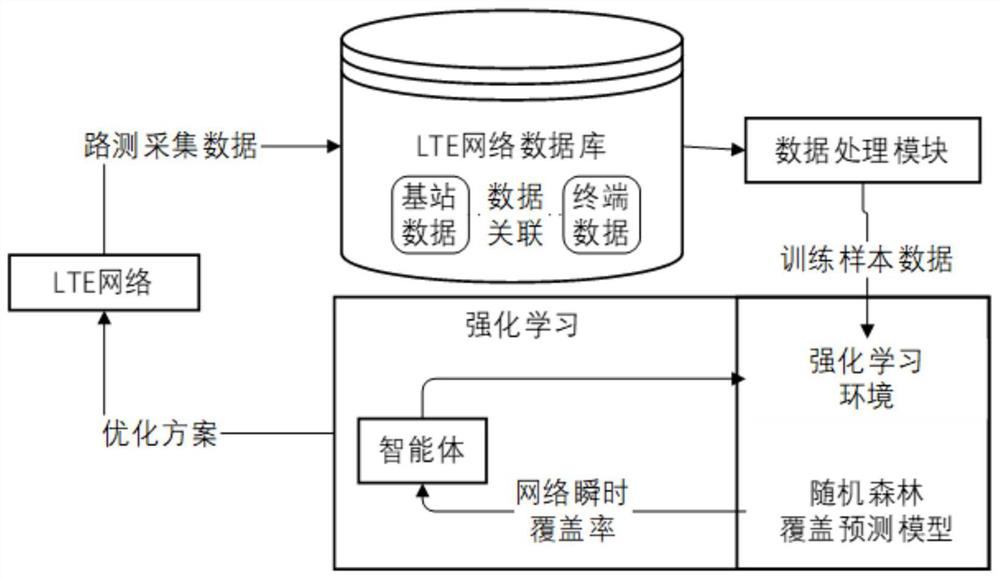

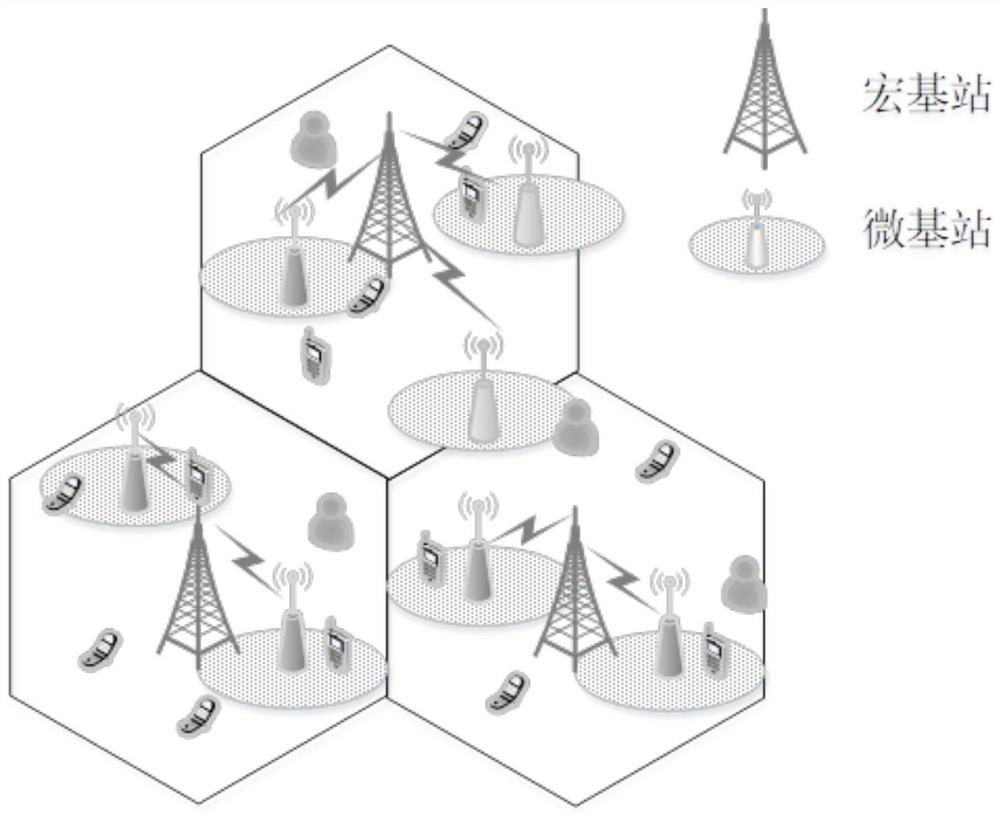

Cellular network-oriented improved reinforcement learning network coverage optimization method

Active | CN113473480A | Performance impact | Automatically improve optimization behavior | Neural learning methods | High level techniques | Data set | Engineering

The invention discloses an improved reinforcement learning method for cellular network coverage optimization, comprising the following steps: (1) acquiring terminal drive-test data and base-station-side static data from a heterogeneous wireless network environment and processing them to obtain a balanced data set; (2) selecting part of the balanced data set as a training set and using it to train a random forest model, yielding a network coverage prediction model; (3) setting an objective function for coverage optimization; and (4) mapping the network coverage optimization problem onto a reinforcement learning space and training a reinforcement learning agent to obtain an adjustment strategy for engineering parameters and a coverage optimization result. Because the optimization behavior improves automatically, the method converges faster; it also accumulates a large amount of operation-and-maintenance optimization experience, forms an optimization strategy autonomously, and prevents the optimization process from significantly degrading network performance.

Owner:NANJING UNIV OF POSTS & TELECOMM
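Steps (3) and (4) can be sketched with tabular Q-learning. Everything concrete here is a hypothetical stand-in. `predicted_coverage` replaces the trained random-forest coverage prediction model with a simple quadratic that peaks at a 6-degree downtilt. Antenna downtilt is the only engineering parameter adjusted. The reward is the change in predicted coverage, playing the role of the objective function from step (3), and the hyperparameters are illustrative.

```python
import random

TILTS = list(range(16))        # hypothetical candidate downtilts (degrees)
ACTIONS = (-1, 0, 1)           # lower, keep, or raise tilt by one step

def predicted_coverage(tilt):
    """Hypothetical stand-in for the random-forest coverage model:
    predicted coverage ratio peaks at a 6-degree downtilt."""
    return 0.95 - 0.004 * (tilt - 6) ** 2

def move(s, a):
    """Apply an action, clamping to the valid tilt range."""
    return min(max(s + a, 0), len(TILTS) - 1)

def train_agent(episodes=500, steps=20, alpha=0.3, gamma=0.9,
                epsilon=0.2, seed=1):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(len(TILTS)) for a in ACTIONS}
    for _ in range(episodes):
        s = rng.randrange(len(TILTS))          # random initial configuration
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)
            else:
                a = max(ACTIONS, key=lambda x: q[(s, x)])
            s2 = move(s, a)
            # Reward: improvement in the coverage objective.
            r = predicted_coverage(TILTS[s2]) - predicted_coverage(TILTS[s])
            best_next = max(q[(s2, x)] for x in ACTIONS)
            q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])
            s = s2
    return q

def optimize(q, start):
    """Follow the greedy policy from `start` and return the final tilt."""
    s = start
    for _ in range(len(TILTS)):
        s = move(s, max(ACTIONS, key=lambda x: q[(s, x)]))
    return TILTS[s]

q = train_agent()
print(optimize(q, 0), optimize(q, 15))
```

Using the coverage improvement as the reward means that, under discounting, the agent is rewarded for reaching high predicted coverage quickly and then holding it.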

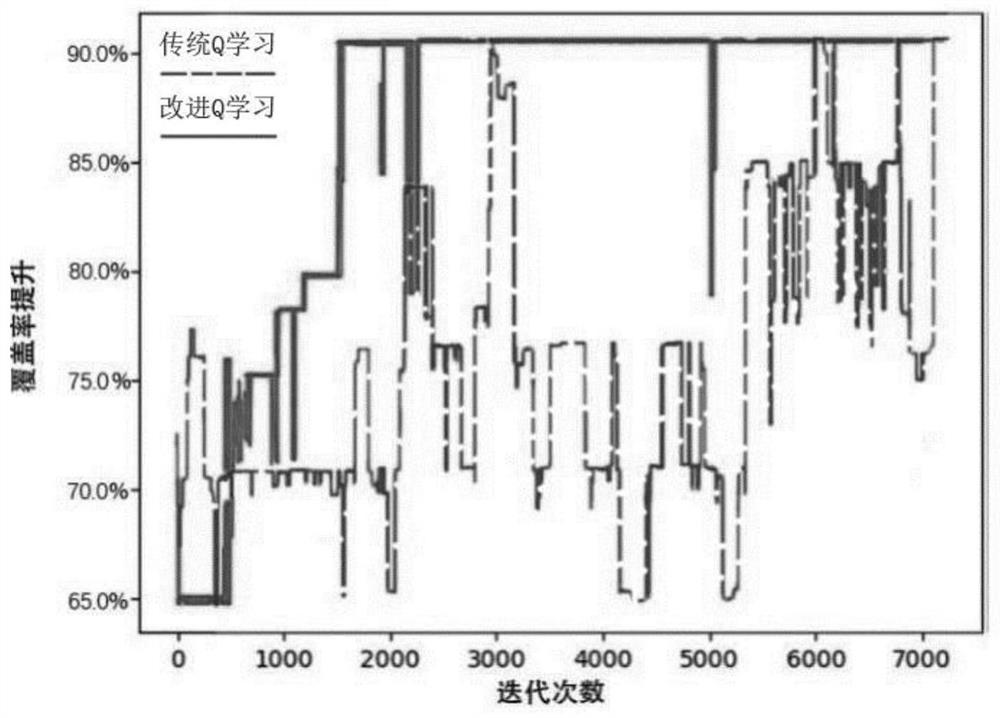

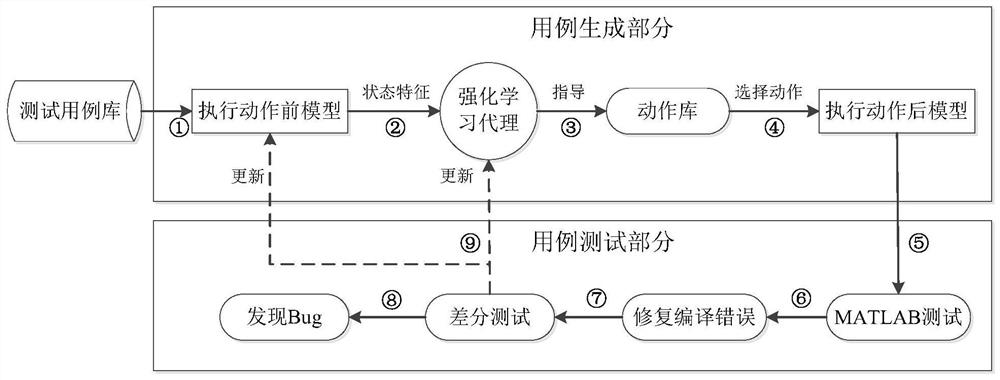

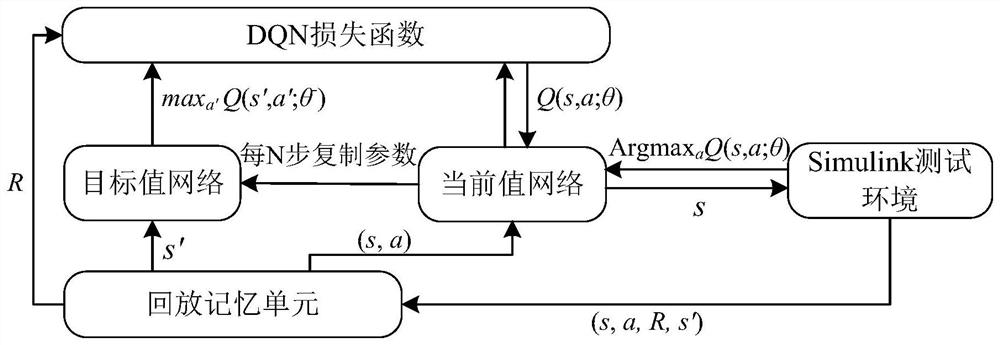

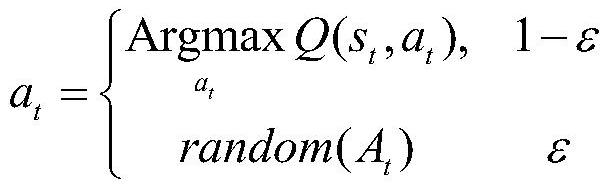

Reinforcement learning-based Simulink software testing method

Pending | CN114706762A | Work around the constraints of shortages | Addressing the Shortcoming of Lack of Guidance | Software testing/debugging | Neural architectures | Engineering | MATLAB

The invention discloses a reinforcement learning-based Simulink software testing method comprising two parts: test case generation and test case testing. The generation part comprises the following steps: (1) selecting an initial model from a test case library; (2) inputting the state features of the initial model into a reinforcement learning agent; (3) the agent selecting, according to this input, the next action for the model to execute from an action library; and (4) outputting the action index to the model, which executes the action. The testing part comprises: (5) compiling the model with MATLAB after the action is executed; (6) repairing compilation errors if compilation fails; (7) performing differential testing on the model once compilation passes; (8) judging whether the test results are functionally equivalent: if they are, no bug is considered found, and if they differ, a bug is considered found; and (9) updating the reinforcement learning agent based on the test result, so that it tends to generate models that readily trigger bugs.

Owner:DALIAN MARITIME UNIVERSITY
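The generate, test, and update loop of steps (2) to (9) can be sketched as follows. The Simulink model, MATLAB compilation, repair, and differential testing are all replaced by hypothetical random stand-ins with made-up bug rates. The state-feature input of step (2) is dropped for brevity, which reduces the agent to an epsilon-greedy multi-armed bandit rather than the patent's reinforcement learning agent. The action names are hypothetical.

```python
import random

# Hypothetical action library of model mutations, each with a made-up
# probability of exposing a functional difference in differential testing.
ACTIONS = ["add_gain", "add_delay", "insert_saturation", "nest_subsystem"]
HIDDEN_BUG_RATE = {"add_gain": 0.02, "add_delay": 0.05,
                   "insert_saturation": 0.30, "nest_subsystem": 0.10}

def compiles(rng):
    """Stand-in for the MATLAB compile check (step 5): ~10% of models fail."""
    return rng.random() > 0.1

def repair(rng):
    """Stand-in for repairing compile errors (step 6); assumed to work 80% of the time."""
    return rng.random() < 0.8

def differential_test(action, rng):
    """Stand-in for steps 7-8: True means the outputs were not
    functionally equivalent, i.e. a bug was found."""
    return rng.random() < HIDDEN_BUG_RATE[action]

def run_campaign(iterations=3000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}   # running mean reward per action
    count = {a: 0 for a in ACTIONS}
    bugs = 0
    for _ in range(iterations):
        # Steps 3-4: choose the next mutation (epsilon-greedy).
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=value.get)
        # Steps 5-6: compile, and try to repair on failure.
        if not compiles(rng) and not repair(rng):
            continue                     # unrepairable model: skip it
        found = differential_test(action, rng)
        bugs += found
        # Step 9: reward bug-triggering actions so the agent favours them.
        count[action] += 1
        value[action] += (float(found) - value[action]) / count[action]
    return value, count, bugs

value, count, bugs = run_campaign()
print(max(ACTIONS, key=value.get), bugs)
```

Over time the agent concentrates its choices on the mutation that most often produces a functional discrepancy, which is the behavior step (9) aims for.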

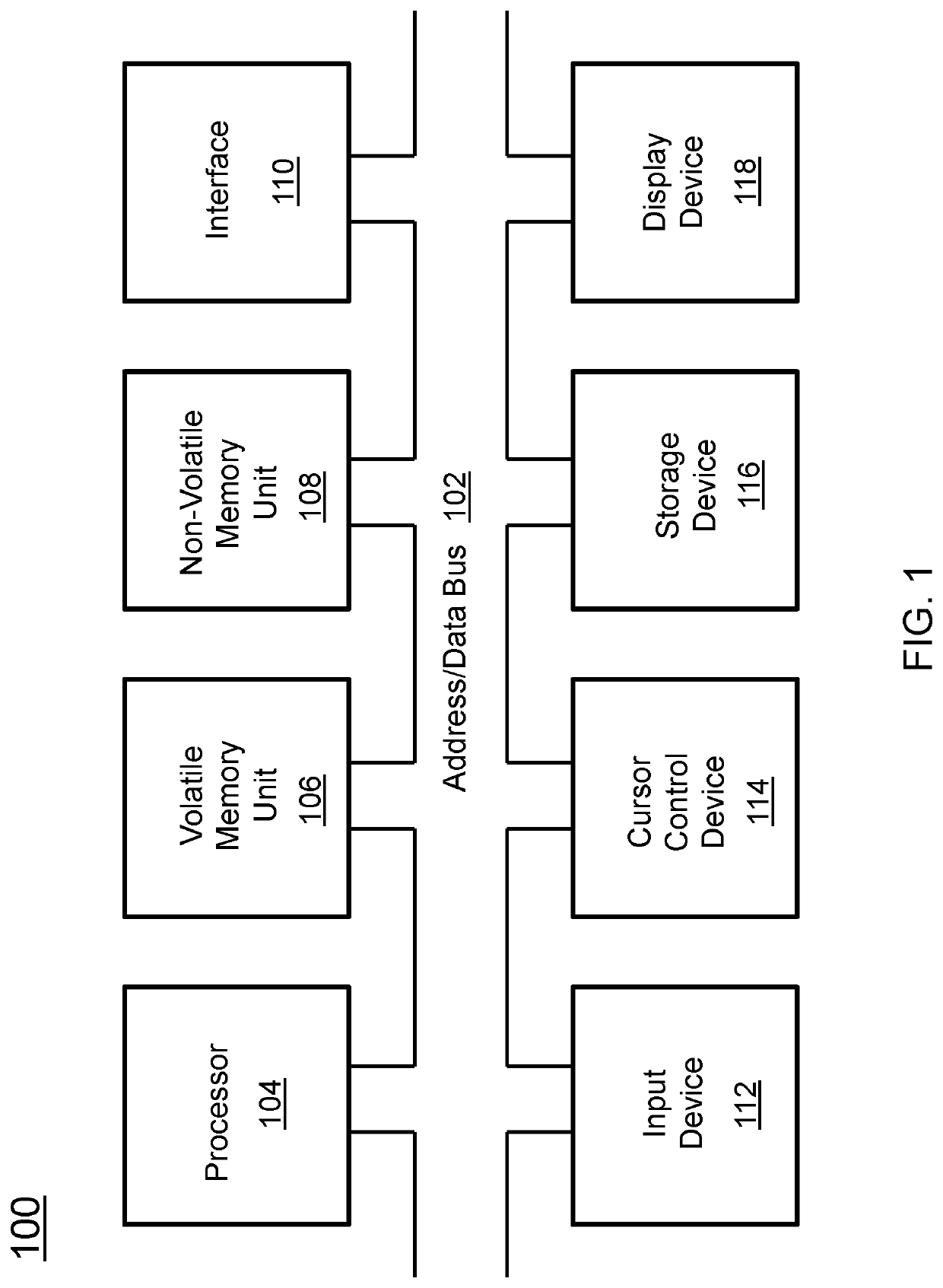

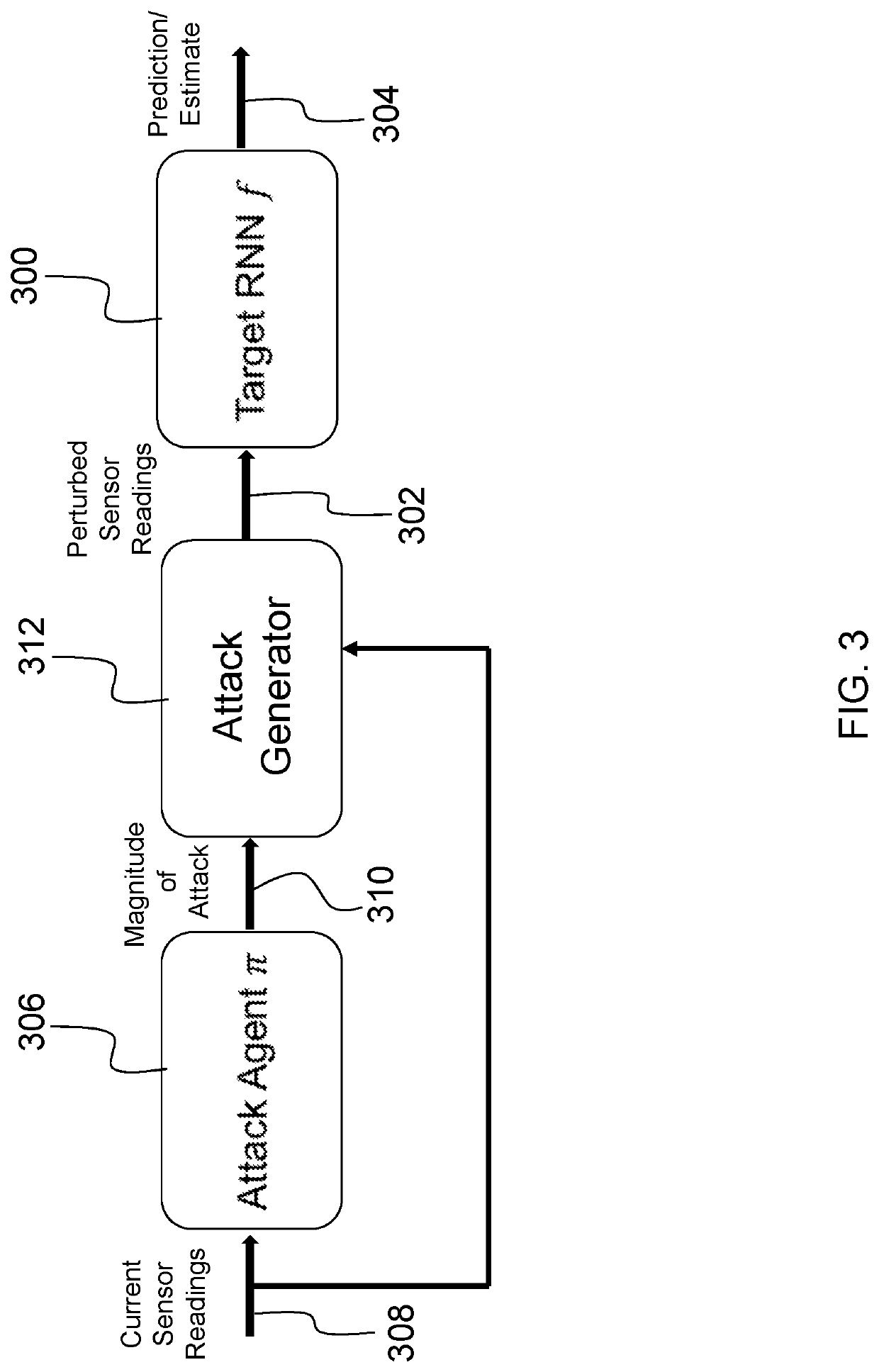

Deep reinforcement learning based method for surreptitiously generating signals to fool a recurrent neural network

Pending | US20210089891A1 | Optimization Strategy | Artificial life | Neural architectures | Engineering | Data mining

Described is an attack system that uses a deep reinforcement learning agent to generate perturbations of the input signals of a target system based on a recurrent neural network (RNN). The attack system trains the reinforcement learning agent to determine the magnitude of a perturbation with which to attack the RNN-based target system. A perturbed input sensor signal with the determined magnitude is generated and presented to the RNN-based target system, which produces an altered output in response. The system then uses the altered output to identify a failure mode of the RNN-based target system.

Owner:HRL LAB
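The attack loop can be sketched on a toy target. The sketch makes several assumptions. A scalar Elman RNN with hand-picked weights stands in for the RNN-based target system. The perturbation is a uniform downward shift of every sample. An epsilon-greedy bandit over a few discrete magnitudes replaces the deep reinforcement learning agent. The reward favors altering the output while penalizing larger, more detectable perturbations, so the agent should settle on the smallest magnitude that changes the output, which also exposes the failure mode.

```python
import math
import random

def rnn_classify(signal, w_x=1.0, w_h=0.5):
    """Toy scalar Elman RNN standing in for the target system:
    h_t = tanh(w_x * x_t + w_h * h_{t-1}); class 1 if the final state is > 0."""
    h = 0.0
    for x in signal:
        h = math.tanh(w_x * x + w_h * h)
    return 1 if h > 0 else 0

def perturb(signal, magnitude):
    """Hypothetical perturbation shape: shift every sample down by `magnitude`."""
    return [x - magnitude for x in signal]

def train_attacker(signal, magnitudes, iterations=500, epsilon=0.2,
                   cost=0.5, seed=0):
    rng = random.Random(seed)
    clean_label = rnn_classify(signal)
    value = {m: 0.0 for m in magnitudes}   # running mean reward per magnitude
    count = {m: 0 for m in magnitudes}
    for _ in range(iterations):
        if rng.random() < epsilon:
            m = rng.choice(magnitudes)
        else:
            m = max(magnitudes, key=value.get)
        altered = rnn_classify(perturb(signal, m)) != clean_label
        # Reward altering the output, penalising larger (more visible) perturbations.
        reward = (1.0 if altered else 0.0) - cost * m
        count[m] += 1
        value[m] += (reward - value[m]) / count[m]
    best = max(magnitudes, key=value.get)
    # A perturbation that changes the output exposes a failure mode.
    failure_found = rnn_classify(perturb(signal, best)) != clean_label
    return best, failure_found, value

signal = [0.2] * 5                         # hypothetical clean sensor signal
best, failure_found, value = train_attacker(signal, [0.05, 0.1, 0.15, 0.25, 0.3, 0.5])
print(best, failure_found)                 # prints: 0.25 True
```

The penalty term `cost * m` is what keeps the attack surreptitious: without it, every magnitude that flips the output would score the same.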


© 2025 PatSnap. All rights reserved.