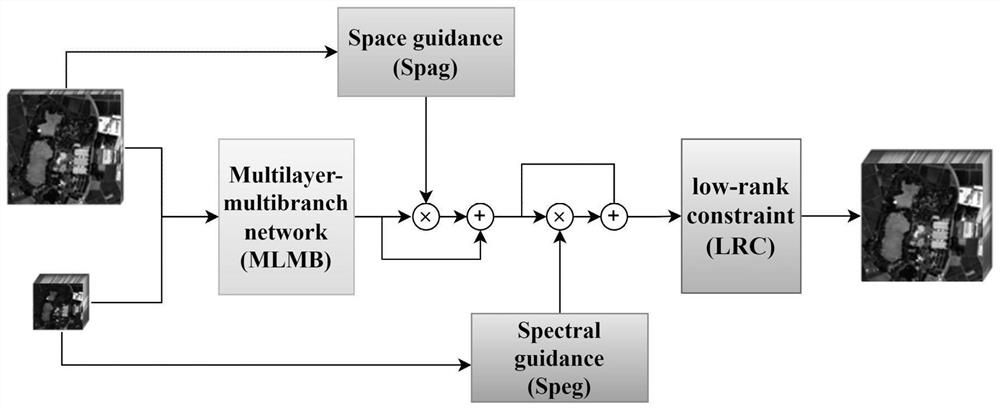

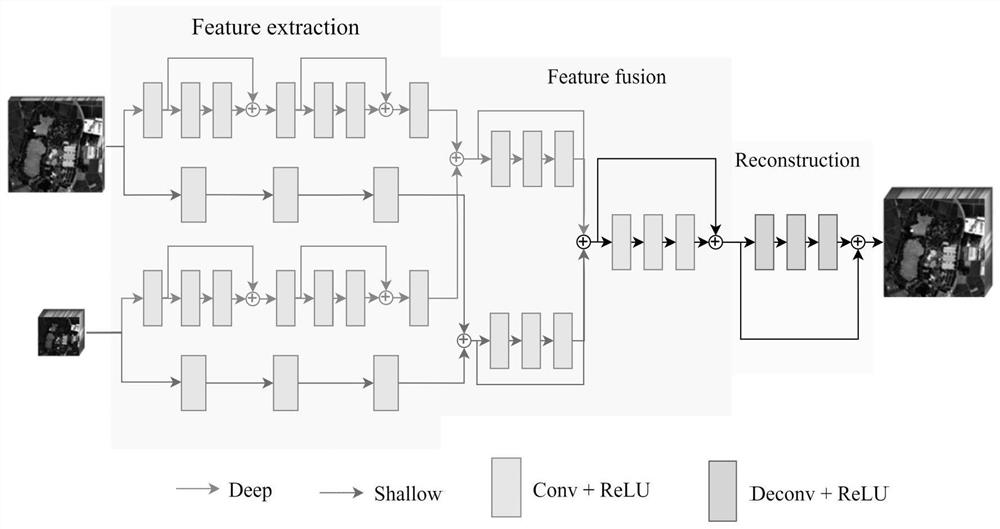

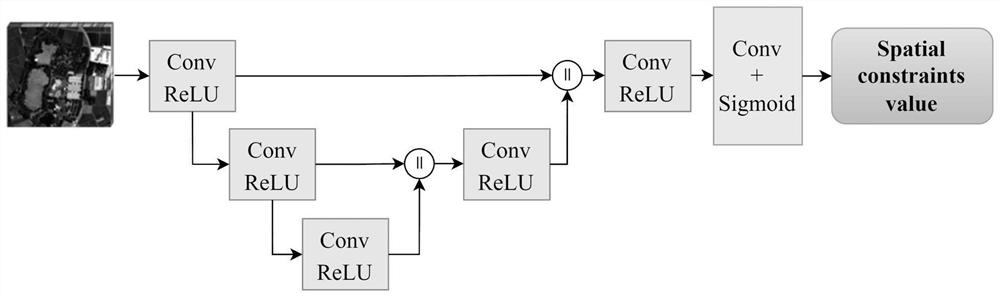

Multispectral and hyperspectral image fusion method guided by low-rank prior and spatial-spectral information

A multispectral and hyperspectral image fusion technology, applied in neural network learning methods, image enhancement, image data processing, etc. It addresses the problems that existing methods easily ignore the prior characteristics of hyperspectral images, lack physical interpretability, and lack spatial-spectral guidance. It avoids mutual interference, reduces spatial-spectral distortion, and improves fusion accuracy.



Examples

Embodiment 1

[0081] Step 1: Apply Gaussian filtering and downsampling to the given hyperspectral and multispectral images to generate the network inputs for training; the original hyperspectral image serves as the target image for computing the loss function.
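The training-pair construction in Step 1 can be sketched as below. This is a minimal illustration, not the patent's code: the function name `make_training_pair`, the dictionary keys, and the `(H, W, bands)` array layout are assumptions, and `degrade` is a hypothetical placeholder for the blur-and-downsample operation described in the following paragraphs. The setup resembles the common Wald-protocol approach of training at reduced resolution with the original image as the reference.

```python
from typing import Callable, Dict

import numpy as np

# Hypothetical type for the degradation operator (blur + downsample).
Degrade = Callable[[np.ndarray], np.ndarray]


def make_training_pair(hsi: np.ndarray, msi: np.ndarray,
                       degrade: Degrade) -> Dict[str, np.ndarray]:
    """Build one training sample from (H, W, bands) HSI and MSI arrays.

    Both images are degraded to form the network inputs; the undegraded
    HSI is kept unchanged as the ground-truth target for the loss.
    """
    return {
        "hsi_input": degrade(hsi),  # low-resolution HSI fed to the network
        "msi_input": degrade(msi),  # degraded MSI fed to the network
        "target": hsi,              # original HSI used to compute the loss
    }
```

For a quick check, `degrade` can be any callable, e.g. a plain stride `lambda x: x[::3, ::3]`; the Gaussian-plus-bilinear version is what the embodiment actually specifies.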

[0082] In the present invention, the original hyperspectral image is Gaussian-filtered with a 5×5 kernel and a standard deviation of 2. The filtered image is then downsampled by a factor of 3 using bilinear sampling.
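The degradation parameters above (5×5 kernel, σ = 2, bilinear downsampling by 3) can be sketched in NumPy as follows. The helper names are hypothetical, and the border handling (reflect padding) and the half-pixel sampling convention for bilinear interpolation are assumptions; the text does not specify either.

```python
import numpy as np


def gaussian_kernel(size: int = 5, sigma: float = 2.0) -> np.ndarray:
    """Normalized 2-D Gaussian kernel of shape (size, size)."""
    ax = np.arange(size) - (size - 1) / 2.0  # offsets, e.g. [-2, -1, 0, 1, 2]
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()  # weights sum to 1, so brightness is preserved


def gaussian_blur(img: np.ndarray, size: int = 5, sigma: float = 2.0) -> np.ndarray:
    """Blur every band of an (H, W, C) image; output keeps the input size.

    Reflect padding at the borders is an assumption.
    """
    k = gaussian_kernel(size, sigma)
    r = size // 2
    padded = np.pad(img, ((r, r), (r, r), (0, 0)), mode="reflect")
    h, w = img.shape[:2]
    out = np.zeros_like(img, dtype=np.float64)
    # Symmetric kernel, so correlation equals convolution.
    for dy in range(size):
        for dx in range(size):
            out += k[dy, dx] * padded[dy:dy + h, dx:dx + w, :]
    return out


def bilinear_downsample(img: np.ndarray, factor: int = 3) -> np.ndarray:
    """Bilinear resampling of (H, W, C) to (H // factor, W // factor, C).

    Uses the half-pixel-centre mapping src = (dst + 0.5) * scale - 0.5
    (an assumed convention).
    """
    h, w = img.shape[:2]
    oh, ow = h // factor, w // factor
    ys = np.clip((np.arange(oh) + 0.5) * (h / oh) - 0.5, 0, h - 1)
    xs = np.clip((np.arange(ow) + 0.5) * (w / ow) - 0.5, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None, None]  # vertical interpolation weights
    wx = (xs - x0)[None, :, None]  # horizontal interpolation weights
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bot = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bot * wy


def degrade(img: np.ndarray) -> np.ndarray:
    """5x5 Gaussian blur (sigma = 2) followed by bilinear downsampling by 3."""
    return bilinear_downsample(gaussian_blur(img, 5, 2.0), 3)
```

With this convention and an integer factor of 3, every sample position lands exactly on `3*i + 1`, so the bilinear weights vanish and each output pixel is the centre of a 3×3 block; the preceding Gaussian blur is what supplies the anti-aliasing. Libraries using other conventions (for example `align_corners=True`) will produce slightly different values.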

[0083] In this embodiment, the multispectral image is 2187×2187 pixels with 4 bands, and the hyperspectral image is 729×729 pixels with 150 bands. In the specific implementation, both the multispectral and hyperspectral images are Gaussian-filtered with a 5×5 kernel and a standard deviation of 2, after which bilinear downsampling by a factor of 3 is performed, and ...
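The image sizes in this embodiment can be checked with simple arithmetic: 2187 = 3^7 and 729 = 3^6, so downsampling by 3 divides both exactly. The sketch below assumes the 5×5 filter preserves spatial size (same-size padding) so that only the downsampling changes it; `degraded_shape` is a hypothetical helper.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (height, width, bands)


def degraded_shape(shape: Shape, factor: int = 3) -> Shape:
    """Shape after same-size Gaussian filtering and downsampling by `factor`."""
    h, w, c = shape
    if h % factor or w % factor:
        raise ValueError("spatial size must be divisible by the factor")
    return (h // factor, w // factor, c)


MSI_SHAPE: Shape = (2187, 2187, 4)   # multispectral image in the embodiment
HSI_SHAPE: Shape = (729, 729, 150)   # hyperspectral image in the embodiment

if __name__ == "__main__":
    print("MSI input:", degraded_shape(MSI_SHAPE))  # (729, 729, 4)
    print("HSI input:", degraded_shape(HSI_SHAPE))  # (243, 243, 150)
    print("target:   ", HSI_SHAPE)                   # (729, 729, 150)
```

Under these assumptions the degraded multispectral image has the same 729×729 spatial size as the original hyperspectral target, so the network maps a 243×243×150 hyperspectral input plus a 729×729×4 multispectral input to a 729×729×150 output.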


© 2025 PatSnap. All rights reserved.