Neural network prediction method for colorectal cancer treatment effect based on MRI and CT images

A technology for predicting the treatment effect of colorectal cancer, applied in the field of neural network prediction, that addresses problems such as misjudgment, interference, and feature artifacts.

Embodiment Construction

[0030] The technical solutions of the present invention will be described in detail below with reference to the accompanying drawings.

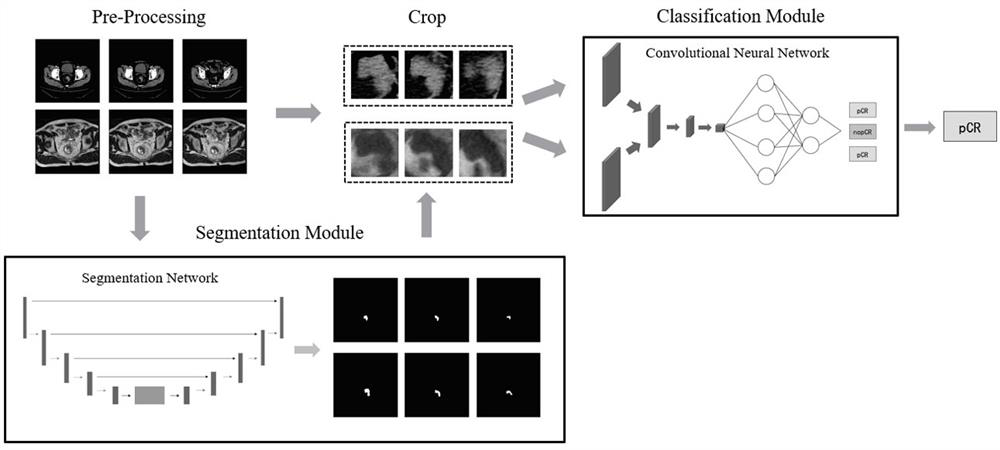

[0031] The invention provides a neural network prediction method for colorectal cancer treatment effect based on MRI and CT images. First, the tumor region of the input image is segmented by a deep learning network; then, the region of interest (ROI) is automatically extracted according to the segmentation result; finally, the MRI and CT features are fused by channel, and a convolutional neural network is used for pCR classification.
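The channel-wise fusion step can be illustrated with a minimal sketch. It assumes that "fused by channel" means concatenating the MRI and CT arrays along the channel axis, and that both ROIs have already been brought to the same spatial size; the function name `fuse_by_channel` and the `(C, H, W)` layout are hypothetical and do not come from the patent text.

```python
import numpy as np


def fuse_by_channel(mri_roi: np.ndarray, ct_roi: np.ndarray) -> np.ndarray:
    """Concatenate two (C, H, W) arrays along the channel axis.

    Assumption: both inputs share the same height and width.
    """
    if mri_roi.shape[1:] != ct_roi.shape[1:]:
        raise ValueError("MRI and CT ROIs must have the same spatial size")
    return np.concatenate([mri_roi, ct_roi], axis=0)


# Toy inputs: one-channel 64x64 ROIs standing in for real MRI and CT crops.
mri = np.zeros((1, 64, 64), dtype=np.float32)
ct = np.ones((1, 64, 64), dtype=np.float32)

fused = fuse_by_channel(mri, ct)
print(fused.shape)  # (2, 64, 64)
```

The fused array would then serve as the multi-channel input to the classification network; the classifier itself is not sketched here because the text above does not specify its architecture.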

[0032] The present invention also provides a neural network prediction system for the treatment effect of colorectal cancer based on MRI and CT images, including a segmentation module and a classification module.

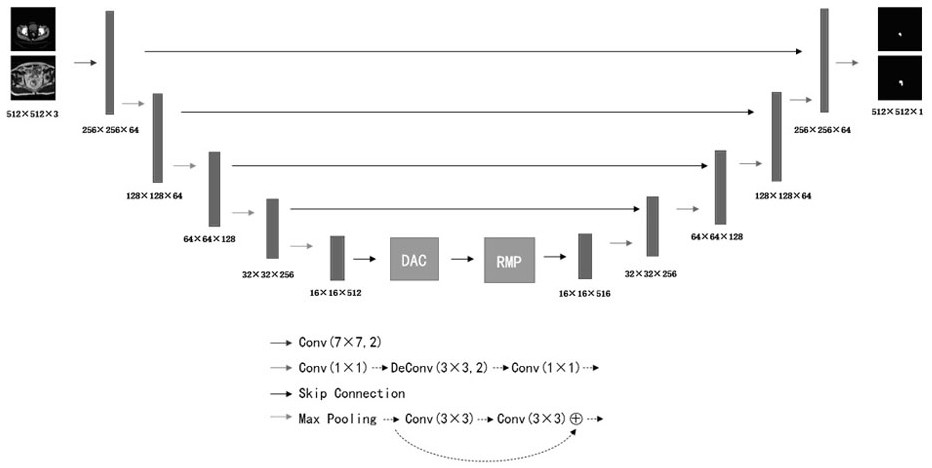

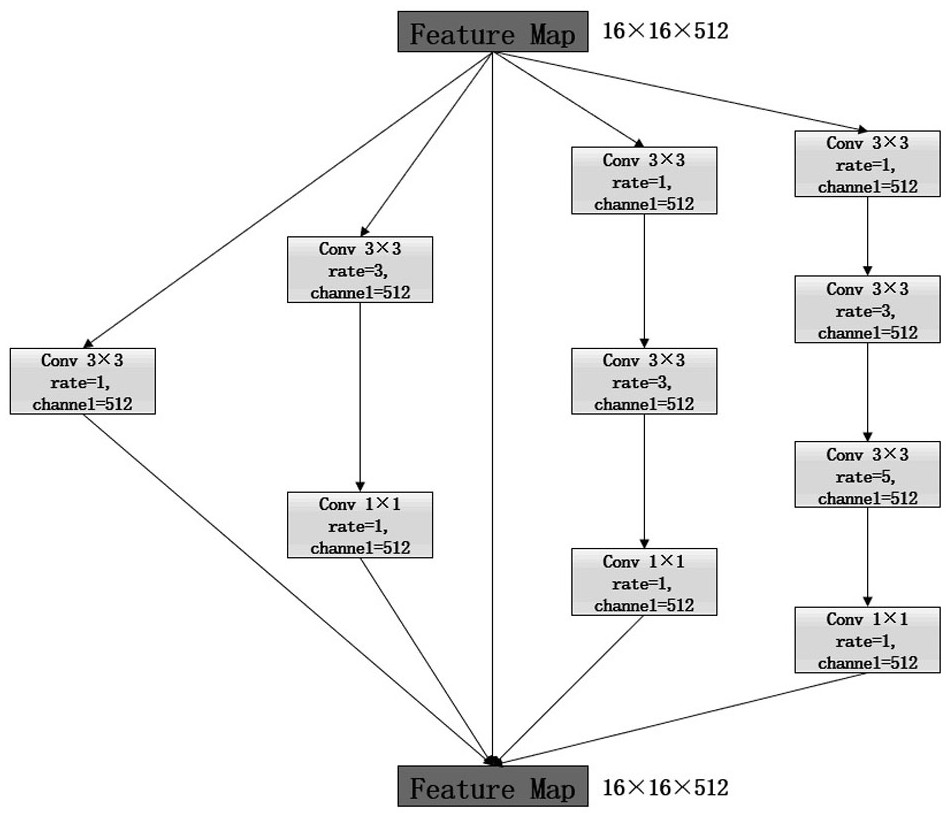

[0033] The segmentation module uses the CE-Net network to automatically segment the tumor region of the input image, and uses the binary mask obtained from the segmentation to locate and crop the tumor region of the image...
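Locating and cropping a region from a binary mask can be sketched as follows. This is only an illustration under stated assumptions: the mask is taken as already given (CE-Net is not reproduced), the crop is the tight bounding box of the nonzero mask pixels, and the function name `crop_to_mask` and the `margin` parameter are hypothetical additions not described in the text.

```python
import numpy as np


def crop_to_mask(image: np.ndarray, mask: np.ndarray, margin: int = 0):
    """Crop a 2D image to the bounding box of the nonzero mask pixels.

    Returns None when the mask is empty (no tumor region found).
    `margin` (an assumed parameter) pads the box, clipped to the image.
    """
    if image.shape != mask.shape:
        raise ValueError("image and mask must have the same shape")
    rows = np.where(np.any(mask, axis=1))[0]
    cols = np.where(np.any(mask, axis=0))[0]
    if rows.size == 0:
        return None
    r0 = max(int(rows[0]) - margin, 0)
    r1 = min(int(rows[-1]) + margin, image.shape[0] - 1)
    c0 = max(int(cols[0]) - margin, 0)
    c1 = min(int(cols[-1]) + margin, image.shape[1] - 1)
    return image[r0:r1 + 1, c0:c1 + 1]


# Toy 6x6 image with a 2x3 "tumor" mask.
image = np.arange(36).reshape(6, 6)
mask = np.zeros((6, 6), dtype=np.uint8)
mask[2:4, 1:4] = 1

roi = crop_to_mask(image, mask)
print(roi.shape)  # (2, 3)
```

Returning `None` for an empty mask lets the caller decide how to handle slices in which no tumor was segmented, rather than silently producing an empty crop.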
