Method and apparatus for region-based moving image encoding and decoding

A technology in the field of region-based moving image encoding and decoding. It addresses problems such as the natural limit on how far encoding can adapt to the scene structure or features of an image and the restriction of each region to a rectangular shape, and it facilitates more accurate region partitioning and accurate decoding.

first embodiment

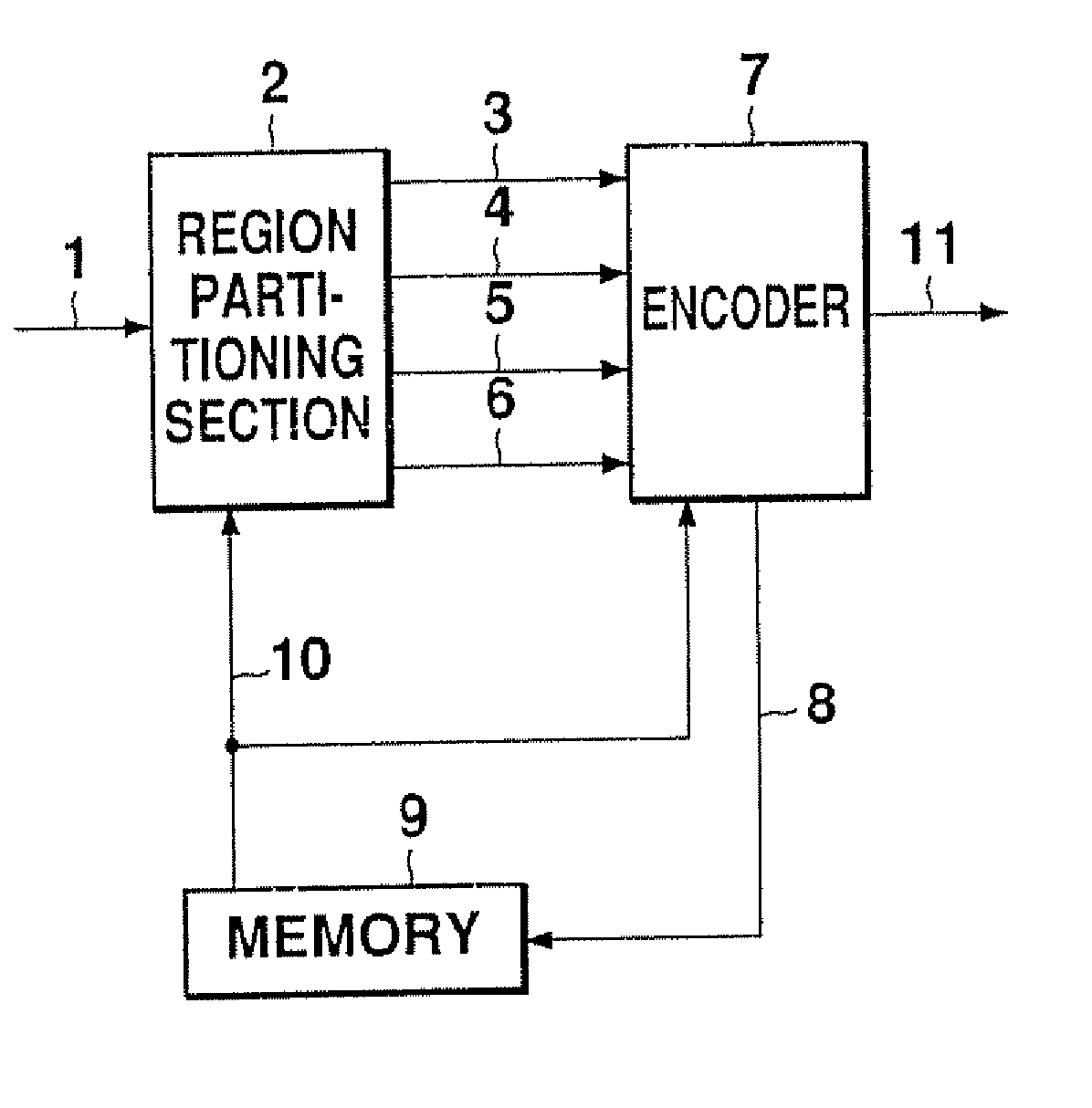

[0046] FIG. 3 is a block diagram showing the configuration of a moving image encoding apparatus according to this embodiment. This apparatus can be used in portable or stationary equipment for image communications, such as TV telephones and TV conferencing systems. It can also be used as a moving image encoding apparatus in image storage and recording apparatus such as digital VCRs and video servers. Furthermore, the processes in this apparatus can also be implemented as a moving image encoding program installed in the form of software or DSP firmware.

[0047] In FIG. 3, numeral 1 indicates the input image, numeral 2 indicates a region partitioning section, numeral 3 indicates region shape information, numeral 4 indicates a region image signal, numeral 5 indicates region motion information, numeral 6 indicates region attribute information, numeral 7 indicates an encoder, numeral 8 indicates a local decoded image, numeral 9 indicates a memory, numeral 10 indicates a reference image, and numeral 11 ...

second embodiment

[0080] This embodiment relates to an apparatus wherein region partitioning section 2 of the first embodiment has been partially modified. FIG. 16 is an internal block diagram of region partitioning section 2 in this embodiment. As shown in this diagram, region partitioning section 2 of the second embodiment has a configuration wherein partitioning processing section 12 of FIG. 5 has been replaced by uniform partitioning section 15. As shown in FIG. 17, a threshold judgment of the activity is not performed in the initial partitioning process in this configuration, and uniform partitioning is unconditionally performed in square blocks of minimum region area. This minimum region area may be made selectable.
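The unconditional initial partitioning described above can be illustrated with a minimal sketch. The function name `uniform_partition`, the `(x, y, w, h)` tuple layout, and the clipping of blocks at the right and bottom frame edges are assumptions made for illustration; the text only states that the frame is divided into square blocks of a selectable minimum region area.

```python
def uniform_partition(width, height, block_size):
    """Split a frame into square blocks without any activity threshold test.

    Returns a list of (x, y, w, h) tuples in raster order.
    Assumption: blocks at the right/bottom edge are clipped to the frame.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks = []
    for y in range(0, height, block_size):
        for x in range(0, width, block_size):
            w = min(block_size, width - x)   # clip at the right edge
            h = min(block_size, height - y)  # clip at the bottom edge
            blocks.append((x, y, w, h))
    return blocks


if __name__ == "__main__":
    # A QCIF-sized frame (176x144) with an 8x8 minimum region.
    regions = uniform_partition(176, 144, 8)
    print(len(regions), regions[0], regions[-1])
```

Because `block_size` is a parameter, the sketch also reflects the remark that the minimum region area may be made selectable.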

[0081] Setting of the threshold is unnecessary in this embodiment, and region partitioning is performed using only the amount-of-code/distortion cost as the evaluation value. Therefore, the procedure associated with threshold setting becomes unnecessary, as do activity calculation and com...
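As a rough illustration of using a cost that combines amount of code and distortion as the sole evaluation value, the sketch below assumes the common Lagrangian form J = D + λR. The excerpt does not spell out the cost formula, so this form, the names `rd_cost` and `choose_partition`, and the sample numbers are all hypothetical.

```python
def rd_cost(distortion, bits, lam):
    """Assumed Lagrangian cost: distortion plus lambda times amount of code."""
    return distortion + lam * bits


def choose_partition(candidates, lam):
    """Pick the candidate partitioning with the lowest cost.

    candidates maps a label to a (distortion, bits) pair.
    No activity threshold is consulted; only the cost is compared.
    """
    return min(candidates, key=lambda name: rd_cost(*candidates[name], lam))


if __name__ == "__main__":
    # Hypothetical measurements for two ways of covering the same area.
    candidates = {
        "four_small": (100.0, 400),  # low distortion, many bits
        "one_large": (250.0, 80),    # higher distortion, few bits
    }
    print(choose_partition(candidates, lam=0.5))
    print(choose_partition(candidates, lam=0.1))
```

A larger λ weights the amount of code more heavily and so favors larger regions, while a smaller λ favors finer partitioning.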

third embodiment

[0082] In the partitioning process of this embodiment, the judgment as to whether or not partitioning is possible uses not only the activity but also an index (hereinafter called a class) indicating the importance of the region. It is preferable to encode regions of high importance in detail and to make their region areas small. Regions of low importance are made as large as possible so as to reduce the amount of code per pixel.

[0083] The activity is, for example, a closed, local statistical value within the region. The classes in this embodiment, on the other hand, are based on features of the image that span regions. In this embodiment, the classes are defined on the basis of the degree to which a person views the region, namely, a person's degree of observation, which depends on the object structure traversing the region. For example, when the edge distribution of a given region spans a wide range and the connection with adjacent regions is strong, it is hi...
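A minimal sketch of a partitioning judgment that combines activity and class might look as follows. It assumes the activity is the variance of pixel values inside the region, that a larger class number means higher importance, and that either measure exceeding its threshold triggers partitioning. The excerpt does not state the exact rule, so the function names, the thresholds, and the way the two tests are combined are all hypothetical.

```python
from statistics import pvariance


def activity(pixels):
    """Assumed activity measure: population variance of the region's pixels."""
    return pvariance(pixels)


def should_split(pixels, importance_class,
                 activity_threshold=100.0, class_threshold=2):
    """Decide whether a region should be partitioned further.

    Assumption: a region is split when it is important enough
    (class at or above class_threshold) or busy enough
    (activity above activity_threshold).
    """
    if importance_class >= class_threshold:
        return True
    return activity(pixels) > activity_threshold


if __name__ == "__main__":
    flat = [10, 10, 10, 10]
    busy = [0, 255, 0, 255]
    print(should_split(flat, importance_class=0))
    print(should_split(busy, importance_class=0))
    print(should_split(flat, importance_class=3))
```

Under these assumptions, a flat region of low importance stays large, which keeps the amount of code per pixel low, while an important region is split even when its local statistics are quiet.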
