Model-Based Stereo Matching

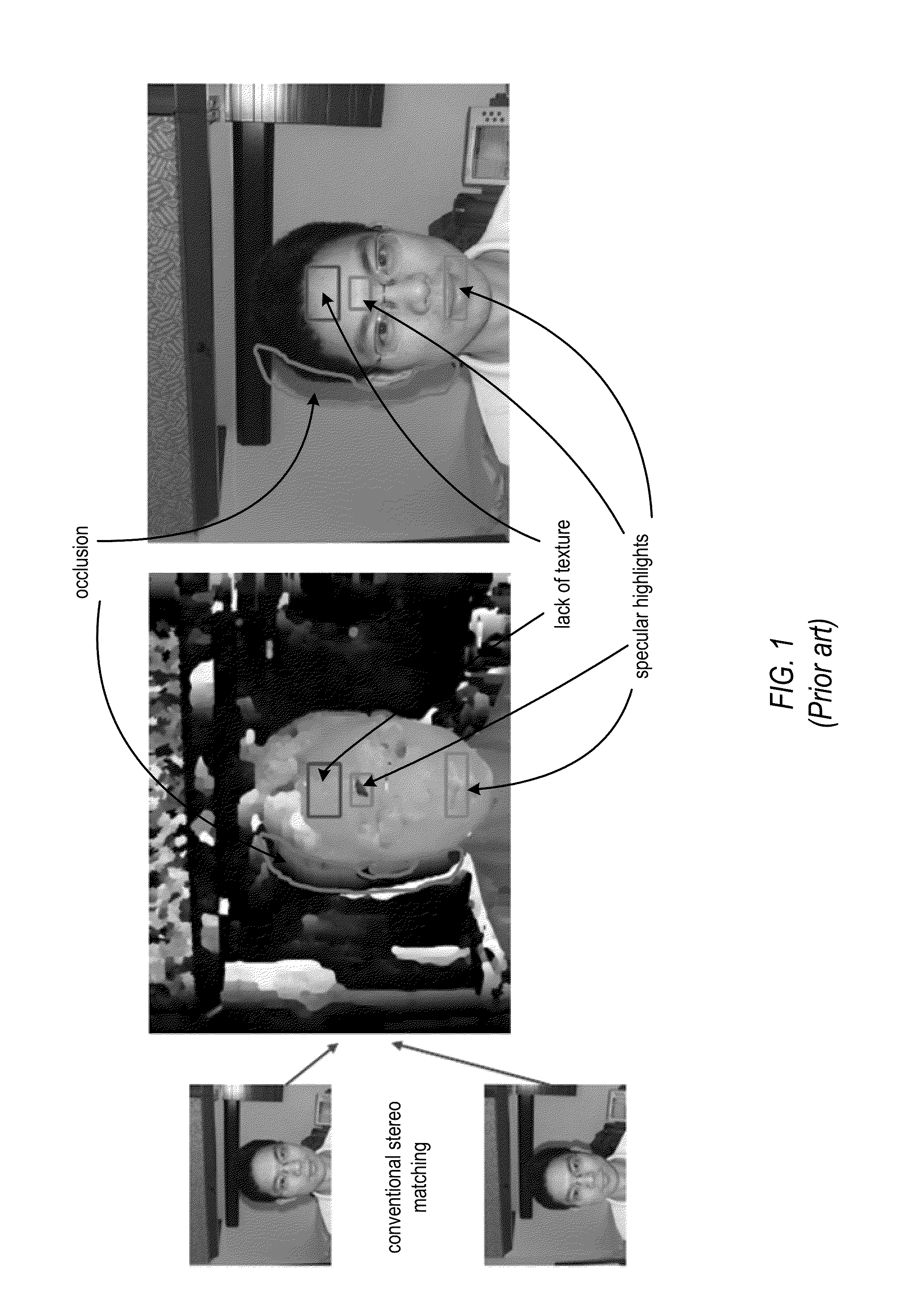

A stereo matching and model technology, applied in the field of image processing, that addresses problems such as lack of texture and the resulting unreliability of conventional stereo matching techniques.


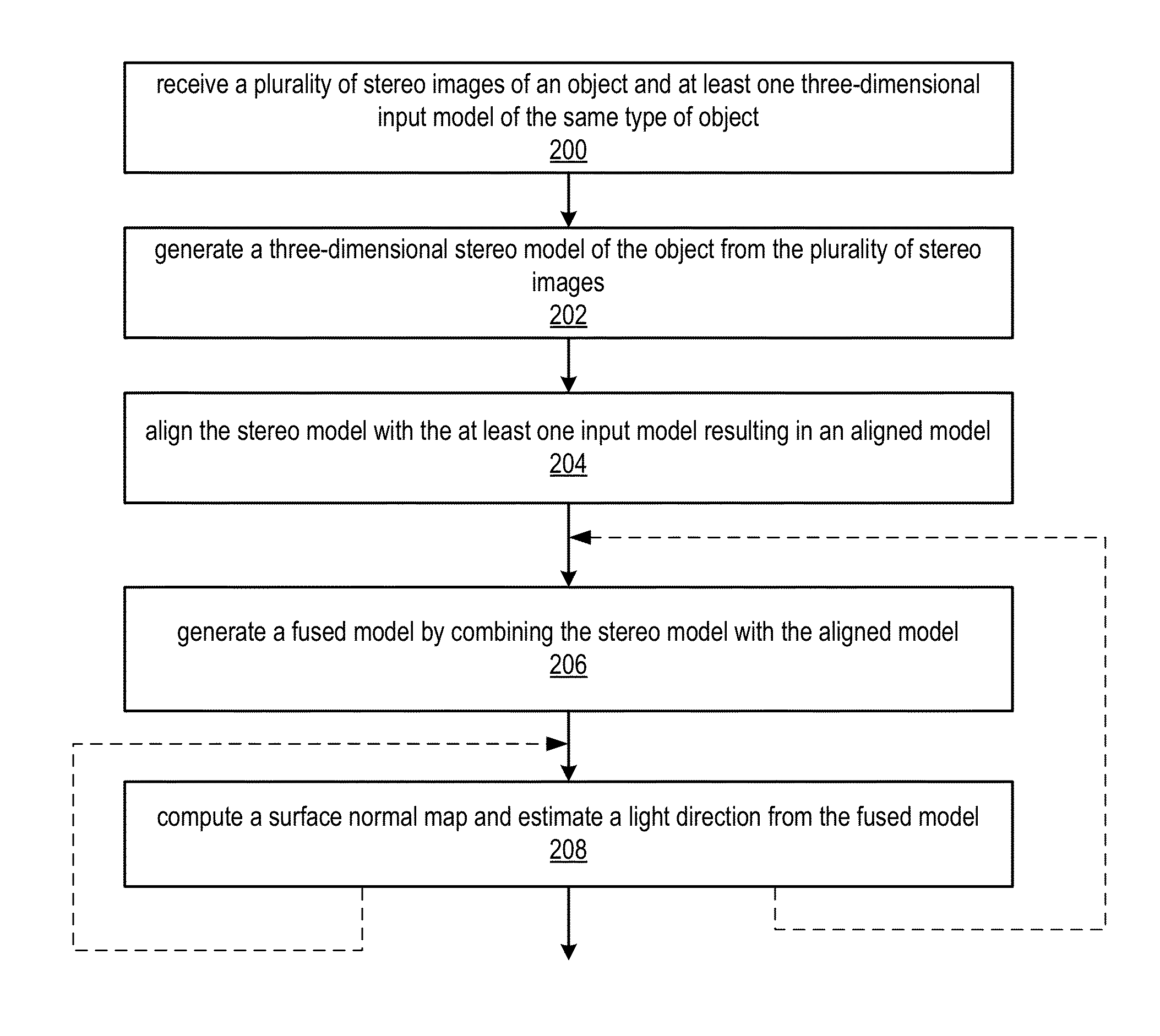


Example results

[0100] FIG. 11 illustrates modeling results for an example face, according to some embodiments. FIG. 11(a) and FIG. 11(b) are the input stereo images. FIG. 11(c) is a close-up of the face in FIG. 11(a). FIG. 11(d) and FIG. 11(e) are the confidence map and the depth map computed from stereo matching, respectively. FIG. 11(f) is the registered laser-scanned model and FIG. 11(g) is the fused model. FIG. 11(h), FIG. 11(i) and FIG. 11(j) are screenshots of the stereo model, the laser-scanned model and the fused model, respectively. FIG. 11(k) is the estimated surface normal map, and FIG. 11(l) is the relit result of FIG. 11(c) using the estimated normal map in FIG. 11(k).
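The excerpt does not say how the surface normal map in FIG. 11(k) is estimated or how the relit image in FIG. 11(l) is rendered. As a rough illustration only, the sketch below assumes the normals are derived from a depth map by finite differences and that relighting uses a simple Lambertian model. The function names `normals_from_depth` and `relight`, and the `albedo` input, are hypothetical and are not taken from the patent.

```python
import math

def normals_from_depth(depth):
    """Estimate a unit normal per pixel from a depth map (list of rows).

    Assumption: central differences in the interior, one-sided differences
    at the borders; the normal is proportional to (-dz/dx, -dz/dy, 1).
    """
    h, w = len(depth), len(depth[0])
    normals = []
    for y in range(h):
        row = []
        for x in range(w):
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            n = (-dzdx, -dzdy, 1.0)
            norm = math.sqrt(sum(c * c for c in n))
            row.append(tuple(c / norm for c in n))
        normals.append(row)
    return normals

def relight(albedo, normals, light_dir):
    """Lambertian shading (an assumption): intensity = albedo * max(0, n . l)."""
    length = math.sqrt(sum(c * c for c in light_dir))
    l = tuple(c / length for c in light_dir)
    return [[a * max(0.0, sum(nc * lc for nc, lc in zip(n, l)))
             for a, n in zip(a_row, n_row)]
            for a_row, n_row in zip(albedo, normals)]
```

For example, a flat depth map yields normals pointing straight at the camera, so a frontal light `(0, 0, 1)` returns the albedo unchanged, while a light from behind the surface returns zero intensity.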

[0101] FIG. 11 illustrates modeling results of a person whose face is quite different from the laser-scanned model used, as can be seen from the stereo model in FIG. 11(h) and the registered laser-scanned model in FIG. 11(i). The fused model is presented in FIG. 11(j), in which the incorrect mouth and chin are corrected. FIG. 11(k) ...
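The excerpt does not state the rule used to fuse the stereo model with the registered laser-scanned model. One plausible reading, offered only as a sketch, is a per-pixel blend weighted by the stereo confidence map: where stereo matching is confident its depth dominates, and elsewhere the registered prior model fills in. The function name `fuse_depth` and the linear blending rule are assumptions, not the patent's method, and the two depth maps are assumed to be already aligned.

```python
def fuse_depth(stereo_depth, prior_depth, confidence):
    """Blend two aligned depth maps per pixel (hypothetical fusion rule).

    Confidence values are clamped to [0, 1]; 1 keeps the stereo depth,
    0 falls back to the registered prior model.
    """
    fused = []
    for s_row, p_row, c_row in zip(stereo_depth, prior_depth, confidence):
        row = []
        for s, p, c in zip(s_row, p_row, c_row):
            c = min(max(c, 0.0), 1.0)
            row.append(c * s + (1.0 - c) * p)
        fused.append(row)
    return fused
```

Under this assumption, a region such as the mouth or chin, where the generic scanned model disagrees with the subject, would follow the stereo depth wherever the confidence there is high.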
