Luminance measurement method, luminance measurement device, and image quality adjustment technique using the method and device


Examples

Embodiment 1

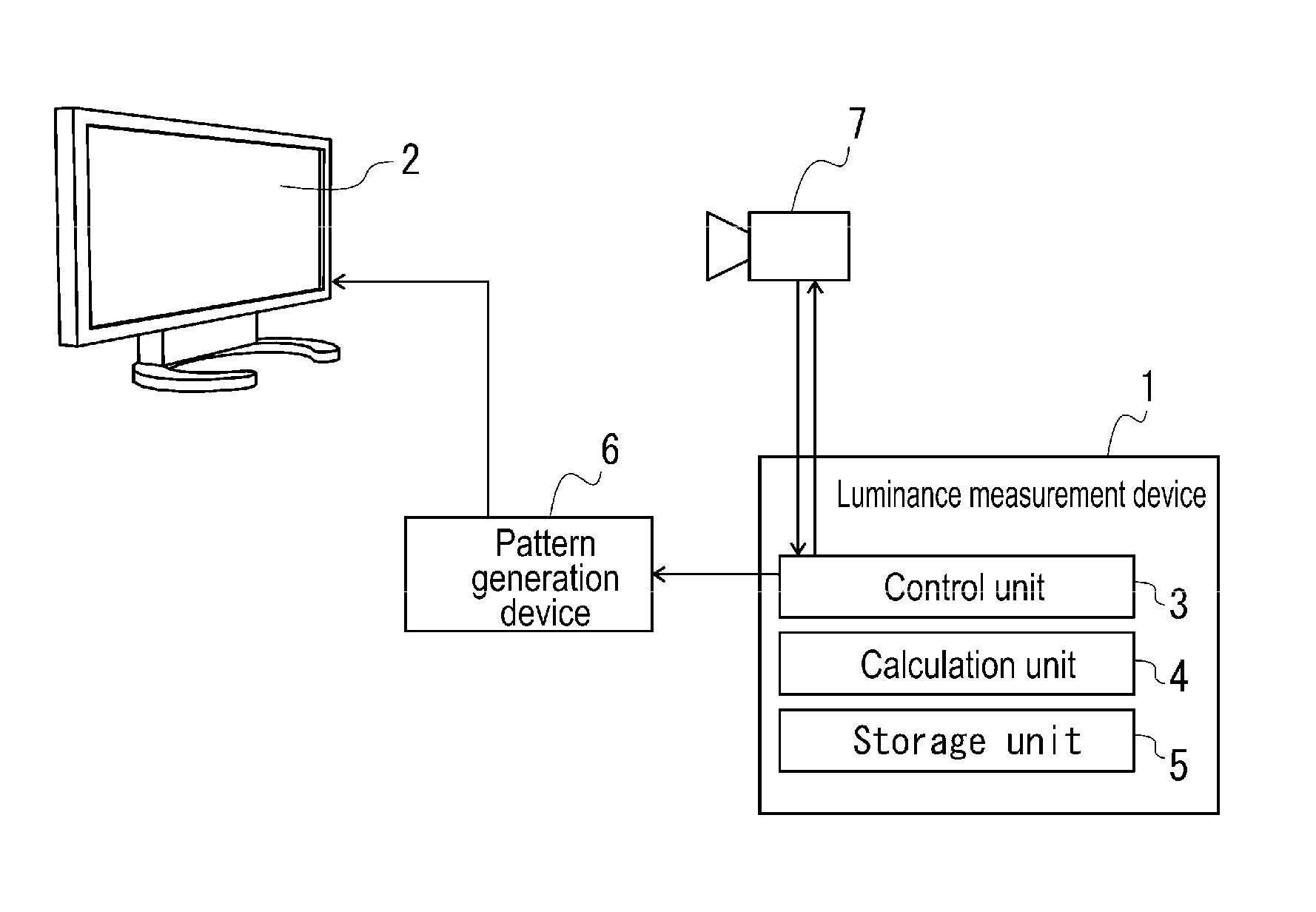

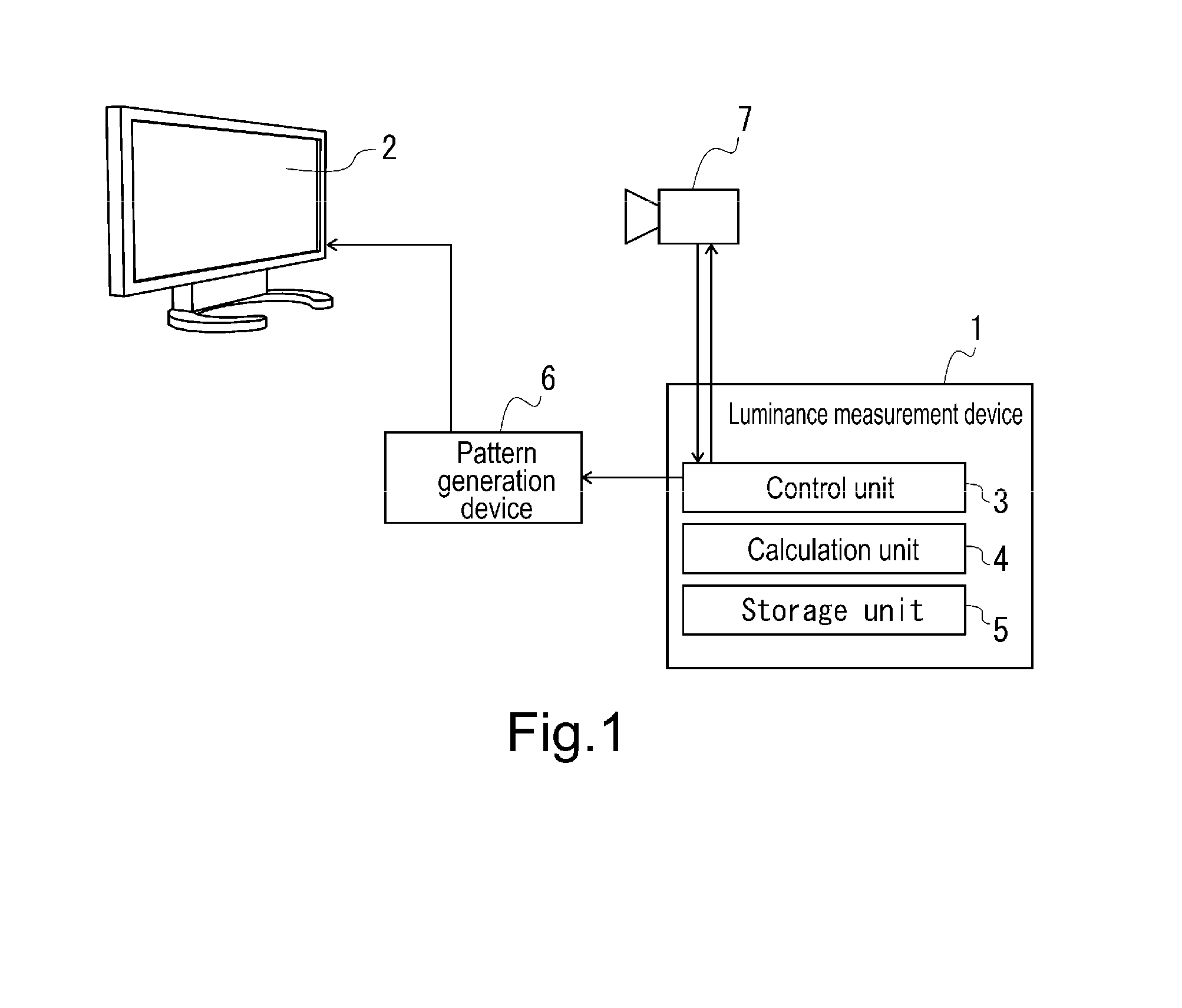

[0045]FIG. 1 illustrates a luminance measurement device according to the present embodiment. This luminance measurement device 1 measures the luminance of all pixels of an organic EL panel 2 and includes a control unit 3, a calculation unit 4, and a storage unit 5. Each pixel of the organic EL panel 2 is made up of R, G, and B subpixels; in the present invention, the term “pixel” is taken to include such subpixels. The control unit 3 controls the display of the organic EL panel 2 via a pattern generation device 6 and controls imaging by a monochrome solid-state imaging camera 7 disposed facing the organic EL panel 2. The calculation unit 4 performs various calculations based on, among other things, the images captured by the camera 7, and the storage unit 5 stores the imaging results of the camera 7 and the calculation results of the calculation unit 4.
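The calculation unit 4 has to reduce each camera frame to one luminance value per panel pixel (or subpixel). This excerpt does not describe how that is done, so the following is only a minimal Python sketch under loudly stated assumptions: the panel image is perfectly aligned with the sensor, each panel pixel is imaged onto an integer `block` by `block` region of camera pixels, and `cal_factor` is a hypothetical scalar converting summed camera counts into luminance. The name `pixel_luminance` is illustrative and is not the patent's method.

```python
from typing import List

Image = List[List[float]]


def pixel_luminance(camera_image: Image, panel_rows: int, panel_cols: int,
                    block: int, cal_factor: float = 1.0) -> Image:
    """Integrate camera-pixel values over the region imaging each panel pixel.

    Hypothetical mapping: panel pixel (r, c) is imaged exactly onto the
    block x block camera pixels whose top-left corner is (r*block, c*block).
    cal_factor is an assumed counts-to-luminance conversion factor.
    """
    # Reject frames too small to contain every assumed block.
    if len(camera_image) < panel_rows * block or any(
        len(row) < panel_cols * block for row in camera_image
    ):
        raise ValueError("camera image too small for the assumed mapping")

    result: Image = []
    for r in range(panel_rows):
        out_row = []
        for c in range(panel_cols):
            # Sum every camera pixel inside this panel pixel's block.
            total = 0.0
            for y in range(r * block, (r + 1) * block):
                for x in range(c * block, (c + 1) * block):
                    total += camera_image[y][x]
            out_row.append(total * cal_factor)
        result.append(out_row)
    return result
```

For example, a 4×4 camera image with `block=2` yields a 2×2 luminance map whose entries are the sums of the corresponding 2×2 regions. A real setup would also have to deal with lens distortion, sub-pixel misregistration between panel and sensor, and light spilling from neighboring pixels, none of which this sketch handles.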

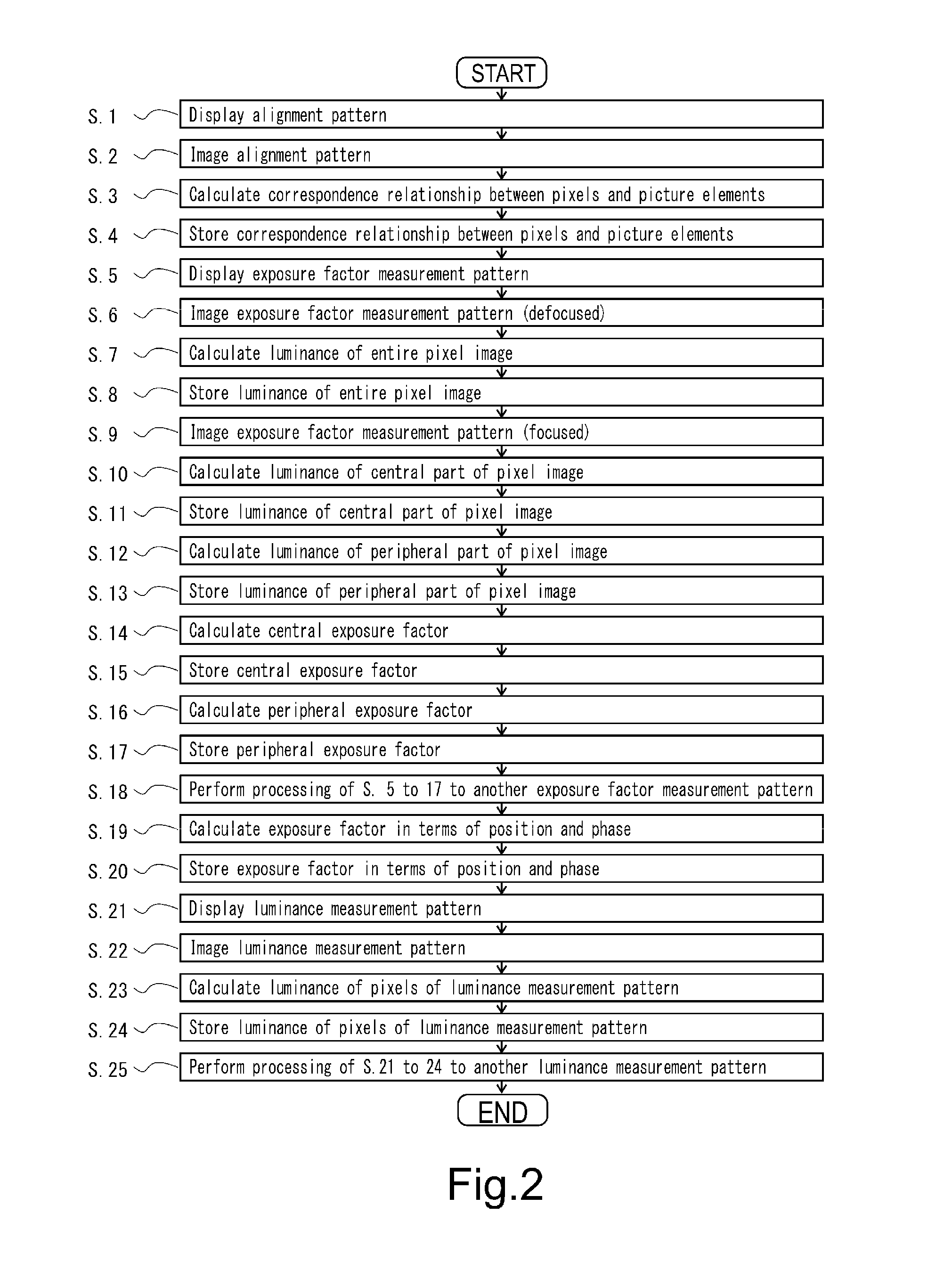

[0046]As illustrated in FIG. 2, when the luminance measurement device 1 measures the luminance of pixels of the orga...

Embodiment 2

[0073]FIG. 9 illustrates a correction data generation device according to the present embodiment. This correction data generation device 10 consists of the luminance measurement device 1 of Embodiment 1 with a ROM writer 11 additionally connected to it. As shown in FIG. 10, the correction data generation device 10 first measures the luminance of all pixels of the organic EL panel 2 in the same way as the luminance measurement device 1 (steps 1 to 25). The calculation unit 4 then generates, from the luminance of each pixel, correction data for reducing display unevenness (luminance unevenness) of the organic EL panel 2 (step 26). The ROM writer 11 writes the generated correction data into a ROM (nonvolatile memory) 12 (step 27). Finally, an image quality adjustment circuit 13 containing this ROM 12 is mounted on the organic EL panel 2, producing an image quality adjustment type organic EL panel 14 (step 28).
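Step 26 turns the measured luminance map into correction data. The excerpt does not specify the correction model, so the sketch below assumes the simplest one: a per-pixel gain that pulls every pixel down to the dimmest measured pixel, quantized to one byte per pixel (gain × 255, row-major) as it might be written to the ROM 12. It also assumes gray level is linear in luminance, which a real panel with a gamma curve is not. `make_correction_data` and `apply_correction` are hypothetical names; `apply_correction` only stands in for what the image quality adjustment circuit 13 might do at display time.

```python
from typing import List

Grid = List[List[float]]


def make_correction_data(luminance: Grid) -> bytes:
    """Build a hypothetical per-pixel gain table from measured luminance.

    Assumption: the target is the dimmest pixel, so every gain is <= 1 and no
    pixel is asked to exceed its measured output. Each gain g is stored as
    round(g * 255) in one byte, in row-major order.
    """
    flat = [v for row in luminance for v in row]
    if not flat or min(flat) <= 0:
        raise ValueError("luminance values must be positive")
    target = min(flat)
    return bytes(round(target / v * 255) for v in flat)


def apply_correction(gray: List[List[int]], table: bytes) -> List[List[int]]:
    """Scale each input gray level by its pixel's stored gain.

    Assumption: gray level is linear in luminance (no gamma handling).
    """
    cols = len(gray[0]) if gray else 0
    if len(table) != len(gray) * cols:
        raise ValueError("table size does not match the frame size")
    return [
        [round(g * table[r * cols + c] / 255) for c, g in enumerate(row)]
        for r, row in enumerate(gray)
    ]
```

With the luminance map `[[100, 200], [50, 100]]`, the dimmest pixel (50) gets gain 1.0 (stored as 255) and the brightest (200) gets 0.25 (stored as 64). Pulling every pixel down to the dimmest one trades peak brightness for uniformity; an actual design might choose a different target or store corrections for several gray levels.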

[0074]In the image quality adjustment type organic E...
