Multi-frame morphology area detection method based on frame difference

A detection method using morphological techniques, applied in image data processing, television, instruments, etc. It addresses the inaccurate extraction of moving-object information while keeping computational complexity low, and achieves strong anti-interference capability, high detection accuracy, and elimination of background noise.

Embodiment Construction

[0028] The present invention is further described below with reference to the accompanying drawing.

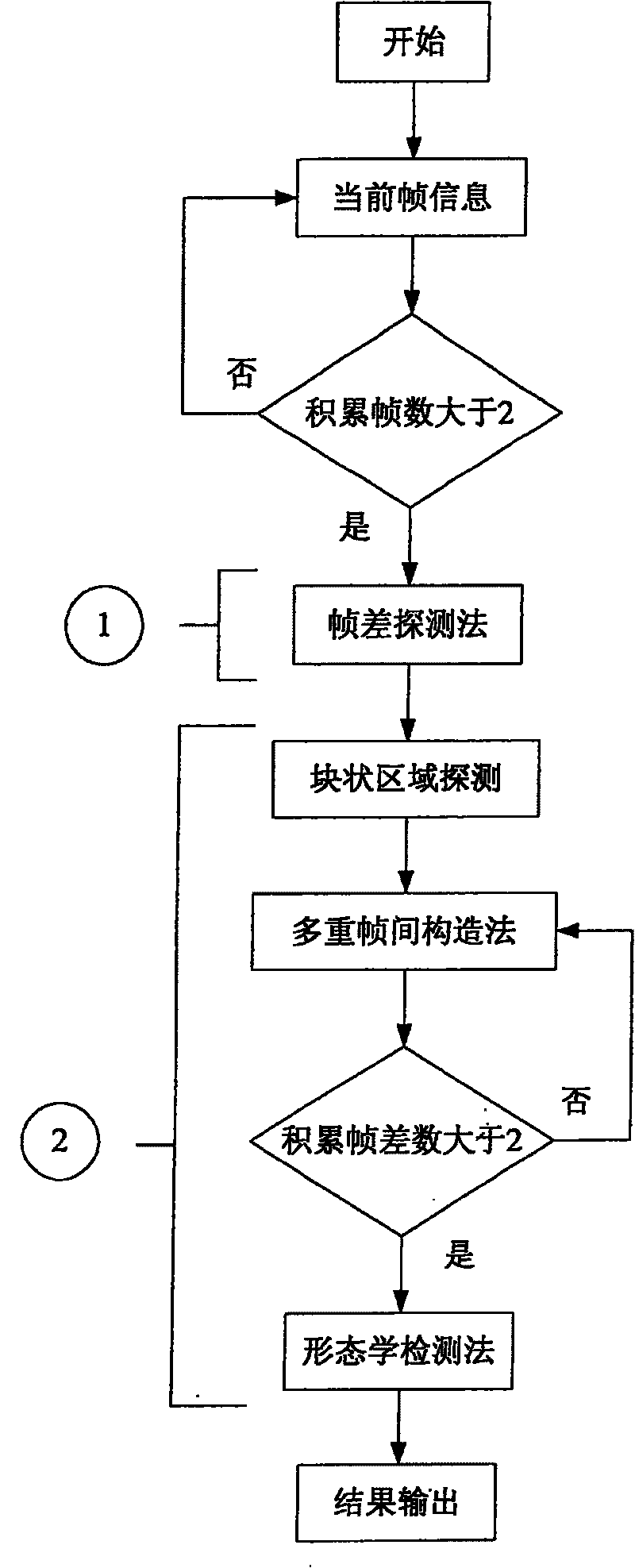

[0029] The present invention is a multi-frame morphological region detection method based on frame difference. The workflow of the method is shown in Figure 1.

[0030] First, the current frame is read. Once the number of accumulated frames is greater than 2, the first step of the invention begins: frame-difference detection. Its purpose is to detect the initial candidate moving regions and to prepare for the accurate, real-time detection performed in the next step. The specific steps are as follows: for two consecutive 256-level grayscale frames at times t and t+1, let the gray values of a pixel (x, y) be I(x, y, t) and I(x, y, t+1). An automatic threshold Td is set, and the change in gray value between the two frames is compared against it to obtain the foreground change region. The setting of the automatic threshold can be ...
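The frame-difference step in [0030] can be illustrated with a minimal sketch. It assumes the common rule of marking a pixel as foreground when the absolute gray-value difference |I(x, y, t+1) - I(x, y, t)| exceeds Td; the excerpt does not state the exact comparison, so the strict inequality is an assumption. The function name `frame_difference_mask` is hypothetical, and because the excerpt is cut off before it explains how the automatic threshold is chosen, `td` is simply passed in by the caller here.

```python
def frame_difference_mask(prev_frame, curr_frame, td):
    """Binary foreground-change mask from two grayscale frames.

    prev_frame, curr_frame: equally sized lists of rows holding gray
    values in 0..255, i.e. I(x, y, t) and I(x, y, t+1).
    td: threshold supplied by the caller (how the patent derives it
    automatically is not shown in the excerpt).
    Assumption: a pixel is foreground when |difference| > td.
    """
    if len(prev_frame) != len(curr_frame):
        raise ValueError("frames must have the same height")
    mask = []
    for row_prev, row_curr in zip(prev_frame, curr_frame):
        if len(row_prev) != len(row_curr):
            raise ValueError("frames must have the same width")
        mask.append(
            [1 if abs(c - p) > td else 0 for p, c in zip(row_prev, row_curr)]
        )
    return mask


# Two tiny 3x3 frames; td=20 is an arbitrary illustrative value.
frame_t = [
    [10, 10, 10],
    [10, 10, 10],
    [10, 10, 10],
]
frame_t1 = [
    [10, 12, 10],
    [10, 200, 180],
    [10, 10, 10],
]
mask = frame_difference_mask(frame_t, frame_t1, td=20)
for row in mask:
    print(row)
```

In this toy input only the two pixels whose gray value jumps by far more than `td` are marked, while the small change of 2 gray levels is treated as noise. A real pipeline would typically run the same comparison on whole image arrays.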
