User plane data back-transmission method for cross-base-station handover

A method for back-transmitting user plane data during cross-base-station handover, applied in digital transmission systems, data exchange networks, transmission systems, etc. It addresses long data processing paths and the resulting increase in handover processing delay, with the effects of shorter handover delay, less protocol processing, and less data replication between layers.


Embodiment Construction

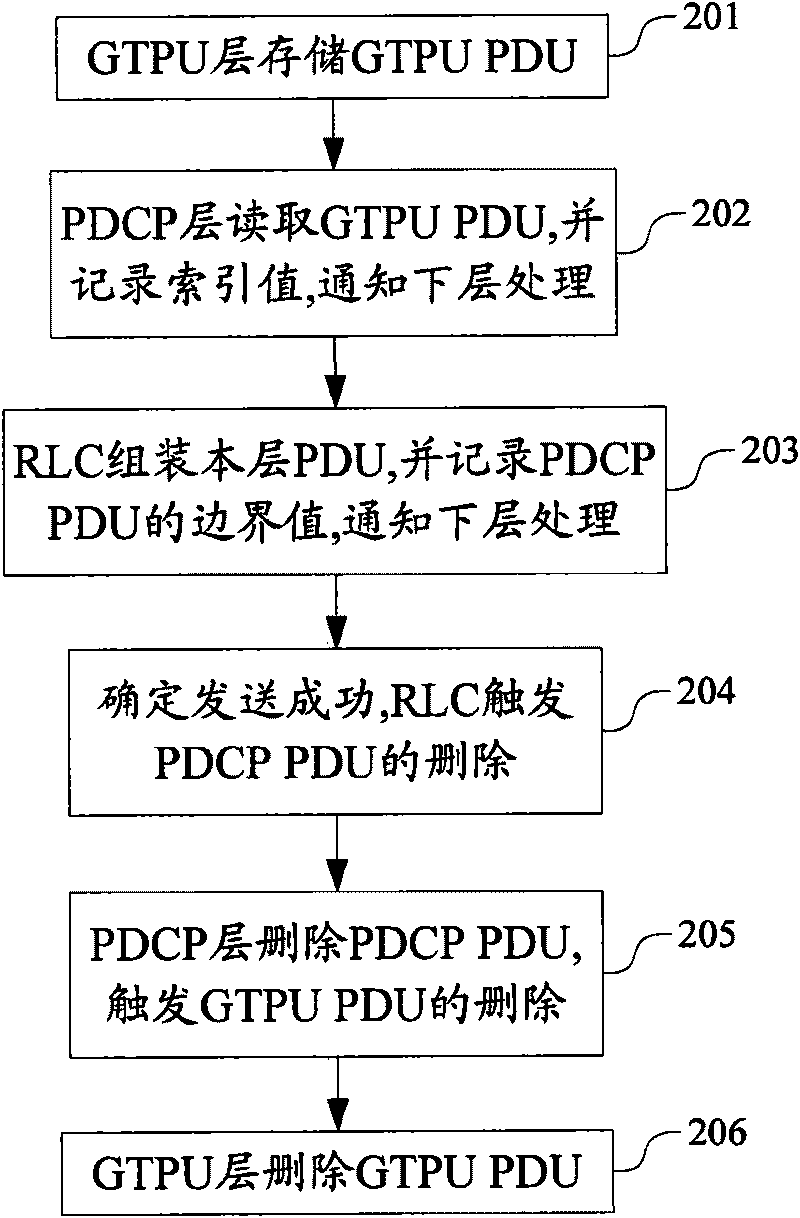

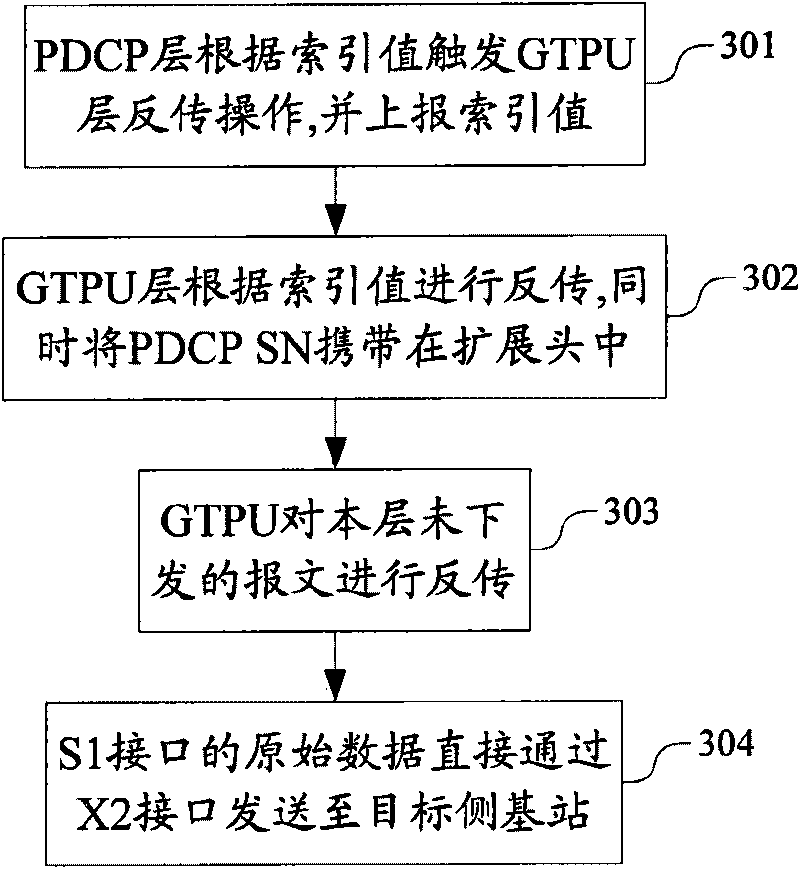

[0030] In the present invention, when the user plane receives data, the upper layer stores the PDU of the message and notifies the lower layer to perform protocol processing on the message. After the message is successfully sent, the upper layer deletes the PDU of the corresponding message when triggered by the lower layer. The upper layer of the source-side base station sends the stored PDUs of the messages that need to be back-transmitted to the target-side base station.
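The store, delete-on-confirmation, and back-transmit behaviour described in [0030] can be illustrated with a minimal Python sketch. All names here (`UpperLayerBuffer`, `RecordingLowerLayer`, `packet_id`, `forward_to_target`) are hypothetical and do not come from the patent; the sketch also assumes that packets are forwarded in arrival order and that the buffer is emptied once forwarding is done.

```python
from collections import OrderedDict


class UpperLayerBuffer:
    """Hypothetical upper-layer store of PDUs that are not yet confirmed as sent."""

    def __init__(self):
        # Keyed by an illustrative packet identifier; order of insertion is arrival order.
        self._pending = OrderedDict()

    def on_data_received(self, packet_id, pdu, lower_layer):
        # Store the PDU at the upper layer, then notify the lower layer to process it.
        self._pending[packet_id] = pdu
        lower_layer.process(packet_id, pdu)

    def on_send_confirmed(self, packet_id):
        # Trigger from the lower layer: the message was sent successfully,
        # so the stored PDU is no longer needed.
        self._pending.pop(packet_id, None)

    def on_handover(self, forward_to_target):
        # Back-transmit every PDU still stored to the target-side base station.
        # Clearing the buffer afterwards is an assumption of this sketch.
        for packet_id, pdu in list(self._pending.items()):
            forward_to_target(packet_id, pdu)
        self._pending.clear()


class RecordingLowerLayer:
    """Stand-in for the lower layers; it only records what it was asked to process."""

    def __init__(self):
        self.processed = []

    def process(self, packet_id, pdu):
        self.processed.append(packet_id)


def demo():
    buffer = UpperLayerBuffer()
    lower = RecordingLowerLayer()
    for packet_id, pdu in enumerate([b"a", b"b", b"c"]):
        buffer.on_data_received(packet_id, pdu, lower)
    buffer.on_send_confirmed(0)  # packet 0 was delivered before the handover
    forwarded = []
    buffer.on_handover(lambda pid, pdu: forwarded.append((pid, pdu)))
    return forwarded
```

In `demo()`, three packets are received, the first is confirmed as sent, and the handover then forwards only the two that remain stored. Because the PDUs are kept at the upper layer, the source side does not need to recover them from the lower layers before forwarding, which is the shortened processing path the summary refers to.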

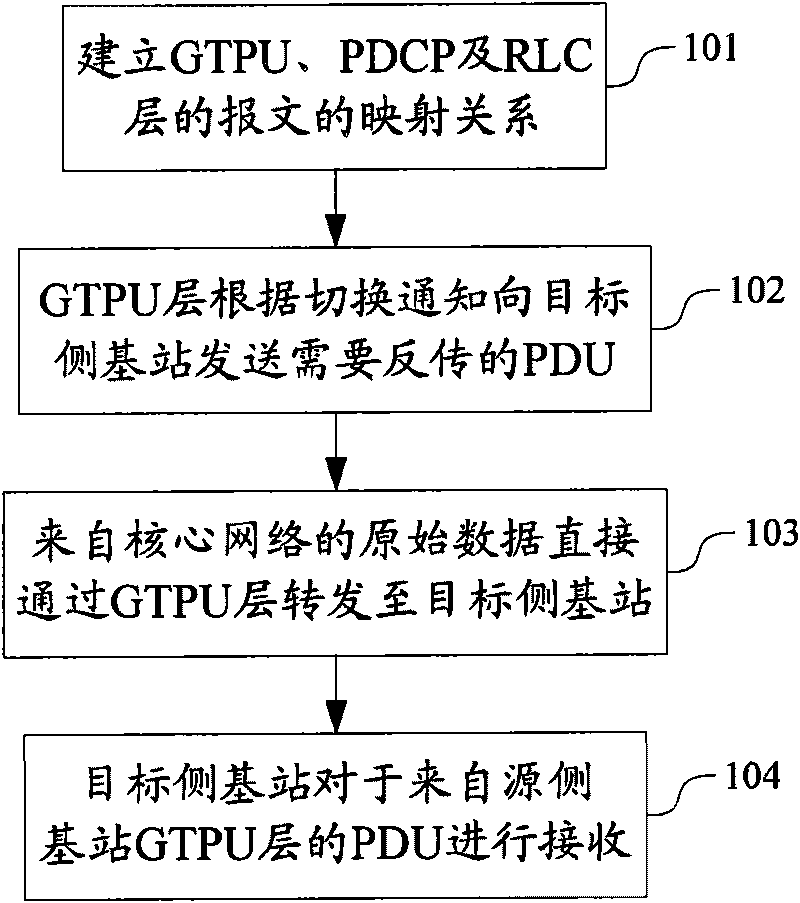

[0031] Figure 1 is the flow chart of user plane data back-transmission across base stations in the present invention. As shown in Figure 1, the implementation process of user plane data back-transmission for cross-base-station handover includes the following steps:

[0032] Step 101: When the user plane receives data, establish a mapping relationship among the packets of the GTPU layer, PDCP layer, and RLC layer layer by layer. Base stations produced by different manufacturers have different s...
