Method and system for implementing a stream processing computer architecture

A computer and stream computing technology in the fields of computers, digital computer components, and computing. It addresses problems such as increased access time for large data sets and increased transmission bandwidth.

Embodiment Construction

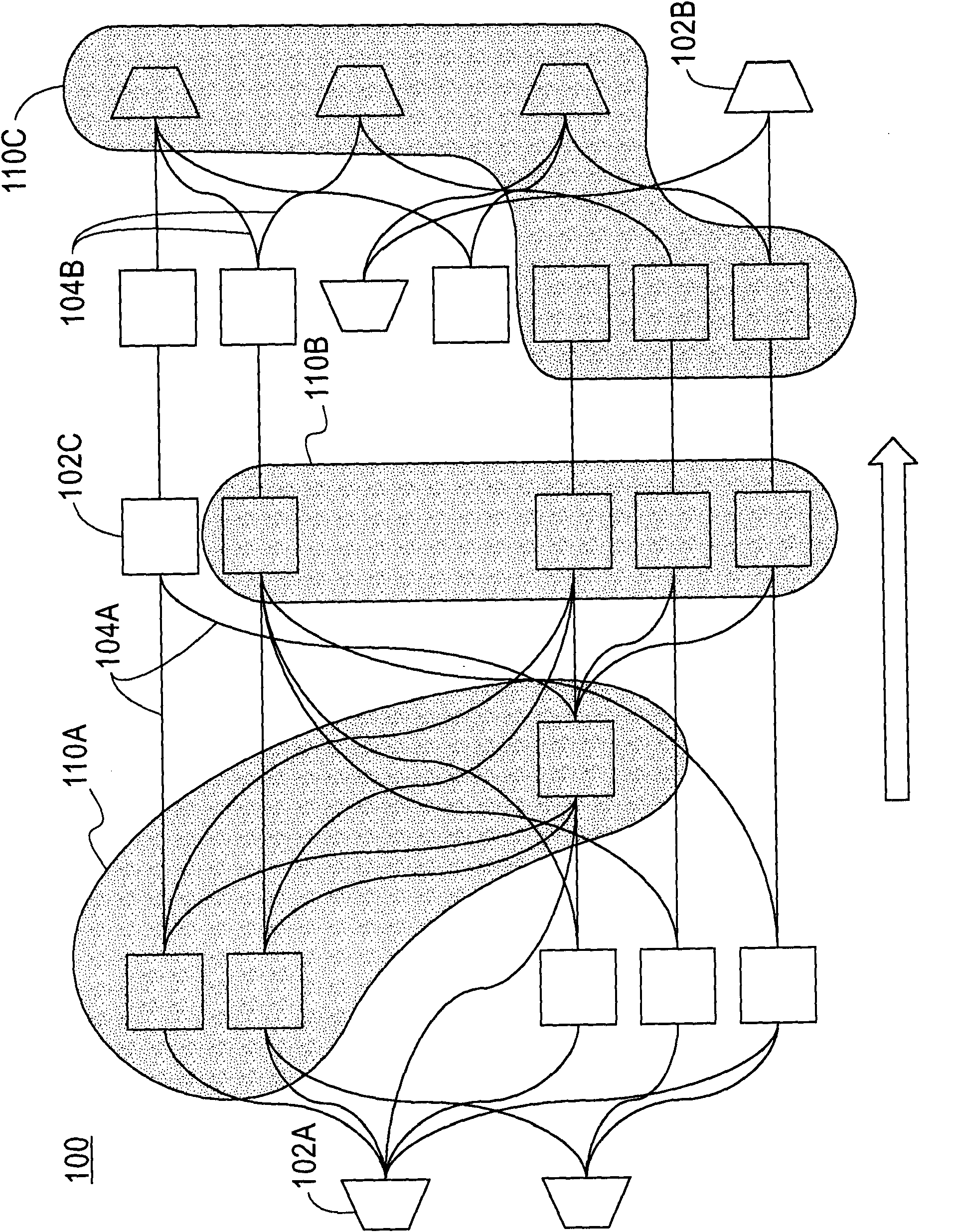

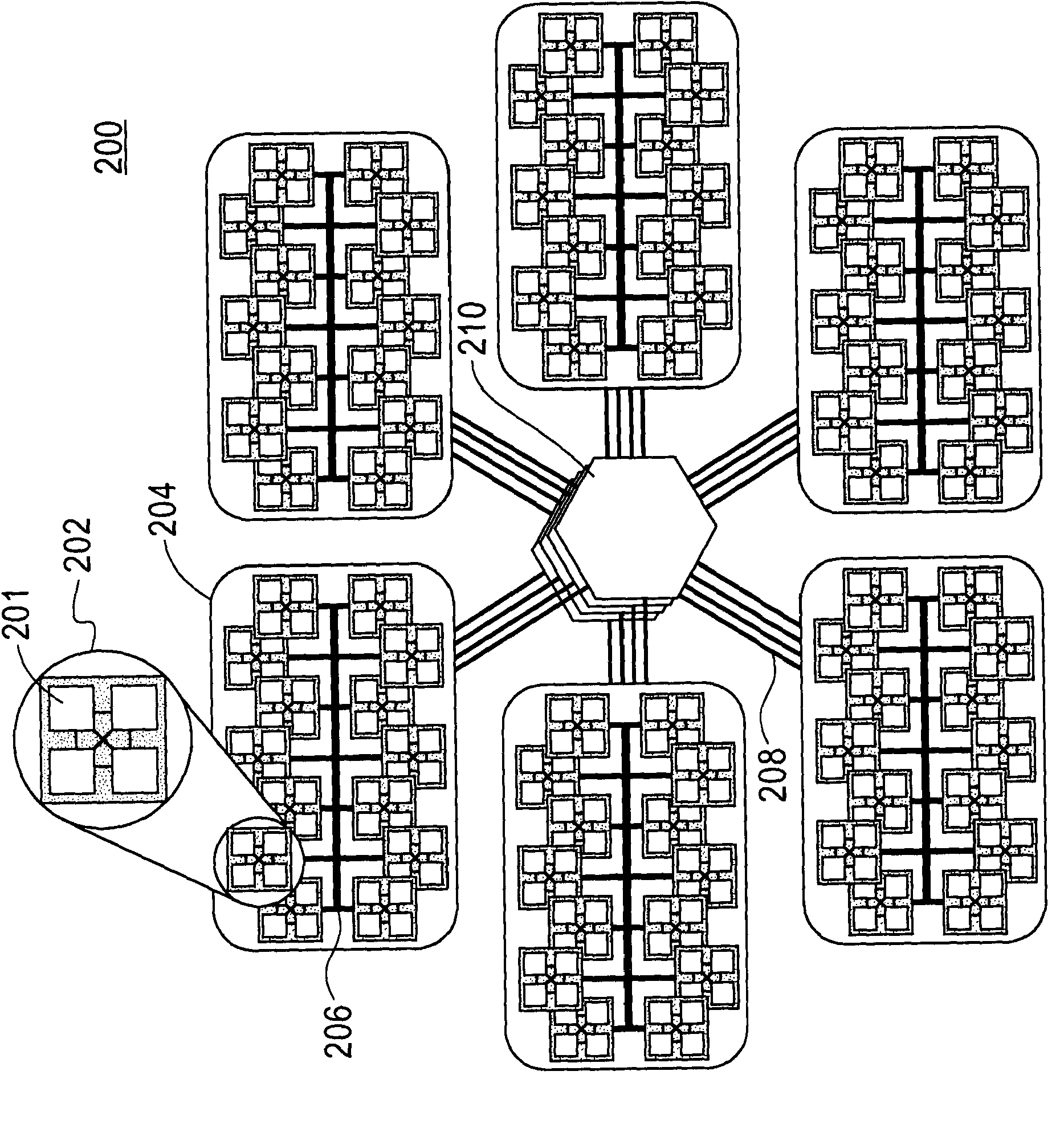

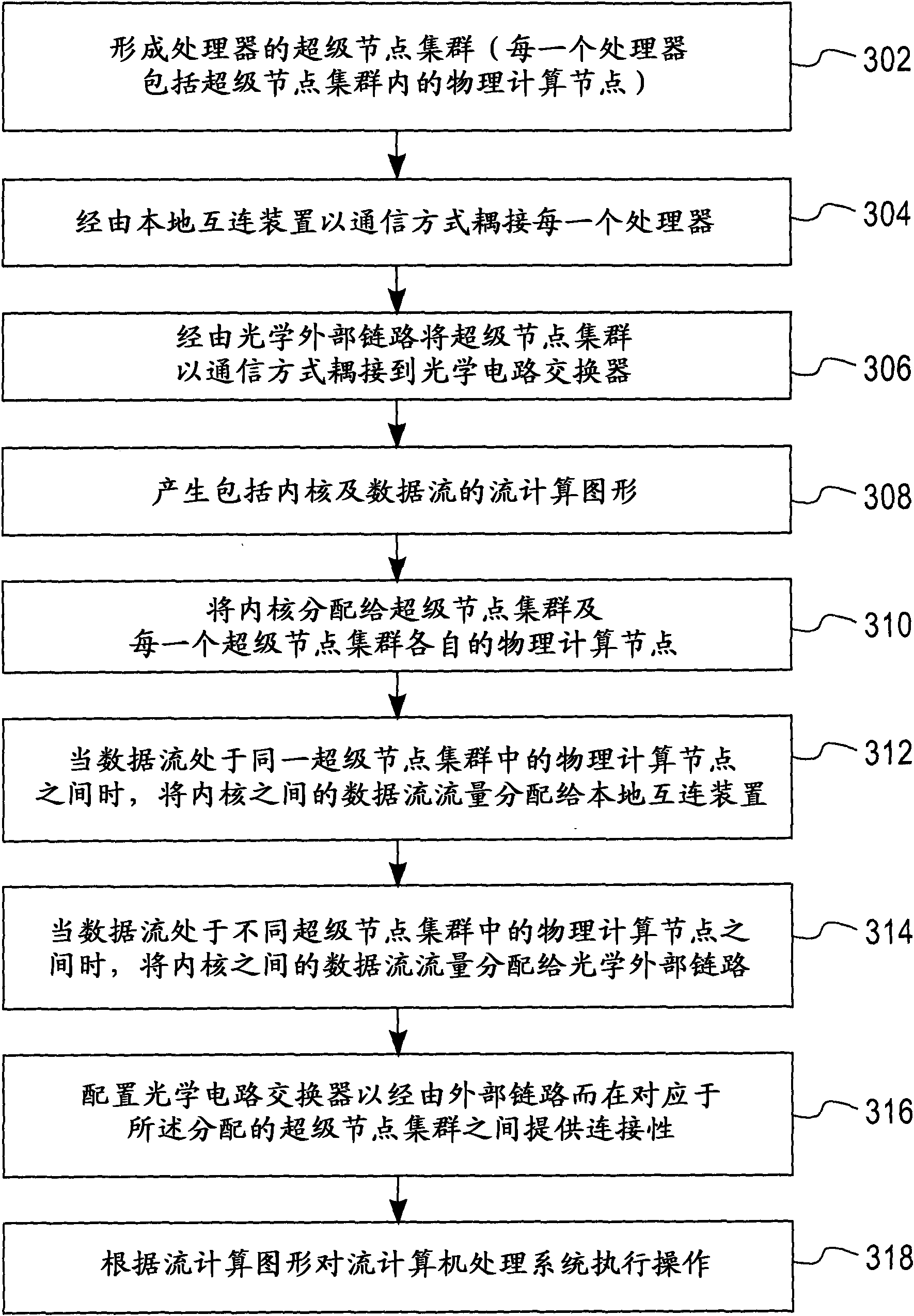

[0018] An exemplary embodiment of the present invention discloses an interconnected stream processing architecture for a stream computer system and a process for implementing that interconnected architecture. The interconnection architecture consists of two network types that complement each other's functionality and address connectivity among tightly coupled groups of processing nodes. Such groups, or clusters, can be interconnected locally using a variety of protocols and both static and dynamic network topologies (e.g., 2D/3D grids, hierarchical fully connected components, switch-based components). Network and switch functionality can be incorporated within the processor chips, so that clusters can be formed by interconnecting the processor chips directly to each other without external switches. One example of such a technology and protocol is HyperTransport 3 (HT3). Packaging constraints, transfer signal speeds, and allowable distances of interconnects ...
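The excerpt describes the two-network idea only in outline and is truncated before the second network is characterized. The Python sketch below models it as a graph under explicit assumptions: each cluster's processor chips are linked directly to one another (as a 2D grid or fully connected, two of the topologies named above), and a hypothetical shared switch joins one gateway chip per cluster to stand in for the second network. The helper names (`add_mesh_cluster`, `add_fully_connected_cluster`, `connect_clusters`, `gateway_index`, `hops`) are illustrative and do not come from the patent. A breadth-first search then reports hop counts between chips.

```python
from collections import defaultdict, deque


def add_link(adj, a, b):
    # Direct chip-to-chip links are modelled as bidirectional edges.
    adj[a].add(b)
    adj[b].add(a)


def add_mesh_cluster(adj, cluster_id, rows, cols):
    # Local network: chips of one cluster wired to their 2D-grid neighbours,
    # with no external switch (switching assumed to live on the chips).
    for r in range(rows):
        for c in range(cols):
            node = (cluster_id, r * cols + c)
            if c + 1 < cols:
                add_link(adj, node, (cluster_id, r * cols + c + 1))
            if r + 1 < rows:
                add_link(adj, node, (cluster_id, (r + 1) * cols + c))


def add_fully_connected_cluster(adj, cluster_id, n):
    # Local network: every chip in the cluster has a direct link to every other.
    for i in range(n):
        for j in range(i + 1, n):
            add_link(adj, (cluster_id, i), (cluster_id, j))


def connect_clusters(adj, cluster_ids, gateway_index=0):
    # Second network (hypothetical): one gateway chip per cluster attaches to
    # a shared inter-cluster switch, modelled as a single extra graph node.
    switch = ("switch", 0)
    for cid in cluster_ids:
        add_link(adj, (cid, gateway_index), switch)


def hops(adj, src, dst):
    # Breadth-first search: minimum number of links from src to dst,
    # or None if dst is unreachable.
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


adj = defaultdict(set)
add_mesh_cluster(adj, "A", rows=2, cols=2)   # 4 chips in a 2x2 grid
add_fully_connected_cluster(adj, "B", n=3)  # 3 chips, all-to-all
connect_clusters(adj, ["A", "B"])

print(hops(adj, ("A", 3), ("A", 0)))  # within cluster A: 2
print(hops(adj, ("A", 3), ("B", 2)))  # via the inter-cluster switch: 5
```

The hop counts illustrate the trade-off the sketch is meant to show: chips inside a cluster are one or two links apart, while cross-cluster traffic must traverse the second network. Replacing the grid with a fully connected cluster, or adding more gateways per cluster, changes those counts.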
