Method and system for detecting lane marked lines

A lane line detection technology, applied in the field of lane line detection, that addresses the problems of low precision, wide fluctuation range, and complex environments, and achieves stable operation and good fault tolerance.


Examples

Embodiment 1

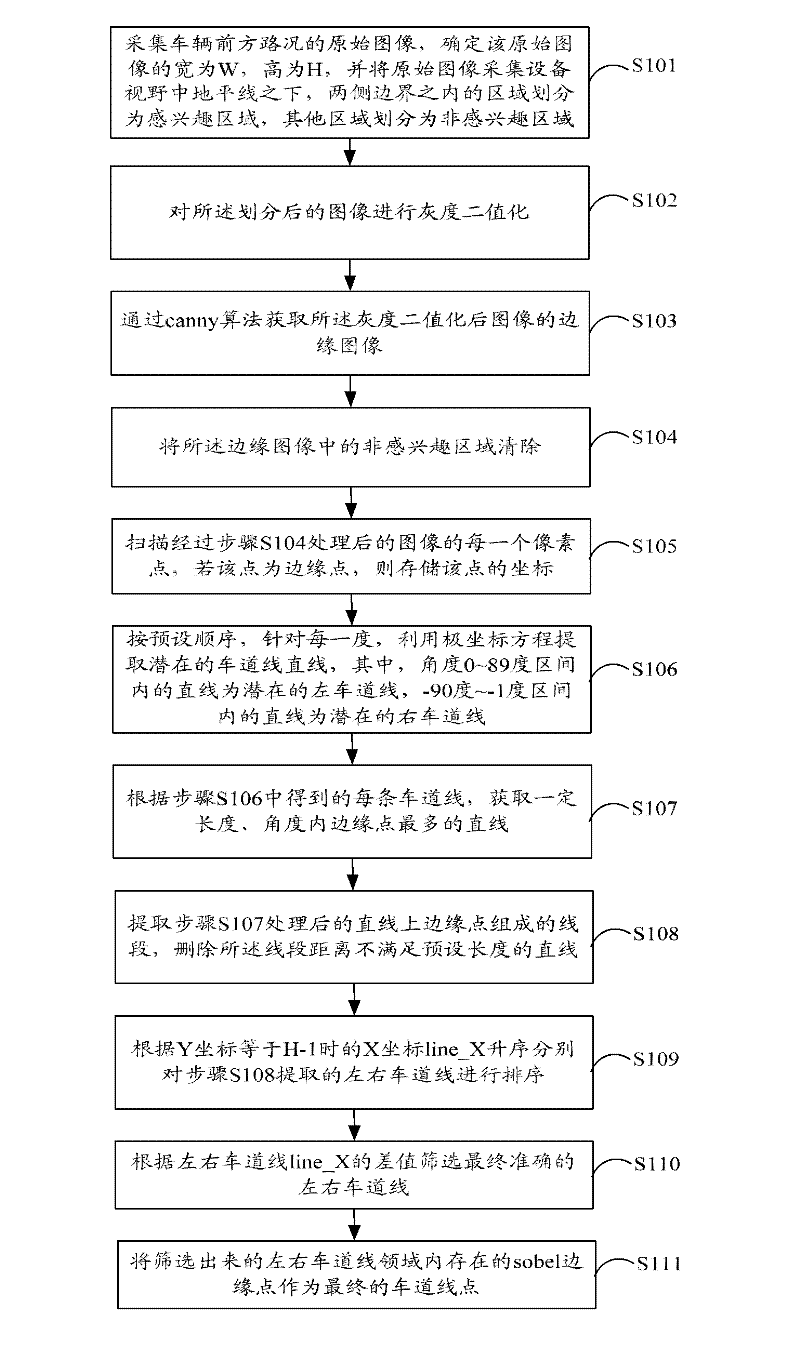

[0040] Figure 1 shows the implementation flow of the lane line detection method provided by Embodiment 1 of the present invention. The process of the method is described in detail as follows:

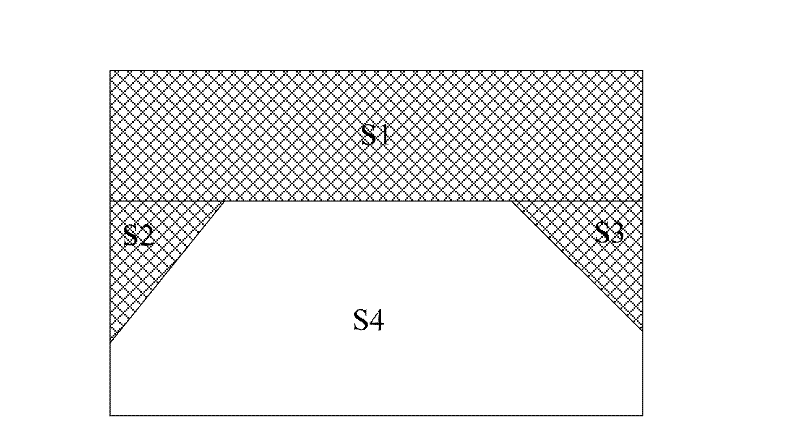

[0041] In step S101, an original image of the road conditions in front of the vehicle is collected; its width is W and its height is H. The area below the horizon line in the field of view of the image acquisition device and within the borders on both sides is designated as the region of interest, and all other areas are classified as non-interest regions.
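The region split in step S101 can be sketched as follows. This is only an illustrative sketch: it assumes image rows are numbered from the top, that the horizon is a single pixel row, and that the side borders are vertical columns. The names `split_regions`, `in_roi`, `horizon_y`, `left_x`, and `right_x` are hypothetical and do not appear in the source.

```python
def split_regions(width, height, horizon_y, left_x, right_x):
    """Return the region of interest as a half-open box (x0, y0, x1, y1).

    Assumption: the ROI is the rectangle below the horizon row and between
    two vertical border columns. The source does not state the border shape.
    """
    if not 0 <= horizon_y < height:
        raise ValueError("horizon row must lie inside the image")
    if not 0 <= left_x < right_x <= width:
        raise ValueError("borders must satisfy 0 <= left_x < right_x <= width")
    return (left_x, horizon_y, right_x, height)


def in_roi(x, y, roi):
    """True if pixel (x, y) lies in the region of interest."""
    x0, y0, x1, y1 = roi
    return x0 <= x < x1 and y0 <= y < y1


def roi_height(roi):
    """Height of the region of interest (IH in the later steps)."""
    return roi[3] - roi[1]
```

Pixels for which `in_roi` is false belong to the non-interest region and would be skipped by later stages.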

[0042] In this embodiment, the original image in front of the vehicle is collected by an image acquisition device (such as a camera) installed on the vehicle. The image acquisition device is configured with internal and external parameters. The internal parameters include the principal point coordinates, the effective focal length, etc., and the external parameters...

Embodiment 2

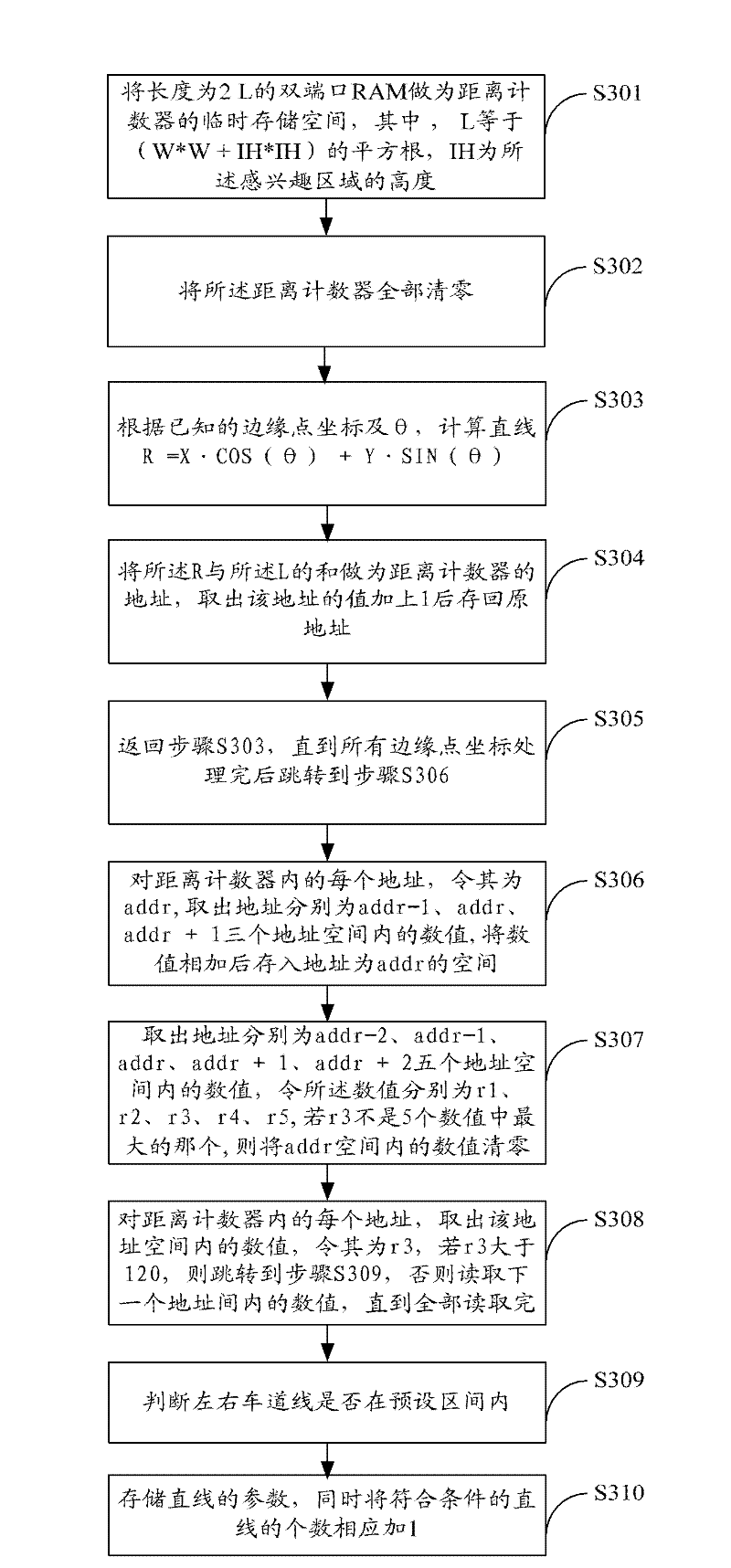

[0059] Figure 3 shows the specific flow of the straight line calculation provided by Embodiment 2 of the present invention. The process is described in detail as follows:

[0060] In step S301, a dual-port RAM with a length of 2L is used as the temporary storage space for the distance counters, where IH is the height of the region of interest.

[0061] In this example, let L = […]. Because, among all straight lines passing through any point in the region of interest, the minimum R is -L and the maximum R is L, a dual-port RAM of length 2L is used as the temporary storage space for the distance counters.

[0062] In step S302, all the distance counters are cleared.
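Steps S301 and S302 can be modelled in software as a flat array of counters. The sketch below makes two assumptions that are not confirmed by the source: that L is the diagonal of the region of interest rounded up (the defining expression for L is not preserved in this text), and that a signed distance R is mapped to the address R + L. The function names are hypothetical.

```python
import math


def make_distance_counters(roi_width, roi_height):
    """Allocate 2L counters, standing in for the dual-port RAM.

    Assumption: L = ceil(sqrt(roi_width^2 + roi_height^2)).
    """
    L = math.ceil(math.hypot(roi_width, roi_height))
    return L, [0] * (2 * L)


def counter_index(r, L):
    """Map a signed distance r to an address in [0, 2L), or None if out of range."""
    idx = r + L
    return idx if 0 <= idx < 2 * L else None


def clear_counters(counters):
    """Step S302: reset every distance counter to zero in place."""
    for i in range(len(counters)):
        counters[i] = 0
```

With a length of exactly 2L, the addresses cover R from -L to L - 1, so this sketch treats R = L as out of range rather than guessing how the hardware handles that boundary.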

[0063] In step S303, according to the stored edge point coordinates, R = X cos(θ) + Y sin(θ) is calculated, where θ is the angle between the horizontal axis and the perpendicular drawn from the pole to the straight line.
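As a rough software illustration of step S303, the sketch below accumulates votes for a single angle θ using the normal-form equation R = X cos(θ) + Y sin(θ). It uses floating-point trigonometry and rounding to the nearest integer, which is an assumption; the hardware described here may well use a different number format. The names `vote_for_angle` and `best_distance` are hypothetical.

```python
import math


def vote_for_angle(edge_points, theta_deg, L):
    """Count, for one angle, how many edge points fall on each distance R."""
    theta = math.radians(theta_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    counters = [0] * (2 * L)  # address = R + L
    for x, y in edge_points:
        r = round(x * cos_t + y * sin_t)
        idx = r + L
        if 0 <= idx < 2 * L:
            counters[idx] += 1
    return counters


def best_distance(counters, L):
    """Return (R, votes) for the counter with the most votes."""
    votes = max(counters)
    return counters.index(votes) - L, votes
```

For example, edge points lying on the vertical line X = 10 all produce R = 10 at θ = 0°, so that single counter collects every vote, whereas at θ = 90° the votes spread across many counters.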

[0064] In this embodiment, when X, Y, and θ are known, the straight line R is calculated accordin...

Embodiment 3

[0075] Figure 4 shows the specific flow of straight line selection provided by Embodiment 3 of the present invention. The process is described in detail as follows:

[0076] In step S401, the extracted straight lines are processed in the order [0°, 89°] and then [-90°, -1°], and the parameters R1, θ1, S1, and line_x1 of the currently processed straight line are obtained. If the parameters are all zero, the next straight line is taken for processing; otherwise, the process proceeds to step S402.
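The traversal order in step S401 can be illustrated with a short sketch. It assumes one candidate line per integer angle, stored as a tuple (R1, θ1, S1, line_x1) in a dictionary keyed by angle in degrees; that storage layout and the function names are hypothetical, and S1 and line_x1 are treated as opaque values.

```python
def processing_order():
    """Angles in degrees in the order [0, 89] followed by [-90, -1]."""
    return list(range(0, 90)) + list(range(-90, 0))


def next_nonzero_line(lines_by_angle, start=0):
    """Find the next line whose parameters are not all zero.

    Returns (position_in_order, theta_deg, params), or None if no line remains.
    """
    order = processing_order()
    for pos in range(start, len(order)):
        theta = order[pos]
        params = lines_by_angle.get(theta)
        if params is None:
            continue
        if any(value != 0 for value in params):
            return pos, theta, params
    return None
```

Calling `next_nonzero_line` again with `start` set to the returned position plus one resumes the scan after the line just handled.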

[0077] In step S402, the coordinates (X, Y) of each edge point are scanned sequentially in storage order, and R is calculated according to R = X cos(θ) + Y sin(θ), where θ = θ1. If the absolute value of R - R1 is less than 3, the point is considered to be on the straight line and its coordinates are stored in the temporary memory; otherwise they are not stored.
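The membership test in step S402 can be sketched as follows, with a plain list standing in for the temporary memory. The function name `points_on_line` and the `tolerance` parameter are hypothetical; the threshold of 3 comes from the text, while the use of floating-point R is an assumption.

```python
import math


def points_on_line(edge_points, r1, theta1_deg, tolerance=3):
    """Collect, in storage order, the edge points with |R - R1| < tolerance."""
    theta = math.radians(theta1_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    temp_memory = []
    for x, y in edge_points:
        r = x * cos_t + y * sin_t
        if abs(r - r1) < tolerance:
            temp_memory.append((x, y))
    return temp_memory
```

Because the comparison is strict, a point whose distance differs from R1 by exactly 3 is not stored.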

[0078] In step S403, scan the temporary memory according to the storage order, if the distance between two points before...

