Large data quantity batch processing system and large data quantity batch processing method

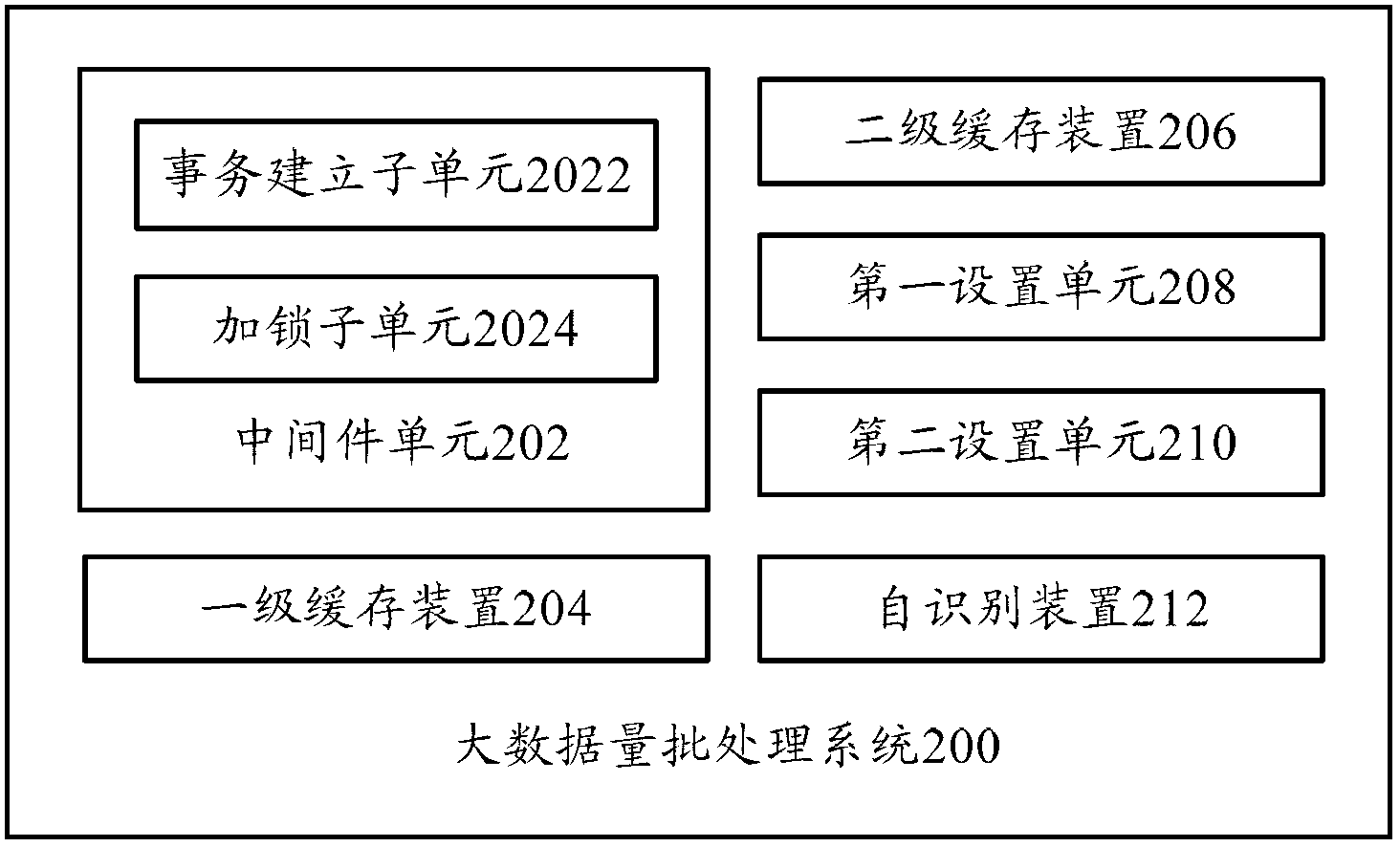

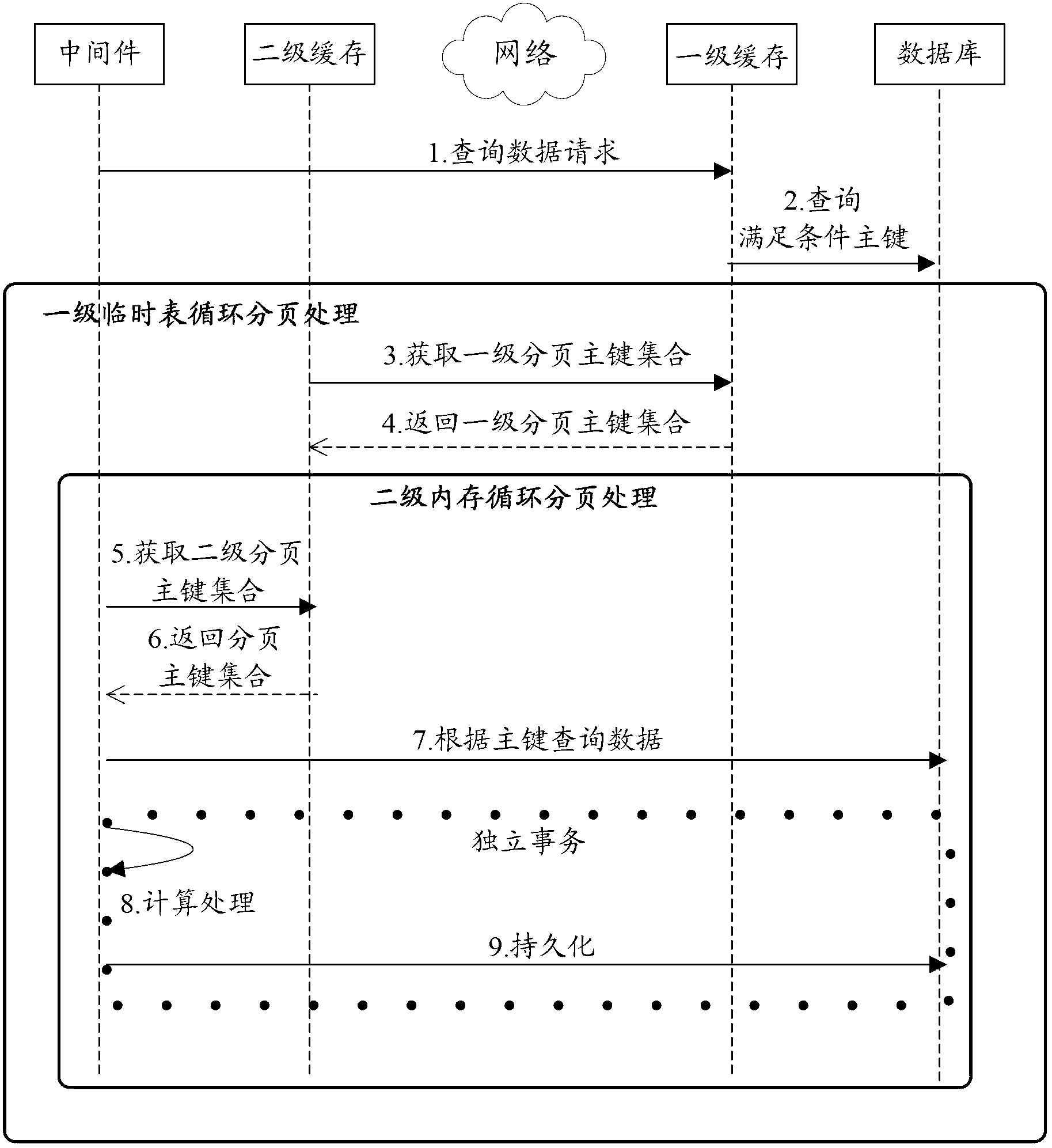

A large-data-volume batch processing technology, applied in the computer field, that addresses the following problems of existing approaches: middleware resources go unused, processing speed is not optimized, and the database side is under high pressure. Its effects are improved overall concurrent processing capability, reduced pressure on the database side, and optimized data reading.


Embodiment Construction

[0031] In order to understand the above-mentioned purpose, features and advantages of the present invention more clearly, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0032] In the following description, many specific details are set forth so that the present invention can be fully understood. However, the present invention can also be implemented in ways other than those described here, and it is therefore not limited to the specific embodiments disclosed below.

[0033] Before explaining the large data volume batch processing system according to the present invention, the existing large data volume batch processing flow is briefly introduced.

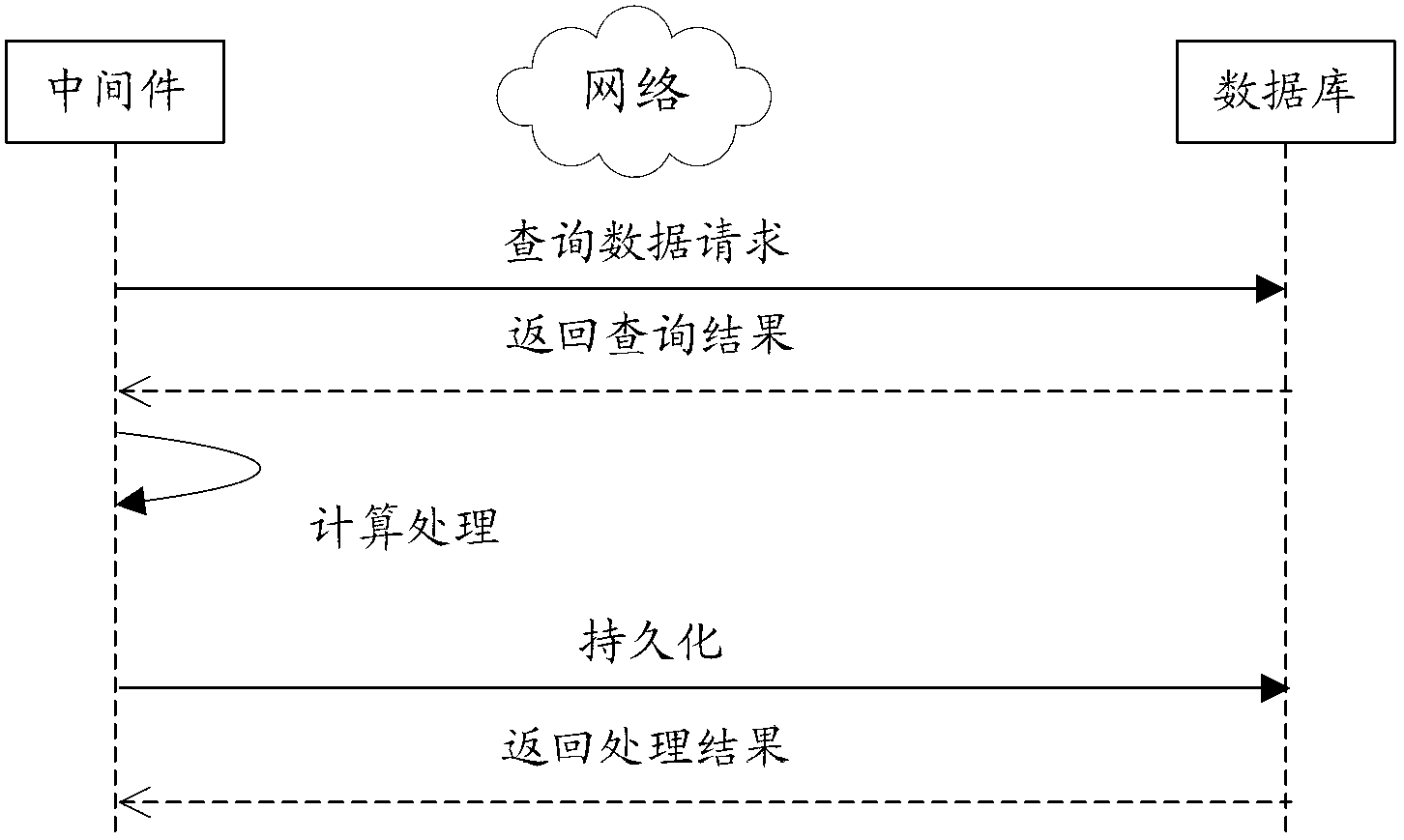

[0034] As shown in Figure 1, in typical large-scale batch processing business scenarios, the processing logic and algorithms can be roughly divided into the following steps: the middleware initiates a request to query...
