Method and device for man-machine interaction

A human-computer interaction method and device, applied in the field of human-computer interaction, that addresses two problems: the machine cannot recognize gestures accurately and promptly, and it cannot reliably distinguish the color of human hands.

Examples

Embodiment 1

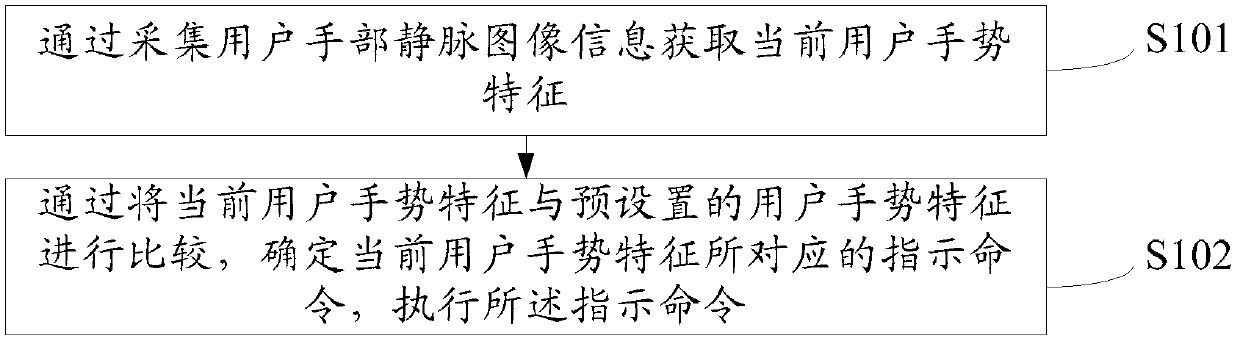

[0021] An embodiment of the present invention provides a human-computer interaction method which, as shown in Figure 1, includes:

[0022] S101. Obtain the gesture features of the current user by collecting vein image information of the user's hand.

[0023] Vein recognition relies on the fact that hemoglobin in the blood absorbs infrared light. A near-infrared-sensitive camera photographs the hand, and the veins appear as shadows in the captured image. The vein image is then digitally processed to extract the feature values of the venous blood vessel image.

[0024] According to this principle, after the user makes a gesture, a recognition device equipped with a near-infrared-sensitive camera collects vein image information of the user's hand to obtain the features of the current user's gesture.

[0025] The near-infrared-sensitive camera can be an infrared CCD...
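The imaging principle in [0023] (veins show up as dark shadows, which are then digitally processed into feature values) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and not the patent's algorithm: the fixed brightness `threshold`, the grid-density feature, and the helper names `vein_mask` and `grid_features` are hypothetical, and the 4x4 frame is toy data rather than a real near-infrared capture.

```python
from typing import List

Image = List[List[int]]  # grayscale rows, 0 (dark) to 255 (bright)


def vein_mask(image: Image, threshold: int = 80) -> List[List[int]]:
    """Mark pixels darker than `threshold` as vein (1), all others as background (0).

    Assumption: a fixed threshold is enough to separate vein shadows.
    """
    return [[1 if px < threshold else 0 for px in row] for row in image]


def grid_features(mask: List[List[int]], cells: int = 2) -> List[float]:
    """Split the mask into cells x cells blocks and return each block's vein-pixel fraction."""
    h, w = len(mask), len(mask[0])
    features = []
    for by in range(cells):
        for bx in range(cells):
            rows = range(by * h // cells, (by + 1) * h // cells)
            cols = range(bx * w // cells, (bx + 1) * w // cells)
            total = len(rows) * len(cols)
            veins = sum(mask[y][x] for y in rows for x in cols)
            features.append(veins / total)
    return features


# Toy 4x4 frame: low values stand in for vein shadows.
frame = [
    [200, 40, 210, 220],
    [190, 30, 205, 215],
    [200, 35, 50, 45],
    [210, 220, 215, 60],
]
features = grid_features(vein_mask(frame))
print(features)
```

A real recognition device would likely need illumination normalization and a more robust vein descriptor; the sketch only shows the shadow-to-feature-vector idea.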

Embodiment 2

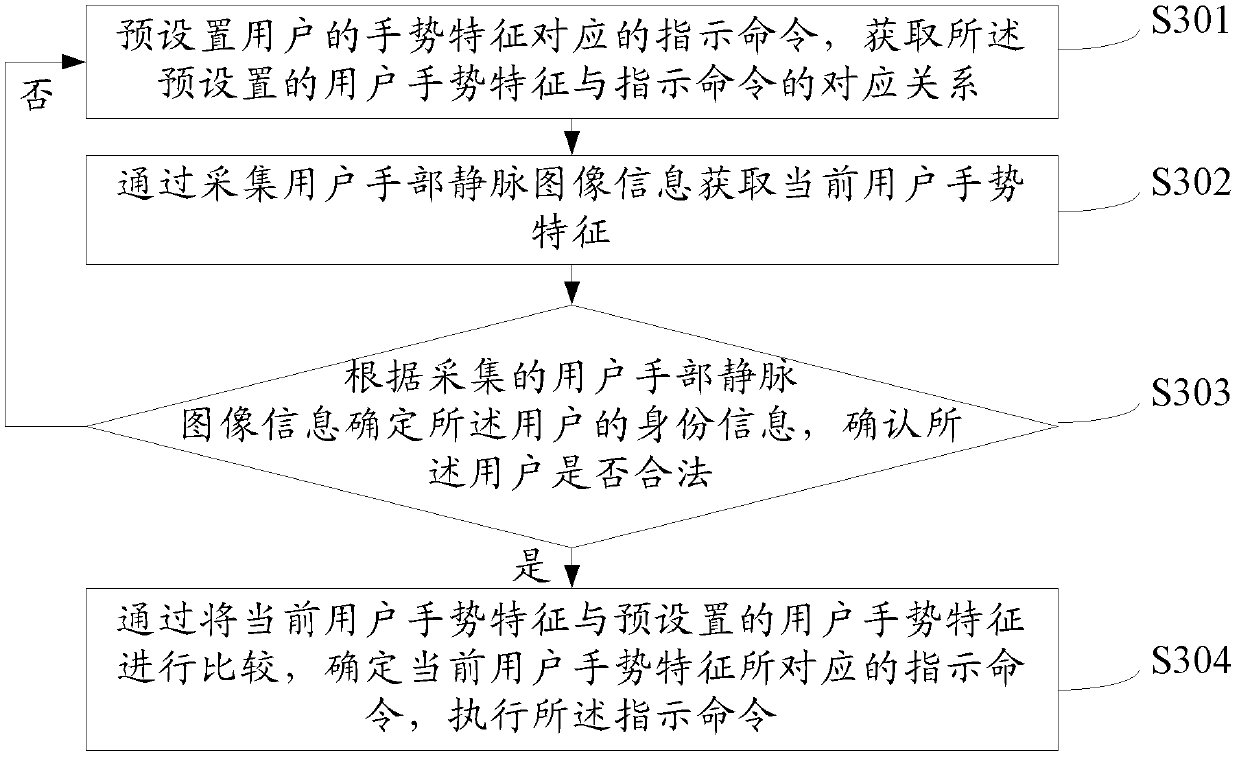

[0042] An embodiment of the present invention provides a human-computer interaction method which, as shown in Figure 3, includes:

[0043] S301. Preset the indication command corresponding to each user gesture feature, and acquire the correspondence between the preset user gesture features and the indication commands.

[0044] Vein recognition relies on the fact that hemoglobin in the blood absorbs infrared light. A near-infrared-sensitive camera photographs the hand, and the veins appear as shadows in the captured image. The vein image is then digitally processed to extract the feature values of the venous blood vessel image.

[0045] According to this principle, users can make various gestures to interact with the recognition device. For example, the recognition device can preset the indication command corresponding to the gesture feature of an outstretched right palm as the "power on" command of the recognition d...
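The preset correspondence from step S301 can be pictured as a lookup table from gesture features to indication commands. The sketch below is illustrative only: the feature vectors, the `fist` entry with its "power off" command, the Euclidean nearest-template rule, and the `max_distance` tolerance are all assumptions. Only the pairing of an outstretched right palm with "power on" comes from the text.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical preset table: gesture name -> (template feature vector, command).
PRESETS: Dict[str, Tuple[List[float], str]] = {
    "right_palm_open": ([0.5, 0.0, 0.25, 0.75], "power on"),  # pairing from the text
    "fist": ([0.1, 0.1, 0.1, 0.1], "power off"),              # invented for illustration
}


def match_command(
    features: List[float],
    presets: Dict[str, Tuple[List[float], str]] = PRESETS,
    max_distance: float = 0.2,
) -> Optional[str]:
    """Return the command of the closest preset template, or None if none is close enough."""
    best_command, best_distance = None, max_distance
    for template, command in presets.values():
        distance = math.dist(features, template)  # Euclidean distance (Python 3.8+)
        if distance <= best_distance:
            best_command, best_distance = command, distance
    return best_command


print(match_command([0.5, 0.0, 0.25, 0.75]))
print(match_command([0.9, 0.9, 0.9, 0.9]))
```

Returning `None` for an unmatched gesture lets the caller ignore unrecognized input instead of executing the nearest command regardless of how poor the match is.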

Embodiment 3

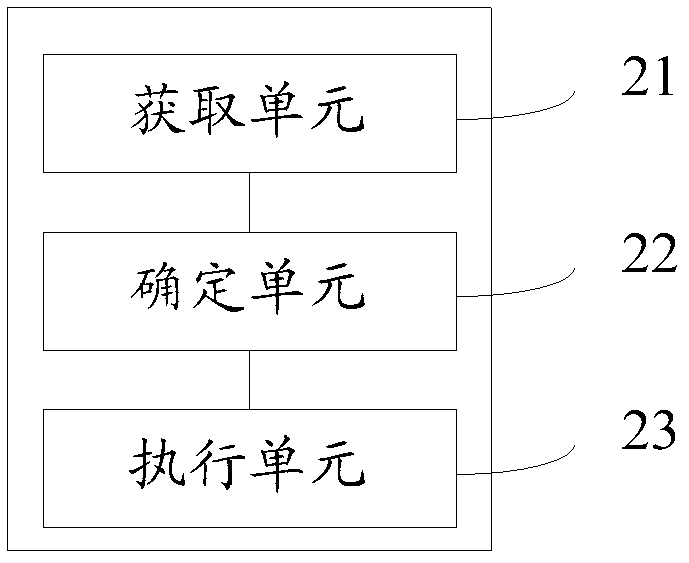

[0061] An embodiment of the present invention provides a device 40 for human-computer interaction which, as shown in Figure 4, includes a setting unit 41, an acquiring unit 42, a determining unit 43, and an executing unit 44.

[0062] The setting unit 41 is configured to preset the indication command corresponding to each user gesture feature, and to obtain the correspondence between the preset user gesture features and the indication commands.

[0063] Vein recognition relies on the fact that hemoglobin in the blood absorbs infrared light. A near-infrared-sensitive camera photographs the hand, and the veins appear as shadows in the captured image. The vein image is then digitally processed to extract the feature values of the venous blood vessel image.

[0064] According to this principle, the user can make various gestures to perform human-computer interaction with the device 40. For example, the setting unit 41 ...
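One way to picture how the four units of device 40 might fit together is a small class with one method per unit. This is a hedged sketch: the unit numbers follow the text, but the class name `InteractionDevice`, the method names, the exact-match lookup, and the roles assigned to units 42 to 44 are assumptions inferred from the unit names, since the description is truncated before it details them.

```python
from typing import Callable, Dict, List, Optional, Tuple

Features = Tuple[float, ...]


class InteractionDevice:
    """Toy model of device 40; unit numbers follow the text, the logic is assumed."""

    def __init__(self, capture: Callable[[], Features]) -> None:
        self._capture = capture  # stands in for the near-infrared camera
        self._commands: Dict[Features, str] = {}
        self.log: List[str] = []  # records executed commands

    def set_command(self, features: Features, command: str) -> None:
        """Setting unit 41: preset the command for a gesture feature."""
        self._commands[features] = command

    def acquire(self) -> Features:
        """Acquiring unit 42 (assumed role): obtain the current gesture features."""
        return self._capture()

    def determine(self, features: Features) -> Optional[str]:
        """Determining unit 43 (assumed role): look up the preset command, exact match only."""
        return self._commands.get(features)

    def execute(self, command: Optional[str]) -> bool:
        """Executing unit 44 (assumed role): carry out the command; here it is only logged."""
        if command is None:
            return False
        self.log.append(command)
        return True

    def step(self) -> bool:
        """Run one acquire -> determine -> execute cycle."""
        return self.execute(self.determine(self.acquire()))


device = InteractionDevice(capture=lambda: (0.5, 0.0, 0.25, 0.75))
device.set_command((0.5, 0.0, 0.25, 0.75), "power on")
handled = device.step()
print(handled, device.log)
```

Passing the capture function into the constructor keeps the camera replaceable, so the lookup and execution logic can be exercised without hardware.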
