A cache implementation method for interface calls

A technology relating to interface calls and callers, applied in the fields of memory systems, instruments, and electronic digital data processing. It addresses the problems of cache server performance, wasted system resources, and low update frequency, and achieves the effects of reducing network transmission overhead, reducing the pressure on the centralized cache, and avoiding the waste of system resources.

Detailed Description of the Embodiments

[0015] In order to make the purpose, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0016] The core idea of the present invention is to combine the centralized cache with the stand-alone cache effectively: the data consistency of the stand-alone cache is guaranteed while the stand-alone cache is still fully utilized. This reduces the pressure on the centralized cache, reduces network transmission overhead, and avoids wasting system resources.
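The combination described above can be illustrated with a minimal sketch. It assumes, beyond what this paragraph states, that the centralized cache keeps a version number per key that is bumped on every write, and that each stand-alone cache tags its local copy with the version it was loaded at. A read then only compares the small version number; the full value crosses the network only when the local copy is missing or stale. `CentralCache`, `TwoLevelCache`, `call_interface`, and the in-memory dicts are hypothetical stand-ins, not a real Ehcache or cache-server API.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Optional, Tuple


class CentralCache:
    """Hypothetical stand-in for the centralized (shared) cache.

    Each key maps to (value, version); the version is bumped on every write.
    """

    def __init__(self) -> None:
        self._data: Dict[str, Tuple[Any, int]] = {}
        self.value_fetches = 0  # how many times a full value was transferred

    def put(self, key: str, value: Any) -> int:
        _, version = self._data.get(key, (None, 0))
        self._data[key] = (value, version + 1)
        return version + 1

    def version_of(self, key: str) -> Optional[int]:
        entry = self._data.get(key)
        return None if entry is None else entry[1]

    def fetch(self, key: str) -> Optional[Tuple[Any, int]]:
        entry = self._data.get(key)
        if entry is not None:
            self.value_fetches += 1
        return entry


@dataclass
class LocalEntry:
    value: Any
    version: int  # version of the centralized entry this copy was loaded from


class TwoLevelCache:
    """Stand-alone cache in front of the centralized cache (assumed design)."""

    def __init__(self, central: CentralCache, call_interface: Callable[[str], Any]) -> None:
        self.central = central
        self.call_interface = call_interface  # hypothetical: the real external call
        self.local: Dict[str, LocalEntry] = {}

    def get(self, key: str) -> Any:
        current = self.central.version_of(key)
        cached = self.local.get(key)
        if cached is not None and cached.version == current:
            # Local copy is up to date: only the version number was compared.
            return cached.value
        entry = self.central.fetch(key)
        if entry is None:
            # Not cached anywhere yet: make the real call and publish the result.
            value = self.call_interface(key)
            version = self.central.put(key, value)
        else:
            value, version = entry
        self.local[key] = LocalEntry(value, version)
        return value
```

Repeated reads on the same machine are served locally until another node writes a new version to the centralized cache, at which point the next read refreshes the local copy once.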

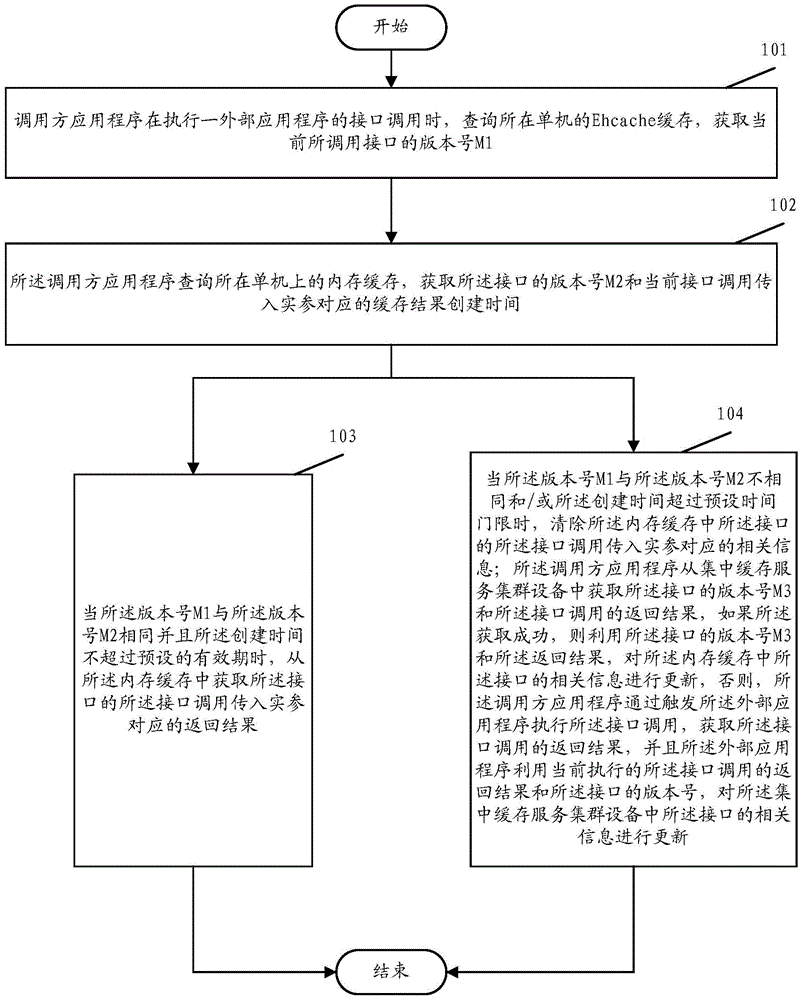

[0017] Figure 1 is a schematic flow chart of Embodiment 1 of the present invention. As shown in Figure 1, this embodiment mainly includes the following steps:

[0018] Step 101: when the caller application executes an interface call to an external application, it queries the Ehcache cache on the stand-alone machine where it runs, and obtains the versio...
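The local lookup in Step 101 might look roughly like the sketch below. The source text is cut off after "obtains the versio...", so the sketch only assumes that a version number is stored alongside each cached result; what the method does with it afterwards is not shown. The key scheme (interface name plus argument values), `CachedResult`, `LocalCache`, and `query_local_cache` are all hypothetical, and a plain dict stands in for the per-machine Ehcache cache.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Tuple


@dataclass(frozen=True)
class CachedResult:
    value: Any
    version: int  # assumed: version number stored with the cached result


def cache_key(interface: str, args: Tuple[Any, ...]) -> str:
    """Hypothetical key scheme: interface name plus the repr of each argument."""
    return interface + "(" + ", ".join(repr(a) for a in args) + ")"


class LocalCache:
    """Stand-in for the stand-alone Ehcache cache (a plain dict here)."""

    def __init__(self) -> None:
        self._entries: Dict[str, CachedResult] = {}

    def put(self, key: str, result: CachedResult) -> None:
        self._entries[key] = result

    def lookup(self, key: str) -> Optional[CachedResult]:
        return self._entries.get(key)


def query_local_cache(local: LocalCache, interface: str, *args: Any) -> Optional[CachedResult]:
    """Query the local cache for an interface call; None means a local miss."""
    return local.lookup(cache_key(interface, args))
```

Keying on both the interface name and its arguments keeps results of different calls to the same interface apart; a real deployment would need a key scheme that is stable across processes.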
