Video data generation unit, video image display device, video data generation method, video image display method, and video image file data structure

A video data generation technology, applied to image communication, electrical components, video games, and similar fields, that achieves smooth responsiveness when displaying moving images



Embodiment Construction

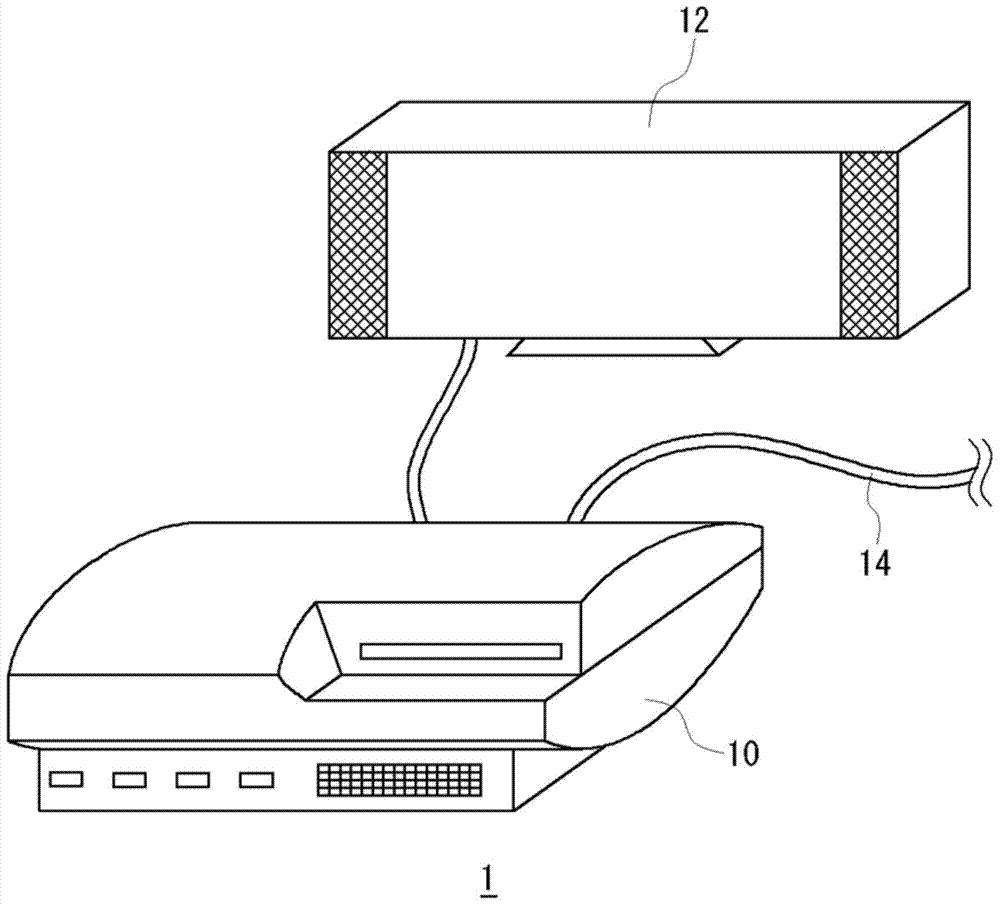

[0036] According to the present embodiment, when a moving image is displayed, the display area can be moved in response to a user's request to move the viewpoint. Moving the viewpoint includes moving it closer to or away from the image plane during playback, with the moving image enlarged or reduced accordingly. In such an embodiment, the wider the variable range of resolution and the larger the image size, the more difficult it becomes to display the requested area smoothly and responsively in response to the user's operation input.

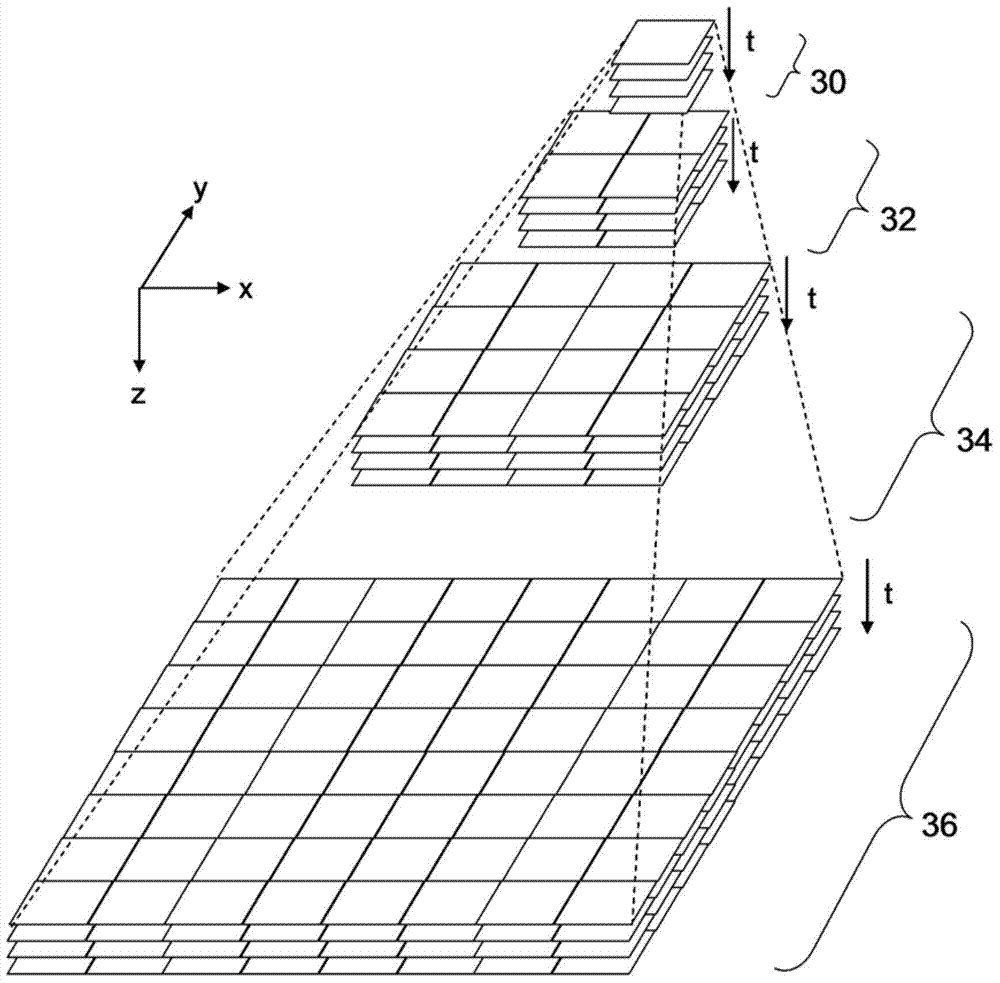

[0037] Therefore, according to the present embodiment, data of a moving image to be displayed is configured in a hierarchical structure in which frames of a moving image are expressed with a plurality of resolutions and arranged in layers in order of resolution. Furthermore, a moving image stream is formed for each block obtained by spatially dividing a frame ...
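The layered, block-divided layout described in [0037] can be illustrated with a minimal Python sketch. It is not the patent's implementation. The names `Layer`, `build_layers`, and `tiles_for_region`, the 256-pixel block size, and the assumption that each layer halves the resolution of the next are all hypothetical choices made for illustration; the source only states that frames are expressed at a plurality of resolutions, arranged in order of resolution, and spatially divided into blocks that each form a moving image stream.

```python
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class Layer:
    level: int    # 0 is the lowest resolution (hypothetical numbering)
    width: int    # frame width in pixels at this layer
    height: int   # frame height in pixels at this layer
    tiles_x: int  # number of blocks across
    tiles_y: int  # number of blocks down


def build_layers(full_width, full_height, num_layers, tile_size=256):
    """Describe each layer of the hierarchy, lowest resolution first.

    Assumption: each layer has half the resolution of the layer above it.
    """
    layers = []
    for level in range(num_layers):
        shrink = 2 ** (num_layers - 1 - level)
        width = max(1, full_width // shrink)
        height = max(1, full_height // shrink)
        layers.append(Layer(level, width, height,
                            ceil(width / tile_size),
                            ceil(height / tile_size)))
    return layers


def tiles_for_region(layer, left, top, right, bottom, tile_size=256):
    """Return (column, row) for every block overlapping a display region.

    The region is given in the layer's own pixel coordinates, with
    right and bottom exclusive. Each returned block would correspond
    to one per-block moving image stream to fetch and decode.
    """
    left, top = max(0, left), max(0, top)
    right, bottom = min(layer.width, right), min(layer.height, bottom)
    if left >= right or top >= bottom:
        return []
    first_col, last_col = left // tile_size, (right - 1) // tile_size
    first_row, last_row = top // tile_size, (bottom - 1) // tile_size
    return [(col, row)
            for row in range(first_row, last_row + 1)
            for col in range(first_col, last_col + 1)]


layers = build_layers(1024, 512, num_layers=3)
visible = tiles_for_region(layers[2], 200, 0, 600, 300)
```

Under these assumptions, a viewer needs only the streams for the blocks listed in `visible` rather than the whole frame at full resolution, which is the kind of saving that makes viewpoint movement over large images more tractable.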
