Robot control method based on a steady-state visual evoked brain-computer interface

Steady-state visual evoked brain-computer interface technology, applied in the field of robotics

Embodiment Construction

[0072] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

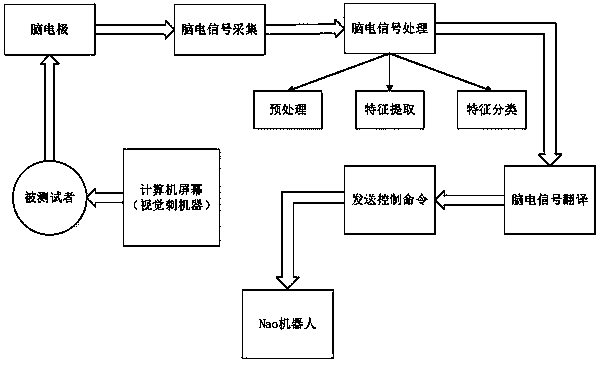

[0073] The flow chart of the method in this embodiment is shown in Figure 1. The method includes the following steps:

[0074] 1. Subjects are required to have normal or corrected-to-normal vision. Each subject sits in a comfortable chair approximately 65 cm from the computer monitor. Electrodes are placed according to the international 10-20 system electrode placement standard. The recording electrodes are placed at P3, PZ, P4, PO3, POZ, PO4, O1, OZ, and O2 in the occipital area of the subject's head. The ear is used as the site of the reference electrode, and a ground electrode is also attached. Conductive paste is injected into each recording electrode to keep the electrode impedance below 5 kΩ, so that the recorded signals, and therefore the experiment, are more accurate.

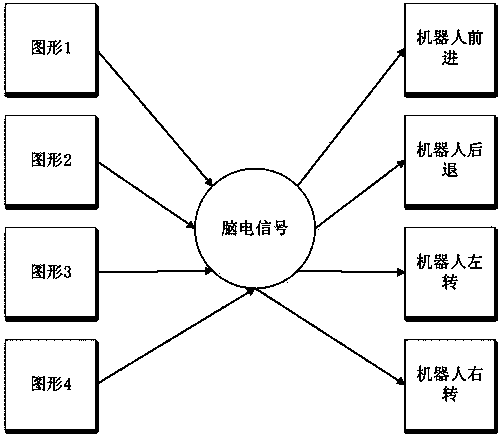
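The electrode setup in step 1 can be expressed as a small pre-recording check. The following is only a minimal sketch under stated assumptions: the function name `channels_above_limit`, the dictionary layout of the impedance readings, and the idea of running such a check in software are illustrative and are not described in the patent. Only the nine channel names and the 5 kΩ limit come from the text.

```python
# Illustrative sketch only: the helper name and data layout are assumptions,
# not part of the patented method. Channel names and the 5 kOhm limit are
# taken from step 1 of the embodiment.

CHANNELS = ["P3", "PZ", "P4", "PO3", "POZ", "PO4", "O1", "OZ", "O2"]
MAX_IMPEDANCE_KOHM = 5.0  # impedance must remain below this value


def channels_above_limit(impedances_kohm, limit_kohm=MAX_IMPEDANCE_KOHM):
    """Return the channels whose reading is missing or not below the limit.

    impedances_kohm: dict mapping channel name -> measured impedance in kOhm
    (a hypothetical format; real amplifiers report impedance in their own way).
    """
    bad = []
    for channel in CHANNELS:
        value = impedances_kohm.get(channel)
        # A missing reading is treated as a failure, since it cannot be verified.
        if value is None or value >= limit_kohm:
            bad.append(channel)
    return bad


if __name__ == "__main__":
    # Made-up readings for demonstration; OZ is too high and O2 is missing.
    readings = {"P3": 3.2, "PZ": 2.8, "P4": 4.1, "PO3": 3.9, "POZ": 2.5,
                "PO4": 4.9, "O1": 3.0, "OZ": 6.4}
    print(channels_above_limit(readings))
```

A non-empty result would suggest adding more conductive paste to the listed electrodes before starting the recording.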

[0075] 2. Presentation of stimulus paradigm. Use the Psyc...
