Adaptive Color Image Segmentation Method Based on Binocular Parallax and Active Contour

A technology based on binocular parallax and active contours, applied in image analysis, image data processing, instruments, etc. It addresses three problems. The initial contour position cannot be set accurately and adaptively, which degrades the segmentation result, and binocular color images are not handled well. The method achieves a fast evolution rate, a reduced number of iterations, and improved accuracy.

Examples

Specific Embodiment 1

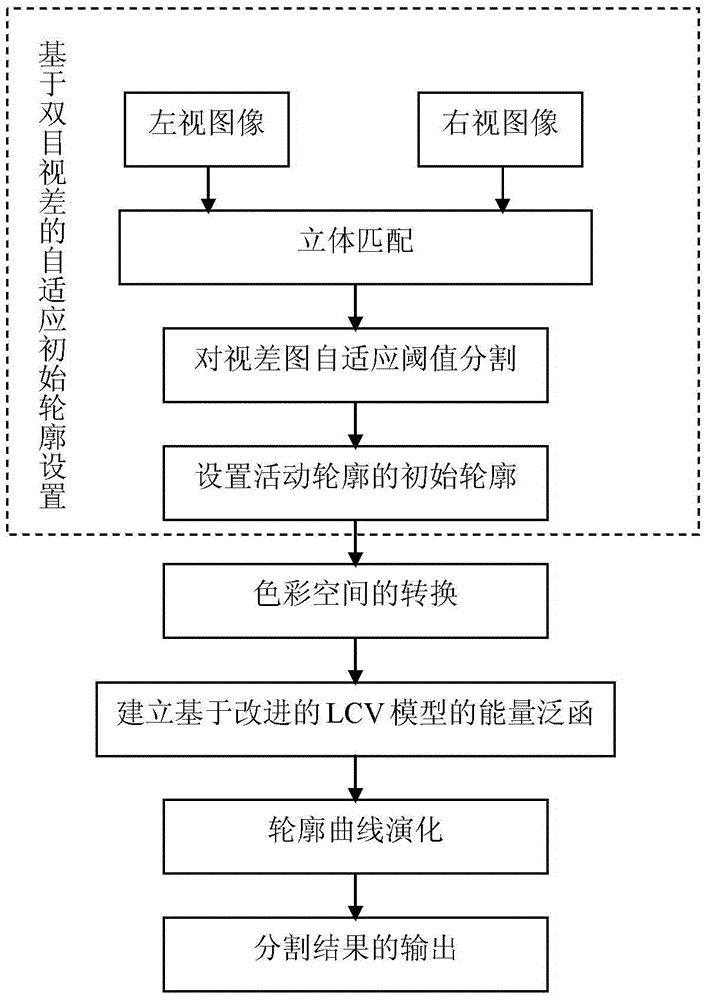

[0020] Specific Embodiment 1: The adaptive color image segmentation method based on binocular parallax and active contour described in this embodiment is implemented according to the following steps:

[0021] Step 1: adaptive initial contour setting based on binocular parallax;

[0022] Step 2: color space conversion;

[0023] Step 3: establishing an energy functional based on the improved LCV model;

[0024] Step 4: evolution of the contour curve;

[0025] Step 5: output of the segmentation result. This embodiment can be understood with reference to Figure 1.

Specific Embodiment 2

[0026] Specific Embodiment 2: This embodiment differs from Specific Embodiment 1 in that the initial contour setting in step 1 is realized through the following steps:

[0027] Step 1 (1): take the left-view image of the binocular stereo pair as the target image and the right-view image as the reference image. Then obtain the disparity map of the left-view image with an adaptive weighted stereo matching algorithm;
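The source names only "an adaptive weighted stereo matching algorithm". The sketch below is therefore a minimal illustration that assumes an adaptive support-weight scheme in the style of Yoon and Kweon, with several simplifications:

- Window pixels are weighted by color similarity and spatial proximity to the window center.
- Weights from both views are multiplied.
- A truncated absolute-difference cost is aggregated, and the disparity is chosen by winner-take-all.

The function names and the parameters `radius`, `gamma_c`, `gamma_p` and `trunc` are hypothetical. Color distance is measured in RGB rather than in any specific color space the patent might use.

```python
import numpy as np


def support_weights(patch, center, dist, gamma_c, gamma_p):
    """Yoon-Kweon style weights: similar-colored, nearby pixels count more."""
    dc = np.linalg.norm(patch - center, axis=-1)  # RGB distance to window center
    return np.exp(-(dc / gamma_c + dist / gamma_p))


def adaptive_weight_disparity(left, right, max_disp, radius=3,
                              gamma_c=7.0, gamma_p=36.0, trunc=40.0):
    """Left-view disparity map via adaptive support-weight aggregation + WTA.

    left, right: (H, W, 3) rectified color images; left is the target view.
    Parameter names and defaults are illustrative assumptions, not the patent's.
    """
    left = np.asarray(left, dtype=np.float64)
    right = np.asarray(right, dtype=np.float64)
    h, w, _ = left.shape
    r = radius
    pad = ((r, r), (r, r), (0, 0))
    lp = np.pad(left, pad, mode="edge")
    rp = np.pad(right, pad, mode="edge")
    yy, xx = np.mgrid[-r:r + 1, -r:r + 1]
    dist = np.hypot(yy, xx)  # spatial distance of each window pixel to the center
    disp = np.zeros((h, w), dtype=np.int64)
    for y in range(h):
        for x in range(w):
            pl = lp[y:y + 2 * r + 1, x:x + 2 * r + 1]
            wl = support_weights(pl, left[y, x], dist, gamma_c, gamma_p)
            best_cost, best_d = np.inf, 0
            # A left pixel at column x is matched to the right pixel at column x - d.
            for d in range(min(max_disp, x) + 1):
                pr = rp[y:y + 2 * r + 1, x - d:x - d + 2 * r + 1]
                wr = support_weights(pr, right[y, x - d], dist, gamma_c, gamma_p)
                e = np.minimum(np.abs(pl - pr).sum(axis=-1), trunc)  # truncated AD cost
                ww = wl * wr
                cost = (ww * e).sum() / ww.sum()  # normalized weighted aggregation
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y, x] = best_d  # winner-take-all
    return disp
```

As a sanity check, take a random-texture pair in which the right view is the left view shifted by three columns. The sketch then returns a disparity of 3 at pixels whose windows stay clear of the left and right image borders. The nested loops are written for readability, not speed.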

[0028] Step 1 (2): perform threshold segmentation on the disparity map to extract the target object region of interest, then apply median filtering to suppress noise in the disparity map;
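A minimal sketch of this step follows. It assumes that the target object is the nearest object in the scene and therefore has the largest disparities. Disparities at or above a threshold are kept, and a median filter then removes isolated spikes and fills small holes. The source does not say how the threshold is chosen, so `threshold` here is a hypothetical hand-set parameter; a data-driven rule such as Otsu's method would be one alternative. The names `median_filter` and `extract_roi` are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(img, k=3):
    """k x k median filter (k odd) with edge replication at the borders."""
    r = k // 2
    padded = np.pad(img, r, mode="edge")
    windows = sliding_window_view(padded, (k, k))  # shape (H, W, k, k)
    return np.median(windows, axis=(-2, -1))


def extract_roi(disp, threshold, k=3):
    """Threshold the disparity map, then median-filter it to suppress noise.

    Assumes the target has the largest disparities; `threshold` is a
    hypothetical, hand-chosen parameter. Returns the filtered ROI disparity
    (0 outside the ROI) and its boolean mask.
    """
    roi = np.where(disp >= threshold, disp, 0).astype(np.float64)
    roi = median_filter(roi, k)
    return roi, roi > 0
```

Filtering the thresholded map, as the step order suggests, means a lone background pixel above the threshold is discarded. A one-pixel hole inside the object is filled from its neighbors.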

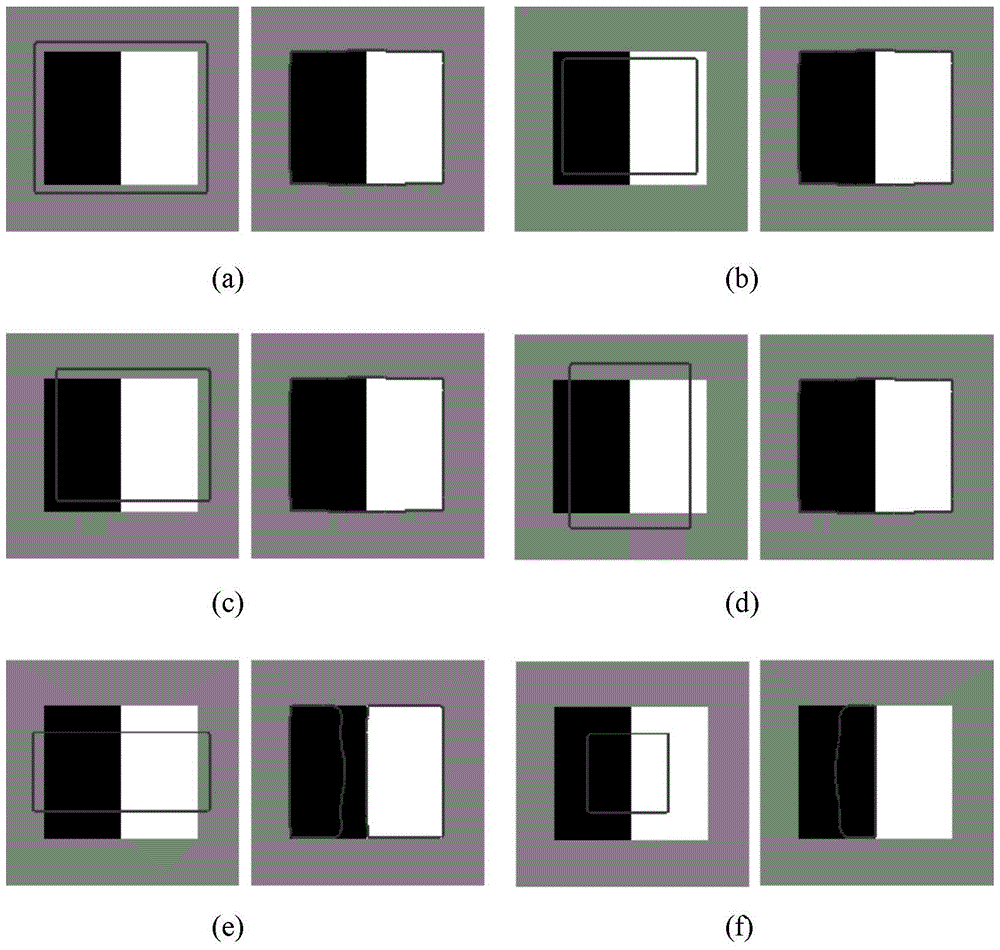

[0029] Step 1 (3): set the boundary of the obtained target region of interest as the initial contour of the active contour model. The specific process is as follows: select the target object region; since the surface of the object is generally smooth, the projections of the points on the object's surface onto the image are con...
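The description of this step is truncated in the source, so the exact construction is not known. The sketch below shows one common way to turn a region mask into an initial contour for a level-set active contour model, under that assumption. It extracts the boundary pixels of the region and initializes a binary-step level-set function φ₀. This function is +c inside the region and −c outside, so its zero level lies on the region boundary. The names `region_boundary`, `init_level_set` and the constant `c` are hypothetical.

```python
import numpy as np


def region_boundary(mask):
    """Pixels inside the mask that have at least one 4-neighbor outside it."""
    m = np.pad(mask.astype(bool), 1, mode="constant", constant_values=False)
    inner = m[1:-1, 1:-1]
    # A pixel is interior if its up, down, left and right neighbors are all in the mask.
    interior = inner & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    return inner & ~interior


def init_level_set(mask, c=2.0):
    """Binary-step level set: +c inside the ROI, -c outside (assumed convention)."""
    return np.where(mask.astype(bool), c, -c)
```

`np.argwhere(region_boundary(mask))` gives the boundary as a list of (row, column) points if an explicit contour is needed instead of a level-set function.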

Specific Embodiment 3

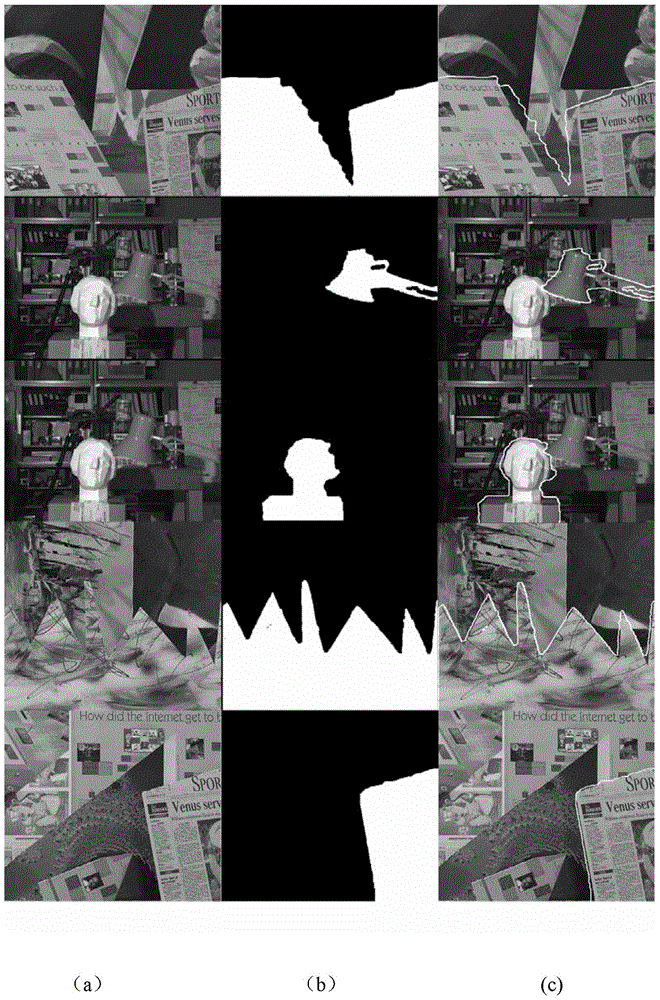

[0032] Specific Embodiment 3: This embodiment differs from Specific Embodiment 1 or 2 in that the color space conversion in step 2 is realized by the following RGB-to-YCbCr conversion formulas:

[0033] Y=0.299R+0.587G+0.114B

[0034] Cb=0.564(B-Y)

[0035] Cr=0.713(R-Y), where Y, Cb, and Cr denote the luminance, blue chroma, and red chroma components of the YCbCr color space, and R, G, and B denote the red, green, and blue components of the RGB color space. This embodiment can be understood with reference to Figure 4; the other steps and parameters are the same as in Specific Embodiment 1 or 2.
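The three formulas above translate directly into a vectorized conversion. In this minimal sketch the function name `rgb_to_ycbcr` is illustrative. As in the formulas, Cb and Cr are centred on zero, with no +128 offset of the kind used in 8-bit digital encodings.

```python
import numpy as np


def rgb_to_ycbcr(rgb):
    """Convert an (..., 3) RGB array to YCbCr using the formulas of [0033]-[0035]."""
    rgb = np.asarray(rgb, dtype=np.float64)  # avoid uint8 overflow in the arithmetic
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance
    cb = 0.564 * (b - y)                   # blue chroma
    cr = 0.713 * (r - y)                   # red chroma
    return np.stack([y, cb, cr], axis=-1)
```

For example, pure red (255, 0, 0) maps to Y ≈ 76.245, Cb ≈ −43.002 and Cr ≈ 127.452, while white has zero chroma.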
