Multi-video real-time panoramic fusion splicing method based on CUDA

A CUDA-based technique for real-time panoramic fusion and splicing of multiple video streams, achieving a notable monitoring effect and broad application prospects.

Embodiment Construction

[0038] The implementation process of the present invention will be described below in conjunction with the accompanying drawings, taking four video sources output by four cameras (Camera1, Camera2, Camera3, Camera4) as an example.

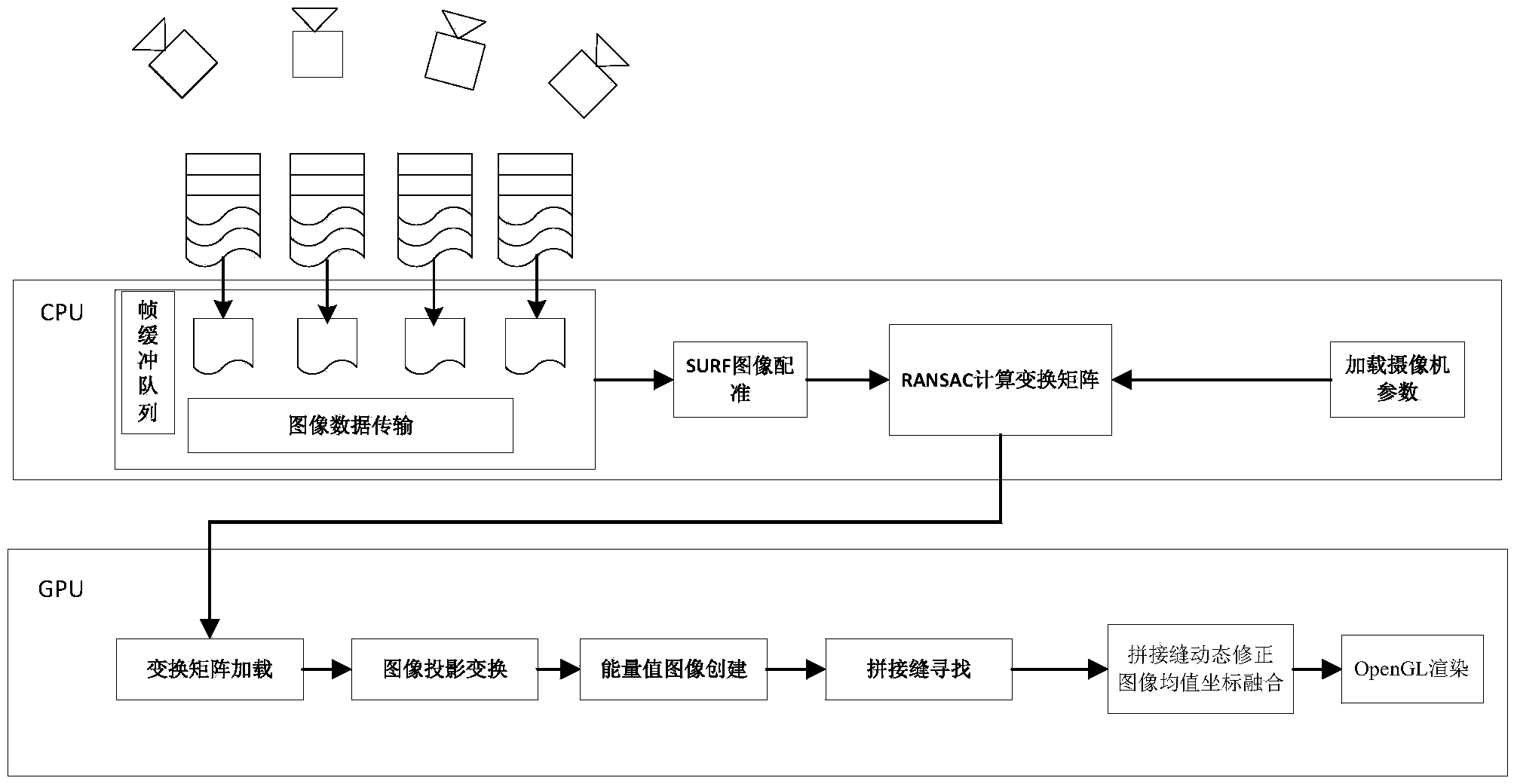

[0039] As shown in Figure 1, the implementation process of the present invention is divided into two main stages: system initialization and real-time video frame fusion:

[0040] 1. System initialization stage:

[0041] (1) Obtain the first frame of each video channel, then perform registration and cylindrical projection transformation to obtain the overall perspective transformation model;

[0042] (2) Apply the perspective transformation to the first frame of each video according to the perspective transformation model, and at the same time obtain the perspective transformation masks and the overlap-region mask maps between adjacent video sources (Camera1-2, Camera2-3, Camera3-4);

[0043] (3) use the dynamic progra...
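
Two ideas from steps (1) and (2) can be illustrated with a minimal sketch. The text does not give formulas, so this assumes the standard forward cylindrical mapping with a hypothetical focal length `focal` (in pixels) and treats the overlap-region mask of two adjacent cameras as the pixel-wise AND of their warped coverage masks. The function names and the toy mask values are illustrative only, and plain Python stands in for the per-pixel work that a CUDA kernel would do in parallel.

```python
import math

def cylindrical_project(x, y, width, height, focal):
    """Map pixel (x, y) of a planar image onto a cylinder of radius `focal`.

    Assumption: the optical center is the image center and `focal` is the
    focal length in pixels; neither value is specified in the source text.
    """
    cx, cy = width / 2.0, height / 2.0
    dx, dy = x - cx, y - cy
    theta = math.atan2(dx, focal)        # angle around the cylinder axis
    h = dy / math.hypot(dx, focal)       # normalized height on the cylinder
    return focal * theta + cx, focal * h + cy

def overlap_mask(mask_a, mask_b):
    """Return a mask that is 1 only where both warped masks cover a pixel."""
    return [[a & b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(mask_a, mask_b)]

# Toy 2x5 coverage masks for two adjacent cameras in panorama coordinates
# (hypothetical values; real masks come from warping each first frame).
cam1 = [[1, 1, 1, 0, 0], [1, 1, 1, 0, 0]]
cam2 = [[0, 0, 1, 1, 1], [0, 0, 1, 1, 1]]
overlap_12 = overlap_mask(cam1, cam2)

# The image center is a fixed point of the cylindrical mapping.
center = cylindrical_project(320, 240, 640, 480, 500.0)
```

In this sketch only the middle column is covered by both cameras, so `overlap_12` marks that column. Because each output pixel depends only on its own coordinates, both functions map naturally onto one GPU thread per pixel.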
