Cache management method and device



Examples

Embodiment 1

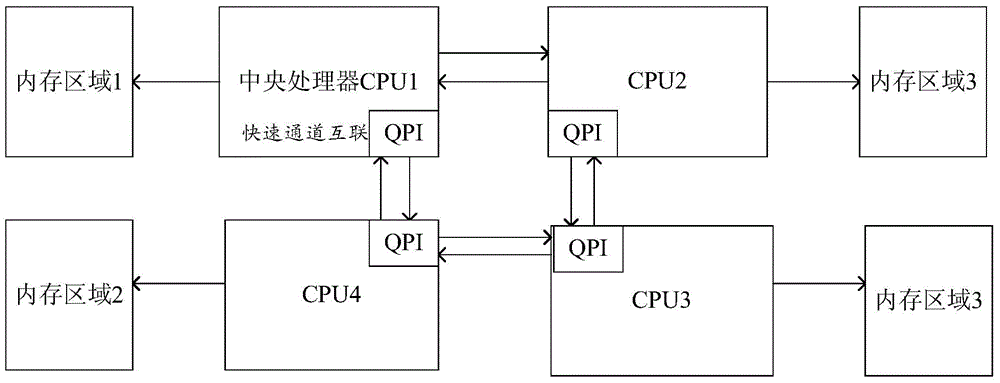

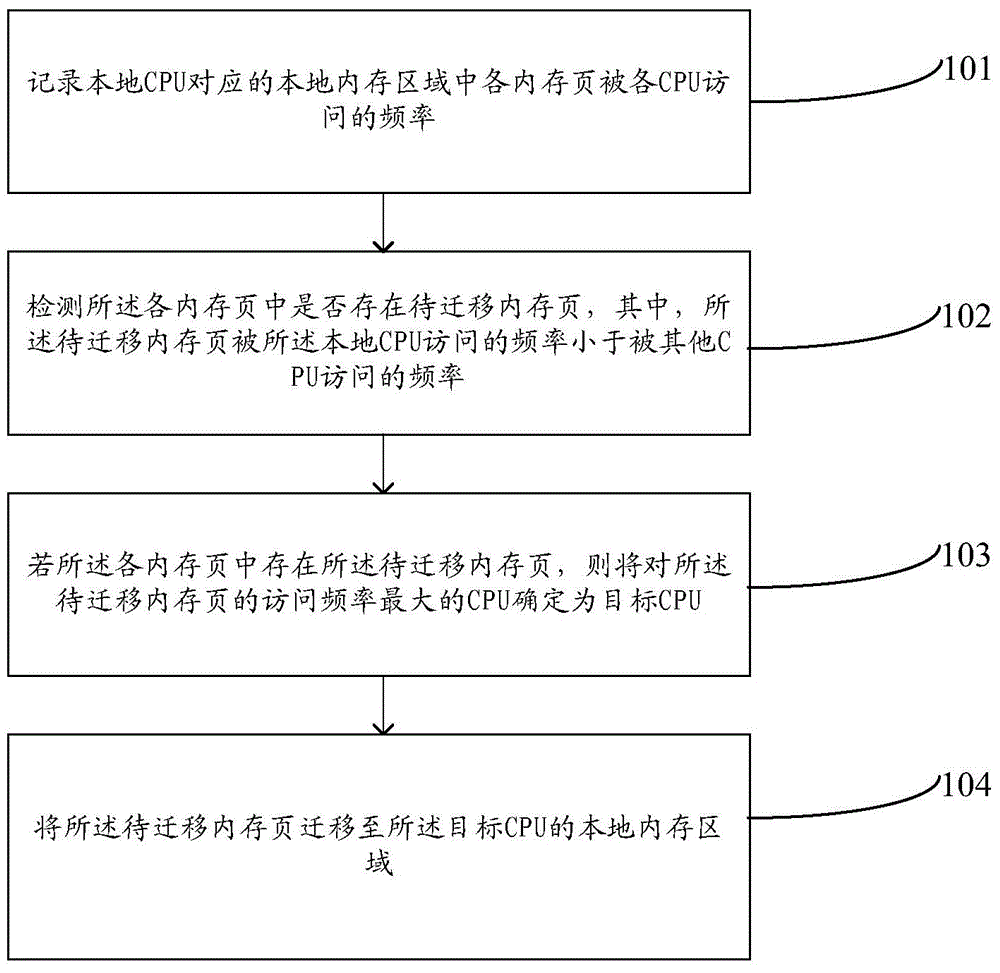

[0072] An embodiment of the present invention provides a cache management method applied to a device with a NUMA architecture. The device includes at least two central processing units (CPUs) and a data buffer. The data buffer is divided into at least two local memory areas, and each CPU corresponds to one local memory area. As shown in Figure 2, for each CPU the method comprises the following steps:

[0073] 101. Record the frequency at which each memory page in the local memory area corresponding to the local CPU is accessed by each CPU.

[0074] In the method provided by this embodiment, any CPU in the server (the cache management device) can act as the execution subject, and the CPU currently acting as the execution subject is called the local CPU. Each CPU records how frequently each memory page in its local memory area is accessed by each CPU, so that every CPU can monitor the access frequency of each of its memory pages,...
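A minimal sketch of the per-CPU record kept in step 101, assuming a plain in-memory table that maps each page number in the local memory area to one access counter per CPU. The class name `AccessFrequencyTable`, its methods, and the idea of turning counts into frequencies by clearing the table at the end of each sampling window are all hypothetical illustrations, not taken from the patent text.

```python
from collections import defaultdict


class AccessFrequencyTable:
    """Hypothetical record kept by one local CPU.

    Maps each page number in the local CPU's local memory area to a list of
    access counts, indexed by the CPU that performed the access.
    """

    def __init__(self, local_cpu: int, num_cpus: int, local_pages: range) -> None:
        self.local_cpu = local_cpu
        self.num_cpus = num_cpus
        self.local_pages = local_pages  # page numbers held in this CPU's local memory area
        self.counts: dict = defaultdict(lambda: [0] * num_cpus)

    def record_access(self, page: int, accessing_cpu: int) -> None:
        # Only pages in this CPU's own local memory area are tracked here.
        if page not in self.local_pages:
            raise KeyError(f"page {page} is not in CPU {self.local_cpu}'s local memory area")
        if not 0 <= accessing_cpu < self.num_cpus:
            raise ValueError(f"unknown CPU {accessing_cpu}")
        self.counts[page][accessing_cpu] += 1

    def frequency(self, page: int, accessing_cpu: int) -> int:
        # dict.get does not trigger the defaultdict factory, so reads add no entries.
        counts = self.counts.get(page)
        return counts[accessing_cpu] if counts else 0

    def snapshot_and_reset(self) -> dict:
        # Assumed windowing: return this window's counts, then start a new window.
        snap = {page: list(c) for page, c in self.counts.items()}
        self.counts.clear()
        return snap
```

For example, after CPU 0 accesses page 3 once and CPU 1 accesses it twice, `frequency(3, 1)` returns 2 and `snapshot_and_reset()` returns `{3: [1, 2]}`.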

Embodiment 2

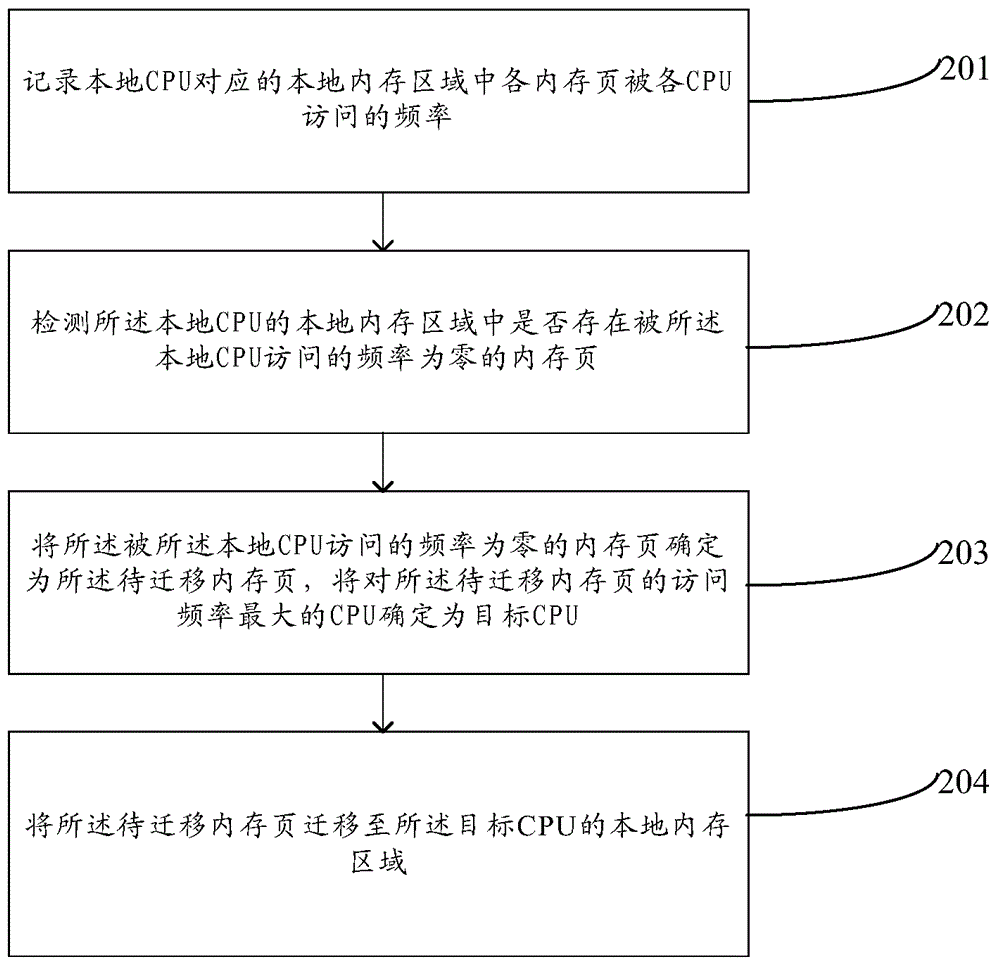

[0118] An embodiment of the present invention provides a cache management method applied to a device with a non-uniform memory access (NUMA) architecture. The NUMA device includes at least two central processing units (CPUs) and a data buffer. The data buffer contains at least one local memory area, and each CPU corresponds to one local memory area. As shown in Figure 3, for each CPU, with that CPU acting as the local CPU and execution subject, the method includes the following steps:

[0119] 201. Record the frequency at which each memory page in the local memory area corresponding to the local CPU is accessed by each CPU.

[0120] It should be noted that when the method provided by the present invention is executed by a CPU, that CPU is called the local CPU; that is, whichever CPU acts as the execution subject is the local CPU.
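Because every CPU plays the local-CPU role for its own area, each access ends up counted by the CPU whose local memory area holds the page. The sketch below shows that routing under stated assumptions: the data buffer is modeled as page numbers split evenly into contiguous ranges, one per CPU, and the names `partition_buffer` and `NumaAccessTracker` are hypothetical. The patent text does not specify a partitioning policy.

```python
def partition_buffer(total_pages: int, num_cpus: int) -> list:
    """Split page numbers 0..total_pages-1 into one local memory area per CPU.

    The even, contiguous split is an assumption for illustration only.
    """
    if num_cpus < 2:
        raise ValueError("this model assumes at least two CPUs")
    base, extra = divmod(total_pages, num_cpus)
    areas = []
    start = 0
    for cpu in range(num_cpus):
        size = base + (1 if cpu < extra else 0)
        areas.append(range(start, start + size))
        start += size
    return areas


class NumaAccessTracker:
    """Each CPU acts as the local CPU for its own area and keeps that area's counters."""

    def __init__(self, areas: list) -> None:
        self.areas = areas
        n = len(areas)
        # tables[local_cpu][page] -> access counts indexed by the accessing CPU
        self.tables = [{page: [0] * n for page in area} for area in areas]

    def local_cpu_of(self, page: int) -> int:
        for cpu, area in enumerate(self.areas):
            if page in area:
                return cpu
        raise KeyError(f"page {page} is outside the data buffer")

    def access(self, page: int, accessing_cpu: int) -> None:
        # The access is counted by the CPU whose local memory area holds the page.
        self.tables[self.local_cpu_of(page)][page][accessing_cpu] += 1
```

With 10 pages and 3 CPUs, `partition_buffer(10, 3)` yields `range(0, 4)`, `range(4, 7)` and `range(7, 10)`, so an access to page 5 by any CPU is recorded in CPU 1's table.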

[0121] With reference to Figure 1 above, it can ...

Embodiment 3

[0147] An embodiment of the present invention provides a cache management method. As shown in Figure 4, for each CPU in the server, the CPU acting as the execution subject is called the local CPU, and the method includes the following steps:

[0148] 301. The local CPU uses a local index and a global index to record the correspondence between the storage address of each memory page in the local memory area and the number of each memory page.

[0149] Specifically, the local CPU uses the local index to record, for each memory page in the local memory area, the correspondence between the page's storage address within the local memory area and the page's number. It uses the global index to record the correspondence between the numbers of memory pages and their storage addresses in cache areas other than the local CPU's local memory area.
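The two indexes of step 301 can be pictured as two maps keyed by page number. This is a minimal sketch, assuming that a global-index entry stores the owning CPU together with the address in that CPU's cache area, and that lookups consult the local index before the global one; the class `PageIndexes` and its methods are hypothetical, and both assumptions go beyond the patent text.

```python
from typing import Dict, Optional, Tuple


class PageIndexes:
    """Hypothetical local/global index pair kept by one local CPU."""

    def __init__(self, local_cpu: int) -> None:
        self.local_cpu = local_cpu
        # Local index: page number -> storage address inside the local memory area.
        self.local_index: Dict[int, int] = {}
        # Global index: page number -> (CPU owning the cache area, storage address there),
        # for pages held in cache areas other than this CPU's local memory area.
        self.global_index: Dict[int, Tuple[int, int]] = {}

    def record_local(self, page_no: int, address: int) -> None:
        self.local_index[page_no] = address

    def record_remote(self, page_no: int, owner_cpu: int, address: int) -> None:
        if owner_cpu == self.local_cpu:
            raise ValueError("global-index entries must point outside the local memory area")
        self.global_index[page_no] = (owner_cpu, address)

    def lookup(self, page_no: int) -> Optional[Tuple[int, int]]:
        # Assumed lookup order: local index first, then global index.
        if page_no in self.local_index:
            return (self.local_cpu, self.local_index[page_no])
        return self.global_index.get(page_no)
```

Keeping the two maps separate lets the local CPU resolve pages in its own area without consulting entries that describe other CPUs' cache areas.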

[0150] In implementation, th...
