Cross-media retrieval method based on uniform sparse representation

A cross-media retrieval technique based on sparse representation, applicable to network data retrieval, other database retrieval, and network data indexing. It addresses the problem that existing approaches do not account for sparsity or for noise and errors in cross-media data. The stated effects are reduced cross-media data noise, stronger analysis and mining capability, higher cross-media retrieval accuracy, and improved effectiveness.



Detailed Description of Embodiments

[0022] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

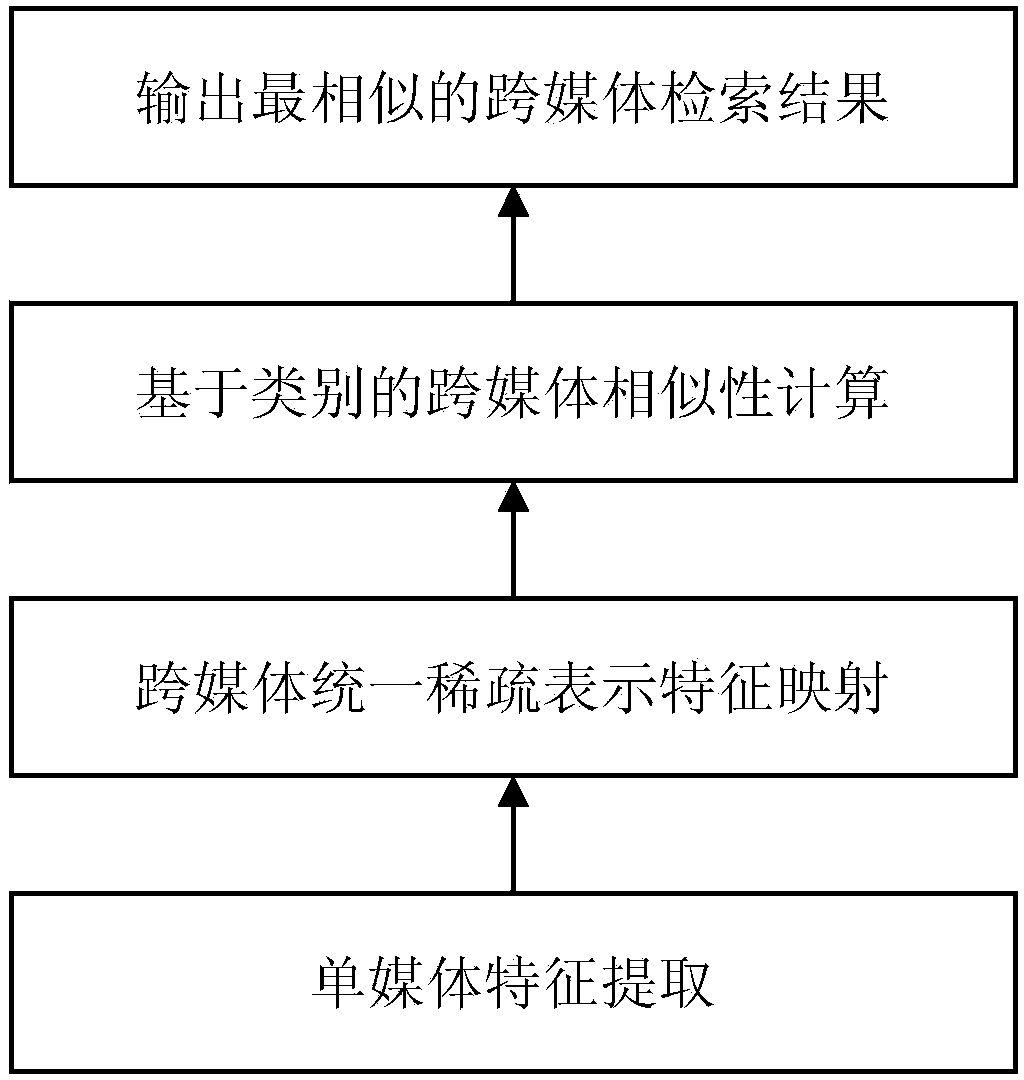

[0023] The flow of the cross-media retrieval method based on unified sparse representation of the present invention is shown in Figure 1. It comprises the following steps:

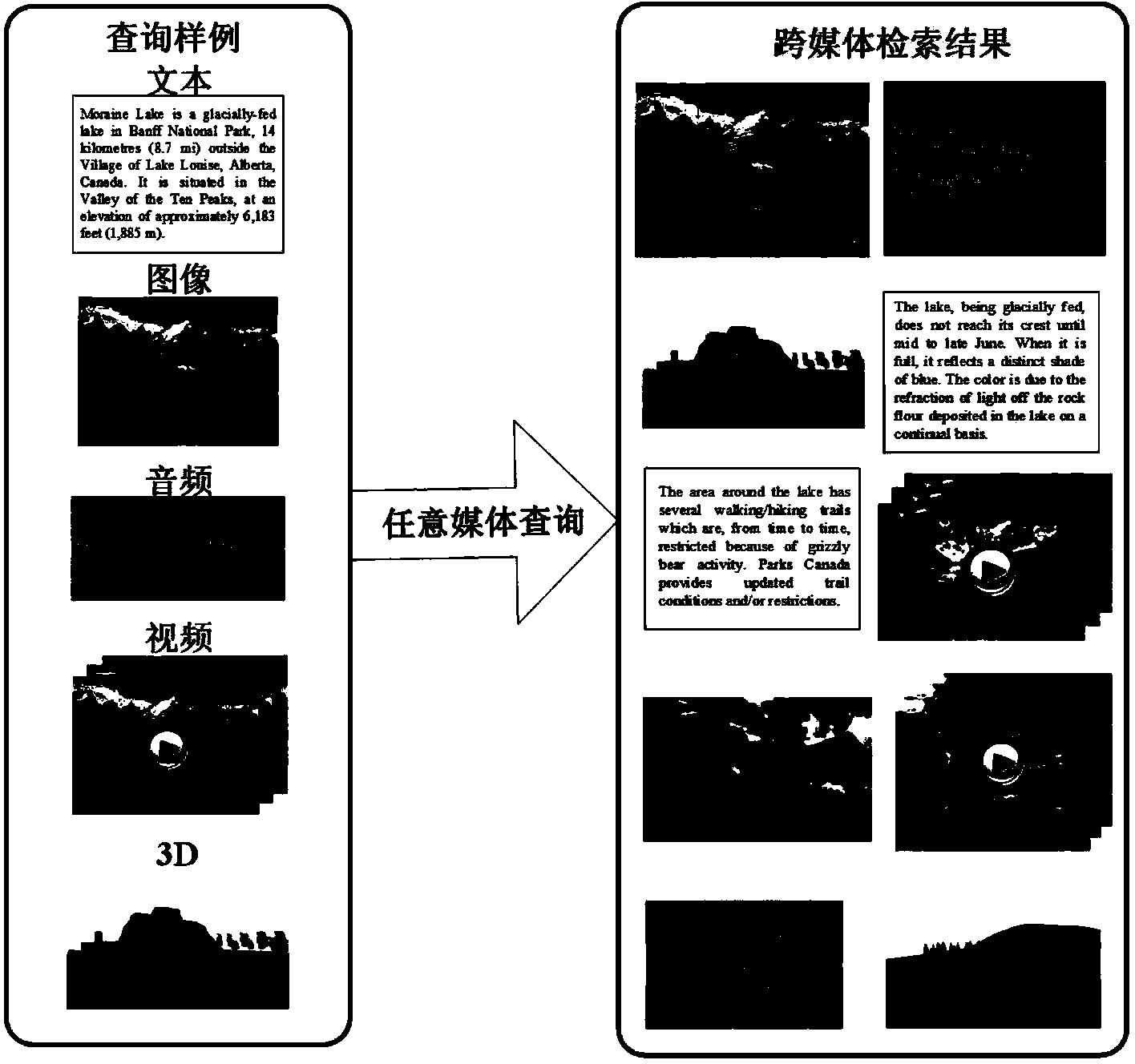

[0024] (1) Establish a cross-media database containing multiple media types, divide the database into a training set and a test set, and extract the feature vector of each media type data.
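As a rough illustration of the database split in step (1), the following Python sketch divides a toy cross-media database into a training set and a test set, media type by media type. The function name `split_database`, the 20% test fraction, the fixed seed, and the record layout are all assumptions made for this example; the source does not state a split ratio or a data format.

```python
import random

def split_database(items, test_fraction=0.2, seed=0):
    """Split (media_type, item_id) records into training and test sets.

    The split is done separately for each media type so that every type
    appears in both sets. test_fraction and seed are illustrative
    assumptions, not values taken from the patent text.
    """
    rng = random.Random(seed)
    by_type = {}
    for media_type, item_id in items:
        by_type.setdefault(media_type, []).append(item_id)

    train, test = [], []
    for media_type in sorted(by_type):
        ids = list(by_type[media_type])
        rng.shuffle(ids)
        n_test = int(round(len(ids) * test_fraction))
        test += [(media_type, i) for i in ids[:n_test]]
        train += [(media_type, i) for i in ids[n_test:]]
    return train, test

# Hypothetical toy database: 10 items for each of five media types.
media_types = ("text", "image", "video", "audio", "3d")
database = [(t, f"{t}_{k}") for t in media_types for k in range(10)]
train_set, test_set = split_database(database)
print(len(train_set), len(test_set))
```

Splitting per media type is one possible design choice; it keeps the proportion of each type the same in both sets, which matters when the test set is later used to query across media types.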

[0025] In this embodiment, the multiple media types are five media types, including text, image, video, audio and 3D.

[0026] For text data, extract the latent Dirichlet allocation (LDA) feature vector; for image data, extract the bag-of-words feature vector; for video data, extract the bag-of-words feature vector; for audio data, extract the Mel-frequency cepstral coefficient (MFCC) feature vector; for 3D data, extract the light field feature vector. The method of...
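The bag-of-words feature vectors named above for image and video data can be illustrated with a minimal sketch. It assumes that local descriptors have already been extracted and that a codebook of visual words is available. The source specifies neither the descriptor type nor how the codebook is built, so `bag_of_words_histogram`, the toy codebook, and the toy descriptors below are hypothetical.

```python
def bag_of_words_histogram(descriptors, codebook):
    """Quantize local descriptors against a codebook.

    Each descriptor is assigned to its nearest codeword (squared
    Euclidean distance), and the normalized count of assignments per
    codeword is returned as the feature vector.
    """
    counts = [0] * len(codebook)
    for d in descriptors:
        nearest = min(
            range(len(codebook)),
            key=lambda k: sum((a - b) ** 2 for a, b in zip(d, codebook[k])),
        )
        counts[nearest] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(codebook)
    return [c / total for c in counts]

# Hypothetical 2-D codebook with three visual words and four descriptors.
codebook = [(0.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
descriptors = [(0.1, 0.0), (0.9, 1.1), (0.8, 0.9), (0.1, 0.9)]
feature_vector = bag_of_words_histogram(descriptors, codebook)
print(feature_vector)  # [0.25, 0.5, 0.25]
```

The resulting vector has one entry per codeword, so every image or video in the database is described by a vector of the same length regardless of how many local descriptors it contains.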
