Indoor positioning method based on visual feature matching and shooting angle estimation

An indoor positioning technology based on visual features, applied in computing, surveying and mapping, navigation, and navigation computing tools. It addresses the large investment in equipment and infrastructure that indoor positioning otherwise requires, relaxes the requirements on positioning scenarios, and improves visual positioning accuracy.


Examples

Specific Embodiment 1

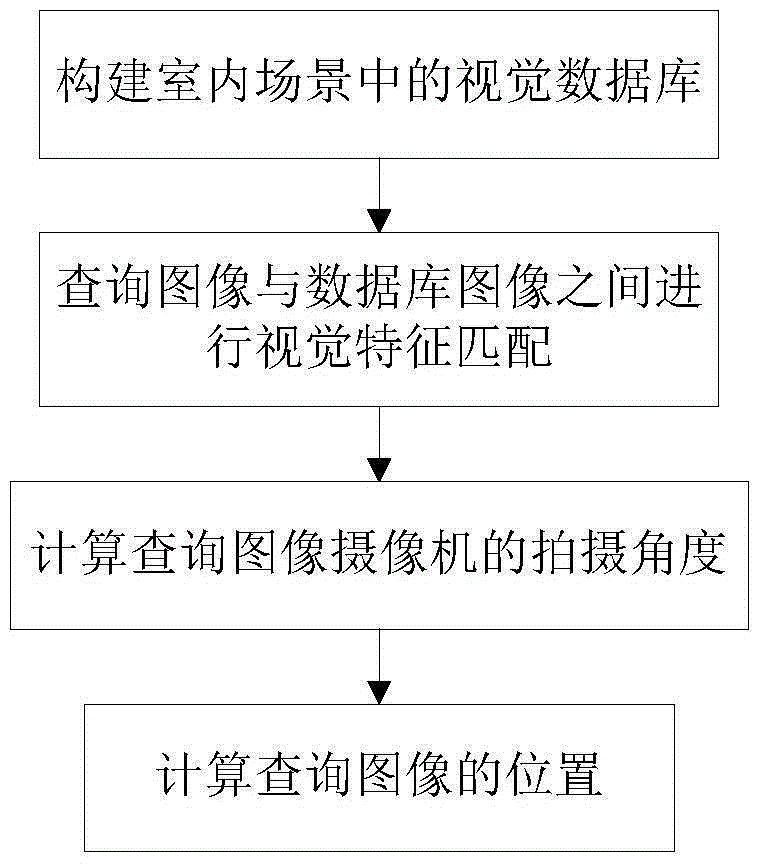

[0017] Specific embodiment 1: This embodiment is described with reference to Figure 1. An indoor positioning method based on visual feature matching and shooting angle estimation is carried out according to the following steps:

[0018] Step 1: Build a visual database for the indoor scene. The database includes the indoor visual features, the position coordinates of the visual features in the indoor coordinate system, and the position of the visual features in the camera coordinate system during acquisition; see Figure 2.

[0019] Step 2: Using the visual database obtained in Step 1, find the database images with the highest matching rate to the query images $P_1^Q$ and $P_2^Q$; see Figure 3.

[0020] Step 3: Calculate the rotation matrix $R_1$ corresponding to query image $P_1^Q$ and, for query image $P_2^Q$, the corresponding ...
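Steps 1 and 2 above can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the class names `VisualFeature` and `DatabaseImage`, the functions `matching_rate` and `best_match`, and the threshold `max_sq_dist` are all hypothetical. The source does not define the matching rate, so the sketch assumes it is the fraction of query descriptors whose nearest database descriptor lies within a distance threshold.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VisualFeature:
    """One database entry (hypothetical layout based on Step 1)."""
    descriptor: List[float]  # feature vector, e.g. produced by SURF
    indoor_xyz: Vec3         # position in the indoor coordinate system
    camera_xyz: Vec3         # position in the camera coordinate system at acquisition


@dataclass
class DatabaseImage:
    image_id: str
    features: List[VisualFeature]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two descriptors of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def matching_rate(query_descriptors: Sequence[Sequence[float]],
                  db_image: DatabaseImage,
                  max_sq_dist: float = 0.05) -> float:
    """Assumed definition: share of query descriptors with a close database match."""
    if not query_descriptors or not db_image.features:
        return 0.0
    matched = 0
    for q in query_descriptors:
        nearest = min(squared_distance(q, f.descriptor) for f in db_image.features)
        if nearest <= max_sq_dist:
            matched += 1
    return matched / len(query_descriptors)


def best_match(query_descriptors: Sequence[Sequence[float]],
               database: Sequence[DatabaseImage]) -> DatabaseImage:
    """Return the database image with the highest matching rate (Step 2).

    Raises ValueError if the database is empty.
    """
    return max(database, key=lambda img: matching_rate(query_descriptors, img))
```

In this sketch, `best_match` would be called once per query image, so $P_1^Q$ and $P_2^Q$ may each resolve to a different database image.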

Specific Embodiment 2

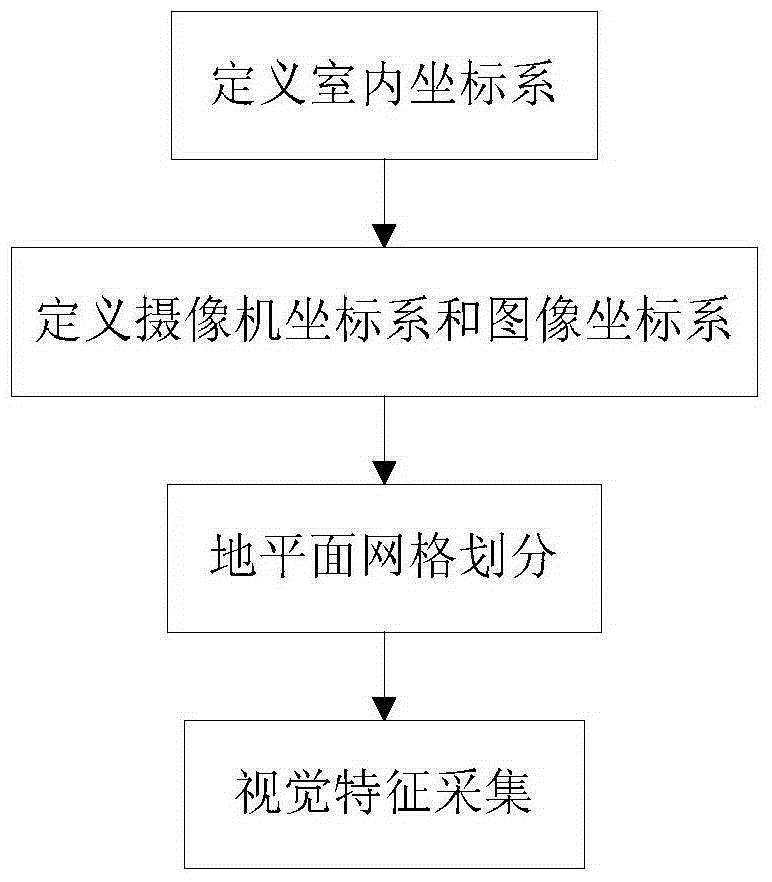

[0022] Specific embodiment 2: This embodiment differs from specific embodiment 1 in how Step 1 builds the visual database for the indoor scene, which includes the indoor visual features, the position coordinates of the visual features in the indoor coordinate system, and the position of the visual features in the camera coordinate system during acquisition. The specific process is:

[0023] Step 1.1: Define the indoor coordinate system;

[0024] In the indoor scene, define a three-dimensional Cartesian coordinate system $O_D X_D Y_D Z_D$, where the $Z_D$ axis points true north, the $X_D$ axis points due east, the $Y_D$ axis points downward, perpendicular to the indoor ground plane, and $O_D$ is the origin of the coordinate system $O_D X_D Y_D Z_D$.
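The axis convention above can be checked with a short sketch. It assumes, purely for illustration, that points are first available in an east-north-up (ENU) frame sharing the origin $O_D$; the ENU frame and the helper names `cross`, `dot`, and `enu_to_indoor` are not part of the source.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Indoor axes expressed in (east, north, up) components; ENU is an assumed helper frame.
X_D: Vec3 = (1.0, 0.0, 0.0)   # due east
Y_D: Vec3 = (0.0, 0.0, -1.0)  # downward, perpendicular to the ground plane
Z_D: Vec3 = (0.0, 1.0, 0.0)   # true north


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3-vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def enu_to_indoor(p_enu: Vec3) -> Vec3:
    """Project an ENU point onto the indoor axes X_D, Y_D, Z_D."""
    return (dot(p_enu, X_D), dot(p_enu, Y_D), dot(p_enu, Z_D))


# X_D x Y_D equals Z_D, so the east/down/north convention is right-handed.
is_right_handed = cross(X_D, Y_D) == Z_D
```

Under this convention a point 2 m east, 5 m north, and 1.5 m above the origin has a negative $Y_D$ coordinate, because $Y_D$ points toward the floor.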

[0025] Step 1.2: Define the camera coordinate system and the image coordinate system;

[0026]...

Specific Embodiment 3

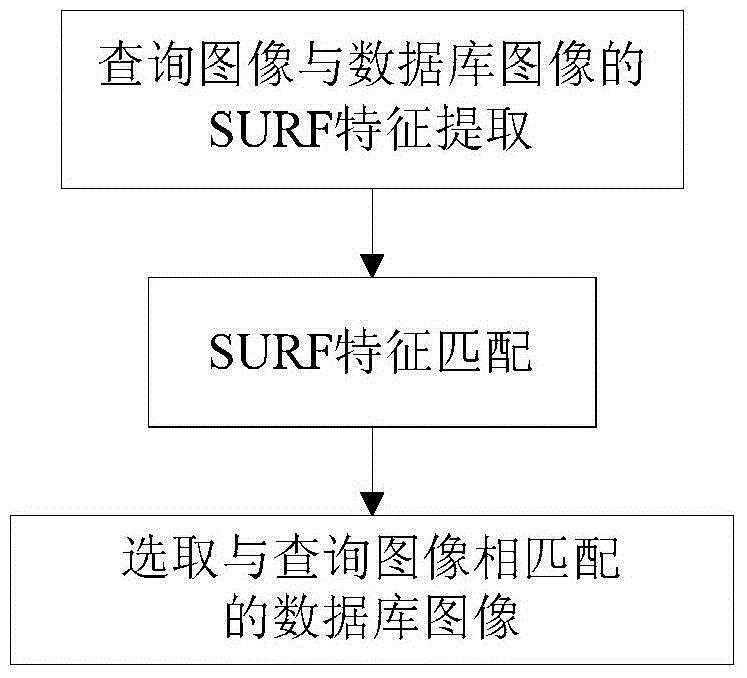

[0034] Specific embodiment 3: This embodiment differs from specific embodiment 1 or 2 in how Step 2 uses the visual database obtained in Step 1 to find the database images with the highest matching rate to the query images $P_1^Q$ and $P_2^Q$. The specific process is:

[0035] Step 2.1: Extract SURF features from the query images and the database images;

[0036] SURF (Speeded-Up Robust Features) is an accelerated robust feature algorithm. In this step, the input of the SURF algorithm is a database image, and the output is the feature vector of that database image. The user to be positioned uses the camera to acquire two images at the same position, yielding two query images containing different visual features, denoted $P_k^Q$, where the superscript $Q$ indicates that the image is a query image and the subscript $k$ is the index of the query image, with k=1, ...

