Behavior identification method based on AP cluster bag of words modeling

An action recognition method based on AP (affinity propagation) clustering, applied in character and pattern recognition, instruments, computer parts, and related fields. It addresses the low efficiency and low recognition rate of existing approaches and achieves reduced clustering time, a better clustering effect, and an improved behavior recognition rate.


Embodiment Construction

[0016] The present invention is further described below with reference to the drawings and embodiments.

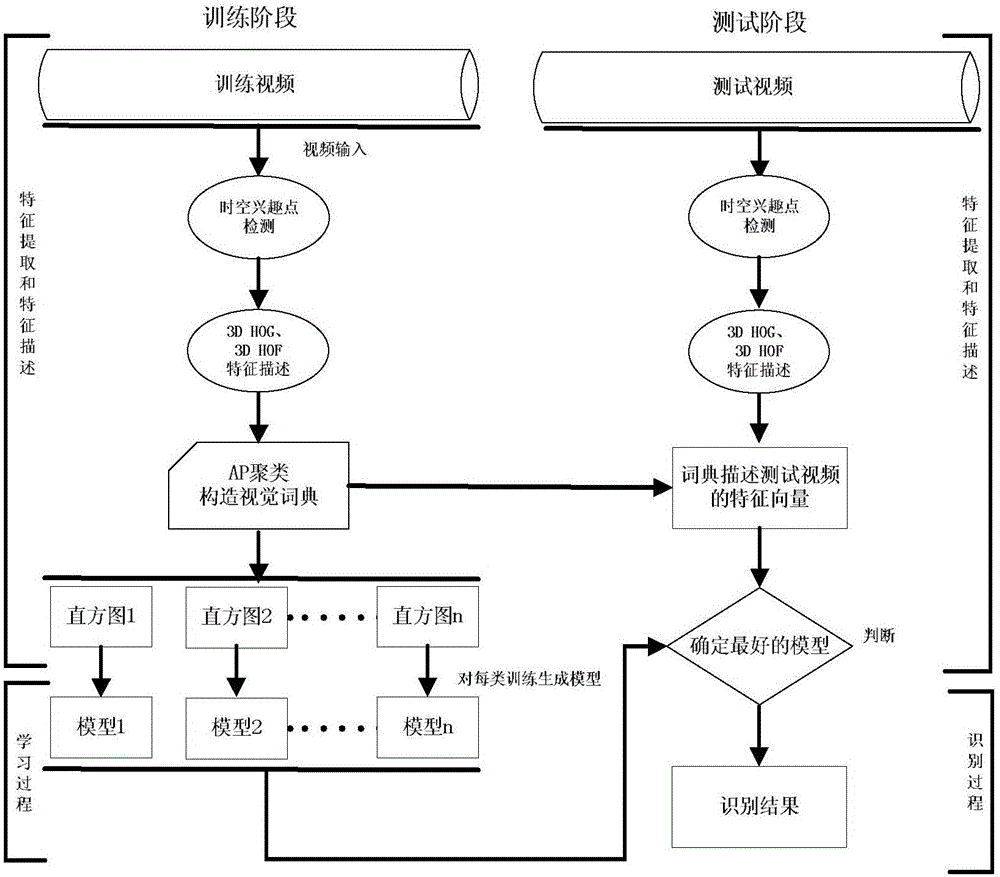

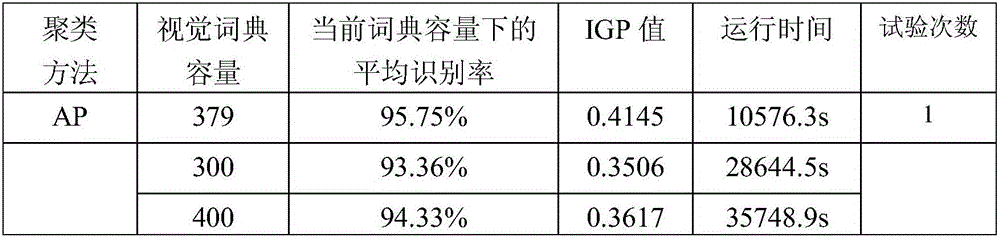

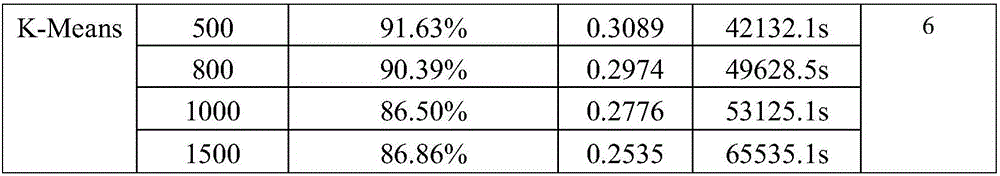

[0017] Referring to Figure 1, the action recognition method based on AP clustering bag-of-words modeling is verified on KTH, a widely recognized classic test data set for action recognition algorithms. The videos exhibit illumination changes, scale changes, noise, camera shake, and similar disturbances. Experiments were carried out on all the videos in the data set and compared against the traditional bag-of-words model based on K-Means clustering, whose visual dictionary capacity was set in turn to 300, 400, 500, 800, 1000, and 1500. The leave-one-out cross-validation method is adopted for the behavior data set; that is, for each action class, 80% of the videos are randomly selected as the training set and the remaining 20% are used as the test set.
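The per-class 80%/20% split described in [0017] can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function name `split_per_class`, the `seed` parameter, the rounding rule, and the toy video identifiers are all hypothetical, and only the 80/20 ratio and the list of K-Means dictionary sizes come from the text.

```python
import random
from collections import defaultdict

# Visual dictionary capacities the text lists for the K-Means baseline.
KMEANS_DICTIONARY_SIZES = [300, 400, 500, 800, 1000, 1500]


def split_per_class(videos, train_fraction=0.8, seed=0):
    """Randomly split (video_id, action_label) pairs class by class.

    Assumption: the cut point is round(train_fraction * class_size);
    the source does not say how fractional counts are handled.
    """
    rng = random.Random(seed)  # seeded so the split is reproducible

    # Group video ids by action class.
    by_class = defaultdict(list)
    for video_id, label in videos:
        by_class[label].append(video_id)

    train, test = [], []
    for label in sorted(by_class):
        ids = list(by_class[label])
        rng.shuffle(ids)
        cut = round(train_fraction * len(ids))
        train.extend((v, label) for v in ids[:cut])
        test.extend((v, label) for v in ids[cut:])
    return train, test


if __name__ == "__main__":
    # Hypothetical toy data: two classes with ten videos each.
    labels = ["walking", "boxing"]
    videos = [(f"{label}_{i:02d}", label) for label in labels for i in range(10)]
    train, test = split_per_class(videos)
    print(len(train), "training videos;", len(test), "test videos")
```

Splitting inside each class, rather than over the pooled videos, keeps every action represented in both sets at the stated ratio. The same split would then be reused for each K-Means dictionary size so that the comparison with the AP-based dictionary is made on identical training and test videos.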

[0018] The implementation process of the behavior recognition me...
