Event-based three-dimensional SLAM method with a depth-enhanced vision sensor

A depth-enhanced vision sensor technology, applied in fields such as road network navigation, that addresses problems such as high power consumption and high resource consumption.

Embodiment Construction

[0050] The present invention will be further described below in conjunction with the accompanying drawings.

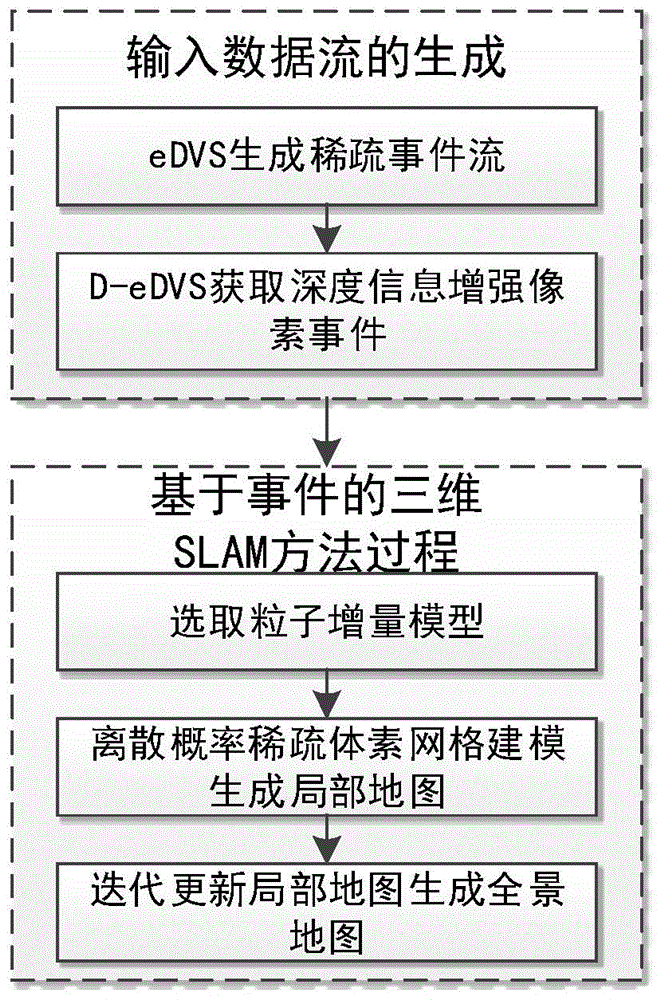

[0051] An event-based three-dimensional SLAM method with a depth-enhanced vision sensor, characterized in that the method comprises the following steps:

[0052] Step 1: Generation of input data stream:

[0053] Step 1.1: eDVS generates sparse event streams: the embedded dynamic vision sensor (eDVS) directly generates a sparse stream of events for dynamic changes in the scene; this requires only hardware support and no software preprocessing.
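To make the notion of a sparse event stream concrete, the sketch below emulates in software what the eDVS does in hardware. It is an illustration only, not the method of the invention: the `Event` record, the `events_from_frames` helper, the log-intensity change model, and the `threshold` value are all assumptions introduced here. The point is that only pixels whose brightness changes produce output, so a mostly static scene yields very few events.

```python
import math
from typing import List, NamedTuple, Sequence


class Event(NamedTuple):
    """One pixel event (hypothetical layout, not taken from the patent)."""
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 if the pixel got brighter, -1 if darker


def events_from_frames(
    prev: Sequence[Sequence[float]],
    curr: Sequence[Sequence[float]],
    t_us: int,
    threshold: float = 0.2,  # assumed contrast threshold
) -> List[Event]:
    """Emit an event for every pixel whose log intensity changed enough.

    A real eDVS does this per pixel in hardware and asynchronously;
    comparing two frames is only a software stand-in for illustration.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(c + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append(Event(t_us, x, y, 1 if delta > 0 else -1))
    return events


# Two 2x2 frames: one pixel brightens, one darkens, two stay unchanged.
previous = [[10.0, 10.0], [10.0, 10.0]]
current = [[10.0, 50.0], [2.0, 10.0]]
stream = events_from_frames(previous, current, t_us=1000)
```

Unchanged pixels contribute nothing to `stream`, which is what keeps the data rate low compared with processing full frames.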

[0054] Step 1.2: D-eDVS acquires depth-enhanced pixel events: an embedded dynamic vision sensor (eDVS) is combined with a separate active depth sensor (PrimeSense RGB-D, Asus Xtion Pro Live) operating at a resolution of 320×240 and a frequency of 69 Hz, and the corresponding pixels on the two sensors are calibrated; the depth sensor (PrimeSense RGB-D) can obtain the depth information of the corresponding pixel position of each generated...
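The depth enhancement in Step 1.2 can be sketched as a per-event lookup, assuming the calibration has already been reduced to a table that maps each eDVS pixel to its corresponding depth-image pixel. The names `DepthEvent`, `augment_with_depth`, and `pixel_map`, the millimetre unit, and the convention that a depth of 0 means "no valid reading" are assumptions for illustration; the source does not specify how the calibrated correspondence is stored or applied.

```python
from typing import Dict, List, NamedTuple, Sequence, Tuple


class Event(NamedTuple):
    """One eDVS pixel event (hypothetical layout)."""
    t_us: int
    x: int
    y: int
    polarity: int


class DepthEvent(NamedTuple):
    """A pixel event enhanced with depth (hypothetical layout)."""
    t_us: int
    x: int
    y: int
    polarity: int
    depth_mm: int


def augment_with_depth(
    events: Sequence[Event],
    depth_frame: Sequence[Sequence[int]],
    pixel_map: Dict[Tuple[int, int], Tuple[int, int]],
) -> List[DepthEvent]:
    """Attach the depth of the calibrated corresponding pixel to each event.

    pixel_map: (x, y) on the eDVS -> (u, v) in the depth image, assumed to
    come from the calibration of the two sensors.
    Events with no correspondence or no valid depth are dropped.
    """
    enhanced = []
    for ev in events:
        target = pixel_map.get((ev.x, ev.y))
        if target is None:
            continue  # eDVS pixel has no calibrated counterpart
        u, v = target
        depth = depth_frame[v][u]
        if depth <= 0:
            continue  # assumed convention: 0 marks a missing measurement
        enhanced.append(DepthEvent(ev.t_us, ev.x, ev.y, ev.polarity, depth))
    return enhanced


# Toy 2x2 depth frame (values in millimetres) and a tiny calibration table.
depth_frame = [[0, 800], [1200, 0]]
pixel_map = {(0, 0): (1, 0), (1, 1): (0, 0)}
events = [Event(1, 0, 0, 1), Event(2, 1, 1, -1), Event(3, 5, 5, 1)]
depth_events = augment_with_depth(events, depth_frame, pixel_map)
```

In this toy run only the first event survives: the second maps to a pixel with no valid depth, and the third has no calibrated counterpart. Whether such events should be dropped or kept without depth is a design choice the excerpt does not settle.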
