Face appearance editing method based on real-time video intrinsic decomposition

A real-time video editing technology, applied in image analysis, image enhancement, instruments, and related fields. It addresses the problems that existing decompositions are not accurate intrinsic decompositions, do not consider the hair and neck, and support only limited editing. It reduces computing overhead and improves the efficiency and versatility of intrinsic decomposition.

Embodiment

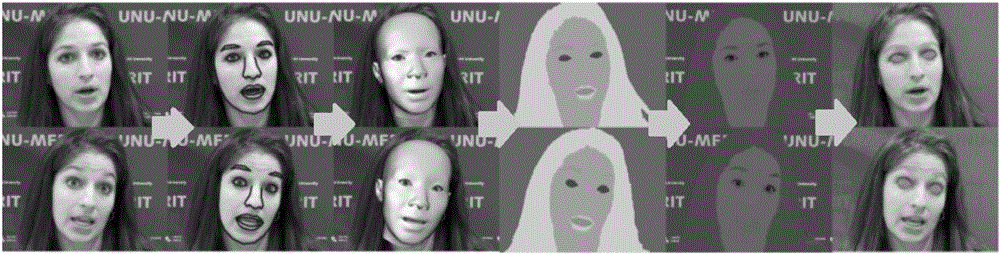

[0079] The inventors implemented an embodiment of the present invention on a machine equipped with an Intel dual-core i5 central processing unit, an NVidia GTX660 graphics processor, and 16GB of memory. All experimental results shown in the accompanying drawings were obtained using the parameter values listed in the detailed description. For a webcam with a resolution of 640×480, most ordinary users can complete the interactive segmentation within one minute. Automatic preprocessing of the reference image usually takes 30 seconds, of which GMM fitting takes 10 seconds, and construction of the lookup table takes less than 20 seconds. In the running stage, the system processes more than 20 frames per second, and this processing includes face tracking, graph-cut-based correspondence of different regions, intrinsic decomposition, and appearance editing.
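The throughput figures in paragraph [0079] imply a per-frame time budget that all four runtime stages must share. The following is a minimal sketch of that arithmetic only; the function and constant names are illustrative assumptions and do not come from the patent, and the sketch says nothing about how the time is divided among the stages.

```python
# Figures quoted in paragraph [0079]; all names here are illustrative.
FRAME_WIDTH = 640    # webcam resolution, pixels
FRAME_HEIGHT = 480
MIN_FPS = 20         # the system is reported to exceed this rate


def frame_budget_ms(fps: float) -> float:
    """Upper bound on total processing time per frame at the given rate."""
    return 1000.0 / fps


def pixel_throughput(width: int, height: int, fps: float) -> float:
    """Pixels delivered by the camera per second at the given rate."""
    return width * height * fps


if __name__ == "__main__":
    budget = frame_budget_ms(MIN_FPS)
    pixels = pixel_throughput(FRAME_WIDTH, FRAME_HEIGHT, MIN_FPS)
    # Face tracking, region correspondence, decomposition, and editing
    # together must fit inside this budget to sustain the stated rate.
    print(f"per-frame budget: under {budget:.0f} ms")
    print(f"pixel throughput: over {pixels:,.0f} pixels/s")
```

At 20 frames per second the combined runtime stages have less than 50 ms per frame, which is consistent with moving the GMM fitting and lookup table construction into the one-time preprocessing step.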

[0080] The inventors conducted experiments on various face appearance edi...
