Pedestrian re-identification method based on CNN and convolutional LSTM network

A pedestrian re-identification technology based on CNN and convolutional LSTM networks, applied in character and pattern recognition, instruments, computer parts, etc. It addresses the problems that appearance features are difficult to extract, that discriminative features cannot be learned, and that the learned features are not closely related to pedestrian appearance, and it achieves features that are closely related to pedestrian appearance.

Embodiment Construction

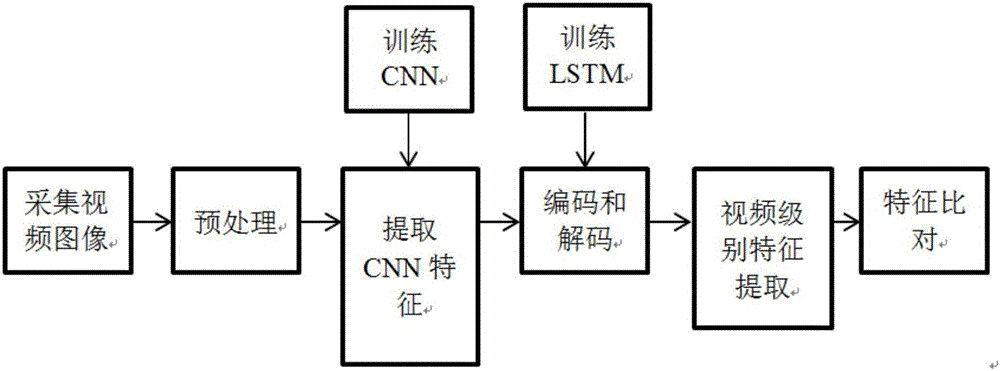

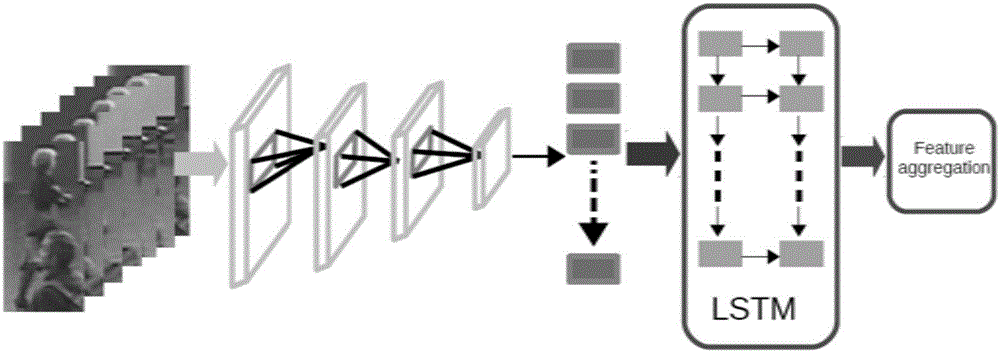

[0029] The method of the present invention is as follows. Given a sequence of consecutive pedestrian images from a video, the frame-level convolutional layers of a CNN first extract CNN features that capture complex changes in appearance. The extracted features are then fed into a convolutional LSTM encoder-decoder framework: the encoder uses locally adaptive kernels to capture the pedestrian's motion across the sequence and encodes the input sequence into a hidden representation, and the decoder decodes that hidden representation back into a sequence. This encoding and decoding yields a frame-level deep spatio-temporal appearance descriptor. Finally, Fisher vector encoding is applied so that the descriptor can describe video-level features.
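
The final step above, turning frame-level descriptors into one video-level vector, can be illustrated with a minimal NumPy sketch of Fisher vector encoding. This is not the patent's implementation: it assumes a diagonal-covariance Gaussian mixture model (GMM) whose parameters are already available, uses the common power and L2 normalization, and replaces the ConvLSTM outputs with random stand-in data. The function name `fisher_vector` and all array sizes are hypothetical.

```python
import numpy as np


def fisher_vector(descriptors, weights, means, sigmas):
    """Encode T frame-level descriptors as one video-level Fisher vector.

    descriptors: (T, D) frame-level descriptors (stand-ins for ConvLSTM output).
    weights:     (K,)   GMM mixture weights, summing to 1.
    means:       (K, D) GMM component means.
    sigmas:      (K, D) GMM per-dimension standard deviations (diagonal model).
    Returns a vector of length 2 * K * D.
    """
    T, D = descriptors.shape
    # Deviation of each descriptor from each component, scaled by sigma: (T, K, D)
    diff = (descriptors[:, None, :] - means[None, :, :]) / sigmas[None, :, :]
    # Log-density of each descriptor under each diagonal Gaussian: (T, K)
    log_prob = (-0.5 * np.sum(diff ** 2, axis=2)
                - np.sum(np.log(sigmas), axis=1)[None, :]
                - 0.5 * D * np.log(2.0 * np.pi))
    # Posterior responsibility of each component for each descriptor: (T, K)
    log_post = np.log(weights)[None, :] + log_prob
    log_post -= log_post.max(axis=1, keepdims=True)  # numerical stability
    gamma = np.exp(log_post)
    gamma /= gamma.sum(axis=1, keepdims=True)
    # Gradients with respect to the means and standard deviations: (K, D) each
    g_mu = np.einsum('tk,tkd->kd', gamma, diff)
    g_mu /= T * np.sqrt(weights)[:, None]
    g_sigma = np.einsum('tk,tkd->kd', gamma, diff ** 2 - 1.0)
    g_sigma /= T * np.sqrt(2.0 * weights)[:, None]
    fv = np.concatenate([g_mu.ravel(), g_sigma.ravel()])
    # Power (signed square-root) normalization followed by L2 normalization
    fv = np.sign(fv) * np.sqrt(np.abs(fv))
    norm = np.linalg.norm(fv)
    return fv / norm if norm > 0 else fv


# Hypothetical sizes: 16 frames, 8-dimensional descriptors, 4 GMM components.
rng = np.random.default_rng(0)
descriptors = rng.normal(size=(16, 8))
weights = rng.uniform(0.5, 1.0, size=4)
weights /= weights.sum()
means = rng.normal(size=(4, 8))
sigmas = rng.uniform(0.5, 1.5, size=(4, 8))

video_descriptor = fisher_vector(descriptors, weights, means, sigmas)
print(video_descriptor.shape)
```

In practice the GMM would be fitted on descriptors from training videos rather than drawn at random. Because the encoding sums over frames, the resulting vector has a fixed length regardless of how many frames the sequence contains, which is what lets it serve as a video-level feature.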

[0030] In order to make the pedestrian re-identification method based on CNN and convolutional LSTM network proposed in the present invention more clear, the fo...
