Multi-feature fusion lane line detection system and method, and advanced driver assistance system

A multi-feature fusion lane line detection technology in the field of image processing. It addresses two problems that reduce lane line detection accuracy: interference in grayscale images cannot be filtered out, and detection relies on a single feature. The technology strengthens the detector's resistance to interference.


Embodiment Construction

[0059] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

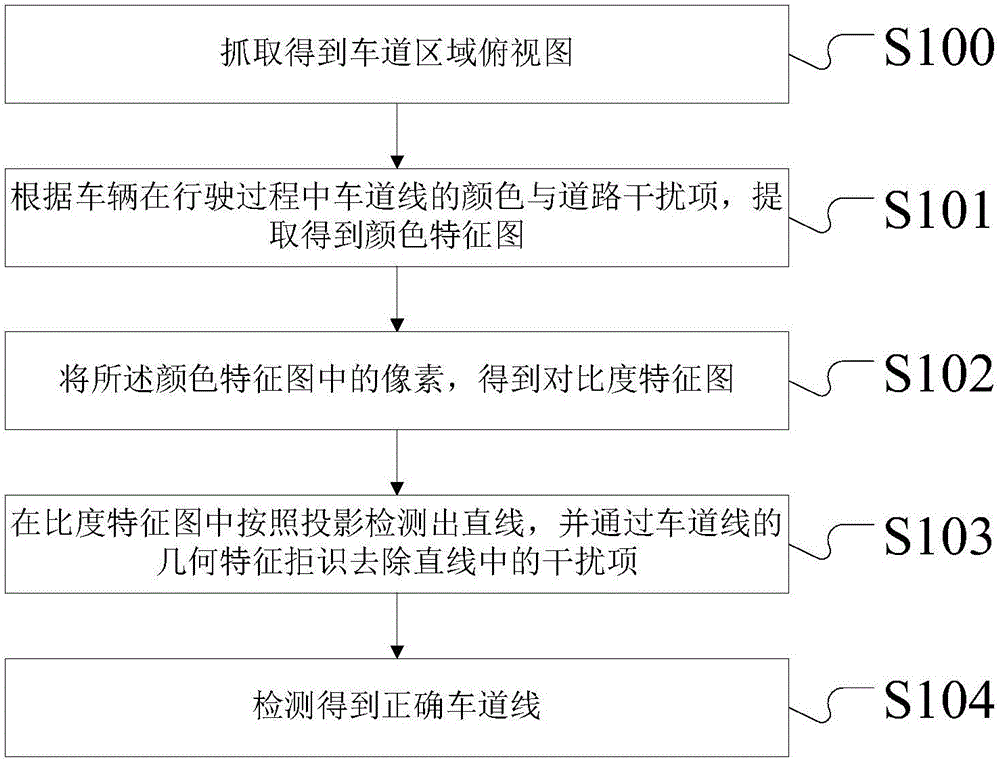

[0060] Please refer to Figure 1, which is a schematic flowchart of a lane line detection method based on multi-feature fusion in an embodiment of the present invention.

[0061] In this embodiment, the multi-feature fusion lane line detection method includes the following steps:

[0062] Step S100: capture a top view of the lane area. In step S100, after the vehicle enters the lane, the image can be collected by the advanced driver assistance system (ADAS) in the vehicle. ADAS uses various sensors installed on the car to immediately collect environmental data inside and outside the car, and performs technical processing such as identification, detect...
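The excerpt does not say how the top view in step S100 is produced. One common approach, assumed here and not confirmed by the patent, is inverse perspective mapping (IPM): a forward-facing camera frame is warped onto the road plane with a homography estimated from four point pairs. The sketch below is dependency-free Python; the function names `homography`, `apply_h` and `top_view` and all point coordinates are hypothetical and purely illustrative.

```python
"""Hypothetical sketch: a top view via inverse perspective mapping (IPM).

Assumption (not from the patent): the bird's-eye view is obtained by warping
a camera frame with a planar homography fitted to four road-plane point pairs.
"""
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """Direct linear transform: 3x3 H (with h33 = 1) mapping src[i] to dst[i]."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), same for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_h(h: List[List[float]], x: float, y: float) -> Point:
    """Map one point through H, including the projective division."""
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def top_view(img, src: Sequence[Point], dst: Sequence[Point],
             out_w: int, out_h: int, fill=0):
    """Warp img (a list of pixel rows) into an out_h x out_w top view.

    src are camera-image points and dst their top-view positions. Each output
    pixel is mapped back into the camera image with the dst->src homography
    and sampled with nearest-neighbour lookup; pixels that fall outside the
    camera image get `fill`.
    """
    h_inv = homography(dst, src)
    rows, cols = len(img), len(img[0])
    out = []
    for v in range(out_h):
        row = []
        for u in range(out_w):
            x, y = apply_h(h_inv, u, v)
            xi, yi = int(round(x)), int(round(y))
            inside = 0 <= xi < cols and 0 <= yi < rows
            row.append(img[yi][xi] if inside else fill)
        out.append(row)
    return out
```

Typical usage: pick a trapezoid on the road in the camera image, for example the two lane edges between a near row and a far row, as `src`, and a rectangle as `dst`. Parallel lane lines then appear roughly parallel in the output. This mapping from output back to input avoids holes in the top view. In practice, OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` perform the same two steps with interpolation.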
