Image-space-based depth-of-field simulation method

A simulation method based on image-space techniques, applied in the field of image processing. It addresses problems such as the need for manual processing and achieves the effect of reducing image errors.

Embodiment

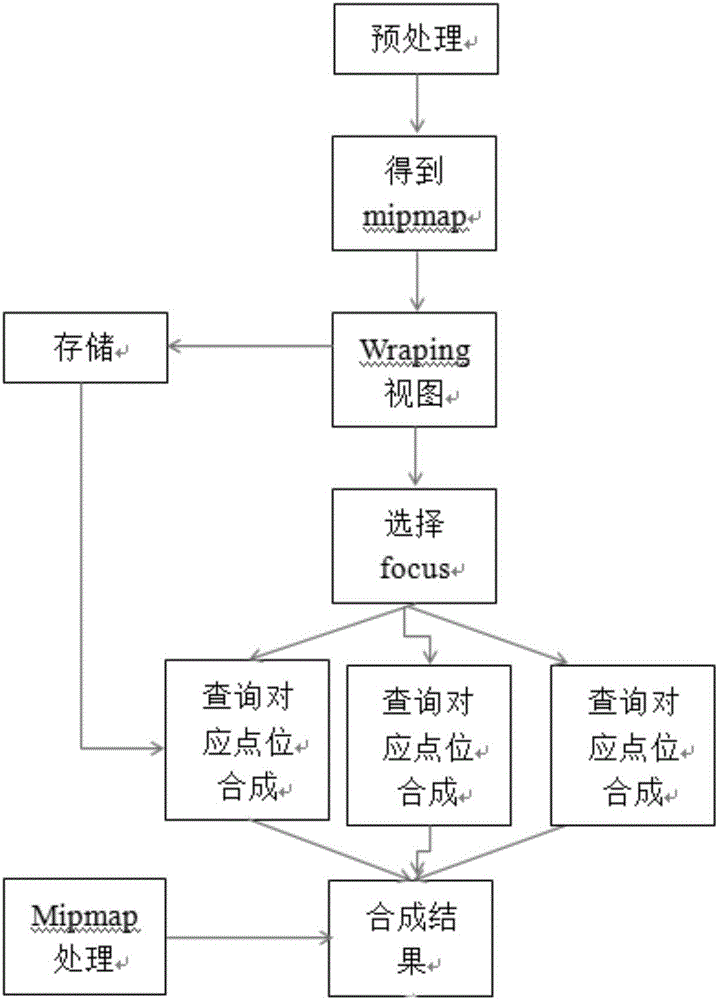

[0020] As shown in Figure 1, the algorithm of this embodiment takes the original scene image and a relatively uniform disparity image as input. It improves and optimizes the algorithm proposed in the article "Real-Time Depth of Field Rendering via Dynamic Light Field Generation and Filtering", with the aim of synthesizing a depth-of-field image that is as close to optimal as possible.

[0021] The method of this embodiment is realized through the following technical solutions, comprising the following steps:

[0022] In the first step, the scene image is processed: OpenCV functions are used to generate a mipmap, and the image is preprocessed with a Gaussian filter with a 5x5 kernel before the mipmap is generated.
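The first step can be illustrated with a minimal sketch in plain Python, so that it runs without OpenCV installed. It is not the patent's implementation. The helper names `gaussian_blur_5x5` and `build_mipmap`, the binomial weights `[1, 4, 6, 4, 1]`, the edge replication at the borders, and the choice to blur before every halving (rather than once up front) are all assumptions made for illustration. In OpenCV, `cv2.GaussianBlur` with a `(5, 5)` kernel followed by `cv2.pyrDown` would play a comparable role.

```python
# Sketch only: 5x5 Gaussian pre-filter followed by mipmap (image pyramid) generation.
# Images are 2D lists of floats; all names here are hypothetical.

W = [1, 4, 6, 4, 1]  # 1D binomial weights (sum 16); outer product gives a 5x5 kernel


def clamp(i, n):
    """Clamp index i into [0, n - 1] (edge replication, an assumption)."""
    return max(0, min(n - 1, i))


def gaussian_blur_5x5(img):
    """Separable 5x5 blur: one horizontal pass, then one vertical pass."""
    h, w = len(img), len(img[0])
    tmp = [[sum(W[k] * img[y][clamp(x + k - 2, w)] for k in range(5)) / 16.0
            for x in range(w)] for y in range(h)]
    return [[sum(W[k] * tmp[clamp(y + k - 2, h)][x] for k in range(5)) / 16.0
             for x in range(w)] for y in range(h)]


def build_mipmap(img, levels):
    """Return [level 0, level 1, ...]; each new level is blurred, then halved."""
    pyramid = [img]
    for _ in range(levels):
        cur = pyramid[-1]
        if len(cur) < 2 or len(cur[0]) < 2:
            break  # cannot halve any further
        blurred = gaussian_blur_5x5(cur)
        # Keep every second row and column of the blurred image.
        pyramid.append([row[::2] for row in blurred[::2]])
    return pyramid


if __name__ == "__main__":
    image = [[float((x + y) % 7) for x in range(8)] for y in range(8)]
    for level, data in enumerate(build_mipmap(image, 2)):
        print(level, len(data), "x", len(data[0]))
```

Blurring before subsampling suppresses frequencies that the coarser level cannot represent, which is the usual reason for pre-filtering a mipmap.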

[0023] In the second step, the current image is warped: the mapping function $L_{in}(u, v, s, t)$ maps the pixel values of the current reference image into each $R_{st}$.

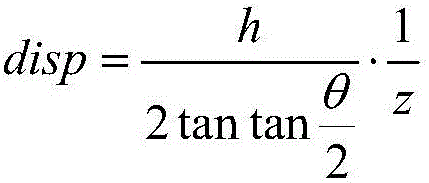

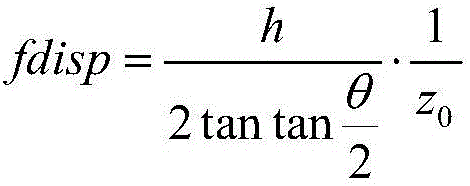
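The warping step can be sketched as a disparity-driven forward warp. This is a sketch under stated assumptions, not the mapping function of the patent, whose formula is not reproduced in the text. It assumes that a pixel at $(u, v)$ with disparity $d$ lands at $(u + s\,d,\ v + t\,d)$ in the view at offset $(s, t)$, that larger disparity means closer to the camera, and that when two pixels land on the same target the larger disparity wins. The function name `warp_to_view` and the `hole` parameter are hypothetical.

```python
# Sketch only: forward-warp a reference image into the view at offset (s, t).
# ref and disp are 2D lists of equal size; all names here are hypothetical.

def warp_to_view(ref, disp, s, t, hole=None):
    """Return the warped image; targets that receive no pixel stay `hole`."""
    h, w = len(ref), len(ref[0])
    out = [[hole] * w for _ in range(h)]
    best = [[float("-inf")] * w for _ in range(h)]  # disparity stored per target
    for v in range(h):
        for u in range(w):
            d = disp[v][u]
            # Assumed shift model: target = source + view offset * disparity.
            u2 = int(round(u + s * d))
            v2 = int(round(v + t * d))
            inside = 0 <= u2 < w and 0 <= v2 < h
            # Assumed collision rule: the larger disparity overwrites the smaller.
            if inside and d > best[v2][u2]:
                best[v2][u2] = d
                out[v2][u2] = ref[v][u]
    return out


if __name__ == "__main__":
    ref = [[1, 2, 3, 4]]
    disp = [[0, 0, 1, 0]]
    print(warp_to_view(ref, disp, s=1, t=0))
```

In this toy row, the pixel with disparity 1 moves one position to the right, covers its neighbour, and leaves a hole at its old location. A forward warp of this kind generally produces such holes, which a later stage has to fill.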

[0024] The third step is to retain the closest point by analyzing and comparing the ...
