Gesture recognition method based on BP neural network

A gesture recognition method based on a BP neural network, applied in the field of gesture recognition. It addresses problems such as adverse effects on segmentation results, and achieves the effects of simplifying operation, reducing the incidence of accidents, and making interaction more fun.


Embodiment

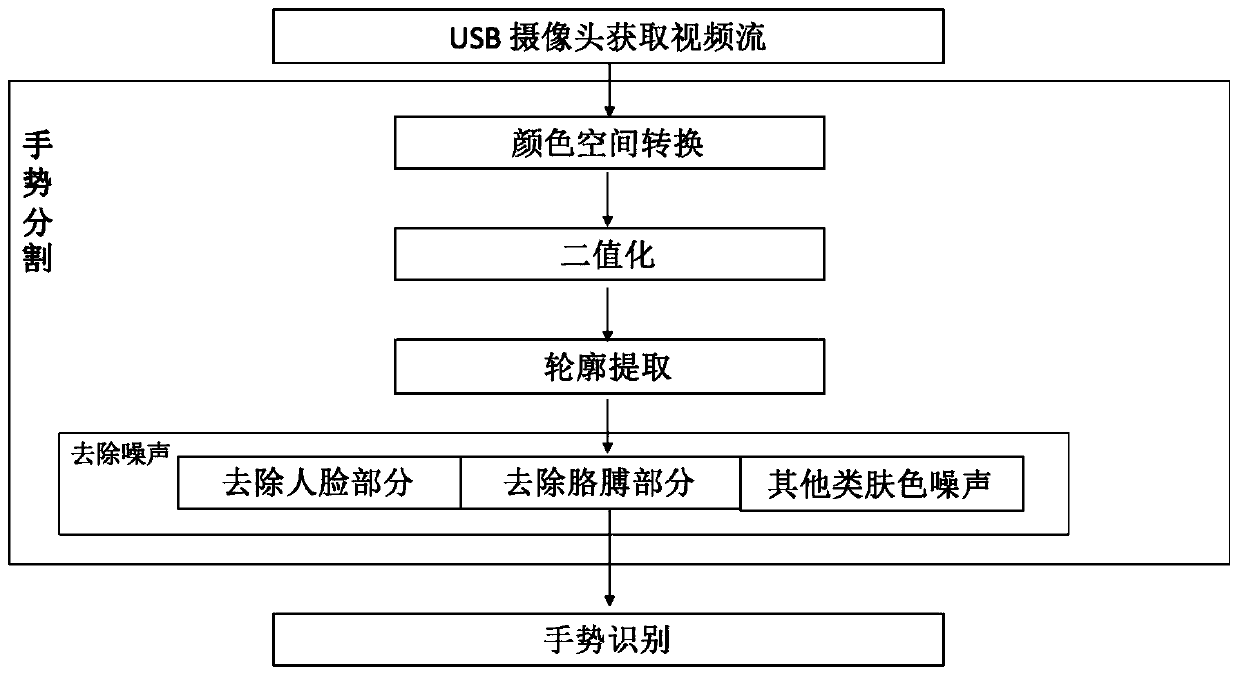

[0062] The BP-neural-network-based gesture recognition method of the present embodiment consists of three parts: video acquisition, gesture segmentation, and gesture recognition.

[0063] (1) Video acquisition

[0064] A video containing a human hand is acquired through the camera.

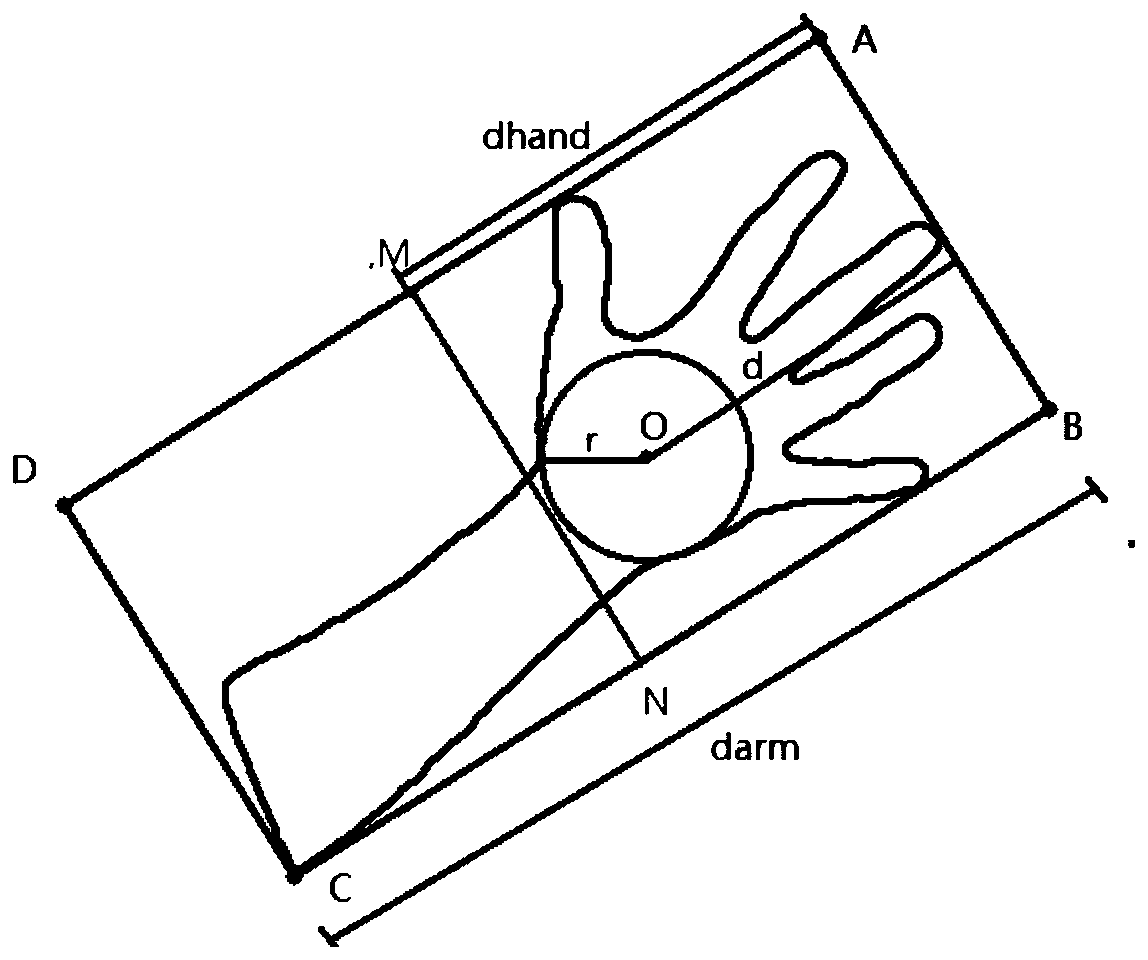

[0065] (2) Gesture segmentation

[0066] The present invention realizes gesture segmentation by combining color space information with geometric features. The RGB image obtained directly from the camera is converted to the YCrCb color space, adaptive thresholding is applied to the Cr channel to produce a binary image, the gesture contour is extracted as the input for recognition, and finally noise is removed. The specific steps include:
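The thresholding step on the Cr channel can be sketched as follows. The text does not say which adaptive thresholding method is used, so this sketch assumes Otsu's method, which picks the threshold that maximizes the between-class variance of the histogram. The function names `otsu_threshold` and `binarize_cr` are hypothetical, and treating pixels above the threshold as hand pixels is also an assumption. Contour extraction and noise removal are not covered here.

```python
def otsu_threshold(values):
    """Return the 8-bit threshold that maximizes between-class variance.

    Assumption: Otsu's method stands in for the unspecified
    'adaptive threshold processing' in the text.
    """
    hist = [0] * 256
    for v in values:
        hist[v] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels with value <= t
    sum0 = 0  # sum of the values of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # no pixels in the lower class yet
        w1 = total - w0
        if w1 == 0:
            break             # no pixels left in the upper class
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize_cr(cr_rows):
    """Turn a Cr channel (rows of 0..255 ints) into a 0/1 mask.

    Assumption: pixels with Cr above the threshold are marked 1 (hand).
    """
    flat = [v for row in cr_rows for v in row]
    t = otsu_threshold(flat)
    mask = [[1 if v > t else 0 for v in row] for row in cr_rows]
    return t, mask


if __name__ == "__main__":
    # Tiny synthetic Cr channel: low values as background, high values as skin.
    cr = [[120, 121, 150],
          [119, 152, 151]]
    threshold, mask = binarize_cr(cr)
    print(threshold, mask)
```

Because the threshold is recomputed from each frame's own histogram, it adapts to the frame rather than relying on a fixed Cr range.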

[0067] 2.1 Color space conversion

[0068] The YCrCb color space separates chroma from brightness. Skin color clusters more tightly in it, and it is less affected by brightness changes. It can distinguish...
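As an illustration of the conversion, a per-pixel RGB to YCrCb transform might look like the sketch below. The coefficients are the common full-range 8-bit ones (the same form OpenCV documents for its RGB to YCrCb conversion). The source text does not state which variant it uses, so treat them as an assumption. The function name `rgb_to_ycrcb` is hypothetical.

```python
def rgb_to_ycrcb(r, g, b):
    """Convert one 8-bit RGB pixel to (Y, Cr, Cb).

    Assumption: full-range 8-bit coefficients with a chroma offset of 128.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b   # luma (brightness)
    cr = (r - y) * 0.713 + 128               # red-difference chroma
    cb = (b - y) * 0.564 + 128               # blue-difference chroma

    def clamp(x):
        # Round to the nearest integer and keep the result in 0..255.
        return max(0, min(255, int(round(x))))

    return clamp(y), clamp(cr), clamp(cb)


if __name__ == "__main__":
    # A neutral gray pixel carries no chroma, so Cr and Cb sit at the offset.
    print(rgb_to_ycrcb(128, 128, 128))
    # A reddish pixel pushes Cr above the neutral value of 128.
    print(rgb_to_ycrcb(200, 150, 120))
```

Only the Cr value of each pixel is needed for the later thresholding step, so in practice the Y and Cb outputs can be ignored.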
