Video object collaborative segmentation method based on a trajectory directed graph

A video object collaborative segmentation technique, applied in the field of image processing

Detailed Description of the Embodiments

[0079] Embodiments of the present invention will be described in further detail below in conjunction with the accompanying drawings.

[0080] The simulation experiments for the present invention were implemented on a PC test platform with a 3.4 GHz CPU and 16 GB of memory.

[0081] As shown in Figure 1, the specific steps of the video object collaborative segmentation method based on a trajectory directed graph of the present invention are as follows:

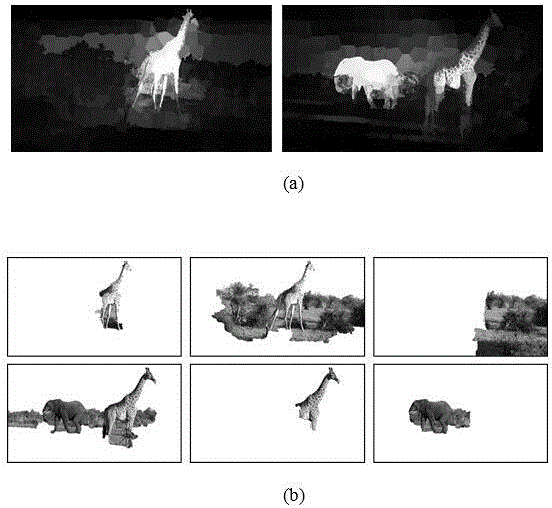

[0082] (1) Input each video sequence $V_m$ ($m = 1, \dots, M$) of the original video group; the $t$-th frame of video $V_m$ is denoted $F_{m,t}$ ($t = 1, \dots, N_m$). Figure 2 shows the first frames of the two videos.

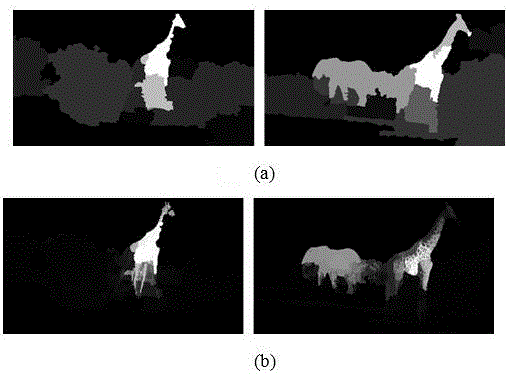

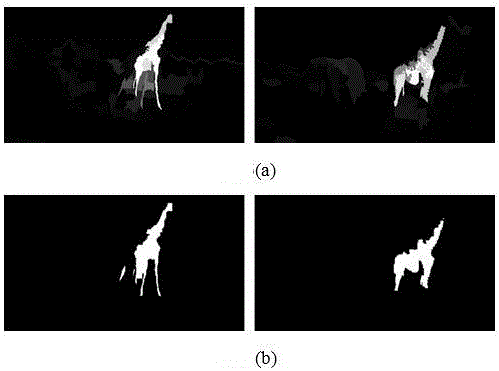
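The indexing convention of step (1) can be illustrated with a minimal sketch. It assumes the video group is held as a list of per-video frame lists; the helper name `get_frame` and the string placeholders standing in for frames are hypothetical and not part of the patent text. The only point shown is that $m$ and $t$ are 1-based and that each video may have its own length $N_m$.

```python
def get_frame(video_group, m, t):
    """Return F_{m,t} using the 1-based indices m and t of the text.

    video_group is assumed to be a list of M videos, each a list of
    N_m frames (hypothetical layout, chosen for illustration only).
    """
    if not 1 <= m <= len(video_group):
        raise IndexError(f"video index m={m} outside 1..{len(video_group)}")
    video = video_group[m - 1]
    if not 1 <= t <= len(video):
        raise IndexError(f"frame index t={t} outside 1..{len(video)}")
    return video[t - 1]


# Two toy "videos" of different lengths (N_1 = 3, N_2 = 2);
# strings stand in for image data.
video_group = [["a1", "a2", "a3"], ["b1", "b2"]]

# First frame of every video, i.e. F_{m,1} for m = 1..M.
first_frames = [get_frame(video_group, m, 1)
                for m in range(1, len(video_group) + 1)]
```

Keeping the 1-based translation in one helper avoids off-by-one slips when later steps refer to frames by the $F_{m,t}$ notation.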

[0083] (2) Use a dense optical flow algorithm to obtain the motion vector field of the pixels of video frame $F_{m,t}$, and generate an initial saliency map $IS_{m,t}$ for each video frame $F_{m,t}$; using a candidate object generation method, generate for each video frame $F_{m,t}$ q cand...
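As a rough illustration of how a per-pixel motion vector field could be turned into a saliency-like map, the sketch below min-max normalizes the flow magnitude to the range [0, 1]. This is an assumption made for illustration: the excerpt does not state how $IS_{m,t}$ is actually computed, and the function name `motion_saliency` and the nested-list layout of the flow field are hypothetical. The flow itself is taken as given (it would come from whichever dense optical flow algorithm is used), and the code is kept dependency-free on purpose.

```python
import math


def motion_saliency(flow):
    """Map a flow field to values in [0, 1] by normalized magnitude.

    flow: H x W nested list of (dx, dy) motion vectors for one frame
          (hypothetical layout; a real pipeline would use an array type).
    Returns an H x W nested list of floats. This is an illustrative
    stand-in, not the saliency computation described in the patent.
    """
    # Per-pixel motion magnitude.
    mags = [[math.hypot(dx, dy) for dx, dy in row] for row in flow]
    lo = min(min(row) for row in mags)
    hi = max(max(row) for row in mags)
    span = hi - lo
    if span == 0:
        # Uniform motion: no pixel stands out.
        return [[0.0 for _ in row] for row in mags]
    # Min-max normalization so the fastest pixel gets 1.0.
    return [[(mag - lo) / span for mag in row] for row in mags]


# Toy 2 x 3 flow field: one moving pixel on a static background.
flow = [
    [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0)],
    [(0.0, 0.0), (3.0, 4.0), (0.0, 0.0)],
]
saliency = motion_saliency(flow)
```

In this toy field only the pixel with motion vector (3, 4) receives a non-zero value. A magnitude-only measure like this ignores global camera motion, which a practical method would need to compensate for.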
