Large-scale text classification method based on a deep topic model

This topic-model and text-classification technology, applied in the field of text processing, addresses the problems of complex gradient calculation and a gradient update step size that cannot be adjusted. It simplifies model training, improves practicality, and speeds up convergence.

Embodiment Construction

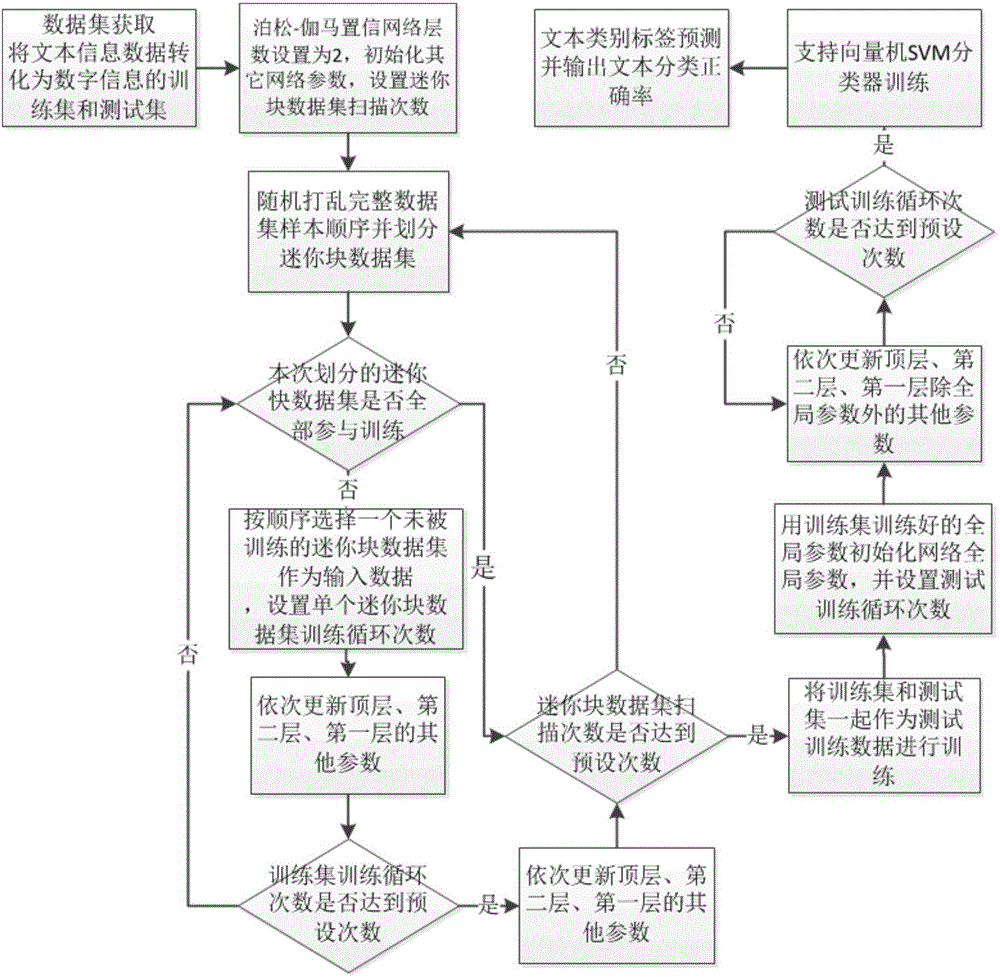

[0036] Referring to Figure 1, the specific implementation steps of the present invention are as follows:

[0037] Step 1: construct the numerical training set and test set.

[0038] Randomly select a training text set and a test text set from the text corpus;

[0039] Use the bag-of-words method to convert the training text set and the test text set from text into a numerical training set and a numerical test set;

[0040] The bag-of-words method is commonly used in the field of text processing to convert text into numerical data. For details, see https://en.wikipedia.org/wiki/Bag-of-words_model.
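Step 1 can be illustrated with a minimal sketch. The excerpt does not specify the split ratio, the tokenization rule, or how the vocabulary is built, so the function names (`split_corpus`, `tokenize`, `build_vocabulary`, `to_count_vector`), the 20% test fraction, the lowercase alphanumeric tokenizer, and the toy corpus below are all assumptions made for illustration only. The sketch randomly splits a corpus and turns each document into a vector of word counts.

```python
import random
import re
from collections import Counter


def split_corpus(docs, test_fraction=0.2, seed=0):
    """Randomly partition docs into (train, test). The fraction is an assumption."""
    rng = random.Random(seed)
    shuffled = list(docs)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def tokenize(text):
    """Lowercase and keep alphanumeric runs (a simple, assumed tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocabulary(train_docs):
    """Map each distinct training-set word to a column index."""
    words = sorted({w for doc in train_docs for w in tokenize(doc)})
    return {w: i for i, w in enumerate(words)}


def to_count_vector(doc, vocab):
    """Bag-of-words: count occurrences of each vocabulary word in doc."""
    vec = [0] * len(vocab)
    for word, n in Counter(tokenize(doc)).items():
        idx = vocab.get(word)
        if idx is not None:  # words unseen in training are dropped
            vec[idx] = n
    return vec


# Toy corpus, purely illustrative.
corpus = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stocks fell as markets closed",
    "markets rallied and stocks rose",
    "the dog sat on the rug",
]
train_docs, test_docs = split_corpus(corpus, test_fraction=0.2, seed=0)
vocab = build_vocabulary(train_docs)
X_train = [to_count_vector(d, vocab) for d in train_docs]
X_test = [to_count_vector(d, vocab) for d in test_docs]
```

In this sketch the vocabulary is built from the training texts only, so test-set words that never appear in training are ignored. Each row of `X_train` and `X_test` holds non-negative integer counts, which is the kind of input a Poisson likelihood expects.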

[0041] Step 2: initialize the global parameters, hidden-variable parameters, and other network parameters of the Poisson-Gamma belief network.

[0042] 2.1) Set the maximum number of layers of the Poisson-Gamma belief network to 2, the number of samples in a single mini-batch to 200, a...
