Autonomous landing method of unmanned aerial vehicle based on vision/inertial navigation

A technology for the autonomous landing of unmanned aerial vehicles, applied in the field of integrated navigation. It addresses problems such as error accumulation, the inability to be used alone, and poor vertical positioning, and improves real-time performance while achieving high precision.


Detailed Description of the Embodiments

[0042] The present invention is further described below with reference to the accompanying drawings and embodiments. The present invention includes, but is not limited to, the following embodiments.

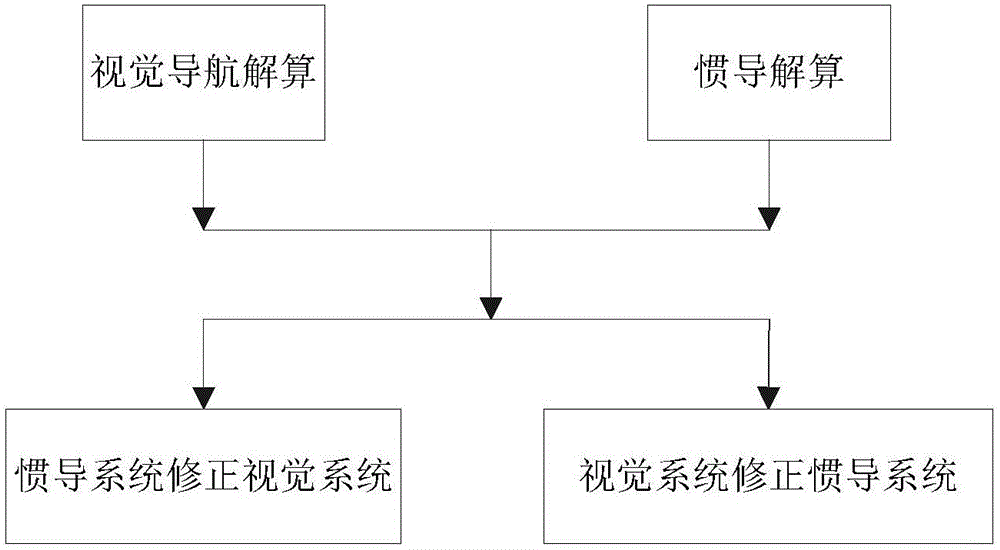

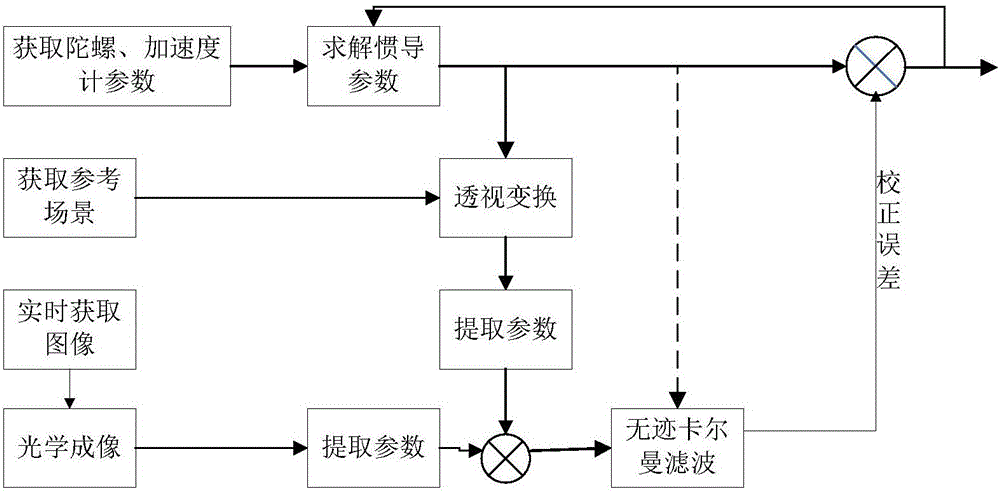

[0043] The integrated navigation method of the present invention runs cyclically according to the following steps 1) to 4):
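This excerpt only details step 1); how steps 2) to 4) combine the visual solution with inertial data is not shown. As a hedged illustration of why a cyclic vision/inertial loop curbs the error accumulation mentioned in the summary, the sketch below assumes a loosely coupled, one-dimensional (altitude-only) Kalman filter: each cycle propagates the state with an accelerometer reading and then corrects it with a visual altitude fix. All names (`State`, `predict`, `update`, `simulate`), the noise values, and the biased-accelerometer scenario are hypothetical and are not taken from the patent.

```python
"""Hypothetical loosely coupled vision/inertial fusion on the vertical axis."""

from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class State:
    h: float                # altitude estimate [m]
    v: float                # vertical velocity estimate [m/s]
    P: List[List[float]]    # 2x2 error covariance


def predict(s: State, accel: float, dt: float, q: float) -> State:
    """Inertial propagation with F = [[1, dt], [0, 1]] and Q = q * I (simplified)."""
    h = s.h + s.v * dt + 0.5 * accel * dt * dt
    v = s.v + accel * dt
    (p00, p01), (p10, p11) = s.P
    P = [[p00 + dt * (p01 + p10) + dt * dt * p11 + q, p01 + dt * p11],
         [p10 + dt * p11, p11 + q]]
    return State(h, v, P)


def update(s: State, z: float, r: float) -> State:
    """Correct with a visual altitude fix z of variance r (H = [1, 0])."""
    (p00, p01), (p10, p11) = s.P
    S = p00 + r                      # innovation variance
    k0, k1 = p00 / S, p10 / S        # Kalman gain
    y = z - s.h                      # innovation
    P = [[(1 - k0) * p00, (1 - k0) * p01],
         [p10 - k1 * p00, p11 - k1 * p01]]   # P' = (I - K H) P
    return State(s.h + k0 * y, s.v + k1 * y, P)


def simulate(steps: int = 100, dt: float = 0.1, bias: float = 0.2) -> Tuple[float, float]:
    """Hover at 10 m with a biased accelerometer; return (INS-only error, fused error)."""
    true_h = 10.0
    ins_h, ins_v = true_h, 0.0
    fused = State(true_h, 0.0, [[1.0, 0.0], [0.0, 1.0]])
    for _ in range(steps):
        accel = bias                 # true acceleration is 0; the reading is pure bias
        # Dead reckoning alone: the bias integrates twice, so error grows ~t^2
        ins_h += ins_v * dt + 0.5 * accel * dt * dt
        ins_v += accel * dt
        # Fused: inertial prediction followed by a noise-free visual fix (for clarity)
        fused = predict(fused, accel, dt, q=0.01)
        fused = update(fused, z=true_h, r=0.04)
    return abs(ins_h - true_h), abs(fused.h - true_h)
```

Calling `ins_err, fused_err = simulate()` shows the inertial-only altitude drifting by about 10 m over 10 s, while the fused estimate stays within a few centimetres, because every visual fix pulls the drifting prediction back.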

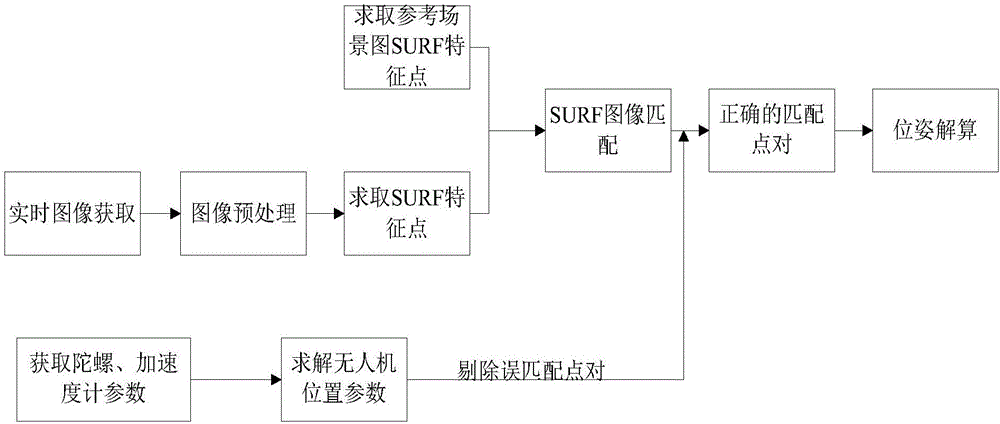

[0044] Step 1) Use the visual navigation algorithm to solve for the position and attitude of the UAV, comprising sub-steps 1.1) to 1.8):

[0045] 1.1) Acquire real-time images with the UAV's onboard camera, then go to step 1.2);

[0046] 1.2) Determine whether the acquired real-time image is the first frame; if so, go to step 1.3), otherwise go to step 1.4);
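The branching in steps 1.1) and 1.2) amounts to a small per-frame state machine. The sketch below is hypothetical: `FrameDispatcher`, `process_first` and `process_next` are illustrative names, and passing the first-frame result into later processing is an assumption, since step 1.4) is not shown in this excerpt.

```python
from typing import Any, Callable, Optional


class FrameDispatcher:
    """Routes each camera frame to first-frame or subsequent-frame processing (step 1.2)."""

    def __init__(self,
                 process_first: Callable[[Any], Any],
                 process_next: Callable[[Any, Any], Any]) -> None:
        self.process_first = process_first    # stands in for step 1.3)
        self.process_next = process_next      # stands in for step 1.4)
        self.seen_first = False               # explicit flag, so a None result is allowed
        self.reference: Optional[Any] = None  # assumed: data kept from the first frame

    def on_frame(self, image: Any) -> Any:
        if not self.seen_first:               # step 1.2): is this the first frame?
            self.seen_first = True
            self.reference = self.process_first(image)
            return self.reference
        return self.process_next(image, self.reference)
```

A separate `seen_first` flag is used instead of testing `reference is None`, so that a first-frame handler that happens to return `None` does not cause the next frame to be treated as the first again.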

[0047] 1.3) The first frame is processed as follows:

[0048] 1.3.1) Preprocess the real-time image, extract its SURF feature points, and go to step 1.3.2);
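The core of SURF keypoint detection is the "Fast-Hessian" response: second derivatives are approximated with box filters evaluated in constant time on an integral image, and the response is det(H) ≈ Dxx·Dyy − (0.9·Dxy)². The sketch below is a simplified, single-scale version (the smallest 9×9 filter only, with no scale-space pyramid, orientation assignment, or 64-dimensional descriptor). The preprocessing in step 1.3.1) is not specified in the excerpt, so it is omitted, and all function names are hypothetical. In practice a library implementation such as OpenCV's `cv2.xfeatures2d.SURF_create` (from the contrib non-free module) would typically be used.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]


def integral_image(img: Image) -> List[List[int]]:
    """ii[y][x] = sum of img[0:y][0:x]; one extra leading row/column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box(ii: List[List[int]], top: int, left: int, height: int, width: int) -> int:
    """Sum of the height x width box whose top-left pixel is (top, left), in O(1)."""
    b, r = top + height, left + width
    return ii[b][r] - ii[top][r] - ii[b][left] + ii[top][left]


def hessian_response(ii: List[List[int]], y: int, x: int) -> float:
    """Determinant of the box-filter Hessian at the 9x9 SURF scale (lobe size 3)."""
    # Dyy: three 3-row lobes weighted +1, -2, +1, each 5 columns wide
    dyy = (box(ii, y - 4, x - 2, 3, 5) - 2 * box(ii, y - 1, x - 2, 3, 5)
           + box(ii, y + 2, x - 2, 3, 5))
    # Dxx: the same filter transposed
    dxx = (box(ii, y - 2, x - 4, 5, 3) - 2 * box(ii, y - 2, x - 1, 5, 3)
           + box(ii, y - 2, x + 2, 5, 3))
    # Dxy: four 3x3 lobes in the diagonal quadrants; centre row/column excluded
    dxy = (box(ii, y - 3, x - 3, 3, 3) + box(ii, y + 1, x + 1, 3, 3)
           - box(ii, y - 3, x + 1, 3, 3) - box(ii, y + 1, x - 3, 3, 3))
    area = 81.0  # normalise by the filter area
    dxx, dyy, dxy = dxx / area, dyy / area, dxy / area
    return dxx * dyy - (0.9 * dxy) ** 2


def detect(img: Image, threshold: float) -> List[Tuple[int, int]]:
    """Pixels whose response exceeds `threshold` and all valid 8-neighbours (one scale)."""
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    m = 4  # half of the 9x9 filter: centres need a 4-pixel margin
    resp: Dict[Tuple[int, int], float] = {
        (y, x): hessian_response(ii, y, x)
        for y in range(m, h - m) for x in range(m, w - m)}
    keypoints = []
    for (y, x), r in resp.items():
        if r <= threshold:
            continue
        neighbours = [resp[(y + dy, x + dx)]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                      if (dy, dx) != (0, 0) and (y + dy, x + dx) in resp]
        if all(r > n for n in neighbours):
            keypoints.append((y, x))
    return keypoints
```

On a flat image every lobe sum cancels and the response is exactly zero. A small bright square yields a single positive maximum at its centre, which is what makes the determinant useful for locating blob-like features.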

[0049] 1.3.2) Carry out SURF matching with the SURF feature points extr...
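Step 1.3.2) is cut off in this excerpt, so neither the feature set it matches against nor the matching criterion is known. A common way to match SURF descriptors is nearest-neighbour search in Euclidean distance combined with Lowe's ratio test. The sketch below assumes that technique (it is swapped in, not stated in the source) and a hypothetical ratio of 0.7. Real SURF descriptors are 64-dimensional, but the code accepts vectors of any length.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def match_descriptors(query: List[Sequence[float]],
                      train: List[Sequence[float]],
                      ratio: float = 0.7) -> List[Tuple[int, int]]:
    """Return (query_idx, train_idx) pairs that pass the nearest/second-nearest ratio test."""
    matches: List[Tuple[int, int]] = []
    if len(train) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, d in enumerate(query):
        # Brute force: sort all training descriptors by distance to this query
        dists = sorted((euclidean(d, t), ti) for ti, t in enumerate(train))
        (best, bi), (second, _) = dists[0], dists[1]
        # Keep the match only if it is clearly better than the runner-up
        if best < ratio * second:
            matches.append((qi, bi))
    return matches
```

The ratio test rejects ambiguous points, such as a query equidistant from several candidates, which matters when the pose is later solved from the surviving matches. For larger descriptor sets, OpenCV's `cv2.BFMatcher` with `knnMatch(..., k=2)` provides the same nearest/second-nearest pairs without the Python loop.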
