Sampling inertial guidance-based visual IMU direction estimation method

A direction-estimation and inertial-guidance technique, applied in computing, instrumentation, electrical digital data processing, and related fields, that addresses problems such as low precision, long-term error accumulation, and time-consuming matching.


Embodiment Construction

[0067] The present invention will be further described in detail below in conjunction with the accompanying drawings and examples.

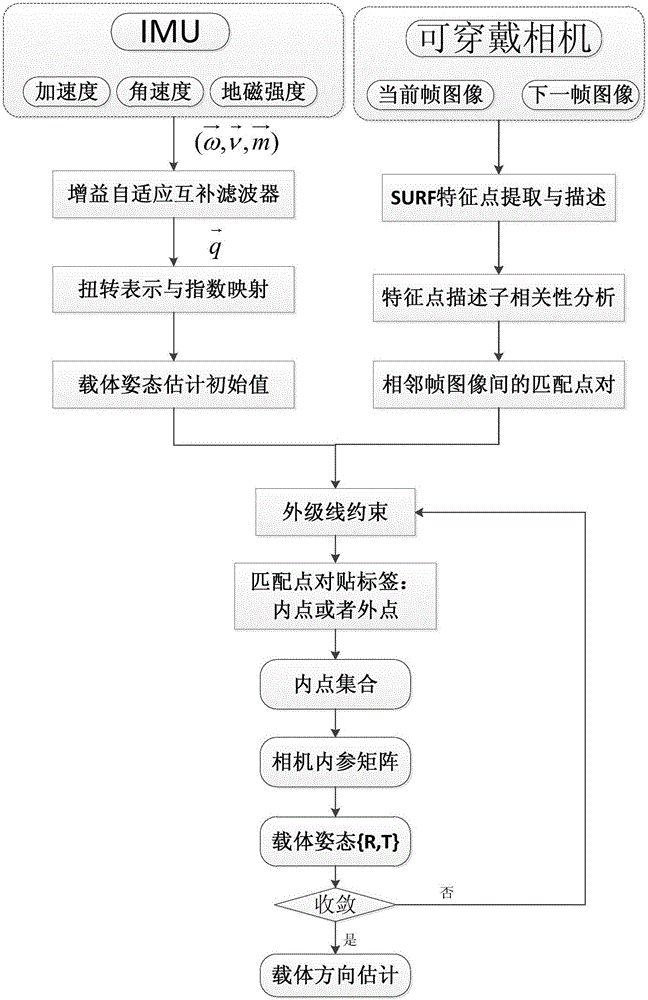

[0068] The present invention is mainly divided into three parts. Figure 1 shows the principle diagram of the method of the present invention, and the specific implementation process is as follows.

[0069] Step 1: IMU orientation estimation based on gain-adaptive complementary filter.

[0070] The IMU contains three main sensors: a three-axis gyroscope, a three-axis accelerometer, and a three-axis magnetometer. The attitude estimation of the IMU includes the direction estimation of the three types of sensors, and their estimated values are fused.

[0071] Step 1.1: Compute the orientation estimate for the gyroscope.

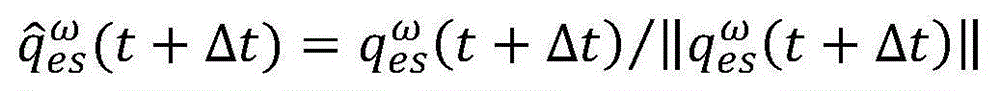

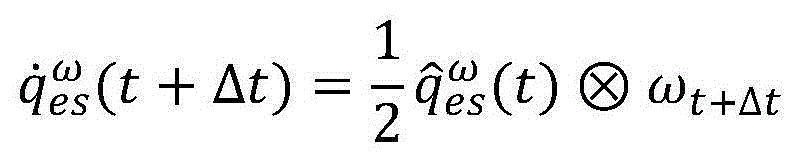

[0072] Step 1.1.1: Solve for the rate of change (also known as the derivative) of the quaternion describing the direction of the IMU at time t+Δt. The formula is as follows:

[0073]

[0074] Among them, the q in the quate...
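
The formula for Step 1.1.1 is not reproduced in this text, so the following is only an illustrative sketch of how a gyroscope-based quaternion derivative is commonly computed, not necessarily the expression used in the invention. It assumes a scalar-first unit quaternion (w, x, y, z), the Hamilton product, and angular rates measured in the sensor frame in rad/s, giving q̇ = ½ · q ⊗ (0, ωx, ωy, ωz). The function names `quat_multiply`, `quat_derivative`, and `integrate_gyro` are hypothetical, and the first-order (Euler) integration step is likewise an assumption.

```python
import math


def quat_multiply(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    pw, px, py, pz = p
    qw, qx, qy, qz = q
    return (
        pw * qw - px * qx - py * qy - pz * qz,
        pw * qx + px * qw + py * qz - pz * qy,
        pw * qy - px * qz + py * qw + pz * qx,
        pw * qz + px * qy - py * qx + pz * qw,
    )


def quat_derivative(q, omega):
    """Rate of change of q for angular rate omega = (wx, wy, wz) in rad/s.

    Assumed convention: q_dot = 0.5 * q (x) (0, wx, wy, wz).
    """
    prod = quat_multiply(q, (0.0, *omega))
    return tuple(0.5 * c for c in prod)


def integrate_gyro(q, omega, dt):
    """Advance q from time t to t + dt with one Euler step, then renormalize."""
    q_dot = quat_derivative(q, omega)
    q_new = tuple(a + b * dt for a, b in zip(q, q_dot))
    norm = math.sqrt(sum(c * c for c in q_new))
    return tuple(c / norm for c in q_new)


# Example: start at the identity orientation and rotate about the z axis
# at 1 rad/s for a 10 ms sample interval.
q_next = integrate_gyro((1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.01)
```

Renormalizing after each step keeps the result a unit quaternion. The integration error that still accumulates over time is the kind of drift that fusion with accelerometer and magnetometer estimates is meant to limit.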
