Virtual reality interaction system

A virtual reality interaction system technology, applied to input/output for user-computer interaction, instruments, and electrical digital data processing. Its stated effects are an interactive experience, an improved user experience, and accurate gesture recognition.

Embodiment Construction

[0065] To give a clearer understanding of the technical features, purposes, and effects of the present invention, specific embodiments are described in detail below with reference to the accompanying drawings. It should be understood that the following description only illustrates embodiments of the present invention and should not limit its scope of protection.

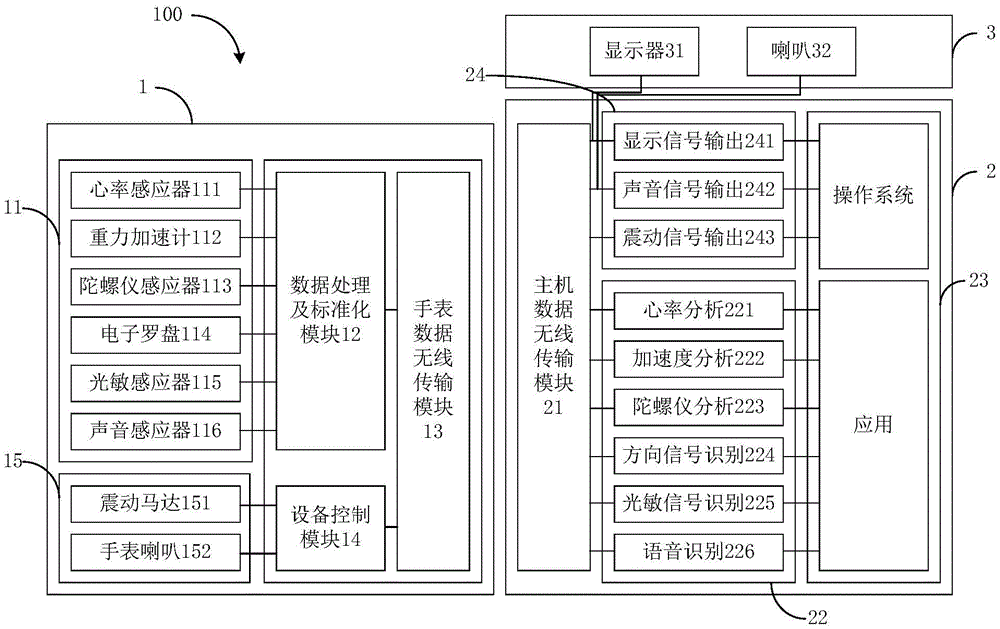

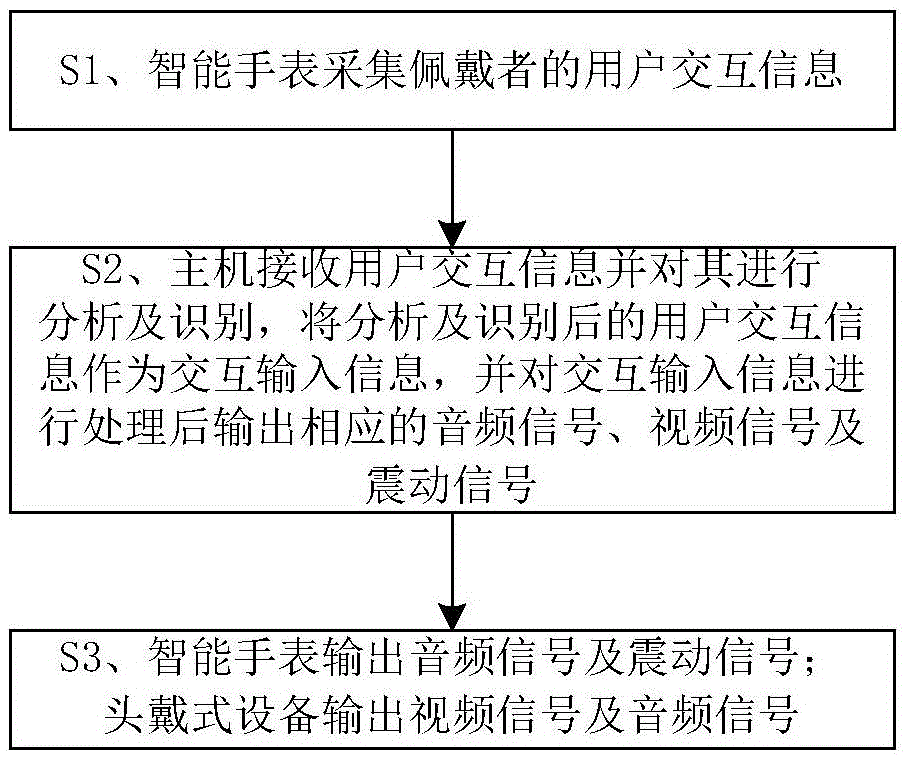

[0066] The present invention provides a virtual reality interaction system and method. Its purpose is to adapt to most smart watches 1 on the market through a multi-sensor real-time data acquisition module, and to collect data from the sensors carried by the smart phone and transmit it to the virtual reality device. Because the collected sensor data is transmitted in real time, the real-time performance requirement is high. To achieve intelligent interaction, the system can intelligently analyze ...
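The acquisition and transmission path described in [0066] can be illustrated with a minimal sketch. It is not the patent's implementation: the `SensorSample` type, the sensor identifiers, and the 21-byte wire format are all hypothetical, chosen only to show how a timestamped sensor reading might be packed compactly for low-latency transfer to a virtual reality device. The transport itself (for example a Bluetooth or network socket) is left out.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format (an assumption, not taken from the patent):
# little-endian, 8-byte timestamp in ms, 1-byte sensor id, three 32-bit floats.
_FMT = "<QB3f"


@dataclass
class SensorSample:
    timestamp_ms: int  # capture time on the wearable, in milliseconds
    sensor_id: int     # hypothetical ids: 0 = accelerometer, 1 = gyroscope
    x: float
    y: float
    z: float


def encode(sample: SensorSample) -> bytes:
    """Pack one sample into a fixed-size payload for transmission."""
    return struct.pack(
        _FMT, sample.timestamp_ms, sample.sensor_id, sample.x, sample.y, sample.z
    )


def decode(payload: bytes) -> SensorSample:
    """Unpack a payload produced by encode() on the receiving side."""
    return SensorSample(*struct.unpack(_FMT, payload))
```

A fixed-size binary record keeps each message small and cheap to parse, which suits the real-time requirement mentioned above. The timestamp lets the receiver order or discard late samples.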
