Method for converting a 3D model into a stereoscopic dual-viewpoint view

A technique for converting 3D models into dual-viewpoint views, applied in the field of 3D display, offering strong applicability and realistic, scientifically grounded results.

Embodiment Construction

[0057] The present invention will be described in detail below in combination with specific embodiments.

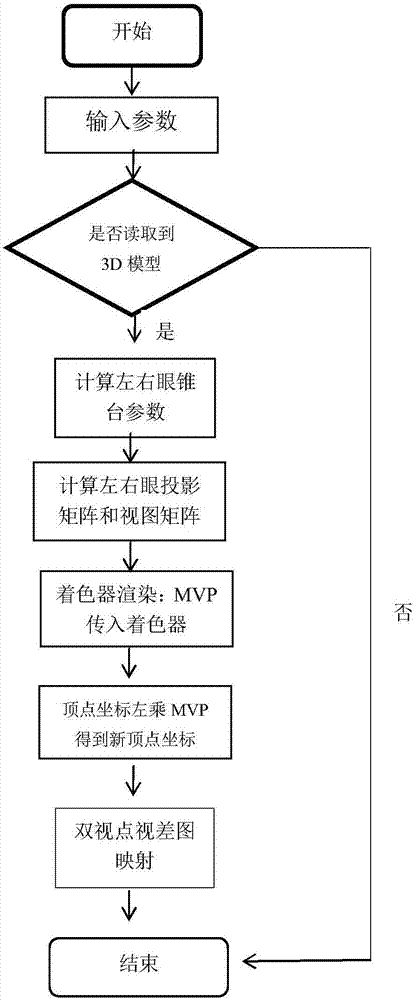

[0058] As shown in Figures 1-3, a method for converting a 3D model into a stereoscopic dual-viewpoint view specifically includes the following steps:

[0059] Step 1: Select the convergent observation model

[0060] Observation models mainly include convergent observation models and parallel observation models. The present invention selects the convergent observation model.
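The difference between the two observation models can be illustrated with a minimal sketch. It assumes a common textbook setup that the source does not spell out: the eyes sit on the x axis, separated by `eye_separation`, and look toward negative z; in the convergent model both optical axes pass through a point at `convergence_distance` straight ahead. The function name and both parameters are hypothetical.

```python
import math

def eye_view_directions(eye_separation, convergence_distance, model="convergent"):
    """Return unit view directions (x, y, z) for the left and right eyes.

    Assumed layout (not from the source): the eyes sit at
    x = -eye_separation / 2 and x = +eye_separation / 2, looking toward -z.
    """
    if model == "parallel":
        # Parallel model: both optical axes point straight ahead.
        return (0.0, 0.0, -1.0), (0.0, 0.0, -1.0)
    if model != "convergent":
        raise ValueError("model must be 'convergent' or 'parallel'")
    half = eye_separation / 2.0
    # Convergent model: both axes pass through (0, 0, -convergence_distance).
    norm = math.hypot(half, convergence_distance)
    left = (half / norm, 0.0, -convergence_distance / norm)
    right = (-half / norm, 0.0, -convergence_distance / norm)
    return left, right

left_dir, right_dir = eye_view_directions(0.065, 1.0)
```

In the convergent case the left eye's direction has a positive x component and the right eye's a negative one, so the two axes cross in front of the viewer; in the parallel case they never meet.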

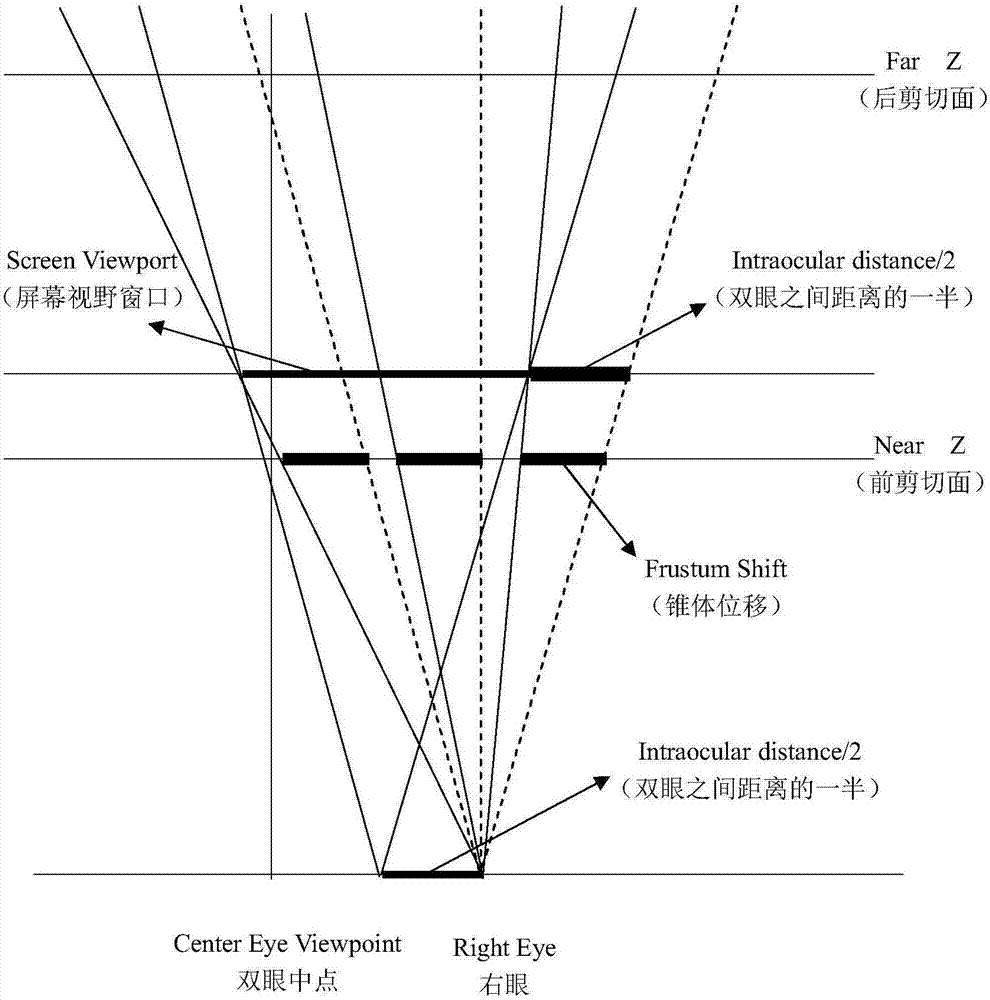

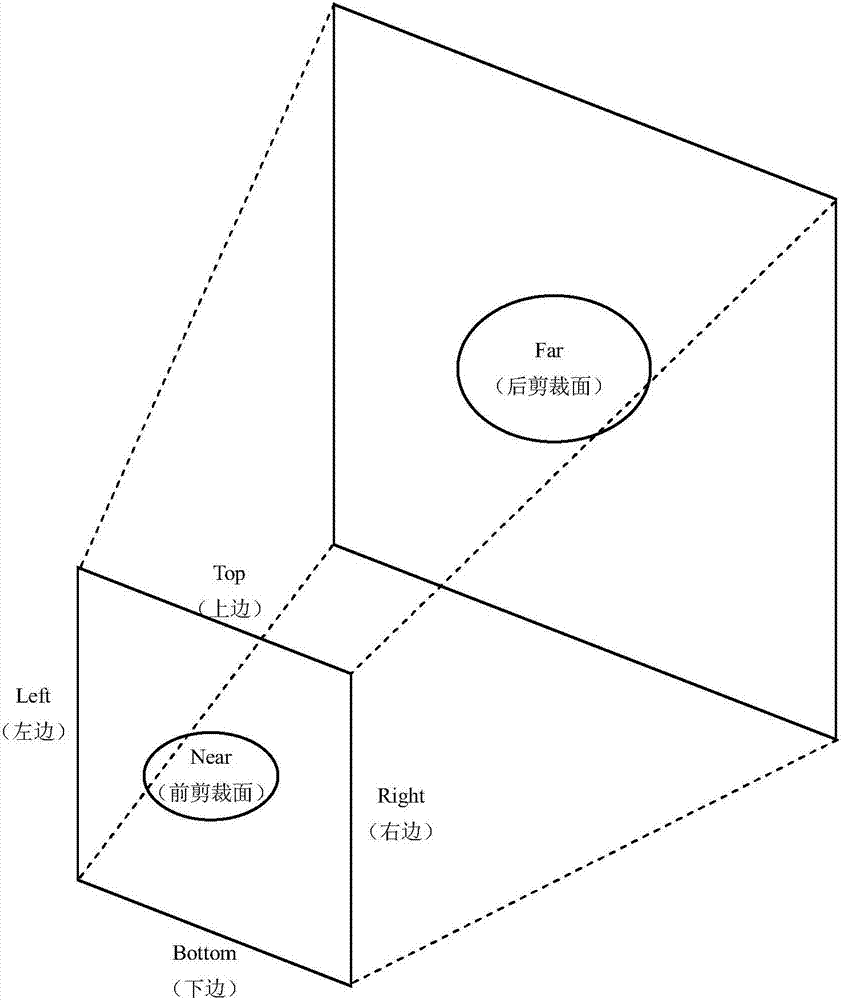

[0061] Figure 1 is a schematic diagram of convergent projection, and Figure 2 shows the truncated pyramid (frustum) used for convergent projection. Here, Top, Bottom, Left, and Right are the distances from the top, bottom, left, and right edges of the front clipping plane of the frustum shared by the left and right eyes to its center; Near is the distance from the front clipping plane to the viewpoint, and Far is the distance from the rear clipping plane to the viewpoint.
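As an illustration of how the six frustum parameters fit together, the sketch below builds a perspective projection matrix from them. This is an assumption, not the patent's stated computation: it uses the OpenGL `glFrustum` matrix convention, treats Top, Bottom, Left, and Right as positive distances from the center of the front clipping plane, and the function name `frustum_matrix` is hypothetical.

```python
def frustum_matrix(top, bottom, left, right, near, far):
    """Build a 4x4 perspective matrix (row-major nested lists).

    top, bottom, left, right: positive distances from the center of the
    front clipping plane to its edges (as defined in the text).
    near, far: distances from the viewpoint to the front and rear planes.
    Assumption: OpenGL glFrustum convention, camera looking toward -z.
    """
    if not 0 < near < far:
        raise ValueError("require 0 < near < far")
    # Convert edge distances to signed coordinates on the near plane.
    l, r = -left, right
    b, t = -bottom, top
    return [
        [2 * near / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2 * near / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]

# Symmetric frustum: equal edge distances, so the off-axis terms vanish.
m = frustum_matrix(top=1.0, bottom=1.0, left=1.0, right=1.0, near=1.0, far=3.0)
```

When Left and Right (or Top and Bottom) differ, the third-column terms become nonzero, which gives an off-axis (asymmetric) frustum.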

[0062] Step 2: Calcu...
