Method for matching image feature points in robot visual navigation and positioning

A robot vision and image feature point technology, applied in image analysis, image data processing, graphic image conversion, and related fields. It addresses problems such as missing height information, strong interference from similar small regions, and the resulting tendency toward mismatches, with the effect of expanding the screening range of valid feature points.

Embodiment Construction

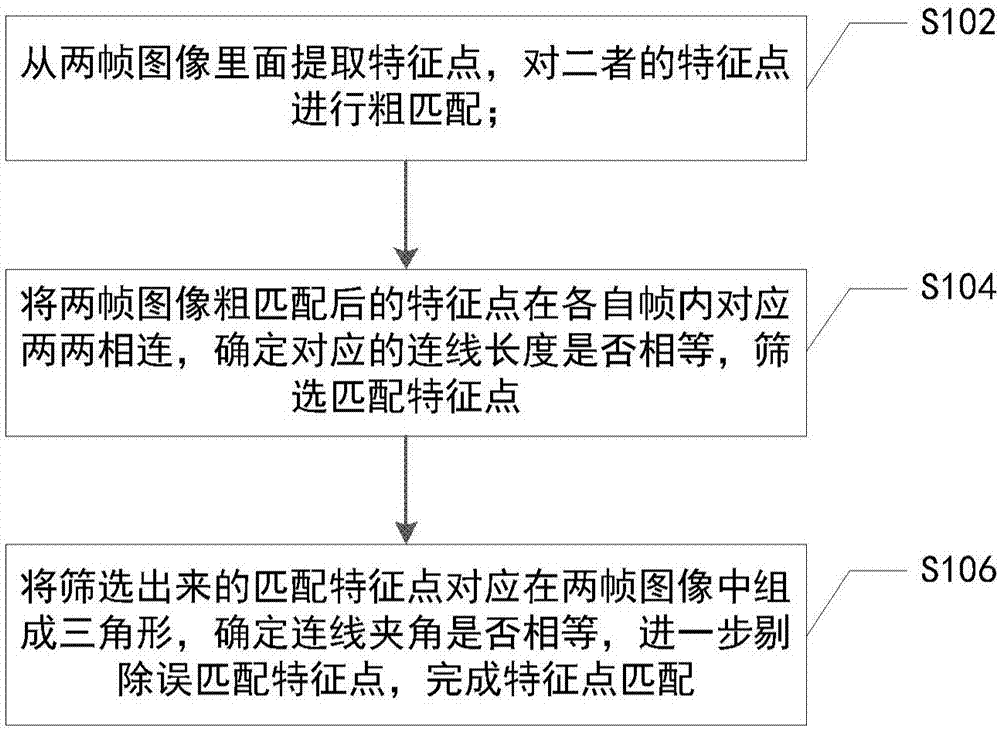

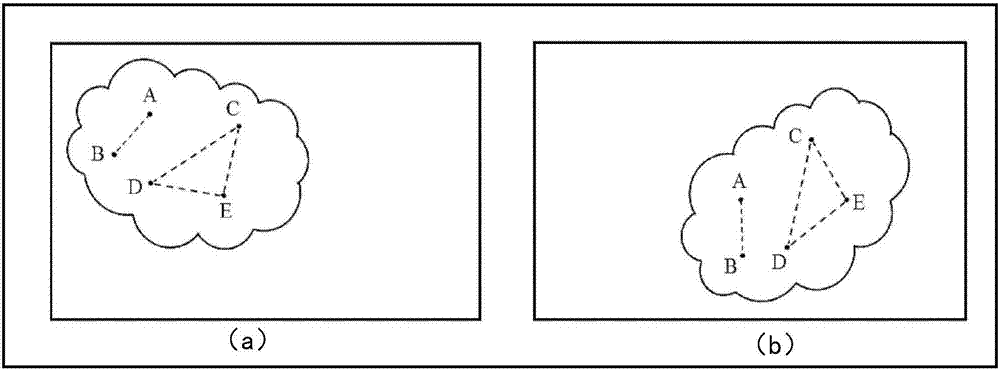

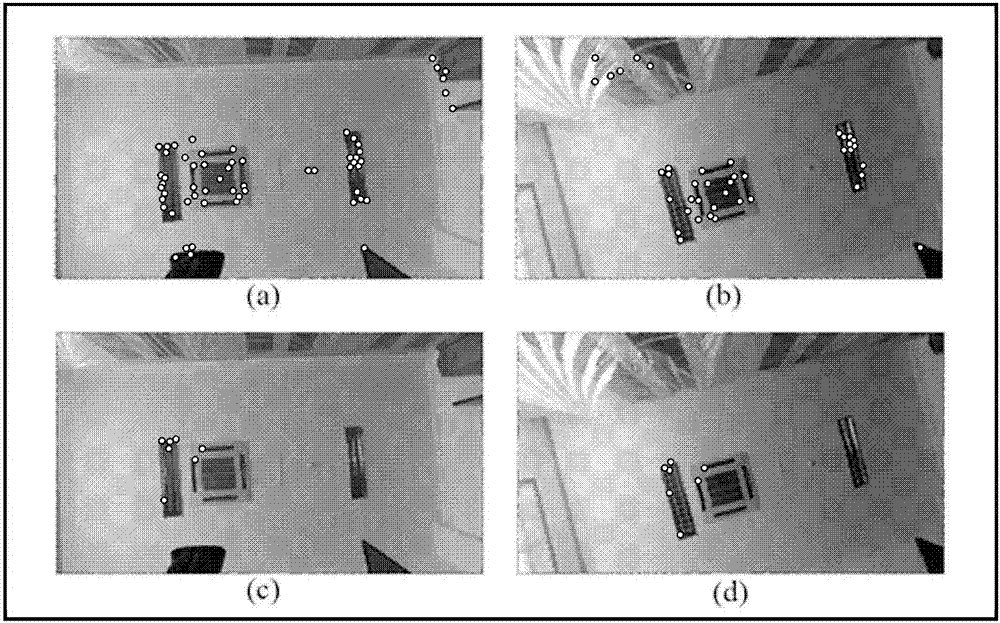

[0026] The invention provides a method for matching image feature points in robot visual navigation and positioning. It exploits the affine-transformation invariance of feature points captured in different frames: mismatched feature points are screened out according to whether the connecting-line lengths and included angles of feature pairs in the two frames are equal, so that valid feature points are matched accurately. Whether the line lengths and included angles are equal is decided by a threshold test, which broadens the screening range of valid feature points and also makes the method suitable for blurry or pixelated captures.
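The threshold-based screening described in [0026] can be illustrated with a minimal Python sketch. It is not the patented procedure, whose details are not given in this excerpt, but one possible reading under stated assumptions: the "connecting line" of a feature pair is taken to be the segment from a feature's position in the first frame to its matched position in the second frame, the reference length and angle are taken as the median over all candidate pairs, and the motion between frames is assumed to be close to a pure translation. The function name `filter_matches` and the tolerances `len_tol` and `ang_tol_deg` are hypothetical.

```python
import math
from statistics import median


def filter_matches(pts_a, pts_b, len_tol=3.0, ang_tol_deg=5.0):
    """Return indices of candidate matches that pass the length/angle test.

    pts_a[i] and pts_b[i] are the (x, y) positions of the i-th candidate
    match in the first and second frame (assumed: same coordinate system).
    A match is kept when the length and direction of its connecting line
    are within the given tolerances of the median over all candidates.
    """
    lengths, angles = [], []
    for (xa, ya), (xb, yb) in zip(pts_a, pts_b):
        dx, dy = xb - xa, yb - ya
        lengths.append(math.hypot(dx, dy))                # connecting-line length
        angles.append(math.degrees(math.atan2(dy, dx)))   # angle to the x-axis

    # Assumption: the majority of candidates are correct, so the median is a
    # usable reference. The plain median ignores angle wrap-around at +/-180
    # degrees, which is acceptable only for this illustration.
    ref_len = median(lengths)
    ref_ang = median(angles)

    keep = []
    for i, (length, angle) in enumerate(zip(lengths, angles)):
        # Smallest absolute difference between the two angles, in [0, 180].
        d_ang = abs((angle - ref_ang + 180.0) % 360.0 - 180.0)
        # "Equal" is decided by thresholds rather than exact equality.
        if abs(length - ref_len) <= len_tol and d_ang <= ang_tol_deg:
            keep.append(i)
    return keep


# Three consistent matches and one obvious mismatch (index 3).
frame1 = [(0, 0), (10, 0), (0, 10), (5, 5)]
frame2 = [(20, 1), (30, 1), (20, 11), (5, 40)]
good = filter_matches(frame1, frame2)
```

Replacing exact equality with tolerances is what allows slightly displaced features, such as those from blurry frames, to survive the screening. Looser tolerances keep more candidates at the cost of admitting more mismatches.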

[0027] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be further described in detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

[0028] The working principle ...
