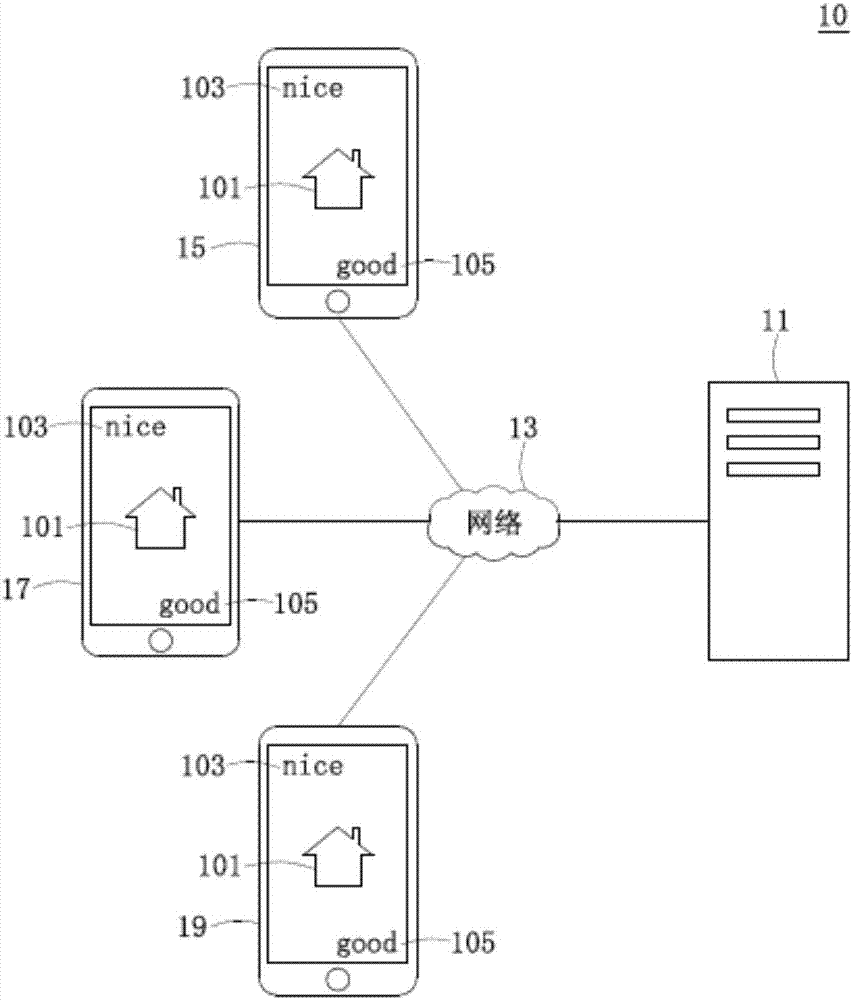

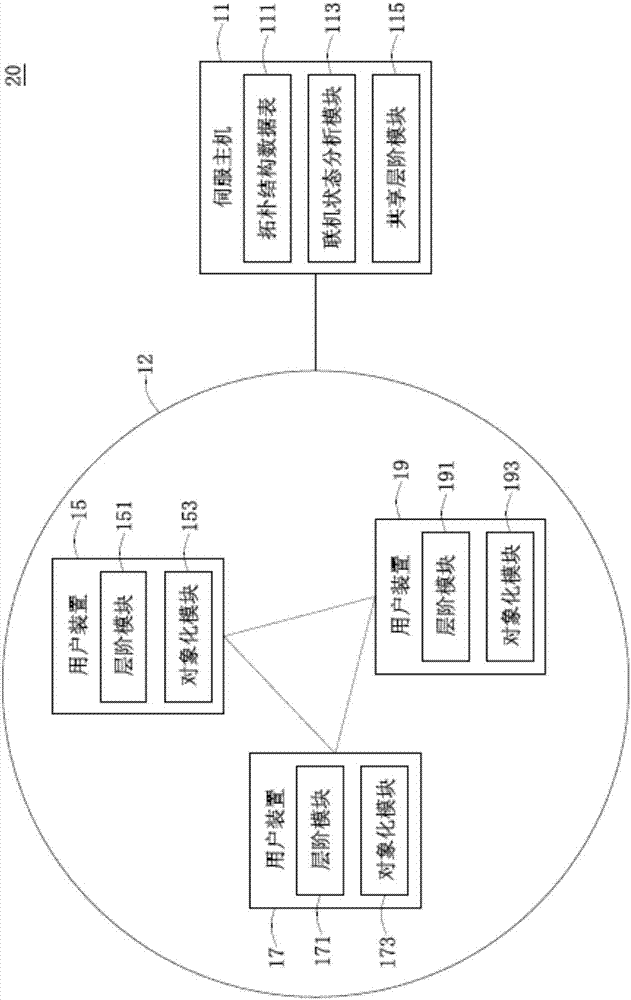

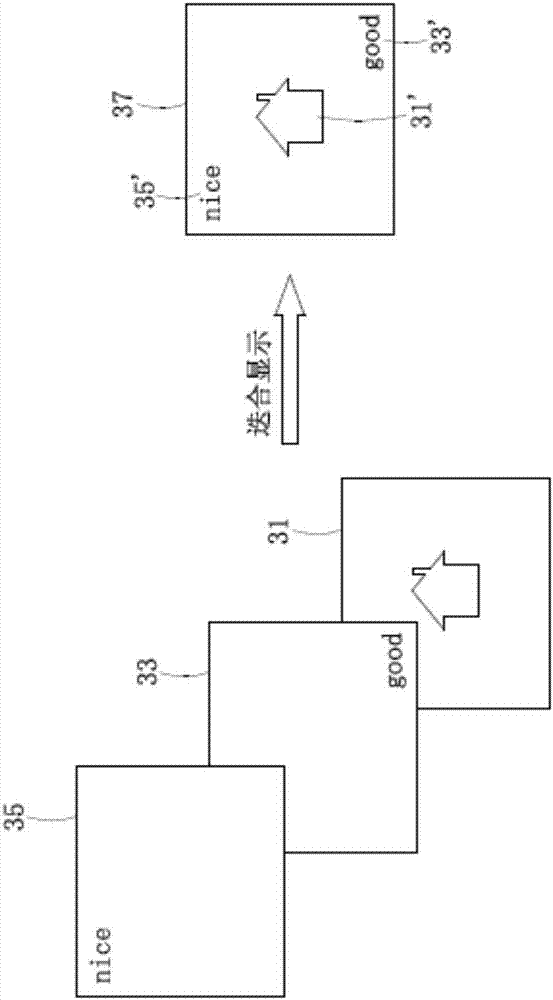

Remote interaction method and system

A remote interaction and program technology, applied in transmission systems, input/output processes for data processing, instruments, and similar fields. It addresses the problems of heavy network bandwidth use, large image data, and rigid interaction, and reduces the bandwidth load.


Embodiment Construction

[0028] Various exemplary embodiments will be described more fully hereinafter with reference to the accompanying drawings, in which some exemplary embodiments are shown. However, inventive concepts may be embodied in many different forms and should not be construed as limited to the illustrative embodiments set forth herein. Rather, these exemplary embodiments are provided so that this disclosure will be thorough and complete, and will fully convey the scope of the inventive concept to those skilled in the art. In the drawings, the size and relative sizes of layers and regions may be exaggerated for clarity. Like numbers indicate like components throughout.

[0029] It should be understood that although the terms first, second, third, etc. may be used herein to describe various components or signals etc., these components or signals should not be limited by these terms. These terms are used to distinguish one component from another component, or one signal from another signa...

