Server based on transparent service platform data access and cache optimization method thereof

A service platform and data access technology, applied in the field of computer networks, that addresses problems such as the lack of research on user access behavior in transparent computing and the limited effectiveness of existing cache strategies.


Examples

Embodiment 1

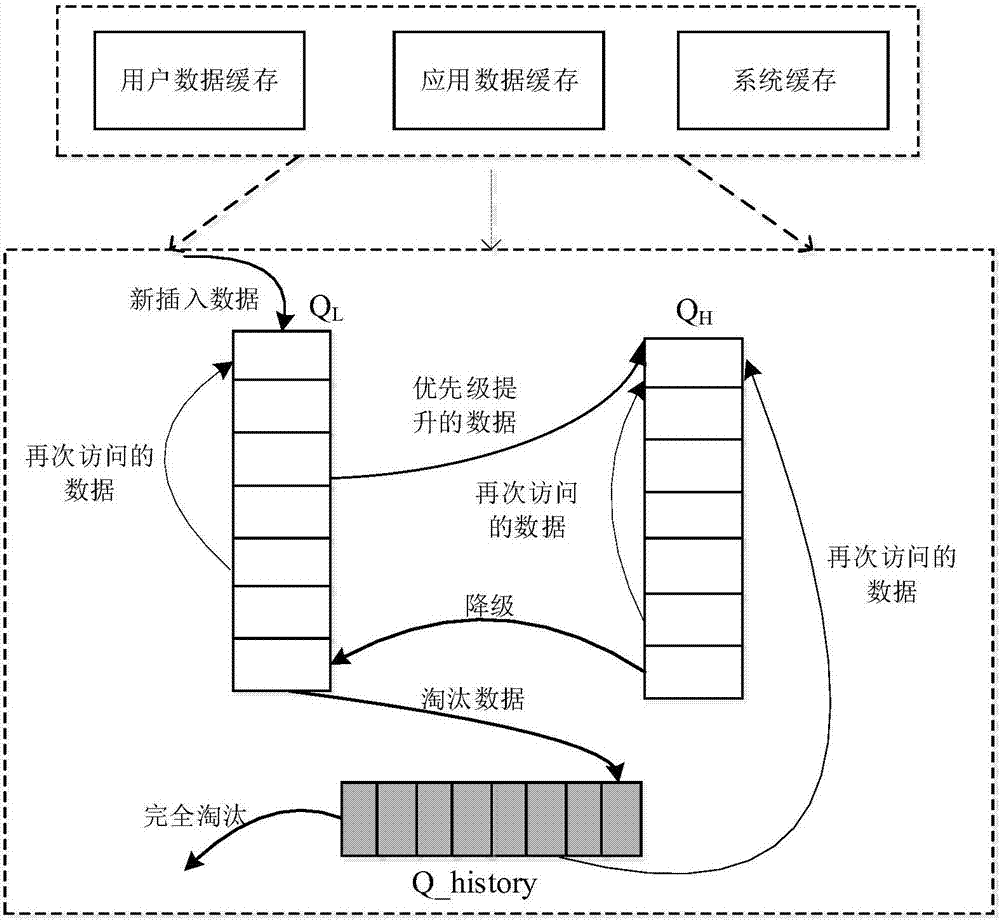

[0063] This embodiment discloses a server-side cache optimization method based on transparent service platform data access.

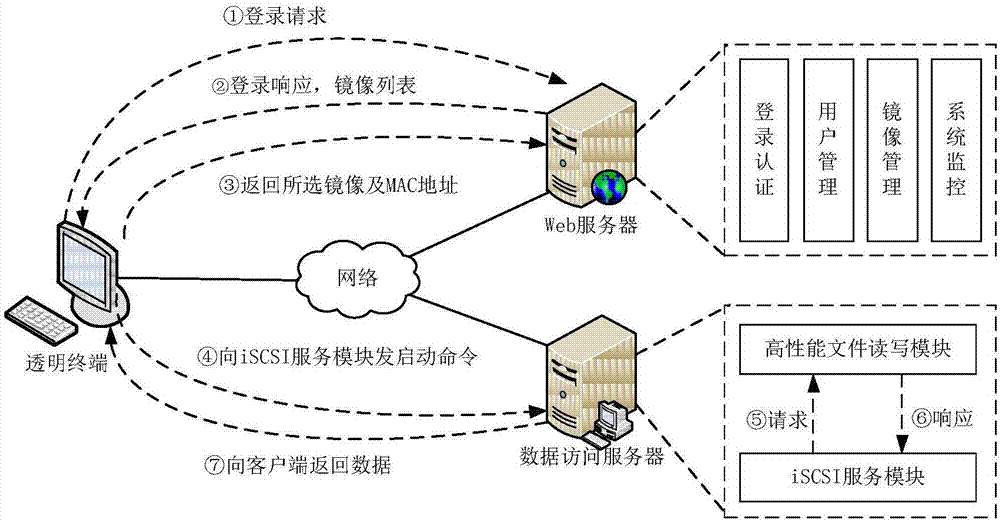

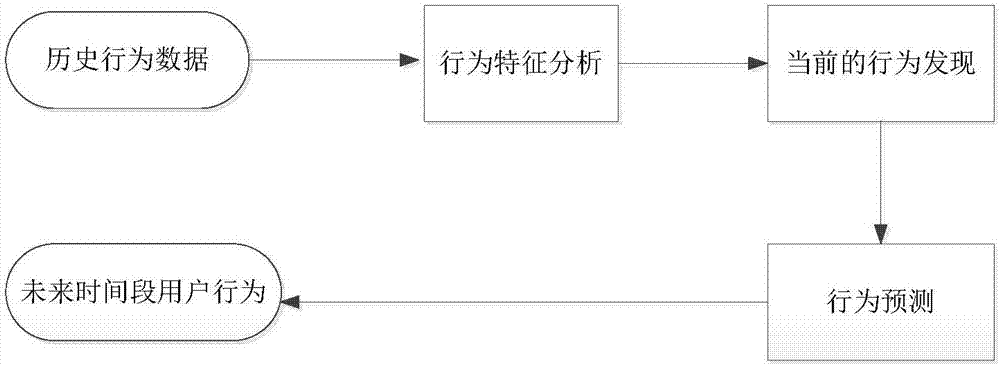

[0064] In the transparent service platform, a diskless transparent terminal accesses data stored on the server through virtual disk technology, which enables remote loading and operation of the terminal operating system. Figure 1 shows the interaction process between the service management platform and the transparent client. The request packets sent by the client to the server contain the raw user behavior data, from which the feature values representing user behavior are extracted: TYPE, IP, OFFSET, DATA LENGTH, TIME. TYPE is the operation code of the data packet; it describes requests such as establishing a session, disconnecting a session, reading, and writing, and there are 6 types of operation codes in total. IP is the IP address of the client sending the data packet and is used to identify the client. OFFSET d...
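As an illustration of the feature extraction described above, the following is a minimal Python sketch, not the patent's implementation. The `RequestFeatures` class, the `extract_features` function, and the dictionary-shaped packet are all hypothetical; the source names only the five fields and does not specify the packet layout or the numeric values of the operation codes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestFeatures:
    """One user-behavior record (hypothetical container, not from the source)."""
    op_type: int      # TYPE: operation code (session setup/teardown, read, write, ...)
    ip: str           # IP: identifies the client that sent the packet
    offset: int       # OFFSET: its description is truncated in the source
    data_length: int  # DATA LENGTH
    time: float       # TIME: request timestamp (unit assumed to be seconds)


def extract_features(packet: dict) -> RequestFeatures:
    """Pull the five feature values out of an already-decoded packet.

    Assumes the packet has been parsed into a dict keyed by the field
    names used in the text; real packets would need protocol decoding first.
    """
    return RequestFeatures(
        op_type=int(packet["TYPE"]),
        ip=str(packet["IP"]),
        offset=int(packet["OFFSET"]),
        data_length=int(packet["DATA LENGTH"]),
        time=float(packet["TIME"]),
    )
```

Keeping the record immutable (`frozen=True`) makes it safe to use as a key or to collect in sets when grouping requests by client or by time interval.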

Embodiment 2

[0098] Corresponding to the foregoing method embodiments, this embodiment discloses a server for executing the foregoing methods.

[0099] Referring to Embodiment 1, the cache optimization method of the server based on transparent service platform data access performed by the server in this embodiment includes:

[0100] Collect frequency statistics on how a large number of end users access the data blocks of the transparent computing server in different time intervals, and use information entropy to quantify this data block access behavior in order to determine whether the current user access behavior is concentrated;

[0101] When the user access behavior is judged to be concentrated, screen out the data blocks that are currently accessed with high frequency, and use the exponential smoothing prediction algorithm to predict the access frequency distribution of the screened data blocks over a future period;

[0102] Optimize the cache on the server side according to th...
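The first two steps above can be sketched roughly as follows. This is an illustrative Python sketch under stated assumptions, not the patent's algorithm: the source does not give the entropy threshold, the screening rule, or the order of the exponential smoothing, so the normalized-entropy cutoff `threshold`, the top-`k` screening, and the use of simple (single) exponential smoothing with factor `alpha` are all assumptions, and every function name is hypothetical.

```python
import math
from collections import Counter


def access_entropy(block_ids):
    """Shannon entropy (in bits) of the block access distribution in one interval."""
    counts = Counter(block_ids)
    total = sum(counts.values())
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def is_concentrated(block_ids, threshold=0.8):
    """Judge whether access is centralized, i.e. concentrated on few blocks.

    Assumption: entropy is normalized by its maximum, log2(number of distinct
    blocks), and compared with a hypothetical threshold.
    """
    distinct = len(set(block_ids))
    if distinct <= 1:
        return True  # zero or one distinct block: trivially concentrated
    return access_entropy(block_ids) / math.log2(distinct) < threshold


def hot_blocks(block_ids, k):
    """Screen out the k most frequently accessed blocks (assumed screening rule)."""
    return [block for block, _ in Counter(block_ids).most_common(k)]


def smooth_forecast(history, alpha=0.5):
    """Simple exponential smoothing: forecast the next interval's access count.

    history holds the per-interval access counts of one block, oldest first.
    """
    if not history:
        raise ValueError("history must contain at least one interval")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level
```

For example, accesses spread evenly over two blocks, `[1, 1, 2, 2]`, give an entropy of exactly 1 bit, the maximum for two blocks, so `is_concentrated` returns `False`; when nine of ten accesses hit the same block the normalized entropy falls well below the assumed threshold. The forecasts for the screened blocks could then be ranked to decide which blocks to keep in the server-side cache.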
