Action recognition method based on distances between human skeleton joints

An action recognition technology based on skeleton joint distances, applied in the fields of character and pattern recognition, instruments, and computer components. It addresses two problems: existing approaches do not meet the needs of interactive action recognition, and recognition techniques are still immature. Its effects are less randomness and less interference from subjective feature selection, objective and credible recognition results, and distinct action features.



Detailed Description of the Embodiments

[0033] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments. The following embodiments are illustrative only. They are not restrictive and do not limit the scope of protection of the present invention.

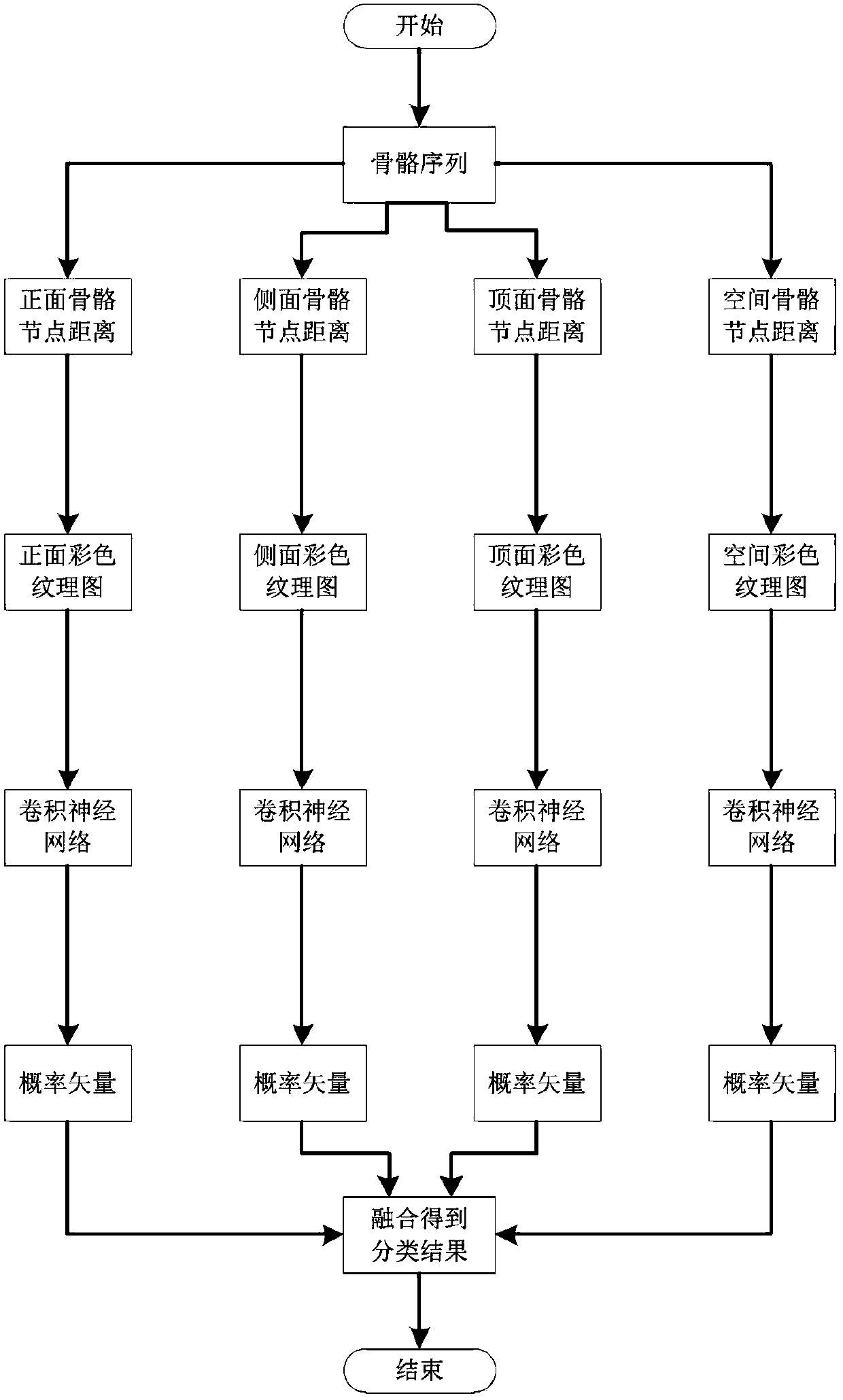

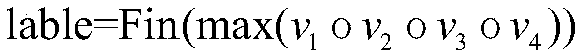

[0034] An action recognition method based on distances between human skeleton joints comprises the following steps:

[0035] 1) Mapping the skeleton sequence to an image

[0036] Suppose there is a set of skeleton sequences of human actions to be recognized. The number of frames $t_x$ in the skeleton sequence of each action is usually not fixed.

[0037] First, bilinear interpolation is used to resample the skeleton sequences of all actions to a fixed number of frames $t$.
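The frame-normalization step can be illustrated with a minimal sketch. It assumes each sequence is stored as a NumPy array of shape $(t_x, m, 3)$, with one row per frame, one entry per joint, and x, y, z per joint. Only the time axis is resized, so bilinear resizing of the $t_x \times 3m$ "image" reduces to linear interpolation along time. The function name `resample_frames` and the end-point-aligned sampling grid are assumptions and are not taken from the patent.

```python
import numpy as np


def resample_frames(seq: np.ndarray, t: int) -> np.ndarray:
    """Resample a skeleton sequence of shape (t_x, m, 3) to (t, m, 3).

    Hypothetical helper: linear interpolation along the time axis,
    with the first and last frames kept at the ends (end-point aligned).
    """
    t_x = seq.shape[0]
    if t_x == 1:
        # A single frame cannot be interpolated; repeat it t times.
        return np.repeat(seq, t, axis=0)
    flat = seq.reshape(t_x, -1)               # (t_x, m*3): one column per coordinate
    src = np.arange(t_x, dtype=float)         # original frame positions
    dst = np.linspace(0.0, t_x - 1, t)        # t evenly spaced target positions
    cols = [np.interp(dst, src, flat[:, k]) for k in range(flat.shape[1])]
    out = np.stack(cols, axis=1)              # (t, m*3)
    return out.reshape((t,) + seq.shape[1:])  # back to (t, m, 3)
```

For example, when a 3-frame sequence whose coordinates take the values 0, 1, 2 is resampled to $t = 5$ frames, each coordinate becomes 0, 0.5, 1, 1.5, 2.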

[0038] Second, assume that m human skeleton joints are extracted from the skeleton map $V_{xyz}$ of each frame, and use

[0039] to represent the three-dimensional position of the j-th skeleton joint ...
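The rest of step 1 is truncated in this excerpt, so the patented mapping from joint positions to an image is not shown here. The method's title emphasizes distances between joints. The sketch below is a hypothetical illustration of one such mapping and not the patented procedure. Every row of the image corresponds to a frame. Each column holds the Euclidean distance between one unordered pair of joints. The whole image is min-max scaled to 0-255. The function name `joint_distance_image`, the pairwise-distance layout, and the global min-max scaling are all assumptions.

```python
import numpy as np


def joint_distance_image(seq: np.ndarray) -> np.ndarray:
    """Map a (t, m, 3) skeleton sequence to a (t, m*(m-1)//2) uint8 image.

    Hypothetical sketch: row i holds the Euclidean distances between every
    unordered pair of joints in frame i, scaled to 0..255 over the whole image.
    """
    t, m, _ = seq.shape
    diff = seq[:, :, None, :] - seq[:, None, :, :]  # (t, m, m, 3) joint differences
    dist = np.linalg.norm(diff, axis=-1)             # (t, m, m) pairwise distances
    rows, cols = np.triu_indices(m, k=1)             # each joint pair (a < b) once
    feats = dist[:, rows, cols]                      # (t, m*(m-1)//2)
    lo, hi = feats.min(), feats.max()
    # Global min-max scaling; a constant image maps to all zeros.
    scaled = (feats - lo) / (hi - lo) if hi > lo else np.zeros_like(feats)
    return np.round(scaled * 255).astype(np.uint8)
```

Using distances instead of raw coordinates makes each row invariant to translating or rotating the whole skeleton. That is one plausible reason a distance-based representation suits action recognition, though the excerpt does not state the patent's own rationale.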
