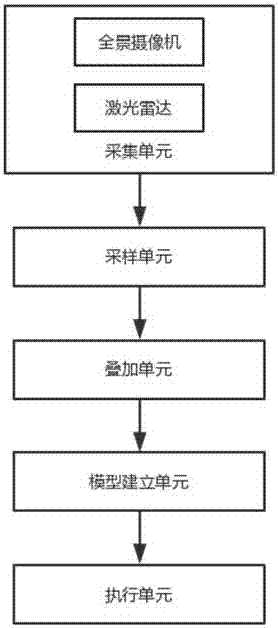

Target detection method and target detection system based on the fusion of visual and radar spatio-temporal information

A target detection and radar technology, applicable to radio-wave measurement systems, measuring devices, and radio-wave reflection/re-radiation, that addresses problems such as low recognition accuracy by providing more comprehensive data and improved accuracy.

Specific Embodiment

[0071] Using vision and radar sensors on an unmanned vehicle, a dataset of RGB images and their corresponding depth maps is collected. A color camera mounted on the vehicle captures the RGB images, a Velodyne HDL-64E lidar captures 3D point cloud data, and the relative positions of the two sensors have been calibrated.
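The paragraph above does not say how the depth maps are derived from the point clouds. One common approach, shown here only as a sketch under that assumption, is to transform each lidar point into the camera frame using the calibration result and project it through a pinhole camera model, keeping the nearest return per pixel. The function name `project_lidar_to_depth` and the matrices `R`, `t` and `K` are illustrative placeholders, not values or names from the source.

```python
import math

def project_lidar_to_depth(points, R, t, K, width, height):
    """Project lidar points (x, y, z) into a sparse depth map (hypothetical helper).

    R (3x3 rotation) and t (3-vector) are assumed extrinsics mapping lidar
    coordinates to camera coordinates; K is an assumed 3x3 pinhole intrinsic
    matrix. Pixels that receive no lidar return stay at 0.0.
    """
    depth = [[0.0] * width for _ in range(height)]
    for p in points:
        # Rigid transform: lidar frame -> camera frame.
        xc = sum(R[0][j] * p[j] for j in range(3)) + t[0]
        yc = sum(R[1][j] * p[j] for j in range(3)) + t[1]
        zc = sum(R[2][j] * p[j] for j in range(3)) + t[2]
        if zc <= 0:
            continue  # point lies behind the camera
        # Pinhole projection onto the image plane.
        u = K[0][0] * xc / zc + K[0][1] * yc / zc + K[0][2]
        v = K[1][1] * yc / zc + K[1][2]
        col, row = int(math.floor(u)), int(math.floor(v))
        if 0 <= col < width and 0 <= row < height:
            # Keep the nearest return when several points hit one pixel.
            if depth[row][col] == 0.0 or zc < depth[row][col]:
                depth[row][col] = zc
    return depth

# Toy usage: identity extrinsics and a tiny 4x4 image (assumed values).
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0, 0, 0]
K = [[10, 0, 2], [0, 10, 2], [0, 0, 1]]
points = [(0, 0, 5), (0, 0, 3), (0, 0, -1), (1, 0, 1), (1, 1, 10)]
depth = project_lidar_to_depth(points, R, t, K, width=4, height=4)
```

Here the two points on the optical axis land on the same pixel and the nearer depth (3) is kept, the point behind the camera is discarded, and one point falls outside the image. Keeping the minimum depth per pixel is a simple occlusion heuristic; a real lidar-to-camera extrinsic would replace the identity rotation, and denser depth maps usually need an interpolation or completion step that this sketch omits.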

[0072] A total of 7,481 RGB images and their corresponding radar 3D point clouds were collected. Using the above method, 6,843 labeled RGB-LIDAR spatio-temporal fusion images were produced (1,750 cars, 1,750 pedestrians, 1,643 trucks, and 1,700 bicycles). Of these, 5,475 samples were used for training and 1,368 for testing, to evaluate multi-task classification based on the fusion of visual and radar spatio-temporal information.
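The reported counts are internally consistent: the four class counts sum to 6,843, and 5,475 + 1,368 = 6,843, which is roughly an 80/20 train/test split. The sketch below reproduces that arithmetic and one way to make such a split. The source does not state whether its split was random or stratified by class, so the seeded random shuffle and the helper name `split_indices` are assumptions.

```python
import random

def split_indices(n_total, n_train, seed=0):
    """Randomly partition sample indices 0..n_total-1 into train and test lists."""
    idx = list(range(n_total))
    random.Random(seed).shuffle(idx)  # seeded so the split is reproducible
    return idx[:n_train], idx[n_train:]

# Class counts reported in [0072].
class_counts = {"car": 1750, "pedestrian": 1750, "truck": 1643, "bicycle": 1700}
n_total = sum(class_counts.values())  # total fused samples

train, test = split_indices(n_total, 5475)
print(n_total, len(train), len(test), round(len(train) / n_total, 3))
# prints: 6843 5475 1368 0.8
```

A plain random split can leave the classes slightly unbalanced between the two sets; splitting each class separately at the same ratio (a stratified split) is a common alternative when per-class test counts matter.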

[0073] The convolutional neural network shown in Figure 9 is used as the classification model. The model has six convolutional layers and three fully connected ...
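The architecture details of Figure 9 are truncated here, so the following is only a hypothetical shape trace of a classifier with six convolutional and three fully connected layers. Apart from the layer counts and the four output classes listed in [0072], every value is an assumption: the 4-channel input (RGB plus one depth channel), the 64 x 64 input size, the 3 x 3 kernels with padding 1, the channel widths, the fully connected widths, and the 2 x 2 max pooling after every second convolution. The names `conv_out`, `CONV_LAYERS`, `FC_LAYERS` and `trace_shapes` are illustrative.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

# Hypothetical spec per conv layer: (out_channels, kernel, padding, pool_after).
CONV_LAYERS = [
    (32, 3, 1, False), (32, 3, 1, True),
    (64, 3, 1, False), (64, 3, 1, True),
    (128, 3, 1, False), (128, 3, 1, True),
]
# Hypothetical fully connected widths; the last layer scores the 4 classes
# (car, pedestrian, truck, bicycle).
FC_LAYERS = [256, 128, 4]

def trace_shapes(in_channels=4, size=64):
    """Return the tensor shape after each layer for a square input."""
    shapes = [(in_channels, size, size)]
    channels = in_channels
    for out_channels, kernel, padding, pool_after in CONV_LAYERS:
        size = conv_out(size, kernel, padding=padding)
        if pool_after:
            size = conv_out(size, 2, stride=2)  # 2x2 max pooling halves the size
        channels = out_channels
        shapes.append((channels, size, size))
    shapes.append((channels * size * size,))  # flattened input to the first FC layer
    for units in FC_LAYERS:
        shapes.append((units,))
    return shapes

for shape in trace_shapes():
    print(shape)
```

Tracing shapes this way is a quick check that the flattened feature size matches the input dimension of the first fully connected layer before implementing the network in a deep learning framework.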
