Method for improving video compression coding efficiency based on deep learning

A method relating to video compression coding efficiency, applied in the field of multimedia video coding. Its stated effects are improved coding efficiency and a high degree of innovation.

Embodiment Construction

[0050] The present invention will be described in detail below in combination with specific embodiments.

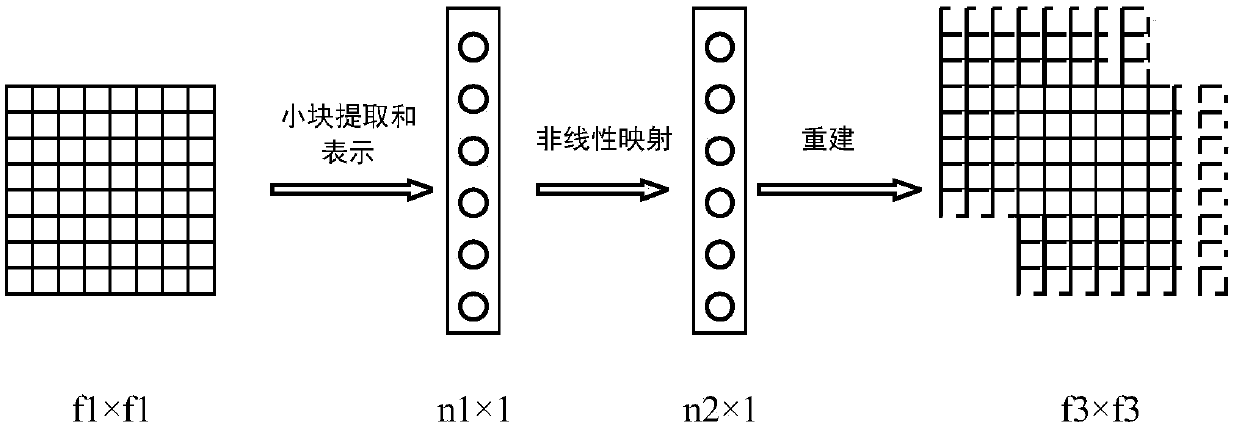

[0051] As shown in Figure 1, a method for improving video compression coding efficiency based on deep learning is carried out in the following steps:

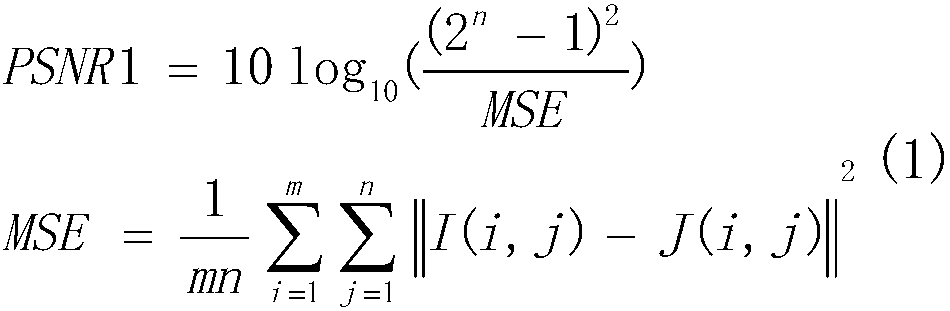

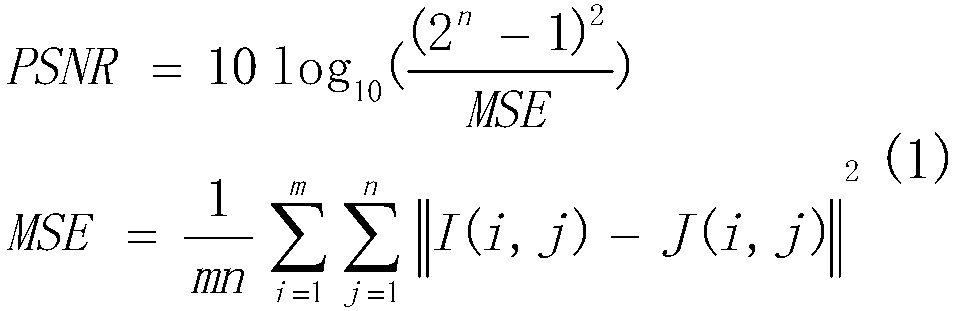

[0052] Step 1. Taking the foreman and flowers video sequences as examples, obtain the peak signal-to-noise ratio PSNR1 between the real picture and the picture produced by the original inter-frame prediction (the most basic motion estimation and motion compensation). PSNR1 is obtained as follows:

[0053] a. Block-based motion estimation:

[0054] Motion estimation refers to a set of techniques for extracting motion information from video sequences. The main research question is how to obtain adequate motion vectors quickly and effectively. Specifically, for a block in the previous frame of the foreman video sequence (frame i, denoted as im_src) in ...
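The paragraph above is cut off before it specifies the matching procedure, so the following is only a minimal sketch of how Step 1 might be carried out, not the patent's own implementation. It assumes exhaustive-search block matching with a sum-of-absolute-differences (SAD) criterion, 8x8 blocks, a search range of ±4 pixels, grayscale frames whose dimensions are multiples of the block size, and prediction of the following frame from the previous one. The name `im_src` comes from the text; `im_cur`, `block`, `search`, and all function names are hypothetical.

```python
import numpy as np


def psnr(reference, predicted, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized pictures."""
    ref = np.asarray(reference, dtype=np.float64)
    pred = np.asarray(predicted, dtype=np.float64)
    mse = np.mean((ref - pred) ** 2)
    if mse == 0:
        return float("inf")  # identical pictures
    return 10.0 * np.log10(peak ** 2 / mse)


def block_motion_estimation(im_src, im_cur, block=8, search=4):
    """Exhaustive-search block matching (assumed criterion: SAD).

    For each block of im_cur, find the displacement (dy, dx) within
    +/- `search` pixels whose block in im_src has the smallest SAD.
    Returns an integer array of shape (rows, cols, 2).
    """
    src = np.asarray(im_src, dtype=np.float64)
    cur = np.asarray(im_cur, dtype=np.float64)
    h, w = cur.shape
    rows, cols = h // block, w // block
    vectors = np.zeros((rows, cols, 2), dtype=np.int64)
    for r in range(rows):
        for c in range(cols):
            y, x = r * block, c * block
            target = cur[y:y + block, x:x + block]
            best = np.inf
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    yy, xx = y + dy, x + dx
                    # Skip candidates that fall outside the reference frame.
                    if yy < 0 or xx < 0 or yy + block > h or xx + block > w:
                        continue
                    cand = src[yy:yy + block, xx:xx + block]
                    sad = np.abs(cand - target).sum()
                    if sad < best:
                        best = sad
                        vectors[r, c] = (dy, dx)
    return vectors


def motion_compensate(im_src, vectors, block=8):
    """Build the predicted picture by copying the matched blocks of im_src."""
    src = np.asarray(im_src, dtype=np.float64)
    rows, cols = vectors.shape[:2]
    pred = np.zeros((rows * block, cols * block), dtype=np.float64)
    for r in range(rows):
        for c in range(cols):
            y, x = r * block, c * block
            dy, dx = vectors[r, c]
            pred[y:y + block, x:x + block] = src[y + dy:y + dy + block,
                                                 x + dx:x + dx + block]
    return pred


def psnr1(im_src, im_cur, block=8, search=4):
    """PSNR between the real picture and its motion-compensated prediction."""
    vectors = block_motion_estimation(im_src, im_cur, block, search)
    return psnr(im_cur, motion_compensate(im_src, vectors, block))
```

Under these assumptions, calling `psnr1(im_src, im_cur)` on two consecutive frames gives a value playing the role of PSNR1. Exhaustive search is chosen here only because it is the simplest form of block matching; faster search patterns would change the loop over `dy` and `dx` but not the overall structure.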
