A Human Pose Mapping Method Applied to Action Imitation of Humanoid Robot

A human body posture mapping technology for humanoid robots, applied in the field of human-computer interaction. It addresses the problem that existing approaches lack joint angle analysis, which limits how closely a humanoid robot can imitate human postures and actions. The stated effects are fast calculation speed, improved imitation similarity, and low computational overhead.


Embodiment

[0037] This embodiment discloses a human body posture mapping method applied to humanoid robot action imitation. A Kinect II serves as the depth camera, and a Nao robot serves as both the humanoid imitator and the target onto which the human body posture is mapped.

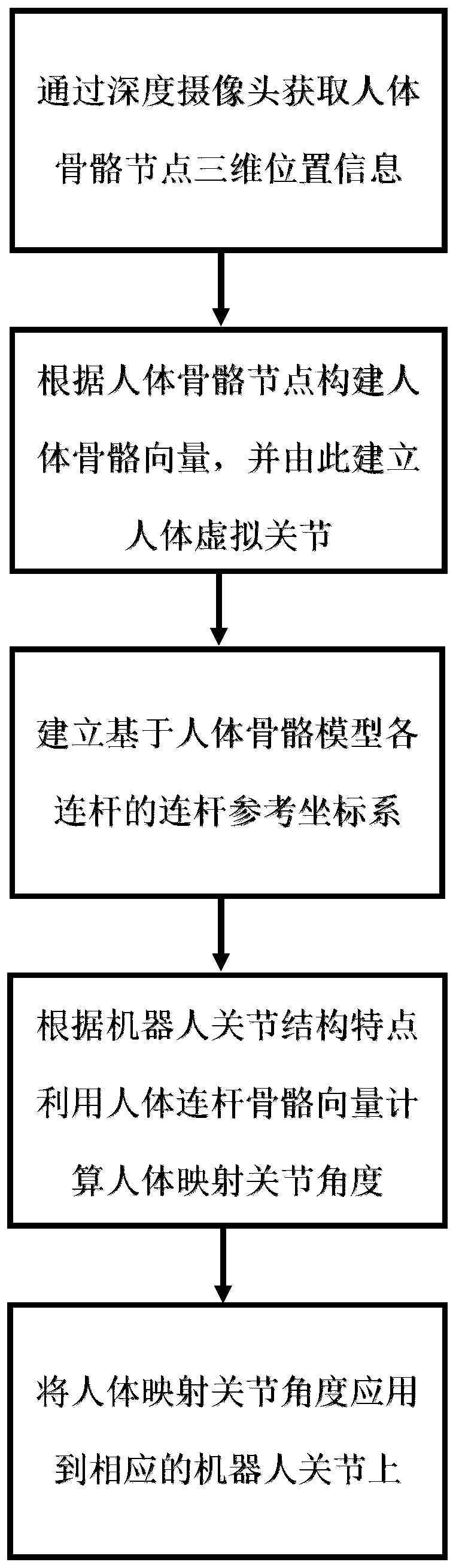

[0038] The human body posture mapping method applied to humanoid robot action imitation is implemented as shown in the flow chart of Figure 1, and includes the following steps:

[0039] S1. Obtain the three-dimensional position information of human skeleton nodes through the Kinect II depth camera;

[0040] S2. Construct human skeleton vectors from the human skeleton nodes, and establish human virtual joints from the skeleton vectors and the robot's joint structure, forming a human skeleton model;

[0041] S3. Establish a link reference coordinate system based on each link of the human skeleton model;

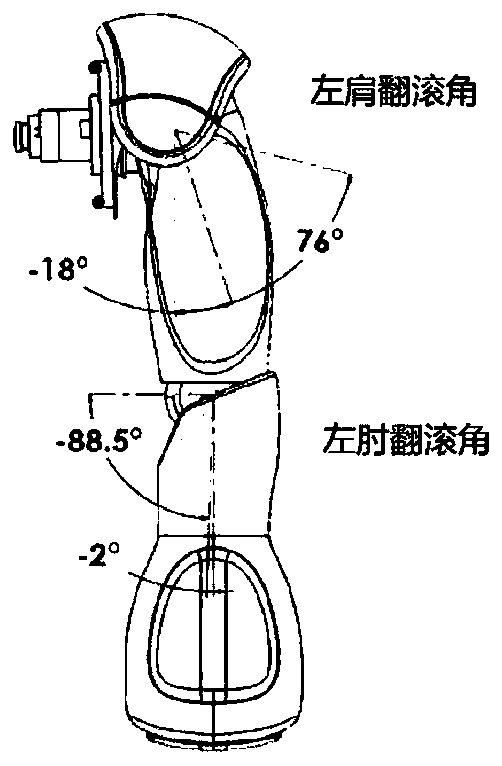

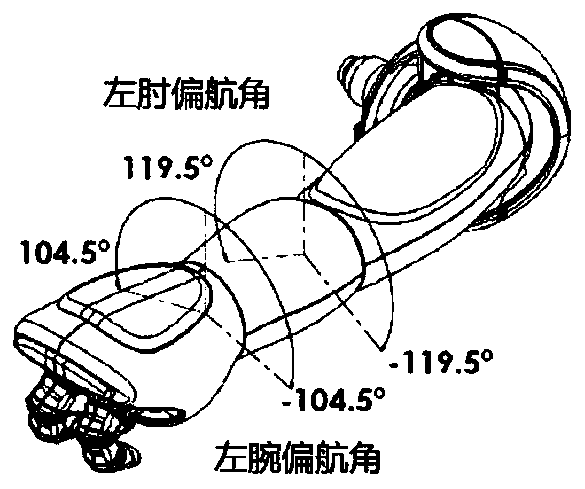

[0042] S4. Calculate the joint angles of the human body from the human skeleton vectors according to the structural ...
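The geometry behind steps S2 to S4 can be illustrated with a minimal sketch. It is not the patent's own formulation, which is truncated above; it only assumes generic vector geometry. The joint names, the coordinates, and the helper functions `bone_vector`, `link_frame`, and `angle_between` are all hypothetical, and the frame convention (x along the link, z normal to the plane of two adjacent links) is one plausible choice among several.

```python
import math

# Hypothetical 3D skeleton node positions in metres (camera frame).
# Names and values are illustrative only, not taken from the source.
joints = {
    "shoulder_right": (0.0, 0.0, 0.0),
    "elbow_right": (0.0, -0.3, 0.0),
    "wrist_right": (0.25, -0.3, 0.0),
}

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(x / n for x in v)

def bone_vector(nodes, parent, child):
    """S2 (sketch): skeleton vector pointing from parent node to child node."""
    return sub(nodes[child], nodes[parent])

def link_frame(link, reference):
    """S3 (sketch): right-handed orthonormal frame attached to a link.

    x runs along the link, z is normal to the plane spanned by the link
    and a non-parallel reference vector, and y completes the frame.
    """
    x = normalize(link)
    z = normalize(cross(x, reference))
    y = cross(z, x)
    return x, y, z

def angle_between(u, v):
    """S4 (sketch): angle in radians between two skeleton vectors."""
    c = dot(normalize(u), normalize(v))
    return math.acos(max(-1.0, min(1.0, c)))  # clamp against rounding error

upper_arm = bone_vector(joints, "shoulder_right", "elbow_right")
forearm = bone_vector(joints, "elbow_right", "wrist_right")
frame = link_frame(upper_arm, forearm)
elbow_bend = angle_between(upper_arm, forearm)
print(math.degrees(elbow_bend))
```

In this sketch the bend angle is zero when the arm is straight, because it measures the angle between consecutive skeleton vectors. Mapping such an angle onto a specific robot joint would additionally require that robot's joint conventions and limits, which are not covered here.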
