Dynamic multi-channel neural network SOC (system on a chip) and channel resource distribution method thereof

A neural network SoC that applies multi-channel technology, in the field of artificial intelligence equipment, intended to solve bandwidth problems.

Embodiment Construction

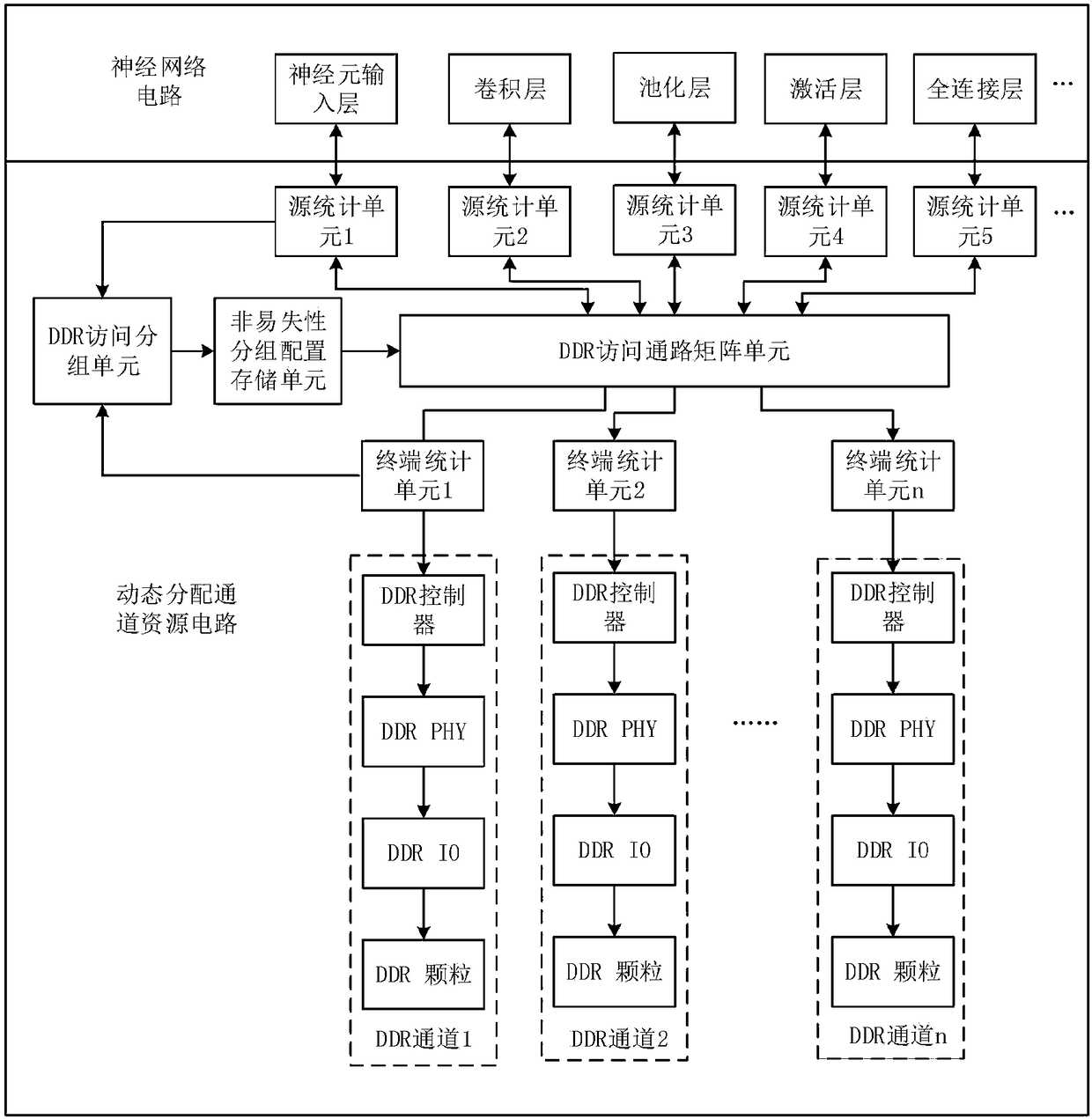

[0026] As shown in Figure 1, the neural network SOC chip of the present invention includes a neural network circuit and a circuit for dynamically allocating channel resources.

[0027] The neural network circuit includes a plurality of neural network layers. A neural network circuit typically has hundreds of layers, such as the neuron input layer, convolution layer, pooling layer, activation layer, and fully connected layer shown in the figure. Each neural network layer has its own data source path.

[0028] The circuit for dynamically allocating channel resources includes a plurality of source statistics units, a DDR access grouping unit, a grouping configuration storage unit, a DDR access path matrix unit, a plurality of terminal statistics units, and a plurality of DDR channels. The source statistics units are connected one-to-one to the data source paths of the respective neural network layers; the plurality of source ...
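The paragraph above is cut off before it explains how the grouping is computed, so the following Python sketch only illustrates how the listed units could fit together under stated assumptions. Each source statistics unit is modeled as a byte counter on one layer's data source path. The DDR access grouping unit is *assumed* to balance measured traffic with a greedy heaviest-first, least-loaded-channel rule (a hypothetical policy, not one stated in the source). Its result, a layer-to-channel map, stands in for the grouping configuration storage unit, and that map is expanded into a 0/1 source-by-channel routing matrix standing in for the DDR access path matrix unit. Per-channel traffic totals stand in for the terminal statistics units. All class and function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SourceStats:
    """Hypothetical source statistics unit: counts DDR bytes requested on one layer's data source path."""
    layer: str
    bytes_requested: int = 0

    def record(self, nbytes: int) -> None:
        self.bytes_requested += nbytes


def group_sources(stats: list[SourceStats], num_channels: int) -> dict[str, int]:
    """Hypothetical grouping policy (assumption): place the heaviest sources first,
    each onto the DDR channel with the smallest accumulated load so far.
    The returned layer -> channel map plays the role of the grouping configuration."""
    loads = [0] * num_channels
    config: dict[str, int] = {}
    for s in sorted(stats, key=lambda s: s.bytes_requested, reverse=True):
        ch = min(range(num_channels), key=lambda c: loads[c])  # ties go to the lowest index
        config[s.layer] = ch
        loads[ch] += s.bytes_requested
    return config


def build_path_matrix(layers: list[str], config: dict[str, int], num_channels: int) -> list[list[int]]:
    """Routing matrix: one row per source, one column per DDR channel; 1 marks the active path."""
    return [[1 if config[layer] == ch else 0 for ch in range(num_channels)] for layer in layers]


def terminal_stats(stats: list[SourceStats], config: dict[str, int], num_channels: int) -> list[int]:
    """Hypothetical terminal statistics: total bytes routed to each DDR channel."""
    totals = [0] * num_channels
    for s in stats:
        totals[config[s.layer]] += s.bytes_requested
    return totals


if __name__ == "__main__":
    # Illustrative traffic figures only; not taken from the patent.
    layers = ["input", "conv", "pool", "act", "fc"]
    stats = [SourceStats(name) for name in layers]
    for s, n in zip(stats, [10, 80, 20, 15, 60]):
        s.record(n)

    config = group_sources(stats, num_channels=2)
    print(config)
    print(build_path_matrix(layers, config, 2))
    print(terminal_stats(stats, config, 2))  # [95, 90]
```

The heaviest-first greedy rule is a standard load-balancing heuristic chosen here for brevity. The patent may use a different policy, and a hardware implementation would presumably re-run the grouping as the counters change rather than once.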
