Network training method and device, image processing method and device, storage medium and electronic equipment

A network training and image processing technology, applied in the field of image processing, which addresses problems such as the need for high-quality training data when learning image processing parameters.

Examples

Embodiment 1

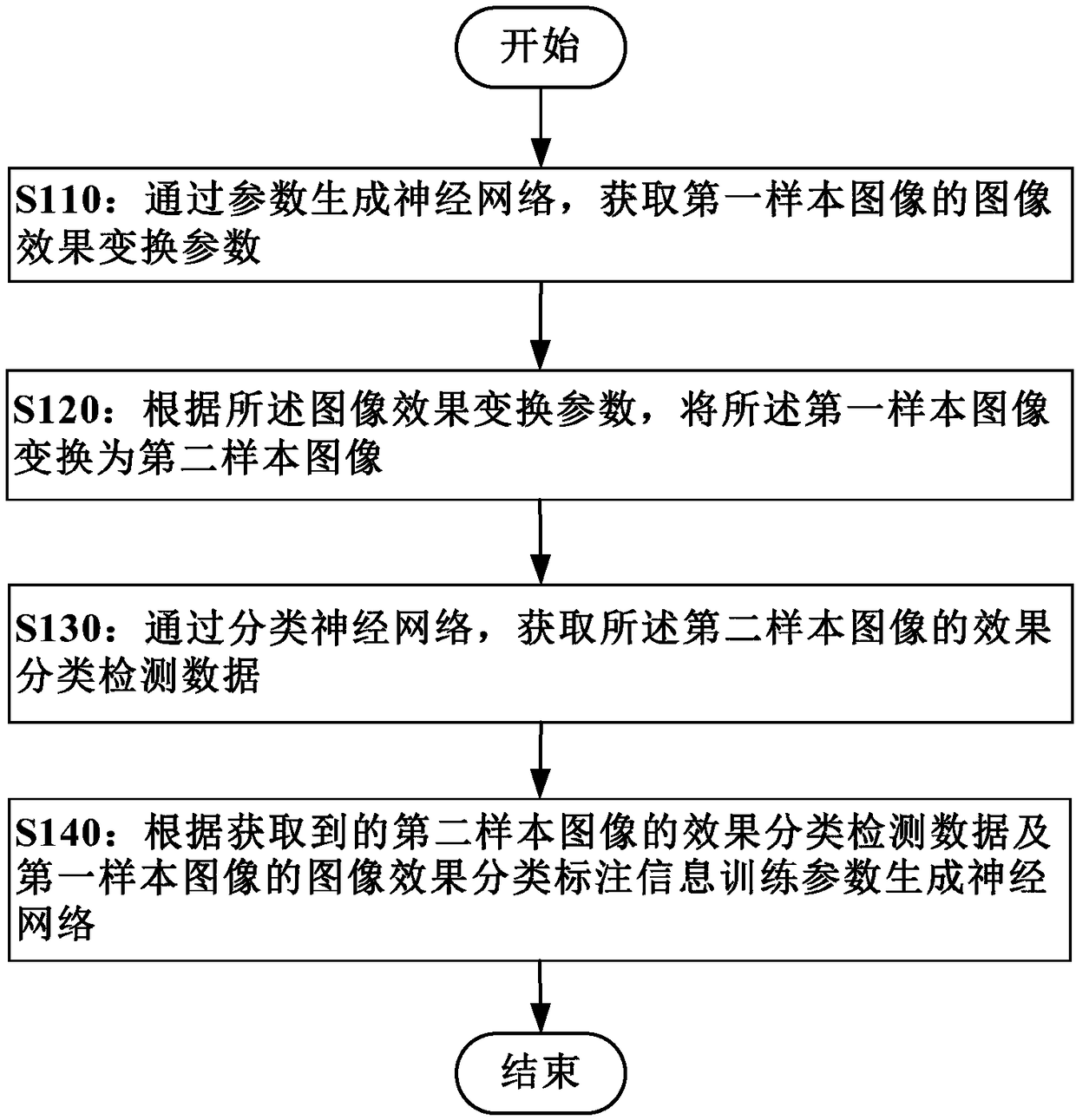

[0066] Figure 1 is a flow chart showing the training method of the image processing neural network according to Embodiment 1 of the present invention.

[0067] Embodiments of the present invention propose a weakly supervised learning method based on generative adversarial networks. The method exploits the relationship between image effect classification and image effect transformation to train an image processing neural network with strong image effect transformation capabilities. The image processing neural network learns the parameters for image effect transformation using only the labeled data of image effect classification as training supervision information. No fine-grained effect enhancement annotation of the sample images is required, so the image effect transformation parameters are learned under weak supervision. For example, in the task of image aesthetic enhancement processing, it is only necessary to pe...
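The weak-supervision idea in [0067] can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration and is not taken from the patent: each "image" is a single brightness value, the classification network is a logistic regression, and the only transformation parameter is a brightness offset. For brevity the classifier is trained once and then held fixed, whereas an adversarial setup would keep updating it against the transformed samples. The point shown is only that class labels, with no target output images, are enough to drive the parameter update.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy "images": one brightness value each, with only a class label
# (1 = desired effect, 0 = not). No target output images are given.
labeled = [(0.70, 1), (0.80, 1), (0.90, 1), (0.10, 0), (0.20, 0), (0.30, 0)]

def train_classifier(data, steps=2000, lr=0.5):
    """Logistic-regression stand-in for the classification network (assumption)."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        gw = gb = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # gradient of cross-entropy w.r.t. the logit
            gw += err * x
            gb += err
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b

def train_offset(inputs, w, b, steps=200, lr=0.05):
    """Learn one transformation parameter (a brightness offset, hypothetical)
    using only the classifier's score of the transformed sample as the signal."""
    offset = 0.0
    for _ in range(steps):
        grad = 0.0
        for x in inputs:
            score = sigmoid(w * (x + offset) + b)
            grad += -(1.0 - score) * w  # derivative of -log(score) w.r.t. offset
        offset -= lr * grad / len(inputs)
    return offset

w, b = train_classifier(labeled)
poor = [x for x, y in labeled if y == 0]
offset = train_offset(poor, w, b)

# Mean classifier score of the poorly rated samples before and after transformation.
before = sum(sigmoid(w * x + b) for x in poor) / len(poor)
after = sum(sigmoid(w * (x + offset) + b) for x in poor) / len(poor)
```

Because the classifier here is never shown transformed samples as negatives, nothing stops the offset from overshooting the labeled "good" range; that is the gap an adversarially updated discriminator is meant to close.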

Embodiment 2

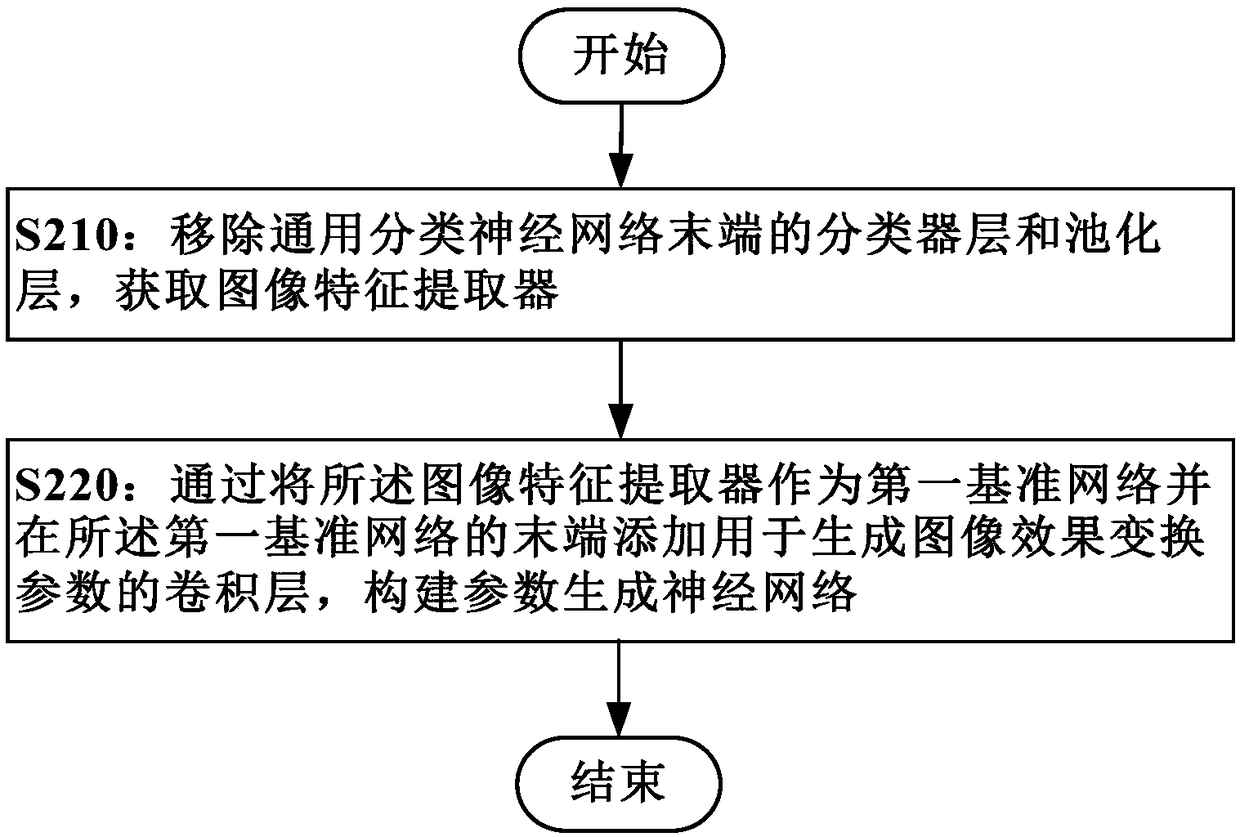

[0085] An exemplary way of constructing a parameter generation neural network as the generator and a classification neural network as the discriminator is described below.

[0086] According to Embodiment 2 of the present invention, the parameter generation neural network is obtained by transforming a general classification neural network. The general classification neural network may be, for example, a general neural network for image classification or a general neural network for classifying certain image effects.

[0087] The general classification neural network can be pre-trained using an applicable machine learning method, or a trained general classification neural network can be used. Since the general classification neural network used for image effect classification has good feature extraction ability related to the expected image effect, the parameter generation neural network (and classification neural network) can be constructed ba...
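One plausible reading of "transforming" a general classification network into a parameter generation network is sketched below. The text above is truncated and does not spell out the transformation, so the approach shown (keep the pre-trained feature extractor, swap the classification output layer for a layer that emits transformation parameters) is an assumption, and the class names `GeneralClassifier` and `ParameterGenerator` are hypothetical.

```python
import random

def linear(weights, vector):
    """Plain matrix-vector product: one output per weight row."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

class GeneralClassifier:
    """Hypothetical stand-in: a feature extractor followed by a classification layer."""
    def __init__(self, in_dim, feat_dim, num_classes, seed=0):
        rng = random.Random(seed)
        self.backbone = [[rng.uniform(-1, 1) for _ in range(in_dim)]
                         for _ in range(feat_dim)]
        self.class_head = [[rng.uniform(-1, 1) for _ in range(feat_dim)]
                           for _ in range(num_classes)]

    def features(self, x):
        # ReLU over a single linear layer; real networks would be much deeper.
        return [max(0.0, v) for v in linear(self.backbone, x)]

    def forward(self, x):
        return linear(self.class_head, self.features(x))

class ParameterGenerator:
    """Reuses the classifier's feature extractor and replaces the
    classification layer with a parameter output layer (assumed design)."""
    def __init__(self, classifier, num_params, seed=1):
        rng = random.Random(seed)
        self.features = classifier.features  # shared pre-trained features
        feat_dim = len(classifier.backbone)
        self.param_head = [[rng.uniform(-0.1, 0.1) for _ in range(feat_dim)]
                           for _ in range(num_params)]

    def forward(self, x):
        return linear(self.param_head, self.features(x))

clf = GeneralClassifier(in_dim=8, feat_dim=16, num_classes=2)
gen = ParameterGenerator(clf, num_params=4)
sample = [0.1 * i for i in range(8)]
params = gen.forward(sample)
```

Sharing the feature extractor means the generator starts from features already tuned for the image effect, and only the new output layer has to be learned from scratch.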

Embodiment 3

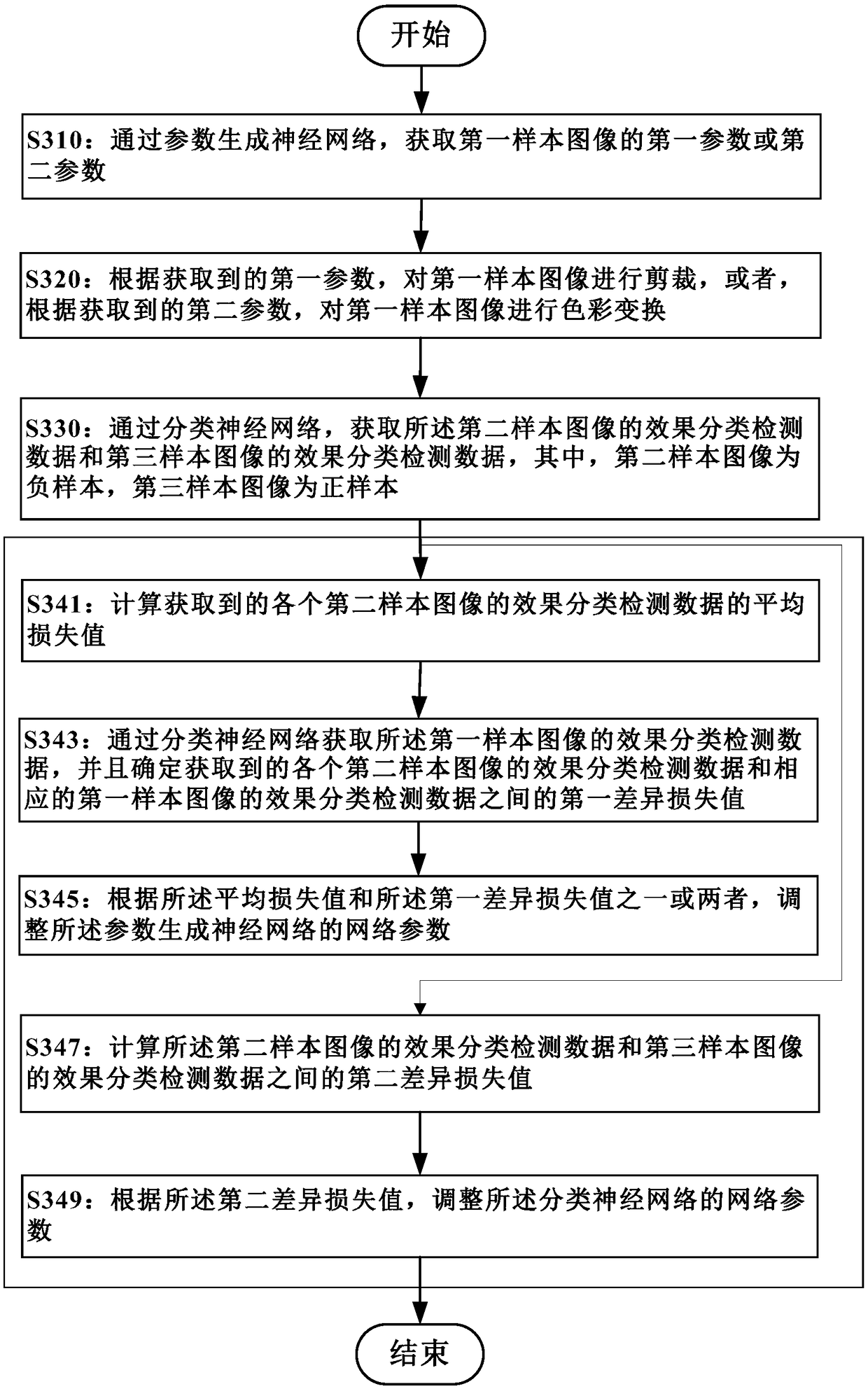

[0096] According to an exemplary embodiment of the present invention, the image effect transformation parameters may include, but are not limited to, at least one of the following: a first parameter for image cropping and a second parameter for image color enhancement. It should be noted that the training method proposed by the present invention is applicable to training the image processing neural network with any existing or potentially applicable image effect transformation parameters, and is not limited to the above two parameters.

[0097] Correspondingly, a first output branch for the first parameter used for image cropping and a second output branch for the second parameter used for image color enhancement may each be provided at the end of the parameter generation neural network.
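As a rough illustration of how the outputs of the two branches might be applied, the sketch below treats the first parameter (image clipping, read here as cropping) as a box given in fractions of the image size and the second parameter as per-channel color gains. Both encodings, and the function names `crop_image` and `enhance_color`, are assumptions for illustration; the text above does not define the parameter formats.

```python
def crop_image(image, box):
    """Crop a row-major image (list of rows of RGB tuples).
    box = (top, left, bottom, right) as fractions of height and width
    (hypothetical encoding of the first parameter)."""
    height, width = len(image), len(image[0])
    top, left, bottom, right = box
    r0, r1 = int(round(top * height)), int(round(bottom * height))
    c0, c1 = int(round(left * width)), int(round(right * width))
    return [row[c0:c1] for row in image[r0:r1]]

def enhance_color(image, gains):
    """Scale each RGB channel by its gain and clamp to 0..255
    (hypothetical encoding of the second parameter)."""
    return [
        [tuple(min(255, max(0, int(round(ch * g)))) for ch, g in zip(px, gains))
         for px in row]
        for row in image
    ]

# A 4x4 mid-gray test image.
image = [[(100, 100, 100) for _ in range(4)] for _ in range(4)]

# Stand-ins for the outputs of the two branches of the generator.
branch_outputs = {"crop": (0.25, 0.25, 0.75, 0.75), "color": (1.5, 1.0, 0.5)}

cropped = crop_image(image, branch_outputs["crop"])
result = enhance_color(cropped, branch_outputs["color"])
```

Keeping the two operations as separate functions mirrors the two output branches: either parameter can be dropped or replaced without touching the other.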

[0098] A detailed description is given below for exemplary training of the classification neural network and the parameter generation neural network for generating the f...
