Method for recognizing visual motions in virtual assembly sitting operation

A technology of motion recognition and virtual assembly, applied in the field of somatosensory interaction


Embodiment Construction

[0054] A visual action recognition method for seated virtual assembly operations, comprising the following steps:

[0055] Step 1. Collect skeletal and eye coordinates, and construct an eye-center coordinate system.

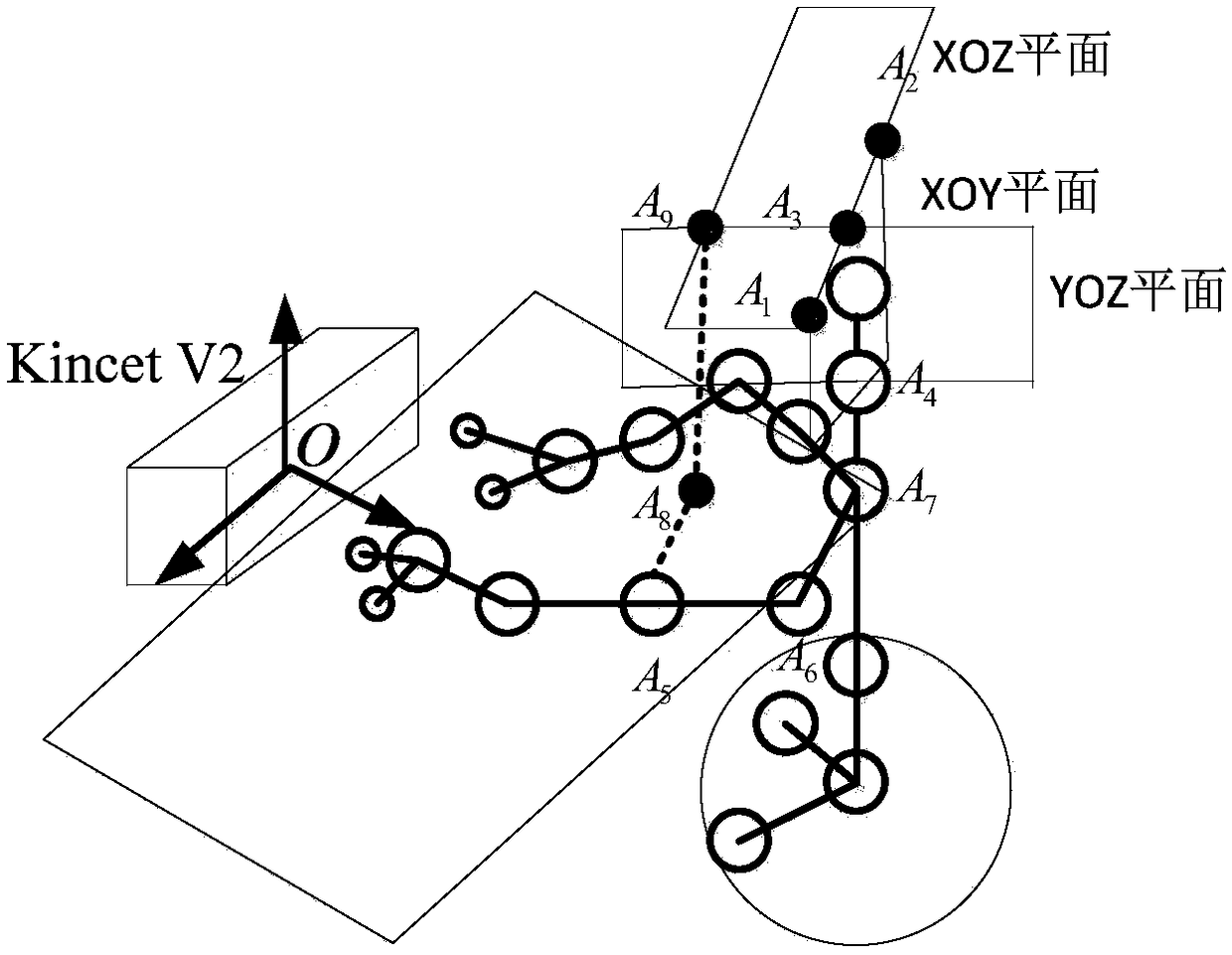

[0056] Referring to Figure 1, the user stands facing the Kinect V2 on the left and straightens the left arm. The depth camera in the Kinect V2 human-computer interaction device collects the left-eye coordinate point $A_1(x_1, y_1, z_1)$ and the right-eye coordinate point $A_2(x_2, y_2, z_2)$, from which the coordinates of the center of the eyes are calculated. It also collects 16 skeletal points of the human body, including head and neck $A_4(x_4, y_4, z_4)$, shoulder center, left thumb, right thumb, left fingertip, right fingertip, left hand, right hand, left wrist, right wrist, left elbow $A_5(x_5, y_5, z_5)$, right elbow, left shoulder $A_6(x_6, y_6, z_6)$, right shoulder, and hip center $A_7(x_7, y_7, z_7)$. First make the relative off...
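The eye-center step above can be sketched in Python. This is a minimal illustration under stated assumptions, not the patented procedure: the eye center is taken as the midpoint of $A_1$ and $A_2$, and the eye-center coordinate system is assumed to be a pure translation of the camera frame, because the text that would define it is truncated at "relative off...". The joint names in `SKELETAL_POINTS` are hypothetical labels borrowed from Kinect V2 `JointType` naming, and the functions `eye_center` and `to_eye_frame` are likewise hypothetical.

```python
from typing import Dict, Tuple

Point3 = Tuple[float, float, float]

# Hypothetical labels for the 16 skeletal points listed above; mapping them
# to Kinect V2 JointType names is an assumption made for illustration.
SKELETAL_POINTS = (
    "Head", "Neck", "SpineShoulder",   # head, neck, shoulder center
    "ThumbLeft", "ThumbRight",
    "HandTipLeft", "HandTipRight",     # left/right fingertips
    "HandLeft", "HandRight",
    "WristLeft", "WristRight",
    "ElbowLeft", "ElbowRight",
    "ShoulderLeft", "ShoulderRight",
    "SpineBase",                       # hip center
)


def eye_center(left_eye: Point3, right_eye: Point3) -> Point3:
    """Midpoint of A1 and A2 (assumed definition of the eye center)."""
    return tuple((a + b) / 2.0 for a, b in zip(left_eye, right_eye))


def to_eye_frame(joints: Dict[str, Point3], origin: Point3) -> Dict[str, Point3]:
    """Express each skeletal point as an offset from the eye center.

    Assumption: the eye-center frame keeps the camera axes and only moves
    the origin (translation, no rotation).
    """
    # Iterating a dict yields its keys, so this lists joints not supplied.
    missing = set(SKELETAL_POINTS).difference(joints)
    if missing:
        raise ValueError(f"missing joints: {sorted(missing)}")
    return {
        name: tuple(p - o for p, o in zip(joints[name], origin))
        for name in SKELETAL_POINTS
    }
```

For example, `eye_center((-0.5, 1.0, 2.0), (0.5, 1.0, 2.0))` returns `(0.0, 1.0, 2.0)`, and a joint reported at `(1.5, 2.0, 3.5)` then maps to `(1.5, 1.0, 1.5)` in the eye-centered frame. A translation-only frame is the simplest reading of "relative offset"; if the original method also rotates the axes, `to_eye_frame` would need a rotation matrix applied after the subtraction.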
